Delidded Ryzen 7 5800X3D CPU Runs 10 Degrees Celsius Cooler

No risk it, no biscuit.

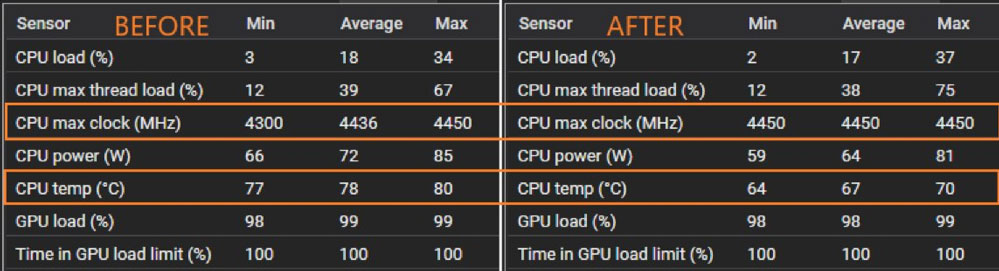

A PC enthusiast called Madness has put an AMD Ryzen 7 5800X3D under the knife. He successfully removed the integrated heat spreader (IHS) without killing the chip, a delicate operation popular with the CPU overclocking community and dubbed delidding. After some prodding by a Hardware Luxx editor, Madness shared some exciting test results comparing key CPU performance stats in gaming before and after the delid. The delidded Ryzen 7 5800X3D ran faster, consumed less power, and ran 10 degrees Celsius cooler in the same system while running Forza Horizon 5.

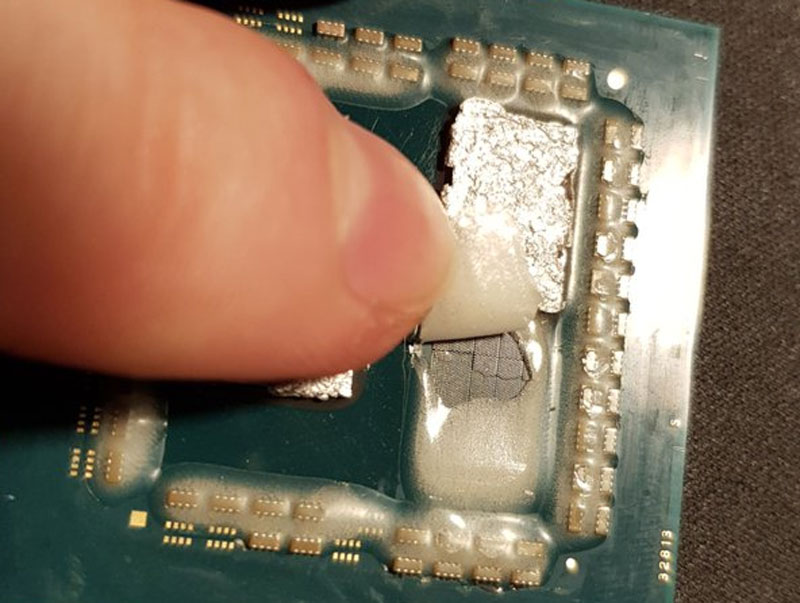

The Ryzen 7 5800X3D with its IHS removed smiles alongside some telltale but unsophisticated tools: sharp knife blades. The Twitter user used the blades to pry up the edges of the IHS while heating it to between 150 and 200 degrees Celsius with a heat gun. Delidding previous-gen processors is already somewhat nerve-wracking, but the 5800X3D adds an extra layer of jeopardy: a multitude of surface-mount components sit between the IHS 'legs', where they would be all too easy to knife accidentally. More established CPU designs can be delidded with less risk.

After delidding, an enthusiast might replace the factory TIM (thermal interface material) with something like a liquid metal compound, or run the chip 'naked', with the cooler making risky direct contact with the silicon. Madness has since confirmed that he applied Thermal Grizzly Conductonaut, a liquid metal compound, to the dies and reinstalled the IHS.

Ultimately, the results of delidding matter more than the process, and Madness achieved an impressive result. The Ryzen 7 5800X3D ran 10 degrees Celsius cooler under heavy workloads after the operation. That isn't the only benefit; the 3D V-Cache chip also showed lower power consumption and better boost clocks. The Ryzen 7 5800X3D is a locked chip, so you can't overclock it conventionally, but workarounds that allow some tuning have emerged. It would be interesting to see the results of overclocking the delidded chip.

Another exciting image from Madness revealed a vacant second pad area on the 5800X3D, with a sliver of protective material lifted off. It isn't normal for a single-CCD Ryzen to have a second pad like this.

AMD's Zen 4 CPUs will arrive later this year, and the 3D V-Cache versions will follow shortly after.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.

-

Alvar "Miles" Udell

> Madness has followed up to say that they added Conductonaut compound to the dies and replaced the IHS.

So does this serve as confirmation that AMD is now using lesser-quality solder on their chips?

rluker5

AMD is enabling PCIe5 on their upcoming products. I'm guessing they will use it as a selling point.

How long before the 5800X3D falls slightly behind the 12600K in GPU-bound gaming scenarios, just like my Haswell/H97 setup with a mining-bust RX 6800 has, because Z97 only has PCIe3 and doesn't support ReBAR, either because it isn't named on some driver checklist or because it never got the code in a BIOS update?

And isn't the cache held on the CPU by the same forces that hold layers of clay together, which can be loosened by water? If I had one, that thing would stay glued/soldered just to eliminate the chance of contamination and shifting.

helper800

> rluker5 said: AMD is enabling PCIe5 on their upcoming products. I'm guessing they will use it as a selling point.
>
> How long before the 5800X3D falls slightly behind the 12600K in GPU-bound gaming scenarios

You can run a 3090 Ti on PCIe3 x16 and it's the same performance as on PCIe5 x16, give or take 2%.

rluker5

> helper800 said: You can run a 3090 Ti on PCIe3 x16 and it's the same performance as on PCIe5 x16, give or take 2%.

You are just mentioning what is necessary.

And that is only true in many games as long as they don't benefit from ReBAR/SAM or you are frame-rate limited: AMD Smart Access Memory Tested, Benchmarked | TechSpot. SAM and ReBAR should both also work on PCIe3, but they don't and won't, just as whatever new thing comes along won't work on Gen4.

But even 2-5% will be larger than the benefit of a faster CPU that is still 100% GPU-bound.

You know they have to compare these things at 1080p low or 720p to see which is faster, because they are so GPU-bound.

PCIe4 has been made a selling point. I'm just saying PCIe5 will be as well. Or else why would anybody buy a Zen4 that isn't a Threadripper or Epyc?

helper800

> rluker5 said: You are just mentioning what is necessary.
>
> And that is only true in many games as long as they don't benefit from ReBAR/SAM or you are frame-rate limited: AMD Smart Access Memory Tested, Benchmarked | TechSpot. SAM and ReBAR should both also work on PCIe3, but they don't and won't, just as whatever new thing comes along won't work on Gen4.
>
> But even 2-5% will be larger than the benefit of a faster CPU that is still 100% GPU-bound.
>
> You know they have to compare these things at 1080p low or 720p to see which is faster, because they are so GPU-bound.
>
> PCIe4 has been made a selling point. I'm just saying PCIe5 will be as well. Or else why would anybody buy a Zen4 that isn't a Threadripper or Epyc?

PCIe 4 or higher is not relevant to GPU performance. Unless you have a GPU that is very limited as far as memory bandwidth goes, there is little (less than 2%, which is within run-to-run variance) to no benefit above PCIe Gen 3 speed.

rluker5

> helper800 said: PCIe 4 or higher is not relevant to GPU performance. Unless you have a GPU that is very limited as far as memory bandwidth goes, there is little (less than 2%, which is within run-to-run variance) to no benefit above PCIe Gen 3 speed.

In terms of theoretical bandwidth saturation limits, I agree with you, as I agreed with the last guy on this topic.

But there is valid practical proof in the real world that in many games the performance difference is 5% or more because of other features that have been attached exclusively to the newer PCIe standard. I referenced some of that in the link in my last post.

And in regard to my original point: how much improvement does a slightly faster CPU see over a slightly slower one in a completely GPU-bound gaming scenario, as most would encounter with current or next-gen AMD or Intel? Would it be 5%?

My original point is that there will likely be some feature that improves GPU-bound gaming performance (maybe DirectStorage?) that will come out shortly after AMD introduces its next-gen CPUs and GPUs, which will both have PCIe5. And even if the 5800X3D were 1000x as fast as the 12600K in games, because it will be run GPU-bound, it will be slower by however much that feature speeds up the GPU. Just like it will be slower than every CPU that can outpace the next-gen GPUs at next-gen game settings while also running PCIe5.

But I could be wrong. The question is whether or not AMD, Intel or Nvidia will come up with a reason to upgrade to PCIe5 hardware. So far AMD has come up with two reasons to upgrade to Gen4 in regard to GPU communication.

Globespy

What exactly were the "impressive results" in terms of performance gains? Running a bit cooler is expected with a delid and liquid metal, but it's not worth it unless the FPS improvements are in line with the risk involved. If we're talking about a handful of extra frames, why bother?

Ogotai

> rluker5 said: Or else why would anybody buy a Zen4 that isn't a Threadripper or Epyc?

Um, probably the obvious reason: cost. I was looking at picking up a Threadripper a while ago, since I could use the PCIe lanes it had, but due to cost, I didn't. Instead I kept my 5900X and just shuffled some hardware around. That's one of the reasons I kind of miss the X99 system I upgraded from: the PCIe lanes it had.

helper800

> rluker5 said: In terms of theoretical bandwidth saturation limits, I agree with you, as I agreed with the last guy on this topic.
>
> But there is valid practical proof in the real world that in many games the performance difference is 5% or more because of other features that have been attached exclusively to the newer PCIe standard. I referenced some of that in the link in my last post.
>
> And in regard to my original point: how much improvement does a slightly faster CPU see over a slightly slower one in a completely GPU-bound gaming scenario, as most would encounter with current or next-gen AMD or Intel? Would it be 5%?
>
> My original point is that there will likely be some feature that improves GPU-bound gaming performance (maybe DirectStorage?) that will come out shortly after AMD introduces its next-gen CPUs and GPUs, which will both have PCIe5. And even if the 5800X3D were 1000x as fast as the 12600K in games, because it will be run GPU-bound, it will be slower by however much that feature speeds up the GPU. Just like it will be slower than every CPU that can outpace the next-gen GPUs at next-gen game settings while also running PCIe5.
>
> But I could be wrong. The question is whether or not AMD, Intel or Nvidia will come up with a reason to upgrade to PCIe5 hardware. So far AMD has come up with two reasons to upgrade to Gen4 in regard to GPU communication.

I agree that there is a lot of PCIe bus speed that is not being utilized, and that it would be prudent of AMD, Intel, and Nvidia to make use of it in some way to pull ahead. As for Resizable BAR and SAM, there are more than a few cases where they cause FPS regressions instead of small gains. That is not to say they aren't useful features to have turned on overall, as they have an aggregate FPS benefit across many games. I was trying to point out that faster PCIe currently has little to no benefit sizable enough to drive a purchasing decision, not that it won't in the future.

-Fran-

> rluker5 said: But I could be wrong. The question is whether or not AMD, Intel or Nvidia will come up with a reason to upgrade to PCIe5 hardware. So far AMD has come up with two reasons to upgrade to Gen4 in regard to GPU communication.

Well, you are most likely wrong, as Nvidia kept PCIe4 for its next lineup, as did Intel, and AMD is the only one reportedly going to use PCIe5 in its next generation of GPUs.

The jump from PCIe3 to PCIe4 may be worth it (big IF), but it looks like PCIe5 is not justified at all yet. It's really expensive to certify them for PCIe5, and later PCIe6, when you're not really going to use either the features or the bandwidth.

Regards.