Intel Drops Arc A750 Price to $249, With Improved Drivers

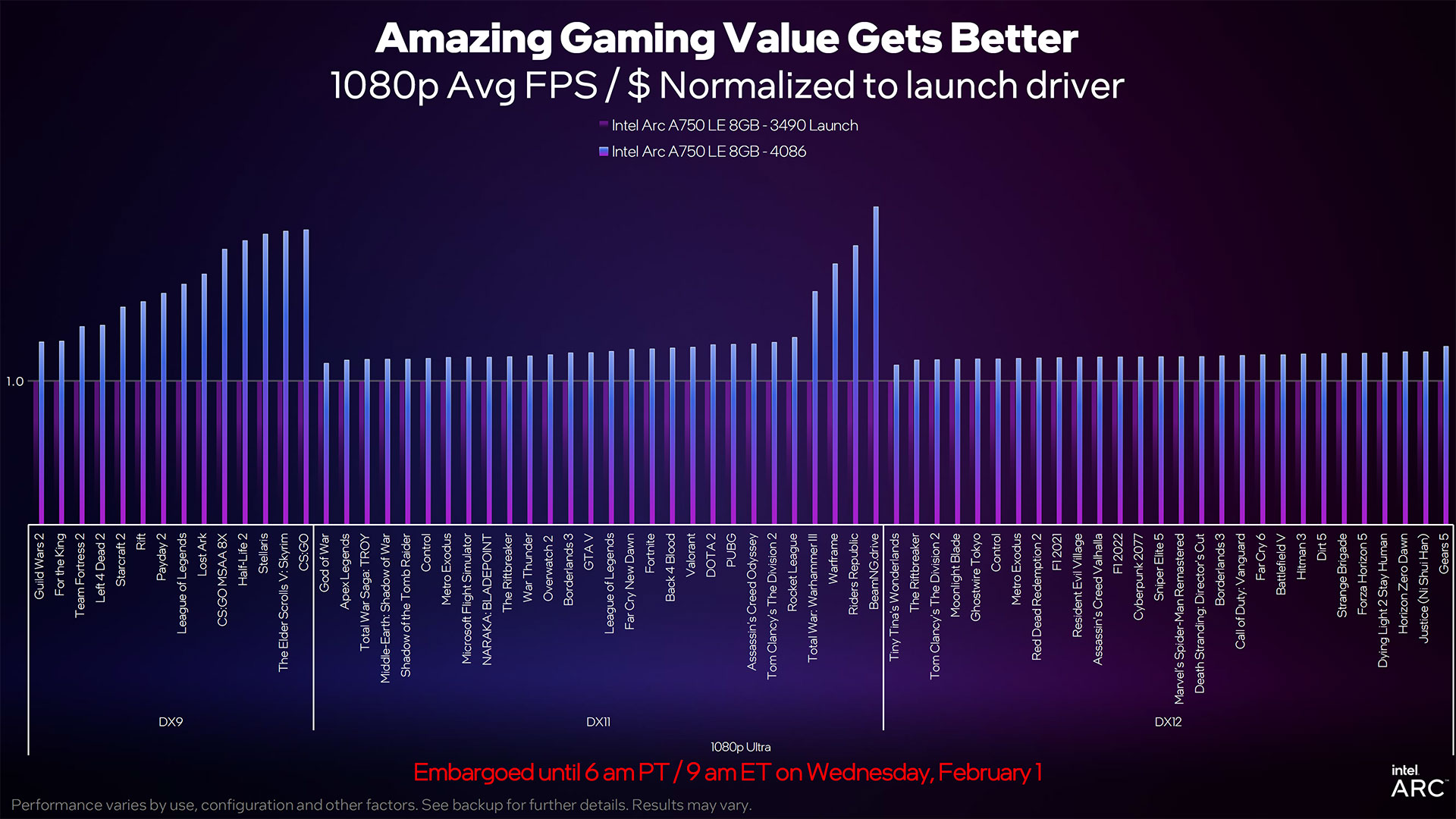

DX9 performance has increased by 43% since launch

The Intel Arc Alchemist architecture brought a third competitor to the best graphics cards, and while it can't top the GPU benchmarks in terms of performance, there's certainly a strong value proposition. At the same time, there are areas where the drivers still need tuning. Minecraft with ray tracing is one example that comes immediately to mind, since it's one of the games in our standard test suite. But Intel hopes to encourage further adoption with the latest announcement of a $40 price cut to the Intel Arc A750, bringing it down to just $249.

It's difficult to say exactly how many people have purchased Intel Arc graphics cards (mobile or desktop) since they first became available in the spring of 2022. I've seen claims that Intel's dedicated GPUs accounted for up to 4% of total sales in Q4 2022, but so far Arc GPUs don't show up as individual entries on the Steam Hardware Survey (which will be updated with January data shortly, so maybe that will change). Certainly, dropping the price of the A750 by 14% can't hurt.
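For anyone who wants to check the 14% figure, here's a minimal sketch of the arithmetic. It assumes the $249 price from the headline and derives the previous price from the $40 cut; the variable names are just for illustration.

```python
# Assumption: new price is the $249 from the headline, and the cut is $40.
new_price = 249
price_cut = 40

# The previous price follows from the two numbers above.
old_price = new_price + price_cut

# Percentage reduction relative to the old price.
cut_percent = price_cut / old_price * 100

print(f"Old price: ${old_price}, cut: {cut_percent:.1f}%")
```

Rounded to the nearest whole number, that works out to the 14% mentioned above.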

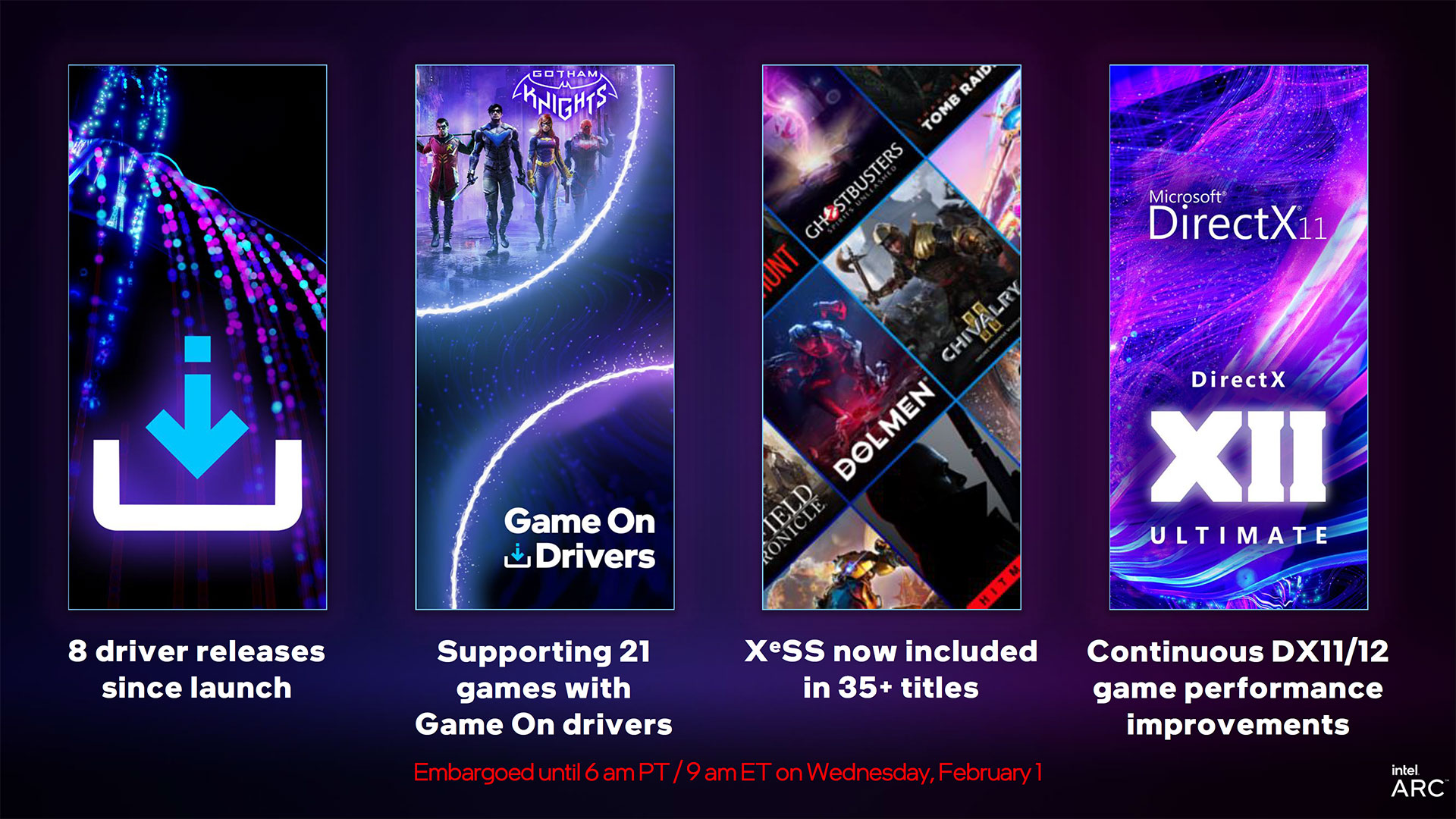

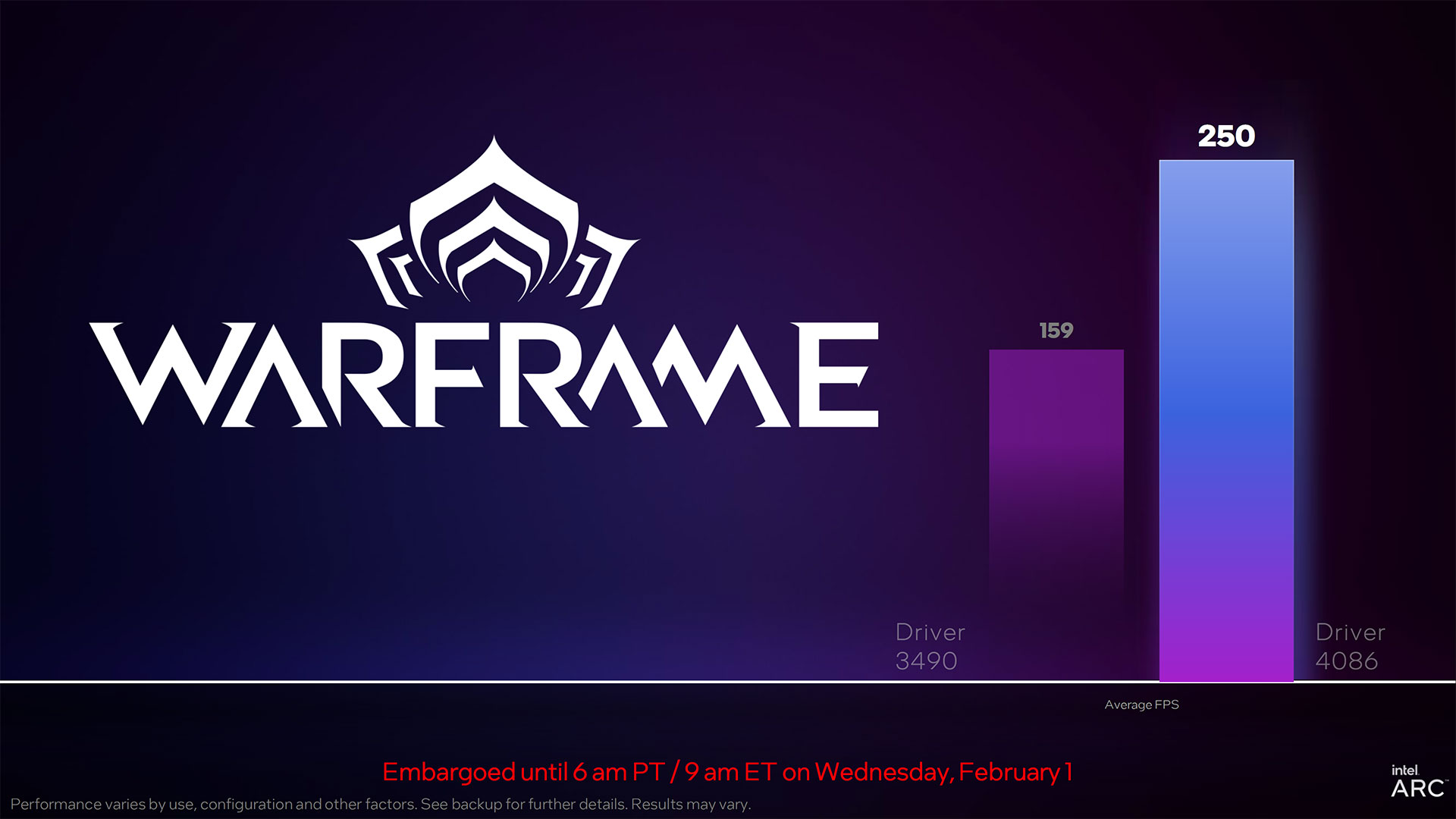

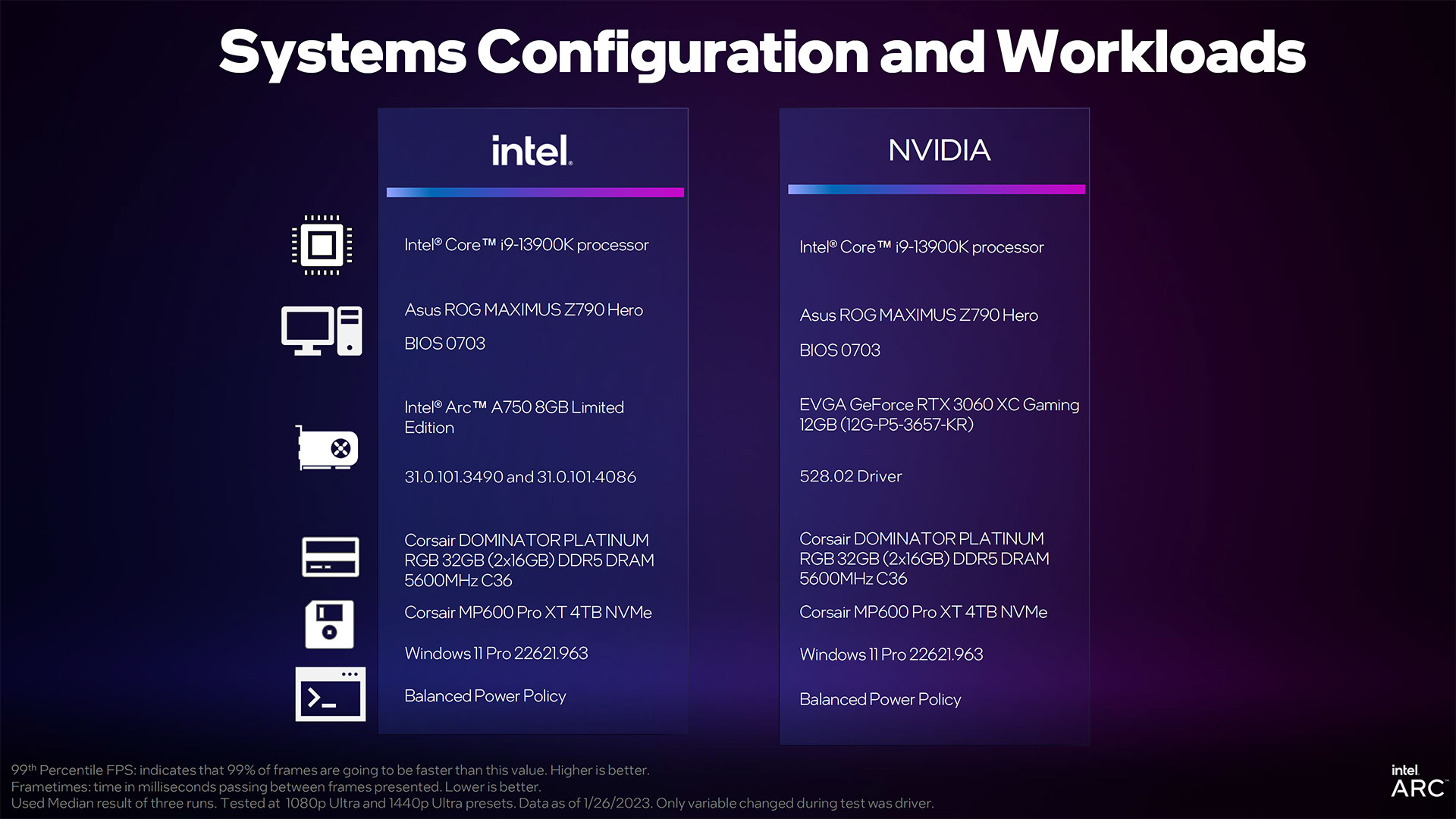

It's not just about lowering the price, either. Since launching with the 3490 drivers back in October 2022, Intel has delivered three WHQL drivers and at least four beta drivers. The latest beta, version 4090, became available last week, and improving performance and compatibility has been a key target for all of the driver updates.

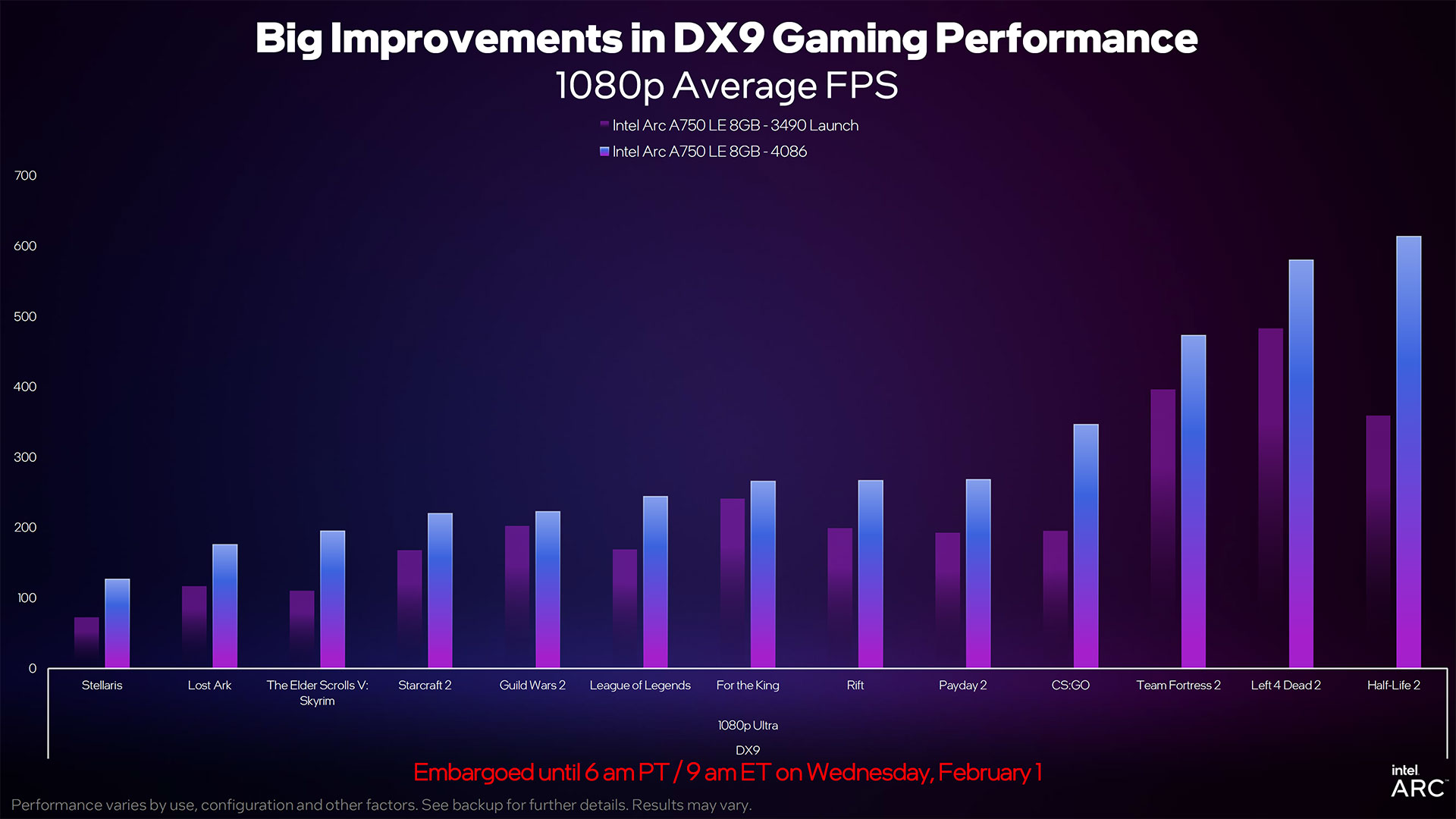

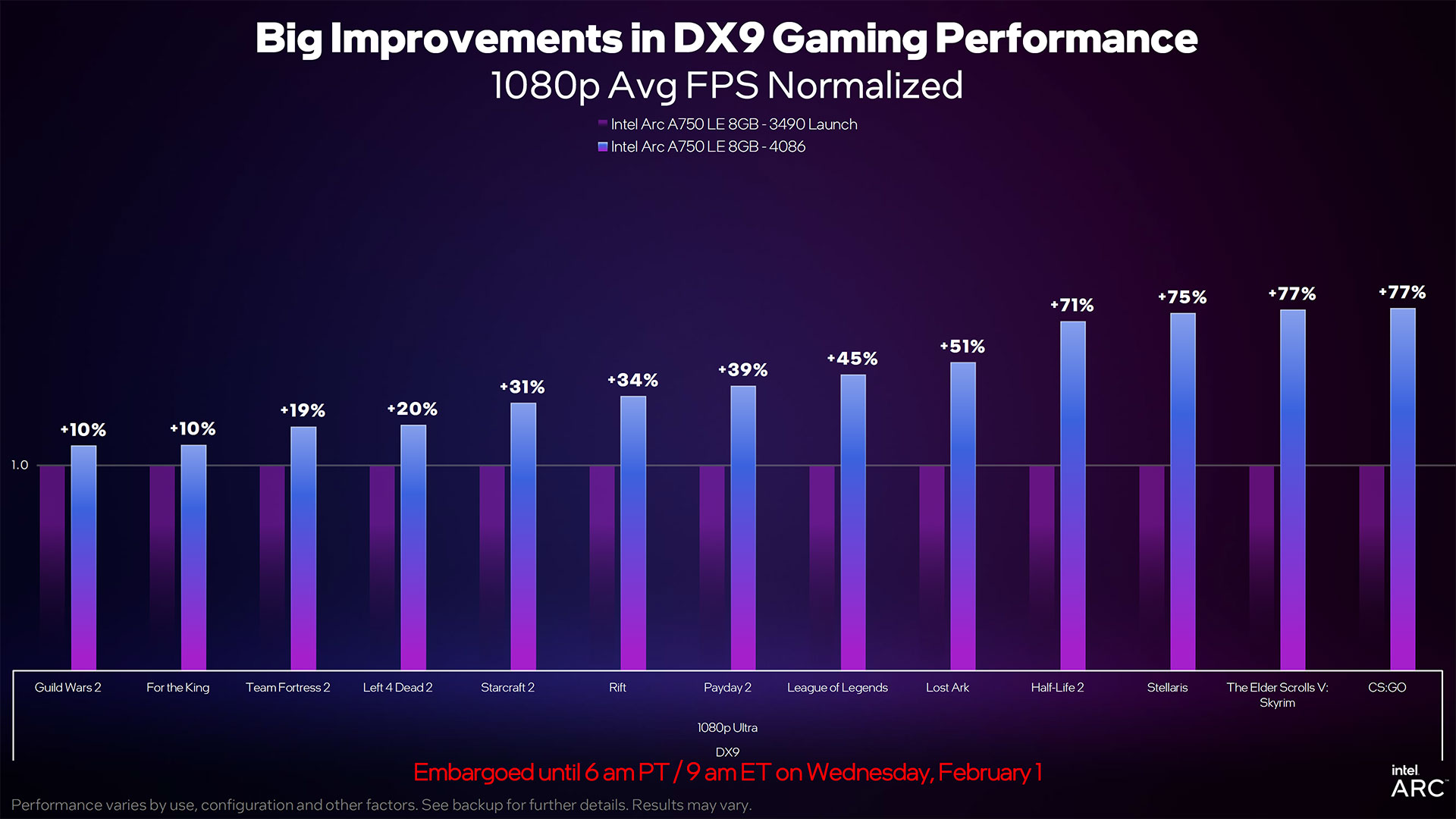

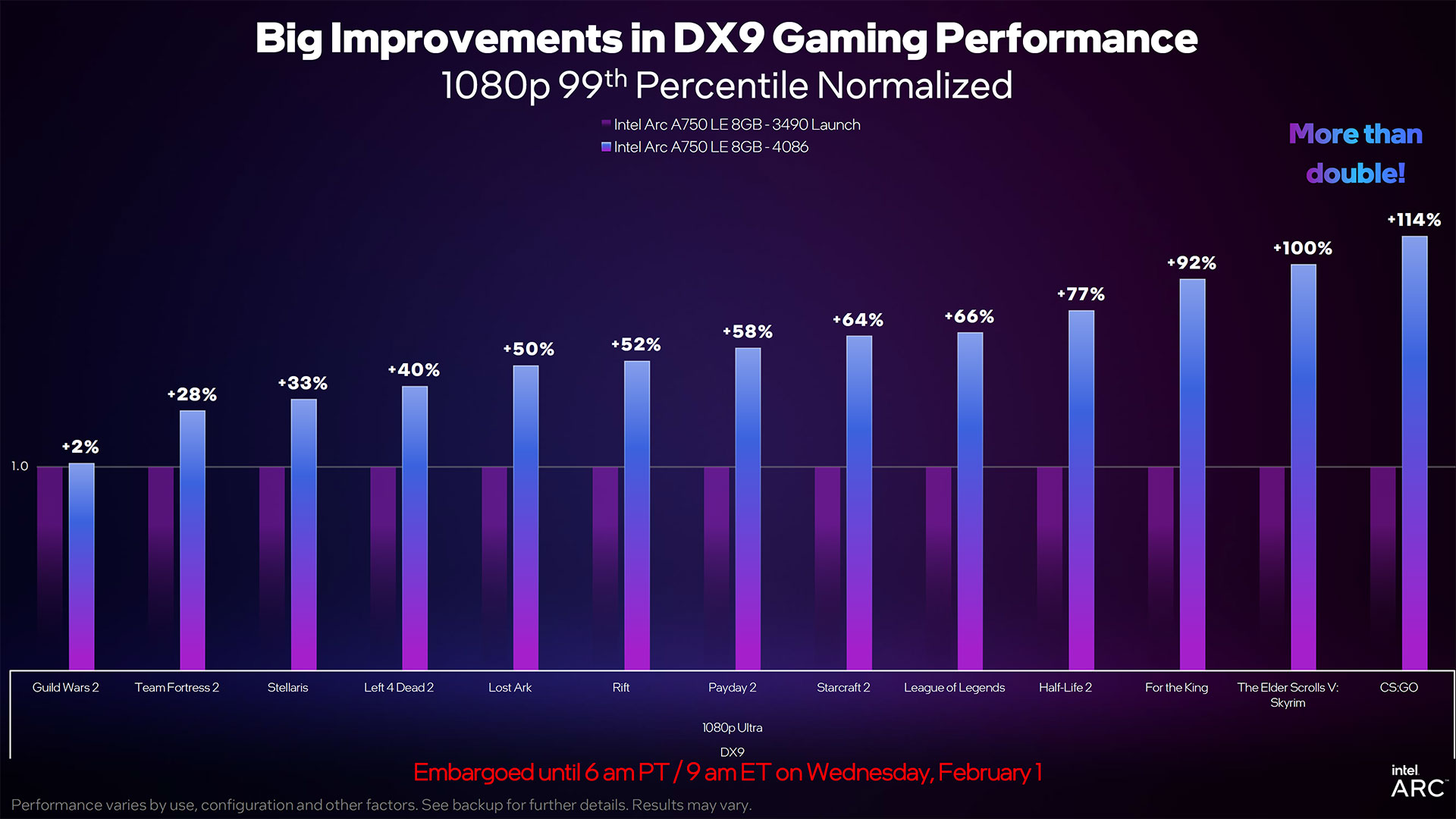

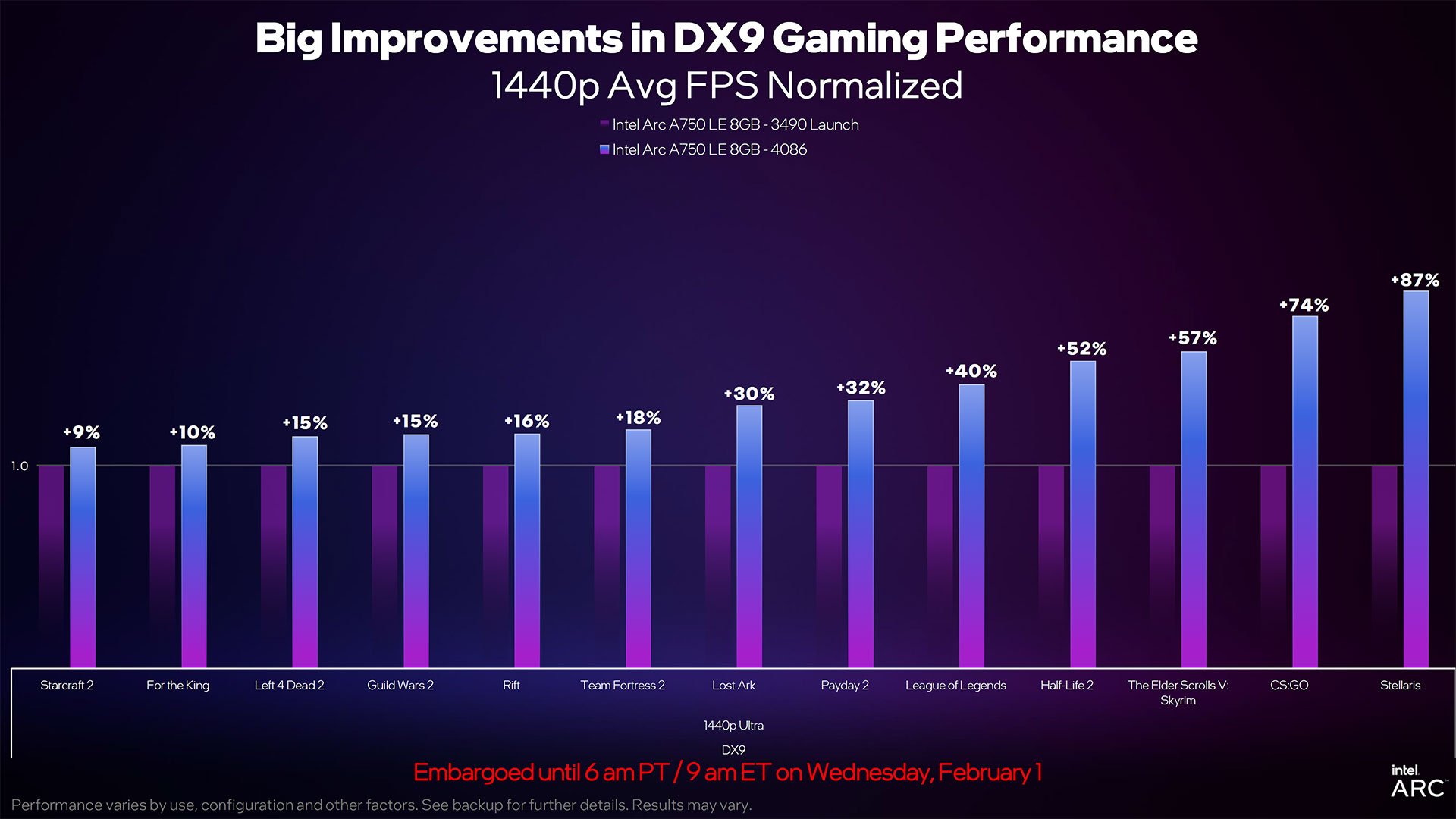

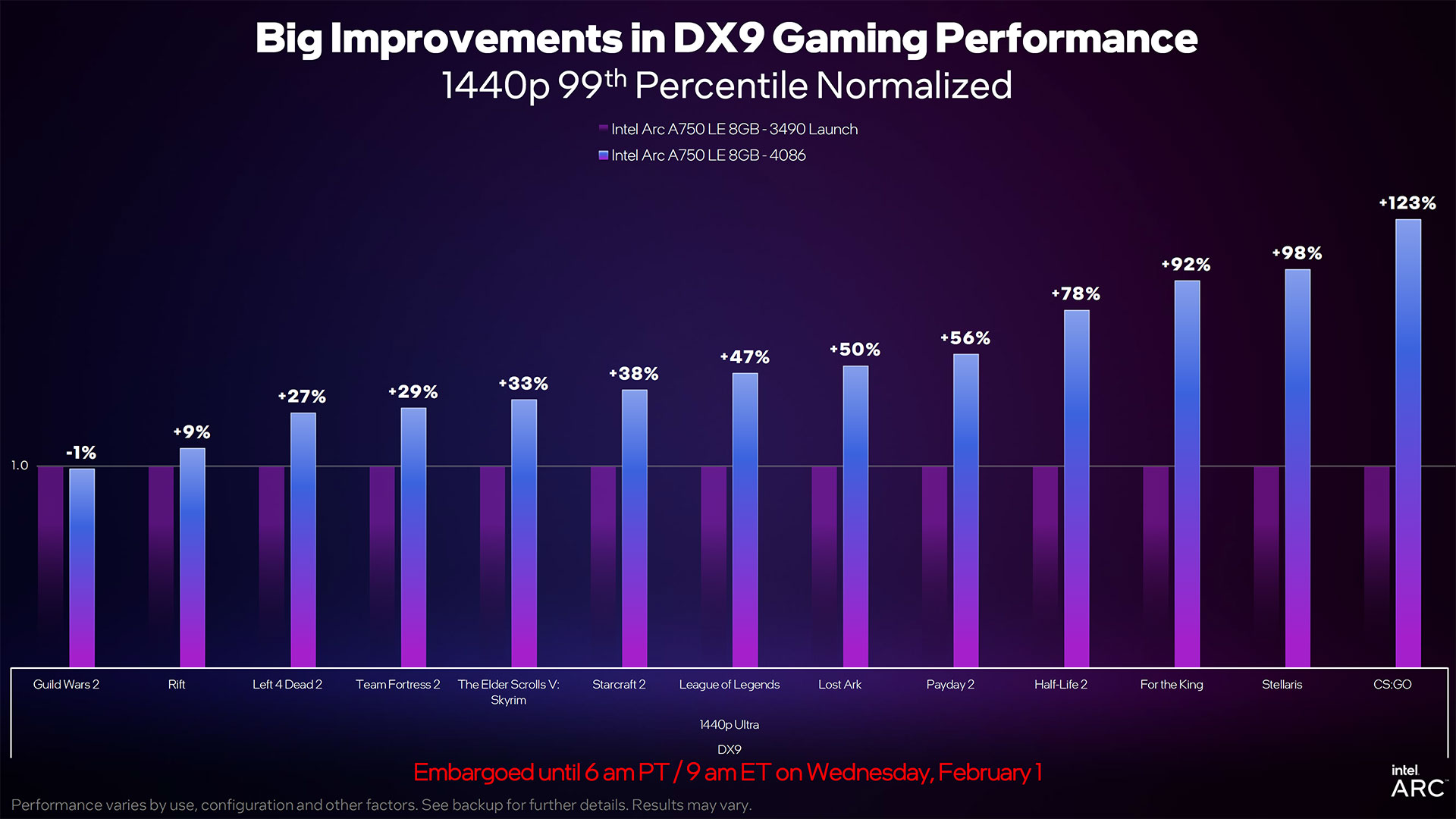

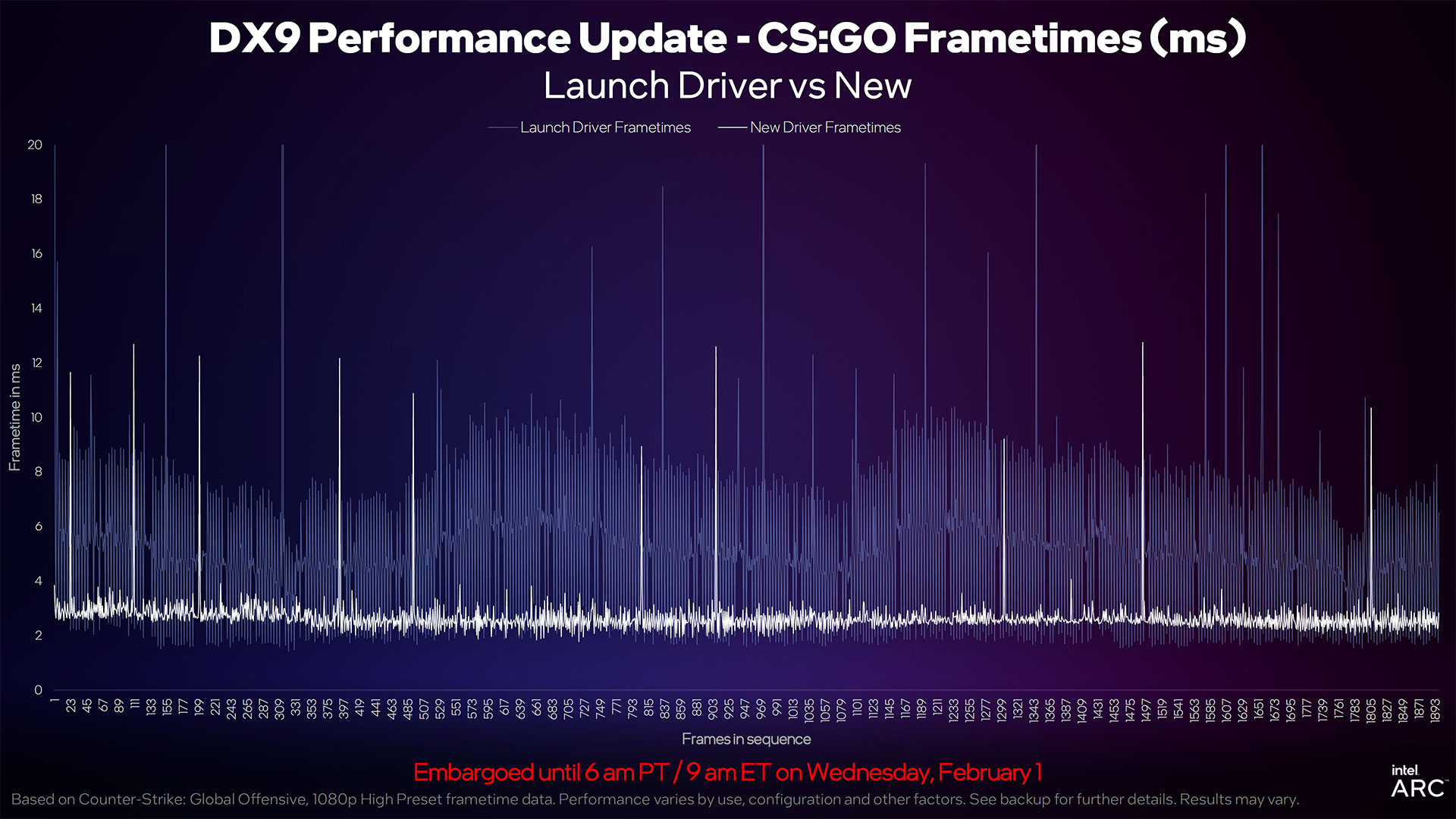

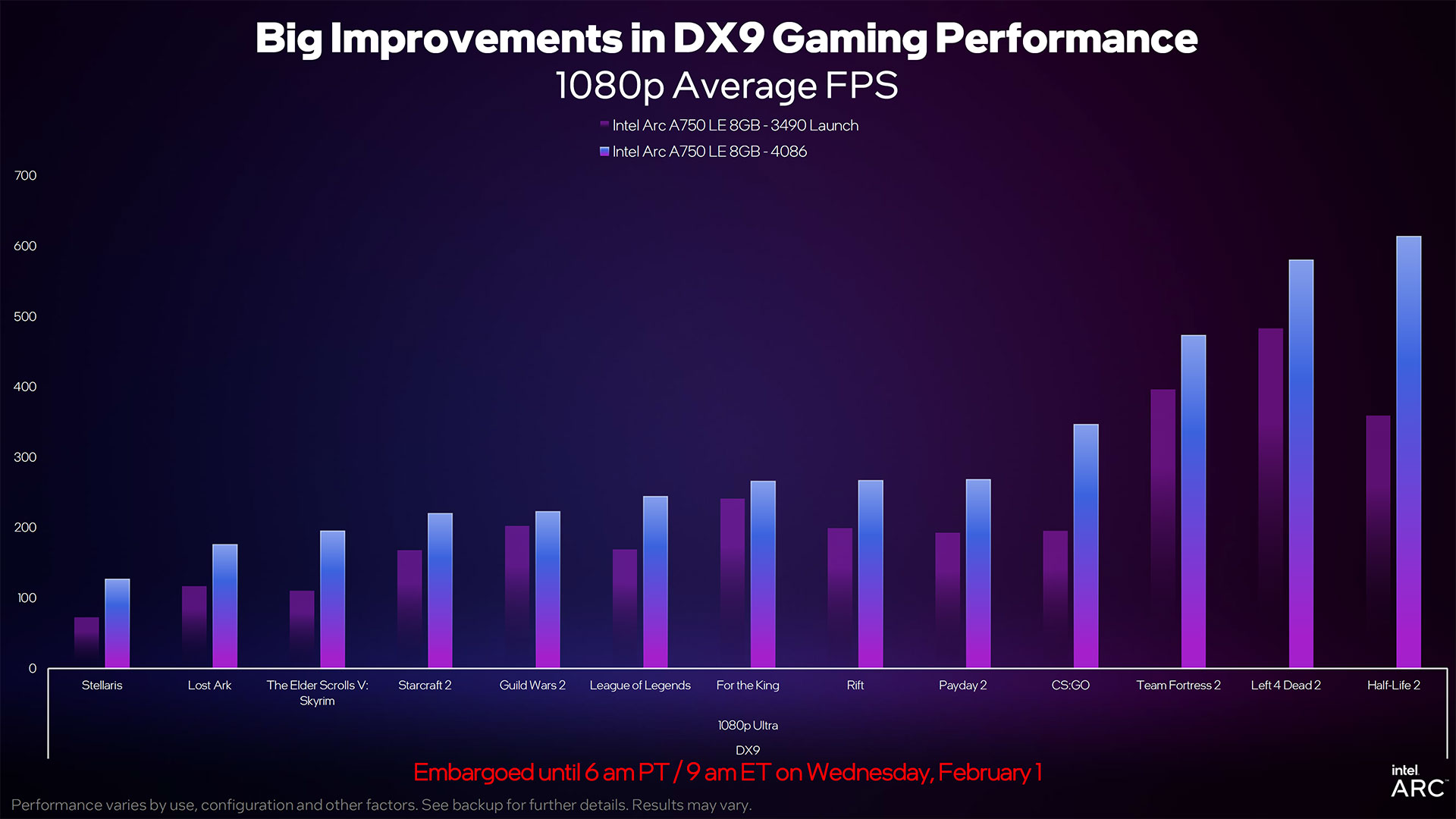

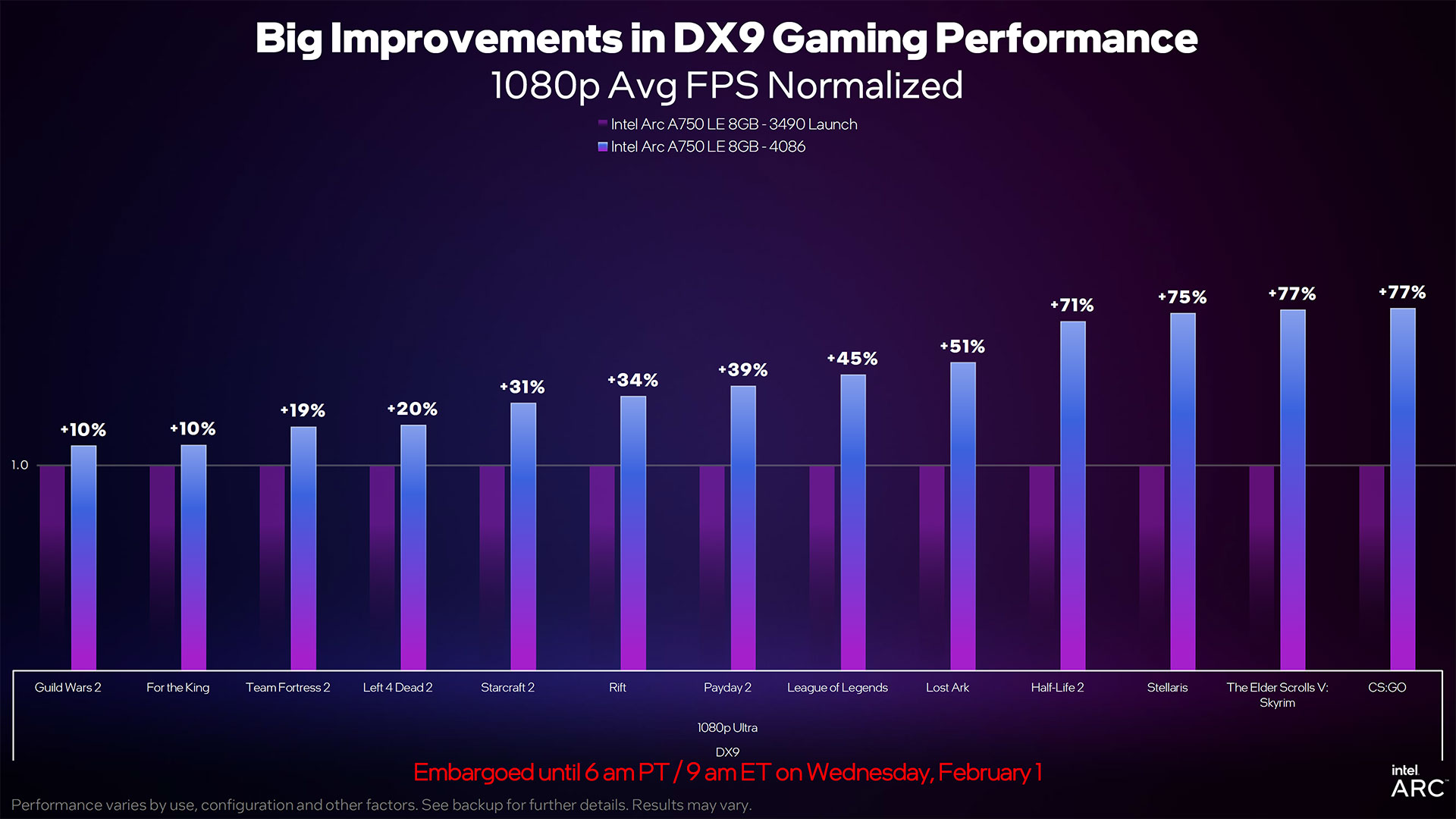

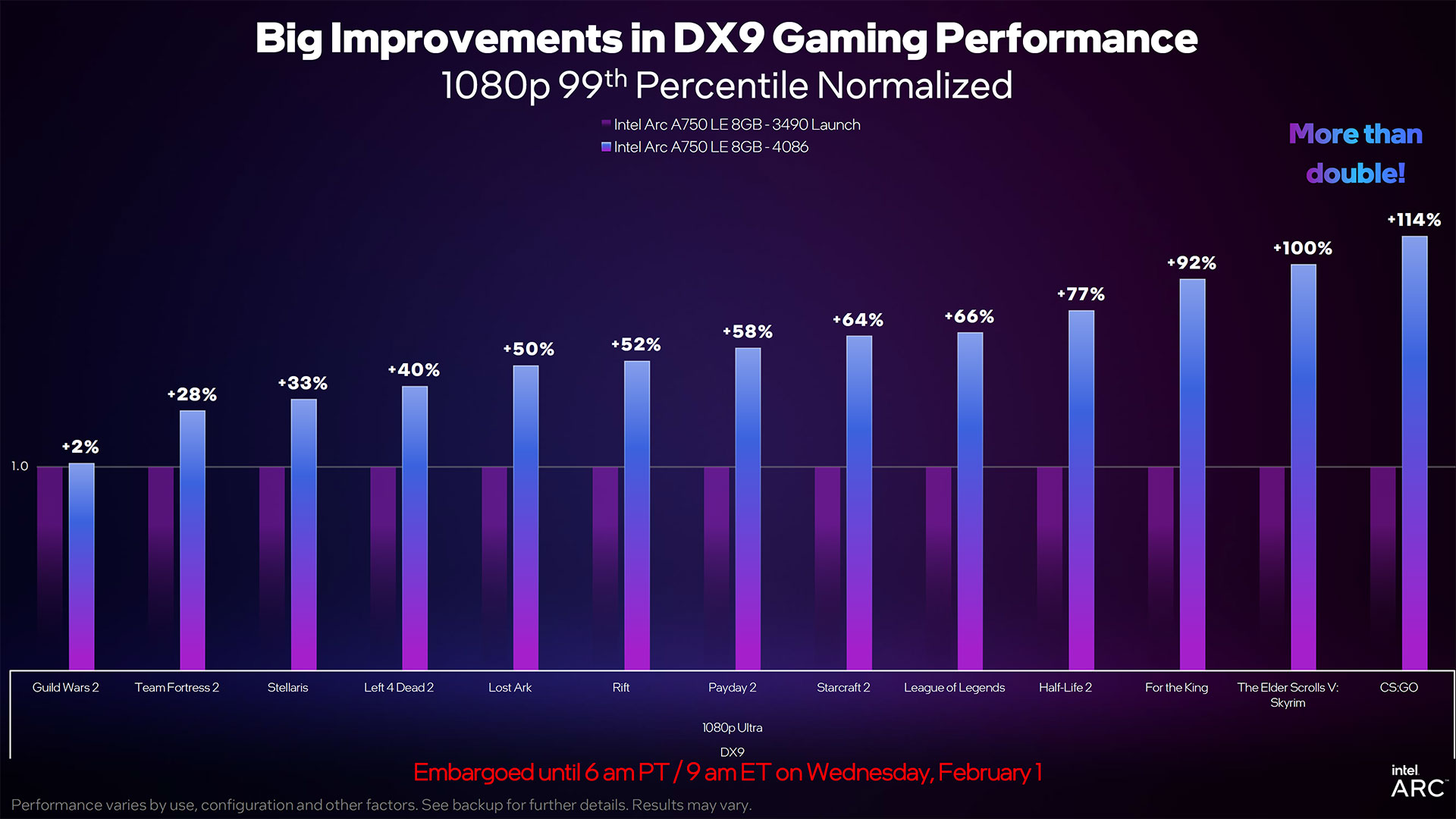

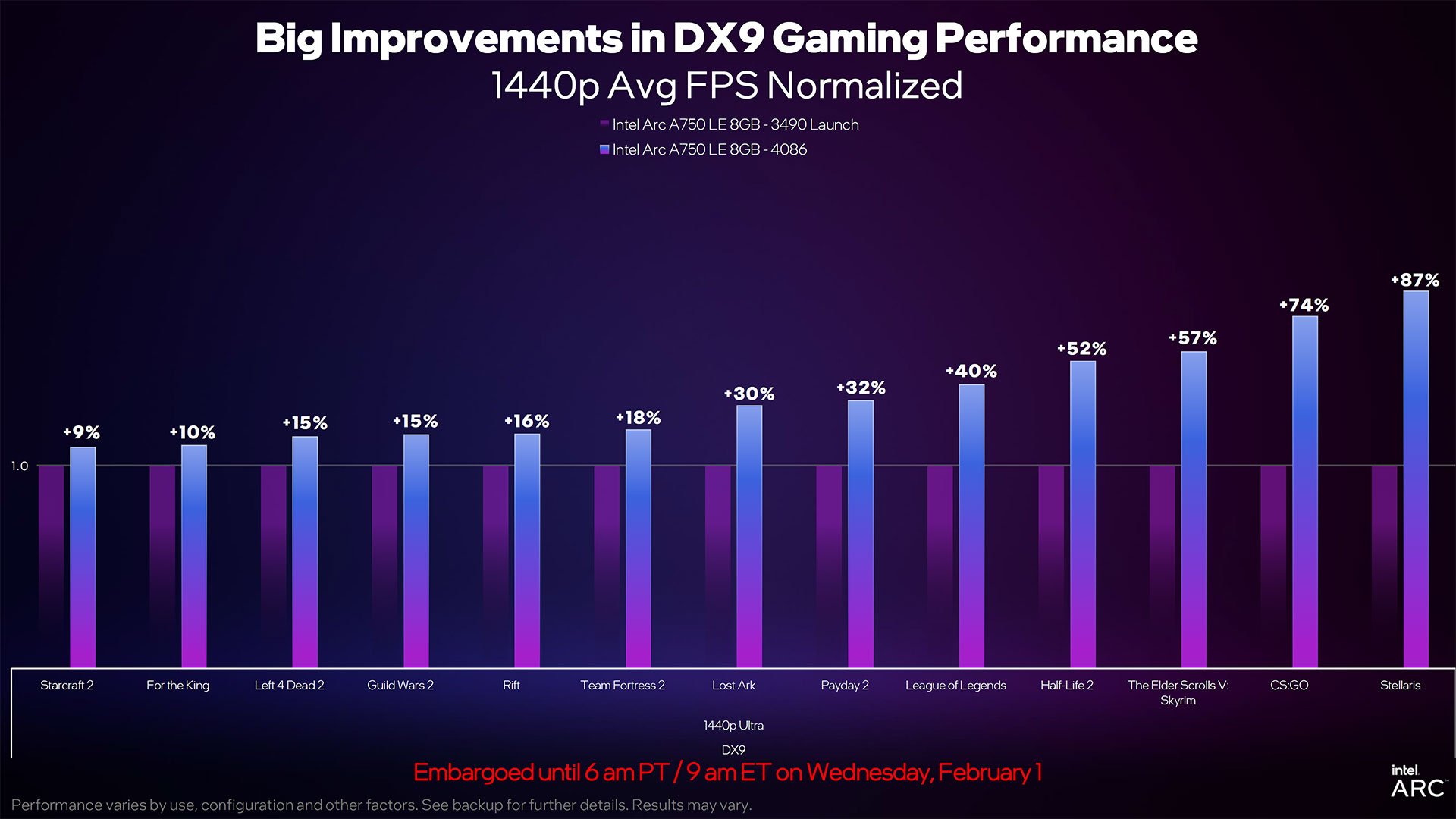

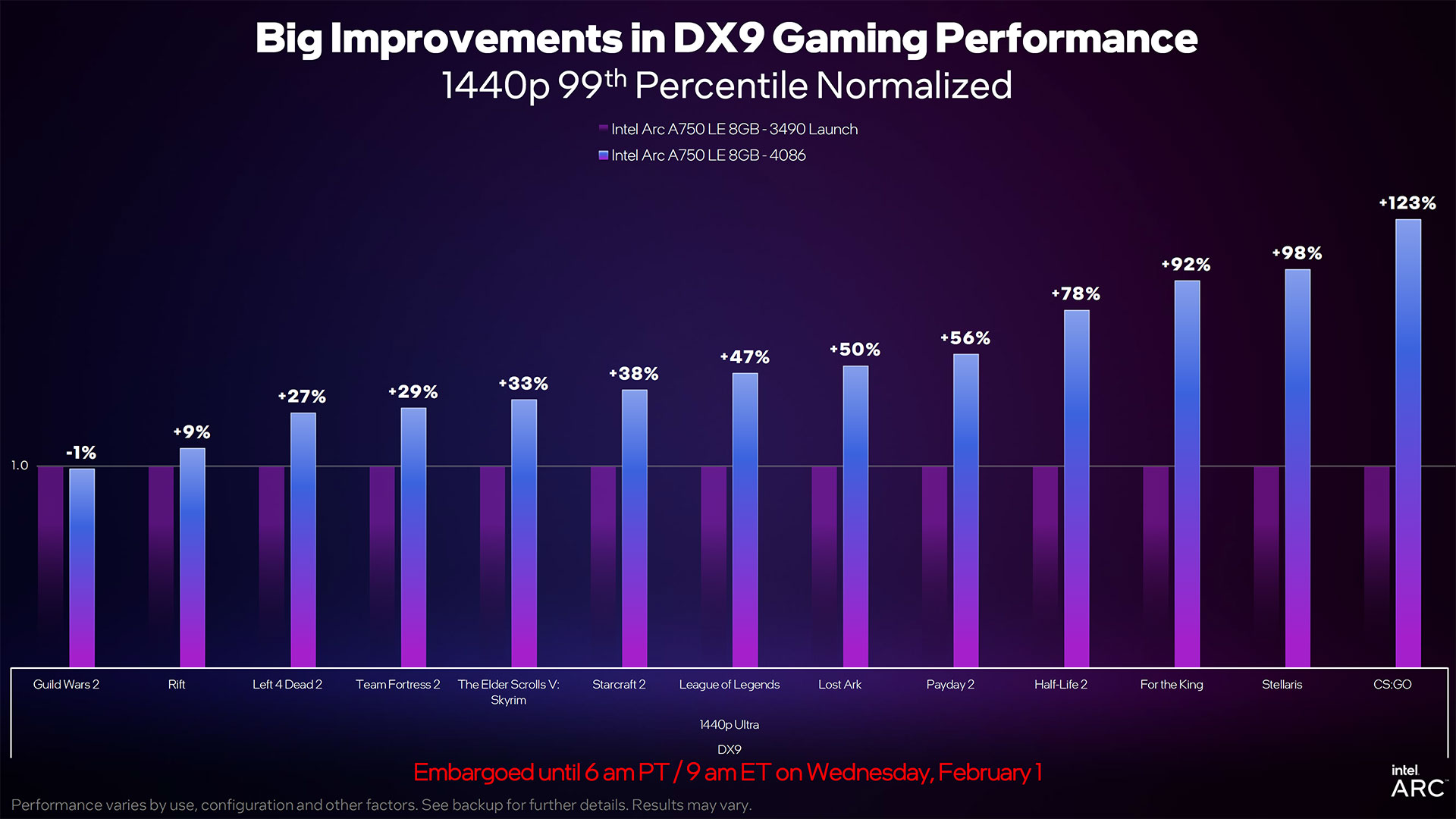

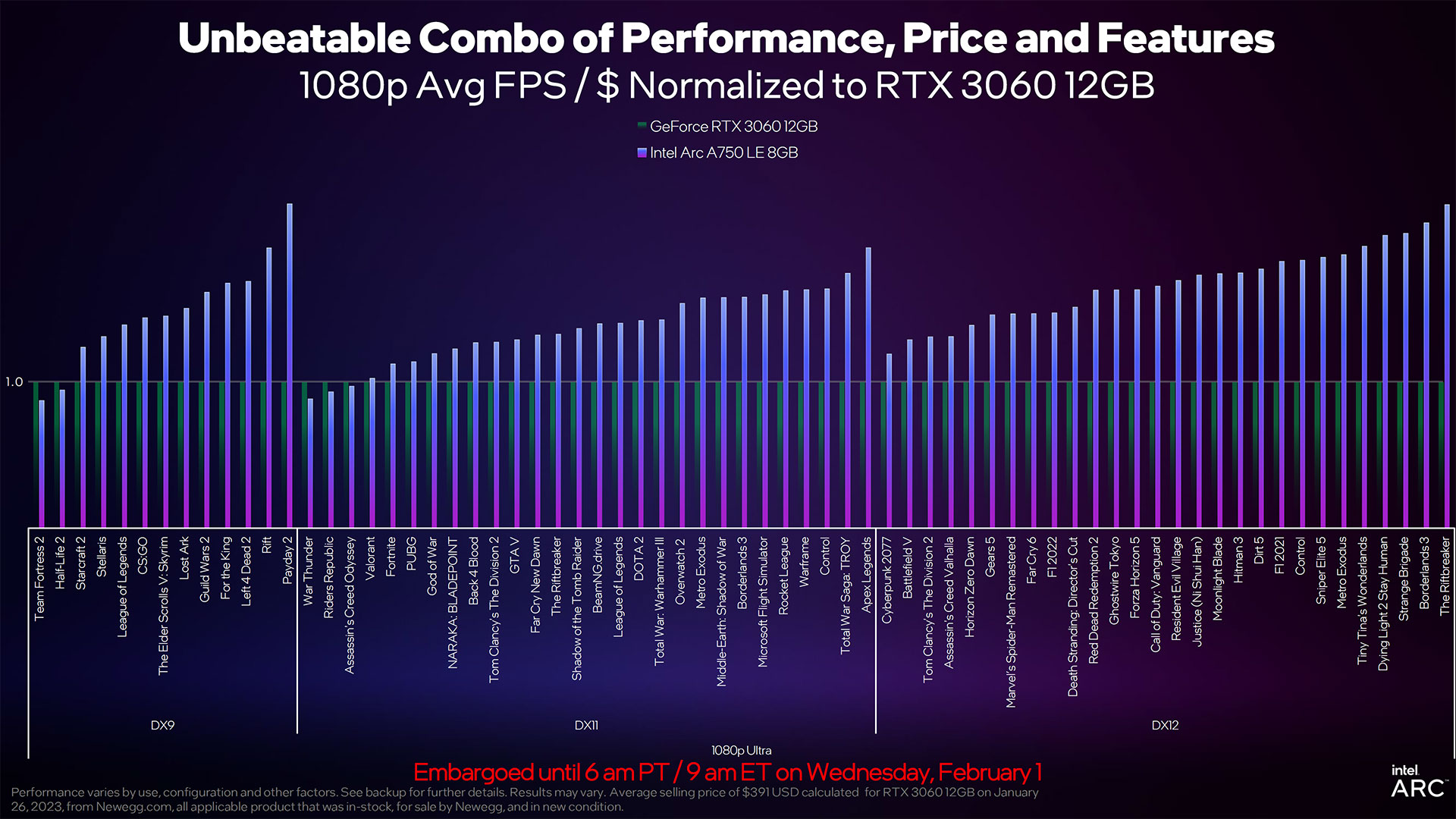

DirectX 9 performance, an area that Intel hadn't really focused on prior to the Arc launch, has been one of the biggest beneficiaries of the newer drivers. Intel claims that, across a test suite of thirteen games, average framerates at 1080p have improved by 43%, and 99th percentile fps has improved by 60%. At 1440p, the average fps increased by 35% while 99th percentile fps improved by 52%.

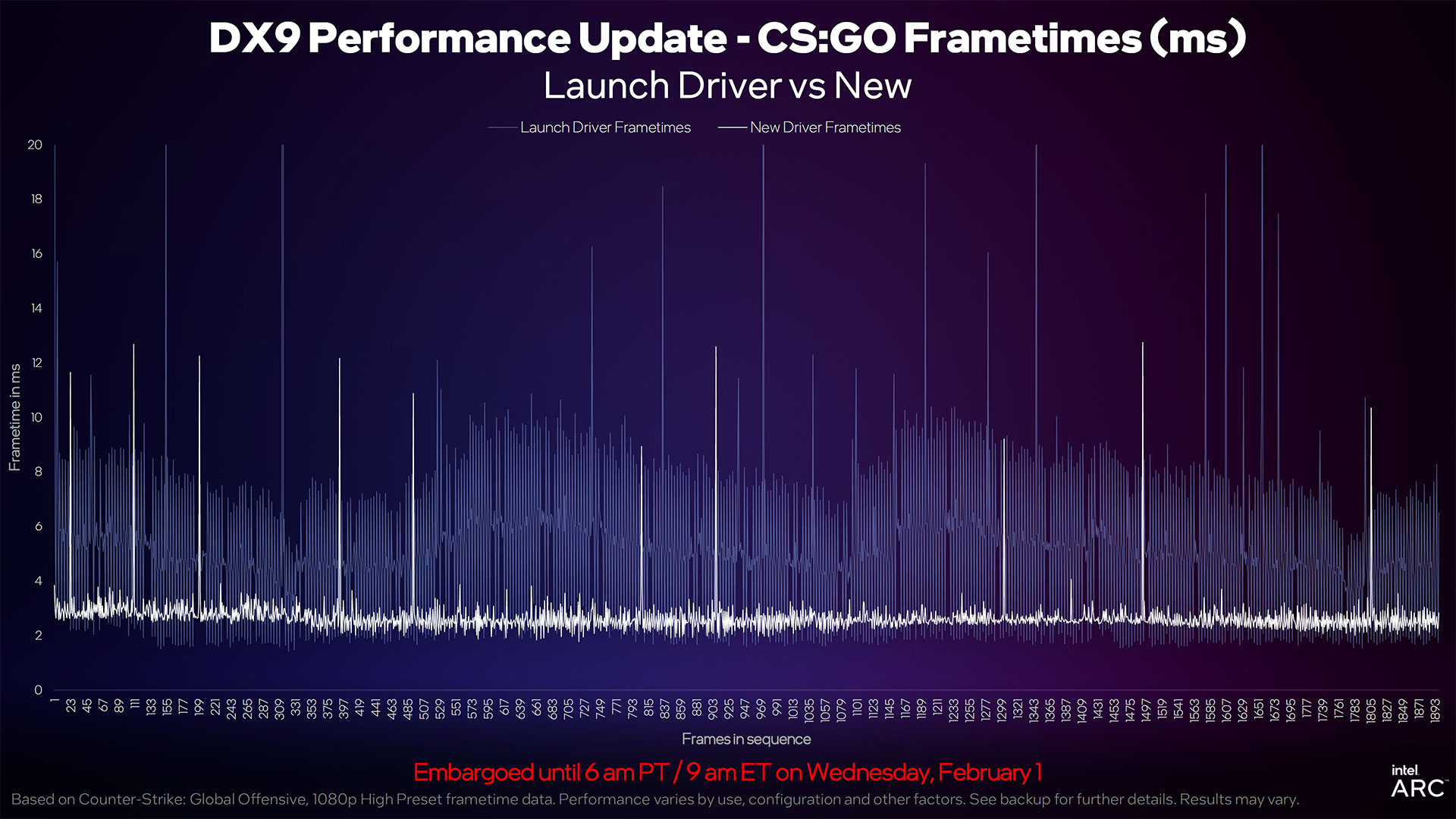

Granted, the test suite for DX9 games isn't so much about making games that ran poorly suddenly run well. The worst performing of the suite, Stellaris, looks to have performed at about 75 fps with the launch drivers, whereas it's now getting more like 130 fps. And Half-Life 2 went from just under 400 fps to about 600 fps. Even so, the overall experience has improved, and framerate consistency and frame times are much more stable.
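To put those two examples in percentage terms, here's a quick sketch. The fps values are our rough readings from Intel's chart as quoted above (Half-Life 2 is rounded up from "just under 400"), so treat the results as approximations rather than measured data.

```python
def percent_uplift(before_fps: float, after_fps: float) -> float:
    """Return the percentage increase going from before_fps to after_fps."""
    if before_fps <= 0:
        raise ValueError("before_fps must be positive")
    return (after_fps - before_fps) / before_fps * 100.0

# Approximate launch-driver vs. current-driver figures quoted in the text.
estimates = {
    "Stellaris": (75, 130),
    "Half-Life 2": (400, 600),
}

for game, (before, after) in estimates.items():
    print(f"{game}: roughly {percent_uplift(before, after):.0f}% faster")
```

By these estimates, both games gained more than the 43% suite-wide average, even though neither was struggling at launch.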

It's also interesting that Intel continues to show the Arc A750 as an RTX 3060 competitor, mostly ignoring (in charts) AMD's own RX 6600. That's probably because AMD has a much stronger value proposition, with the RX 6600 regularly selling for $225, give or take. Our testing puts it slightly below the A750 (and RTX 3060), but the price cut does make up for the higher power use on Intel's GPU.

Intel is also continuing to push its XeSS (Xe Super Sampling) AI upscaling algorithm as an alternative to Nvidia's DLSS and AMD's FSR technologies. The adoption rate isn't nearly as high, but considering how new Intel is to the dedicated GPU arena, getting 35 games to support XeSS in the first six months or so is pretty decent.

Another feature of the Arc GPUs that's more than just "pretty decent" is the video encoding and decoding support. Arc was the first modern GPU to offer full AV1 support, and the quality of the Quick Sync Video encoding goes head to head with Nvidia's best (with AMD trailing on previous generation GPUs, though we still need to look at the latest RDNA 3 chips).

But it's not all sunshine and flowers. Our own testing of Bright Memory Infinite (using the Bright Memory Infinite benchmark on Steam) and Minecraft shows there's still room for improvement. Another interesting aspect of the Arc GPUs that we've discovered is that, using a Samsung Odyssey Neo G8 32-inch monitor, the DisplayPort connection can only run at up to 4K and 120 Hz, while Nvidia's RTX 20-series and later (using DP1.4a) all support 4K and 240 Hz via Display Stream Compression.

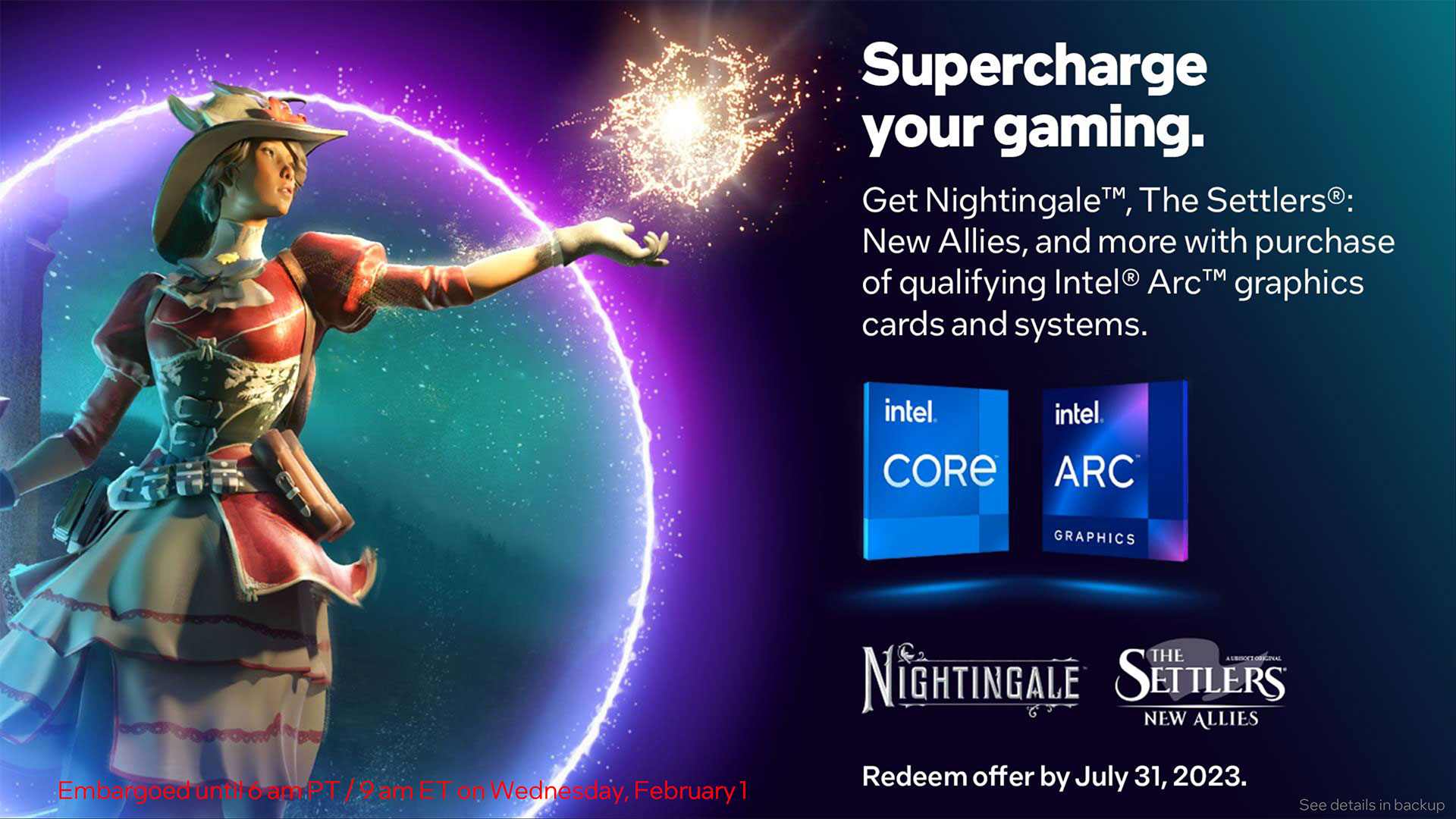

Ultimately, lowering the price of the A750 by $40 probably won't change the minds of millions of gamers, but it does make the overall package more attractive. Intel has also added Nightingale and The Settlers: New Allies to the software bundle for anyone who purchases a new Arc graphics card or system equipped with an Arc GPU. As we've noted before, Intel may not have the fastest cards on the planet, but the value proposition is certainly worth considering.

Which does bring up an interesting question: What's happened with the Arc A580? That's supposed to have the same 8GB of GDDR6 as the A750, but with 24 Xe-cores instead of 28 Xe-cores (3,072 shaders vs. 3,584 shaders on the A750). It also has a lower TBP of 175W compared to 225W and a Game clock of 1700 MHz, or at least that's the theory. With the new price on the A750, the space for an A580 continues to shrink, but maybe Intel could still release something in the $199–$219 range. We're still waiting...
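The shader counts above line up with the Xe-core counts if each Xe-core contributes 128 shaders, which is the ratio implied by both pairs of numbers in the text. A small sketch of that sanity check, with the constant and function name being our own labels:

```python
# Assumption: 128 shaders per Xe-core, the ratio implied by the figures above.
SHADERS_PER_XE_CORE = 128

def shader_count(xe_cores: int) -> int:
    """Return the shader count for a GPU with the given number of Xe-cores."""
    return xe_cores * SHADERS_PER_XE_CORE

a580_shaders = shader_count(24)  # rumored A580 configuration
a750_shaders = shader_count(28)  # A750 configuration

print(a580_shaders, a750_shaders)
print(f"A580 would have {a580_shaders / a750_shaders:.0%} of the A750's shaders")
```

That ratio only covers shader count; clock speeds and power limits would also affect where an A580 actually lands.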

The full slide deck from Intel is included below, for reference.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


zodiacfml: Exactly as I said on LTT's last Intel Arc video. They have to match the RX 6600 in price as it is as fast or faster at lower TDPs. But now A750 wins for me as it has AV1 encoding.

KyaraM: This certainly makes the card more attractive for lower-end builds! The driver updates also show that they are dedicated to working on them and improving as much as possible. Btw, Mindfactory says they sold about 65 750s, 60 Asrock and five Limited Edition cards, for what those statistics are worth. It's only a single store, sadly, and they only officially sell in Germany. Most sold was the 380 with over 200 and then the 770 with 40 is last, btw.

InvalidError (replying to KyaraM): If the A750 is down to $250, then the A580 would have to be $200 at most to make any sense. That would leave the rumored A50 about half-way between the A380 and A580.

I doubt the desktop A5xx would be economically viable without a DG2-256 refresh to save ~150sqmm per die and almost double yield per wafer.

People wanting a worthwhile sub-$200 current-gen GPU upgrade like me are likely far more numerous than AMD, Nvidia and Intel would like, just as the Steam survey suggests. I'm not surprised at all that Intel's A380 appears to be the most popular option. If my GTX1050 decided to blow up today, I would probably get an A380 too unless my friend who still does crypto-mining in winter for heating offers me a really good price for one of his GPUs.

horsemama (replying to InvalidError): Even on PCIe 3, the RX 6500 XT is a better option over the A380, and the RX 6400 if you need low profile. The software on Intel's side is just not there.

InvalidError (replying to horsemama): I wouldn't spend over $120 on a GPU with only 4GB of VRAM in 2023. And the RX6500 is a no-go for me even if I got one for free since I need at least three video outputs.

PEnns: Any company that can put a hint of fear into the pocketbooks of AMD and Nvidia is fine in my book.

I hope Intel throws serious money and effort at their GPU products. The rewards could be quite respectable.

PlaneInTheSky: Someone should test an obscure DirectX9 title to find out if these optimisations are game-specific for well-known titles, or if the overall DirectX9 support is improved across the board.

Anyway, good job driver team, those are massive improvements.

JarredWaltonGPU (replying to PlaneInTheSky): They're almost certainly game specific in most cases, though it's possible some apply to a wider range of games. Testing older and lesser-known DX9 games to look for driver improvements is unfortunately a rather time-consuming process with not much reward. I'll have to see if there's something quick I could do with a game not in the charts, though.

InvalidError (replying to PlaneInTheSky): To me, it doesn't seem like it would make much sense to focus on game-specific optimizations until the low-hanging generic fruits are sorted out, so I'd expect a good chunk of gains to apply across the board.

For example, Intel is touting a major driver bottleneck breakthrough in its newest update with its presentation graphs showing massive improvements in frame time consistency with variance reduced by 50+% across most of the board. That can be expected to have substantial repercussions across all titles that hit those bottlenecks and that may even include titles running legacy API translation.

RichardtST: Now we're talking! I'll get one for the office and see if it runs Minecraft Java/OpenGL. If it does, kudos. If not, shame. Saw some charts showing that Radeon has finally fixed their MC problems, so am dying to test that too...