Intel SC23 Update: 1-Trillion Parameter AI Model Running on Aurora Supercomputer, Granite Rapids Benchmarks

Intel shares the latest info on its HPC and AI initiatives.

At Supercomputing 2023 (SC23), Intel provided a slew of updates on its latest HPC and AI initiatives, including new information about its fifth-gen Emerald Rapids and future Granite Rapids Xeon CPUs, Gaudi accelerators, new Max Series GPU benchmarks against Nvidia's H100 GPUs, and the company's work on the 'genAI' 1-trillion-parameter AI model running on the Aurora supercomputer.

Upon completion, Aurora is widely expected to take the crown as the world's fastest supercomputer, with two exaflops (EFlop/s) of performance. However, Intel hasn't yet shared details about Aurora's formal Top500 benchmark submission; the company says it's leaving that announcement to the Department of Energy and Argonne National Laboratory. If tradition holds, the Top500 organization will release those hotly anticipated results later today. In the meantime, Intel's update includes plenty of new tidbits to chew over.

Aurora Supercomputer Benchmarks

At full capacity, Intel's Aurora supercomputer will wield 21,248 HBM2E-equipped Sapphire Rapids Xeon Max CPUs and more than 60,000 Data Center GPU Max Series GPUs, making it the largest-known GPU deployment in the world. As mentioned, Intel isn't releasing its Top500 benchmark results yet, but the company did share performance results for a few workloads run on a partial complement of the system.

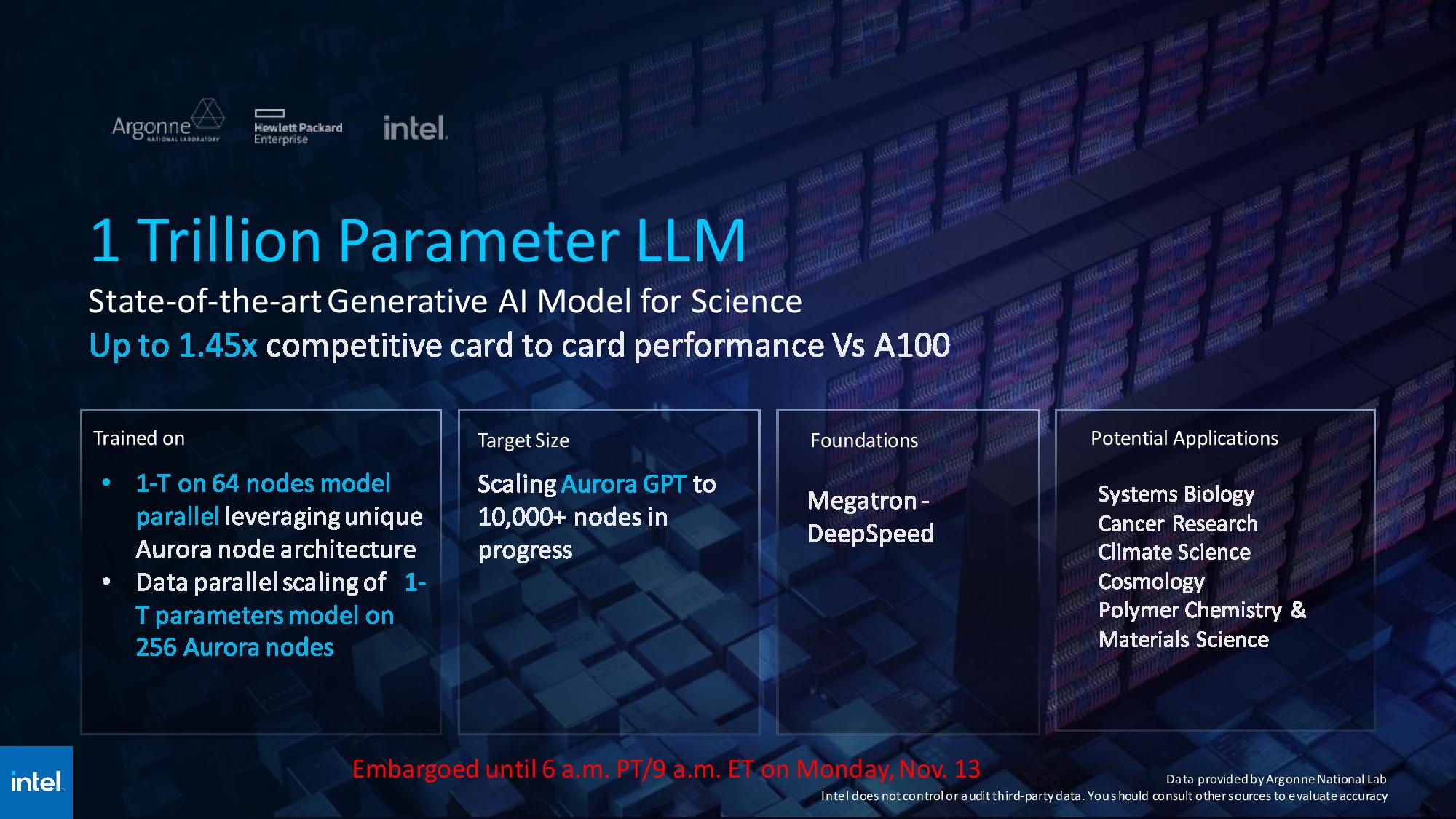

Intel and Argonne National Laboratory have tested Aurora with the genAI project, a trillion-parameter, GPT-3-based foundational LLM for science. Thanks to the copious memory on the Data Center GPU Max 'Ponte Vecchio' GPUs, Aurora can run the massive model on only 64 nodes. Argonne has run four instances of the model in parallel across 256 total nodes, and the workload will eventually scale to 10,000 nodes once it has been tuned.

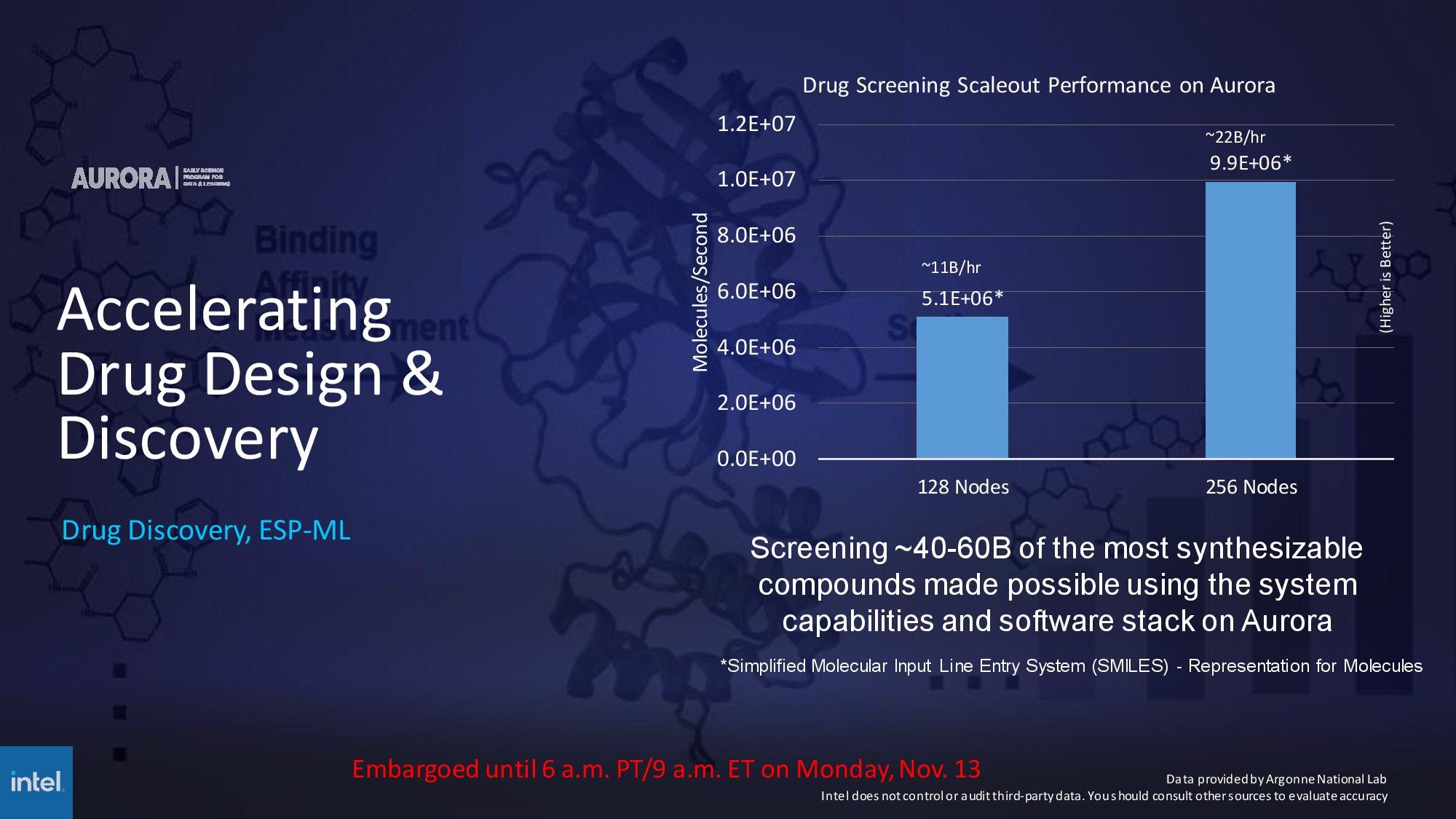

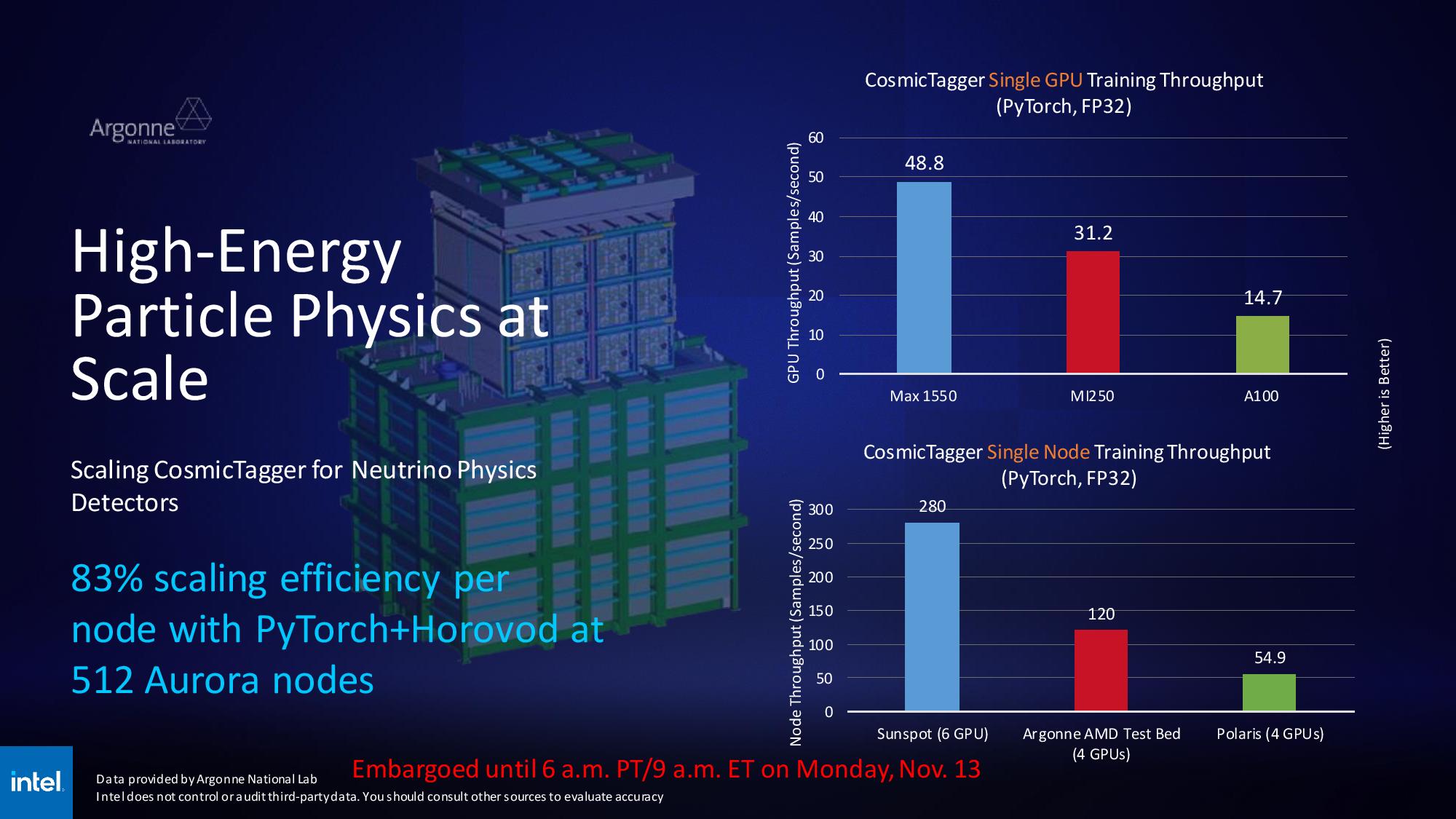

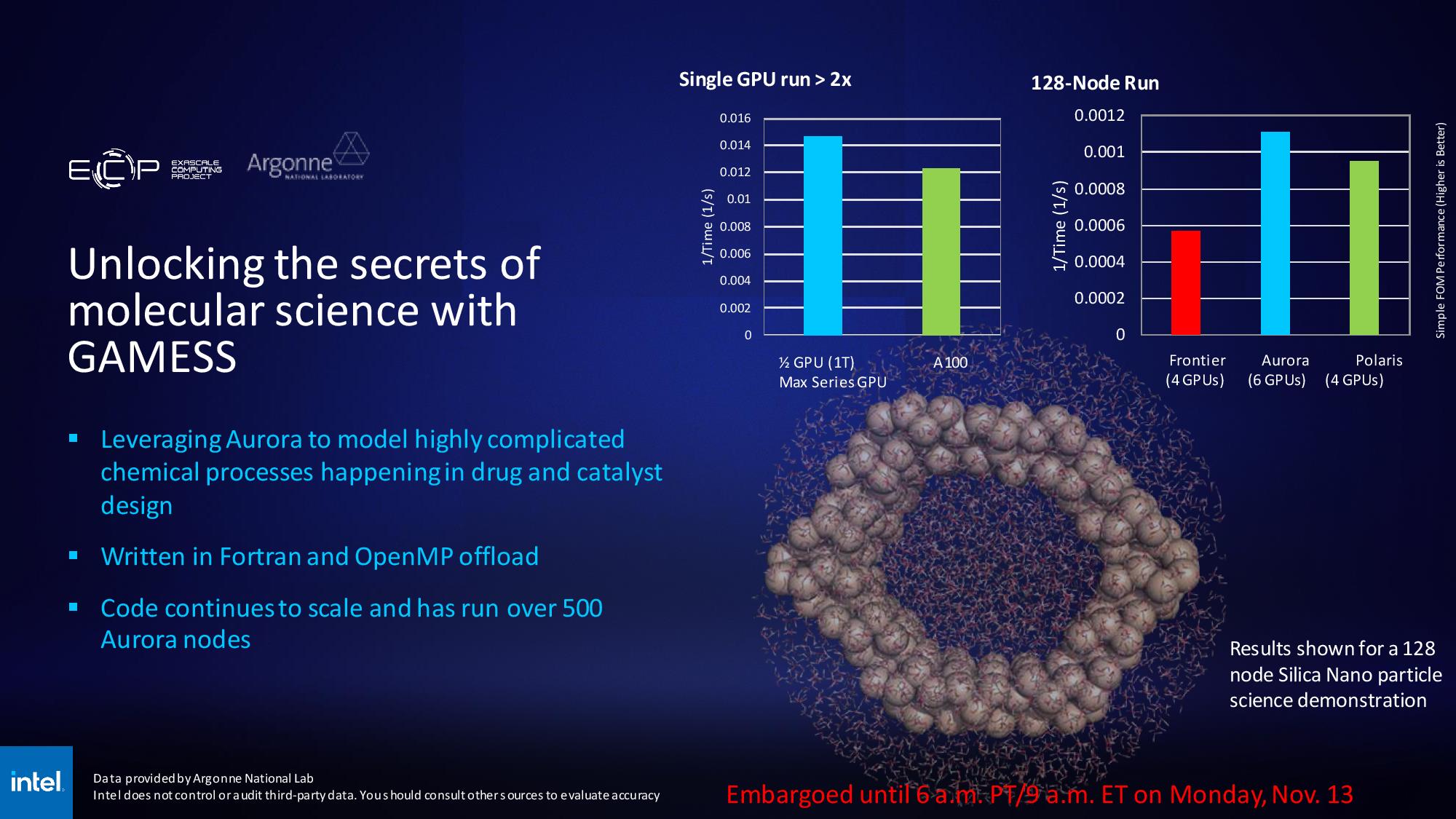

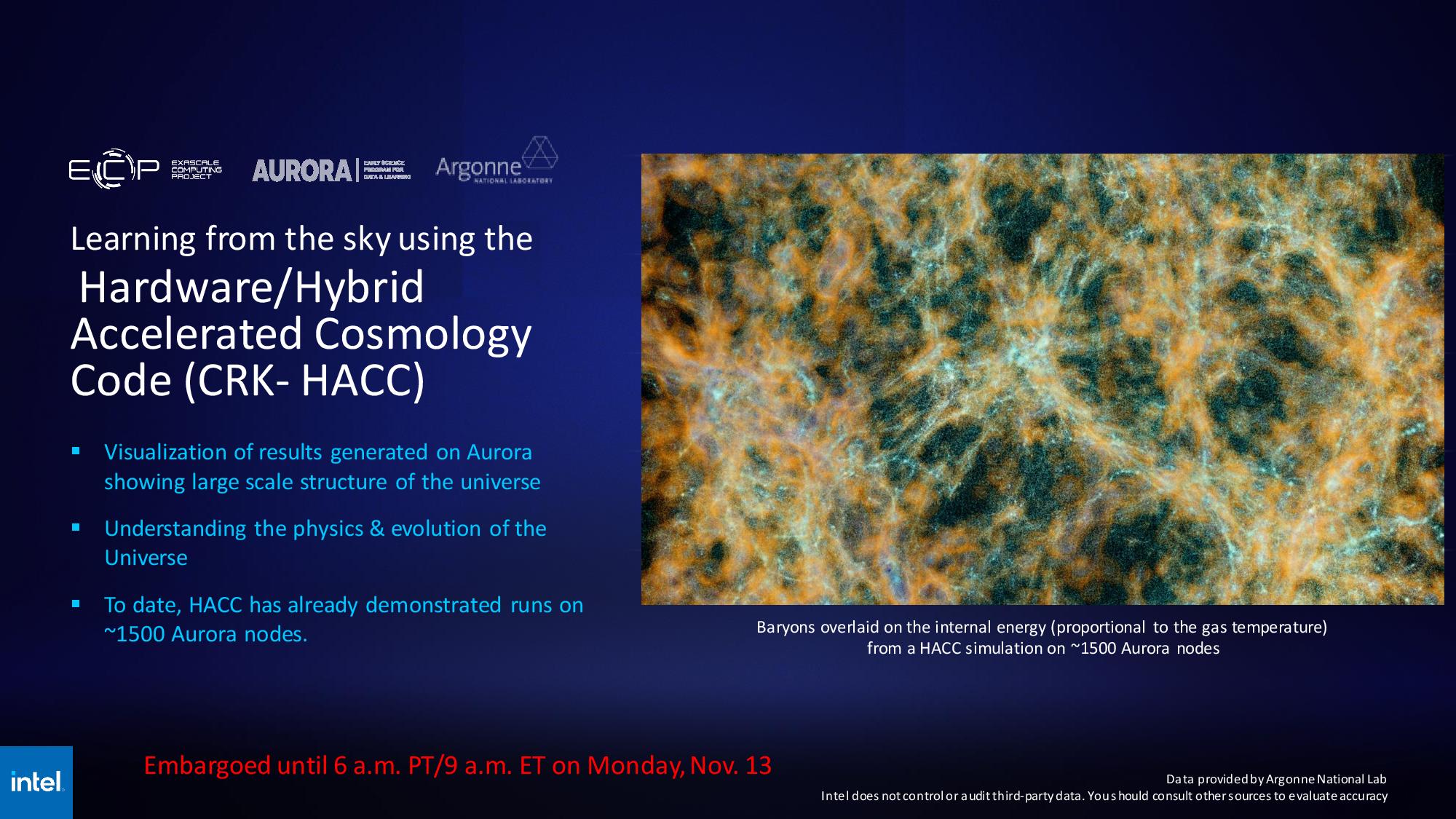

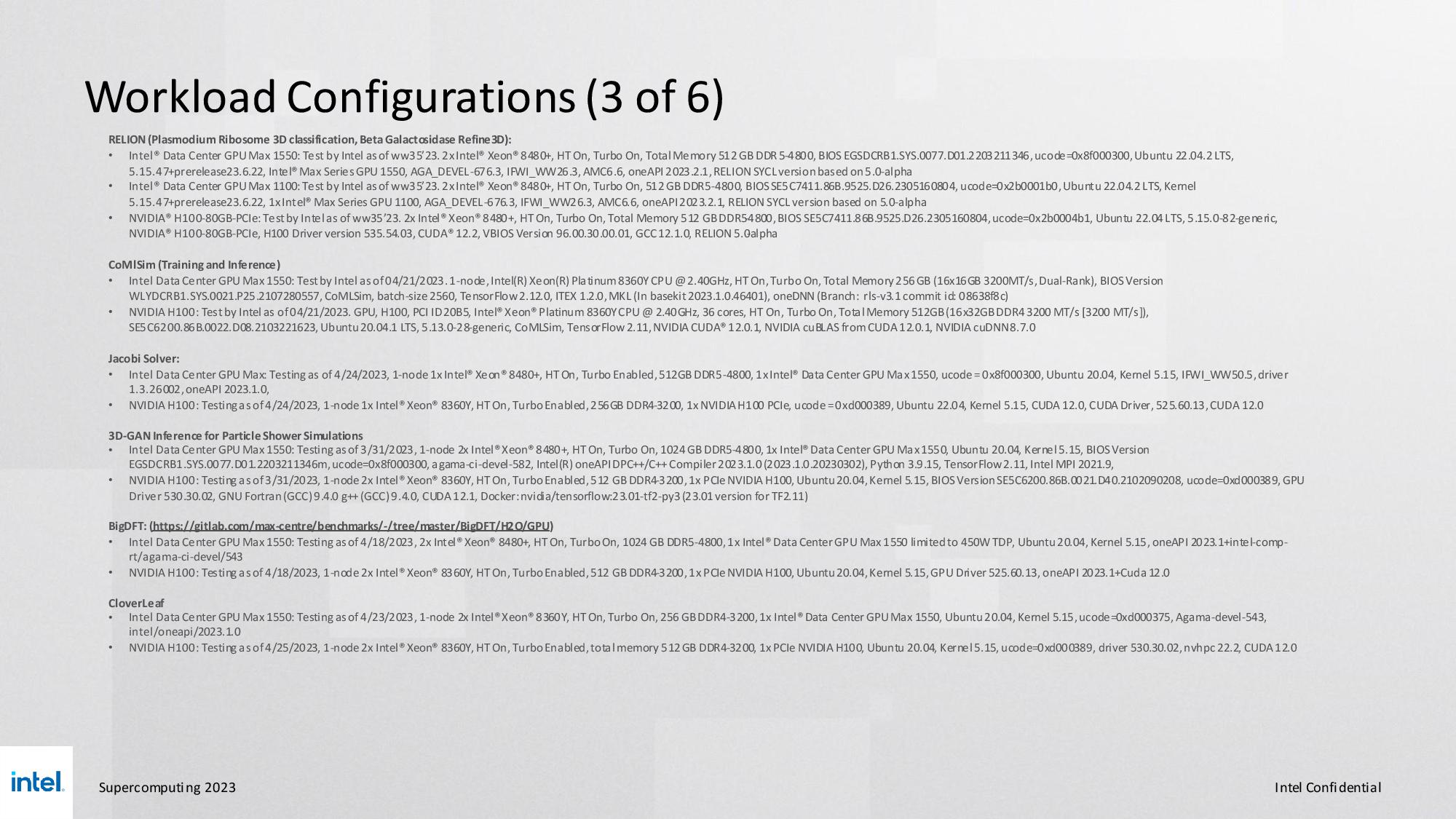

Intel also highlighted strong scaling from 128 to 256 nodes in ESP-ML, a drug-screening AI inference application, but Argonne's benchmarks against competing GPUs are far more interesting: Intel claims a single Max 1550 GPU delivers a 56% speedup over AMD's MI250 accelerator and a 2.3X advantage over Nvidia's previous-gen A100 in CosmicTagger training with PyTorch/FP32. The results also indicate strong scaling, with a six-GPU node in Sunspot, Aurora's test and development system, exhibiting 83% performance scaling. As a result, the Sunspot node delivered more than twice the performance of a four-GPU AMD test system with unspecified GPUs, and five times the performance of a four-GPU node from Argonne's aging Polaris supercomputer.
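For readers who want to sanity-check the scaling claim, scaling efficiency is commonly defined as the measured speedup divided by the number of devices. The Python sketch below assumes Intel's 83% figure follows that definition (Intel's test notes may compute it differently), in which case the six-GPU Sunspot node works out to roughly 4.98 times the throughput of a single Max 1550. The function names are illustrative and don't come from any Intel or Argonne tool.

```python
def parallel_efficiency(speedup: float, n_devices: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfectly linear)."""
    if n_devices <= 0:
        raise ValueError("n_devices must be positive")
    return speedup / n_devices


def implied_speedup(efficiency: float, n_devices: int) -> float:
    """Speedup over a single device implied by a given scaling efficiency."""
    if n_devices <= 0:
        raise ValueError("n_devices must be positive")
    return efficiency * n_devices


# Assumption: "83% performance scaling" on the six-GPU Sunspot node means
# 83% of ideal linear scaling relative to one Max 1550.
node_speedup = implied_speedup(0.83, 6)
print(f"six-GPU node is roughly {node_speedup:.2f}x a single Max 1550")
```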

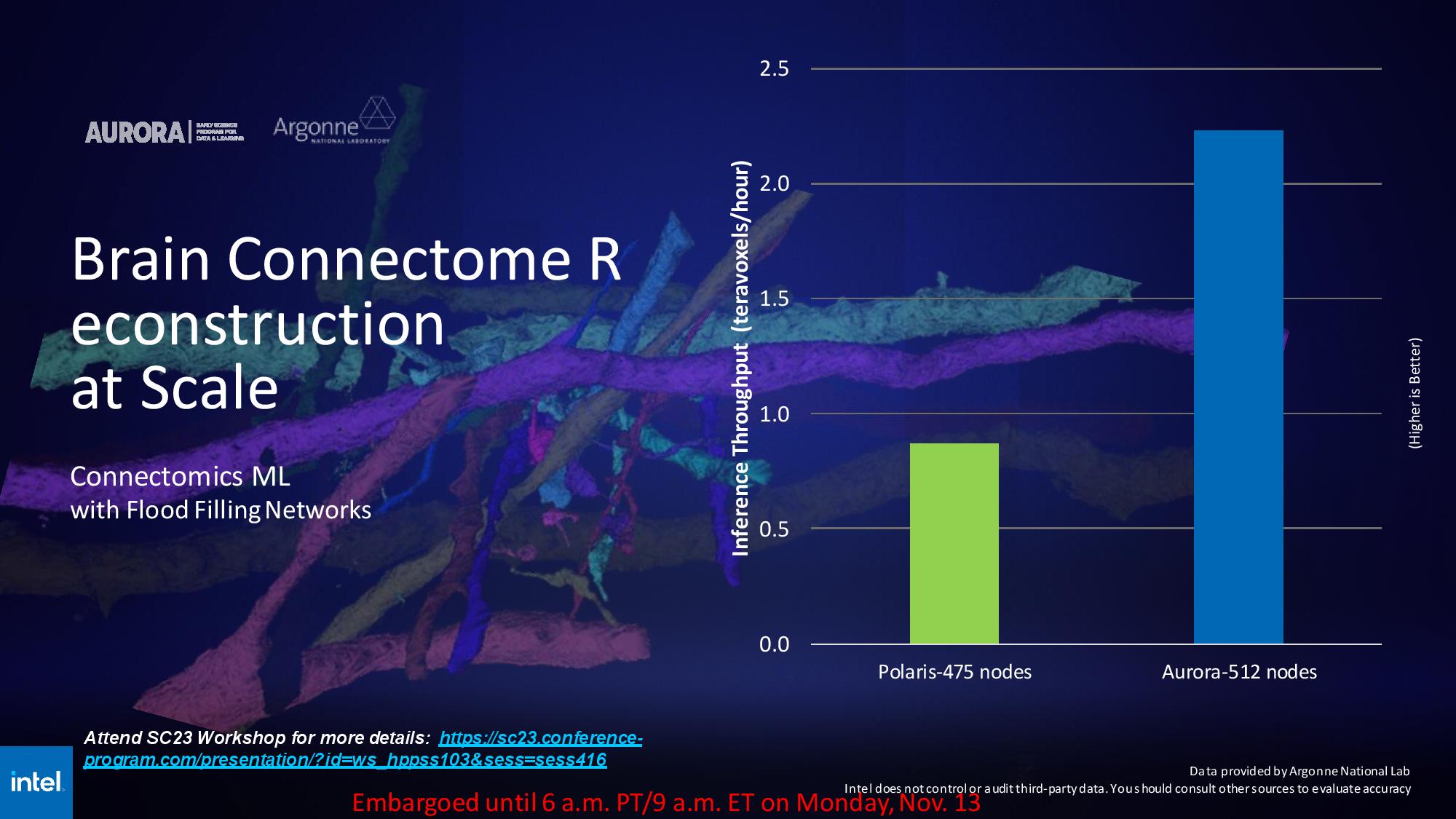

Argonne also tested 512 Aurora nodes against a 475-node Polaris in a brain connectome workload (Connectomics ML) that models a mouse's brain, highlighting a 2X advantage over Polaris.

Fifth-Gen 'Emerald Rapids' Xeon, Granite Rapids Benchmark Projections

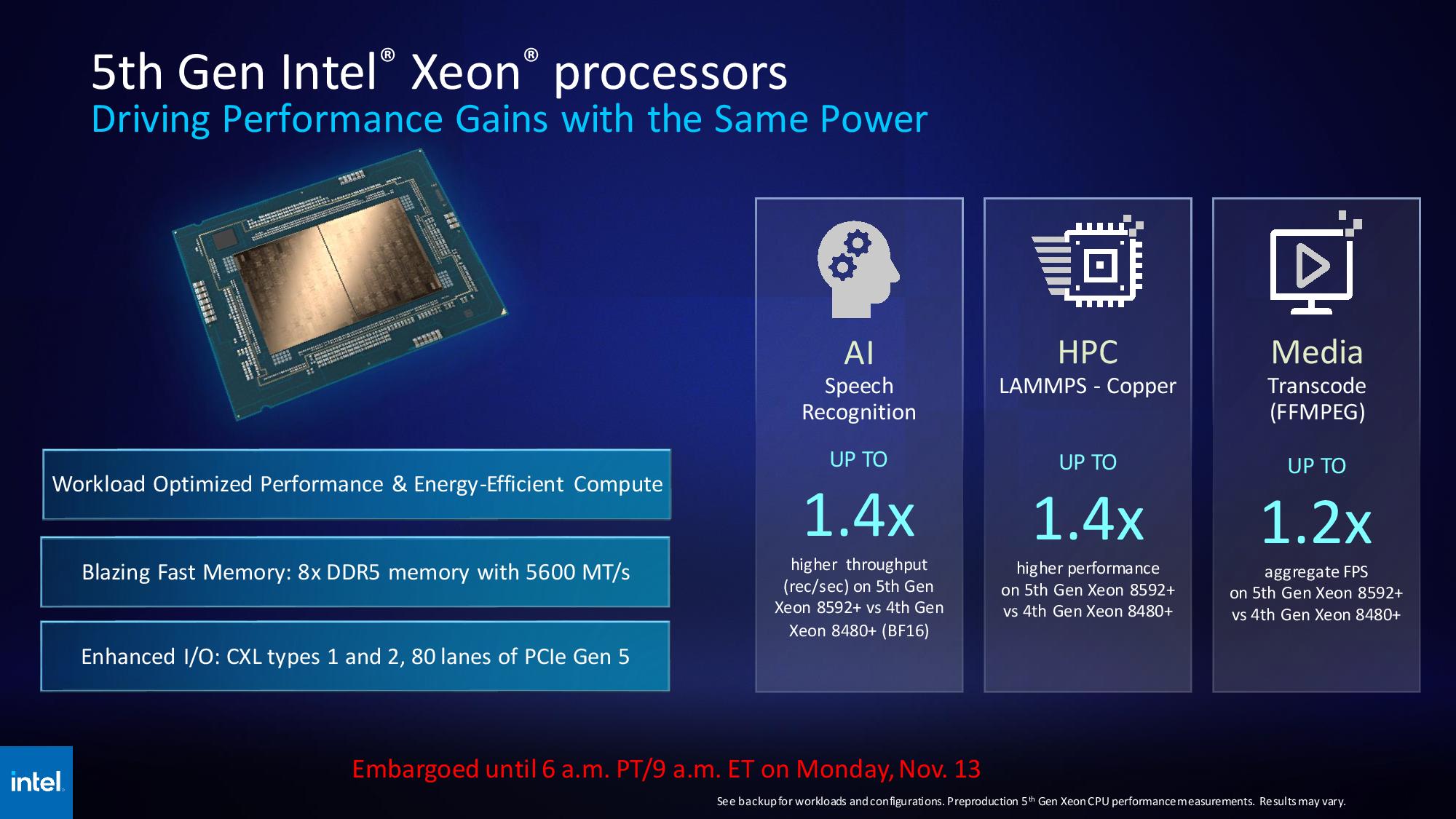

Intel's data center roadmap remains on track, with the fifth-gen Emerald Rapids chips slated for launch on December 14. Intel teased benchmarks of the flagship 64-core Xeon 8592+ against its predecessor, the 56-core fourth-gen Xeon 8480+. As always, take vendor-provided benchmarks with a grain of salt (you can find the test notes in the last album of this article).

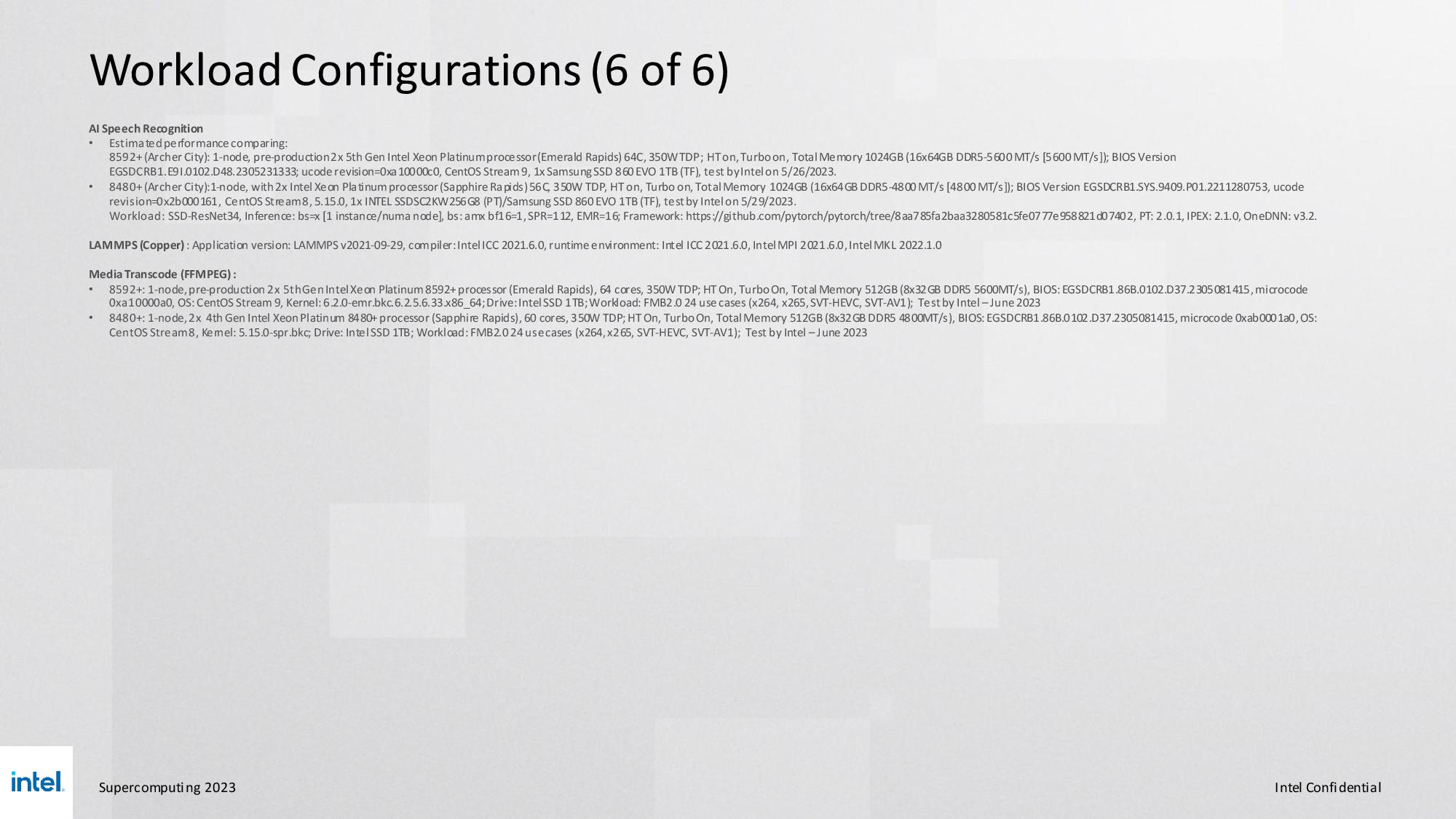

Aided by its higher core count, the 8592+ notches a 1.4X gain in AI speech recognition and the LAMMPS benchmark, along with a 1.2X gain in the FFmpeg media transcode workload.
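A quick back-of-envelope check shows how much of that uplift the extra cores alone could explain. The sketch below divides each claimed speedup by the 64/56 core-count ratio (about 1.14X), assuming idealized linear scaling with cores. Under that simplification, the remaining per-core gain is about 1.225X for the speech recognition and LAMMPS results and about 1.05X for FFmpeg. Clock speed, cache, and memory differences between the two chips all feed into that residual, so treat it as a rough indicator rather than a measured per-core result.

```python
def per_core_gain(total_speedup: float, cores_new: int, cores_old: int) -> float:
    """Speedup left over after dividing out the core-count ratio.

    Assumption: throughput scales linearly with core count. That is an
    idealization; real workloads rarely scale perfectly with cores.
    """
    return total_speedup / (cores_new / cores_old)


# Core counts from the article: Xeon 8592+ (64 cores) vs. Xeon 8480+ (56 cores).
NEW_CORES, OLD_CORES = 64, 56
print(f"core-count ratio: {NEW_CORES / OLD_CORES:.3f}x")

# Intel's claimed gen-on-gen speedups, as reported in the article.
claims = [("AI speech recognition / LAMMPS", 1.4), ("FFmpeg transcode", 1.2)]
for workload, speedup in claims:
    gain = per_core_gain(speedup, NEW_CORES, OLD_CORES)
    print(f"{workload}: {gain:.3f}x per core")
```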

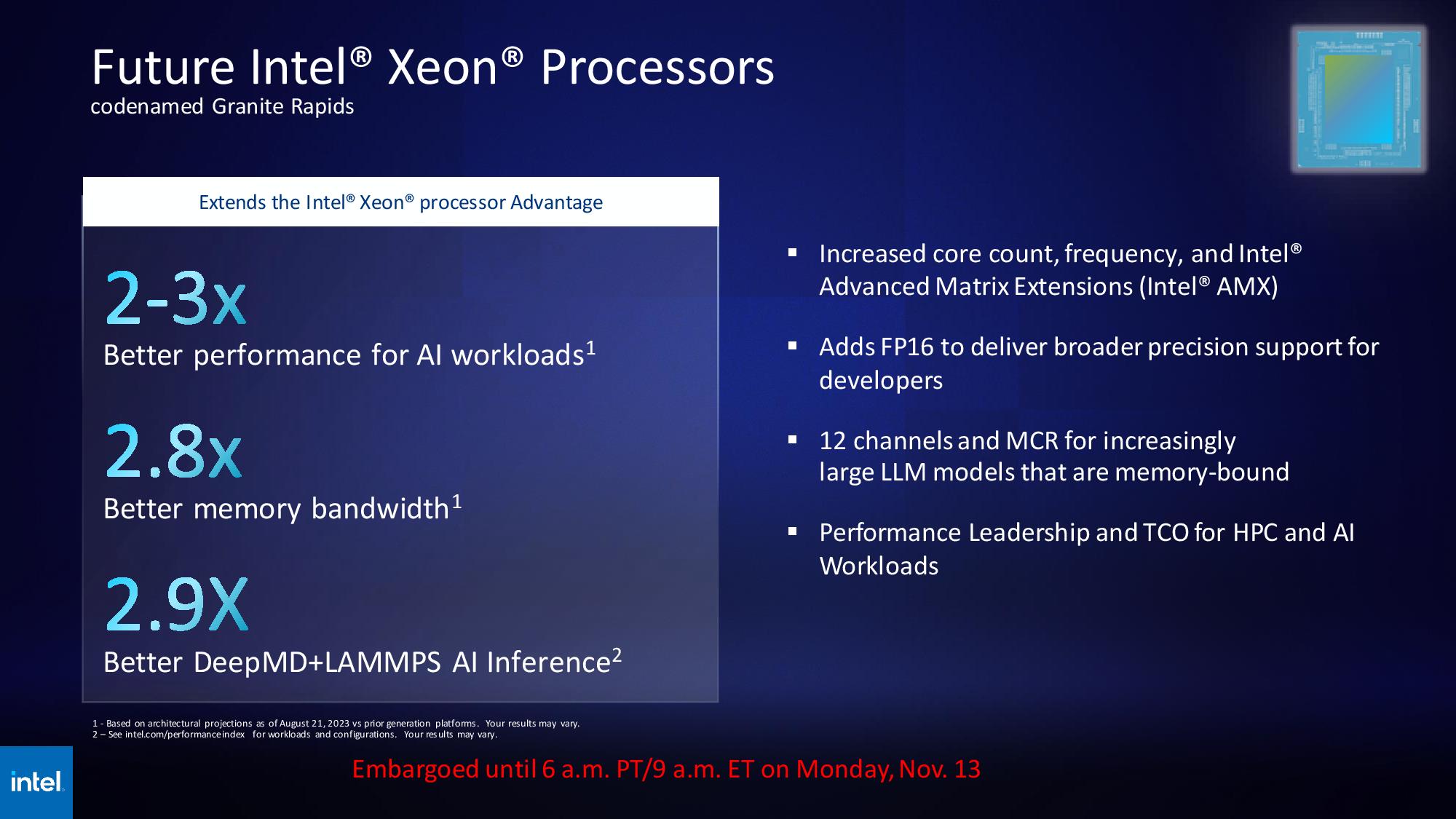

Intel also provided performance projections for its future Granite Rapids Xeons, which will be fabbed on the 'Intel 3' node. These chips will add more cores, higher frequencies, and hardware acceleration for FP16, and will support 12 memory channels along with the new MCR DIMMs, which substantially boost memory throughput. All told, Intel claims a 2-3X improvement in AI workloads, a 2.8X boost in memory throughput, and a 2.9X improvement in the DeepMD+LAMMPS AI inference workload.

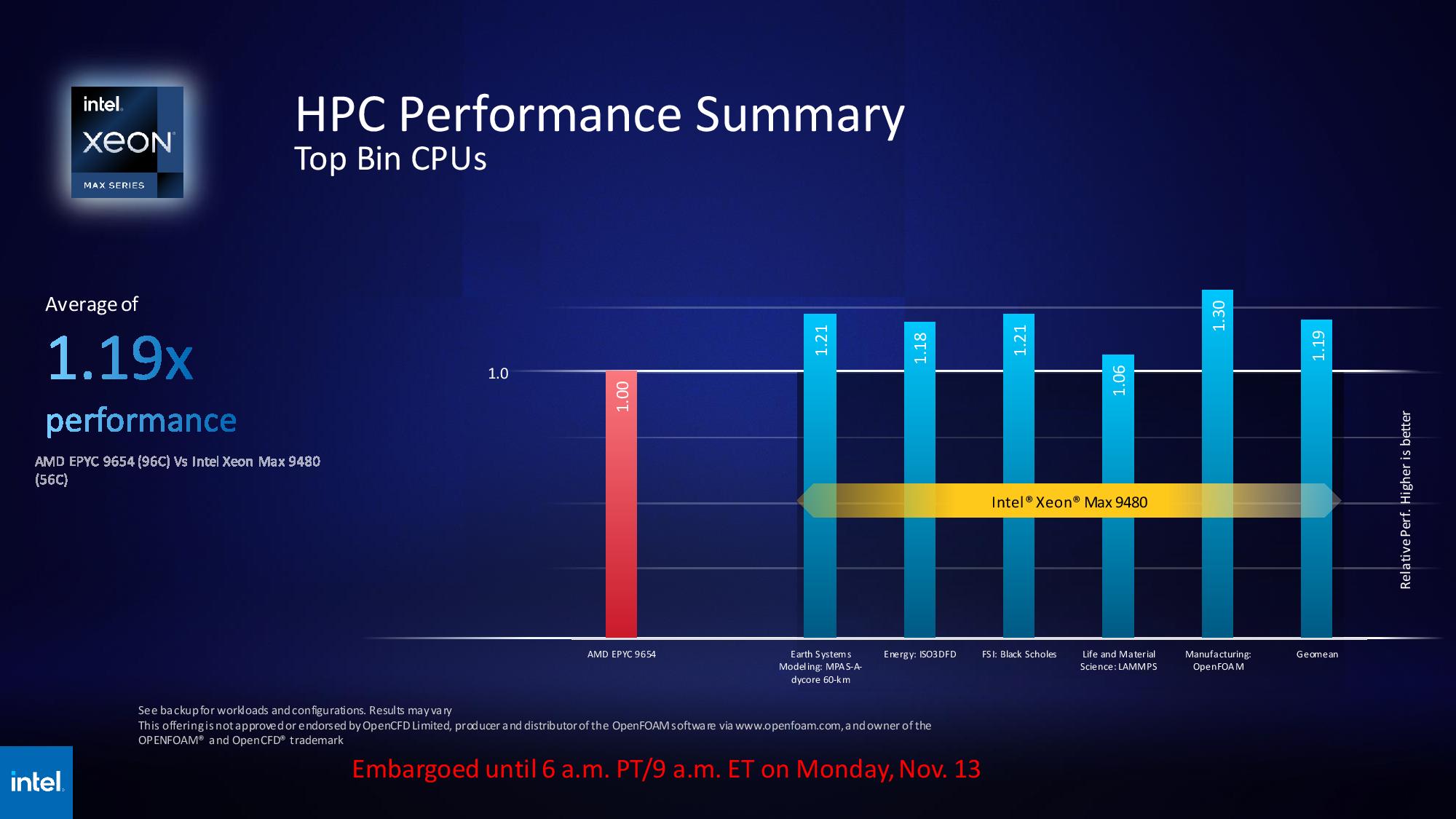

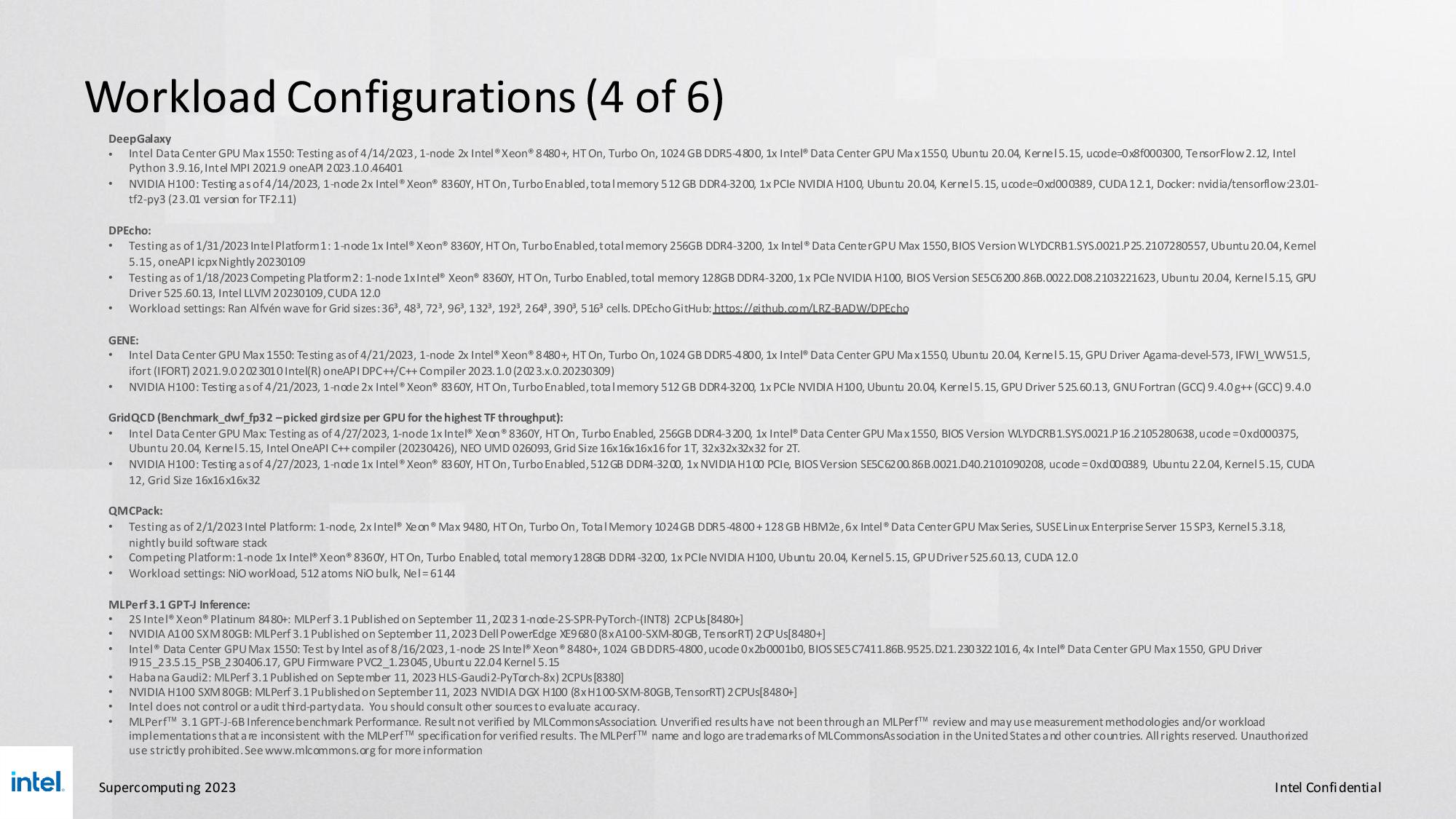

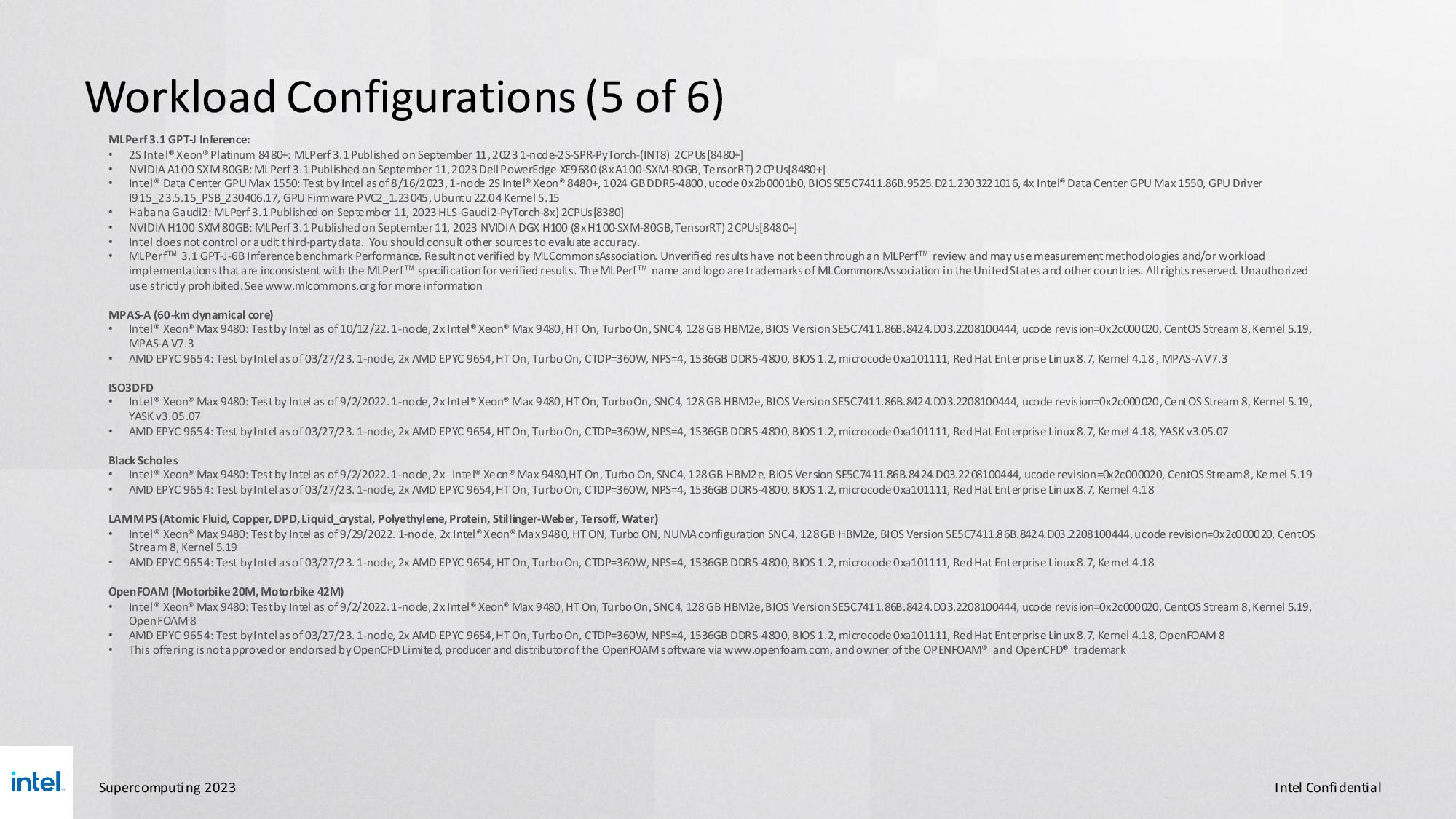

Intel's HBM2E-equipped Xeon Max CPUs are shipping now. Intel pitted its 56-core Xeon Max 9480, equipped with 64GB of on-package HBM2E memory, against AMD's 96-core EPYC 9654. Intel chose workloads from the chip's target market, memory-constrained applications, which naturally favor the Xeon. Overall, Intel claims an average 1.2X advantage over the EPYC contender across simulation, energy, materials science, manufacturing, and financial services workloads.

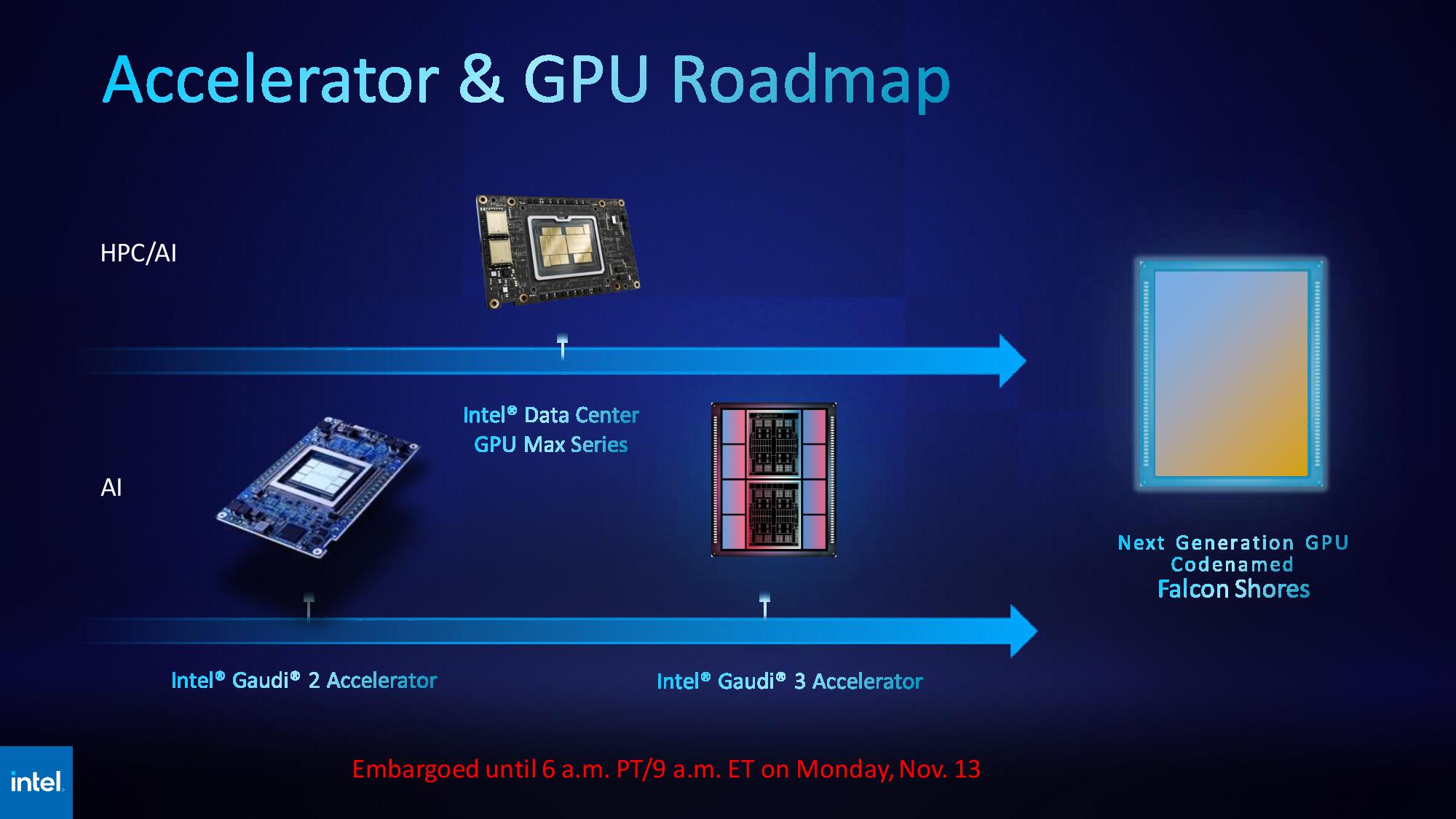

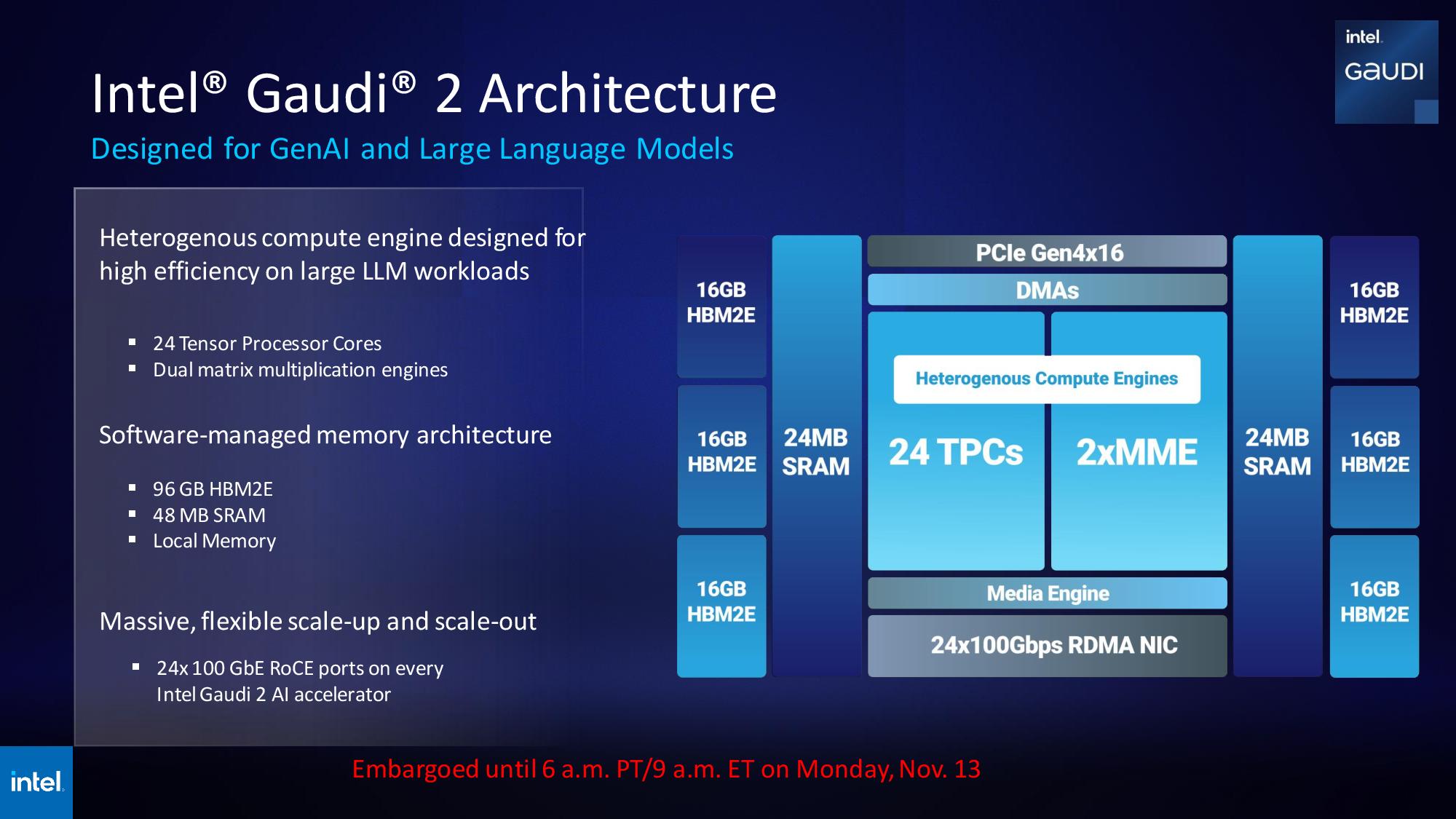

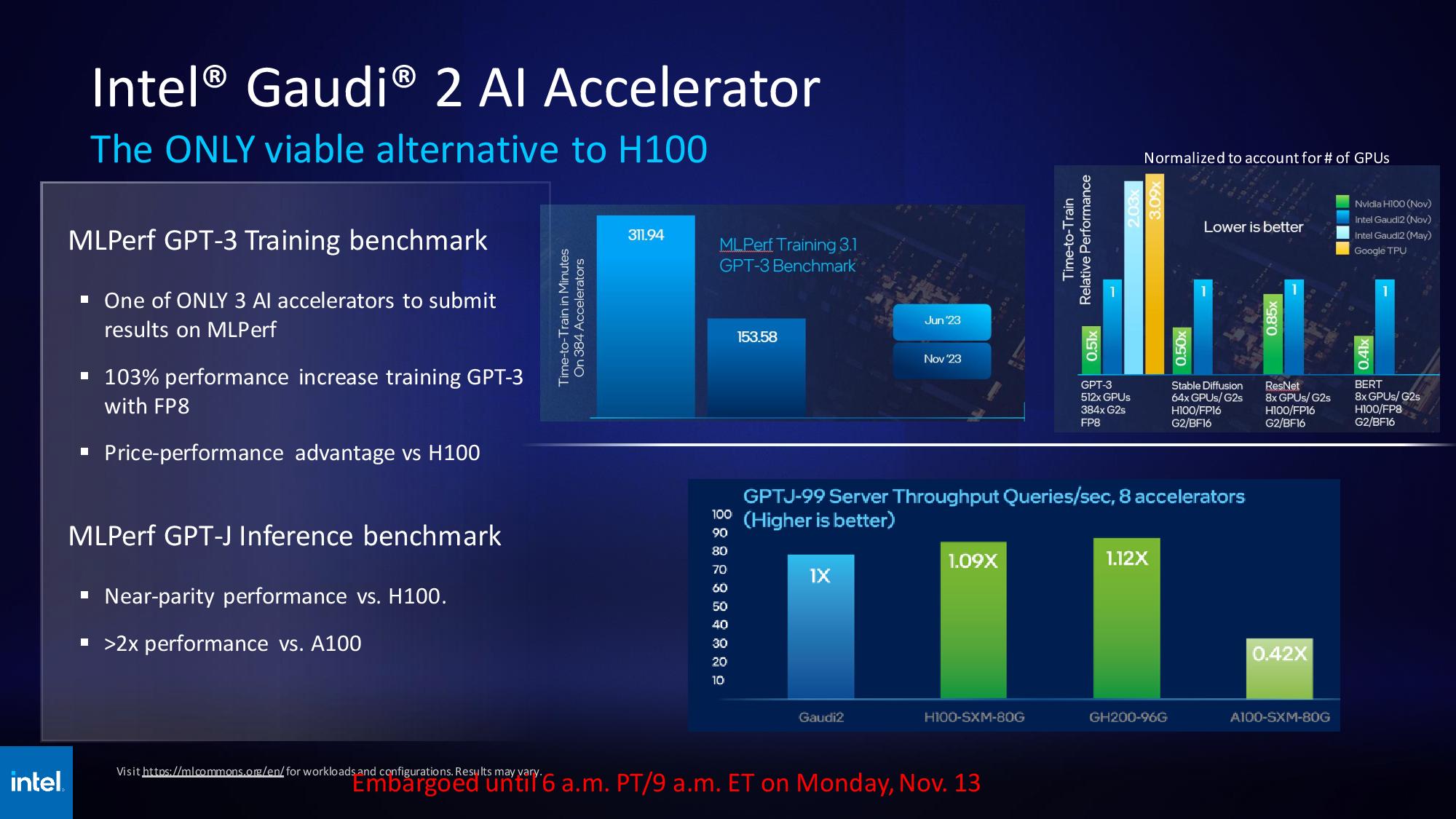

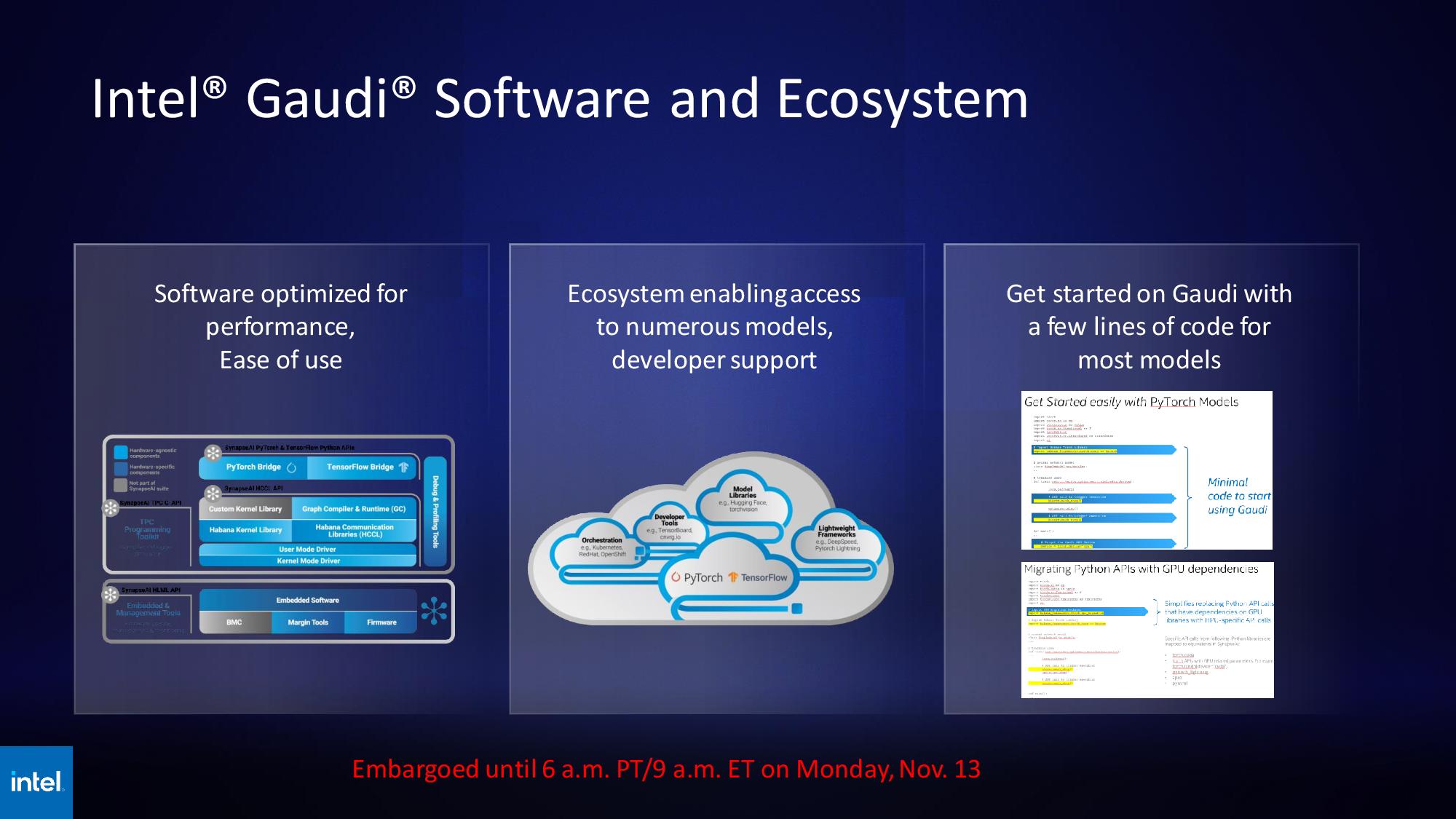

Gaudi 3 and Falcon Shores

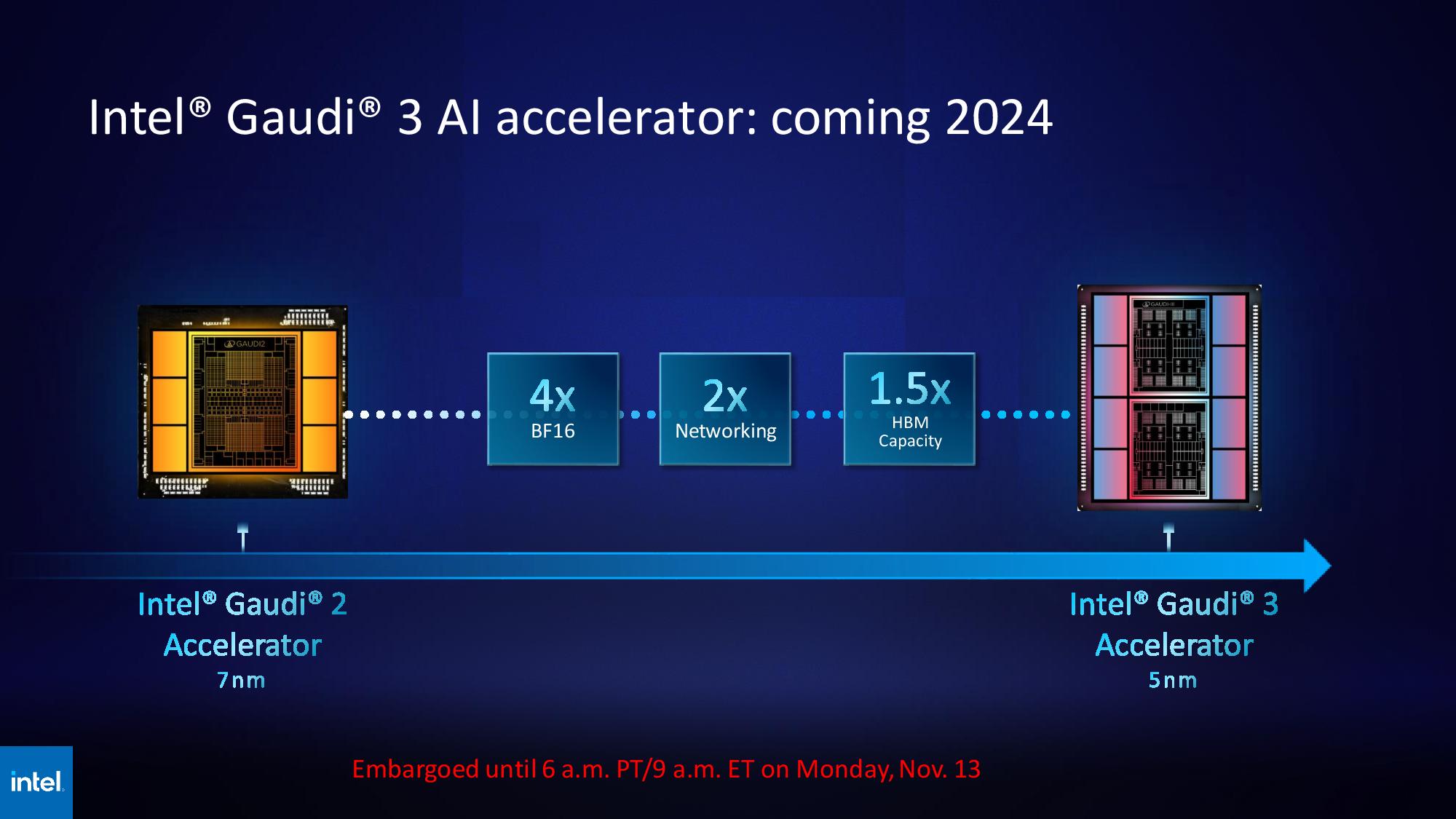

Intel shared a few details about the forthcoming Gaudi 3, which will be the last Gaudi accelerator before the company merges its Gaudi and GPU lineups into a single product: Falcon Shores. The 5nm Gaudi 3 will deliver four times Gaudi 2's performance in BF16 workloads, twice the networking (Gaudi 2 has 24 integrated 100 GbE RoCE NICs), and 1.5 times more HBM capacity (Gaudi 2 has 96 GB of HBM2E). As the graphic shows, Gaudi 3 moves to a tile-based design with two compute clusters, as opposed to Gaudi 2's single-die design.

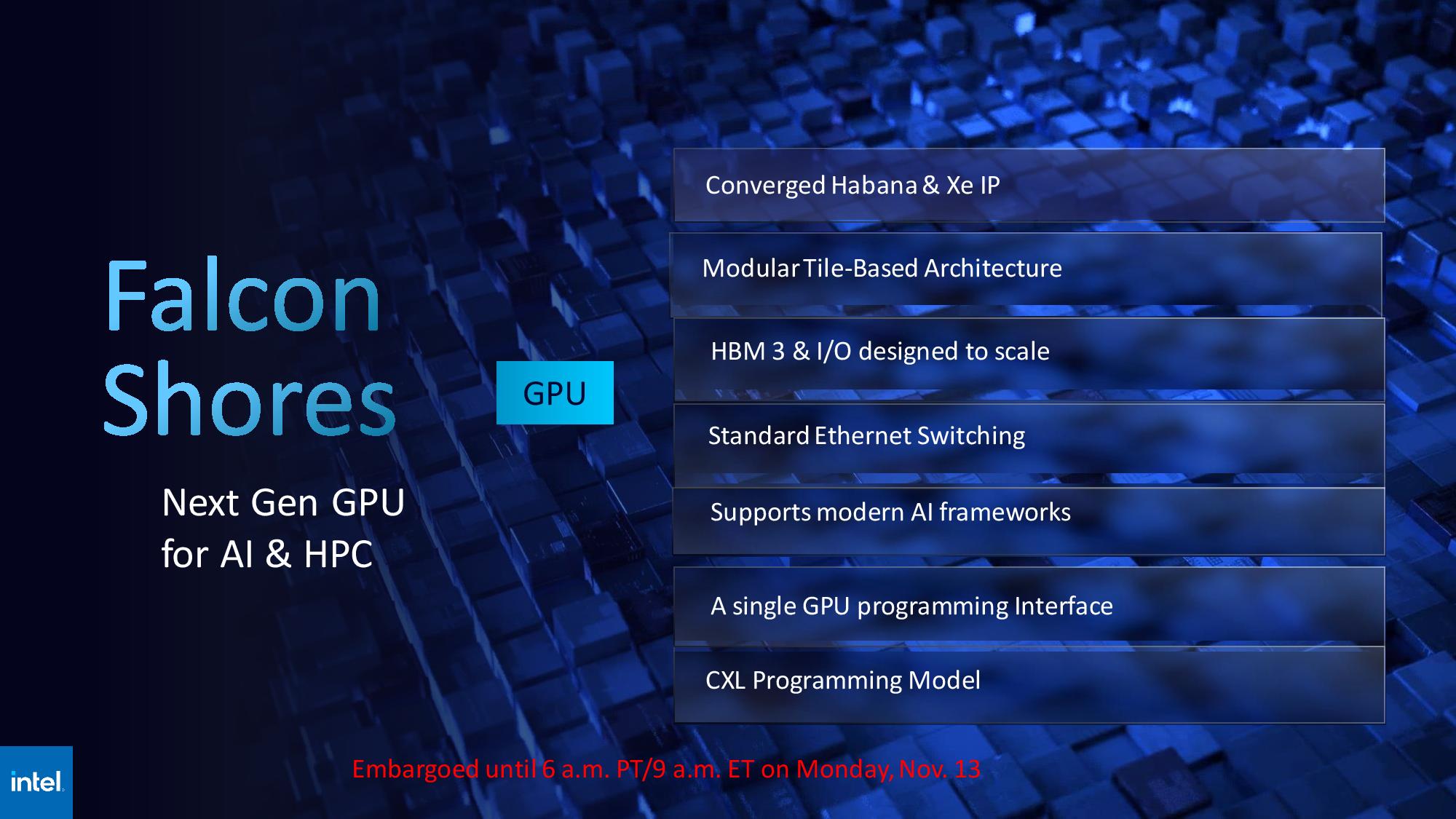

Intel has been slow to provide details on its future Falcon Shores GPU, but it reiterated that despite merging aspects of the Habana Gaudi IP and Xe GPU IP, the tile-based Falcon Shores will look and function as a single GPU through the oneAPI programming interface. Falcon Shores will employ HBM3 memory and Ethernet switching and will support the CXL programming model. Additionally, applications tuned for the Gaudi accelerators and Data Center GPU Max GPUs will be forward-compatible with Falcon Shores, giving customers code continuity across the two very different lineups.

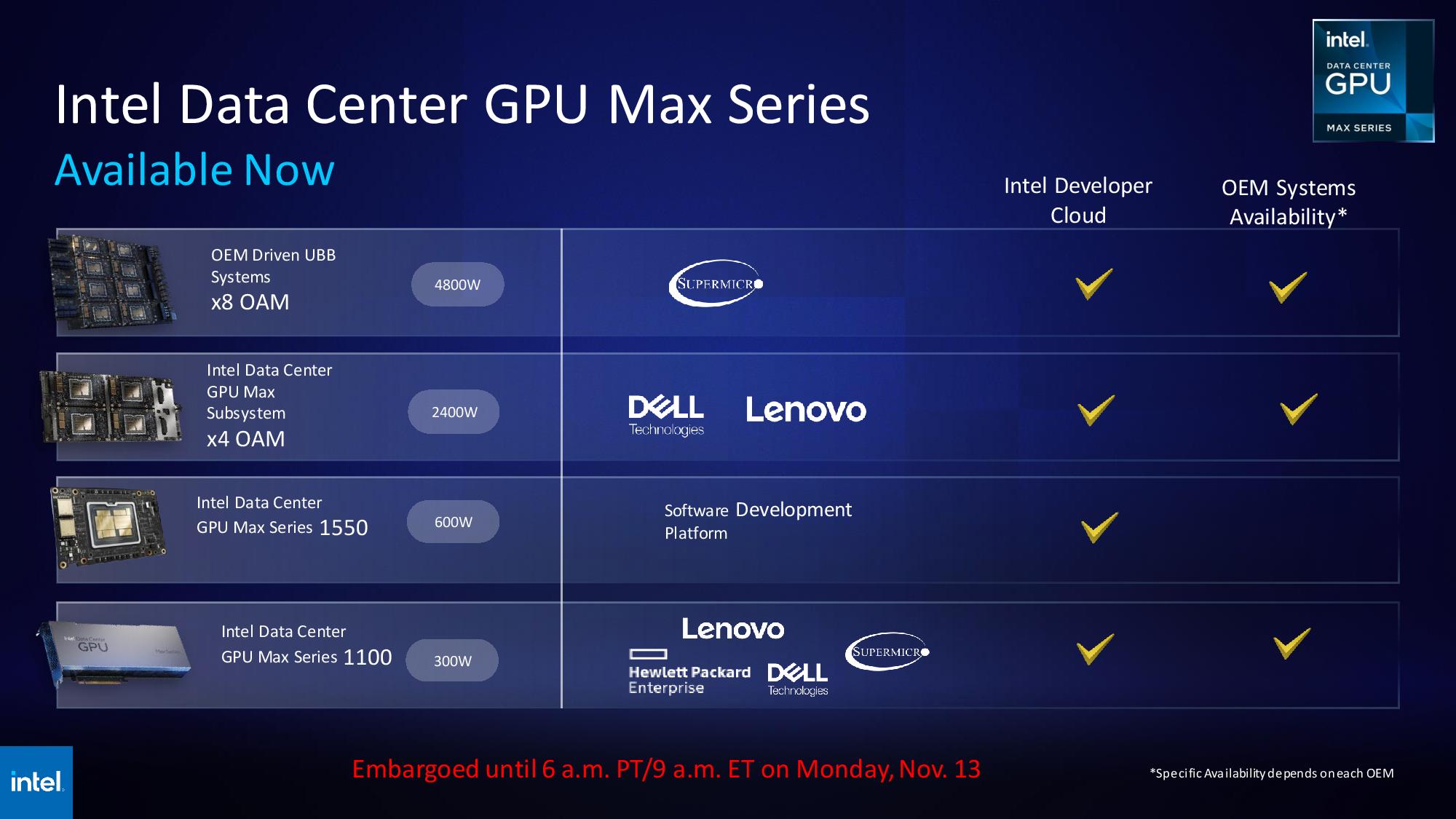

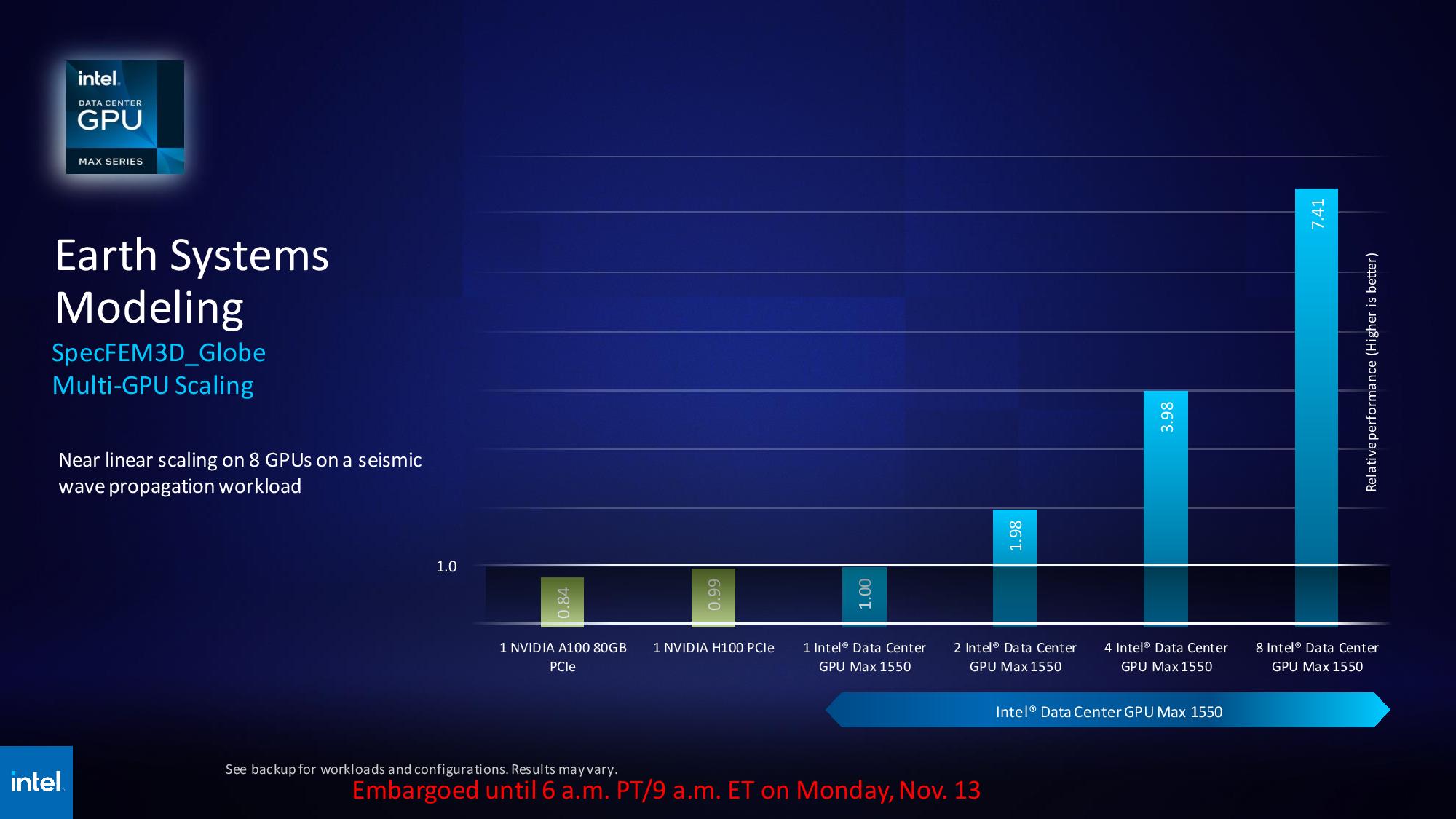

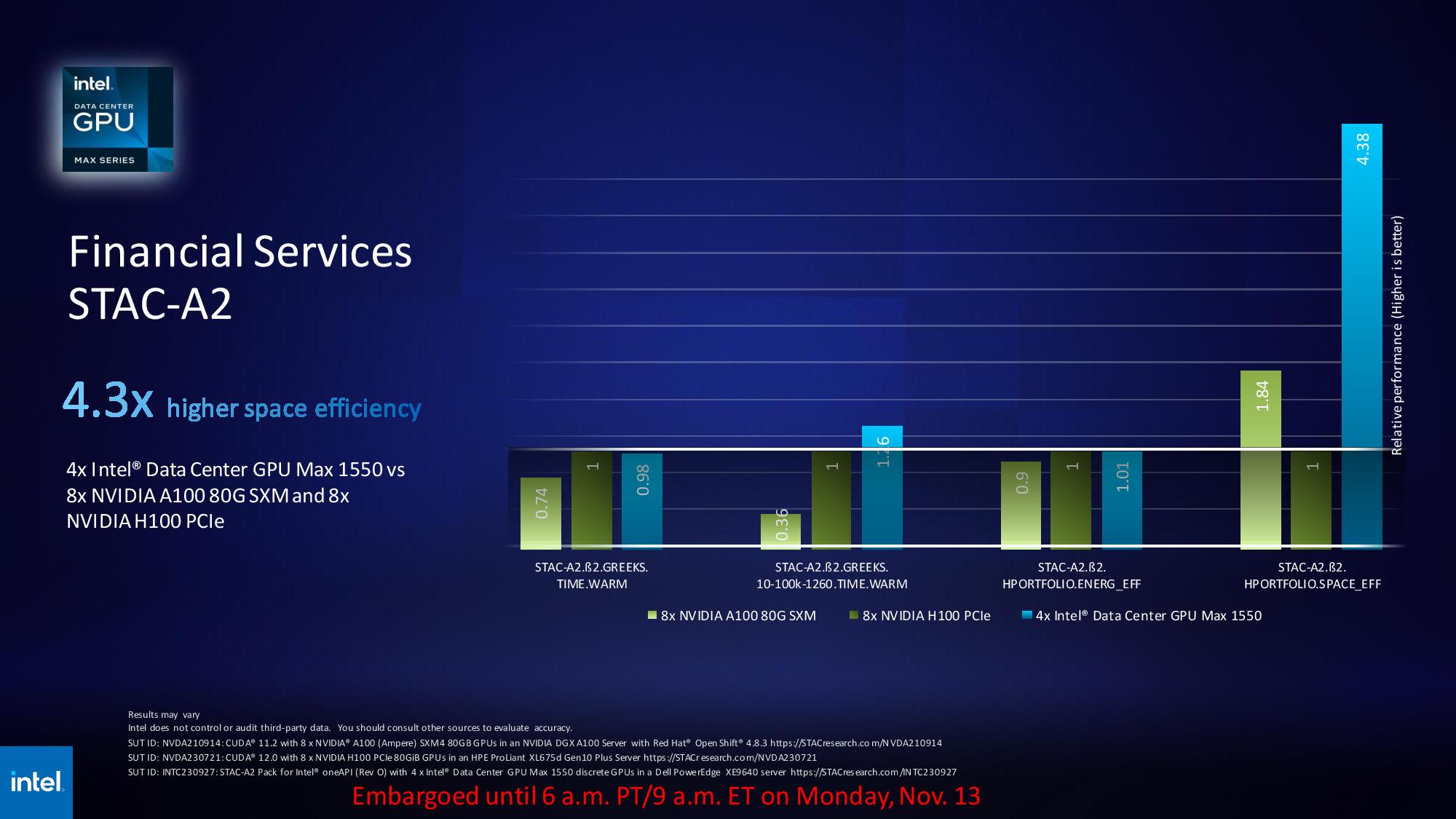

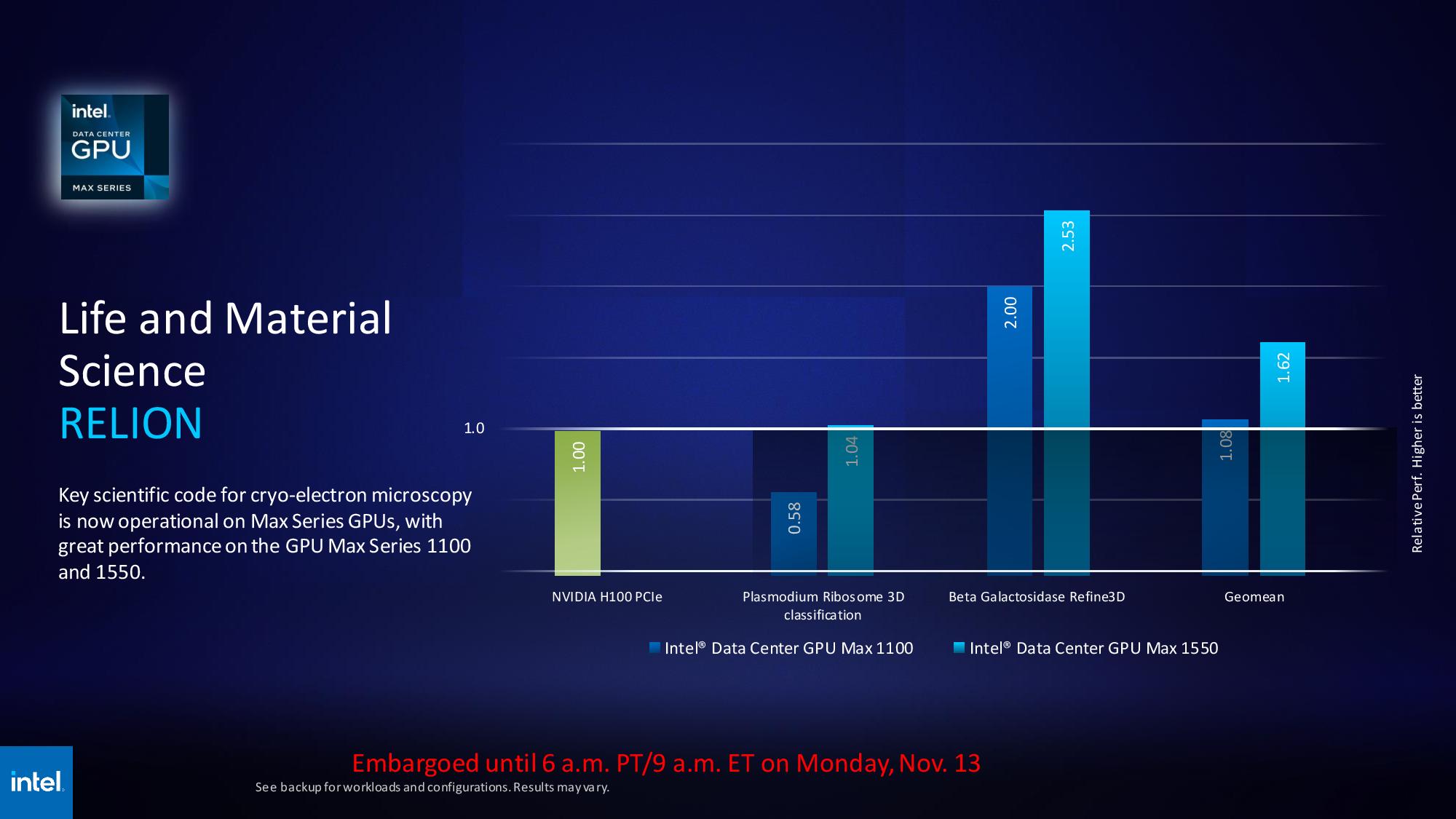

Intel Data Center GPU Max Series

Intel's Data Center GPU Max Series is now shipping to customers: Supermicro offers systems with eight OAM-form-factor GPUs, while Dell and Lenovo offer servers with four OAM GPUs. The GPU Max Series 1100 PCIe cards are also widely available from multiple vendors.

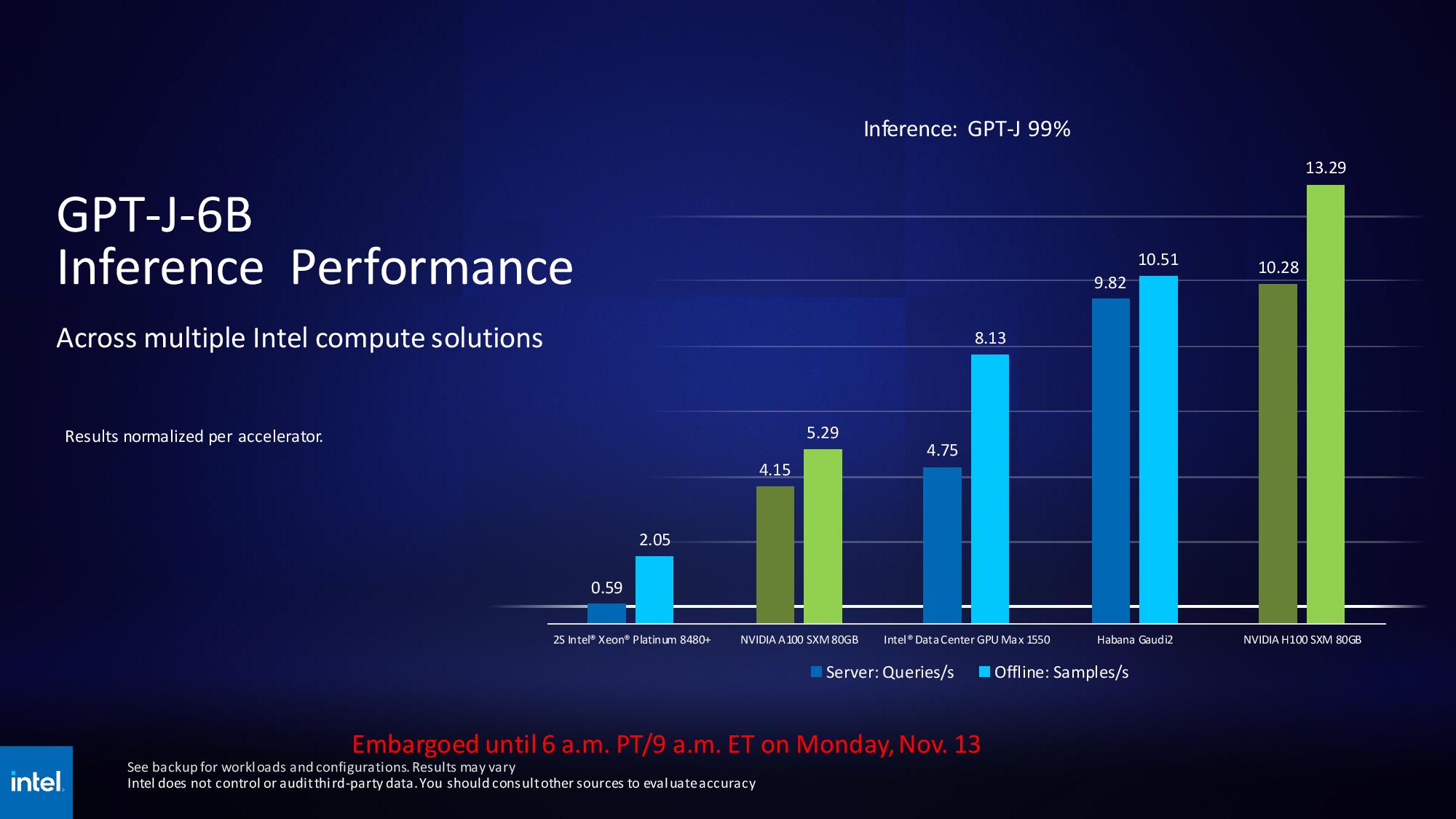

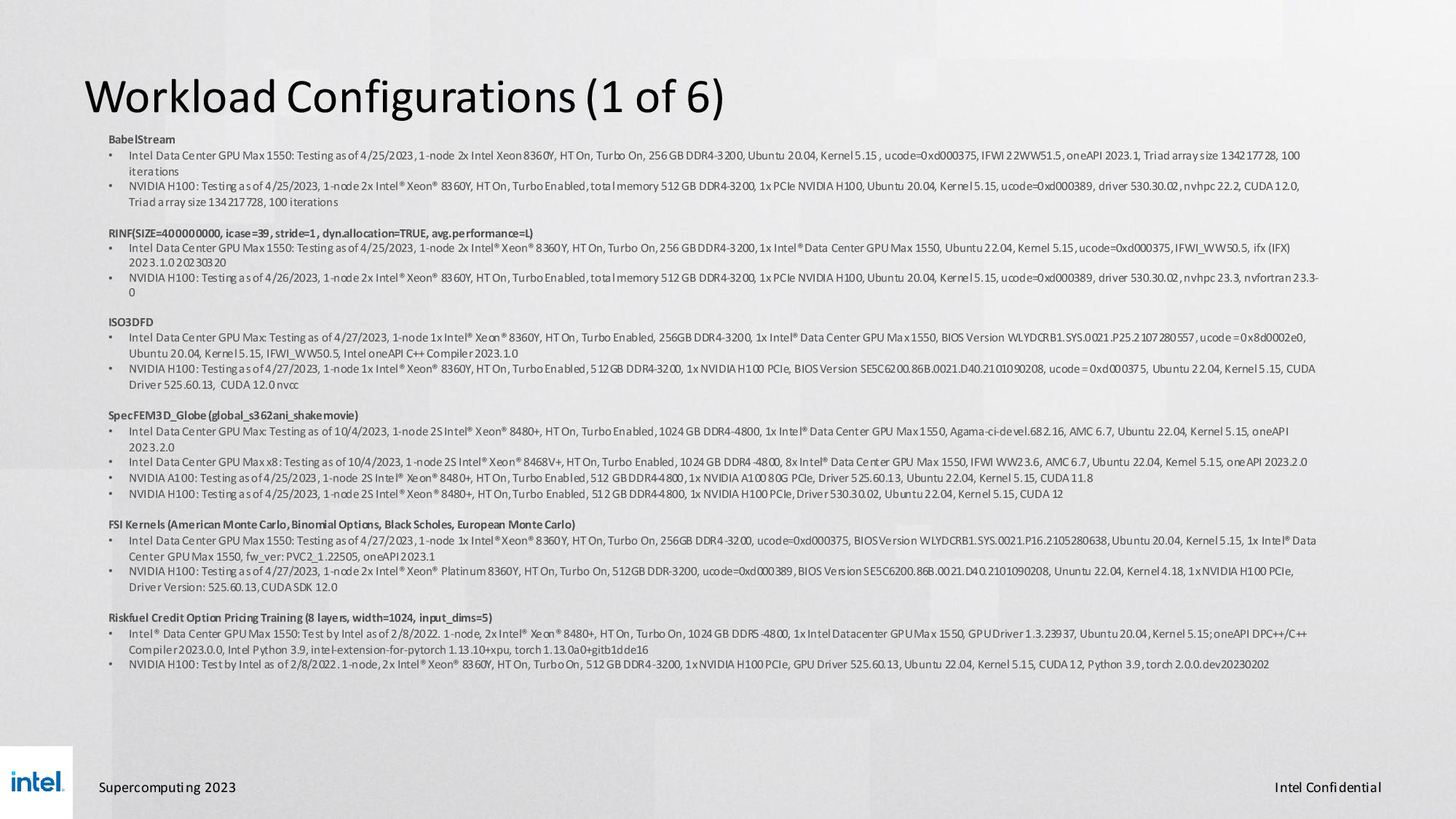

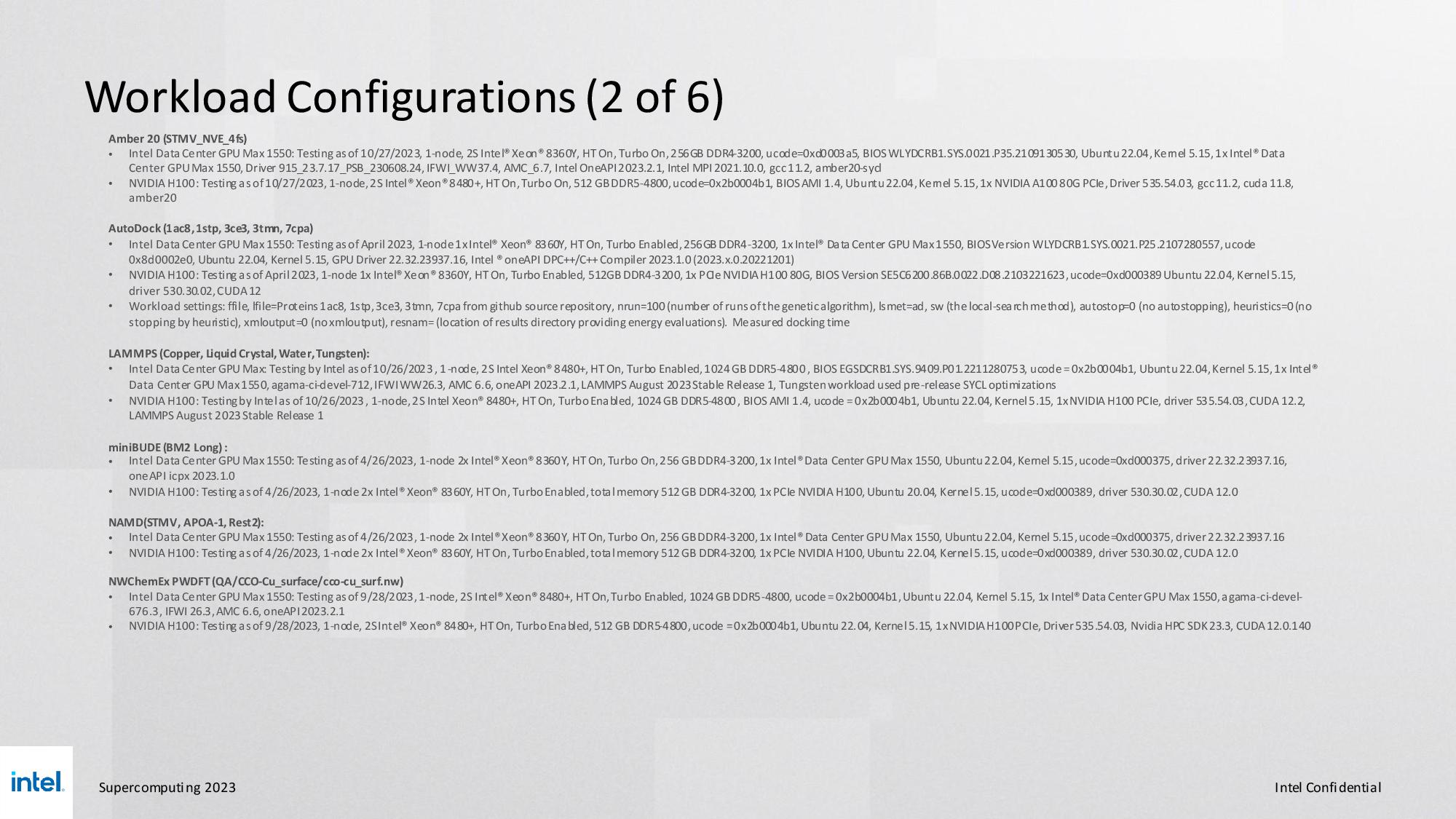

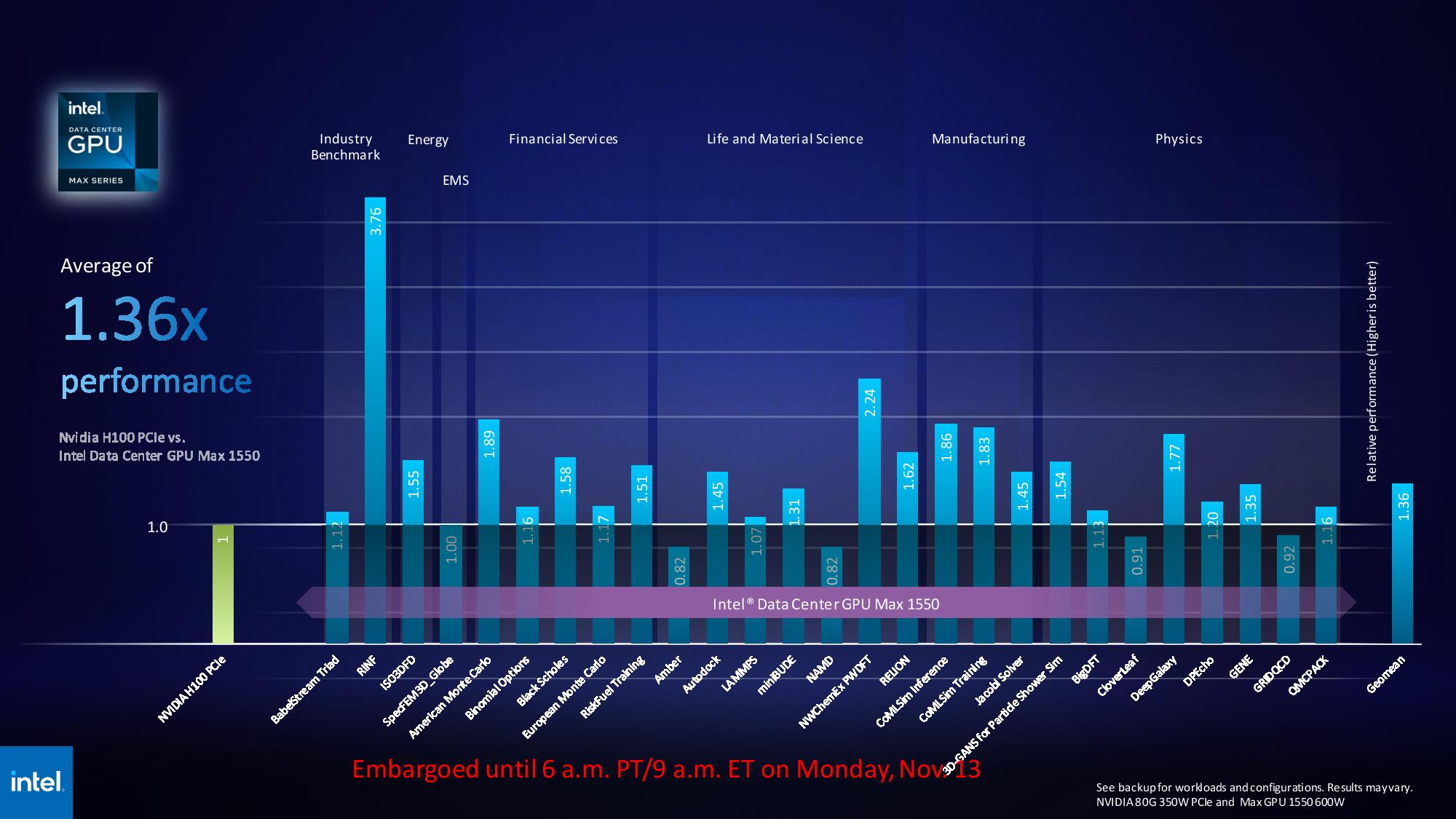

Intel's benchmarks pit its Max 1550 in the OAM form factor, a 600W GPU, against Nvidia's PCIe-form-factor H100, a 350W competitor. As such, these benchmarks aren't a good litmus test for apples-to-apples performance. Intel cited difficulty in gaining access to Nvidia's higher-power SXM-form-factor H100s, the closer counterpart to OAM, as the reason for the mismatch.
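To put the power gap in perspective, a simple normalization divides performance by rated board power. The sketch below assumes each card's rated power (600W and 350W, from Intel's comparison) approximates its actual draw during the benchmarks, which may not hold in practice. Under that assumption, the Max 1550 would need to be about 1.71X faster than the PCIe H100 just to tie it on performance per watt. The helper names are illustrative.

```python
# Rated board power from Intel's comparison. Assumption: rated power is a
# reasonable proxy for actual power draw during the benchmark runs.
MAX_1550_OAM_WATTS = 600
H100_PCIE_WATTS = 350


def perf_per_watt_ratio(speedup: float, watts_a: float, watts_b: float) -> float:
    """Perf/W of device A relative to device B, given A's speedup over B.

    A result above 1.0 means A does more work per rated watt than B.
    """
    return speedup * watts_b / watts_a


def break_even_speedup(watts_a: float, watts_b: float) -> float:
    """Speedup A needs over B just to match B's perf/W."""
    return watts_a / watts_b


needed = break_even_speedup(MAX_1550_OAM_WATTS, H100_PCIE_WATTS)
print(f"Max 1550 must beat the H100 PCIe by {needed:.2f}x to tie on perf/W")
```

Passing any of Intel's claimed speedups to `perf_per_watt_ratio` gives the corresponding perf-per-watt comparison; a result below 1.0 means the H100 PCIe comes out ahead per rated watt.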

Now we await the Top500 submission from the Argonne National Laboratory for the Aurora supercomputer to see if Intel can unseat the AMD-powered Frontier as the fastest supercomputer in the world. That update is expected later today.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


weber462: Support Petals AI. Support BOINC. Normal people should have access to AI. Normal people should have access to processing power.

vanadiel007, quoting weber462: "Support Petals AI. Support BOINC. Normal people should have access to AI. Normal people should have access to processing power."

No. It's only a matter of time before we see an AI coin that can only be mined using AI neural networks.

bit_user, quoting the article: "new Max Series GPU benchmarks against Nvidia's H100 GPUs"

It's telling they only compared themselves to the H100 on non-AI workloads. For AI, they could only compete against the older A100.

bit_user, quoting weber462: "Support Petals AI. Support BOINC. Normal people should have access to AI. Normal people should have access to processing power."

As far as I can tell, Petals is only usable for inference. For training (and many HPC workloads), you need all that processing power to be very tightly-integrated with high-bandwidth interconnects and having fast access to huge amounts of data.

As far as normal people having access to it, there are cloud providers (including Nvidia) where you can rent time.

jkflipflop98, quoting weber462: "Support Petals AI. Support BOINC. Normal people should have access to AI. Normal people should have access to processing power."

Normal people don't have the electrical capacity to even boot up the amount of silicon it takes to run something like this. You basically need your own electrical sub-station to deliver the amount of energy this kind of thing takes.

Sure, in 20 years any old laptop at bestbuy will be able to do what these systems are doing, but for right now your best bet is to do as BU says and rent some cloud time.

bit_user, quoting jkflipflop98: "Normal people don't have the electrical capacity to even boot up the amount of silicon it takes to run something like this."

I think you missed @weber462's point, which was to suggest that distributed computing could substitute for these kinds of supercomputers, not that an ordinary person would have even a single Aurora-class machine in their home.

As I pointed out, distributed computing is only applicable to a rather limited set of problems. It's great when it does apply.

Quoting jkflipflop98: "Sure, in 20 years any old laptop at bestbuy will be able to do what these systems are doing, but for right now your best bet is to do as BU says and rent some cloud time."

I'm not saying you're wrong, but I think there's probably not (yet) a technology roadmap that would get us there. More difficult than merely scaling compute performance is going to be improving energy efficiency to match.

Anyone interested in the subject should take a close read through this (especially the slides):

https://wccftech.com/amd-lays-the-path-to-zettascale-computing-talks-cpu-gpu-performance-plus-efficiency-trends-next-gen-chiplet-packaging-more/

bit_user, quoting DougMcC: "I threw up a little bit when I read the page about the project written in Fortran."

I don't see where it says that in the article, but I would point out that Fortran has continued evolving, like C, C++, and other mature languages:

https://en.wikipedia.org/wiki/Fortran#Modern_Fortran

I've never used Fortran, but I'd be surprised if they hadn't added enough quality-of-life improvements to it, for it to be something I could live with.