Nvidia Blackwell GB202 GPU Rumored to Feature 384-bit GDDR7

No 512-bit memory interface for Nvidia's flagship.

Renowned hardware leaker @kopite7kimi has corrected their own prediction that Nvidia's next-generation flagship graphics processing unit, based on the Blackwell architecture, would feature a 512-bit memory interface. It will not. Nvidia's GPU, currently known as GB202, will apparently keep a 384-bit memory bus but adopt GDDR7 memory.

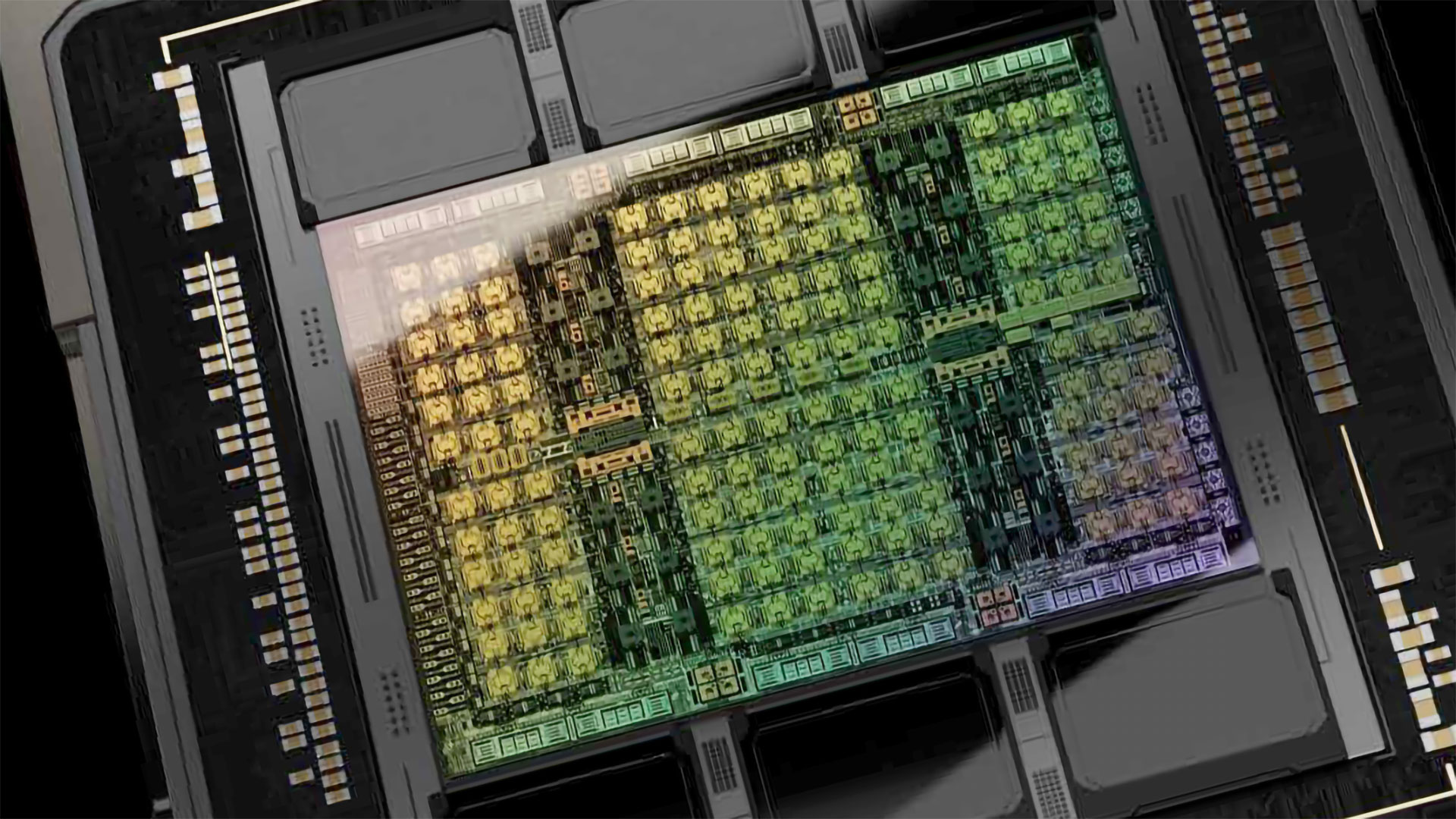

Nvidia's GB202 GPU is projected to feature up to 24,576 CUDA cores, a 33% increase over the 18,432 CUDA cores packed into the AD102 GPU. Rumors suggest the company will use one of TSMC's 3nm-class fabrication processes to make its Blackwell-based GPUs, though it remains to be seen whether Nvidia and TSMC will customize a 3nm-class node for GPUs or stick to a standard version.

"Damn, we must adjust our evaluation of [GeForce] RTX 5090/5080," wrote kopite7kimi in an X (formerly Twitter) post.

"I think I probably made an empirical mistake," said kopite7kimi in another post. "I mistakenly applied the ratio of Ada Lovelace's L2 [cache] and [memory controller] to Blackwell, [which led to an incorrect assumption regarding GB202's 512-bit memory interface]."

When asked whether they meant a 384-bit memory interface for the GB202 graphics processing unit, they confirmed it, adding that the part will also use GDDR7. While a 512-bit bus would have let Nvidia massively increase the bandwidth available to its next-generation flagship graphics card (presumably named the Nvidia GeForce RTX 5090), using GDDR7 over a 384-bit interface will still provide tangible benefits.

| GPU | GPCs | TPCs per GPC | SMs per TPC | CUDA Cores per SM | CUDA Core Count |
|---|---|---|---|---|---|
| GA100 | 8 | 8 | 2 | 64 | 8,192 |
| GA102 | 7 | 6 | 2 | 128 | 10,752 |
| GH100 | 8 | 9 | 2 | 128 | 18,432 |
| AD102 | 12 | 6 | 2 | 128 | 18,432 |
| GB100 | 8 | 10 | 2 | 128 | 20,480 |
| GB202 | 12 | 8 | 2 | 128 | 24,576 |
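Each CUDA core count in the table is simply the product of the hierarchy columns (GPCs × TPCs per GPC × SMs per TPC × CUDA cores per SM). The short Python sketch below reproduces the totals and the 33% GB202-over-AD102 figure from the table's own numbers; the GB202 and GB100 rows are rumored configurations, and the helper names (`cuda_cores`, `percent_increase`) are hypothetical, chosen only for illustration.

```python
# Per-GPU hierarchy copied from the table above (GB100/GB202 rows are rumors):
# (GPCs, TPCs per GPC, SMs per TPC, CUDA cores per SM)
CONFIGS = {
    "GA100": (8, 8, 2, 64),
    "GA102": (7, 6, 2, 128),
    "GH100": (8, 9, 2, 128),
    "AD102": (12, 6, 2, 128),
    "GB100": (8, 10, 2, 128),
    "GB202": (12, 8, 2, 128),
}


def cuda_cores(gpcs: int, tpcs_per_gpc: int, sms_per_tpc: int, cores_per_sm: int) -> int:
    """Total CUDA cores = GPCs x TPCs/GPC x SMs/TPC x cores/SM (hypothetical helper)."""
    return gpcs * tpcs_per_gpc * sms_per_tpc * cores_per_sm


def percent_increase(new: int, old: int) -> float:
    """Relative growth of `new` over `old`, in percent."""
    return (new - old) / old * 100


if __name__ == "__main__":
    totals = {name: cuda_cores(*cfg) for name, cfg in CONFIGS.items()}
    for name, total in totals.items():
        print(f"{name}: {total:,} CUDA cores")
    gain = percent_increase(totals["GB202"], totals["AD102"])
    print(f"GB202 vs AD102: +{gain:.1f}%")
```

Running it prints each GPU's total and shows GB202's rumored 24,576 cores working out to roughly a one-third increase over AD102's 18,432.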

If Nvidia uses Micron's 32 GT/s 16 Gb ICs, its RTX 5090 will get 1.536 TB/s of memory bandwidth, up from the 1.008 TB/s of the RTX 4090, the best graphics card available today. Yet with 16 Gb ICs on a 384-bit bus, Nvidia will still have to stick to 24 GB of memory on its premium consumer board.
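The bandwidth and capacity figures above follow from simple arithmetic. The sketch below assumes the usual peak-bandwidth formula (bus width in bits × per-pin data rate in GT/s ÷ 8 bits per byte) and a 32-bit interface per memory chip with no clamshell mode. The RTX 4090's 21 GT/s rate is not stated in the article; it is the value implied by its 1.008 TB/s on a 384-bit bus. The 512-bit case is included only to show what the earlier, now-retracted rumor would have meant at the same data rate, and the function names are hypothetical.

```python
BITS_PER_BYTE = 8
# Assumption: each GDDR memory chip exposes a 32-bit interface (no clamshell).
CHIP_INTERFACE_BITS = 32


def bandwidth_gb_s(bus_width_bits: int, data_rate_gt_s: float) -> float:
    """Peak bandwidth in GB/s: every pin moves one bit per transfer."""
    return bus_width_bits * data_rate_gt_s / BITS_PER_BYTE


def capacity_gb(bus_width_bits: int, chip_density_gbit: int) -> float:
    """Total memory in GB when one chip sits on each 32-bit channel."""
    chips = bus_width_bits // CHIP_INTERFACE_BITS
    return chips * chip_density_gbit / BITS_PER_BYTE


if __name__ == "__main__":
    # RTX 4090: 384-bit bus at an implied 21 GT/s
    print(f"RTX 4090: {bandwidth_gb_s(384, 21) / 1000:.3f} TB/s")
    # Rumored GB202: 384-bit bus with 32 GT/s GDDR7
    print(f"GB202 (384-bit): {bandwidth_gb_s(384, 32) / 1000:.3f} TB/s")
    # Retracted 512-bit rumor at the same data rate, for comparison
    print(f"GB202 (512-bit): {bandwidth_gb_s(512, 32) / 1000:.3f} TB/s")
    # Twelve 16 Gb chips on a 384-bit bus
    print(f"Capacity: {capacity_gb(384, 16):.0f} GB")
```

With these assumptions a 384-bit bus holds twelve chips, so 16 Gb (2 GB) devices cap the board at 24 GB, which is why denser ICs (or a clamshell layout) would be needed for more memory.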


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


FoxJupi: I will 100% be buying this. Why? Because my first GPU was a 590, so posting a picture next to the new 5090 will make a great Reddit post. Oh, and gaming.

jp7189: 33% more cores; 50% more memory bandwidth. It doesn't seem like a 512-bit memory bus is needed if GDDR7 is ready in time and can hit those speeds.