Nvidia Claims Higher RTX Frame Rates With DLSS Using 3DMark Port Royal Benchmarks

UL Benchmarks today updated 3DMark's Port Royal benchmark, which measures the performance of graphics cards with ray tracing support, to add support for Nvidia's Deep Learning Super Sampling (DLSS) tech.

Nvidia commemorated the occasion with a new GeForce Game Ready driver made to offer “optimum performance” in Port Royal. The GeForce Game Ready 418.81 WHQL driver also introduces support for the RTX-equipped laptops that are starting to debut.

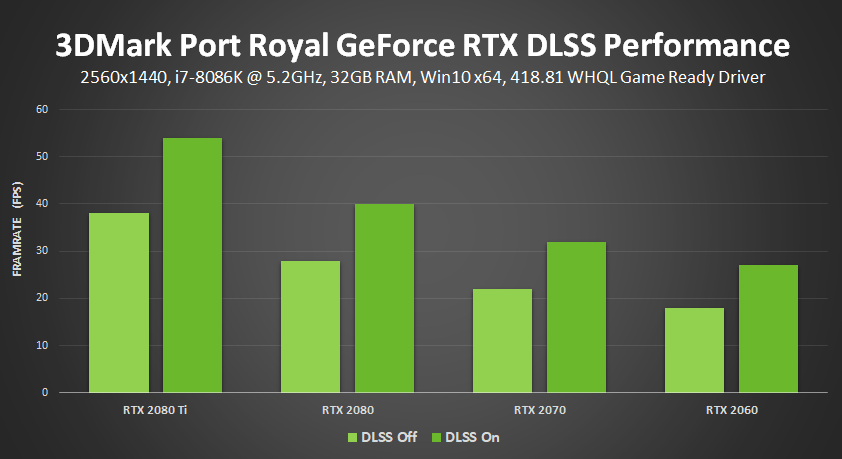

But the focus is very much on how Port Royal highlights the performance of RTX graphics cards. In a separate blog post published today, Nvidia compared Port Royal results at 1440p with DLSS enabled and disabled for several RTX cards. The results show frame rate increases of more than 10 frames per second in some cases and suggest that every one of the company's RTX graphics cards benefits from DLSS.

Album credit: Nvidia

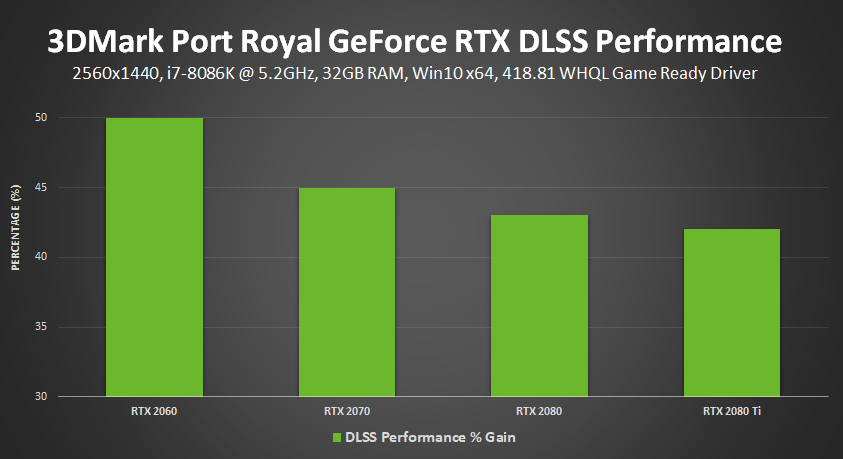

The gains vary from card to card. Nvidia reported the smallest gain, around 40 percent, with the RTX 2080 Ti and the largest, 50 percent, with the RTX 2060. We haven't verified these results ourselves, though, so we can't vouch for their accuracy.

Nor can we speak directly to Nvidia’s claims that “anti-aliasing is improved, detail is sharper and game elements seen through transparent surfaces are vastly improved” when Port Royal is run with DLSS enabled.

Nvidia has published comparison images of Port Royal running with DLSS enabled and disabled on its website. It has also explained how to run the benchmark yourself, in case you shelled out for an RTX graphics card and want quantifiable proof that you're actually getting better graphics.


Nathaniel Mott is a freelance news and features writer for Tom's Hardware US, covering breaking news, security, and the silliest aspects of the tech industry.

-

Giroro 21741031 said: sweet, when will I see this benefit in games I actually play?

Never... because by the time Devs figure out how to use it Nvidia will have mothballed DLSS for whatever next year's anti-aliasing gimmick is. Just like every other "new/better/faster" proprietary AA acronym Nvidia has marketed with every new graphics card line in recent memory.

-

AlistairAB A machine learning approach to a non-interactive benchmark... so "1440p" DLSS is nVidia lying; it's most likely based on 1080p native, and you don't control the camera, which makes the job ridiculously simple. As usual, not impressed with DLSS.

I really wish nVidia would stop labeling their AA method by the upscaled target resolution and label it by the native resolution like everyone else.

xprats002 Any day now I'll be able to enjoy the vast improvement in VR performance thanks to the Simultaneous Multi-Projection on my 1080 Ti. Right? Soon?

AlistairAB This actually shows a useful niche for DLSS: in-game cut-scenes. Since the camera path is fixed, the training is pretty effective. This looks much better than the Infiltrator demo, where I preferred the TAA. I still think DLSS will most likely be useless for gaming, but for a cut-scene, it would work. With DLSS you are basically downloading extra info, so it takes more storage than fully real-time rendering, but way less than full recorded video. You could run an in-game cut-scene at a lower resolution but still impress with visuals and clarity. I'm trying to be positive here :)

I do want to point out that it is still a wasteful technology. DLSS required die space on the GPU and extra cost in the data center. It would have been much better to invest that money in improving game performance. The RTX 2060, with its large die, could equal the 2080 Ti in game performance if that die were devoted solely to traditional raster performance. With RTX, the die size ballooned and the design is inefficient.

AlistairAB RTX 2060 is 445mm2 at 12nm. 1080 Ti is 471mm2 at 16nm. We know from the Radeon 7 that we can expect at least a 40 percent reduction in die size at 7nm. So the 1080 Ti at 7nm would have been about 471mm2 * 0.6 = 282mm2.

That means 2080 Ti performance (assuming clock speed increases of at least 20 percent) at 7nm in only 282mm2, without the wasteful RT and DLSS hardware added to the die...

Vega 7 is 331mm2, so a 7nm 1080 Ti with 2080 Ti-level performance would be much cheaper to make than Vega 7, and much faster. We all know nVidia is trying to push their own tech instead of giving us what we want...

bigpinkdragon286 I wonder if anybody could actually work out a reasonably accurate cost/benefit ratio for adding Tensor cores to the GPU die versus just using that space for more general-purpose cores?

The problem with adding custom cores to the GPU is that all of your development work for those cores gets thrown away as soon as they are left out of a future GPU, unless your code can also run on the general compute cores, which then calls the custom cores' benefit into question.

So, how long is NVIDIA going to require consumers to pay for cores they may never get full use of? Replacing those Tensor cores with general-purpose cores would benefit every single game. Will we see Tensor cores on all new GPUs from NVIDIA going forward, or are they segmenting their own market?

extremepenguin By the time DLSS becomes mainstream, it will have been replaced by DirectML as part of the DX12 API.