Nvidia Unveils Big Accelerator Memory: Solid-State Storage for GPUs

Nvidia and IBM propose a new GPU-SSD interoperability framework.

Microsoft's DirectStorage application programming interface (API) promises to improve the efficiency of GPU-to-SSD data transfers for games in a Windows environment, but Nvidia and its partners have found a way to make GPUs seamlessly work with SSDs without a proprietary API. The method, called Big Accelerator Memory (BaM), promises to be useful for various compute tasks, but it will be particularly useful for emerging workloads that use large datasets. Essentially, as GPUs get closer to CPUs in terms of programmability, they also need direct access to large storage devices.

Modern graphics processing units aren't just for graphics; they're also used for various heavy-duty workloads like analytics, artificial intelligence, machine learning, and high-performance computing (HPC). To process large datasets efficiently, GPUs either need vast amounts of expensive special-purpose memory (e.g., HBM2, GDDR6, etc.) locally, or efficient access to solid-state storage. Modern compute GPUs already carry 80GB–128GB of HBM2E memory, and next-generation compute GPUs will expand local memory capacity. But dataset sizes are also increasing rapidly, so optimizing interoperability between GPUs and storage is important.

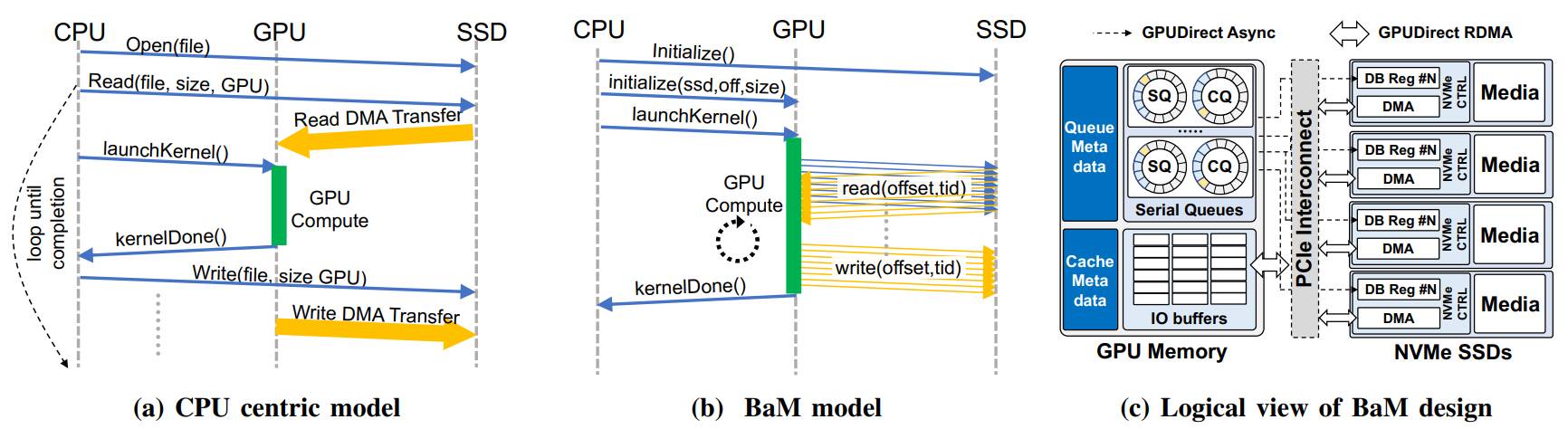

There are several key reasons why interoperability between GPUs and SSDs has to be improved. First, NVMe calls and data transfers put a lot of load on the CPU, which hurts both overall performance and efficiency. Second, CPU-GPU synchronization overhead and/or I/O traffic amplification significantly limits the effective storage bandwidth available to applications with huge datasets.
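To make "I/O traffic amplification" concrete, here is a minimal Python sketch. It is a toy model, not anything taken from Nvidia's document: the function name `io_amplification`, the 4,096-byte and 512-byte transfer sizes, and the 64-byte request size are all assumptions picked for illustration. It computes how many bytes are moved per byte the computation actually needs when storage can only be read in fixed-size blocks.

```python
import math

def io_amplification(useful_bytes: int, block_bytes: int, num_requests: int) -> float:
    """Ratio of bytes transferred to bytes the computation actually needs.

    Assumptions (hypothetical, for illustration only): every request is
    block-aligned and touches blocks that no other request touches, which
    is the worst case for sparse, data-dependent access patterns.
    """
    blocks_per_request = math.ceil(useful_bytes / block_bytes)
    transferred = num_requests * blocks_per_request * block_bytes
    needed = num_requests * useful_bytes
    return transferred / needed

# 1,000 scattered 64-byte lookups, fetched in coarse vs. fine blocks.
coarse = io_amplification(useful_bytes=64, block_bytes=4096, num_requests=1000)
fine = io_amplification(useful_bytes=64, block_bytes=512, num_requests=1000)
print(coarse, fine)  # 64.0 8.0
```

In this model, shrinking the transfer unit from 4,096 to 512 bytes cuts the wasted traffic by a factor of eight, which is the general effect fine-grain access is meant to achieve.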

"The goal of Big Accelerator Memory is to extend GPU memory capacity and enhance the effective storage access bandwidth while providing high-level abstractions for the GPU threads to easily make on-demand, fine-grain access to massive data structures in the extended memory hierarchy," a description of the concept by Nvidia, IBM, and Cornell University cited by The Register reads.

BaM essentially enables Nvidia GPUs to fetch data directly from system memory and storage without using the CPU, which makes GPUs more self-sufficient than they are today. Compute GPUs continue to use local memory as a software-managed cache, but will move data using a PCIe interface, RDMA, and a custom Linux kernel driver that enables SSDs to read and write GPU memory directly when needed. Commands for the SSDs are queued up by the GPU threads if the required data is not available locally. Meanwhile, BaM does not use virtual memory address translation and therefore does not experience serialization events like TLB misses. Nvidia and its partners plan to open-source the driver to allow others to use their BaM concept.
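The flow described above (check a software-managed cache in local memory, queue a storage command on a miss, then serve the data) can be sketched in a few lines of Python. This is a single-threaded toy simulation on the CPU, not BaM's actual driver or GPU code: the class names `ToyStorage` and `SoftwareCache`, the 512-byte block size, and the LRU eviction policy are all assumptions made for illustration.

```python
from collections import OrderedDict, deque

BLOCK_SIZE = 512  # bytes per cache line / storage block (assumed value)

class ToyStorage:
    """Stands in for an SSD: a byte string that is read in whole blocks."""
    def __init__(self, data: bytes):
        self.data = data
        self.reads = 0  # number of block reads issued to "the SSD"

    def read_block(self, block_id: int) -> bytes:
        self.reads += 1
        start = block_id * BLOCK_SIZE
        return self.data[start:start + BLOCK_SIZE]

class SoftwareCache:
    """Hypothetical LRU cache in 'GPU memory' with a queue for misses."""
    def __init__(self, storage: ToyStorage, capacity_blocks: int):
        self.storage = storage
        self.capacity = capacity_blocks
        self.lines = OrderedDict()       # block_id -> bytes, in LRU order
        self.submission_queue = deque()  # pending read commands
        self.hits = 0
        self.misses = 0

    def read(self, offset: int, length: int) -> bytes:
        """Fine-grain read that may span several blocks."""
        out = bytearray()
        end = offset + length
        while offset < end:
            block_id, within = divmod(offset, BLOCK_SIZE)
            n = min(end - offset, BLOCK_SIZE - within)
            out += self._get_block(block_id)[within:within + n]
            offset += n
        return bytes(out)

    def _get_block(self, block_id: int) -> bytes:
        if block_id in self.lines:
            self.hits += 1
            self.lines.move_to_end(block_id)  # mark as most recently used
            return self.lines[block_id]
        self.misses += 1
        self.submission_queue.append(block_id)  # queue a read command
        self._drain_queue()
        return self.lines[block_id]

    def _drain_queue(self) -> None:
        """Service queued commands, evicting the LRU line when full."""
        while self.submission_queue:
            block_id = self.submission_queue.popleft()
            if len(self.lines) >= self.capacity:
                self.lines.popitem(last=False)
            self.lines[block_id] = self.storage.read_block(block_id)

data = bytes(range(256)) * 16  # 4,096 bytes, i.e. 8 blocks
storage = ToyStorage(data)
cache = SoftwareCache(storage, capacity_blocks=2)
first = cache.read(10, 4)   # miss: block 0 is fetched from storage
second = cache.read(12, 4)  # hit: block 0 is already cached
print(cache.hits, cache.misses, storage.reads)  # 1 1 1
```

On real hardware many GPU threads would issue such requests concurrently and completions would arrive asynchronously; the sketch only shows the cache-then-queue control flow.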

"BaM mitigates the I/O traffic amplification by enabling the GPU threads to read or write small amounts of data on-demand, as determined by the compute," Nvidia's document reads. "We show that the BaM infrastructure software running on GPUs can identify and communicate the fine-grain accesses at a sufficiently high rate to fully utilize the underlying storage devices, even with consumer-grade SSDs, a BaM system can support application performance that is competitive against a much more expensive DRAM-only solution, and the reduction in I/O amplification can yield significant performance benefit."

To a large degree, Nvidia's BaM is a way for GPUs to obtain a large pool of storage and use it independently from the CPU, which makes compute accelerators much more independent than they are today.

Astute readers will remember that AMD attempted to wed GPUs with solid-state storage with its Radeon Pro SSG graphics card several years ago. While bringing additional storage to a graphics card allows the hardware to optimize access to large datasets, the Radeon Pro SSG board was designed purely as a graphics solution and was not designed for complex compute workloads. Nvidia, IBM, and others are taking things a step further with BaM.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


Ogotai "improve the efficiency of GPU-to-SSD data transfers for games in a Windows environment, but Nvidia and its partners have found a way to make GPUs seamlessly work with SSDs without a proprietary API"

A friend just about spit his coffee out when I showed him this line, and said, yeah, this coming from someone who is pretty much the king of proprietary technology. He has high doubts Nvidia will share this with anyone, and IF it does, it will more than likely not be cheap.

mac_angel I think it's about time we changed the naming and term of GPU since it can mean very different things now. While the technology may still be very much the same at the core, the functionality, as well as some of these newer technologies are obviously very specific to things NOT related to graphics acceleration, such as gaming and/or rendering and production. A lot of these technologies will never be seen in commercial products.

hotaru251 I am all for a "next big leap" (as GPUs have not had any in a while besides niche ray tracing) but the question is... will it be used enough to make it worth it?

These only really benefit if companies make stuff that benefits from it.

Rather not have another "rtx" price hike for a feature that's not used often.

freedomfries Direct access to RAM? Sounds like a great new vulnerability vector to tinker with.

Kamen Rider Blade Now for AMD to improve upon its Radeon SSG technology and do the same thing, but have the NVMe drive located near the GPU and not need proprietary APIs to implement the benefits.

FunSurfer For gaming, Microsoft's DirectStorage API still lacks the GPU implementation of gaming files decompression that will ease the CPU load (but will also increase GPU load). With most gamers having 1TB to 2TB NVMe SSD, there should be enough space on the SSD for an uncompressed game files folder, to load the files directly from it to the VRAM. This can be implemented NOW without buying new hardware with the existing DirectStorage API without loading the GPU and the CPU.

Geef Bah they just need to throw an M.2 slot on the back of the graphics card! Gamers would have no problem buying themselves a new M.2 drive JUST for their massively expensive graphics card. :ROFLMAO:

TerryLaze

Ogotai said: "He has high doubts Nvidia will share this with anyone, and IF it does, it will more than likely not be cheap."

It's something that works without an API, AMD has done this already, it doesn't need anything that Nvidia holds the patents for.

https://www.amd.com/en/products/professional-graphics/radeon-pro-ssg