Researchers Benchmark Experimental RISC-V Supercomputer

Monte Cimone cluster combines 32 RISC-V cores.

A group of researchers from the Università di Bologna and Cineca has explored an experimental eight-node, 32-core RISC-V supercomputer cluster. The demonstration showed that even a handful of humble SiFive Freedom U740 systems-on-chip could run supercomputer applications at relatively low power. Moreover, the cluster worked well and supported a baseline high-performance computing software stack.

Need for RISC-V

One of the advantages of the open-source RISC-V instruction set architecture (ISA) is that it is relatively simple to build a highly customized RISC-V core for a particular application, one that offers a very competitive balance of performance, power consumption, and cost. This makes RISC-V suitable for emerging applications and for high-performance computing projects that target a specific workload. The group used the cluster to prove that RISC-V-based platforms can work for high-performance computing (HPC), at least from a software perspective.

"Monte Cimone does not aim to achieve strong floating-point performance, but it was built with the purpose of 'priming the pipe' and exploring the challenges of integrating a multi-node RISC-V cluster capable of providing an HPC production stack including interconnect, storage, and power monitoring infrastructure on RISC-V hardware," the description of the project reads (via NextPlatform).

For its experiments, the team took an off-the-shelf Monte Cimone cluster consisting of four dual-board blades in a 1U form factor built by E4, an Italian HPC company (note that E4's Monte Cimone cluster consists of six blades). Since Monte Cimone is a platform 'for porting and tuning HPC-relevant software stacks and HPC applications to the RISC-V architecture,' the choice was well justified.

The Cluster

Each Monte Cimone 1U blade houses two SiFive HiFive Unmatched developer motherboards, each powered by SiFive's heterogeneous multicore Freedom U740 SoC. The SoC integrates four U74 cores running at up to 1.4 GHz and one S7 core, combined using the company's proprietary Mix+Match technology, along with 2MB of L2 cache. In addition, each board carries 16GB of DDR4-1866 memory and a 1TB NVMe SSD.

Each node also sports a Mellanox ConnectX-4 FDR 40 Gbps host channel adapter (HCA) card, but for some reason RDMA did not work, even though the Linux kernel recognized the device and loaded the kernel module needed to manage the Mellanox OFED stack. Therefore, two of the six nodes were equipped with InfiniBand HCA cards with 56 Gbps throughput to maximize available inter-node bandwidth and compensate for the lack of RDMA.

One of the critical parts of the experiment was porting the essential HPC services required to run supercomputing workloads. The team reported that porting NFS, LDAP, and the SLURM job scheduler to RISC-V was relatively straightforward; they then installed an ExaMon plugin dedicated to data sampling, a broker for transport-layer management, and a database for storage.


Results

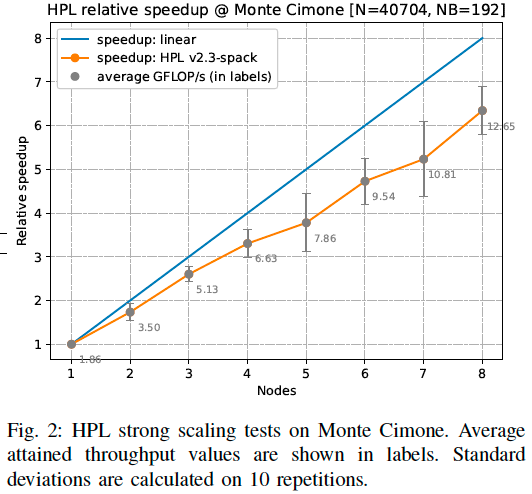

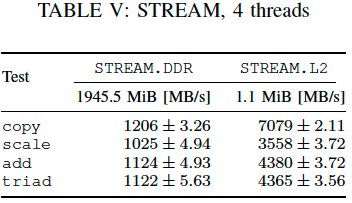

Since it makes little sense to run real HPC workloads on a low-power cluster designed for software porting, the team instead ran the HPL and Stream benchmarks to measure floating-point performance (in GFLOPS) and memory bandwidth. The results were a mixed bag, though.

The theoretical peak performance of SiFive's U74 core is 1 GFLOPS, so one Freedom U740 SoC should peak at 4 GFLOPS. Unfortunately, each node sustained only 1.86 GFLOPS in HPL (46.5% of its theoretical peak), which means that, assuming perfect linear scaling, the eight-node cluster's attainable performance should be in the 14.88 GFLOPS ballpark. The whole cluster reached a sustained 12.65 GFLOPS, which is 85% of that extrapolated attainable peak. However, because of the SoC's low efficiency, 12.65 GFLOPS is only 39.5% of the entire machine's 32 GFLOPS theoretical peak. Since most of that gap stems from the U740 itself rather than from the cluster's scaling, the result is not that bad for an experimental machine.
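The HPL percentages above are straightforward ratios. As a rough sketch, the Python snippet below recomputes them from the figures quoted in this article; it assumes, as the article does, that only the four U74 cores count toward the SoC's peak and that each node contains exactly one U740. The constant and function names are illustrative, not taken from the researchers' code.

```python
# Inputs quoted in the article (HPL benchmark).
PEAK_PER_CORE_GFLOPS = 1.0   # theoretical peak of one U74 core
CORES_PER_SOC = 4            # U74 cores per Freedom U740 (the S7 core is not counted)
NODES = 8                    # assumption: one U740 SoC per node
NODE_HPL_GFLOPS = 1.86       # sustained HPL result on a single node
CLUSTER_HPL_GFLOPS = 12.65   # sustained HPL result on the whole cluster


def hpl_summary() -> dict:
    """Derive the efficiency ratios discussed in the article."""
    soc_peak = PEAK_PER_CORE_GFLOPS * CORES_PER_SOC    # 4 GFLOPS per node
    machine_peak = soc_peak * NODES                    # 32 GFLOPS theoretical
    linear_extrapolation = NODE_HPL_GFLOPS * NODES     # 14.88 GFLOPS with perfect scaling
    return {
        "soc_peak": soc_peak,
        "machine_peak": machine_peak,
        "linear_extrapolation": linear_extrapolation,
        # Share of its own theoretical peak that a single SoC sustains
        "node_efficiency": NODE_HPL_GFLOPS / soc_peak,
        # Cluster result relative to eight perfectly scaling nodes
        "scaling_efficiency": CLUSTER_HPL_GFLOPS / linear_extrapolation,
        # Cluster result relative to the whole machine's theoretical peak
        "peak_fraction": CLUSTER_HPL_GFLOPS / machine_peak,
    }


if __name__ == "__main__":
    for name, value in hpl_summary().items():
        print(f"{name:>22}: {value:.3f}")
```

By this arithmetic, a single node runs at 46.5% of its peak, the cluster scales at roughly 85% of the linear extrapolation, and it reaches about 39.5% of the machine's theoretical peak, which is why the low overall figure points mostly at the SoC rather than at inter-node scaling.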

Regarding memory bandwidth, each node should yield a theoretical peak of about 14.928 GB/s from its single DDR4-1866 module. In reality, measured bandwidth never exceeded 7760 MB/s, which is not a good result. Results with the upstream, unmodified Stream benchmark are even less impressive: a 4-threaded run attained no more than 15.5% of the available peak bandwidth, well below the results of other clusters. On the one hand, these results point to a mediocre memory subsystem in the Freedom U740; on the other hand, the gap between upstream Stream and the best measured figure suggests that software optimizations could improve things.
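The 14.928 GB/s figure is simply the DDR4-1866 transfer rate multiplied by the width of the memory bus. The sketch below assumes a single 64-bit (8-byte) channel per SoC, an assumption that reproduces the article's number but is not stated in it, and compares the resulting peak with the measured values. The names are hypothetical.

```python
# Theoretical DDR peak bandwidth = transfers per second x bytes per transfer.
TRANSFER_RATE_MTS = 1866          # DDR4-1866: 1866 mega-transfers per second
BUS_WIDTH_BYTES = 64 // 8         # assumption: one 64-bit channel -> 8 bytes/transfer

BEST_MEASURED_MBS = 7760          # highest bandwidth reported in the article
UPSTREAM_STREAM_FRACTION = 0.155  # upstream Stream, 4 threads: at most 15.5% of peak


def peak_bandwidth_mbs(rate_mts: int = TRANSFER_RATE_MTS,
                       width_bytes: int = BUS_WIDTH_BYTES) -> int:
    """Theoretical peak in MB/s (decimal megabytes per second)."""
    return rate_mts * width_bytes


def bandwidth_report() -> dict:
    peak = peak_bandwidth_mbs()   # 14928 MB/s, i.e. about 14.928 GB/s
    return {
        "peak_mbs": peak,
        # Fraction of peak achieved by the best measured result
        "best_fraction": BEST_MEASURED_MBS / peak,
        # Upper bound on what upstream Stream reached, in MB/s
        "upstream_ceiling_mbs": UPSTREAM_STREAM_FRACTION * peak,
    }


if __name__ == "__main__":
    report = bandwidth_report()
    print(f"peak:             {report['peak_mbs']} MB/s")
    print(f"best measured:    {report['best_fraction']:.1%} of peak")
    print(f"upstream ceiling: {report['upstream_ceiling_mbs']:.0f} MB/s")
```

Under that assumption, the best measured 7760 MB/s is roughly 52% of peak, while 15.5% of peak works out to only about 2.3 GB/s.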

In terms of power consumption, the Monte Cimone cluster delivers just what it promises: it is low. For example, one SiFive Freedom U740 peaks at 5.935W under the CPU-intensive HPL workload and consumes around 4.81W at idle.
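For a rough sense of energy efficiency, these SoC-level power figures can be combined with the single-node HPL result. This is a back-of-the-envelope sketch only: the power numbers cover the U740 SoC alone, so memory, storage, network cards, and board overheads are excluded, and the resulting GFLOPS-per-watt value is not a measured system efficiency. The names are hypothetical.

```python
# SoC-level figures quoted in the article.
SOC_POWER_HPL_W = 5.935   # peak SoC power under HPL
SOC_POWER_IDLE_W = 4.81   # approximate SoC power at idle
NODE_HPL_GFLOPS = 1.86    # sustained HPL result on a single node
NODES = 8                 # assumption: one SoC per node


def power_summary() -> dict:
    """Rough SoC-only estimates; board, DRAM, SSD, and NIC power are ignored."""
    return {
        # Extra power drawn by the SoC when going from idle to HPL
        "dynamic_w": SOC_POWER_HPL_W - SOC_POWER_IDLE_W,
        # HPL throughput per watt of SoC power (not a whole-node figure)
        "gflops_per_watt": NODE_HPL_GFLOPS / SOC_POWER_HPL_W,
        # Combined SoC power of all eight nodes under HPL
        "cluster_soc_power_w": SOC_POWER_HPL_W * NODES,
    }


if __name__ == "__main__":
    for name, value in power_summary().items():
        print(f"{name:>20}: {value:.3f}")
```

Under those assumptions, HPL adds only about 1.1W per SoC over idle, each SoC delivers roughly 0.31 GFLOPS per watt, and the eight SoCs together draw under 50W.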

Summary

The Monte Cimone cluster used by the researchers is perfectly capable of running an HPC software stack and appropriate test applications, which is already a good result. Moreover, SiFive's HiFive Unmatched board and E4's systems are intended for software porting purposes, so the smooth operation of NFS, LDAP, SLURM, ExaMon, and other programs was a pleasant surprise. The lack of RDMA support, however, was not.

"To the best of our knowledge, this is the first RISC-V cluster which is fully operational and supports a baseline HPC software stack, proving the maturity of the RISC-V ISA and the first generation of commercially available RISC-V components," the team wrote in its report. "We also evaluated the support for Infiniband network adapters which are recognized by the system, but are not yet capable to support RDMA communication."

The cluster's actual performance, however, fell short of expectations. This was largely due to the modest performance and capabilities of the U740, though software readiness also played a role. In other words, while HPC software can run on RISC-V-based systems, it cannot yet deliver the expected performance. That should change once developers optimize programs for the open-source architecture and more capable hardware is released.

Indeed, the researchers say that their future work involves improving the software stack, adding RDMA support, implementing dynamic power and thermal management, and using RISC-V-based accelerators.

As for hardware, SiFive can build SoCs with up to 128 high-performance cores. Such processors are aimed at data center and HPC workloads, so expect them to offer proper performance scalability and a decent memory subsystem. Also, once SiFive enters these markets, it will need to ensure software compatibility and optimization, so expect the chipmaker to encourage software developers to tune their programs for the RISC-V ISA.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

jkflipflop98 They never mention the other side of the highly specialized coin.

Sure, when you're doing the workload that your custom part was designed to do then it's highly efficient and gets the job done fast. But you can never change and pivot to a different workload using the same hardware. If your requirements change over time, you have to purchase all new hardware.