TSMC Controls Production of AI Processors

Nvidia and AMD benefit from the AI and HPC trends, but TSMC controls chip production

While Nvidia, whose market capitalization broke $1 trillion earlier this year, gets most of the credit for riding the ongoing artificial intelligence (AI) and high-performance computing (HPC) megatrends, another company not only benefits heavily from those same megatrends but also essentially controls production of AI processors.

Tens of Billions of Dollars

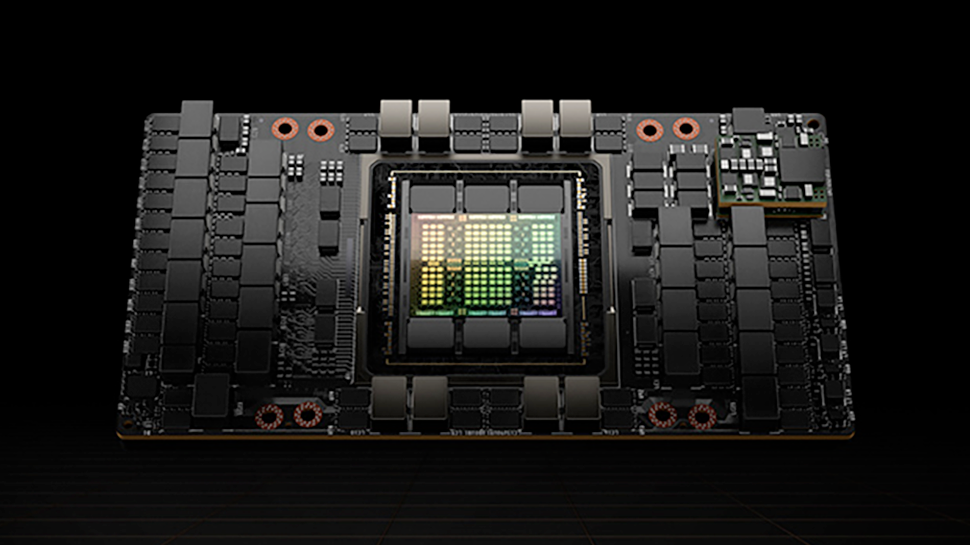

That company is TSMC, which produces some of the most complex processors ever built for AI and HPC machines, including those from Nvidia, AMD, Intel, Tenstorrent, Cerebras, and Graphcore (to name just a few), according to a report from DigiTimes. Nvidia's A100 and H100 (as well as their A800 and H800 derivatives for the Chinese market), the most popular compute GPUs used for AI and HPC workloads, are made at TSMC, as are AMD's EPYC CPUs and Instinct GPUs. Rising AI and HPC stars such as Tenstorrent, and developers of exotic designs such as Cerebras with its wafer-scale processors, also chose TSMC for their products.

While TSMC does not disclose how much it earns from selling CPUs, GPUs, and specialized processors or SoCs for AI, datacenter, HPC, and servers, these products use a lot of silicon, so TSMC probably earns tens of billions of dollars making those products for its high-profile customers. For example, Nvidia's GH100 compute GPU has a die size of 814 mm², whereas AMD's EPYC 'Genoa' uses 12 Zen 4-based CCD chiplets, each measuring around 72 mm², for a total of roughly 864 mm² of N5 silicon.
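The silicon-area comparison above is simple arithmetic, and a minimal Python sketch makes it easy to check. The figures are the ones quoted in the paragraph; the helper name `total_chiplet_area` is hypothetical, and the calculation counts only the CCD chiplets, not any other silicon in the EPYC package.

```python
# Die-area figures as quoted in the paragraph above (mm^2).
GH100_DIE_MM2 = 814      # Nvidia GH100, one monolithic die
GENOA_CCD_COUNT = 12     # Zen 4 CCD chiplets in EPYC 'Genoa'
GENOA_CCD_MM2 = 72       # approximate area of one CCD


def total_chiplet_area(count, area_each_mm2):
    """Return the combined silicon area of identical chiplets."""
    return count * area_each_mm2


# Combined N5 silicon used by the CCDs of one EPYC 'Genoa' processor.
genoa_n5_mm2 = total_chiplet_area(GENOA_CCD_COUNT, GENOA_CCD_MM2)

# How much more silicon that is than a single GH100 die.
difference_mm2 = genoa_n5_mm2 - GH100_DIE_MM2

print(f"EPYC 'Genoa' CCDs: {genoa_n5_mm2} mm^2 of N5 silicon")
print(f"Difference vs. GH100: {difference_mm2} mm^2")
```

Because the per-CCD area is approximate, the total should be read as a rough estimate rather than an exact measurement.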

While we do not have revenue splits for TSMC's rivals Samsung Foundry and GlobalFoundries, these companies are so far behind the Taiwanese contract chipmaker that it is safe to say TSMC is the main foundry beneficiary of AI and HPC in general. It particularly dominates shipments of AI GPUs, as it makes them for both Nvidia (which controls over 90% of shipments) and AMD (which controls less than 10%).

AI and HPC Gaining Importance for TSMC

TSMC itself provides a rather detailed revenue split that clearly distinguishes between automotive, IoT, smartphones, and high-performance computing, but it is not detailed enough to tell the difference between chips for AI, HPC, client PCs, servers, and game consoles. For TSMC, all of these processors and SoCs belong to the HPC segment — a segment that is thriving.

HPC products accounted for 30% of TSMC's revenue, or $10.389 billion, in 2019. That same year, smartphone SoCs accounted for 49% of TSMC's revenue, or $16.97 billion. But the share of HPC products in TSMC's revenue has been growing: the category accounted for 33% in 2020 ($15 billion), 37% in 2021 ($21 billion), and 41% in 2022 ($31.11 billion). The trend has been the opposite for smartphone SoCs, which accounted for 39% of TSMC's sales in 2022 ($29.59 billion).
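As a rough cross-check of the figures above, the sketch below divides each segment's revenue by its share to get the total revenue those two numbers imply, and computes how far each share moved between 2019 and 2022. All values come from the paragraph; the function names are hypothetical, and because the 2020 and 2021 dollar figures are rounded, the implied totals for those years are only approximate.

```python
# year -> (share of TSMC revenue, segment revenue in $ billions),
# as quoted in the paragraph above. 2020 and 2021 dollar figures are rounded.
HPC = {
    2019: (0.30, 10.389),
    2020: (0.33, 15.0),
    2021: (0.37, 21.0),
    2022: (0.41, 31.11),
}
SMARTPHONE = {
    2019: (0.49, 16.97),
    2022: (0.39, 29.59),
}


def implied_total(share, revenue_bn):
    """Total revenue ($ billions) implied by one segment's share and revenue."""
    return revenue_bn / share


def share_change_points(data, start, end):
    """Change in revenue share between two years, in percentage points."""
    return round((data[end][0] - data[start][0]) * 100, 1)


for year, (share, revenue) in HPC.items():
    total = implied_total(share, revenue)
    print(f"{year}: HPC {share:.0%} = ${revenue}B, implied total ~${total:.2f}B")

print("HPC share change 2019-2022:", share_change_points(HPC, 2019, 2022), "points")
print("Smartphone share change 2019-2022:",
      share_change_points(SMARTPHONE, 2019, 2022), "points")
```

If the HPC and smartphone figures for the same year imply nearly the same total, the quoted percentages and dollar amounts are at least internally consistent.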

While AMD and Nvidia buy boatloads of datacenter-oriented silicon from TSMC, Apple is still the largest customer for the world's No. 1 maker of chips — especially now that it has both smartphone and PC SoCs (which fall into the HPC category). Apple alone was responsible for approximately 23% of TSMC's total sales in 2022, according to DigiTimes.


More Chips Incoming

As the semiconductor sector recovers from its downturn, rising interest in generative AI is stimulating the market. Nvidia is benefiting greatly from this AI surge through its TSMC-manufactured A100/A30/A800 and H100/H800 compute GPUs. Similarly, AMD is expanding orders with TSMC for its upcoming Instinct MI300-series products, which will enter mass production in the second half of 2023 on TSMC's N5-class node.

In addition, Apple, AMD, and Nvidia are committed to using TSMC's N3 (3nm-class) and N2 (2nm-class) production technologies for future chips, according to the DigiTimes report.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.