Alleged GDDR6X shortage could briefly hinder Nvidia gaming GPU supply starting August — affected models include RTX 4070 through RTX 4090

Thankfully, the supply constraints of affected GPUs will reportedly be brief.

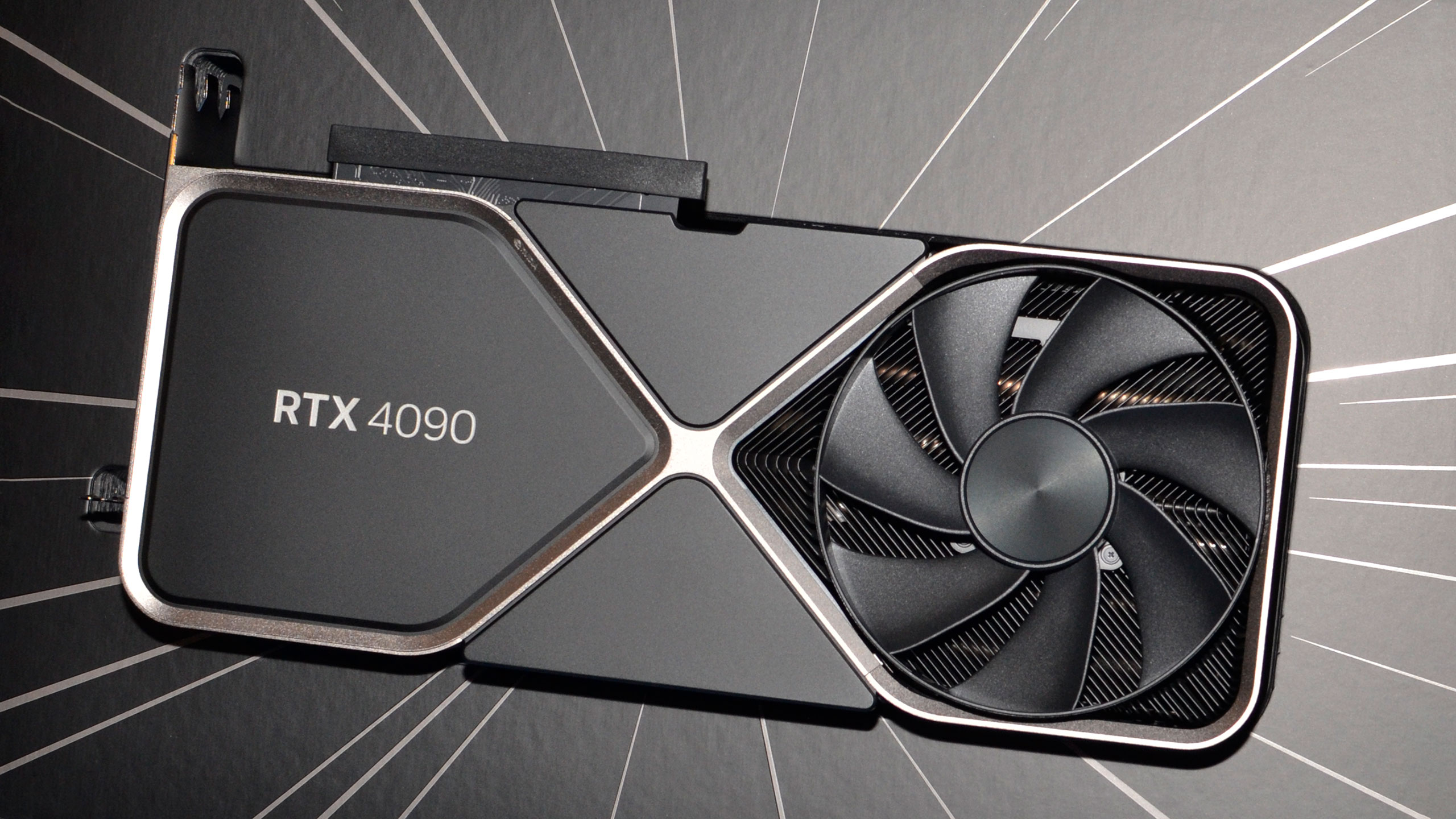

A rumor suggests that Nvidia's GeForce RTX 40 series, which includes some of the best graphics cards, will face brief supply constraints beginning next month. According to a report from ChannelGate (sourced from Harukaze5719), a failed batch of GDDR6X memory modules will temporarily constrain supply of the RTX 4070 through the RTX 4090 starting in August.

A batch of Micron GDDR6X memory modules has purportedly failed quality control, forcing it to be replaced. The batch could affect all graphics cards that utilize GDDR6X memory modules. This temporary hiccup is only expected to reduce the supply of affected GPUs briefly and is "not expected to last too long."

To combat this hiccup, Nvidia is reportedly "adopting a supply and demand balance strategy" for its RTX 40-series graphics cards in China.

Among current-generation consumer graphics cards, only Nvidia's Ada Lovelace GPUs from the RTX 4070 to the RTX 4090 use GDDR6X memory. AMD's current Radeon RX 7000 series doesn't use GDDR6X, so the Red Team isn't affected by the alleged bad batch of Micron GDDR6X chips.

Not every Ada Lovelace GPU uses GDDR6X, either. Models such as the RTX 4050 (mobile), RTX 4060, and RTX 4060 Ti use slower GDDR6 memory, so they should be unaffected by the GDDR6X shortage.

Take this information with a pinch of salt; it comes from a rumor, so it could be false. Either way, the good news is that the incident is expected to affect mid-range and high-end RTX 40 series supply only briefly. By the looks of it, China will be the most heavily impacted market: Nvidia has purportedly applied its supply-and-demand balancing strategy only in China to counter Micron's quality control failure.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


Avro Arrow Honestly, I think that GDDR6X is pretty overrated because Radeons just use GDDR6 and there hasn't been any notable performance deficit that anyone has noticed. The only advantage that I've seen related to GDDR6X has been lower power draw at the same clock speeds. It hasn't really translated into any real performance gains.

There's a Tom's article from last year that chronicles just how meaningless the difference between GDDR6 and GDDR6X is. The article's analysis is based on performance differences between an RTX 3060 Ti with GDDR6 and an RTX 3060 Ti with GDDR6X:

Nvidia RTX 3060 Ti Gets Few Benefits from GDDR6X, Says Reviewer

DS426

I think GDDR6X's performance advantage is much more relevant at the high end, largely, as you mentioned, to provide better performance-per-watt, and indeed the 4070 through 4090 provide slightly better PPW than their Radeon counterparts. That might also be largely true going to GDDR7, as otherwise RDNA 4 wouldn't be holding back as much as it's rumored to be, even without a powerhouse high-end model.

Amdlova AMD is throwing cache and more cache at the problem to counter lower memory bandwidth.

Nvidia has better GDDR and power efficiency.

And 4 or 5% more performance just from changing the memory is good enough. If GDDR7 can achieve 20%, as the rumors say, it will be another level of graphics.

It's basically free performance without increasing the melting power.

umeng2002_2 How many GPUs does nVidia™ sell in China? All we hear are stories about nVidia's™ GPU supply in China. Is China their biggest market or something? I don't get it.

jeffy9987

Nvidia basically owns the whole Chinese market; I think they have 90+% market share there.

Avro Arrow

Radeon doesn't make a real powerhouse model mostly because AMD knows that people with that kind of money to spend on a video card all want GeForce, so the rather steep cost of creating such a card would be a complete waste.

purpleduggy "Shortages" every time they want to scalp and create artificial scarcity. Just use HBM on-die chiplets. Way higher bandwidth. It's time for 1024-bit GPU memory.

TheHerald

Totally not true. If the 4090 were AMD's, it would sell like hotcakes.

RealSibuscus Oh, look at this: another excuse for Nvidia to raise their prices through no fault of the customer.