Incoming RTX 5090 could need massive power stages for possible 600-watt power draw – Nvidia’s next flagship GPU rumored to sport copious amounts of VRMs

And we thought the 4090 was power-hungry.

Nvidia’s soon-to-launch RTX 5090 may push the limits for how much power a gaming GPU can consume, as the card may use 29 VRMs in total, according to Benchlife.info.

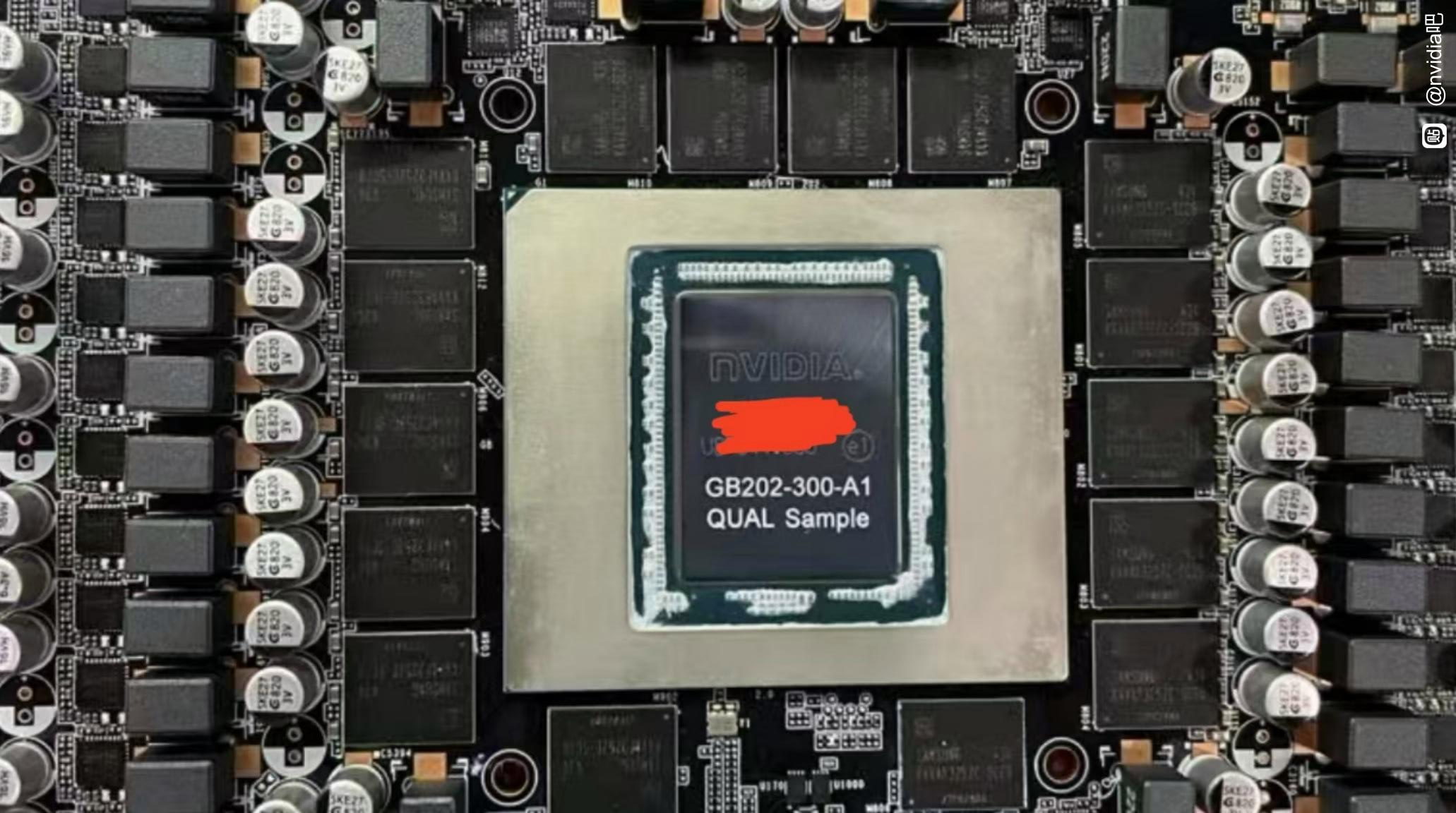

The Chinese outlet apparently followed up on an image of what seems to be a pre-release model of a top-end Blackwell GPU, potentially the 5090; the image was published to the semiconductor enthusiast forum Chiphell and ostensibly depicts a GB202 qualification sample chip. Given that the GPU appears to come with 32GB of VRAM and lots of power stages, and looks to have a die size of roughly 700 mm², it’s been assumed this is the 5090.

While most of the power stages are visible in the image, it’s hard to say how many there are in total and how they’re organized. Potentially relying on sources familiar with Nvidia’s impending RTX 50 series, Benchlife.info claims that the 5090 uses a 16+6+7 power stage configuration. This makes for 29 total VRMs, a substantial increase over the 23 VRMs that the RTX 4090 Founders Edition used in a 20+3 configuration.
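The stage counts above are simple sums, and a short sketch makes the comparison explicit. The groupings are the ones reported in this article (the 5090 figures are an unconfirmed rumor); the function and constant names are hypothetical and only for illustration.

```python
# Power stage groupings as reported in the article (stage counts only).
RTX_5090_RUMORED = (16, 6, 7)  # per Benchlife.info, unconfirmed
RTX_4090_FE = (20, 3)          # GPU stages + memory stages


def total_stages(config):
    """Sum the stages across every group in a configuration."""
    return sum(config)


def stage_increase(new, old):
    """Return (absolute, percent) increase in total stages from old to new."""
    delta = total_stages(new) - total_stages(old)
    return delta, 100 * delta / total_stages(old)


delta, pct = stage_increase(RTX_5090_RUMORED, RTX_4090_FE)
print(f"5090 (rumored): {total_stages(RTX_5090_RUMORED)} stages")
print(f"4090 FE: {total_stages(RTX_4090_FE)} stages")
print(f"Increase: {delta} stages ({pct:.1f}%)")
```

If the rumor holds, that is six more stages than the 4090 Founders Edition, or roughly a quarter more.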

A VRM configuration divided into three parts would be a departure from what Nvidia did with the 4090 and 3090 (Ti). Both of those cards used the vast majority of their VRMs for the GPU, and then allocated three for the memory. Some third-party GPUs set up power stages in three parts, but usually, the second group only has one or two stages.

Presumably, the first two groups are allocated to the graphics chip, which would make sense if the 5090 has a 600-watt Total Graphics Power (TGP), a rumor that Benchlife.info claims is accurate. That would leave the remaining seven stages for the memory, more than twice the three stages the 4090 uses for its 24GB of GDDR6X. If true, this implies that the 5090’s rumored 32GB of memory might consume significantly more power than VRAM on Nvidia’s previous cards.

If the 5090 does use a 29-stage power design, Nvidia may need a bigger or at least better cooler than it uses for the 4090. The 4090’s cooler is mostly the same as the one used for the 3090 Ti but with some slight modifications. With an extra 150 watts to cool, though, more significant alterations may be necessary unless Nvidia is okay with hotter GPU temperatures or more noise.
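To put the cooling gap in numbers, here is a rough sketch. It assumes the rumored 600-watt TGP and takes 450 watts for the 4090, which is what the "extra 150 watts" figure implies. The per-stage average is deliberately naive: it splits TGP evenly over every stage, which real boards do not do, so treat it as a ballpark only. All names are hypothetical.

```python
# TGP figures in watts. 600 W is the rumor reported by Benchlife.info;
# 450 W is what the article's "extra 150 watts" implies for the 4090.
RUMORED_5090_TGP_W = 600
RTX_4090_TGP_W = 450


def tgp_increase(new_w, old_w):
    """Return (extra watts, percent increase) between two TGP figures."""
    extra = new_w - old_w
    return extra, 100 * extra / old_w


def naive_watts_per_stage(tgp_w, stage_count):
    """Crude average that assumes TGP is spread evenly over all stages.

    Real boards split power unevenly between GPU and memory rails,
    so this is an illustration, not a design figure.
    """
    return tgp_w / stage_count


extra_w, pct = tgp_increase(RUMORED_5090_TGP_W, RTX_4090_TGP_W)
print(f"Extra power to cool: {extra_w} W ({pct:.1f}% more)")
print(f"5090 (rumored, 29 stages): {naive_watts_per_stage(RUMORED_5090_TGP_W, 29):.1f} W per stage")
print(f"4090 FE (23 stages): {naive_watts_per_stage(RTX_4090_TGP_W, 23):.1f} W per stage")
```

Under these assumptions, the cooler would have to handle a third more heat, while the average load per stage would stay close to the 4090's, since the stage count grows almost in step with the power budget.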

Benchlife.info’s article also referenced rumors that the 5090 would support PCIe 5.0 and come with a 14-layer PCB to accommodate the high-power design of the GPU better, but since publication, that part of the piece has been edited out. It’s unclear why the publication deleted these details, especially since Benchlife.info had been the first to claim PCIe 5.0 support and a 14-layer PCB back in September.


Matthew Connatser is a freelancing writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.


MergleBergle

WHEW! This thing is going to cost more than my next gaming PC, and draw nearly as much power.

Not being the type who needs to get the latest AAA titles the instant they come out, but plays games that are less demanding, this won't be for me, but there will be a market, however niche it might be ;)

bourgeoisdude

evilpaul said: I bet they just have it run super loud. 5700FX time.

I thought it was the FX5800?

I'm wondering why NVIDIA is even producing something this size when they shouldn't even need to this generation?

txfeinbergs

bourgeoisdude said: I thought it was the FX5800? I'm wondering why NVIDIA is even producing something this size when they shouldn't even need to this generation?

... because, profit. No matter what it costs, every single one will be bought by scalpers and sold for an even higher price.

husker

Technology companies have a big problem - at least on the consumer side. They can continue to improve on their products and explain very technically and accurately how they are improved. Eventually, the product matures to a point of diminishing returns, which challenges users to feel a meaningful difference in the real-world experience of using the product. Such things are nice and will always exist, but at some point, your $80,000 BMW is good enough and you're not actively looking to buy a $3,000,000 Bugatti.

txfeinbergs

husker said: Technology companies have a big problem - at least on the consumer side. They can continue to improve on their products and explain very technically and accurately how they are improved. Eventually, the product matures to a point of diminishing returns, which challenges users to feel a meaningful difference in the real-world experience of using the product. Such things are nice and will always exist, but at some point, your $80,000 BMW is good enough and you're not actively looking to buy a $3,000,000 Bugatti.

We aren't really there yet though (although from a price standpoint, I agree with you). Games still don't really come close to "real life" yet. I saw an AI video of a Grand Theft Game made to look photo realistic and it was clear we have a ways to go. No one will be able to afford it though.

txfeinbergs

hotaru251 said: wonder what Nvidias gonna do when gpu's take more than a NA outlet can supply.

That is easy. 220V outlet like you use for your dryer and oven. Now you will have to wire one in your office. "Poor" people will have a double extension cord that will allow you to plug into two separate outlets in two different rooms.