The RTX 5090's GB202 GPU will reportedly be the largest desktop chip from Nvidia since 2018 coming in at 744mm-squared — 22% larger than AD102 on the RTX 4090

We're once again approaching reticle size limits.

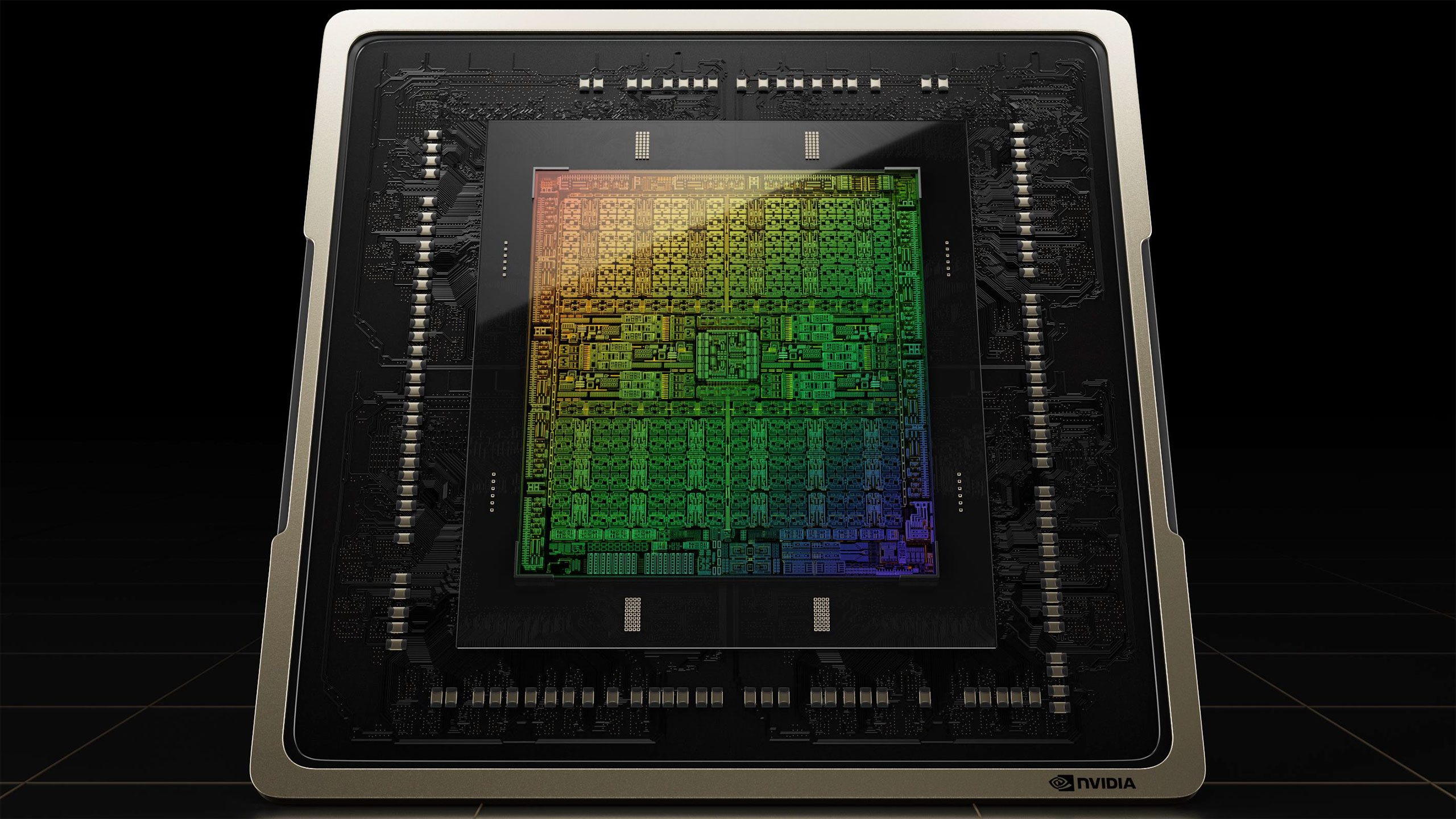

Nvidia's RTX 5090 will undoubtedly be a powerhouse GPU, and part of the reason will be the enormous GB202 chip powering it, which allegedly measures 744 mm², according to renowned hardware leaker MEGAsizeGPU on X. If the leak proves correct, the RTX 5090's die is 22% larger than the RTX 4090's AD102 GPU. A larger die means fewer chips per wafer and a lower share of defect-free chips, which would likely drive costs up for Nvidia and prices up for end customers.
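To illustrate why a bigger die tends to cost more, here is a rough sketch using the common gross dies-per-wafer approximation and a simple Poisson yield model. The 300 mm wafer is standard, but the defect density `D0` is a hypothetical value picked for illustration, not a TSMC figure, and the model ignores scribe lines, edge exclusion, and the fact that Nvidia can still sell partially defective dies as cut-down parts.

```python
import math

WAFER_DIAMETER_MM = 300.0  # standard 300 mm wafer


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = WAFER_DIAMETER_MM) -> int:
    """Gross-die approximation: wafer area / die area, minus an edge-loss term.

    Ignores scribe lines and edge exclusion, so real counts are somewhat lower.
    """
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius**2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(gross - edge_loss)


def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson defect model."""
    return math.exp(-defects_per_mm2 * die_area_mm2)


# Hypothetical defect density (0.1 defects/cm^2 = 0.001 defects/mm^2), for illustration only.
D0 = 0.001

for name, area in [("GB202", 744.0), ("AD102", 609.0)]:
    dpw = dies_per_wafer(area)
    y = poisson_yield(area, D0)
    print(f"{name}: {dpw} candidate dies/wafer, {y:.0%} defect-free, ~{dpw * y:.1f} perfect dies")
```

Under these assumptions, GB202 yields fewer candidate dies per wafer and a smaller fraction of them are defect-free, so the cost per fully working die rises faster than the 22% area increase alone would suggest.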

The Nvidia Blackwell RTX 50-series desktop GPUs are rumored to use TSMC's 4NP process (a 5nm-class node), an enhanced version of the 4N node used for the Ada Lovelace architecture (RTX 40-series). 4NP is reportedly expected to offer a 30% density improvement, though that figure may not fully carry over to the RTX 50 series. Regardless, a 744 mm² die is approaching the reticle limit of about 858 mm² (26 mm x 33 mm).
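As a quick check on how close GB202 sits to that limit, the sketch below compares the die against the standard 26 mm x 33 mm scanner exposure field. The 24 mm x 31 mm die dimensions are the ones the leak reports; the helper `fits_in_field` is a hypothetical name used only for this illustration.

```python
# Standard maximum exposure field for current (0.33 NA) lithography scanners.
RETICLE_W_MM, RETICLE_H_MM = 26.0, 33.0
RETICLE_AREA_MM2 = RETICLE_W_MM * RETICLE_H_MM  # 858 mm^2

# GB202 die dimensions as reported by the leak.
die_w_mm, die_h_mm = 24.0, 31.0
die_area_mm2 = die_w_mm * die_h_mm  # 744 mm^2


def fits_in_field(w: float, h: float, field_w: float, field_h: float) -> bool:
    """A rectangular die fits if it fits inside the field in either orientation."""
    return (w <= field_w and h <= field_h) or (h <= field_w and w <= field_h)


print(f"Die area: {die_area_mm2:.0f} mm^2, reticle field: {RETICLE_AREA_MM2:.0f} mm^2")
print(f"GB202 uses {die_area_mm2 / RETICLE_AREA_MM2:.1%} of the reticle field")
print("Fits in one exposure:", fits_in_field(die_w_mm, die_h_mm, RETICLE_W_MM, RETICLE_H_MM))
```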

To get around this limit, Nvidia used a multi-chiplet design for its data center Blackwell B200, which joins two dies in one package, but that approach apparently isn't viable for desktops given the high packaging costs.

According to the tipster, the RTX 5090's GB202 GPU measures 744 mm² (24 mm x 31 mm), while the entire package measures 3528 mm² (63 mm x 56 mm). Package size matters less, since it includes not just the die but also capacitors, resistors, and other structural materials. Either way, GB202 would be the largest consumer GPU die (by area) since 2018. Turing keeps its crown, as the TU102 (RTX 2080 Ti) is just 10 mm² larger than GB202, per this leak.

| GPU | Architecture | Die Size | Relative to GB202 (GB202 = 100%) |
|---|---|---|---|
| GB202 | Blackwell | 744 mm² | 100% |
| AD102 | Ada Lovelace | 609 mm² | 82% |
| GA102 | Ampere | 628 mm² | 84% |
| TU102 | Turing | 754 mm² | 101% |
| GP102 | Pascal | 471 mm² | 63% |
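The percentage column is simply each die's area divided by GB202's 744 mm², rounded to the nearest percent. This short script reproduces it from the die sizes listed in the table, along with the 22% growth over AD102.

```python
# Die sizes in mm^2, taken from the table.
DIE_SIZES_MM2 = {
    "GB202": 744,
    "AD102": 609,
    "GA102": 628,
    "TU102": 754,
    "GP102": 471,
}

reference = DIE_SIZES_MM2["GB202"]

# Each die's area as a whole-number percentage of GB202's area.
ratios = {chip: round(100 * area / reference) for chip, area in DIE_SIZES_MM2.items()}
for chip, pct in ratios.items():
    print(f"{chip}: {pct}% of GB202")

# How much larger GB202 is than AD102, in percent.
growth_pct = round(100 * (DIE_SIZES_MM2["GB202"] / DIE_SIZES_MM2["AD102"] - 1))
print(f"GB202 is {growth_pct}% larger than AD102")
```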

Nvidia always builds some redundancy into its GPUs, so the top-end RTX 5090 likely won't use a fully enabled die. 'Prime' chips in which the full die works are typically reserved for data center and professional GPUs. The full GB202 allegedly offers 192 SMs, and going by current leaks, the RTX 5090 will use an 88.5%-enabled GB202 die (170 SMs).

That closely matches the RTX 4090, which enabled 88.9% of AD102 (128 of 144 SMs). The RTX 5080, per reports, is cut down much further, with just 84 SMs, so its die could be roughly half the size of GB202. Nvidia will most likely incur heavy manufacturing costs for the RTX 5090, and those will almost certainly be passed on to the end customer. We expect a very large price gap between the 80-class and 90-class Blackwell cards.
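The enablement percentages follow directly from the rumored SM counts quoted above, as this sketch shows. Keep in mind that SM count is only a rough proxy for die area, since memory controllers, caches, and I/O blocks don't scale with it; the function name `enabled_fraction` is illustrative.

```python
def enabled_fraction(enabled_sms: int, full_die_sms: int) -> float:
    """Share of a die's SMs that are enabled on a given card."""
    return enabled_sms / full_die_sms


# (enabled SMs, SMs on the full die); RTX 5090 figures are rumored.
configs = {
    "RTX 5090 (GB202)": (170, 192),
    "RTX 4090 (AD102)": (128, 144),
}

for card, (enabled, full) in configs.items():
    print(f"{card}: {enabled}/{full} SMs = {enabled_fraction(enabled, full):.1%} enabled")

# Rumored RTX 5080 SM count relative to a full GB202.
print(f"RTX 5080: 84 SMs = {enabled_fraction(84, 192):.1%} of GB202's SM count")
```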

A recent leak claims that Jensen Huang will unveil Nvidia's flagship RTX 5090 and RTX 5080 GPUs at CES 2025. Mainstream RTX 5070 and RTX 5070 Ti GPUs are expected to follow later in the first quarter, with budget RTX 5060 models after that.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

-

bit_user: If you look back through the history of Nvidia GPUs, their die sizes always got bigger when they released another generation on the same manufacturing node. That's both because nodes tend to get cheaper over time and because it's really hard to offer substantially more performance on the same node without using more die area. Sure, they can tweak the microarchitecture, but that's not going to deliver the kind of generational improvements people have come to expect from GPUs.

That said, I expect Nvidia isn't going to do us any favors on pricing. If the launch price of the GB202 isn't 22% higher than the AD102's, I bet it'll at least be close! I also suspect peak power could be as much as 22% higher, especially when you consider it's got 33% more channels of GDDR6X and it's running at a higher data rate.

acadia11: OK, since I'm doing the rational thing and not splurging on a B200 for my AI needs… fine, I'll be practical and settle for an RTX 5090…

Mama Changa: This is close to the theoretical maximum die size of ~858 mm² on low-NA EUV. This is why they have no choice but to move to chiplets eventually, as high-NA (0.55 NA) EUV halves that maximum size, so no giant, power-hungry monolithic chips for 2nm. I'm not sure if Rubin is on N3P, but it seems likely if Nvidia's release timeframe stays on schedule, as I doubt 2nm will be ready in time.
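The halving mentioned in this comment comes from high-NA EUV's anamorphic optics, which shrink the full 26 mm x 33 mm exposure field to 26 mm x 16.5 mm. A minimal area-only check against the leaked 744 mm² GB202 figure, assuming these standard field sizes, looks like this:

```python
# Full exposure field for 0.33 NA ("low-NA") EUV scanners, in mm.
LOW_NA_FIELD = (26.0, 33.0)
# 0.55 NA (high-NA) EUV uses anamorphic optics, halving the field height.
HIGH_NA_FIELD = (26.0, 16.5)

GB202_AREA_MM2 = 744.0  # leaked figure


def field_area(field: tuple[float, float]) -> float:
    """Area of a rectangular exposure field in mm^2."""
    width, height = field
    return width * height


# Area comparison only: a necessary, not sufficient, condition for fitting.
for label, field in [("low-NA", LOW_NA_FIELD), ("high-NA", HIGH_NA_FIELD)]:
    area = field_area(field)
    print(f"{label}: {area:.0f} mm^2 max, GB202-sized die fits: {GB202_AREA_MM2 <= area}")
```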

dimar: I'm super hyped about independent benchmarks of the RTX 5090 along with the Ryzen 9 9950X3D and whatever Intel's top Core Ultra CPU will be. I'm prepared to be disappointed as well :-) Also hoping AMD makes something competitive with the 5090.

jlake3 (replying to dimar): Got some bad news for ya on an AMD equivalent to the 5090.

Rumours have been that AMD’s top RDNA4 die ran into development issues that were gonna cost a lot of money/engineering hours to fix, and since their datacenter/AI plans don’t utilise RDNA (they have compute-optimised CDNA dies for that) and gaming GPUs at that price are a low sales volume, they decided just to cancel it and that’s how “focus on the midrange” became the plan for RX 8000.

Giroro (replying to jlake3): On the other hand, AMD is copying Nvidia's strategy of missing their planned GPU launch window by 3-6 months. They couldn't have just launched this year, because AMD has no idea what to sell their cards for until Nvidia fixes the prices.

They gotta do something with their engineers with all that extra time on their hands.

It's not like AMD needed that time to let manufacturing catch up harvesting their infinite AI money pit, like Nvidia.

Giroro: If the RTX 5090 has a smaller die than the RTX 2080 Ti, then the 5090 will be cheaper than the 2080 Ti, obviously.

It's not like the markups on these GPUs are so insanely high over the base cost of the die that a 20% increase in die cost would be a barely noticeable blip compared to the price of the product, or anything.

I mean, they'll still probably raise the price of a 5090 by a lot, because it's going to be for AI, not gaming.

Or they'll deliberately gimp AI performance in their gaming cards, which is still on the table... But then why would Nvidia waste the silicon on gamers at all?

Nvidia is not a gaming company anymore. We're just going to have to deal with it.

jp7189 (replying to Giroro): Nvidia's CUDA dominates specifically because it works even on entry-level cards. Cost-conscious college kids get to play with it, and then after a few years become influential with company money. Nvidia won't do anything to jeopardize that. They'll use VRAM sizes to entice people and companies to go with higher tiers. The RTX 6000 Ada is pretty much the same as a 4090 but with double the VRAM and 4x the price.

I could see a world where they release a 24GB 5090 with 2GB chips and later a Ti or Titan with 36GB using 3GB chips. (I don't believe the 512-bit memory bus rumors.)

Likewise, an RTX 6000 Blackwell might have 48GB to start and a 72GB option down the road.
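The capacities in this comment follow from simple bus arithmetic. Each GDDR6/6X/7 device has a 32-bit interface, so a 384-bit bus (the RTX 4090's width, assumed here since the comment doubts the 512-bit rumor) hosts 12 devices. The 48GB and 72GB professional configurations are assumed to use clamshell mode, which pairs two devices on each 32-bit channel, as the 48GB RTX 6000 Ada does on its 384-bit bus. The function name and scenario list are illustrative.

```python
def vram_capacity_gb(bus_width_bits: int, chip_density_gb: int, clamshell: bool = False) -> int:
    """Total VRAM for a GDDR memory subsystem.

    Each GDDR device has a 32-bit interface. In clamshell mode two devices share
    one 32-bit channel (16 bits each), doubling capacity at the same bus width.
    """
    devices = bus_width_bits // 32
    if clamshell:
        devices *= 2
    return devices * chip_density_gb


scenarios = [
    ("384-bit, 2GB chips", 384, 2, False),
    ("384-bit, 3GB chips", 384, 3, False),
    ("384-bit clamshell, 2GB chips", 384, 2, True),
    ("384-bit clamshell, 3GB chips", 384, 3, True),
    ("512-bit (rumored), 2GB chips", 512, 2, False),
]
for label, bus, density, clamshell in scenarios:
    print(f"{label}: {vram_capacity_gb(bus, density, clamshell)}GB")
```

A 512-bit bus carries 16 channels versus 12 at 384 bits, which is where the "33% more channels" figure mentioned earlier in the thread comes from.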

bit_user (replying to jp7189 on the RTX 6000 Ada): They also back off the clock speed, and each card comes with a substantially longer warranty that's rated for 24/7 compute workloads. Some of their professional (formerly Quadro) cards also include ECC memory, such as that one. In terms of power, it's rated for only 300 W.

At my job, we once used Quadro P1000 cards for a certain project. It bugged me that we didn't use the GTX 1050 Ti, which had higher clocks and ran at up to 75 W instead of about 51 W, while costing only half as much. The answer I got back was that we had to use the Quadro version for its longer warranty, and because our workload potentially voided the warranty and CUDA ToS of the GTX product.

jp7189 (replying to bit_user): Can't comment on the ToS; never dug into it. Regarding warranty on specific products I've used, the MSI Suprim 4090 is 3 years, same as the PNY 6000 Ada. The former has an option to enable ECC in the Nvidia Control Panel, though I'm not sure how to verify it actually works. The latter also uses much slower non-X VRAM, but that's still way faster than swapping to system RAM if you need more than the 24GB on the 4090.