Can Your Old Athlon 64 Still Game?

Game Benchmarks: Far Cry

The oldest game we look at is Far Cry, released in March 2004. With its lush tropical islands and open environments, Far Cry was a beautiful and demanding game in its day. As we would expect, Far Cry is a single-threaded game. The single-core Athlon 64s were the top gaming CPUs back then, and there was nothing anywhere near the GPU power of the 8800 GS available at that time.

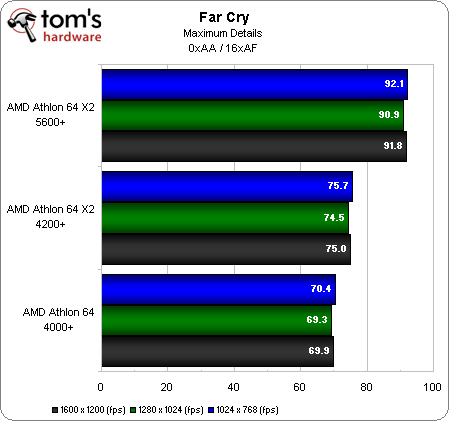

We used the Hardware OC Far Cry Benchmark Utility v 1.8 for testing Far Cry and had it set to maximum details and 16xAF.

Taking a look at the results, we see quite a large amount of CPU scaling taking place. The high-clocked X2 5600+ is able to pull over 20 FPS ahead of the A64 4000+. Our 8800 GS is having an easy time at these settings, so we are CPU-bound and see about the same results at all resolutions. The big surprise is that the X2 4200+ is able to beat the A64 4000+ despite a 200 MHz lower clock speed and half the L2 cache per core. The best explanation for this is that while Far Cry is not multi-threaded, the Nvidia display drivers we use are. It seems that, in this game, multi-threaded drivers are making a difference in performance.

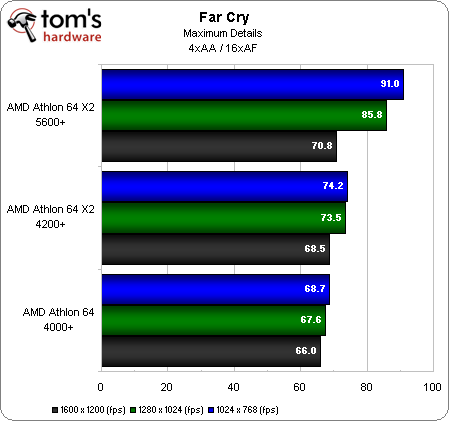

Enabling 4x FSAA, we again see a large FPS difference between these CPUs, with the high-clocked X2 5600+ able to show a better picture of how the 8800 GS performs. At 1600x1200, we see that the 8800 GS starts to become the limiting factor, although we still observe a gap of a couple of FPS between the CPUs even at these settings.

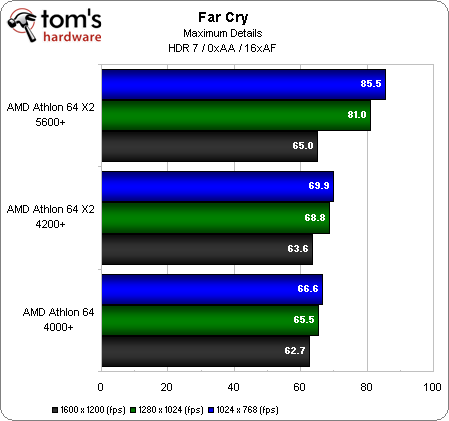

HDR was not part of the original Far Cry but was added along with Shader Model 3.0 support in patch 1.3. Just like with 4x AA, we see a slight drop in FPS at 1280x1024, and a 20 FPS drop at 1600x1200. This drop is barely evident with the A64 4000+, as the CPU was already limiting its FPS results.

With Far Cry, we see some interesting results for our three CPUs, with the X2 5600+ taking top honors. But in the end, all three CPUs offer very playable performance. This once-hardware-demanding game is no struggle for a GPU like the 8800 GS, even with 4xAA or HDR.


Schip FIRST POST!!! Nice Article though. I knew my brother would soon be doomed with his P4 2.8c ;) -

"AMD Athlon 64 X2 4200 + dual-core, which has a 2.2 GHz Manchester architecture with 512 MB L2 cache per core."

oau! that's a lot of cache :D -

neiroatopelcc I haven't read the actual article yet, but I bet the simple answer is no!

I've got a backup gaming rig at home that barely cuts it. An x2 1.9ghz (oc'ed to 2.4) with an 8800gtx and 3gb memory. That rig struggles at 1280x1024 in some situations, and it can only be attributed to the cpu really. -

bf2gameplaya 2.8GHz Opteron 185 (up from 2.6GHz) with 2x1MB L2 cache is the ultimate s939 CPU....blows these weak benchmarks away.

Who would have thought DDR would have such durability? There's something to be said for CAS2! -

neiroatopelcc But your opteron cpu still limits the modern graphics cards.

Two years back I bought my 8800gtx, and realized it wouldn't come to its full potential in my opteron 170 (@ 2.7). A friend with another gtx paired with an e6400 chip (@ 3ghz) scored a full 30% higher in 3dmark than I, and it showed in games. Even in wow where you'd expect a casio calculator would deliver enough graphics power.

In short - yes, ddr still works if you've got enthusiast parts, but that can't negate the effect a faster cpu would give. At least at decent resolutions (22" wide) -

dirtmountain This is a great article! It will give me something to show when I'm talking to people about a new system or just a GPU/PSU upgrade. Great job by Henningsen. -

NoIncentive I'm still using a P4 3.0 @ 3.4 with 1 GB DDR 400 and an nVidia 6800GT...

I'm building a new computer next week. -

randomizer I can echo the findings in Crysis. It didn't matter what settings I ran with a 3700 Sandy and an X1950 pro, the framerate was almost the same (albeit low 20s because the card is slower). Added an E6600 to the mix and my framerate tripled at lower settings.

It would have been interesting to see how a 3000+ Clawhammer (C0 stepping) would do in Crysis. Single-channel memory, poor overclocking capabilities... FAIL! -

ravenware, quoting bf2gameplaya: "2.8GHz Opteron 185 (up from 2.6GHz) with 2x1MB L2 cache is the ultimate s939 CPU....blows these weak benchmarks away. Who would have thought DDR would have such durability? There's something to be said for CAS2!"

This is true about the DDR. I recall an article on Tom's right after the release of the AM2 socket which tested identical dual core processors against their 939 counterparts; the tests showed little to no performance gains.

Great article, there has been some discussion about this in the forums as well.

I currently own a 939 4200+ x2 that's paired with a 7800GT; and this article shows what I thought to be accurate about the AMD64 chips. They're not as fast as some of the C2D's but they still kick ass.

Good job pointing out the single core factor in newer games too. As soon as the crysis demo was released I upgraded my San Diego core to a dual core and noticed the difference in crysis immediately.

This article gives me further confidence in my decision to hold on upgrading my system. I want to hold out for Windows7 D3D11 and more money to build an ape sh** machine :D

Nice article!!