What Does DirectCompute Really Mean For Gamers?

We've been bugging AMD for years now, literally, to show us what GPU-accelerated software can do. Finally, the company is ready to put us in touch with ISVs in nine different segments to demonstrate how its hardware can benefit optimized applications.

GPGPU Gets Another Practical Application

It seems like only a few months ago that the crew here at Tom’s Hardware started approaching hardware vendors and software developers with our desire to more thoroughly evaluate the capabilities of OpenCL- and DirectCompute-capable components using real-world metrics. We've gone into as much depth as possible, but there just didn't seem to be much to report on. Sure, we'd run tests in Metro 2033 with its DirectCompute-based depth of field filter turned on and off. But the only conclusion we could draw was, "Wow, that sure hammers performance."

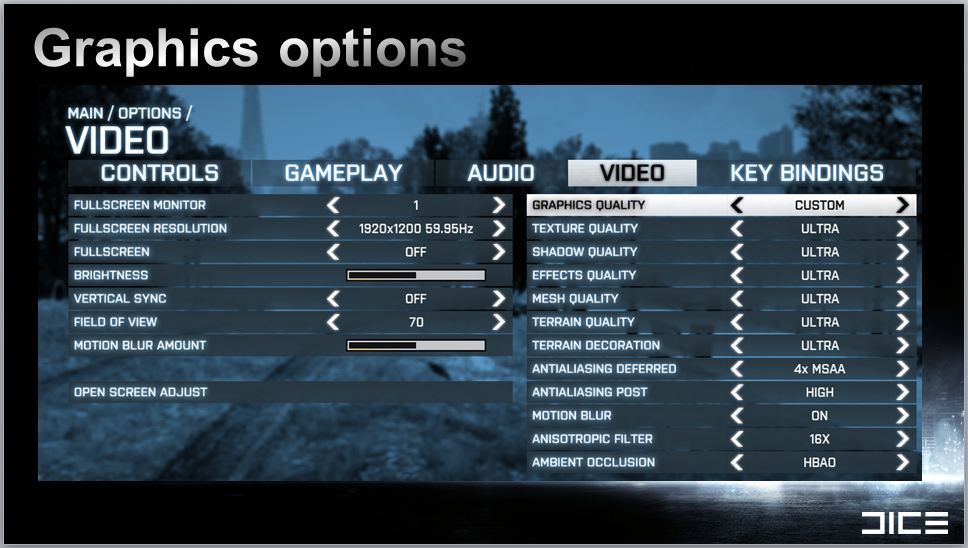

Finally, that situation is changing. A growing roster of games now implements DirectCompute. We're testing four of them in this piece: Battlefield 3, DiRT 3, Civilization 5, and of course, Metro 2033. Unlike most of the game testing we do at Tom’s Hardware, our focus here is not on raw system or component performance. Yes, this is another piece AMD helped us put together with technical insight and help talking to developers, so we're looking at the company's APUs and comparing them to discrete graphics. But there is more to this story than frame rate impact. It’s about enabling techniques for achieving realism that were previously infeasible in the days before GPU-based compute assistance.

“Getting more speed in games based purely on hardware revisions is not reaching the same sort of lofty heights we’ve seen in many years past,” says Neal Robison, director of ISV relationships at AMD. “Software developers typically didn’t have to recode their software because advancements in the hardware would give them an uplift that was, in many cases, double the performance of the previous generation. But now it’s getting to the point where we’re adding cores rather than beefing up the individual chips. Developers actually have to make some changes to their software—in some cases fundamental architectural changes. Heterogeneous compute is one of those keys that will allow you as a developer to literally get at the guts of the processor and make that giant leap forward with your software to encourage folks to upgrade.”

Robison's assessment of what developers will do with heterogeneous computing seems spot on when it comes to applications like Adobe Premiere Pro CS5 (specifically, its CUDA-enabled Mercury Playback Engine) and video transcoding. Both parallelized workloads readily take advantage of optimizations for graphics architectures. However, we haven't yet seen a performance-oriented benefit attributable to OpenCL or DirectCompute in games. There, both APIs seem to be giving software developers new approaches to augmenting reality. We're still curious, though: how, exactly, are the top titles exploiting the latest in heterogeneous computing, and what's to come moving forward? Answering those questions requires developer feedback, and that's exactly what we sought out.

Before we go there, let's take a quick second to talk about performance. As we just saw last week in Battle At $140: Can An APU Beat An Intel CPU And Add-In Graphics?, there are well-defined limits to what you can expect out of today's APUs. We ran Metro. We ran Battlefield. We ran DiRT 3. In each case, these forward-looking games were moderately playable at 1024x768 using their lowest detail settings. Leaning harder on graphics resources for processing OpenCL or DirectCompute isn't going to change that story. More likely is that you'll get an opportunity to play a favorite game on an APU-equipped laptop that wouldn't have run smoothly previously.

But remember that we're a couple of months away from a new wave of CPUs from Intel and Trinity-based APUs from AMD. The performance bar is about to rise, and proper support for both compute standards will almost certainly affect the way your favorite title looks, provided both companies can demonstrate higher frame rates from their next-gen parts.


de5_Roy would pcie 3.0 and 2x pcie 3.0 cards in cfx/sli improve direct compute performance for gaming? -

hunshiki hotsacoman: "Ha. Are those HL2 screenshots on page 3 lol?"

THAT. F.... FENCE. :D

Every, single, time. With every, single Source game. HL2, CSS, MODS, CSGO. It's everywhere. -

hunshiki: "THAT. F.... FENCE. Every, single, time. With every, single Source game. HL2, CSS, MODS, CSGO. It's everywhere."

Ha. Seriously! The Source engine is what I like to call a polished turd. Somehow even though it's ugly as f%$#, they still make it look acceptable...except for the fence XD -

theuniquegamer Developers need to improve the compatibility of the API for the GPUs. Consoles use very low-power, outdated GPUs yet can play the latest games at good FPS. Our PCs have top-notch hardware, but the games play at almost the same quality as on the consoles. The GPUs in our PCs have a lot of horsepower, but we can't utilize even half of it (I don't know what our PC GPUs are capable of). -

marraco I hate depth of field. Really hate it. I hate Metro 2033 with its DirectCompute-based depth of field filter.

It’s unnecessary for games to emulate camera flaws, and depth of field is a limitation of cameras. The human eye is able to focus everywhere, and free to do that. Depth of field does not allow to focus where the user wants to focus, so is just an annoyance, and worse, it costs FPS.

This chart is great. Thanks for showing it.

It shows something left out of many video card reviews: the 7970 frequently falls under 50, 40, and even 20 FPS. That ruins the user experience. While it is hard to tell the difference between 70 and 80 FPS, it is easy to spot those moments in which the card falls under 20 FPS. It’s a show stopper, and an utter annoyance, to spend a lot of money on the most expensive cards and then see those 20 FPS moments.

That’s why I prefer TechPowerup.com reviews. They show frame by frame benchmarks, and not just a meaningless FPS. TechPowerup.com is a floor over TomsHardware because of this.

Yet that way of showing GPU performance is hard for humans to understand, so the data needs to be sorted to make it easily understandable, like this figure shows:

Both charts show the same data, but the lower has the data sorted.

Here we see that Card B has higher lags, and higher FPS, and Card A is more consistent even though it has lower FPS.

It shows on how many frames Card B is worse than Card A, and is more intuitive and readable than the bar charts, which lose a lot of information.

Unfortunately, no web site offers this kind of analysis for GPUs, so there is a way to get an advantage over competition.
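The sorted-frame comparison described in this comment can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the frame times below are made up for the example (not measurements from any card or review), and the helper names `average_fps`, `sorted_worst_first`, and `frames_slower_than` are hypothetical.

```python
# Hypothetical per-frame render times in milliseconds. These numbers are
# invented for illustration and are not benchmark results.
card_a = [19.0, 20.0, 21.0, 20.0, 19.5, 20.5, 20.0, 20.0]
card_b = [7.0, 7.0, 55.0, 7.0, 7.0, 55.0, 7.0, 7.0]


def average_fps(frame_times_ms):
    """Average FPS over the run: frames rendered divided by elapsed seconds."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def sorted_worst_first(frame_times_ms):
    """Return the frame times sorted from slowest to fastest frame."""
    return sorted(frame_times_ms, reverse=True)


def frames_slower_than(frame_times_ms, fps_floor):
    """Count frames that took longer than one frame at `fps_floor` FPS."""
    limit_ms = 1000.0 / fps_floor
    return sum(1 for t in frame_times_ms if t > limit_ms)


if __name__ == "__main__":
    for name, times in (("Card A", card_a), ("Card B", card_b)):
        print(name, "average FPS:", round(average_fps(times), 1))
        print(name, "slowest frames first (ms):", sorted_worst_first(times))
        print(name, "frames below 20 FPS:", frames_slower_than(times, 20))
```

With this made-up data, Card B posts the higher average FPS but is the only card with frames that dip below the 20 FPS floor, which is the kind of difference a single average hides and a sorted view exposes.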

-

hunshiki I don't think you've owned a modern console, Theuniquegamer. Games that run fast there would run fast on PCs (if not blazing fast), since PCs are faster. Consoles are quite limited by hardware. Games that are demanding and slow... or that just have awesome graphics (BF3 for example), are slow on consoles too. They can rarely squeeze out more than 20-25 FPS. This happened with Crysis too. On PC? We benchmark FullHD graphics, and go for 91 fps. NINETY-ONE. Not 20. Not 25. Not even 30. And FullHD. Not 1280x720 like XBOX. (Also, on PC you have tons of other visual improvements that you can turn on/off. Unlike consoles.)

So .. in short: Consoles are cheap and easy to use. You pop in the CD, you play your game. You won't be a professional FPS gamer (hence the stick), or it won't amaze you, hence the graphics. But it's easy and simple. -

kettu marraco: "I hate depth of field. Really hate it. I hate Metro 2033 with its DirectCompute-based depth of field filter. It’s unnecessary for games to emulate camera flaws, and depth of field is a limitation of cameras. The human eye is able to focus everywhere, and free to do that. Depth of field does not allow to focus where the user wants to focus, so is just an annoyance, and worse, it costs FPS."

'Hate' is a bit of a strong word, but you do have a point there. It's much more natural to focus my eyes on certain game objects rather than my hand (i.e. turn the camera with my mouse). And you're right that it's unnecessary, because I already get the depth of field effect for free with my eyes when they're focused on a point on the screen. -

npyrhone Somehow I don't find it plausible that Tom's Hardware has *literally* been bugging AMD for years - to any end (no pun intended). Figuratively, perhaps?