What Does DirectCompute Really Mean For Gamers?

We've been bugging AMD for years now, literally, to show us what GPU-accelerated software can do. Finally, the company is ready to put us in touch with ISVs in nine different segments to demonstrate how its hardware can benefit optimized applications.

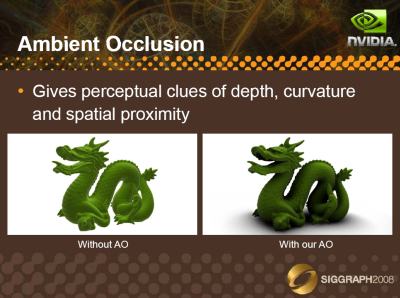

DirectCompute Helps Enable Ambient Occlusion

The GPU’s ability to execute massively parallel workloads has been amply discussed on Tom’s Hardware. CPUs and GPUs are simply suited to different types of applications as a result of their respective architectures. When you apply an effect to an image, for instance, the same operation must be repeated across many thousands of pixels, all of which can be processed simultaneously. If you have a quad-core host processor and a graphics engine with 2000 ALUs, care to guess which one has more potential to make efficient use of available compute resources?

In theory, this approach to processing can yield a significant bandwidth increase, since you're going to GDDR5 at up to 4 GT/s rather than system memory at 1600 MT/s or so, along with a massive boost to compute performance and performance per watt when the right algorithms are used. Also consider that, while the CPU remains superior to the GPU in many tasks, the resources and latencies required to communicate between the two chips may incur more of a penalty than simply running some tasks entirely on the GPU.
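To put rough numbers on the bandwidth point, peak theoretical bandwidth is the transfer rate multiplied by the bus width in bytes. The transfer rates below come from the text; the bus widths (a 256-bit graphics memory interface and dual-channel, 2 x 64-bit system memory) are assumptions chosen purely for illustration, and the function name is hypothetical. Real-world throughput falls short of these peaks.

```python
def peak_bandwidth_gb_s(transfers_per_second, bus_width_bits):
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


# Transfer rates from the article; bus widths are ASSUMED for illustration.
gddr5 = peak_bandwidth_gb_s(4e9, 256)      # assumed 256-bit GDDR5 interface
ddr3 = peak_bandwidth_gb_s(1600e6, 128)    # assumed dual-channel (2 x 64-bit) DDR3

print(f"GDDR5: {gddr5:.1f} GB/s, system memory: {ddr3:.1f} GB/s")
```

Under these assumptions, the graphics memory offers several times the peak bandwidth of system memory; a wider or narrower bus on either side shifts the ratio accordingly.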

Making use of this additional GPU potential requires additional software work. There are several ways to exploit the hardware's capabilities; DirectCompute, part of DirectX, is Microsoft’s API that sits between the GPU and applications. In the past, we've covered how early efforts to leverage the general-purpose compute capabilities of the GPU benefited academic and vertical markets like research (think SETI@Home and Folding@Home), scientific modeling, and exploration. From there, GPGPU began to filter down into the consumer market, but only in a few niches, most notably video processing and transcoding. Now, we’re seeing DirectCompute and OpenCL fulfill additional roles. Game developers are starting to implement DirectCompute-based methods for improving rendering, AI, occlusion, lighting, and physics.

Using DirectCompute For Ambient Occlusion

One of the most popular uses of DirectCompute in gaming is ambient occlusion, a shading technique (originally developed by Industrial Light and Magic over a decade ago) that seeks to emulate how light interacts with surfaces and textures. Rays are cast outward from each point on a surface. Those that reach the background contribute to the brightness of that point, while a ray that strikes another object offers no illumination, having been absorbed. Thus, surfaces hemmed in by other objects appear dark, and those with no nearby obstructions appear brighter.

If you imagine a corner in a shaded alleyway, the corner will look darker to your eye because less light is able to penetrate into it and bounce out of it. The walls themselves keep light out of that area, independent of any shade cast by adjacent buildings. Ambient occlusion seeks to account for this sort of “light trapping.” Without it, illumination tends to look flat and artificial.
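The ray-based description above can be sketched in a few lines of Python. This is a minimal, CPU-side illustration in a 2D cross-section, assuming the scene is stored as a list of column heights. The function name, ray count, and marching step are illustrative choices, not how any shipping game or DirectCompute shader implements the effect.

```python
import math


def ambient_visibility(heights, x, num_rays=64, max_dist=8.0, step=0.05):
    """Fraction of rays from the ground point in column x that escape unobstructed.

    heights: list of column heights (a 2D cross-section of the scene).
    Returns a value in [0, 1]; lower values mean a darker, more occluded point.
    """
    origin_x = x + 0.5              # middle of the column
    origin_y = heights[x] + 1e-3    # just above the surface
    escaped = 0
    for i in range(num_rays):
        # Spread rays evenly over the upper half-circle (0..180 degrees).
        angle = math.pi * (i + 0.5) / num_rays
        dx, dy = math.cos(angle), math.sin(angle)
        blocked = False
        t = step
        while t <= max_dist:
            px, py = origin_x + dx * t, origin_y + dy * t
            col = math.floor(px)
            if col < 0 or col >= len(heights):
                break               # left the scene: treated as reaching the background
            if py < heights[col]:
                blocked = True      # ray ran into another object
                break
            t += step
        if not blocked:
            escaped += 1
    return escaped / num_rays


# Open ground sees the whole sky; the floor of a narrow trench sees only a sliver.
open_ground = ambient_visibility([0.0] * 21, 10)
trench_floor = ambient_visibility([3.0] * 10 + [0.0] + [3.0] * 10, 10)
print(open_ground, trench_floor)
```

Casting dozens of rays per surface point is what makes the full technique expensive, which is part of why games turn to cheaper approximations.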

Gareth Thomas of Codemasters supplied us with the following captures from DiRT 3 to help illustrate. Note in particular the areas around the base of the tires.

“High-definition ambient occlusion is a screen space effect that takes the depth of the scene and computes a darkening factor based on whether that area is in a ‘valley’ or not from the point of view of the depth buffer,” explains Thomas. “For example, the crevices in between the tires in the screenshots should be darker because less bounced light can reach those areas. The HDAO provides us with a term that approximates how much bounced light each pixel can see. This is then incorporated into the lighting model when rendering the scene. It is not a perfect technique because the depth of the scene from the camera’s point of view is not really enough information to correctly calculate ambient occlusion. However, it is certainly better than not using an occlusion term.”
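The idea Thomas describes can be approximated as follows. This is a simplified sketch, not Codemasters' or AMD's actual HDAO shader: it assumes the depth buffer is a 2D list in which smaller values are closer to the camera, and it treats a pixel as sitting in a "valley" when both neighbors in a mirrored pair are closer to the camera than the pixel itself. The function name, `radius`, and `bias` are hypothetical.

```python
def occlusion_term(depth, radius=2, bias=0.05):
    """Per-pixel lighting factor in [0, 1] from a depth buffer (1 = unoccluded).

    depth: 2D list of depths; smaller values are closer to the camera (assumed).
    """
    h, w = len(depth), len(depth[0])
    # Half of the neighborhood; each offset is paired with its mirror image.
    offsets = [(dy, dx)
               for dy in range(0, radius + 1)
               for dx in range(-radius, radius + 1)
               if (dy, dx) > (0, 0)]
    result = [[1.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            center = depth[y][x]
            valleys = tested = 0
            for dy, dx in offsets:
                y1, x1 = y + dy, x + dx
                y2, x2 = y - dy, x - dx
                if not (0 <= y1 < h and 0 <= x1 < w and 0 <= y2 < h and 0 <= x2 < w):
                    continue        # skip pairs that fall off the screen
                tested += 1
                # Valley test: BOTH mirrored neighbors are in front of this pixel.
                if depth[y1][x1] < center - bias and depth[y2][x2] < center - bias:
                    valleys += 1
            if tested:
                result[y][x] = 1.0 - valleys / tested
    return result


# A flat wall at depth 1.0 with a one-pixel-wide groove (depth 2.0) in column 3.
scene = [[2.0 if x == 3 else 1.0 for x in range(7)] for _ in range(7)]
ao = occlusion_term(scene)
print(ao[3][3], ao[3][1])   # groove pixel vs. flat-wall pixel
```

Testing mirrored pairs rather than single neighbors means a plain slope, where one neighbor is closer and the other farther, is not mistaken for a crevice. A GPU implementation would typically evaluate one pixel per thread rather than looping, and the resulting factor would be multiplied into the lighting model as the quote describes.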

de5_Roy would pcie 3.0 and 2x pcie 3.0 cards in cfx/sli improve direct compute performance for gaming?

hunshiki, quoting hotsacoman: "Ha. Are those HL2 screenshots on page 3 lol?"

THAT. F.... FENCE. :D

Every, single, time. With every, single Source game. HL2, CSS, MODS, CSGO. It's everywhere.

Quoting hunshiki: "THAT. F.... FENCE. Every, single, time. With every, single Source game. HL2, CSS, MODS, CSGO. It's everywhere."

Ha. Seriously! The Source engine is what I like to call a polished turd. Somehow even though it's ugly as f%$#, they still make it look acceptable...except for the fence XD

theuniquegamer Developers need to improve the compatibility of the API for GPUs. Consoles use very low-power, outdated GPUs, yet they can play the latest games at good FPS. Our PCs have top-notch hardware, but games play at almost the same quality as on the consoles. The GPUs in our PCs have a lot of horsepower, but we can't utilize even half of it (I don't know what our PC GPUs are capable of).

marraco I hate depth of field. Really hate it. I hate Metro 2033 with its DirectCompute-based depth of field filter.

It’s unnecessary for games to emulate camera flaws, and depth of field is a limitation of cameras. The human eye is able to focus everywhere, and free to do that. Depth of field does not allow to focus where the user wants to focus, so is just an annoyance, and worse, it costs FPS.

This chart is great. Thanks for showing it.

It shows something left out of many video card reviews: the 7970 frequently falls under 50, 40, and even 20 FPS. That ruins the user experience. While it is hard to tell the difference between 70 and 80 FPS, it is easy to spot those moments in which the card falls under 20 FPS. It’s a show stopper, and an utter annoyance to spend a lot of money on the most expensive cards and then see those 20 FPS moments.

That’s why I prefer TechPowerup.com reviews. They show frame by frame benchmarks, and not just a meaningless FPS. TechPowerup.com is a floor over TomsHardware because of this.

Yet that way of showing GPU performance is hard for humans to understand, so the data needs to be sorted to make it easily understandable, like this figure shows:

Both charts show the same data, but the lower has the data sorted.

Here we see that Card B has both worse lags and a higher FPS, while Card A is more consistent even though it has a lower FPS.

It shows on how many frames Card B is worse than Card A, and it is more intuitive and readable than the bar charts, which lose a lot of information.

Unfortunately, no web site offers this kind of analysis for GPUs, so there is a way to get an advantage over competition.

hunshiki I don't think you owned a modern console Theuniquegamer. Games that run fast there, would run fast on PCs (if not blazing fast), hence PCs are faster. Consoles are quite limited by hardware. Games that are demanding and slow... or they just got awesome graphics (BF3 for example), are slow on consoles too. They can rarely squeeze out 20-25 FPS usually. This happened with Crysis too. On PC? We benchmark FullHD graphics, and go for 91 fps. NINETY-ONE. Not 20. Not 25. Not even 30. And FullHD. Not 1280x720 like XBOX. (Also, on PC you have tons of other visual improvements, that you can turn on/off. Unlike consoles.)

So .. in short: Consoles are cheap and easy to use. You pop in the CD, you play your game. You won't be a professional FPS gamer (hence the stick), or it won't amaze you, hence the graphics. But it's easy and simple.

kettu, quoting marraco: "I hate depth of field. Really hate it. I hate Metro 2033 with its DirectCompute-based depth of field filter. It’s unnecessary for games to emulate camera flaws, and depth of field is a limitation of cameras. The human eye is able to focus everywhere, and free to do that. Depth of field does not allow to focus where the user wants to focus, so is just an annoyance, and worse, it costs FPS."

'Hate' is a bit of a strong word but you do have a point there. It's much more natural to focus my eyes on certain game objects rather than my hand (i.e. turn the camera with my mouse). And you're right that it's unnecessary because I get the depth of field effect for free with my eyes already when they're focused on a point on the screen.

npyrhone Somehow I don't find it plausible that Tom's Hardware has *literally* been bugging AMD for years - to any end (no pun intended). Figuratively, perhaps?