OpenCL And CUDA Are Go: GeForce GTX Titan, Tested In Pro Apps

We initially had trouble getting the GeForce GTX Titan to work with OpenCL and CUDA. Finally, though, there are drivers available that fix all of that. Now we can figure out if the Titan makes a good workstation-oriented alternative to Nvidia's Quadros.

OpenGL: TcVis And NX

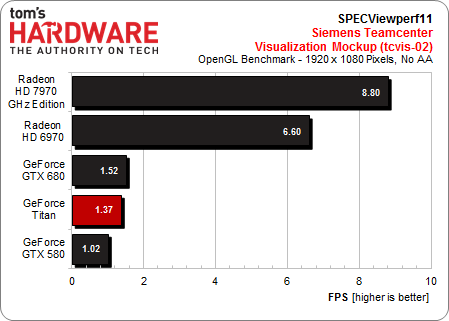

Siemens Teamcenter Visualization Mockup (tcvis-02)

As we saw in the Pro/ENGINEER benchmark, these numbers show why it's better to use professional-class hardware and drivers for workstation-oriented software. AMD's Radeon HD 7970 GHz Edition is the only card that even comes close to being usable, and when we say it comes close, we don’t mean it actually gets there. The GeForce GTX Titan’s performance is nowhere near acceptable for this type of work.

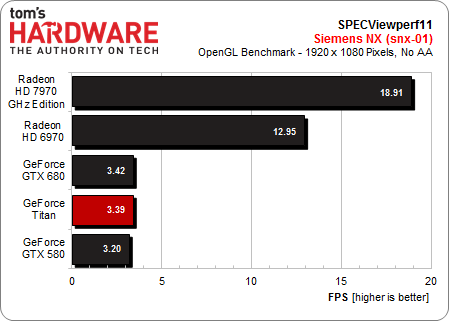

Siemens NX (snx-01)

The same picture emerges once again. AMD's Radeon HD 7970 GHz Edition manages a frame rate that's three times as fast as the Titan.

We know from the numbers we're running for our workstation story that Nvidia's Quadro cards are highly competitive in professional applications. The same cannot be said about the company's desktop-oriented boards, though. Apart from EnSight and Maya, even a $1,000 GeForce GTX Titan just isn't usable.

Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

bit_user Thanks for all the juicy new benchmarks!

BTW, I'm hoping the OpenCL benchmarks all make it to the GPU Charts. I'd like to know how the HD 7870 stacks up, at least. Being a new owner of one, I'm pleased at the showing made by the other Radeons. I had expected Titan to do better on OpenCL, based on all the hype.

bit_user, replying to k1114 ("Why are there not workstation cards in the graphs?"): Because it would be pointless. They use the same GPUs, but clocked lower and with ECC memory.

The whole point of Titan was to make a consumer card based on the Tesla GPU. I don't think AMD has a separate GPU for their workstation or "SKY" cards.

crakocaine There's something weird with your ratGPU GPU rendering OpenCL test results. You say lower is better, in seconds, yet the numbers are arranged to make it look like more seconds is better.

mayankleoboy1 Too much data here for a proper conclusion. Here is what I conclude:

In Pro applications :

1. 7970 is generally quite bad.

2. Titan has mixed performance.

3. Drivers make or break a card.

In more consumer friendly 'general' apps :

1. 7970 dominates. Completely.

2. 680 is piss poor (as expected)

3. 580 may or may not compete.

4. Titan is not worth having.

AMD needs to tie up moar with Pro app developers. That's the market which is ever expanding, and will bring huge revenue.

Would have been interesting to see how the FirePro version of 7970 performs compared to the HD7970.

-Fran-, replying to bit_user ("Because it would be pointless. They use the same GPUs, but clocked lower and with ECC memory. The whole point of Titan was to make a consumer card based on the Tesla GPU. I don't think AMD has a separate GPU for their workstation or "SKY" cards."):

It isn't pointless, since it helps put into perspective where this non-pro video card stands in the professional world. It's like making a lot of gaming benchmarks out of professional cards with no non-pro cards. You need perspective.

Other than that, it was an interesting read.

Cheers!

slomo4sho I would honestly have liked to see a GTX 690 and 7990 (or 7970 x-fire) in the mix to see how Titan performs at relatively equal price points.

260511, replying to mayankleoboy1 ("Too much data here for a proper conclusion. Here is what I conclude: In Pro applications: 1. 7970 is generally quite bad. 2. Titan has mixed performance. 3. Drivers make or break a card. In more consumer friendly 'general' apps: 1. 7970 dominates. Completely. 2. 680 is piss poor (as expected). 3. 580 may or may not compete. 4. Titan is not worth having. AMD needs to tie up moar with Pro app developers. That's the market which is ever expanding, and will bring huge revenue. Would have been interesting to see how the FirePro version of 7970 performs compared to the HD7970."):

Show me one benchmark where AMD actually does well? The amount of fanboyism in your comment is unsettling. Go back to your cave, troll.