Tom's Hardware Graphics Charts: Performance In 2014

Two years and two graphics card generations have passed since the last major update to our famous graphics card performance charts. It's time to get them back up to speed. We introduce modern benchmarks, new measurement equipment, and fresh methodology.

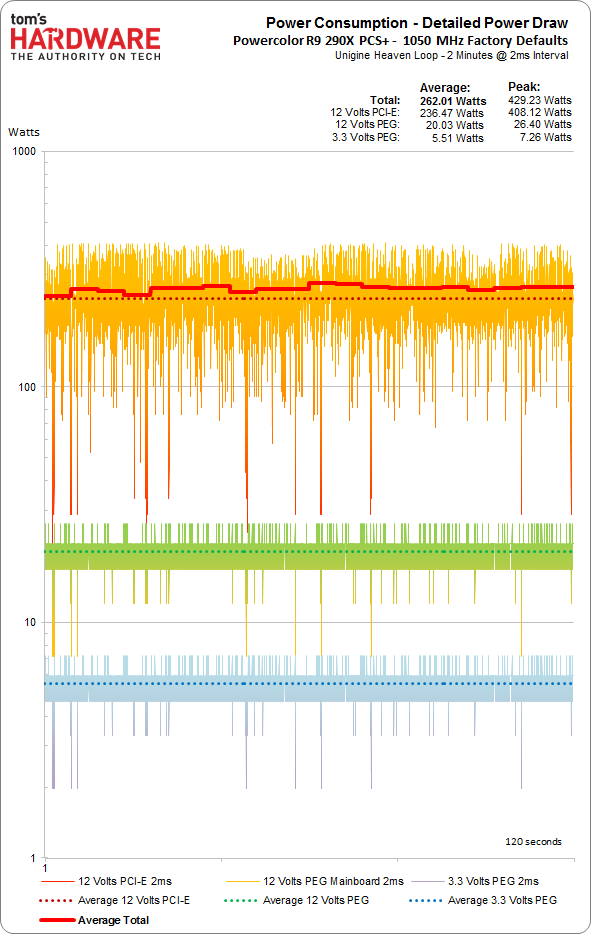

How We Measure Power Consumption

Measurement Equipment and Methodology

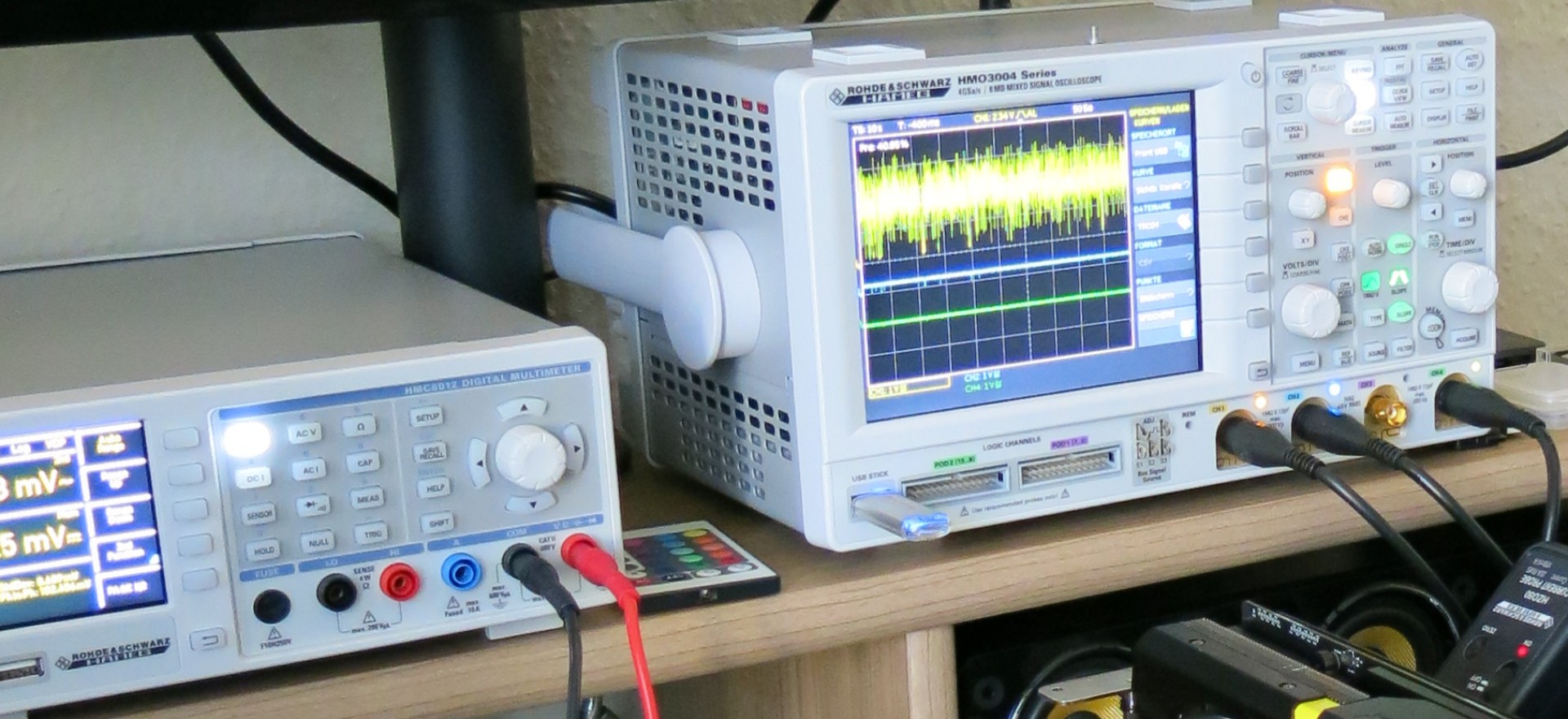

Our power consumption test setup was planned in cooperation with HAMEG (Rohde & Schwarz) to yield accurate measurements at small sampling intervals, and we've improved the gear continuously over the past few months.

AMD’s PowerTune and Nvidia’s GPU Boost technologies make the load on a graphics card change very quickly, so accurate results require professional measurement and test equipment. With this in mind, we're complementing our regular numbers with a series of measurements over an extremely short 100 μs window, sampled at 1 μs intervals.

We get this resolution from a 500 MHz digital storage oscilloscope (HAMEG HMO 3054), which also lets us record currents and voltages conveniently by remote control.

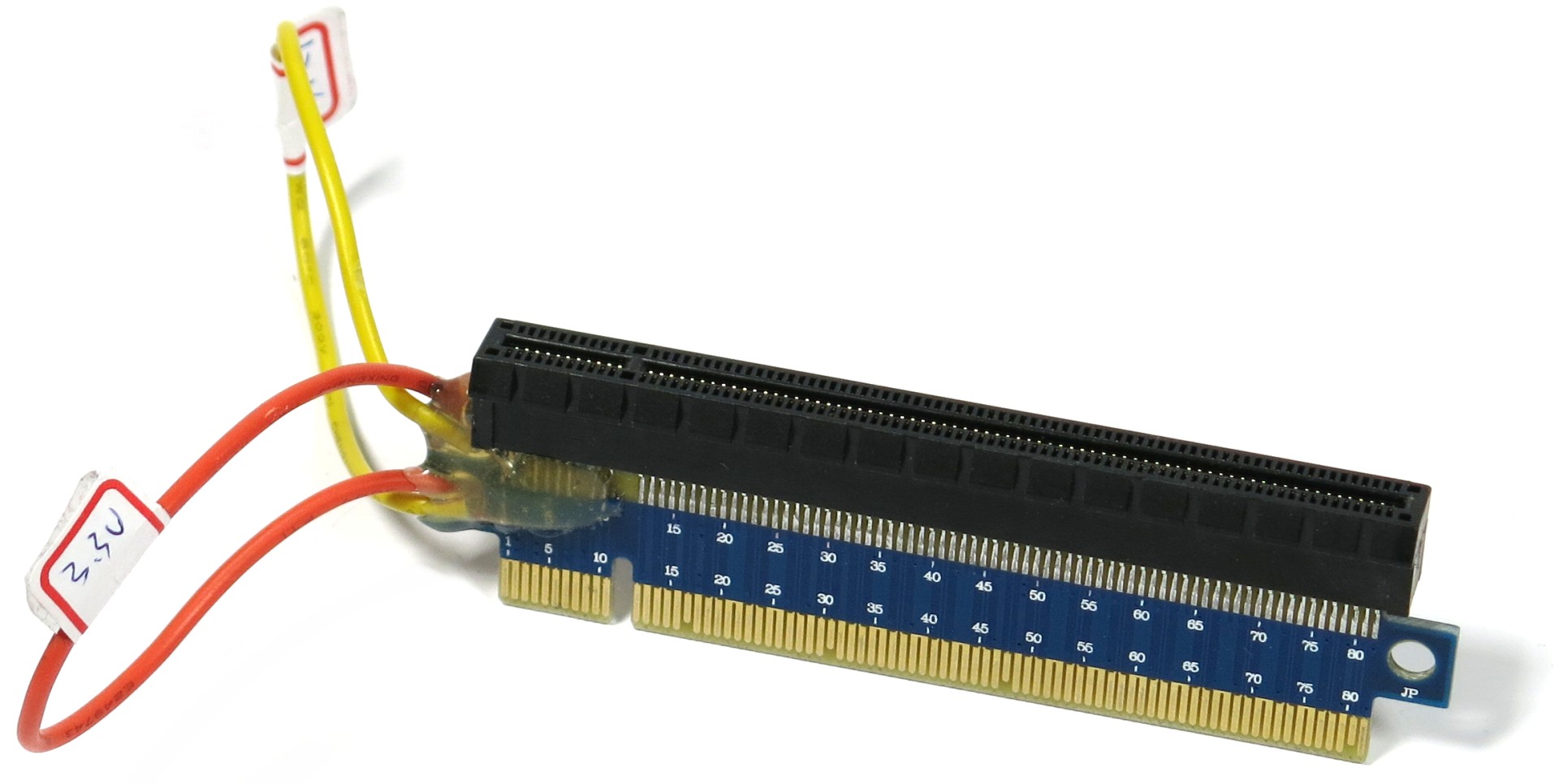

The measurements are captured by three high-resolution current probes (HAMEG HZO50), both through a riser card for the 3.3 V and 12 V slot rails and directly on specially modified auxiliary power cables. The riser card was custom-built to fit our needs, supports PCIe 3.0, and offers short signal paths.

Voltages are measured at a power supply with a single +12 V rail. We use a 2 ms resolution for the standard readings, which is granular enough to capture the changes caused by PowerTune and GPU Boost. Because this produces so much raw data, though, we limit each chart to two minutes.
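The sample counts behind the two capture modes follow from dividing the capture window by the sampling interval. A minimal sketch, assuming a fixed interval and one value per channel per sample (the function name is ours, for illustration only):

```python
# Sketch: samples captured per channel for a given window and sampling interval.
# Assumes a fixed interval and one value per channel per sample.

def samples_per_channel(window_s: float, interval_s: float) -> int:
    """Number of samples taken over window_s at one sample every interval_s."""
    # round() absorbs floating-point noise such as 100.00000000000001
    return round(window_s / interval_s)

# High-resolution mode: 100 µs window at 1 µs intervals
print(samples_per_channel(100e-6, 1e-6))  # 100
# Standard mode: two-minute chart at 2 ms intervals
print(samples_per_channel(120.0, 2e-3))   # 60000
# A 100 ms excerpt at 2 ms intervals
print(samples_per_channel(100e-3, 2e-3))  # 50
```

The two-minute limit per chart keeps each standard trace at tens of thousands of points per channel.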

| Item | Details |
|---|---|
| Measurement Procedure | Contact-free DC measurement at PCIe slot (using a riser card); contact-free DC measurement at external auxiliary power supply cable; voltage measurement at power supply |
| Measurement Equipment | 1 x HAMEG HMO 3054, 500 MHz digital multi-channel oscilloscope; 3 x HAMEG HZO50 current probes (1 mA to 30 A, 100 kHz, DC); 4 x HAMEG HZ355 (10:1 probes, 500 MHz); 1 x HAMEG HMC 8012 digital multimeter with storage function |
| Power Supply | Corsair AX860i with modified outputs (taps) |

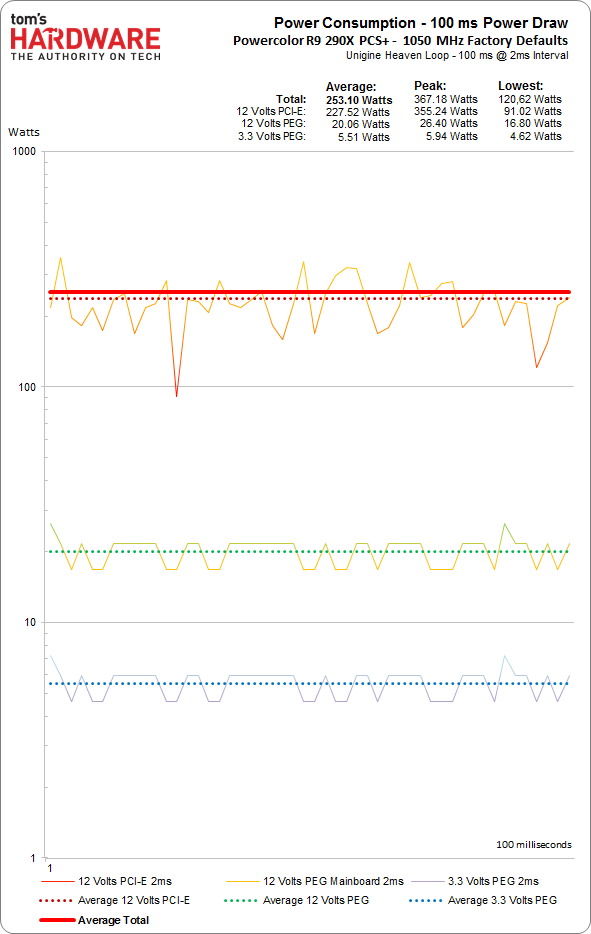

A Lot Can Happen in 100 Milliseconds...

...and we mean a lot! Let’s look at all three supply rails sampled at 2 ms intervals across 100 ms (giving us 50 data points). Just looking at those results makes us pity the power supply: swings between 91 and 355 W over the auxiliary power connectors are pretty harsh. The fluctuations aren't as extreme on the other rails.

On the bright side, neither AMD nor Nvidia graphics cards with auxiliary power connectors fully use the 75 W available through a PCI Express x16 slot. That hasn't always been the case. Additionally, there's far less variance over the PCI Express interface, which no doubt benefits stability.
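The rail analysis above comes down to multiplying each rail's voltage by its current, sample by sample, and adding the rails together. A minimal sketch, assuming time-aligned voltage and current samples per rail; the class, function, and rail names are hypothetical, and 75 W is the x16 slot budget mentioned above:

```python
# Sketch: turn per-rail voltage and current samples into board power.
# Assumption: each rail's voltage and current samples are time-aligned.
from dataclasses import dataclass

PCIE_X16_SLOT_LIMIT_W = 75.0  # power budget of a PCI Express x16 slot


@dataclass
class RailTrace:
    name: str
    volts: list[float]  # voltage samples
    amps: list[float]   # current samples taken at the same instants
    via_slot: bool      # True for rails fed through the PCIe slot

    def watts(self) -> list[float]:
        # Instantaneous power per sample: P = V * I
        return [v * i for v, i in zip(self.volts, self.amps)]


def summarize(rails: list[RailTrace]) -> dict:
    # Add the rails together sample by sample to get total board power
    total = [sum(s) for s in zip(*(r.watts() for r in rails))]
    # Same, but only for the rails delivered through the slot
    slot = [sum(s) for s in zip(*(r.watts() for r in rails if r.via_slot))]
    slot_peak = max(slot, default=0.0)
    return {
        "total_min_w": min(total),
        "total_max_w": max(total),
        "total_mean_w": sum(total) / len(total),
        "slot_peak_w": slot_peak,
        "slot_within_limit": slot_peak <= PCIE_X16_SLOT_LIMIT_W,
    }
```

With made-up inputs such as a 12 V slot rail at 2 A and a 12 V auxiliary rail at 20 A, `summarize` would report 264 W in total and a 24 W slot peak, comfortably inside the slot budget.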


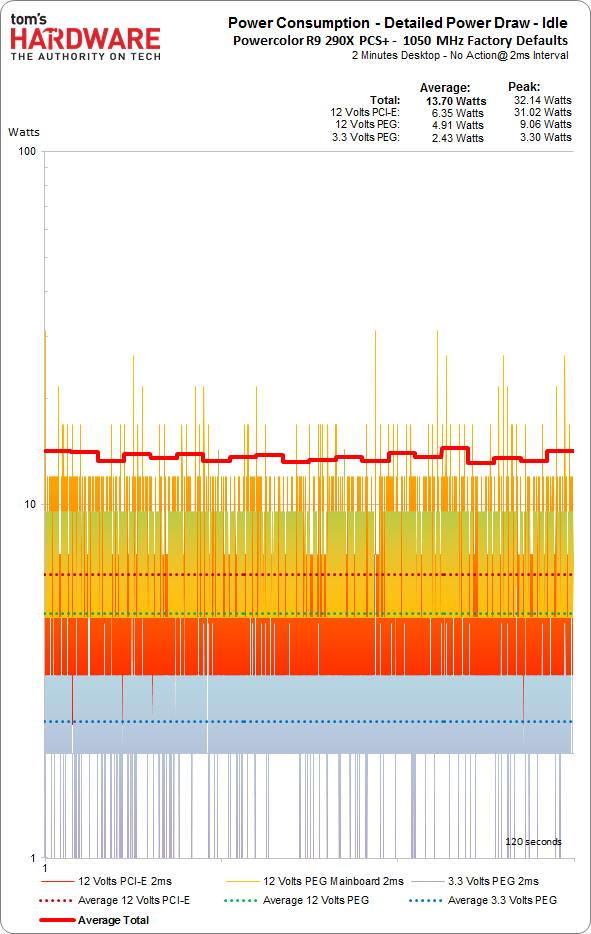

High-Resolution Measurements

We wrap up this part of our introduction with illustrations of power consumption at idle and under a gaming workload. Again, all of this will be explained in more detail in an upcoming article.

Here's what's interesting: AMD's Radeon R9 290X shows an idle power figure under 14 W. However, its many brief peaks of up to 32 W push that figure higher if you sample more slowly. With the on-board memory factored out, what remains comes in under 12 W.
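A minimal illustration of why sampling speed matters, using a synthetic trace rather than our measured data: a meter that records only one instantaneous value every so often can land on short peaks or miss them entirely, depending on timing. The 12 W baseline, 32 W spikes, and spike spacing below are invented for the example.

```python
# Sketch: point-sampling a spiky power trace too slowly can misreport its average.
# The trace is synthetic: a 12 W baseline with a 32 W spike every 20 samples.

def synthetic_trace(n: int, base_w: float, spike_w: float, period: int) -> list[float]:
    return [spike_w if k % period == 0 else base_w for k in range(n)]

def mean(xs: list[float]) -> float:
    return sum(xs) / len(xs)

def decimate(trace: list[float], step: int, offset: int = 0) -> list[float]:
    """Keep every step-th sample starting at offset, like a slower meter."""
    return trace[offset::step]

trace = synthetic_trace(n=1000, base_w=12.0, spike_w=32.0, period=20)
print(mean(trace))                          # 13.0 (every sample)
print(mean(decimate(trace, 20, offset=0)))  # 32.0 (always lands on a spike)
print(mean(decimate(trace, 20, offset=5)))  # 12.0 (never sees a spike)
```

Averaging every sample, as a high-resolution capture allows, recovers the true 13 W mean of this synthetic trace; the slow readings are off in either direction depending on where they fall.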

The differences aren't limited to idle, either. Power consumption under a gaming workload also turns out to be lower than older, slower equipment would have us believe. These large disparities between our gear and slower equipment only showed up with the last two generations of AMD's hardware, so it's a fairly recent phenomenon. But it does mean the company gets beaten up more than it should in most reviews.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


blackmagnum Thank you, Tom's team, for updating the charts. You're my go-to when I'm upgrading my rigs. I'll be waiting... Bring on yesterday's gems.

tomfreak The first thing Tom's needs to do is benchmark how PCIe 2.0 x8 vs. x16 performs on a modern top-end GPU. Since the 290X passes CrossFire bridge traffic over PCIe, maybe it's time to check again? As I recall, AMD does not recommend running 290X XDMA CrossFire on PCIe 2.0 x8. Please check this out.

cypeq First, it's great to see new charts.

I was never a fan of this style of benchmarking. It sure gives a clean graph of GPU capabilities, which we always needed, but I would love to see a new bottleneck analysis, or at least a parallel test done on a midrange PC.

Everyone should keep in mind that these charts represent the performance of <1% of PC builds out there.

tomfreak said: The first thing Tom's needs to do is benchmark how PCIe 2.0 x8 vs. x16 performs on a modern top-end GPU. Since the 290X passes CrossFire bridge traffic over PCIe, maybe it's time to check again? As I recall, AMD does not recommend running 290X XDMA CrossFire on PCIe 2.0 x8. Please check this out.

If I recall correctly, we are currently at the edge of PCIe 2.0 x8, which equals PCIe 1.0 x16 in bandwidth. The next generation, or the one after, will finally make PCIe 1.0 obsolete for single-GPU setups and PCIe 2.0 obsolete for dual-GPU configurations, as there will finally be noticeable bottlenecks.
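The bandwidth equivalence in this comment can be checked with a short calculation. A sketch, assuming the nominal per-lane transfer rates and line encodings of PCIe 1.0 (2.5 GT/s, 8b/10b), 2.0 (5 GT/s, 8b/10b), and 3.0 (8 GT/s, 128b/130b), and ignoring packet and protocol overhead; the function name is illustrative:

```python
# Sketch: theoretical one-direction PCIe link bandwidth.
# Ignores packet and protocol overhead, so real throughput is lower.

GENERATIONS = {
    # generation: (gigatransfers per second per lane, payload bits, encoded bits)
    "1.0": (2.5, 8, 10),     # 8b/10b encoding
    "2.0": (5.0, 8, 10),     # 8b/10b encoding
    "3.0": (8.0, 128, 130),  # 128b/130b encoding
}

def link_bandwidth_gbytes(gen: str, lanes: int) -> float:
    """Usable bandwidth in gigabytes per second, one direction."""
    gt_per_s, payload, encoded = GENERATIONS[gen]
    bits_per_s = gt_per_s * 1e9 * payload / encoded  # per lane, after encoding
    return bits_per_s * lanes / 8 / 1e9

print(link_bandwidth_gbytes("1.0", 16))           # 4.0
print(link_bandwidth_gbytes("2.0", 8))            # 4.0
print(f"{link_bandwidth_gbytes('3.0', 16):.2f}")  # 15.75
```

Doubling the transfer rate from 1.0 to 2.0 while halving the lane count leaves raw bandwidth unchanged, which is why PCIe 2.0 x8 and PCIe 1.0 x16 land on the same figure.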

mitcoes16 Any SteamOS or GNU/Linux benchmarks?

It would be nice to add an OpenGL cross-platform game, such as an ioquake3-based one or something more modern, and test it under Windows and under GNU/Linux.

Even better if it is the upcoming SteamOS, so we know the performance of the same game under Windows and under GNU/Linux.

It would also be nice to test under Windows with and without antivirus, perhaps Avast, which is free, or any other of your preference.

Last but not least, OpenGL and DirectX versions change, and being able to split card generations by OpenGL/DirectX version support would help, as would a current price/performance index based on your sponsored-link prices.

mitcoes16 No 720p tests?

720p (1280 × 720 pixels = 921,600 pixels) is a little under half of 1080p

1080p (1920 × 1080 pixels = 2,073,600 pixels)

And when a game is very demanding, or you prefer to play with better graphics settings, playing at 720p is a great option.

Of course, the latest high-end GPUs can play at 4K with maximum graphics settings, but when we read the benchmarks we also want to know whether our current card CAN play at 720p (1k), or what the best cards can do at 1k, so we can compare.

Also, even if it is not standard or accurate, for benchmarking purposes calling 720p "1k", 1080p "2k", and 2160p "4K" would be easier to understand at a glance than UHD, FHD, and HD. Those could be used too: UHD (4k), FHD (2k), HD (1k).
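The pixel counts quoted in this comment can be checked directly. A small sketch computing each resolution's pixel count and its ratio to 1080p (the dictionary and function names are illustrative):

```python
# Sketch: pixel counts for common 16:9 resolutions and their ratio to 1080p.
RESOLUTIONS = {"720p": (1280, 720), "1080p": (1920, 1080), "2160p": (3840, 2160)}

def pixels(name: str) -> int:
    width, height = RESOLUTIONS[name]
    return width * height

for name in RESOLUTIONS:
    ratio = pixels(name) / pixels("1080p")
    print(f"{name}: {pixels(name):,} pixels ({ratio:.2f}x 1080p)")
```

It prints 921,600 pixels (0.44x) for 720p, 2,073,600 (1.00x) for 1080p, and 8,294,400 (4.00x) for 2160p, so 720p pushes a bit under half the pixels of 1080p and 2160p exactly four times as many.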

InvalidError

mitcoes16 said: No 720p tests?

720p does not stress most reasonably decent GPUs much, and how many people would drop resolution to 720p these days with all the re-scaling artifacts that might add? In most cases, it would make more sense to stick with native resolution and tweak some of the more GPU/memory-intensive settings down a notch or two. At least I know I greatly prefer cleaner images over "details" that get blurred by the lower resolution and by re-scaling that distorts them further.

Considering how you can get 1080p displays for $100, I would call standardizing the GPU chart on 1080p fair enough: the people who can only afford a $100 display won't care much about enabling every bell and whistle and the people who want to max everything out likely won't be playing on $100 displays and $100 GPUs either.

2Be_or_Not2Be I'd really like to see charts on how much noise a video card's cooling fans make. That matters more to me: limiting something distracting that I hear every time I game beats getting a louder card with 10 fps more.

I also like seeing how current cards stack up performance-wise to previous generations. That really helps when you're deciding whether to upgrade or not.