The Myths Of Graphics Card Performance: Debunked, Part 2

PCIe: A Brief Technology Primer On PCI Express

Myth: A 16-lane PCIe slot always enables 16 lanes

As Confusing As It May Sound, A x16 PCIe Slot Is Different Than A 16-Lane Configuration

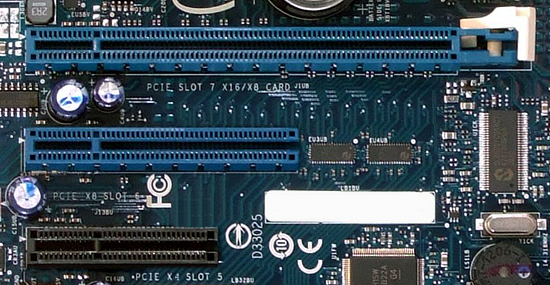

PCI Express as a standard can be confusing. First consider the physical slots. They’re commonly referred to as x1, x4, x8 or x16. This nomenclature makes reference to the slot’s physical size and the maximum number of PCIe lanes that a card inserted into that slot can access.

Smaller cards fit into longer slots (for instance, a x8 or x1 card into a x16 slot), but larger cards do not fit into shorter slots (a x16 card into a x1 slot, for example). With rare exceptions, graphics cards are designed to communicate over a 16-lane link and therefore require a x16 slot. In theory, the PCIe specification allows for up to 32 lanes per slot, though we’ve never seen anything longer than x16.

PCIe x32 slots/cards?

A little-known fact is that, in theory, the PCIe specification allows up to 32 lanes to be used with any given slot. We have never seen slots wider than x16, however. A 32-lane slot would be physically very large and likely close to impossible to implement on ATX form factor motherboards, where it would create a routing nightmare.

So, let's say that you have your 16-lane graphics card fitted nicely into a x16 slot. Does that mean it’s transferring data across a 16-lane link? Maybe, but maybe not. The number of lanes actively associated with each slot depends on the host architecture (Haswell or Haswell-E, for example), the presence of bridge chips (such as PLX’s PEX 8747) and on the number of cards installed in surrounding slots (hardware strapping on most motherboards dynamically reconfigures lanes based on utilization). Thus, a x16 slot might operate with 16, eight, four, or even one lane active. The only way to tell for sure is through a tool like GPU-Z, though even that can be unreliable.
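On Linux, the negotiated link width can also be read without a GUI tool. The sketch below assumes the kernel exposes the `current_link_width` and `max_link_width` attributes in a PCIe device's sysfs directory. The function name `read_link_status` is hypothetical and chosen for illustration, and `max_link_width` reflects what the device advertises, not necessarily what the slot is wired for.

```python
from pathlib import Path


def read_link_status(device_dir):
    """Compare the negotiated PCIe link width with the advertised maximum.

    device_dir is a sysfs device directory such as
    /sys/bus/pci/devices/<address> (the address is system-specific).
    """
    device_dir = Path(device_dir)
    # Each attribute file holds a single integer, e.g. "8" or "16".
    current = int((device_dir / "current_link_width").read_text().strip())
    maximum = int((device_dir / "max_link_width").read_text().strip())
    return {
        "current": current,
        "max": maximum,
        "full_width": current == maximum,
    }
```

Calling `read_link_status` on the directory of a graphics card sitting in a x16 slot that shares lanes with a second card would, under these assumptions, return a `current` value of 8 against a `max` of 16.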

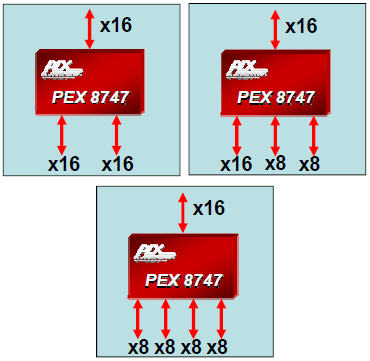

Without going into excessive detail, a major difference between Intel's "premium" LGA 2011 interface (supporting Ivy Bridge-E-based CPUs like the Core i7-4820K, -4930K and -4960X) and Intel's "mainstream" LGA 1150 interface (supporting Haswell-based processors like the Core i7-4770K) is that the higher-end platform exposes up to 40 lanes of third-gen PCIe natively. That’s enough for two cards in x16/x16, three cards in x16/x16/x8 or four cards in x16/x8/x8/x8 configurations. Meanwhile, LGA 1150 is limited to 16 lanes, giving you enough connectivity for one card at x16, two cards in x8/x8, or three cards at x8/x4/x4 in CrossFire (Nvidia does not permit SLI using four-lane links). There are exceptions, of course. Intel’s Core i7-5820K drops into 2011-pin sockets, but its PCIe controller is deliberately limited to 28 lanes.
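The lane arithmetic behind these configurations is simple to check. The following is a minimal sketch that only tests whether a set of link widths fits within a CPU's native lane count. The names `CPU_LANES` and `fits_lane_budget` are hypothetical, and the check is a necessary condition only: a real motherboard supports just the specific splits its designers wired up.

```python
# Native CPU PCIe lane counts quoted in the text above.
CPU_LANES = {
    "LGA 2011 (Ivy Bridge-E)": 40,
    "LGA 1150 (Haswell)": 16,
    "Core i7-5820K": 28,
}


def fits_lane_budget(link_widths, cpu_lanes):
    """Return True if the summed link widths do not exceed cpu_lanes.

    link_widths is a list such as [16, 8, 8, 8] for x16/x8/x8/x8.
    This ignores board-specific wiring and any PCIe switch chips.
    """
    return sum(link_widths) <= cpu_lanes


if __name__ == "__main__":
    premium = CPU_LANES["LGA 2011 (Ivy Bridge-E)"]
    mainstream = CPU_LANES["LGA 1150 (Haswell)"]
    print(fits_lane_budget([16, 8, 8, 8], premium))   # 40 lanes needed, 40 available
    print(fits_lane_budget([16, 16], mainstream))     # 32 lanes needed, 16 available
    print(fits_lane_budget([8, 4, 4], mainstream))    # 16 lanes needed, 16 available
```

The same check shows why a 28-lane processor cannot feed two cards at x16/x16 natively, since that would need 32 lanes.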


To work around the limited PCIe connectivity available on LGA 1150 platforms, hardware manufacturers use PCIe "bridge chips" that operate like switches, enabling access to a greater number of PCIe lanes. The most famous of those chips, PLX’s PEX 8747, is a PCIe Gen 3 switch, and it isn’t cheap. Mouser has them at roughly $100 each for low volumes. OEMs likely negotiate lower prices on greater quantities, but that remains an expensive component. This chip alone contributes to the jump from ~$200 to ~$300+ between mainstream and enthusiast LGA 1150 motherboards.

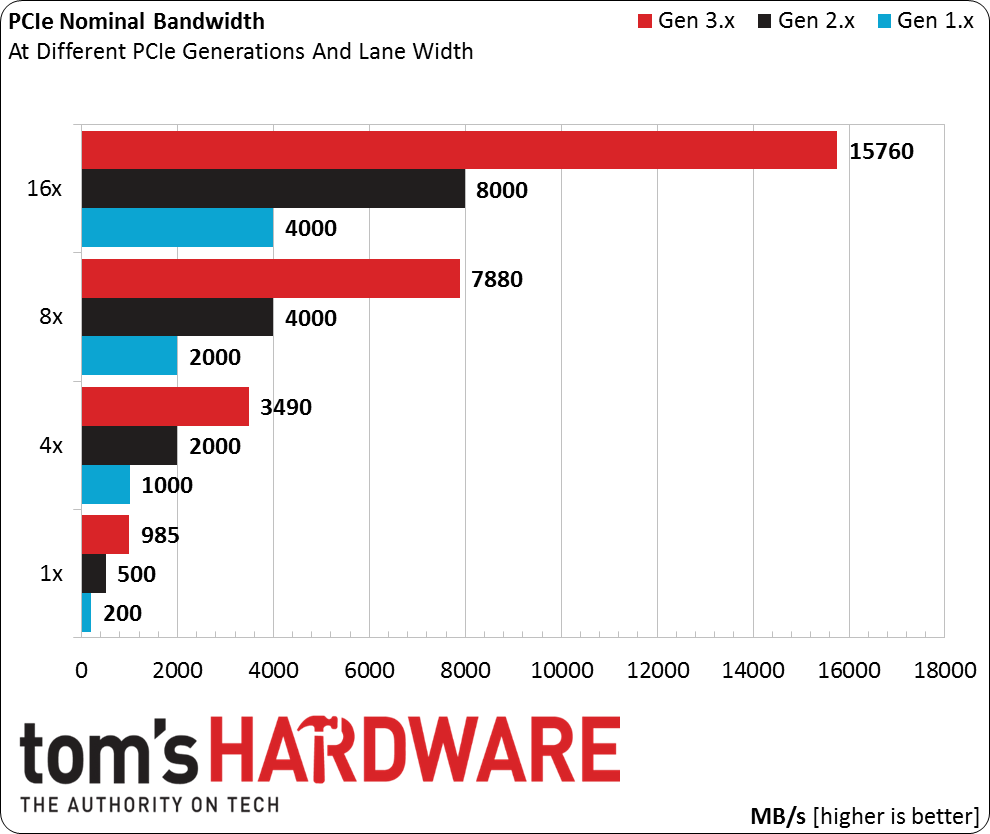

What does all of that translate to from a bandwidth perspective, though?

As you can see, each PCIe generation essentially doubles the bandwidth of the version before. Four lanes of PCIe 3.0 are roughly equivalent to eight lanes of PCIe 2.0, which in turn are roughly equivalent to 16 lanes of first-gen PCIe.
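To make the doubling concrete, the sketch below estimates theoretical per-direction bandwidth from each generation's signaling rate and line encoding (2.5 GT/s and 5 GT/s with 8b/10b for the first two generations, 8 GT/s with 128b/130b for the third). The names `GENERATIONS` and `link_bandwidth_gbs` are hypothetical, and the result ignores packet and protocol overhead, so real-world throughput is lower.

```python
# Per-lane signaling rate in GT/s and encoding efficiency for each generation.
GENERATIONS = {
    "1.0": (2.5, 8 / 10),     # 8b/10b encoding
    "2.0": (5.0, 8 / 10),     # 8b/10b encoding
    "3.0": (8.0, 128 / 130),  # 128b/130b encoding
}


def link_bandwidth_gbs(generation, lanes):
    """Theoretical one-direction bandwidth in GB/s for a PCIe link."""
    rate_gt, efficiency = GENERATIONS[generation]
    # Usable bits per second per lane, times lanes, divided by 8 bits per byte.
    return rate_gt * efficiency * lanes / 8


if __name__ == "__main__":
    for gen, lanes in [("3.0", 16), ("3.0", 4), ("2.0", 8), ("1.0", 16)]:
        print(f"PCIe {gen} x{lanes}: {link_bandwidth_gbs(gen, lanes):.2f} GB/s")
```

Under these assumptions, four lanes of PCIe 3.0 come out at about 3.94 GB/s, against 4.00 GB/s for eight lanes of PCIe 2.0 or 16 lanes of first-gen PCIe. The small gap comes from the change in encoding, which is why the equivalence is only approximate.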

The fourth generation, scheduled for 2015, will be backwards compatible with today’s technology. You can expect PCIe 3.0 (and 2.0) graphics cards to remain current for quite a while, at least from an interface standpoint. If you're already curious about PCIe 4.0, read the PCIe 4.0 FAQ directly from PCI-SIG.

We still haven’t answered the question of how much bandwidth you need, though.


iam2thecrowe i've always had a beef with gpu ram utilization and how it's measured and what driver tricks go on in the background. For example my old gtx660's never went above 1.5gb usage, searching forums suggests a driver trick as the last 512mb is half the speed due to its weird memory layout. Upon getting my 7970 with identical settings memory usage loading from the same save game shot up to near 2gb. I found the 7970 to be smoother in the games with high vram usage compared to the dual 660's despite frame rates being a little lower measured by fraps. I would love one day to see an article "the be all and end all of gpu memory" covering everything.

Another thing, i'd like to see a similar pcie bandwidth test across a variety of games and some including physx. I don't think unigine would throw much across the bus unless the card is running out of vram where it has to swap to system memory, where i think the higher bus speeds/memory speed would be an advantage.

blackmagnum Suggestion for Myths Part 3: Nvidia offers superior graphics drivers, while AMD (ATI) gives better image quality.

chimera201 About HDTV refresh rates:

http://www.rtings.com/info/fake-refresh-rates-samsung-clear-motion-rate-vs-sony-motionflow-vs-lg-trumotion

photonboy Implying that an i7-4770K is little better than an i7-950 is just dead wrong for quite a number of games.

There are plenty of real-world gaming benchmarks that prove this so I'm surprised you made such a glaring mistake. Using a synthetic benchmark is not a good idea either.

Frankly, I found the article was very technically heavy where not necessary, like the PCIe section, and glossed over other things very quickly. I know a lot about computers so maybe I'm not the guy to ask but it felt to me like a non-PC guy wouldn't get the simplified and straightforward information he wanted.

eldragon0 If you're going to label your article "graphics performance myths", please don't limit your article to just gaming. It's a well made and researched article, but as Photonboy touched on, the 4770k vs 950 are about as similar as night and day. Try using that comparison for graphical development or design, and you'll get laughed off the site. I'd be willing to say its rendering capabilities are actual multiples faster at those clock speeds.

SteelCity1981 photonboy this article isn't for non pc people, because non pc people wouldn't care about detailed stuff like this.

renz496 14561510 said: Suggestion for Myths Part 3: Nvidia offers superior graphics drivers

Even if Tom's Hardware really did its own test, it wouldn't be very useful, because its test setup can't represent the millions of different PC configurations out there. You can see one driver set working just fine on one setup and totally broken on another, even with the same GPU in use. Even if TH presented its findings, you would most likely see people challenge the result if it did not reflect their own experience. In the end the thread just turns into a flame-war mess.

14561510 said: Suggestion for Myths Part 3: while AMD (ATI) gives better image quality.

This has been discussed a lot on other tech forum sites, but the general consensus is that there is actually not much difference between the two. I have only heard that on AMD cards the in-game colors can be a bit more saturated than on Nvidia, which some people take as 'better image quality'.

ubercake Just something of note... You don't necessarily need Ivy Bridge-E to get PCIe 3.0 bandwidth. Sandy Bridge-E people with certain motherboards can run PCIe 3.0 with Nvidia cards (just like you can with AMD cards). I've been running the Nvidia X79 patch and getting PCIe gen 3 on my P9X79 Pro with a 3930K and GTX 980.

ubercake Another article on Tom's Hardware by which the 'ASUS ROG Swift PG...' link listed for an unbelievable price takes you to the PB278Q page.

A little misleading.