Computer History: From The Antikythera Mechanism To The Modern Era

In this article, we shed light on the most important moments in computer history and acknowledge the people who contributed to this evolution.

Commodore Amiga

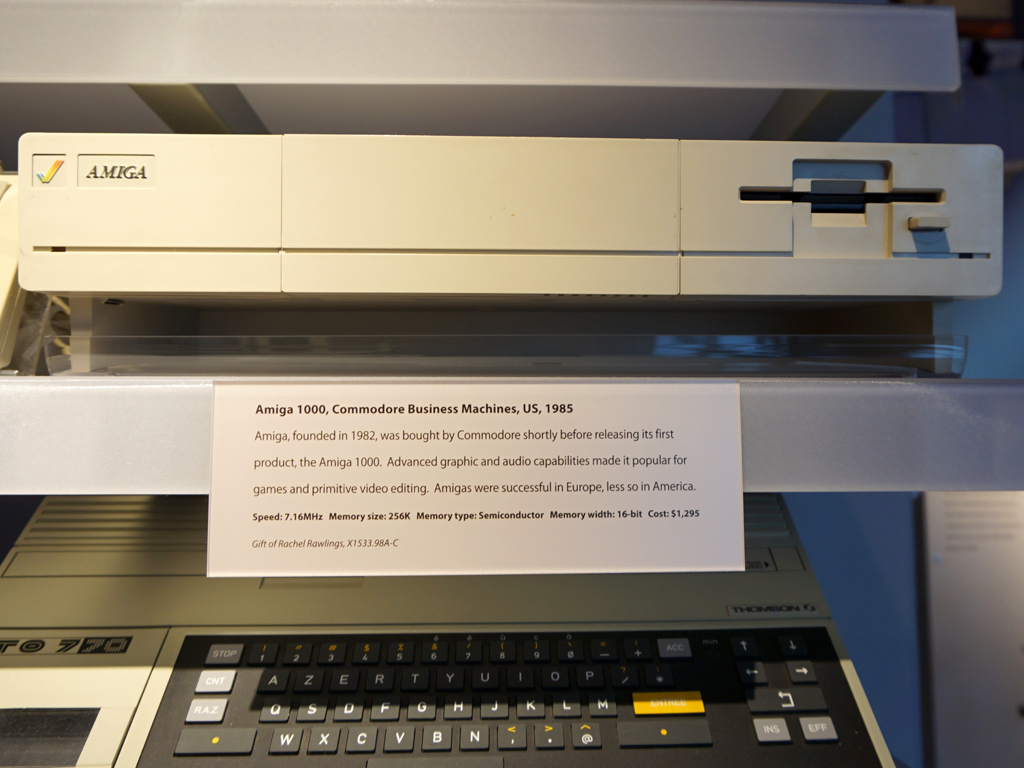

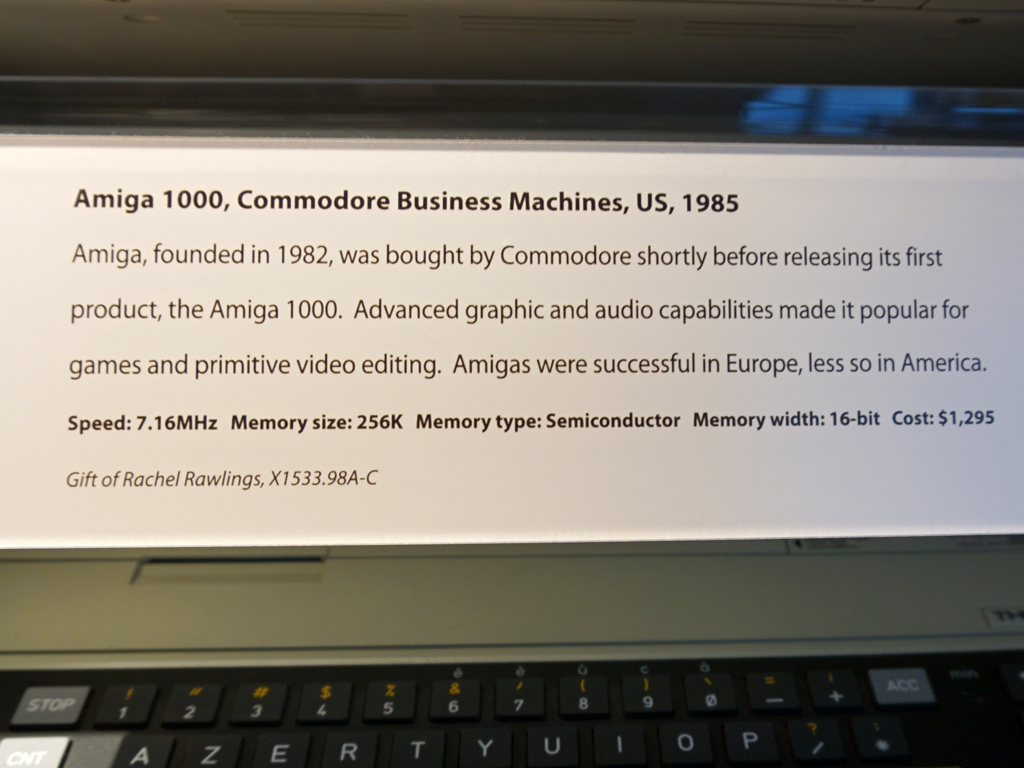

Many believe the Amiga line included the best home computers ever released. The first Amiga, the A1000, was introduced in July 1985. In its base configuration it had 256KB of RAM, and it could be paired with an optional color monitor. Commodore used the famous 16-bit Motorola 68000 CPU for the first Amiga models, clocked at 7.16 MHz in the U.S. version and at 7.09 MHz in the European version.

Despite its modest CPU, the Amiga had impressive graphics and audio capabilities thanks to its dedicated custom chips, Denise (graphics) and Paula (audio). A third chip, Agnus (later upgraded and renamed Fat Agnus), controlled direct memory access (DMA) to the shared RAM for the other chips and arbitrated the CPU's access to it.

Agnus also incorporated a circuit called the blitter, which boosted the Amiga's 2D graphics performance. The blitter acted as a co-processor that could copy large blocks of data from one region of the system's RAM to another without tying up the CPU, speeding up 2D graphics rendering.
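To make the blitter's job more concrete, here is a minimal Python sketch of a rectangular block copy, the kind of operation it performed in hardware. It is a simplified model under stated assumptions: RAM is a flat `bytearray`, the copy works on bytes rather than the 16-bit words the real hardware handled, and the function name `blit_copy` and its parameters are hypothetical. The per-row "modulo" values, which let the block be a rectangle cut out of a wider bitmap, mirror the idea the real blitter used, but the sketch does not model its shifting, masking or logic operations.

```python
def blit_copy(ram: bytearray, src: int, dst: int,
              width: int, height: int,
              src_modulo: int, dst_modulo: int) -> None:
    """Copy a rectangular block of bytes from src to dst inside ram.

    width      -- bytes copied per row
    height     -- number of rows
    *_modulo   -- bytes skipped after each row, so the block can be
                  cut out of (or pasted into) a wider bitmap
    Assumes the source and destination blocks do not overlap.
    """
    s, d = src, dst
    for _ in range(height):
        ram[d:d + width] = ram[s:s + width]  # copy one row
        s += width + src_modulo              # jump to the next source row
        d += width + dst_modulo              # jump to the next destination row


# Usage: a hypothetical 320-pixel-wide, 1-bit-per-pixel bitmap (40 bytes per row).
ROW = 40
ram = bytearray(ROW * 10)
for r in range(3):                    # draw a solid 32 x 3 pixel block at the top-left
    ram[r * ROW:r * ROW + 4] = b"\xff" * 4

# Copy it 5 rows down and 10 bytes (80 pixels) to the right.
blit_copy(ram, src=0, dst=5 * ROW + 10, width=4, height=3,
          src_modulo=ROW - 4, dst_modulo=ROW - 4)
```

Because the modulos are set to the bitmap width minus the block width, each pointer lands at the start of the same column on the next row, which is what lets a small sprite or tile be moved around inside a larger screen.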

The A1000 was also equipped with a 3½" floppy drive, and its operating system (OS), AmigaOS, supported multitasking, while DOS on IBM-compatible PCs was single-tasking.

The AmigaOS kernel, known as Kickstart, was loaded from a floppy disk into 256KB of dedicated RAM on a board installed inside the A1000. Once Kickstart was loaded, that 256KB of RAM became write-protected.

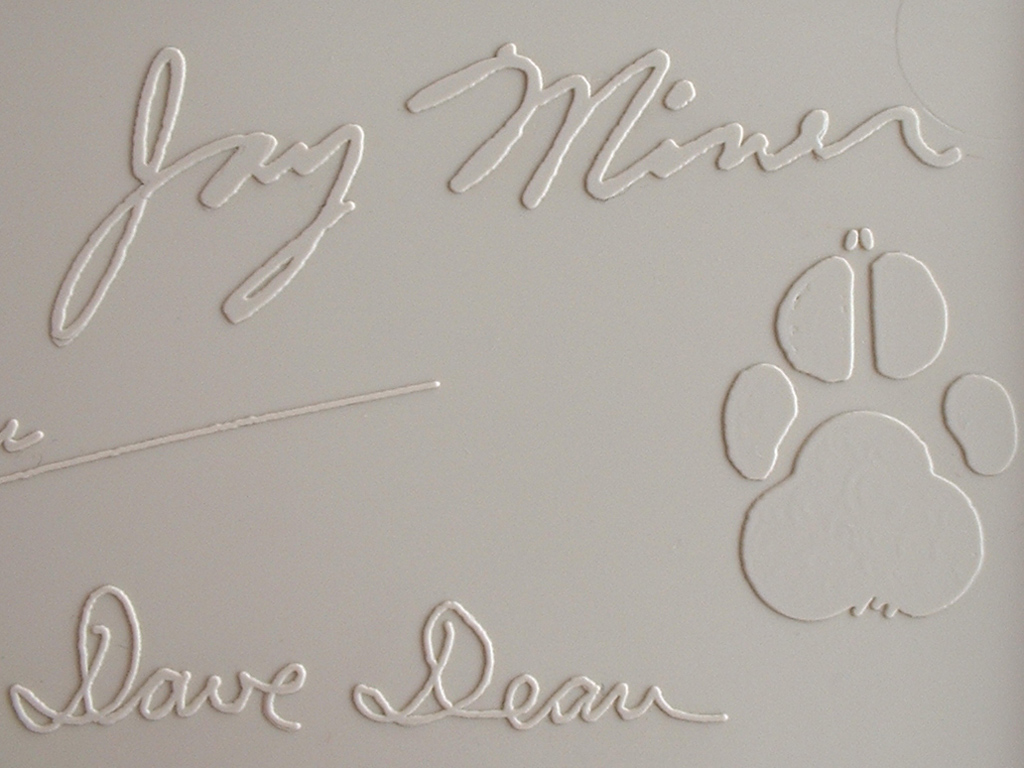

Designers of this era often felt a strong attachment to their creations, and the Amiga is a clear example. The A1000 carried the signatures of its makers inside the chassis, along with the paw print of a dog that belonged to one of the team members, Jay Miner. Production of the A1000 stopped in 1987, when it was replaced by the hugely successful A500 and the flagship A2000.

The new Amigas used an upgraded version of AmigaOS, and the A500 came with 512KB of RAM, which could be upgraded to as much as 9.5MB. By the standards of the period, the Amiga was an open platform that could be upgraded rather easily. The A500 was hands down the most successful Amiga, with huge sales, especially in the European market. Extensive software support, especially video games, played a key role in the Amiga's success.


The Amiga had advanced graphics capabilities, supporting resolutions of up to 640 x 512, and could display up to 4096 colors simultaneously in a special mode called HAM (Hold-And-Modify), which was used mainly for static images. It also had strong audio capabilities. Combined with its graphics, this made the computer popular not only with gamers but also with professional graphic designers, video production studios, and the film and music industries in general, although most musicians preferred the Atari ST computers because of their built-in MIDI ports.
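The 4096 figure follows from the Amiga's 12-bit color: 4 bits each for red, green and blue give 16 x 16 x 16 = 4096 combinations. In the original HAM mode (HAM6), each 6-bit pixel uses its top 2 bits as a control code and its bottom 4 bits as data: it either picks one of 16 palette colors, or keeps the previous pixel's color and replaces just one channel. The Python sketch below decodes one scanline under those rules; the function name `decode_ham6` and the grayscale palette are hypothetical, and starting each line from palette entry 0 (the background color) is an assumption of this sketch.

```python
def decode_ham6(pixels: list[int],
                palette: list[tuple[int, int, int]]) -> list[tuple[int, int, int]]:
    """Decode one scanline of 6-bit HAM pixels into (r, g, b) values of 0-15 each."""
    r, g, b = palette[0]          # assumption: each line starts from the background color
    line = []
    for p in pixels:
        control, data = p >> 4, p & 0x0F   # top 2 bits: control, bottom 4 bits: data
        if control == 0b00:                # set: use one of the 16 palette registers
            r, g, b = palette[data]
        elif control == 0b01:              # modify blue, hold red and green
            b = data
        elif control == 0b10:              # modify red, hold green and blue
            r = data
        else:                              # 0b11: modify green, hold red and blue
            g = data
        line.append((r, g, b))
    return line


# Usage with a hypothetical grayscale palette.
palette = [(i, i, i) for i in range(16)]
print(decode_ham6([0b000101, 0b011111, 0b100000, 0b110011], palette))
# -> [(5, 5, 5), (5, 5, 15), (0, 5, 15), (0, 3, 15)]
```

Because each pixel can change only one channel relative to its left neighbor, a jump between two unrelated colors that are not in the palette can take up to three pixels, which causes the color fringing HAM images are known for.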

The Amiga had a notably open architecture. It had two expansion slots, one on the side and one on the bottom. Its main integrated circuits (ICs) were not soldered directly onto the motherboard but mounted in sockets, so they could easily be removed and replaced with enhanced versions.

The same applied to the CPU, which could easily be swapped for the more powerful Motorola 68010. The much stronger 68020, 68030 or 68040 could be added through the side expansion port. Memory could also be upgraded directly on the motherboard (up to 1MB), although only machines with the Fat Agnus chip supported the additional memory. Amigas could also accept a hard drive or special boards that provided IBM PC compatibility, so they could run PC software.

The last Amiga from Commodore was the A4000T, released in 1994, just before the company declared bankruptcy. It might sound strange that the company behind one of the most popular computers went bankrupt, but the dominance of IBM-compatible PCs sealed the fate of home computers. Microsoft also played a significant role with its Windows OS, which offered the highly desired graphical interface.

Nonetheless, Amiga computers (especially the first ones) were way ahead of their time and had a strong following, which continued to support the platform long after Amiga production stopped.


Aris Mpitziopoulos is a contributing editor at Tom's Hardware, covering PSUs.