Pushing Intel Optane Potential: RAID Performance Explored

Features & Specifications

Intel's Optane Memory is finally shipping, but many readers can't get past the idea of a cache in a high-performance computer, or past the steep system requirements. Optane Memory delivers SSD-like performance when you pair it with a hard disk drive, and for some tasks it even outperforms a low-cost SSD. Still, some users just want the fastest possible storage system, and caching isn't the best option for them. Today we examine an alternative that combines three Optane Memory SSDs in a RAID 0 array to create a single volume large enough for an operating system and a few programs.

After our initial article, I read many reader comments about Optane Memory, and it's obvious the name alone causes confusion. Optane "Memory" is actually a storage device. It gets even more confusing when you factor in that the Optane product family includes two consumer cache devices and an enterprise-focused add-in card SSD, plus the terminology associated with building an Optane Memory (cache) array on a 200-series chipset. I'm confused, and I wrote it.

For this article, we aren't building the caching array that preloads the high-speed device with frequently accessed data. We are simply combining three Optane devices, forgoing the special software, and muscling through the workloads with brute force.

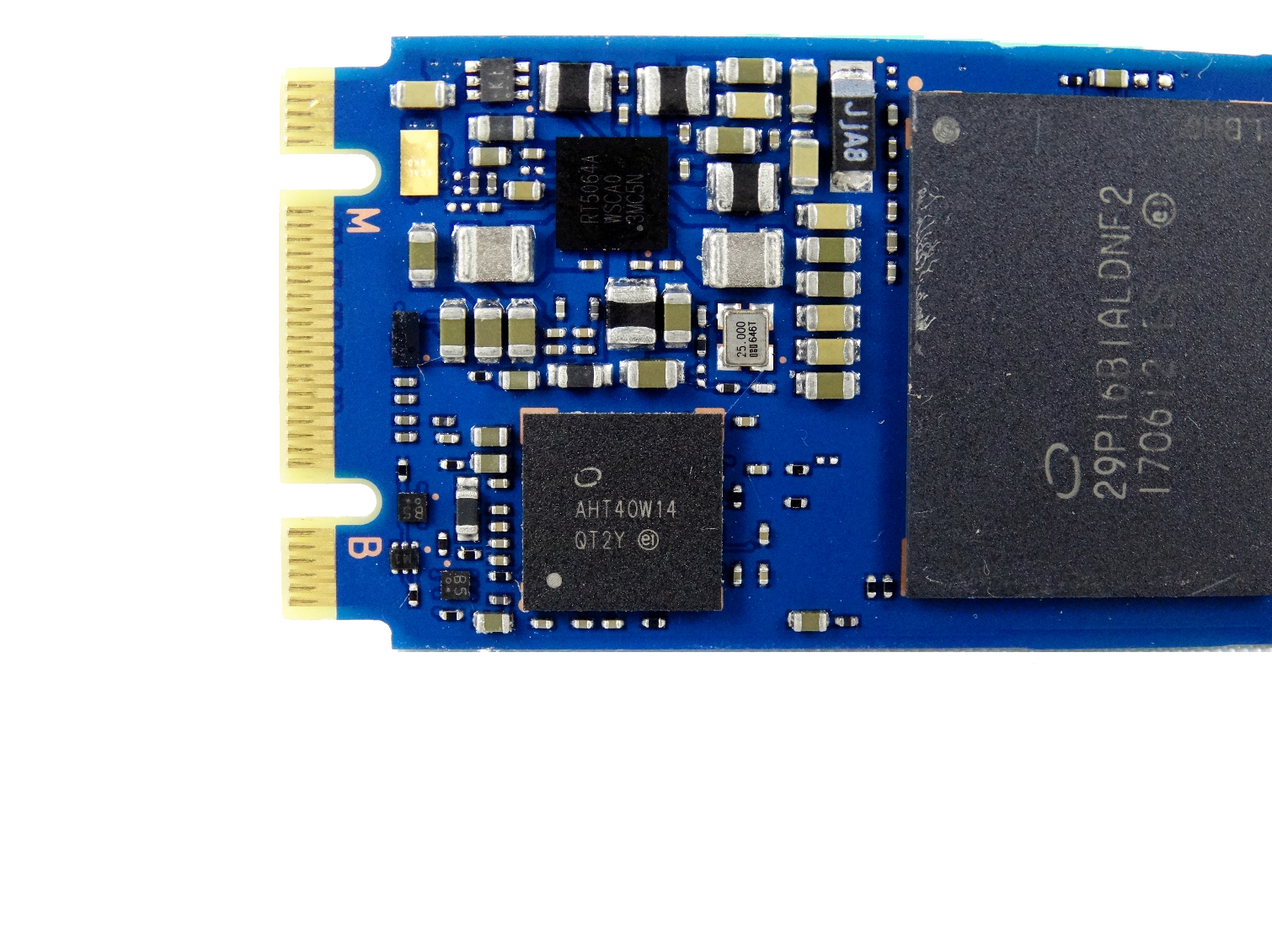

Optane's 3D XPoint memory (the physical chips) is all muscle. We can summarize the comments on our first Optane article by saying the caching product is only slightly faster than a high-performance SSD. That's kind of true, but it's a sandbagged product. The 16GB and 32GB Optane Memory SSDs use either one or two channels. I suspect they are single-channel devices, but Intel will neither confirm nor deny the controller specifications. If my theory is correct, this single-channel Intel controller easily beats your 8-channel NAND-based SSD. Over time, we'll see more of Optane's capabilities, but for now, we should think of it as a whole new class of memory.

You may not see it now, but Intel will soon have an advantage in the SSD game. For the last several years, all the SSD companies have played catch-up with Samsung. Samsung currently has the most advanced NAND flash, and its advantage is so great that its TLC NAND often outperforms competing MLC NAND. Samsung's 3D NAND is like a college football team playing a high school team. If that's the analogy, then Intel's Optane technology is NFL caliber.

I think Optane is off to a great start. We should all remember that the early SLC-based prosumer SSDs were slower than a modern SD card when they first came to market. Over time, Optane will get faster, larger, and cheaper. What we want is for Intel to mass-produce it, make it cheap, and then give us the highest-performing devices at an affordable price. If you think that's going to happen soon, then you don't know Intel. Don't look for low-cost consumer Optane-based SSDs anytime soon, but RAID lets you game the system if you're willing to take on a little risk.

Modern SSDs use a form of internal RAID to increase performance. A single NAND die is fast, but it takes many dies working together to reach the high performance we associate with SSDs. Higher capacities put more dies behind the controller, which allows more parallel transactions (up to a limit). That cuts both ways: over time, NAND die capacity increased and the number of physical dies in each SSD decreased. That's why many modern SSDs are slower than older models with the same capacity. Optane changes the game entirely. Intel hasn't given us an affordable prosumer Optane SSD yet, so we're going to build one using a combination of hardware and software you may already have.
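To put rough numbers on that trade-off, here is a minimal Python sketch that counts how many dies a drive needs at a given die density and how many dies each controller channel can interleave. The 256GB capacity, the 8-channel controller, and the 64/128/256Gbit densities are illustrative assumptions rather than figures from this article, and the sketch ignores overprovisioning.

```python
"""Rough NAND parallelism arithmetic (illustrative assumptions only)."""

GBIT_PER_GBYTE = 8  # 8 gigabits per gigabyte


def die_count(capacity_gb: int, die_density_gbit: int) -> int:
    """Whole dies needed to reach a raw capacity, rounding up."""
    die_capacity_gb = die_density_gbit // GBIT_PER_GBYTE
    return -(-capacity_gb // die_capacity_gb)  # ceiling division on ints


def dies_per_channel(dies: int, channels: int) -> float:
    """Average number of dies the controller can interleave on each channel."""
    return dies / channels


if __name__ == "__main__":
    CAPACITY_GB = 256   # assumed drive capacity
    CHANNELS = 8        # assumed 8-channel controller
    for density in (64, 128, 256):  # assumed die densities in Gbit
        dies = die_count(CAPACITY_GB, density)
        print(f"{density}Gbit dies: {dies} dies, "
              f"{dies_per_channel(dies, CHANNELS):.0f} per channel")
```

At a fixed capacity, each doubling of die density halves the dies available per channel, which is the mechanism behind newer SSDs sometimes trailing older models of the same size.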

Specifications

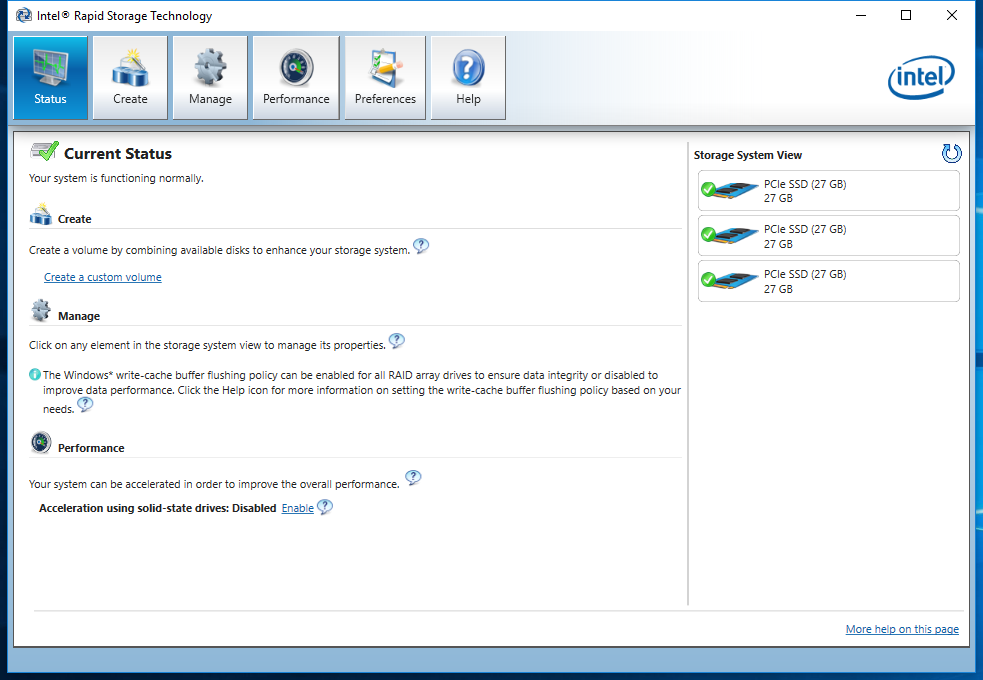

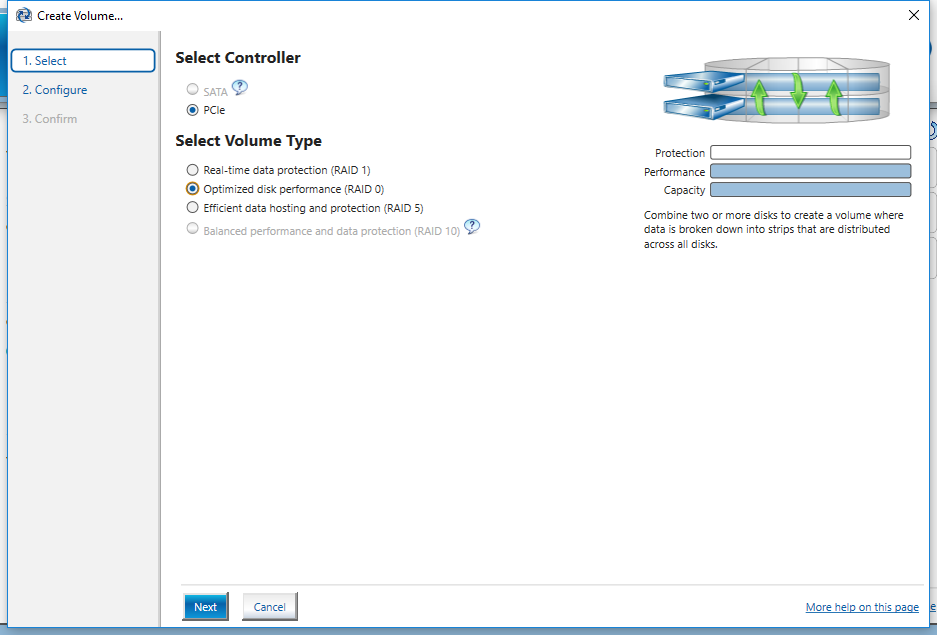

Not every Intel chipset supports RAID, and PCIe RAID falls into an even more exclusive tier because the platform must route the PCIe lanes through the PCH (Platform Controller Hub). To enable that feature, you need a 100- or 200-series chipset that supports Rapid Storage Technology (RST). The restrictions are not as severe as those for Optane Memory caching (a 7th-generation Core processor and a 200-series chipset), but the bar is still very high.

We used an ASRock Z170 Extreme7+ motherboard for our three-drive RAID 0 array. The board features three M.2 slots with PCIe 3.0 x4 connectivity, and all of them route through the PCH for Rapid Storage Technology. Only a few motherboards have three M.2 sockets, but several pair two M.2 sockets with a PCIe slot that has a PCH routing option.
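Routing every slot through the PCH has a cost that the reader comments also touch on: on 100- and 200-series platforms, the PCH reaches the CPU over a DMI 3.0 link that is electrically comparable to a PCIe 3.0 x4 link. The Python sketch below computes theoretical one-direction bandwidth from PCIe 3.0's 8 GT/s signalling rate and 128b/130b encoding. It ignores packet and protocol overhead, so real-world ceilings are lower.

```python
"""Theoretical PCIe 3.0 / DMI 3.0 bandwidth, before protocol overhead."""

GT_PER_S_PCIE3 = 8.0             # PCIe 3.0 signalling rate per lane, GT/s
ENCODING_EFFICIENCY = 128 / 130  # PCIe 3.0 uses 128b/130b line encoding


def link_bandwidth_mb_s(lanes: int) -> float:
    """One-direction bandwidth in MB/s (10**6 bytes) for a PCIe 3.0 link."""
    bits_per_s = GT_PER_S_PCIE3 * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e6


if __name__ == "__main__":
    slot = link_bandwidth_mb_s(4)   # one M.2 slot, PCIe 3.0 x4
    three_slots = 3 * slot          # three drives striped together
    dmi = link_bandwidth_mb_s(4)    # DMI 3.0, treated as a PCIe 3.0 x4 equivalent
    print(f"one x4 slot: {slot:,.0f} MB/s")
    print(f"three slots: {three_slots:,.0f} MB/s")
    print(f"DMI ceiling: {dmi:,.0f} MB/s")
```

Three x4 slots offer roughly three times what the DMI uplink can carry, so traffic between the array and the CPU tops out near 3.9GB/s in each direction, shared with every other device hanging off the PCH.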

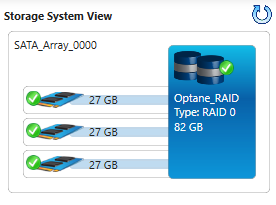

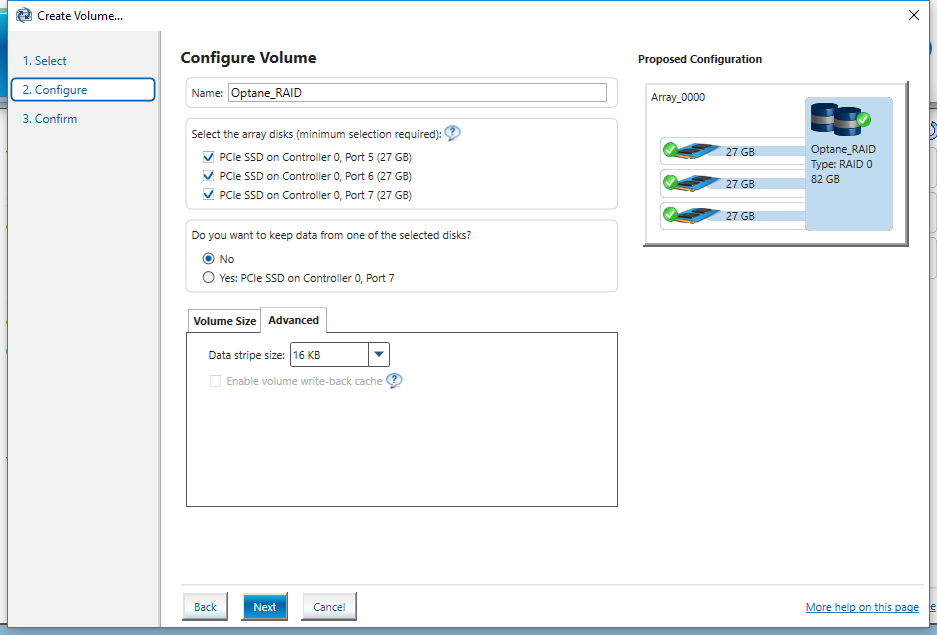

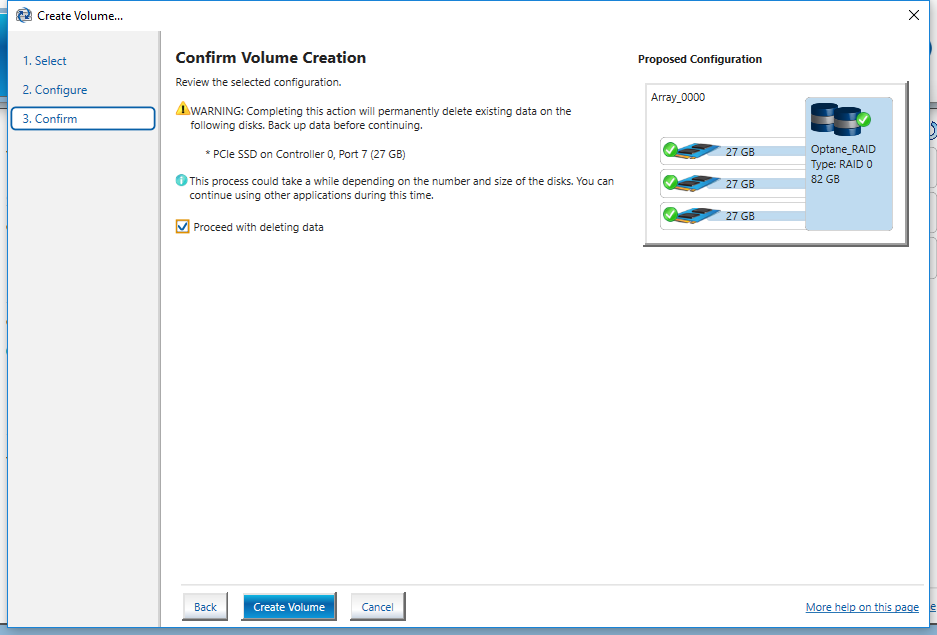

You can't boot from a true software RAID built with Microsoft Storage Spaces, but you can boot from a RAID 0 array built with Rapid Storage Technology. The array gives us approximately 82GB of usable capacity, roughly twice what a Windows 10 Pro installation with all the updates and the Office suite requires. It's not a lot of space, but in this situation, we're looking for quality over quantity.
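RAID 0 has no redundancy: it splits the volume into fixed-size stripe units and deals them round-robin across the member drives, so all members can work in parallel and the usable size is the member count times the smallest member. The Python sketch below models that mapping. The 128KiB stripe size and 512-byte logical blocks are assumptions for illustration, not confirmed RST settings for this array, and the capacity function ignores unit conversion, RAID metadata, and filesystem overhead, so it will not match the 82GB figure exactly.

```python
"""Simplified RAID 0 address mapping (assumed stripe size; no metadata)."""
from typing import List, Tuple


def raid0_locate(lba: int, members: int, stripe_blocks: int) -> Tuple[int, int]:
    """Map a volume LBA to (member drive index, LBA on that member)."""
    stripe_unit, offset = divmod(lba, stripe_blocks)  # which stripe unit, offset inside it
    member = stripe_unit % members                     # units rotate across the drives
    row = stripe_unit // members                       # complete rows before this unit
    return member, row * stripe_blocks + offset


def raid0_capacity(member_sizes: List[int]) -> int:
    """Usable size: member count times the smallest member."""
    return len(member_sizes) * min(member_sizes)


if __name__ == "__main__":
    STRIPE_BLOCKS = 128 * 1024 // 512  # assumed 128KiB stripe of 512-byte blocks
    for lba in (0, 255, 256, 512, 768):
        print(lba, "->", raid0_locate(lba, 3, STRIPE_BLOCKS))
    print("nominal capacity:", raid0_capacity([32, 32, 32]), "GB")
```

A large sequential transfer touches all three drives, while a single 4KB read lands on just one, so striping raises throughput far more than it lowers the latency of an individual request.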

The Optane Memory NVMe SSDs deliver less peak bandwidth than some SSDs, but what matters is where they deliver higher performance: Optane memory is faster than NAND at low queue depths. Some readers have been hung up on Intel's initial claim that the media offers 100x the performance of NAND, but device-level performance is closer to 10x, and we didn't even hit that mark with Optane Memory (the official cache system). If you've read SSD reviews before, you know that we usually see only a 10% to 20% difference between the best and average products. The Optane Memory advantage in your desktop may not be a full 10x improvement, but it's a lot faster than anything we've tested before (at least among devices that don't lose all their data on a reboot).
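Why low queue depth is where latency shows: by Little's law, sustained IOPS equals the number of outstanding requests divided by the average time each one takes. At queue depth 1 only one request is in flight, so throughput is set entirely by latency. The Python sketch below applies that relationship; the 10µs and 100µs latencies are hypothetical round numbers chosen to show a 10x gap, not measurements from this review.

```python
"""Little's law for storage: IOPS = outstanding I/Os / latency."""


def iops(queue_depth: int, latency_us: float) -> float:
    """Steady-state IOPS for a queue depth and per-I/O latency in microseconds."""
    return queue_depth * 1_000_000 / latency_us


def throughput_mb_s(io_per_s: float, block_bytes: int = 4096) -> float:
    """Convert IOPS at a fixed block size to MB/s (10**6 bytes)."""
    return io_per_s * block_bytes / 1_000_000


if __name__ == "__main__":
    # Hypothetical latencies, not measured values.
    for label, latency_us in (("low-latency media", 10.0), ("NAND-class media", 100.0)):
        rate = iops(1, latency_us)
        print(f"{label}: {rate:,.0f} IOPS, {throughput_mb_s(rate):.0f} MB/s at QD1, 4KB")
```

A tenfold latency gap becomes a tenfold QD1 throughput gap. At higher queue depths, a NAND SSD can hide its latency by keeping many dies busy, which is why peak-bandwidth figures narrow the difference.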

Pricing, Warranty & Endurance

Intel's Optane Memory pricing hasn't changed since our first review. The 16GB drives still retail for $45 to $50, and stacking up the smaller drives doesn't give you much storage capacity. If you go the Optane RAID route, we suggest using the 32GB drives, which sell for $75 to $80 each, depending on the seller.
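The arithmetic behind that recommendation, as a short Python sketch using the retail ranges quoted above (prices move, so treat them as a snapshot): three 16GB drives cost more per gigabyte and give half the capacity of three 32GB drives.

```python
"""Cost per gigabyte for a three-drive Optane Memory RAID 0 array."""

# Retail price ranges in USD per drive, as quoted in this article.
PRICE_RANGES = {16: (45, 50), 32: (75, 80)}
DRIVES = 3


def per_gb(price: float, capacity_gb: int) -> float:
    return price / capacity_gb


def array_summary(capacity_gb: int, drives: int = DRIVES):
    """Return (total nominal GB, (low, high) total cost, (low, high) cost per GB)."""
    low, high = PRICE_RANGES[capacity_gb]
    return (
        capacity_gb * drives,
        (low * drives, high * drives),
        (per_gb(low, capacity_gb), per_gb(high, capacity_gb)),
    )


if __name__ == "__main__":
    for cap in sorted(PRICE_RANGES):
        total_gb, (cost_lo, cost_hi), (gb_lo, gb_hi) = array_summary(cap)
        print(f"3 x {cap}GB = {total_gb}GB nominal for ${cost_lo}-${cost_hi} "
              f"(${gb_lo:.2f}-${gb_hi:.2f} per GB)")
```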

The 32GB Optane drives took longer to come to market than the 16GB model. Newegg and Amazon both carry the 32GB model now, and Intel hasn't said anything about an Optane shortage. Newegg limits the number of drives you can order at one time, so you have to place separate orders to buy three of them. Amazon doesn't have the same limit, but it keeps selling out.

Intel backs the Optane Memory SSDs with a 5-year limited warranty that covers up to 182.5 TB of write endurance.
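Spread over the warranty period, that rating works out to a daily write budget. This Python sketch does the division and ignores leap days. The three-drive figure assumes RAID 0 spreads writes evenly across members, which holds for large sequential writes but only approximately for small random ones.

```python
"""Daily write budget implied by a warranty endurance rating."""

ENDURANCE_TB = 182.5  # rated endurance per drive, from the warranty terms above
WARRANTY_YEARS = 5


def daily_write_budget_gb(endurance_tb: float, years: int, drives: int = 1) -> float:
    """Average GB/day that uses up the rated endurance exactly at end of warranty."""
    days = years * 365                          # ignores leap days
    return endurance_tb * 1000 * drives / days  # decimal TB -> GB


if __name__ == "__main__":
    print(f"one drive:    {daily_write_budget_gb(ENDURANCE_TB, WARRANTY_YEARS):.0f} GB/day")
    print(f"3-drive RAID: {daily_write_budget_gb(ENDURANCE_TB, WARRANTY_YEARS, drives=3):.0f} GB/day")
```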

A Closer Look

The Optane Memory module comes in an industry-standard, single-sided M.2 2280 form factor. 3D XPoint memory is fast enough to deliver high performance while reading and writing to a single die (and remember, that's without a DRAM buffer). The mysterious controller is smaller than most NAND-based SSD controllers, and it appears to be purpose-built for Optane Memory.

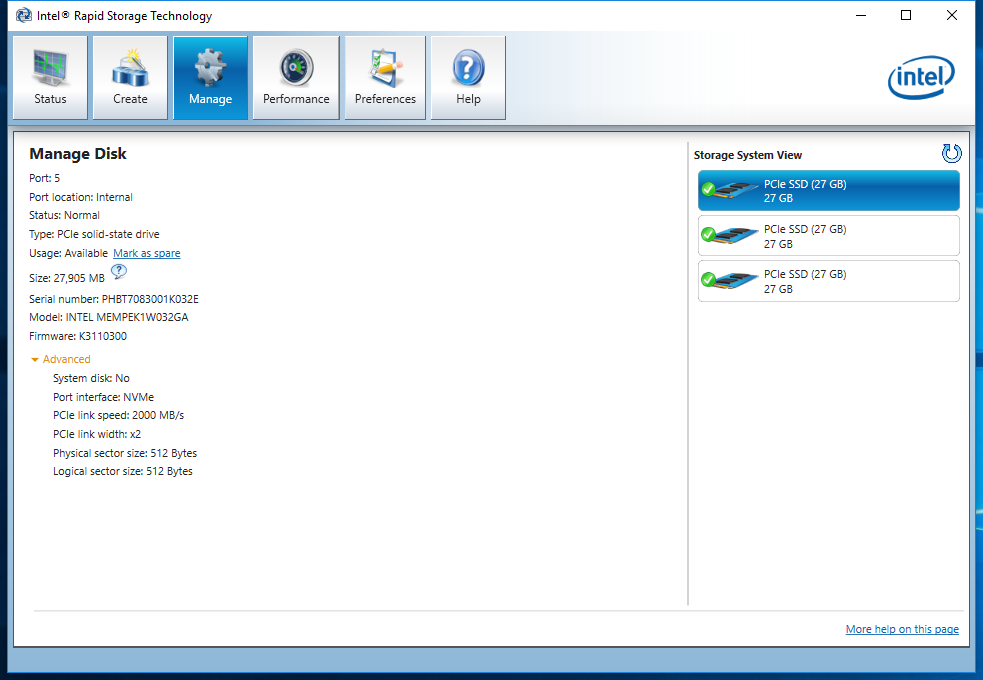

We equipped our ASRock Z170 Extreme7+ motherboard with three Optane Memory 32GB M.2 modules in RAID 0 for this test.

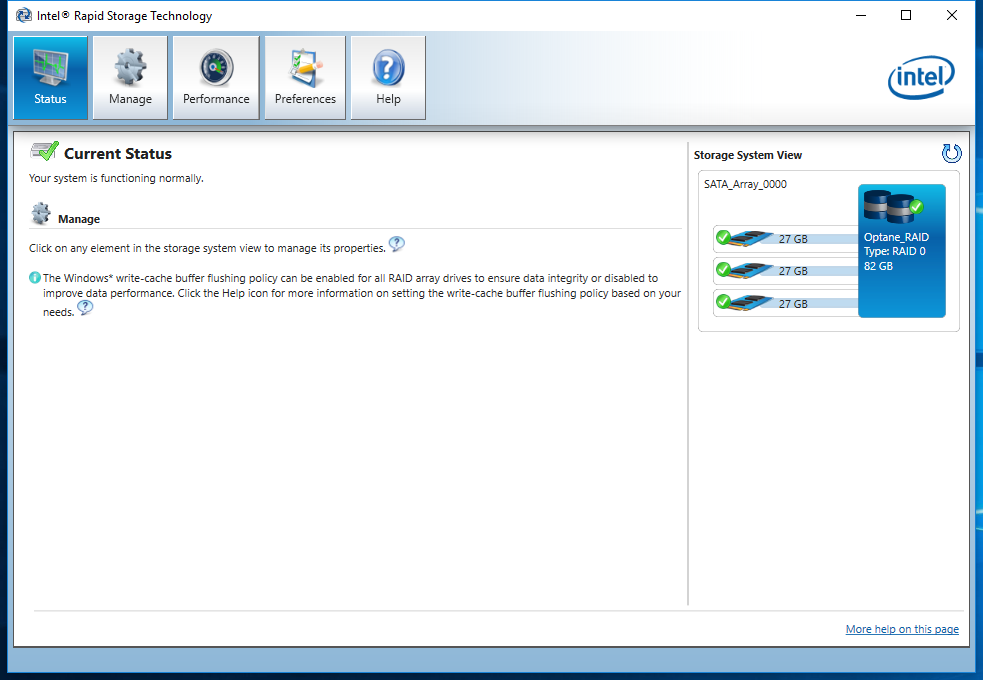

Intel Rapid Storage Technology RAID

We tested the Optane Memory RAID array as a secondary volume. This allows us to measure performance without any additional I/O coming from the operating system or the measurement software. With the operating system already installed, we used Intel's Rapid Storage Technology interface to build the three-drive RAID 0 array. After our first round of tests, we cleared the data and installed Windows 10 Pro on the array to run BAPCo's SYSmark 2014 SE.

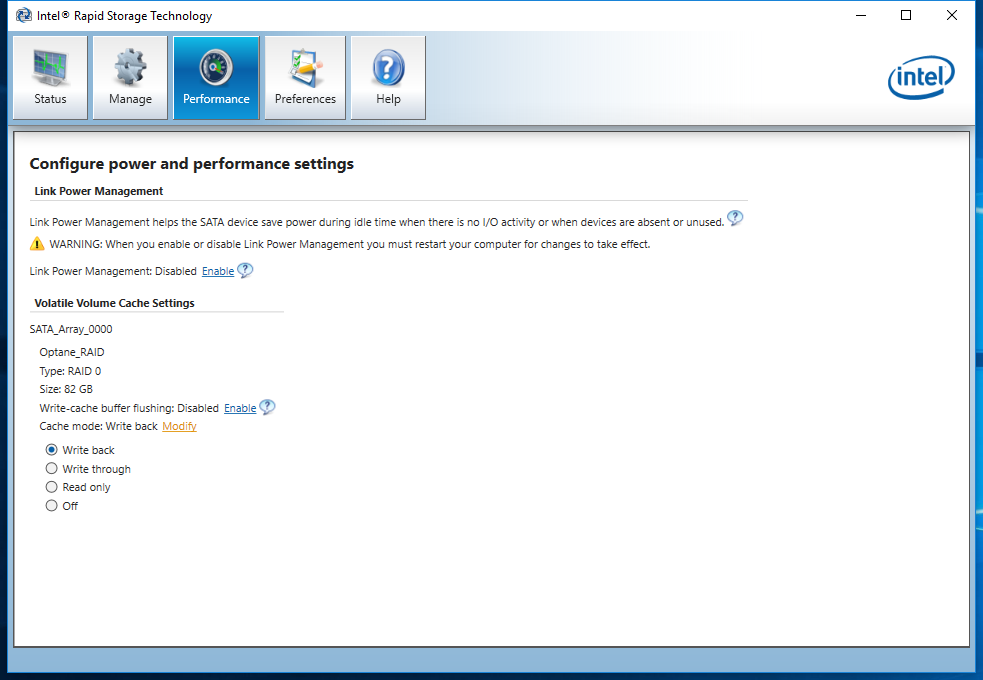

It's tempting to adjust the cache mode policy, but we advise against it. The write-back and write-through settings caused the system to lock up during heavy workloads. We didn't try the read-only setting. Of the three settings we used, "off" provided the best results and system stability.

Chris Ramseyer was a senior contributing editor for Tom's Hardware. He tested and reviewed consumer storage.

gasaraki Impressive but... you should have tested the 960Pro in RAID also as a direct performance comparison.

InvalidError Other PCH devices sharing DMI bandwidth with M.2 slots isn't really an issue since bandwidth is symmetrical and if you are pulling 3GB/s from your M.2 devices, I doubt you are loading much additional data from USB3 and other PCH ports. It is more likely that you are writing to those other devices.

As for "experiencing the boot time", you wouldn't need to do that if you simply put your PC in standby instead of turning it off. If standby increases your annual power bill by $3, it'll take ~50 years to recover your 2x32GB Optane purchase cost from standby power savings. Standby is quicker than reboot and also spares you the trouble of spending many minutes re-opening all the stuff you usually have open all the time.

takeshi7 Next, get 4 Optane SSDs, put them in this card, and put them in a PCIe x16 slot hooked up directly to the CPU.

http://www.seagate.com/files/www-content/product-content/ssd-fam/nvme-ssd/nytro-xp7200/_shared/_images/nytro-xp7200-add-in-card-row-2-img-341x305.png

gasaraki, quoting takeshi7: "Next, get 4 Optane SSDs, put them in this card, and put them in a PCIe x16 slot hooked up directly to the CPU."

Still just 4X bus to each M.2 so no different than on board M.2 slots.

takeshi7, quoting gasaraki: "Still just 4X bus to each M.2 so no different than on board M.2 slots."

But it is different because those M.2 slots are bottlenecked by the DMI connection on the PCH. The CPU slots don't have that issue.

InvalidError, quoting takeshi7: "But it is different because those M.2 slots are bottlenecked by the DMI connection on the PCH. The CPU slots don't have that issue."

The PCH bandwidth is of little importance here as once you set synthetic benchmarks aside and step into more practical matters such as application launch and task completion times, you are left with very little real-world application performance benefits despite the triple Optane setup being four times as fast as the other SSDs, which means negligible net benefits from going even further overboard.

The only time where PCH bandwidth might be a significant bottleneck is when copying files from the RAID array to RAMdisk or a null device. The rest of the time, application processing between accesses is the primary bottleneck.