Analysis: PhysX On Systems With AMD Graphics Cards

Rarely does an issue divide the gaming community like PhysX has. We go deep into explaining CPU- and GPU-based PhysX processing, run PhysX with a Radeon card from AMD, and put some of today's most misleading headlines about PhysX under our microscope.

CPU PhysX: Multi-Threading?

Does CPU PhysX Really Not Support Multiple Cores?

Our next problem is the claim that, in almost all previous benchmarks, only one CPU core has really been used for PhysX in the absence of GPU hardware acceleration, or so some say. Again, this seems like something of a contradiction, given our measurements of fairly good CPU-based PhysX scaling in the Metro 2033 benchmarks.

| Component | Configuration |
|---|---|
| Graphics card | GeForce GTX 480 1.5 GB |
| Dedicated PhysX card | GeForce GTX 285 1 GB |
| Graphics driver | GeForce 258.96 |
| PhysX | 9.10.0513 |

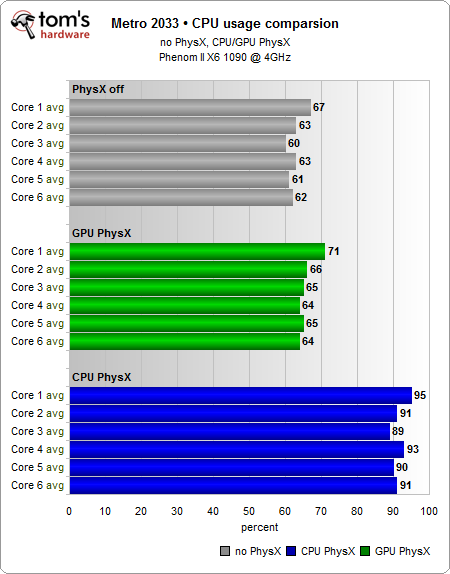

First, we measure CPU core utilization. We switch to DirectX 11 mode with its multi-threading support to get a real picture of performance. The top section of the graph below shows that CPU cores are rather evenly utilized when extended physics is deactivated.

To minimize the bottleneck effect of the graphics card, we start out with a resolution of just 1280x1024. The less the graphics card acts as a limiting factor, the better the game scales with more cores. This would change in DirectX 9 mode, which limits scaling to two CPU cores.
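The reasoning behind the low resolution can be sketched with a toy frame-time model. This is only an illustration under our own assumptions: CPU and GPU work are taken to overlap, so the slower side sets the frame time, and the CPU work is split into a serial part and a part that divides evenly across cores. The function names and every millisecond figure are hypothetical, not measurements from Metro 2033.

```python
def frame_time_ms(gpu_ms, cpu_serial_ms, cpu_parallel_ms, cores):
    """Toy model: CPU and GPU work overlap, so the slower side sets the frame time."""
    cpu_ms = cpu_serial_ms + cpu_parallel_ms / cores
    return max(gpu_ms, cpu_ms)


def fps(gpu_ms, cpu_serial_ms, cpu_parallel_ms, cores):
    """Frames per second implied by the toy frame time."""
    return 1000.0 / frame_time_ms(gpu_ms, cpu_serial_ms, cpu_parallel_ms, cores)


# Hypothetical per-frame costs in milliseconds (illustrative, not measured).
CPU_SERIAL_MS = 4.0
CPU_PARALLEL_MS = 24.0

for label, gpu_ms in (("low resolution", 5.0), ("high resolution", 30.0)):
    rates = [round(fps(gpu_ms, CPU_SERIAL_MS, CPU_PARALLEL_MS, n), 1) for n in (1, 2, 4, 6)]
    print(label, rates)
```

With the cheap GPU workload, the modeled frame rate keeps climbing as cores are added. With the expensive one, it stays flat no matter how many cores are available, which is why a GPU-limited setting would hide any CPU scaling.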

We notice a small increase in CPU utilization when activating GPU-based PhysX because the graphics card needs to be supplied with data for calculations. However, the increase is much larger with CPU-based PhysX activated, indicating a fairly successful parallelization implementation by the developers.
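One way to read utilization graphs like these is to add up the per-core percentages into "cores' worth" of work and then check how the extra load is distributed. The sketch below is a minimal illustration of that arithmetic. The helper names are our own, and the sample readings are made up for demonstration; they are not the values behind our charts.

```python
def core_equivalents(per_core_percent):
    """Sum per-core utilization (0-100 each) into 'fully busy cores' worth of work."""
    return sum(per_core_percent) / 100.0


def added_load(baseline, with_physics):
    """Extra cores' worth of work that appears when physics is switched on."""
    return core_equivalents(with_physics) - core_equivalents(baseline)


def busiest_core_share(baseline, with_physics):
    """Fraction of the added load that landed on the single most-affected core."""
    deltas = [max(after - before, 0.0) for before, after in zip(baseline, with_physics)]
    total = sum(deltas)
    return max(deltas) / total if total else 0.0


# Hypothetical six-core readings in percent (made up, not measured).
baseline = [40, 35, 30, 30, 25, 20]
spread_out = [60, 55, 55, 50, 45, 45]      # extra work shared by all cores
single_thread = [40, 35, 30, 30, 25, 100]  # extra work piled onto one core

for name, sample in (("spread out", spread_out), ("single thread", single_thread)):
    print(name, round(added_load(baseline, sample), 2),
          round(busiest_core_share(baseline, sample), 2))
```

A share close to 1.0 means nearly all of the extra work sits on one core, which is what a single physics thread would look like. A low share with a sizable added load points to work that is actually distributed.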

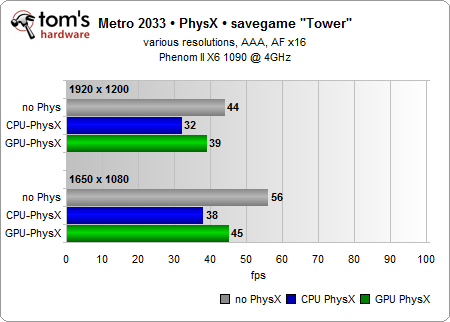

Looking at Metro 2033, we also see that a reasonable use of PhysX effects is playable, even if no PhysX acceleration is available. This is because Metro 2033 is mostly limited by the main graphics card and its 3D performance, rather than added PhysX effects. There is one exception, though: the simultaneous explosions of several bombs. In this case, the load on the CPU causes serious frame rate drops, although the game is still playable. Most people won’t want to play at such low resolutions, so we switched to the other extreme.

Performing these benchmarks with a powerful main graphics card and a dedicated PhysX card was a deliberate choice, given that a single Nvidia card normally suffers from some performance penalties with GPU-based PhysX enabled. Things would get quite bad in this already-GPU-constrained game. In this case, the difference between CPU-based PhysX on a fast six-core processor with well-implemented multi-threading and a single GPU is almost zero.


Assessment

Contrary to some headlines, the Nvidia PhysX SDK actually offers multi-core support for CPUs. When used correctly, it even comes dangerously close to the performance of a single-card, GPU-based solution. There's still a catch, however. PhysX automatically handles thread distribution, moving the load away from the CPU and onto the GPU when a compatible graphics card is active. Game developers need to shift some of the load back to the CPU.
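To make the idea of spreading physics work over several cores more concrete, here is a minimal sketch of partitioning independent particles across a worker pool. It does not use the PhysX SDK, and none of these names come from it: `integrate_chunk`, `step_parallel`, and the simple falling-particle model are our own assumptions. In standard CPython builds, threads do not run pure-Python code in parallel, so the sketch only shows the structure of the partitioning, not a speedup.

```python
from concurrent.futures import ThreadPoolExecutor

GRAVITY = -9.81  # m/s^2, applied along the vertical axis


def integrate_chunk(chunk, dt):
    """Advance a slice of (height, velocity) particles by one semi-implicit Euler step."""
    out = []
    for height, velocity in chunk:
        velocity = velocity + GRAVITY * dt
        out.append((height + velocity * dt, velocity))
    return out


def step_parallel(particles, dt, workers):
    """Split the particle list into contiguous chunks and step them on a worker pool."""
    size = max(1, -(-len(particles) // workers))  # ceiling division
    chunks = [particles[i:i + size] for i in range(0, len(particles), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves chunk order, so the particles come back in their original order
        results = list(pool.map(integrate_chunk, chunks, [dt] * len(chunks)))
    return [particle for chunk in results for particle in chunk]


particles = [(10.0 + i, 0.0) for i in range(10)]
print(step_parallel(particles, 0.01, 4) == integrate_chunk(particles, 0.01))
```

The split works here because each particle is updated independently. Effects with interactions between bodies, such as collisions, would need extra synchronization between the chunks, which is part of the implementation effort discussed in this article.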

Why does this so rarely happen?

The effort and expenditure required to implement coding changes obviously work as a deterrent. We still think that developers should be honest and openly admit this, though. Studying certain games (with a certain logo in the credits) raises the question of whether this additional expense was spared for commercial or marketing reasons. On one hand, Nvidia has a duty to developers, helping them integrate compelling effects that gamers will be able to enjoy and that might not have made it into the game otherwise. On the other hand, Nvidia wants to prevent (and with good reason) prejudices from getting out of hand. According to Nvidia, SDK 3.0 already offers these capabilities, so we look forward to seeing developers implement them.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

-

eyefinity So it's basically what everybody in the know already knew - nVidia is holding back progress in order to line their own pockets.

-

Emperus Is it 'Physx by Nvidia' or 'Physx for Nvidia'..!! It's a pity to read those lines wherein it says that Nvidia is holding back performance when a non-Nvidia primary card is detected..

-

It looks like the increase in CPU utilization with CPU PhysX is only 154%, which could be 1 thread plus synchronization overhead with the main rendering threads.

-

eyefinity The article could barely spell it out more clearly.

Everyone could be enjoying CPU-based physics, making use of their otherwise idle cores.

The problem is, nVidia doesn't want that. They have a proprietary solution which slows down their own cards, and AMD cards even more, making theirs seem better. On top of that, they throw money at game devs so they don't include better CPU physics.

Everybody loses except nVidia. This is not unusual behaviour for them, they are doing it with tessellation now too - slowing down their own cards because it slows down AMD cards even more, when there is a better solution that doesn't hurt anybody.

They are a pure scumbag company.

-

rohitbaran In short, a good config to enjoy Physx requires selling an arm or a leg and the game developers and nVidia keep screwing the users to save their money and propagate their business interests respectively.

-

iam2thecrowe The world needs OpenCL physics NOW! Also, while this is an informative article, it would be good to see what single nvidia cards make games using physx playable. Will a single gts450 cut it? Probably not. That way budget gamers can make a more informed choice, as there's no point choosing nvidia for physx and finding it doesn't run well anyway on mid range cards, so they could have just bought an ATI card and been better off.

-

archange Believe it or not, this morning I was determined to look into this same problem, since I just upgraded from an 8800 GTS 512 to an HD 6850. :O

Thank you, Tom's, thank you Igor Wallossek for making it easy!

You just made my day: a big thumbs up!

-

jamesedgeuk2000 What about people with dedicated PPUs? I have 8800 GTX SLi and an Ageia Physx card, where do I come into it?

-

skokie2 What is failed to be mentioned (and if what I see is real it's much more predatory) is that simply having onboard AMD graphics, even if it's disabled in the BIOS, stops PhysX working. This is simply outrageous. My main hope is that AMD finally gets better at Linux drivers so my next card does not need to be nVidia. I will vote with my feet... so long as there is another name on the slip :( Sad state of graphics generally and been getting worse since AMD bought ATI.. it was then that this game started... nVidia just takes it up a notch.