Radeon HD 7990 And GeForce GTX 690: Bring Out The Big Guns

EVGA recently lent our German lab one of the GeForce GTX 690s we've had in the U.S. for months. The purpose? To pit it against HIS' upcoming 7970 X2 and PowerColor's Devil13 HD7990, both dual-Tahiti boards vying to become the world's fastest graphics card.

EVGA GeForce GTX 690: Elegance, Illustrated

EVGA GeForce GTX 690: A Tough-To-Beat Incumbent

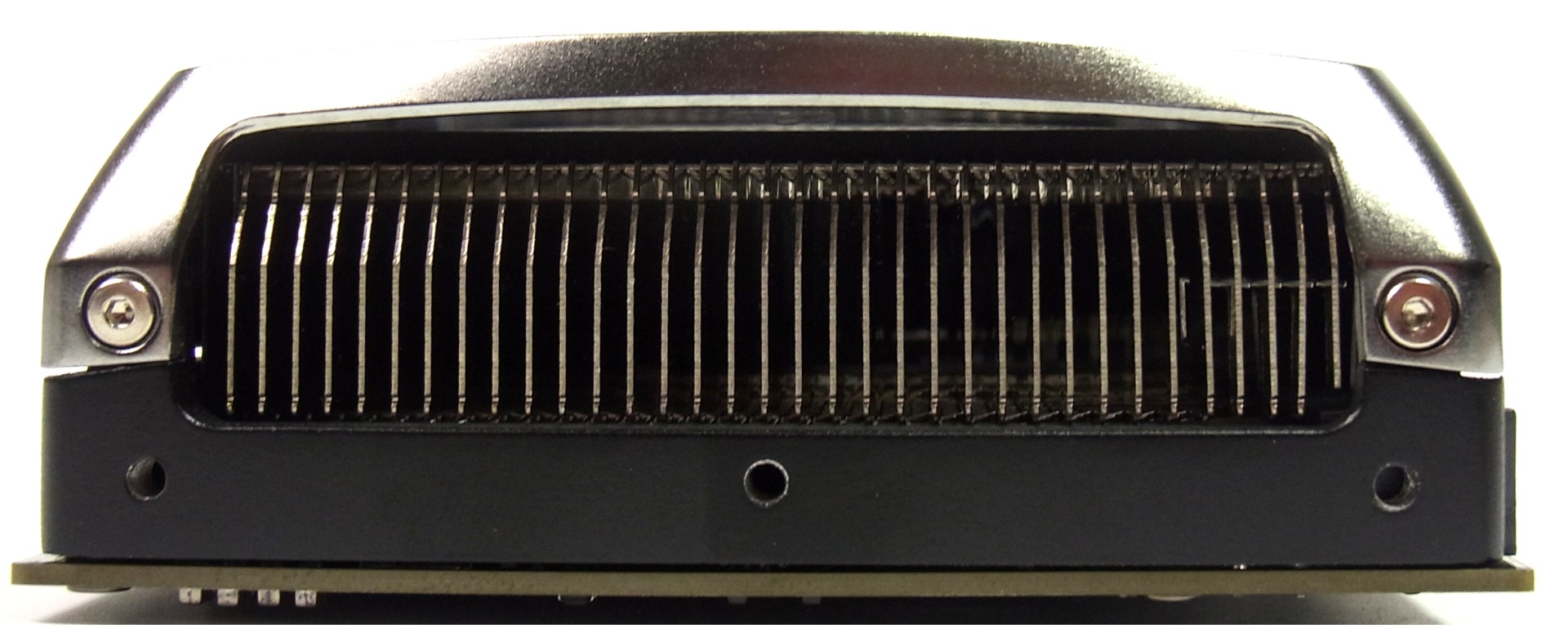

Nvidia put a lot of effort into engineering a compelling reference GeForce GTX 690, and EVGA taps that implementation for its version of the card. It's only a two-slot board, and, at 1.04 kg, not very heavy.

There are actually three different versions of EVGA's GeForce GTX 690: a baseline model with a 915 MHz core and 1502 MHz memory, a Signature card running at the same clock rates (but with a unique bundle), and a water-cooled Hydro Copper Signature board operating at 993/1502 MHz.

The minimalist box design hides massive performance inside.

EVGA doesn’t just include the usual stuff in the box (a pair of power adapters and a couple of display adapters), but also a flashy poster and stickers.

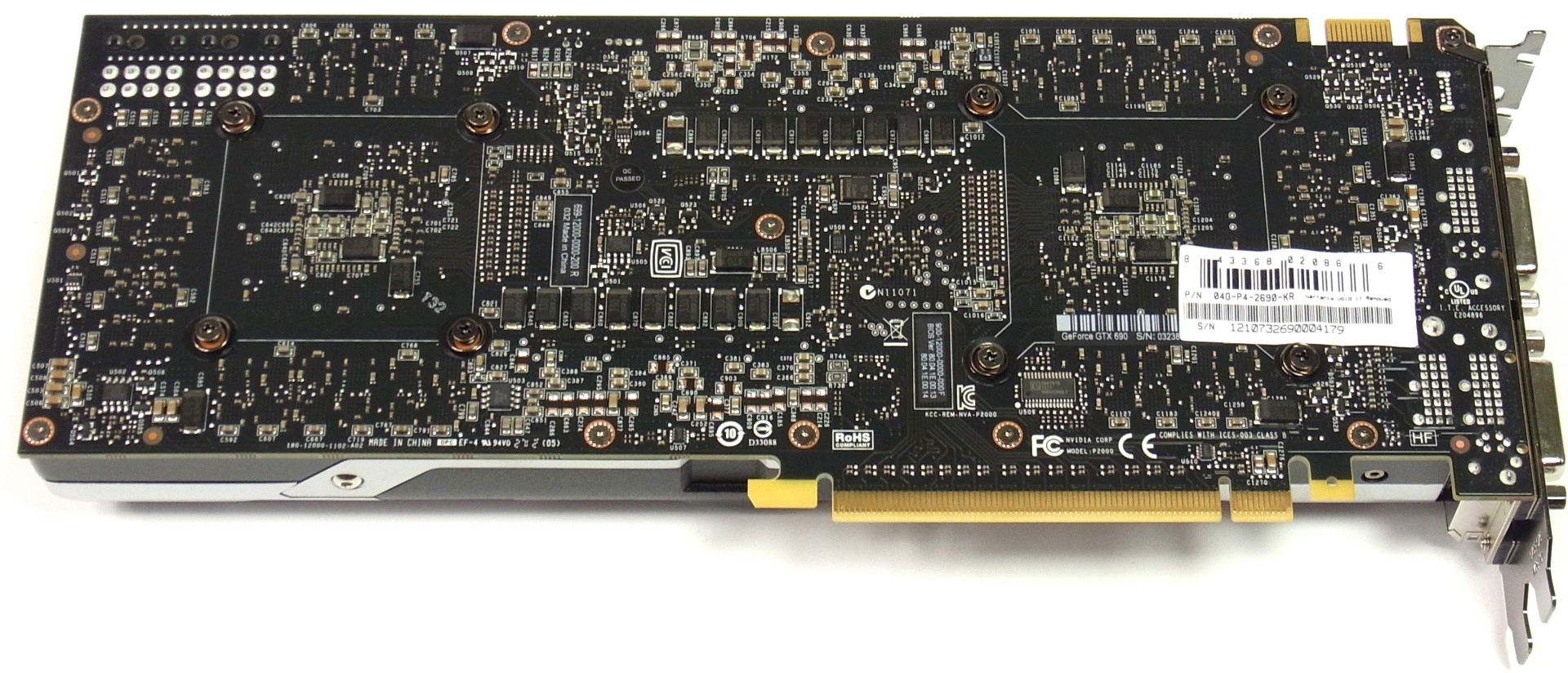

The GeForce GTX 690 sports two complete GK104 GPUs (the same ones that drive Nvidia's single-chip flagship, the GeForce GTX 680). Consequently, EVGA's card has a total of 3072 CUDA cores (1536 per GPU), 256 texture units (128 per GPU), and 64 ROPs (32 per GPU).

Nvidia no longer uses its old PCIe 2.0-constrained NF200 bridge chip to connect its graphics processors. The new switch is PLX's PEX 8747, which supports 48 lanes of third-gen PCI Express connectivity. As before, 16 lanes go to an upstream port, while 16 each form the downstream ports attached to the two GPUs. Rated latencies as low as 126 ns should help keep transfers between the host and the GPUs quick.
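To put that lane split in numbers, here is a rough Python sketch. It assumes PCI Express 3.0's 8 GT/s per-lane signaling rate and 128b/130b encoding (taken from the PCIe 3.0 specification, not from EVGA or PLX documentation); the port labels and the `link_bandwidth_gbs` helper are hypothetical names for illustration, and the results are raw line rates before protocol overhead.

```python
# Hypothetical lane map for a 48-lane PCIe 3.0 switch wired as described above:
# one x16 link up to the host, one x16 link down to each GK104.
SWITCH_LANES = 48
PORTS = {
    "upstream (host)": 16,
    "downstream (GPU 1)": 16,
    "downstream (GPU 2)": 16,
}

GT_PER_S = 8.0        # PCIe 3.0 signaling rate per lane, in GT/s
ENCODING = 128 / 130  # 128b/130b line-coding efficiency


def link_bandwidth_gbs(lanes: int) -> float:
    """Raw one-direction bandwidth of a PCIe 3.0 link in GB/s (no protocol overhead)."""
    return lanes * GT_PER_S * ENCODING / 8  # divide by 8: bits -> bytes


# Every switch lane is accounted for by the three x16 ports.
assert sum(PORTS.values()) == SWITCH_LANES

for name, lanes in PORTS.items():
    print(f"{name}: x{lanes}, ~{link_bandwidth_gbs(lanes):.2f} GB/s per direction")
```

Each x16 link works out to roughly 15.75 GB/s per direction, and because both GPUs hang off the same switch, they share a single x16 uplink to the host.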


Each GPU is mated to 2 GB of GDDR5 memory over a 256-bit interface. Again, this is the same configuration we know from Nvidia's GeForce GTX 680, so each GPU gets the same 192 GB/s of peak bandwidth.
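The 192 GB/s figure follows directly from the memory clock and bus width. A minimal sketch, assuming GDDR5's usual four data transfers per command-clock cycle (so the 1502 MHz memory clock corresponds to about 6008 MT/s effective); `gddr5_bandwidth_gbs` is a hypothetical helper name:

```python
def gddr5_bandwidth_gbs(mem_clock_mhz: float, bus_width_bits: int) -> float:
    """Peak GDDR5 bandwidth in decimal GB/s, assuming 4 transfers per memory clock."""
    effective_mts = mem_clock_mhz * 4        # 1502 MHz -> 6008 MT/s
    bytes_per_transfer = bus_width_bits / 8  # 256-bit bus -> 32 bytes
    return effective_mts * 1e6 * bytes_per_transfer / 1e9


per_gpu = gddr5_bandwidth_gbs(1502, 256)
print(f"per GPU: {per_gpu:.1f} GB/s")  # per GPU: 192.3 GB/s
```

Each GK104 addresses only its own 2 GB pool, so the card's 4 GB total and doubled aggregate bandwidth are never available to a single GPU.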

The cores themselves run slightly slower than the single-chip card's, though. Each GK104 on the GeForce GTX 690 operates at 915 MHz, rather than the 680's 1006 MHz. So long as Nvidia's 300 W TDP rating isn't exceeded, GPU Boost should be able to push clock rates up to 1019 MHz, which is only a little lower than the GeForce GTX 680’s maximum frequency.
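To put a number on "slightly slower", here is a quick sketch comparing the clock rates quoted above. The constant names and the `percent_change` helper are hypothetical; the MHz values come from the text.

```python
GTX680_BASE_MHZ = 1006   # single-GPU GeForce GTX 680 base clock
GTX690_BASE_MHZ = 915    # each GK104 on the GeForce GTX 690
GTX690_BOOST_MHZ = 1019  # GPU Boost ceiling cited for the GTX 690


def percent_change(new: float, old: float) -> float:
    """Relative change from old to new, in percent."""
    return (new / old - 1) * 100


base_deficit = percent_change(GTX690_BASE_MHZ, GTX680_BASE_MHZ)
boost_gain = percent_change(GTX690_BOOST_MHZ, GTX690_BASE_MHZ)
print(f"690 base vs 680 base: {base_deficit:.1f}%")  # 690 base vs 680 base: -9.0%
print(f"690 boost vs 690 base: +{boost_gain:.1f}%")  # 690 boost vs 690 base: +11.4%
```

In other words, the 690's base clock sits about 9% below the 680's, and GPU Boost can add roughly 11% on top of that base while the card stays within its power target.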

Two eight-pin connectors, together with the PCI Express slot, combine to deliver up to 375 W. This board's predecessor, the GeForce GTX 590, also hit that limit. But dual-GPU cards usually use a little less power than two equivalent single-GPU cards running in SLI. As such, 300 W could be a realistic figure for EVGA's GeForce GTX 690.
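The 375 W ceiling is simply the sum of the PCI Express power limits: 75 W from an x16 slot and 150 W from each eight-pin auxiliary connector (figures from the PCI Express electromechanical specification, not from EVGA). A small sketch with hypothetical constant names:

```python
SLOT_W = 75          # PCIe x16 slot power limit, in watts
EIGHT_PIN_W = 150    # limit per eight-pin PCIe auxiliary connector, in watts
EIGHT_PIN_COUNT = 2  # the GTX 690 has two eight-pin inputs
TDP_W = 300          # Nvidia's TDP rating for the GTX 690

budget_w = SLOT_W + EIGHT_PIN_COUNT * EIGHT_PIN_W
headroom_w = budget_w - TDP_W
print(f"delivery ceiling: {budget_w} W, headroom over TDP: {headroom_w} W")
# delivery ceiling: 375 W, headroom over TDP: 75 W
```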

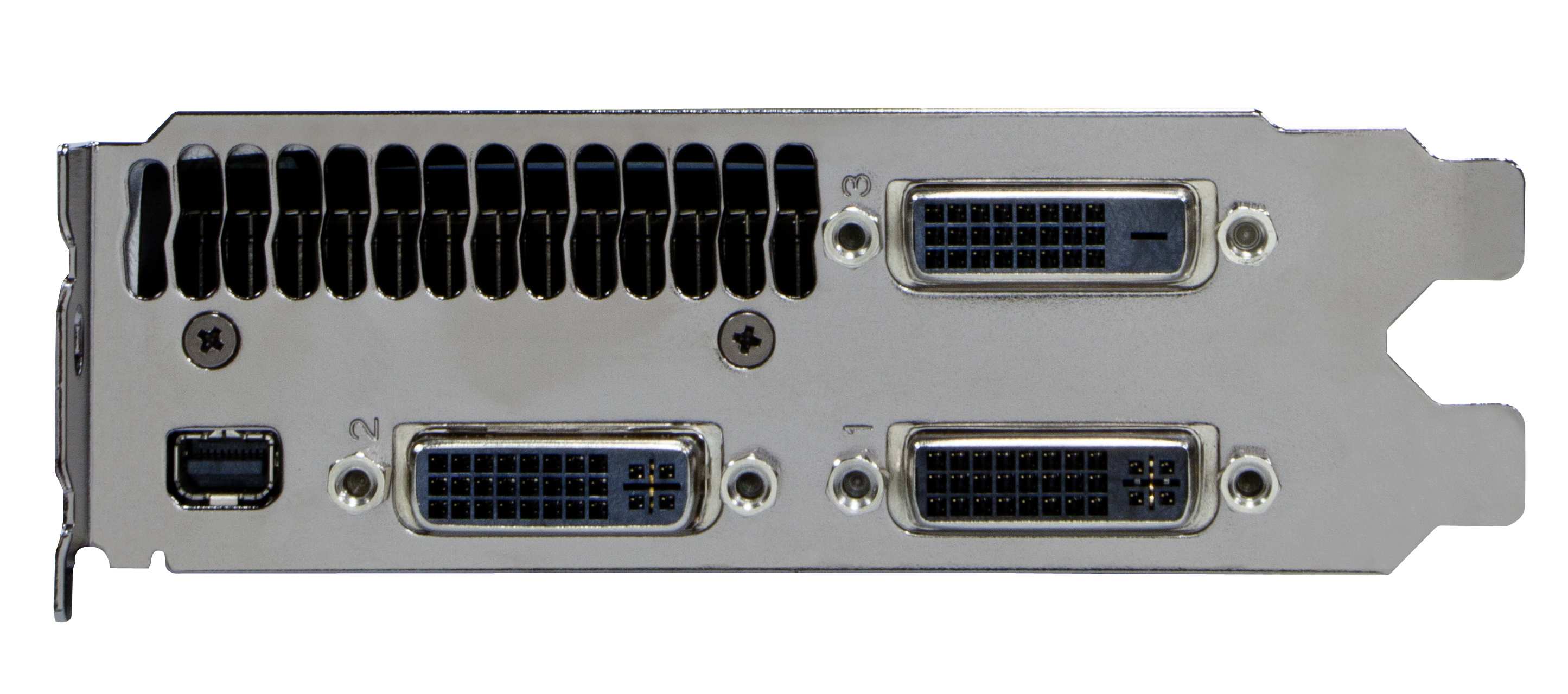

The GeForce GTX 690 has three DVI connectors and one DisplayPort output, allowing the card to drive up to four displays simultaneously.

EVGA is particularly proud of its warranty coverage, which lasts three years and is fully transferable. So, if you're the sort to buy the best of the best every year, whoever picks up your leftover GeForce GTX 690 on eBay in 2013 should still be covered. The company also offers warranty extensions out to five or 10 years, though we see absolutely zero value in protecting a decade-old graphics card.

Also high on EVGA's list of selling points is its Precision X software, which Nvidia used to illustrate the functionality of GPU Boost back when it launched the GeForce GTX 680. The software provides core and memory clock rate control, fan speed tuning, and real-time monitoring of the 690's vital attributes.

Lastly, we were excited to see EVGA launch controller software for the LED under this card's GeForce GTX logo (on the top edge of the card). Nvidia told us something like this was in development when we first reviewed the 690, but it wasn't ready yet. Used together with Precision X, the little utility can raise or lower the LED's brightness based on GPU utilization, clock rate, or frame rate. Pretty cool, though it only works with EVGA's GeForce GTX 690.
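The article doesn't say how the utility turns a metric into a brightness level, so the following is only a hypothetical sketch: a clamped linear mapping from a reading (GPU utilization, clock rate, or frame rate) onto a brightness percentage. The function name, the 10% floor, and the example ranges are all assumptions, not EVGA's implementation.

```python
def led_brightness(reading: float, low: float, high: float,
                   min_pct: int = 10, max_pct: int = 100) -> int:
    """Hypothetical mapping: scale a reading in [low, high] to a brightness percentage.

    Readings outside the range are clamped, so the LED never drops below
    min_pct or rises above max_pct.
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    fraction = (reading - low) / (high - low)
    fraction = min(max(fraction, 0.0), 1.0)  # clamp to [0, 1]
    return round(min_pct + fraction * (max_pct - min_pct))


# Example ranges (assumed): utilization in percent, and a core clock between
# the GTX 690's 915 MHz base and 1019 MHz boost figures.
print(led_brightness(50, 0, 100))       # 55
print(led_brightness(1019, 915, 1019))  # 100
```

A linear ramp with clamping is just the simplest choice here; a real controller might smooth readings over time so the LED doesn't flicker with every frame-rate dip.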


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


mayankleoboy1 IMHO, the GTX690 looks best. There is something really alluring about shiny white metallic shine and the fine metal mesh. Along with the fluorescent green branding.

Maybe I am too much of a retro SF buff :)

Ironslice What's the most impressive is that the GTX 690 was made by nVidia themselves and not an OEM. Very nice and balanced card.

thanks for the in depth analysis with adaptive V-sync and radeon pro helping with micro stutter.

not to take away anything from the hard work performed; i would have liked to have seen nvidia's latest beta driver, 310.33, included also to see if nvidia is doing anything to improve the performance of their card instead of just adding 3d vision, AO, and sli profiles.

RazorBurn AMD's Dual GPU at 500+ Watts of electricity is out for me.. Too Much Power and Noise..

mohit9206 2 670's in sli is better than spending on a 690 and 2 7950's in Xfire is better than spending on a 7990. this way you save nearly $300 both ways