A 1400 MB/s SSD: ASRock's Z97 Extreme6 And Samsung's XP941

Z97 ushers in new and exciting ways to attach and use storage devices. With support for M.2 PCIe and SATA Express, two sides of the same SSD coin, Z97 improves on Z87. But not everywhere. ASRock adds some new tricks of its own to Z97, so we take a look.

Z97 Express: The Same Old Bandwidth Limitations

Not surprisingly, bandwidth through the Z97 Express platform controller hub to the host processor is limited by Intel's DMI interface, based on PCI Express 2.0. That connection won't be updated to third-gen transfer rates until Skylake, which is still two generations away. But Intel's mainstream desktop chipset doesn't just need the bandwidth advantages of PCIe 3.0; it could also really benefit from more lanes than the eight it currently offers.

We know this because we've already looked at how multi-drive SSD arrays on Intel's 6 Gb/s ports are cut off at the knees. Last year, with a stack of SSD DC S3500s and ASRock's C226 WS motherboard, I put together Six SSD DC S3500 Drives And Intel's RST: Performance In RAID, Tested, and the ceiling was made quite clear. Z87 Express offered six 6 Gb/s ports of connectivity, but three decent SSDs are enough to saturate the DMI's limited bandwidth. Sixteen hundred megabytes per second was basically the limit.
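The ~1600 MB/s figure lines up with DMI 2.0's link math. Here is a back-of-the-envelope sketch, assuming DMI 2.0 behaves like a PCIe 2.0 x4 link (5 GT/s per lane, 8b/10b encoding) and that a "decent" SATA SSD sustains about 550 MB/s of sequential reads. That per-drive figure is an assumption, not something measured in this story.

```python
import math

def link_mb_per_s(lanes, gt_per_s, payload_bits, line_bits):
    """Per-direction link bandwidth in MB/s after line encoding.

    Ignores packet and protocol overhead, so real transfers land lower.
    """
    # GT/s per lane -> usable Gb/s after encoding -> MB/s (8 bits per byte)
    return lanes * gt_per_s * 1000 * payload_bits / line_bits / 8

DMI2_MB_S = link_mb_per_s(4, 5.0, 8, 10)     # DMI 2.0 as a Gen2 x4 link, each way
SATA6G_MB_S = link_mb_per_s(1, 6.0, 8, 10)   # one SATA 6Gb/s port

OBSERVED_CEILING_MB_S = 1600   # what the RAID 0 testing actually hit
ASSUMED_SSD_MB_S = 550         # assumption: a typical SATA SSD's sequential read rate

# How many such drives it takes to reach the observed ceiling
drives_to_saturate = math.ceil(OBSERVED_CEILING_MB_S / ASSUMED_SSD_MB_S)

print(DMI2_MB_S, SATA6G_MB_S, drives_to_saturate)  # 2000.0 600.0 3
```

Measured against a 2000 MB/s theoretical link, the observed 1600 MB/s is about 80 percent; packet and protocol overhead, which this formula leaves out, plausibly accounts for much of that gap.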

Does any of that change in Z97 Express? How does the addition of SATA Express and a two-lane, second-gen PCIe M.2 slot sharing the same limited throughput alter the equation?

Of course, as we've established, ASRock's Z97 Extreme6 is unique. It does have a two-lane M.2 PCI Express 2.0 slot competing for the PCH's limited bandwidth. But it also employs what ASRock calls Ultra M.2, a second M.2 slot that taps into a Haswell-based CPU's 16 lanes of third-gen PCIe. This slot isn't affected by the chipset. And if you drop a PCIe M.2 drive into the Ultra slot, you can still use SATA Express, which is wired into Z97. In exchange, a graphics card can no longer use all 16 of the processor's lanes; it drops to eight. Perhaps more severely, SLI and CrossFire configurations are out, too.

But I'm a storage guy. Giving up complex graphics arrays is alright in my book.
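To put numbers on that trade, here is per-direction encoding math applied to each link involved. It assumes PCIe 3.0 signals at 8 GT/s with 128b/130b encoding and PCIe 2.0 at 5 GT/s with 8b/10b, and it treats DMI 2.0 as a Gen2 x4 link. Protocol overhead is ignored, so treat the results as upper bounds rather than expected throughput.

```python
def link_mb_per_s(lanes, gt_per_s, payload_bits, line_bits):
    """Per-direction bandwidth in MB/s after line encoding (protocol overhead ignored)."""
    return lanes * gt_per_s * 1000 * payload_bits / line_bits / 8

GEN2 = (5.0, 8, 10)      # PCIe 2.0 signaling: 5 GT/s, 8b/10b encoding
GEN3 = (8.0, 128, 130)   # PCIe 3.0 signaling: 8 GT/s, 128b/130b encoding

dmi_uplink = link_mb_per_s(4, *GEN2)   # DMI 2.0, treated as a Gen2 x4 link
pch_m2 = link_mb_per_s(2, *GEN2)       # chipset M.2 slot; its traffic also crosses the DMI
ultra_m2 = link_mb_per_s(4, *GEN3)     # Ultra M.2 slot on the processor's lanes
gpu_x8 = link_mb_per_s(8, *GEN3)       # graphics slot once Ultra M.2 takes its lanes

for name, mb_s in [("DMI 2.0 uplink (x4 Gen2)", dmi_uplink),
                   ("PCH M.2 slot (x2 Gen2)", pch_m2),
                   ("Ultra M.2 slot (x4 Gen3)", ultra_m2),
                   ("Graphics slot (x8 Gen3)", gpu_x8)]:
    print(f"{name:<26} {mb_s:6.0f} MB/s")

# Largest share of the DMI uplink a single chipset M.2 drive could claim
pch_m2_share = pch_m2 / dmi_uplink
```

On these numbers the Ultra slot's ceiling (about 3938 MB/s) is nearly double the DMI's 2000 MB/s, and a single x2 drive in the chipset slot could, at least on paper, claim half of the DMI uplink by itself.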

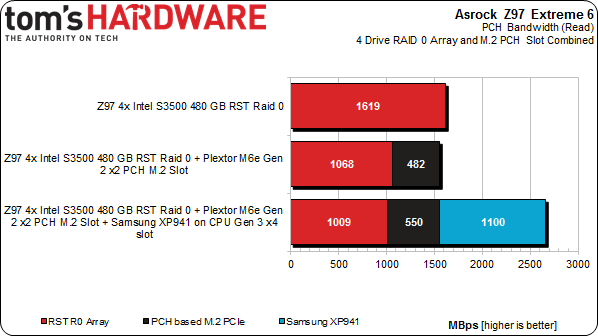

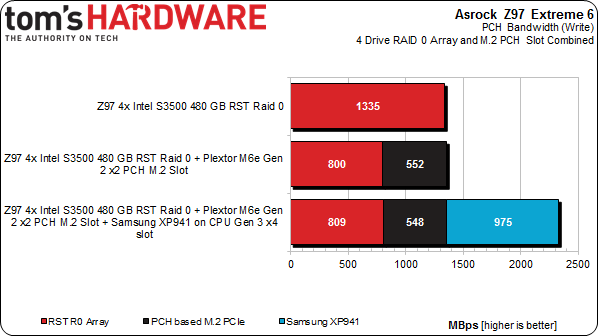

So, here's a breakdown of the DMI bandwidth problem. With four SATA 6Gb/s drives in RAID 0, we're limited to around 1600 MB/s. When you factor in the PCH-attached M.2 slot, available bandwidth doesn't change. But the distribution does. Finally, we add Samsung's XP941 in ASRock's special Ultra slot. It doesn't cannibalize Z97's throughput, but as we apply a workload to every device simultaneously, check out how much bandwidth we can push through the Samsung compared to Plextor's M6e and the four-drive array of SSD DC S3500s.

Each device gets a workload of 128 KB sequential data with Iometer 2010. We start with the four-drive RAID 0 array, which is already limited by the DMI interconnect. As expected, we see roughly 1600 MB/s. Then we add the two-lane M.2 slot hosting Plextor's M6e, a PCIe-based drive, and apply the read workload to it and the RAID 0 array simultaneously.
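Iometer is the tool actually used in these tests. Purely to illustrate what a "128 KB sequential" access pattern means, here is a minimal Python sketch that reads a file front to back in 128 KB requests and reports throughput. It is a simplified stand-in built on several assumptions: one outstanding request at a time (Iometer can keep many in flight), file-level rather than raw-device access, and no attempt to bypass the operating system's cache. Its numbers are therefore not comparable to the results in this story.

```python
import time

BLOCK_SIZE = 128 * 1024  # 128 KB per request, matching the access spec described above

def sequential_read_mb_s(path, block_size=BLOCK_SIZE):
    """Read `path` start to finish in fixed-size requests; return (bytes_read, MB/s).

    One request is outstanding at a time and the OS page cache is not bypassed.
    MB here means 10**6 bytes.
    """
    bytes_read = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:  # unbuffered: each read() is one OS read call
        while True:
            chunk = f.read(block_size)
            if not chunk:  # an empty result means end of file
                break
            bytes_read += len(chunk)
    elapsed = time.perf_counter() - start
    rate = bytes_read / 1e6 / elapsed if elapsed > 0 else float("inf")
    return bytes_read, rate
```

Calling it on a large test file (the path is up to you) returns the byte count and a MB/s figure; a real benchmark would also raise the queue depth and open the device so the cache is bypassed.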


Not surprisingly, total bandwidth still adds up to ~1600 MB/s. But it's split unevenly between the M.2 slot and SATA 6Gb/s ports. No matter what combination of storage you attach to Z97 Express, there's a finite ceiling in place. I concede that most desktop users won't ever see the upper bounds of what DMI 2.0 can do. But it's worth noting that Intel arms this chipset with more I/O options than the core logic can handle gracefully.

Then we add Samsung's XP941, which does its business free of the DMI's limitations. It alone delivers as much throughput as Intel's four SSD DC S3500s. That's notable because, when you think about it, a single SSD in the PCH-attached M.2 slot monopolizes as much as half of the DMI's available headroom. As storage gets faster and the DMI doesn't, an increasing number of bottlenecks surface.
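The behavior in these runs can be summarized with a deliberately simple model: everything behind the PCH shares one ceiling, and CPU-attached devices add on top of it. This sketch uses the ~1600 MB/s read ceiling observed above. The per-device figures are hypothetical placeholders, not measurements, and the model says nothing about how the PCH divides bandwidth among its devices (the split measured here was uneven).

```python
DMI_READ_CEILING_MB_S = 1600  # read ceiling observed through Z97's DMI in these tests

def platform_read_mb_s(pch_demands_mb_s, cpu_demands_mb_s):
    """Upper-bound aggregate read throughput for devices on both sides of the PCH.

    pch_demands_mb_s: what each chipset-attached device could deliver on its own
    cpu_demands_mb_s: the same for devices on the processor's PCIe lanes
    """
    through_dmi = min(sum(pch_demands_mb_s), DMI_READ_CEILING_MB_S)  # one shared cap
    direct = sum(cpu_demands_mb_s)  # CPU-attached devices never cross the DMI
    return through_dmi + direct

# Hypothetical standalone capabilities, for illustration only
raid0_array = 1600     # four SATA SSDs, already DMI-limited on their own
pch_m2_drive = 700     # a PCIe x2 M.2 SSD in the chipset slot
ultra_m2_drive = 1400  # a PCIe x4 M.2 SSD in the CPU-attached slot

print(platform_read_mb_s([raid0_array], []))                              # 1600
print(platform_read_mb_s([raid0_array, pch_m2_drive], []))                # 1600
print(platform_read_mb_s([raid0_array, pch_m2_drive], [ultra_m2_drive]))  # 3000
```

Adding a second chipset-attached device leaves the total unchanged and only changes who gets the bandwidth; adding a CPU-attached device raises the total.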

The same workload with writes instead of reads yields an even lower peak, topping out just north of 1300 MB/s. We saw the same thing last year in our Z87 Express-based RAID 0 story.

Tapping into the CPU's PCIe controller with a four-lane M.2 slot dangles a tantalizing option in front of storage enthusiasts like myself, eager to circumvent the Z97 chipset's limited capabilities. I understand that most enthusiasts, even the most affluent power users, won't have six SSDs hanging off their motherboards. But it really doesn't take much to hit the upper bound of what a PCH can do. And DMI bandwidth is shared with USB and networking too, so these numbers already assume those subsystems are sitting idle.

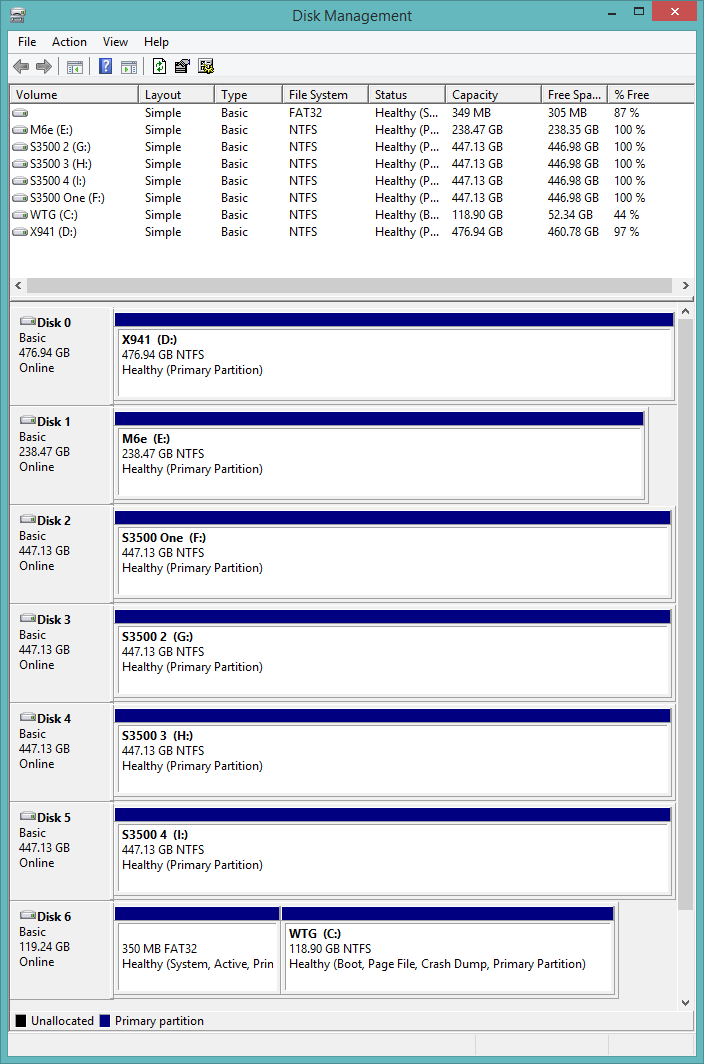

This is what the Disk Management console looks like with four SSDs on Intel's 6 Gb/s ports, Plextor's M6e in the PCH-attached M.2 slot, the USB 3.0 Windows to Go storage device used to boot the OS, and Samsung's XP941. Only the last device isn't sharing throughput through Intel's DMI.

Think you might try working around these issues by dropping a four- or eight-lane HBA onto your motherboard? Wrong. Remember, unless you're tapping into the processor's third-gen PCIe lanes, all expansion goes through Z97 Express, subjecting you to the same limitations. Professionals who need more should simply look to one of Intel's higher-end LGA 2011-based platforms.
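The HBA point is a bottleneck-on-a-path calculation: a device can never move data faster than the slowest link between it and the CPU. This small sketch assumes encoding-only link math (no protocol overhead) and two hypothetical cards, a PCIe 2.0 x4 HBA in a chipset-attached slot and a PCIe 3.0 x8 HBA on the processor's lanes. The 1600 MB/s figure is the DMI read ceiling observed in these tests.

```python
def link_mb_per_s(lanes, gt_per_s, payload_bits, line_bits):
    """Per-direction bandwidth in MB/s after line encoding (no protocol overhead)."""
    return lanes * gt_per_s * 1000 * payload_bits / line_bits / 8

def path_ceiling_mb_s(*hops_mb_s):
    """The slowest hop between a device and the CPU caps everything behind it."""
    return min(hops_mb_s)

DMI_OBSERVED_MB_S = 1600  # read ceiling measured through Z97's DMI

# Hypothetical HBA linked at PCIe 2.0 x4 in a chipset-attached slot
hba_link = link_mb_per_s(4, 5.0, 8, 10)                       # 2000.0 on its own
behind_pch = path_ceiling_mb_s(hba_link, DMI_OBSERVED_MB_S)   # capped by the DMI hop

# Hypothetical PCIe 3.0 x8 HBA on the processor's lanes: no DMI hop in the path
on_cpu_lanes = path_ceiling_mb_s(link_mb_per_s(8, 8.0, 128, 130))
```

Widening the card's own link does nothing for `behind_pch` while the DMI hop stays in the path; only moving the card onto the processor's lanes removes it.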

I remain critical of PCIe-attached storage without NVMe (the time for that is coming). However, AHCI doesn't stop Samsung's XP941 from demonstrating sexy performance characteristics. And ASRock's Z97 Extreme6 is really the only board able to expose its potential right now. Let's take a closer look and suss out the extent of its advantage in the Ultra M.2 slot.


aminebouhafs Once an SSD is plugged into the Ultra M.2 slot, the bandwidth between the central processing unit and the graphics processing unit is cut in half. Therefore, while the end user gets additional SSD performance, the end user may lose some GPU performance because of insufficient bandwidth between it and the CPU.

JoeArchitect Very interesting article and a great read. Thanks, Chris. I hope to see more like this soon!

Eggz This makes me excited for X99! With 40 (or more) lanes of PCI-e, there will be no need to compromise. We have to remember that the Z97 chipset is a consumer-grade product, so there almost have to be tradeoffs in order to justify stepping up to a high-end platform.

That said, I feel like X99, NVMe, and M.2 products will coincide nicely with their respective release dates. Another interesting piece of the puzzle will be DDR4. Will the new storage technology and next-generation CPUs utilize its speed, or, like DDR3, will it take several generations for other technologies to catch up to RAM speeds? This is quite an interesting time :)

Amdlova Chris, test the ASRock Z97 ITX... and another thing... my last 3 motherboards were from ASRock, and I want to say ASRock rocks!

Damn_Rookie While storage isn't the most important area of computer hardware for me, I always enjoy reading Christopher's articles. Very well written, detail orientated, and above all else, interesting. Thanks!

hotwire_downunder ASRock has come a long way. I used them a long time back with disappointing results, but I have started to use them again and have not been disappointed this time around.

Way to turn things around, ASRock! Cheap as chips and rock steady!

alidan @aminebouhafs If I remember right, didn't Tom's show how much performance is lost when you tape over GPU contacts to emulate having half or even a quarter of the bandwidth? If I remember right, back then the difference was only about 12% from 16 lanes down to either 4 or 8.

Eggz, replying to alidan:

PCI-e 3.0 x8 has enough bandwidth for any single card. The downside of giving PCI-e lanes to the SSD applies only to people who want to use multiple GPUs.

Still, though, this is just the mid-range platform anyway. People looking for lots of expansion end up buying the X chipsets rather than the Z chipsets because of the greater expandability. I feel like the complaint is really misplaced for Z chipsets, since they only have 16 PCI-e lanes to begin with.

cryan, replying to aminebouhafs:

Well, it'll definitely rule out some GPU configurations, same as any PCIe add-in card on the CPU's lanes. With so few lanes to work with on Intel's mainstream platforms, butting heads is inevitable.

Regards,

Christopher Ryan