Gaming At 1920x1080: AMD's Trinity Takes On Intel HD Graphics

Think you're pretty snazzy because your integrated graphics core plays mainstream games at 1280x720? We're on to bigger and better things, like modern titles at 1920x1080. Can AMD's Trinity architecture push high-enough frame rates to make this possible?

Benchmark Results: Battlefield 3, Crysis 2, And Witcher 2

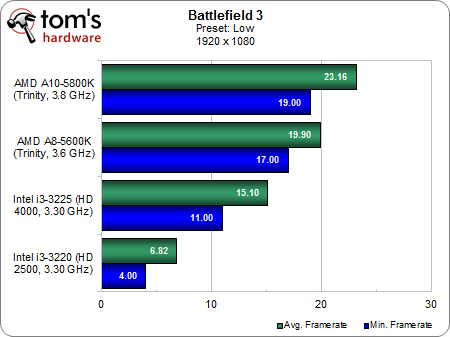

Battlefield 3 has two anti-aliasing settings: Anti-Aliasing Post, which is FXAA, and Anti-Aliasing Deferred, which is MSAA. The former costs relatively little performance, while the latter can have a huge impact on frame rates. When we compared no AA against 4x MSAA at 1920x1080 (with 16x AF and Ultra details enabled), MSAA cut frame rates by 30 to 40%, and that was with high-end discrete GPUs.
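To put that 30 to 40% penalty in perspective, here is a minimal sketch of the arithmetic. The 60 FPS baseline is a made-up illustrative number, not a result from our charts, and the function name is our own.

```python
def frame_rate_after_hit(baseline_fps: float, hit_fraction: float) -> float:
    """Return the frame rate that remains after a fractional performance hit."""
    if not 0.0 <= hit_fraction <= 1.0:
        raise ValueError("hit_fraction must be between 0 and 1")
    return baseline_fps * (1.0 - hit_fraction)


# Hypothetical baseline for illustration only (not a measured value).
baseline = 60.0

# The 30% and 40% bounds are the MSAA cost range quoted in the text.
for hit in (0.30, 0.40):
    remaining = frame_rate_after_hit(baseline, hit)
    print(f"{hit:.0%} hit on {baseline:.0f} FPS leaves {remaining:.1f} FPS")
```

The same proportional loss applied to an integrated GPU that already struggles to reach 30 FPS would leave very little to work with, which is why we tested with anti-aliasing turned off.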

Even with anti-aliasing effects disabled altogether, our integrated graphics engines didn't stand a chance at 1920x1080. AMD's new Trinity-based flagship, the A10-5800K, can't get anywhere near 30 FPS, and its minimum frame rate of less than 20 FPS is simply unacceptable. Intel's HD Graphics 4000 solution similarly fails to impress.
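As a rough sketch of how those two bars translate into a pass/fail check, the snippet below converts frame rates to frame times and tests a result against a 30 FPS average and a 20 FPS minimum. Treating those two figures as hard thresholds is our simplifying assumption, the function names are hypothetical, and the sample inputs are invented rather than taken from the charts.

```python
TARGET_AVG_FPS = 30.0  # assumed bar for average frame rate
MIN_FPS_FLOOR = 20.0   # assumed bar for minimum frame rate


def frame_time_ms(fps: float) -> float:
    """Convert a frame rate into the time spent on each frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def is_playable(avg_fps: float, min_fps: float) -> bool:
    """Return True only if both the average and the minimum clear their bars."""
    return avg_fps >= TARGET_AVG_FPS and min_fps >= MIN_FPS_FLOOR


# Invented sample results for illustration only.
for avg, low in ((35.0, 24.0), (22.0, 15.0)):
    verdict = "playable" if is_playable(avg, low) else "not playable"
    print(f"avg {avg:.0f} FPS ({frame_time_ms(avg):.1f} ms/frame), "
          f"min {low:.0f} FPS: {verdict}")
```

A 20 FPS minimum means individual frames can take 50 ms to arrive, which is where stutter becomes hard to ignore.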

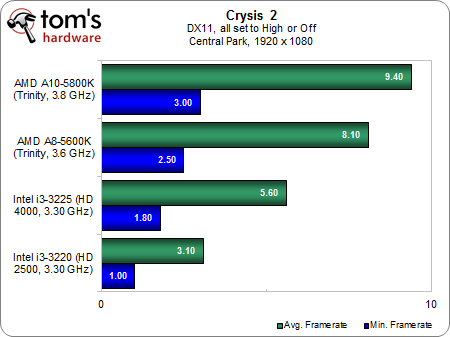

The situation is even worse in Crysis 2. There’s no point in belaboring this. The DirectX 11-based title is famous for its massively taxing load. Even AMD admitted going in that the game would crush any of the integrated graphics solutions we threw at it. So, we knew it would be bad. But how bad? Let’s just say we’d like to revisit this test in three or four years. Perhaps by then, Crysis 2 won’t crash on its first load attempt under Intel’s integrated graphics, as it did on both chips for us.

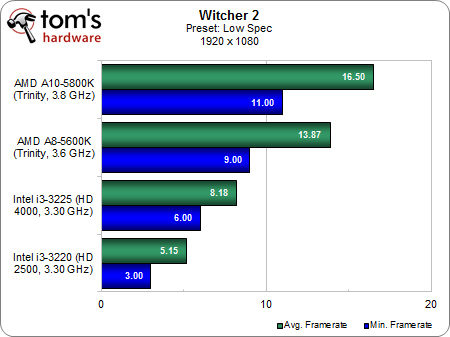

The Witcher 2: Assassins of Kings is a newcomer in our benchmarking line-up, added after receiving feedback from you, our readers, that it was something you wanted to see. With its admirable RED engine-fueled graphics, we wanted to find out if this medieval fight-fest would lean more toward Skyrim or Battlefield 3 in its weight on graphics hardware. Clearly, the answer carries the weight of a boat anchor. We played the Day of the Assault (Morning) battle and found that The Witcher 2 isn’t as demanding as Crysis 2, but it’s not far off.


azathoth: Seems like a perfect combination for a casual PC gamer. I'm just curious about the price of the Trinity APUs.

luciferano: They both have graphics that have HD in their name, but AMD's HD graphics are more *HD*, lol.

Nintendo Maniac 64: Err... did we really need both the A10-5800K and the A8-5600K? Seeing how both are already 100 W unlocked CPUs, surely something like an A10-5800K vs. a 65 W A10-5700 would have been more interesting for an HTPC environment...

mayankleoboy1: Consoles set the bar for game developers. These iGPUs are comparable to the consoles, and that's why games will run smoothly here.

With next-gen consoles coming out next year, game devs will target them. Hence the minimum standard for games will rise, making next-gen games much slower on the iGPUs. So both AMD and Intel will have to increase performance much more in the next 1-2 years.

tl;dr: next-gen games will run poorly on these iGPUs, as next-gen consoles will set the minimum performance standard.

mousseng, replying to mayankleoboy1 ("tl;dr : next gen games will run poorly on these igpu's as next gen consoles will set the minimum performance standard."):

Keep in mind, though, that that's exactly what's going to allow AMD and Intel to advance their hardware faster than games will, as they were discussing in the article (first page of the interview). Look how far Fusion and HD Graphics have come over the past 3 years, and look how long the previous console generation lasted. If that trend is anything to go by, I'm sure integrated graphics could easily become a viable budget gaming option in the next few years.

falchard: Since when has AMD or Nvidia actually taken on Intel graphics? That's a bit insulting considering the disproportionate results time and time again.

luciferano, replying to mayankleoboy1:

Actually, the A10 and A8 have somewhat superior graphics compared to current consoles. Current consoles can't even play at 720p as well as these AMD IGPs played at 1080p, despite being a more optimized platform, so I think that much is fairly obvious. Also, new games would simply mean dropping the resolution on these APUs. They wouldn't be unable to play new games, just probably not at 1080p, and perhaps not at 16xx by 900/10xx resolutions either.

Intel probably isn't very motivated by gaming performance for their IGPs and they're supposedly making roughly 100% performance gains per generation with their top-end IGPs anyway, so they're working on growing IGP performance. AMD also gets to use GCN in their next APU and I don't think that I need to explain the implications there, especially if they go the extra mile with using their high-density library tech too.