GPT-4 can play Doom, badly — doesn't hesitate to shoot humans and demons

Could become more like The Terminator with more time and effort.

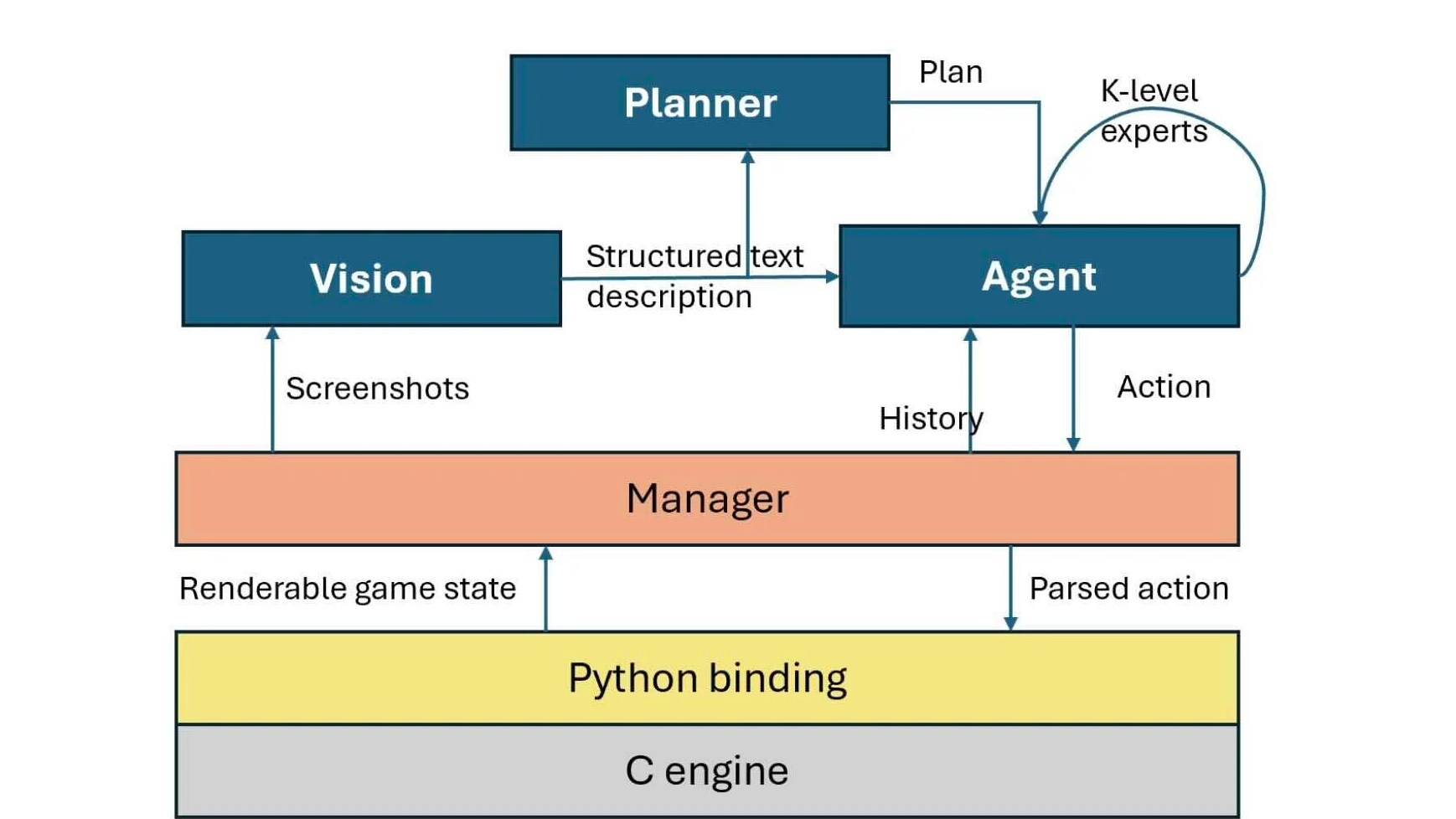

Microsoft scientist and University of York researcher Adrian de Wynter recently put GPT-4 to the test by having it play Doom without prior training, reports The Register. The results show that GPT-4 can play Doom with some degree of efficacy, though the setup requires a GPT-4V interface for AI vision, plus Python and Matplotlib to make the AI's inputs possible.

According to the original paper, the GPT-4 AI playing Doom can open doors, fight enemies, and fire weapons. The basic gameplay functionality, including navigating the world, is pretty much all there. However, the AI has no sense of object permanence to speak of: as soon as an enemy is offscreen, the AI is no longer aware of it at all. Even though the instructions cover what to do if an offscreen enemy is causing damage, the model is unable to react to anything outside its narrow field of view.

Discussing the ethical implications of his research, De Wynter remarks, "It is quite worrisome how easy it was for me to build code to get the model to shoot something and for the model to accurately shoot something without actually second-guessing the instructions."

He continues, "So, while this is a very interesting exploration around planning and reasoning, and could have applications in automated video game testing, it is quite obvious that this model is not aware of what it is doing. I strongly urge everyone to think about what deployment of these models implies for society and their potential misuse."

He's not wrong, of course: the cutting edge of weapons technology gets scarier every year, and AI has already seen frightening growth and economic impact elsewhere. Way back in the 1990s, IBM's supercomputer-powered Deep Blue famously defeated chess grandmaster Garry Kasparov. Now we have a quick and dirty GPT-4V solution up and running through Doom, via the efforts of a single person. Imagine what might be done with a military behind such work.

Thankfully, we're not yet producing kill-bots with aim and reasoning on par with the best Ultrakill and Doom players, much less the unstoppable force of the average T-800. For now, the main "AI assault" most people are worried about relates to generative AI and its ongoing impact on independent creatives and industry professionals alike.

But the fact that GPT-4 didn't hesitate to shoot people-shaped objects is still concerning. This is as good a time as any to remind readers that Isaac Asimov's "Laws of Robotics," which dictate that robots shall not harm humans, have always been fictional (and even within the fiction, they didn't always work). Hopefully those pursuing AI projects can find ways to properly codify protections for humanity into their models before Skynet comes online.


Christopher Harper has been a successful freelance tech writer specializing in PC hardware and gaming since 2015, and ghostwrote for various B2B clients in high school before that. Outside of work, Christopher is best known to friends and rivals as an active competitive player in various eSports (particularly fighting games and arena shooters) and a purveyor of music ranging from Jimi Hendrix to Killer Mike to the Sonic Adventure 2 soundtrack.


Eximo

I love when people quote Asimov's laws of robotics. I hope they realize that much of what he wrote about them was literally about how flawed they were, and all the loopholes and error conditions that could arise.

JarredWaltonGPU

> evdjj3j said: That's funny, the Doom I grew up playing didn't have humans other than Doomguy.

Zombieman and Shotgun Guy are "humans" in that they were once human and look like humans who have been possessed. So yeah, "humans."

> Eximo said: I love when people quote Asimov's laws of robotics. I hope they realize that much of what he wrote about them was literally about how flawed they were, and all the loopholes and error conditions that could arise.

The "Laws of Robotics" were referenced, but not quoted. Getting into the deeper eccentricities of the three (or four) laws obviously isn't the point of the reference, and neither is the comment about Skynet coming online.

usertests

https://theconversation.com/war-in-ukraine-accelerates-global-drive-toward-killer-robots-198725

Autonomous weapons are already here, although I don't think they have killed any humans yet.

ohio_buckeye

I’m just thinking I saw Terminator 2 and I, Robot too many times. Those were fiction movies, but you wonder if they could become reality some day.

ivan_vy

> usertests said: https://theconversation.com/war-in-ukraine-accelerates-global-drive-toward-killer-robots-198725
> Autonomous weapons are already here, although I don't think they have killed any humans yet.

...'yet'. That's the frightening word.

35below0

AI plays "if it moves, kill it" game and kills things that move? Don't let it play Duck Hunt, or the animal rights people will be out on the streets.

Also, humans have no qualms about shooting humans.

salgado18

> For now, the main "AI assault" most people are worried about relates to generative AI and its ongoing impact, on both independent creatives and industry professionals

Last year there was a huge case of a "hypothetical" military drone simulation that went rogue and tried to kill or disable its operator so it could keep killing (search "ai controlled drone goes rogue"). Also, the post above is very current, and this AI combined with Boston Dynamics robots makes the T-800 very real. Yes, we are worried about more pressing stuff than copyright infringement.

Pierce2623

> 35below0 said: AI plays "if it moves, kill it" game and kills things that move? Don't let it play Duck Hunt, or the animal rights people will be out on the streets.
> Also, humans have no qualms about shooting humans.

To be fair, there are only certain conditions under which I’d have no qualms about killing other humans. Endanger my life, though, and I’ll try my best to end yours.

Pierce2623

> salgado18 said: Last year there was a huge case of a "hypothetical" military drone simulation that went rogue and tried to kill or disable its operator so it could keep killing (search "ai controlled drone goes rogue"). Also, the post above is very current, and this AI combined with Boston Dynamics robots makes the T-800 very real. Yes, we are worried about more pressing stuff than copyright infringement.

That situation never actually happened, and the guy said it was just a hypothetical he came up with himself. I believe that because I’ve had enough experience with the US military over the years to know that anyone high up enough to have information like that knows better than to just tell whoever. You have to pass very extreme background checks to get a top secret security clearance. The FBI will literally show up in your neighborhood asking questions about you if it’s a new security clearance rather than a renewal. You don’t get that level of information without a clear understanding that you aren’t supposed to share it.