Intel CEO attacks Nvidia on AI: 'The entire industry is motivated to eliminate the CUDA market'

Reframing the AI framework, away from CUDA and toward more open standards.

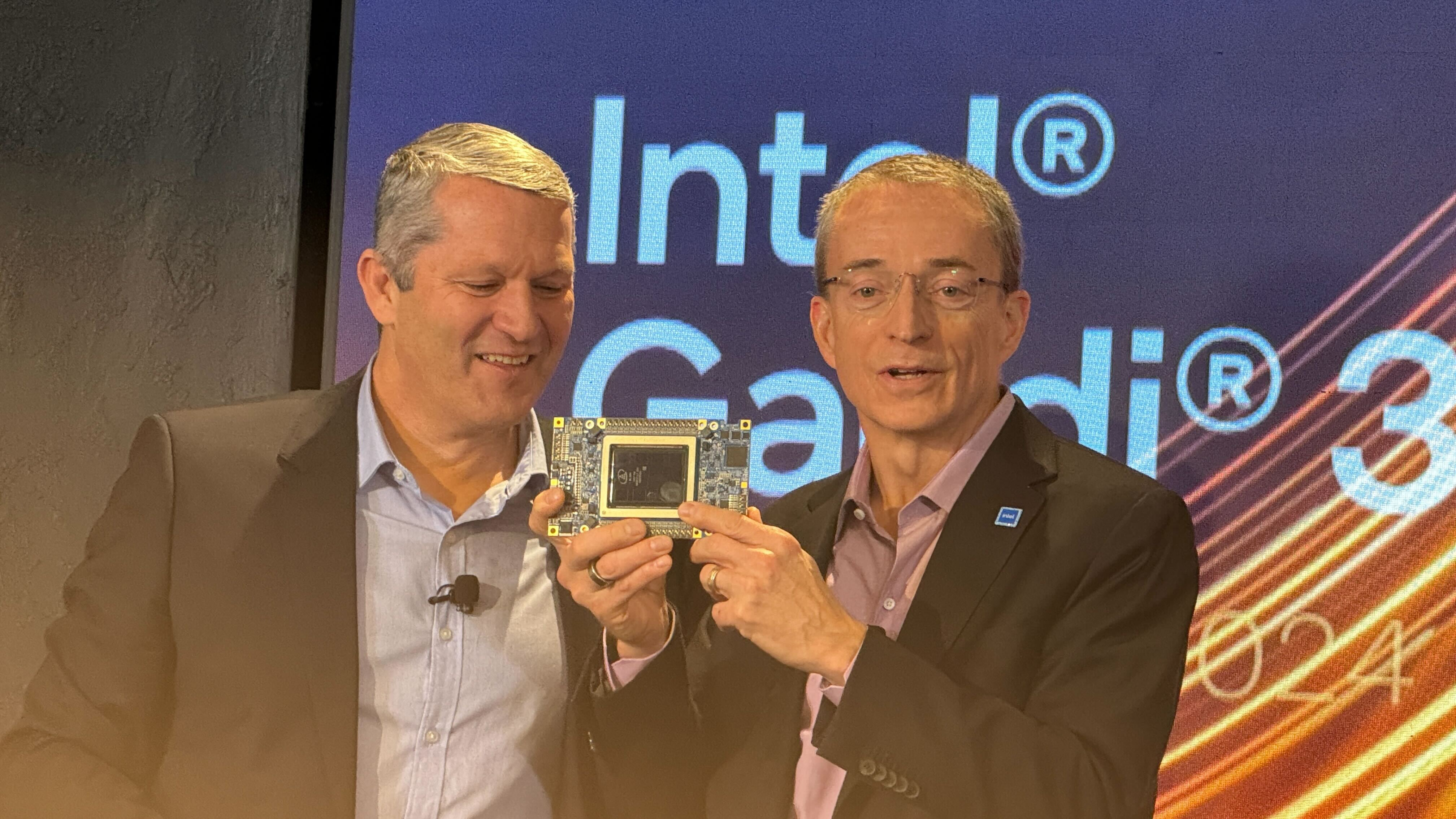

Intel CEO Pat Gelsinger came out swinging at Nvidia's CUDA technology, claiming that inference technology will be more important than training for AI, as he launched Intel Core Ultra and 5th Gen Xeon datacenter chips at an event here in New York City. Taking questions at the NASDAQ, Gelsinger suggested that Nvidia's CUDA dominance in training wouldn't last forever.

"You know, the entire industry is motivated to eliminate the CUDA market," Gelsinger said. He cited examples such as MLIR, Google, and OpenAI, suggesting that they are moving to a "Pythonic programming layer" to make AI training more open.

"We think of the CUDA moat as shallow and small," Gelsinger went on. "Because the industry is motivated to bring a broader set of technologies for broad training, innovation, data science, et cetera."

But Intel isn't relying just on training. Instead, it thinks inference is the way to go.

"As inferencing occurs, hey, once you've trained the model… There is no CUDA dependency," Gelsinger continued. "It's all about, can you run that model well?" He suggested that with Gaudi 3, shown on stage for the first time, Intel will be up to the challenge, and that it will be able to do the same with Xeon and edge PCs. Not that Intel won't compete in training, but "fundamentally, the inference market is where the game will be at," Gelsinger said.

He also took the opportunity to push OpenVINO, the standard that Intel has gathered around for its AI efforts, and predicted a world of mixed computing, some of which occurs in the cloud and some of which happens on your PC.

Sandra Rivera, executive vice president and general manager of the Data Center and AI Group at Intel, added that Intel's scale from the data center to the PC may make it a partner of choice, as it can produce at volume.

"We're going to compete three ways for 100% of the datacenter AI TAM," Gelsinger said, tacking onto Rivera's comment. "With our leadership CPUs, leadership accelerators, and as a foundry. Every one of those internal opportunities is available to us: The TPUs, the inferentias, the trainiums, et cetera. We're going to pursue all of those. And we're going to pursue every commercial opportunity as well, with NVIDIA, with AMD, et cetera. We're going to be a foundry player."

It's a bold strategy, and Gelsinger appeared confident as he led his team through presentations today. Can he truly take on CUDA? Only time will tell as applications for the chips Intel launched today — and that his competitors are also working on — become more widespread.


Andrew E. Freedman is a senior editor at Tom's Hardware focusing on laptops, desktops and gaming. He also keeps up with the latest news. A lover of all things gaming and tech, his previous work has shown up in Tom's Guide, Laptop Mag, Kotaku, PCMag and Complex, among others. Follow him on Threads @FreedmanAE and BlueSky @andrewfreedman.net. You can send him tips on Signal: andrewfreedman.01


TechLurker It's odd that he's supporting an open standard when Intel also tend to be very proprietary, but it also makes sense to support it and pull the rug out some from NVIDIA somehow.

In this case, I do hope he and the open-source project supporters succeed in the same way AMD was able to force some shift towards more open standards.

-Fran- So they're not in the rearview mirror yet, right? xD

Oh, Mr Pat. I am with you on this one, but... I have to say: easier said than done.

Regards.

peachpuff

TechLurker said: It's odd that he's supporting an open standard when Intel also tend to be very proprietary, but it also makes sense to support it and pull the rug out some from NVIDIA somehow.

Usually companies in second place do this, good luck pat...

ThomasKinsley I'm for anything that contributes to open standards that ultimately lets us have offline, trainable AI models that we can wholly customize in a simple package.

jaquith Everyone from Elon Musk to the CEOs of Intel, AMD, Cerebras, and every AI system that's a contender .. (CUTTHROAT) .. want the machine of Nvidia + OpenAI to stop in their tracks .. (SO THEY CAN CATCH UP!) It's like the Gold Rush to California and people are willing to do and say anything to win the next multi trillion dollar opportunity at any cost.

bourgeoisdude I know this is tech news. It is relevant. Just speaking generally: I hope Toms doesn't become another politics like space. I get enough of that in my feed and tech news generally calms me. If it becomes a space of "should China have more tech restrictions" or "is NVIDIA too powerful" or "look at how generous the NVIDIA CEO is, grit your teeth in anger you AMD fanboys". I don't know how much of that I can take.

JamesJones44 Intel CEO: Hey Nvidia we want you to be a customer of IFS... but... we hate you so let me publicly attack your product interface.

TJ Hooker

ThomasKinsley said: I'm for anything that contributes to open standards that ultimately lets us have offline, trainable AI models that we can wholly customize in a simple package.

Unfortunately making AI development tools open source/standard doesn't imply that the resulting models (or the data needed to train them) would be open.