Windows Copilot will add GPU support in a future release — Nvidia details the advantages of high performance GPUs for AI workloads and more

Firing up a GPU versus an NPU will still create a massive drain on your laptop battery.

When Microsoft revealed the first Copilot+ laptops, all running Qualcomm Snapdragon X Elite processors, it wasn't just a slap in the face to AMD and Intel — Nvidia also got snubbed. Because Nvidia is the biggest name in the AI space right now, at least from the hardware side of things, many wondered why Copilot wasn't also able to run on powerful GPUs. Wonder no longer, as Nvidia announced at Computex 2024 that it's collaborating with Microsoft to bring the Copilot runtime to GPUs sometime later this year.

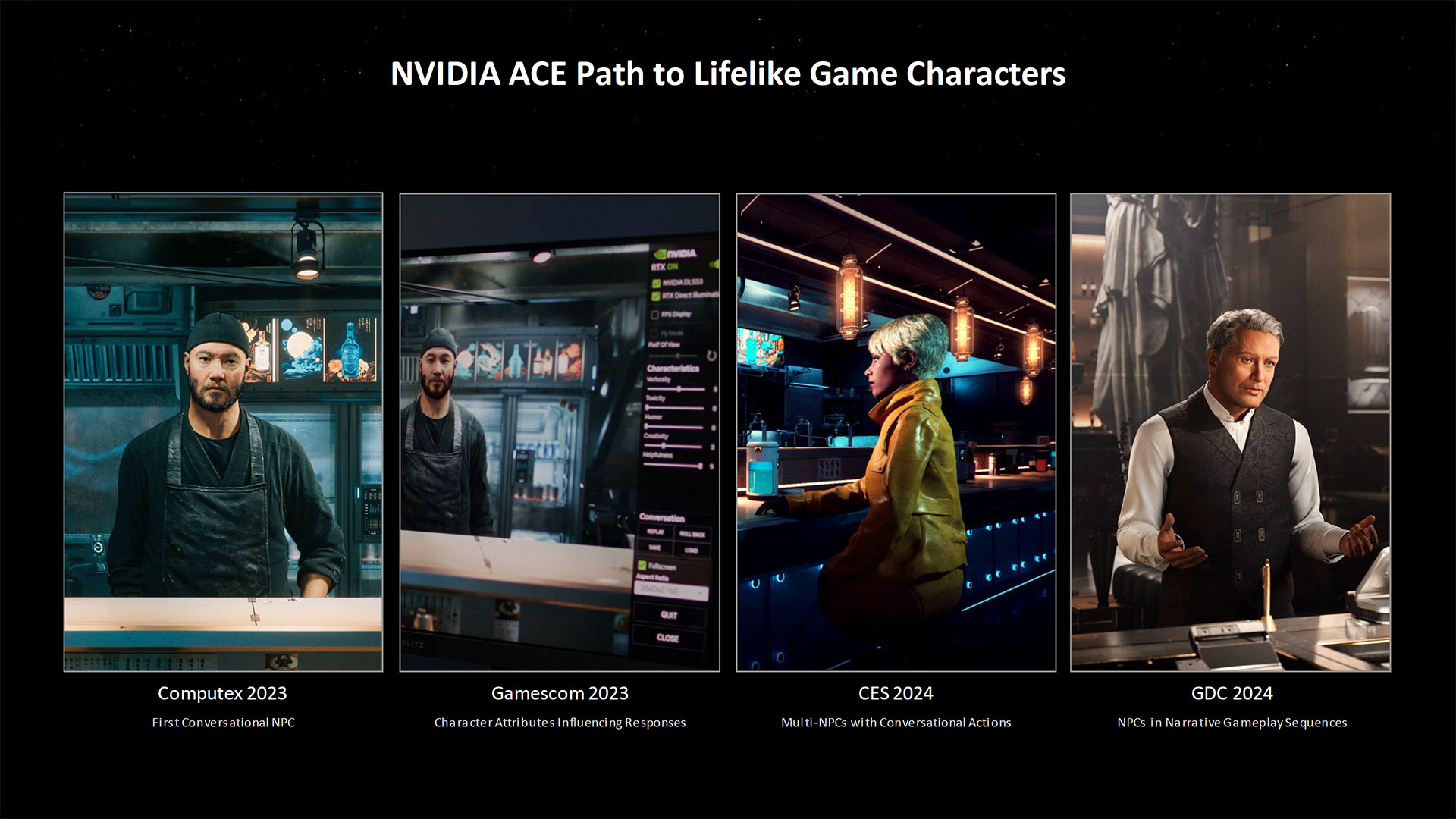

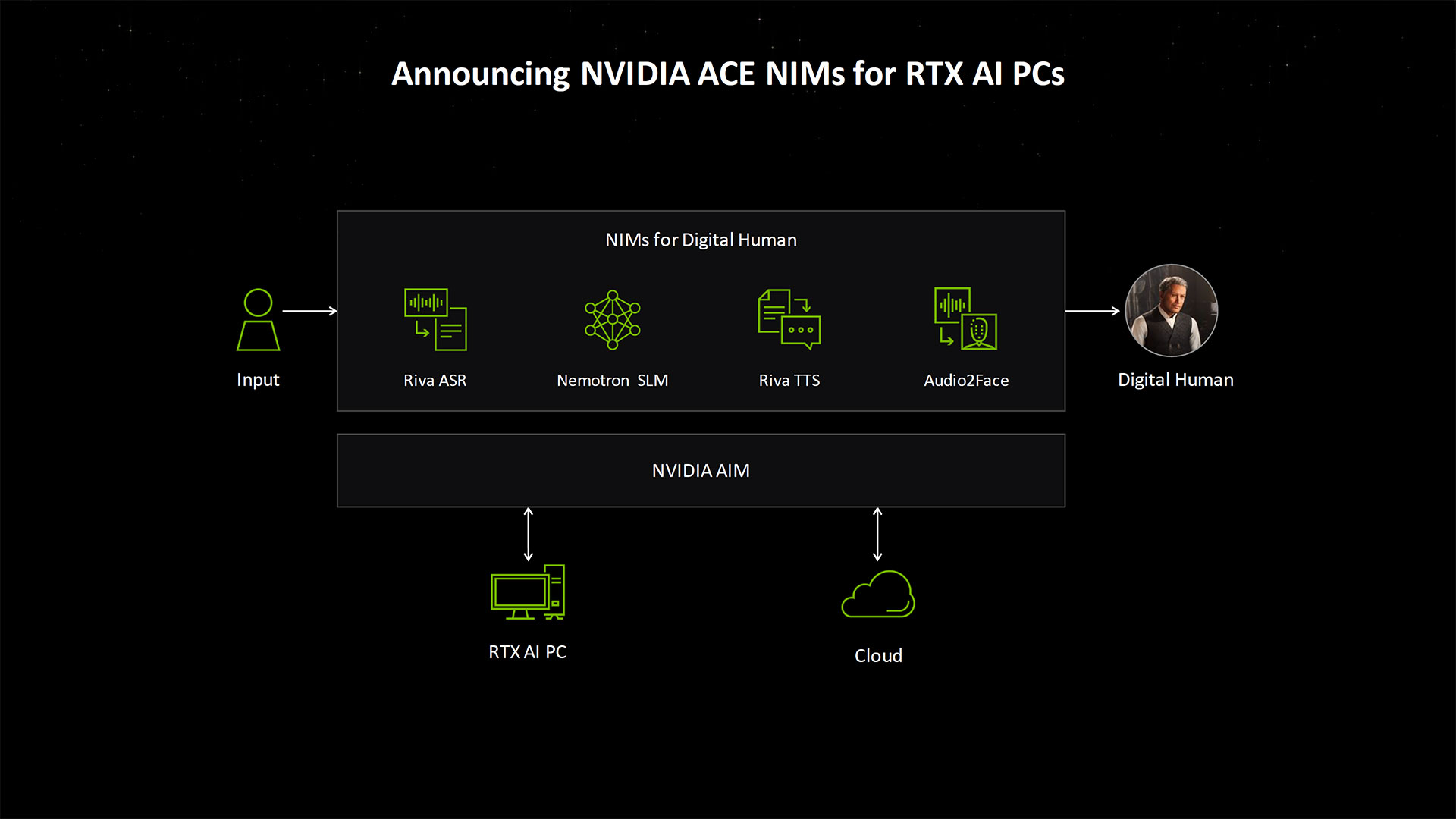

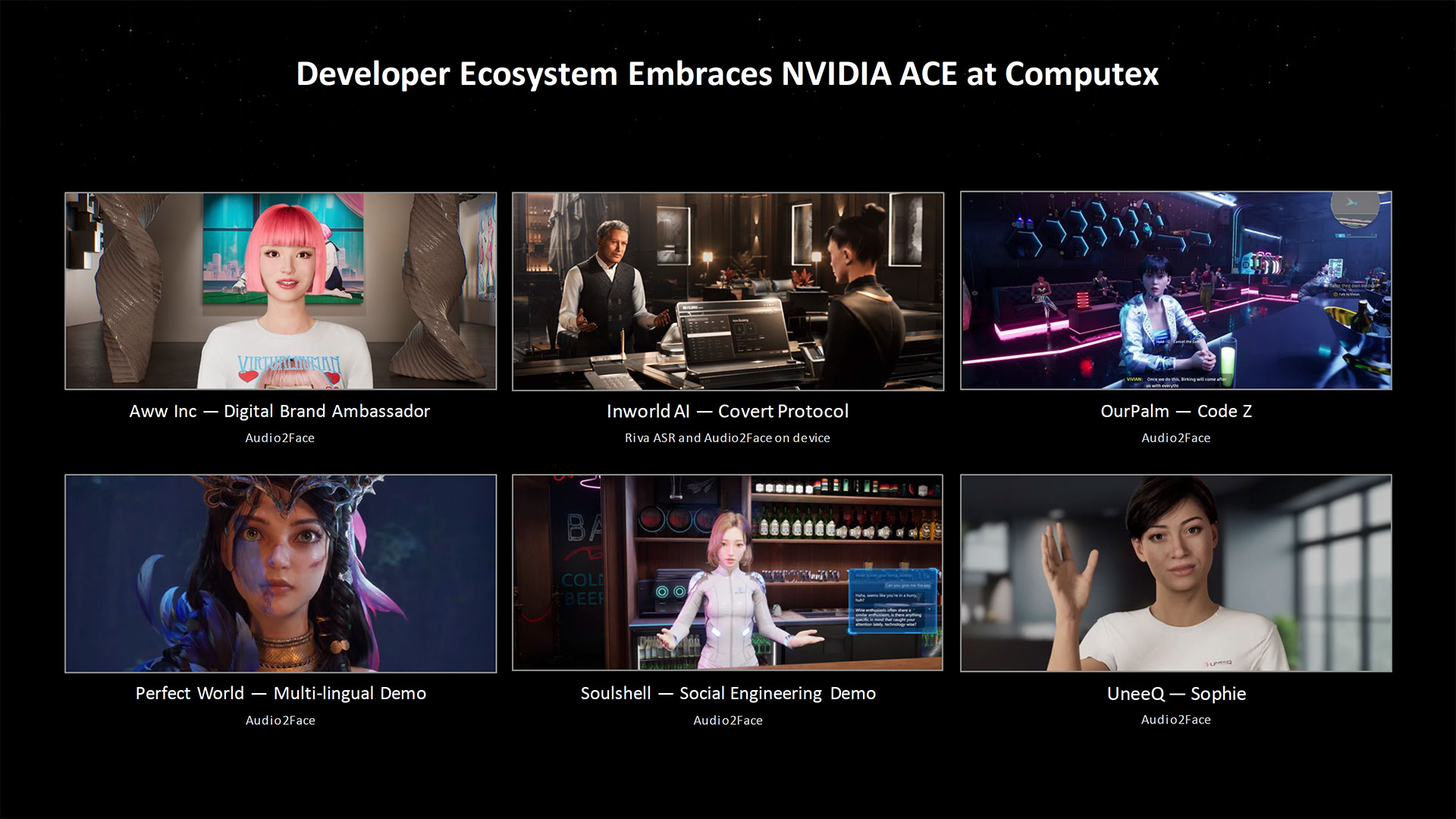

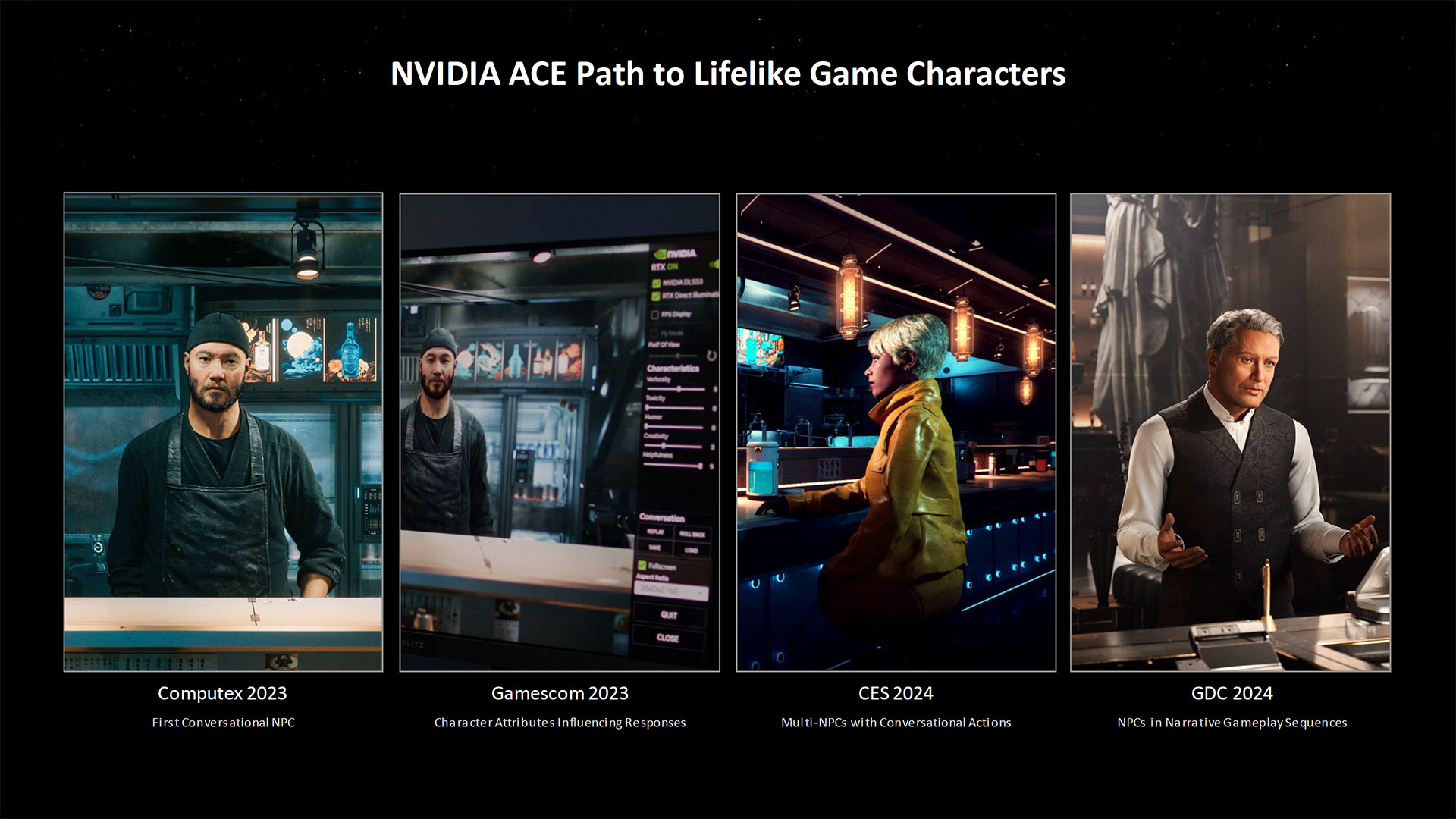

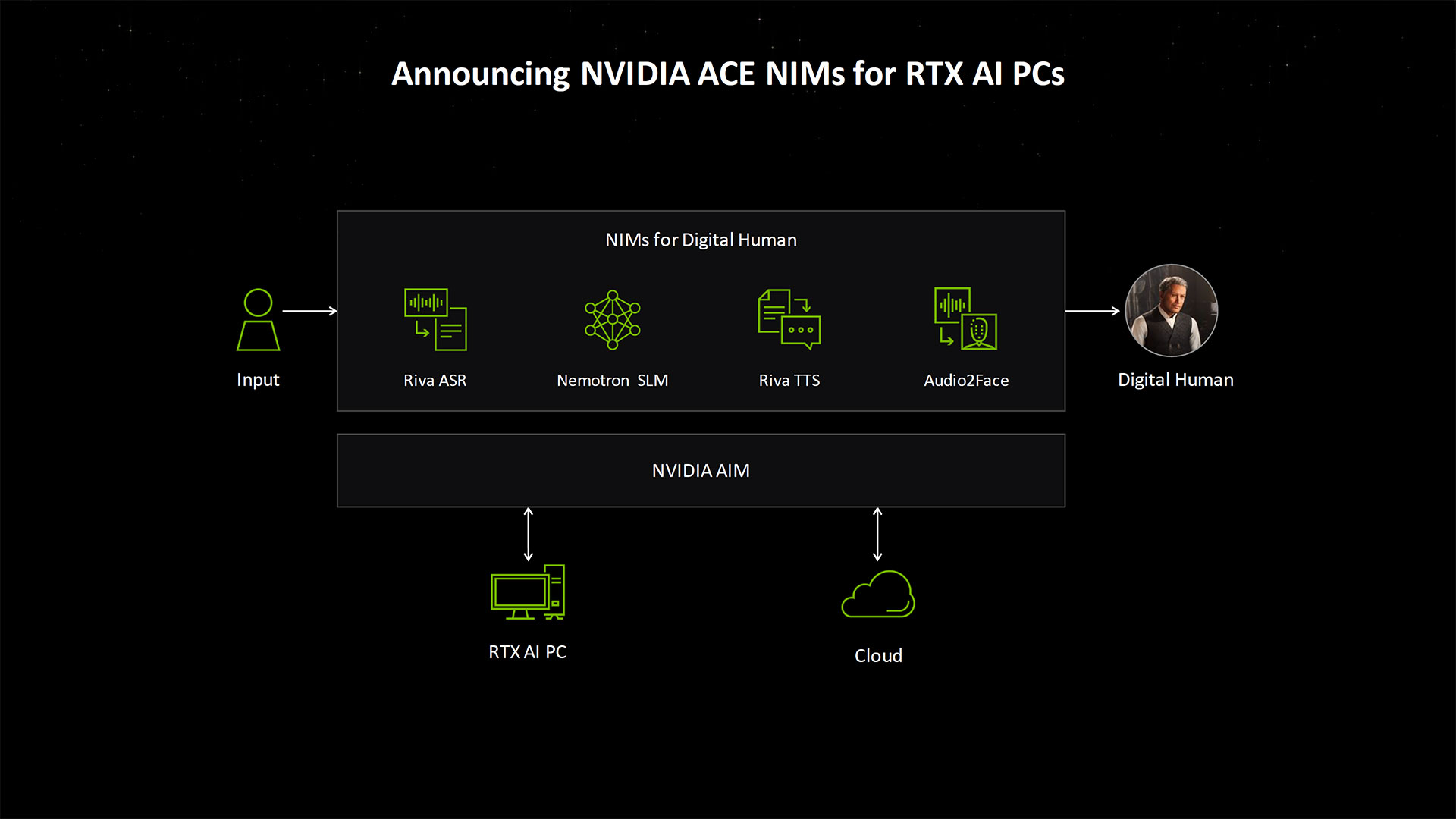

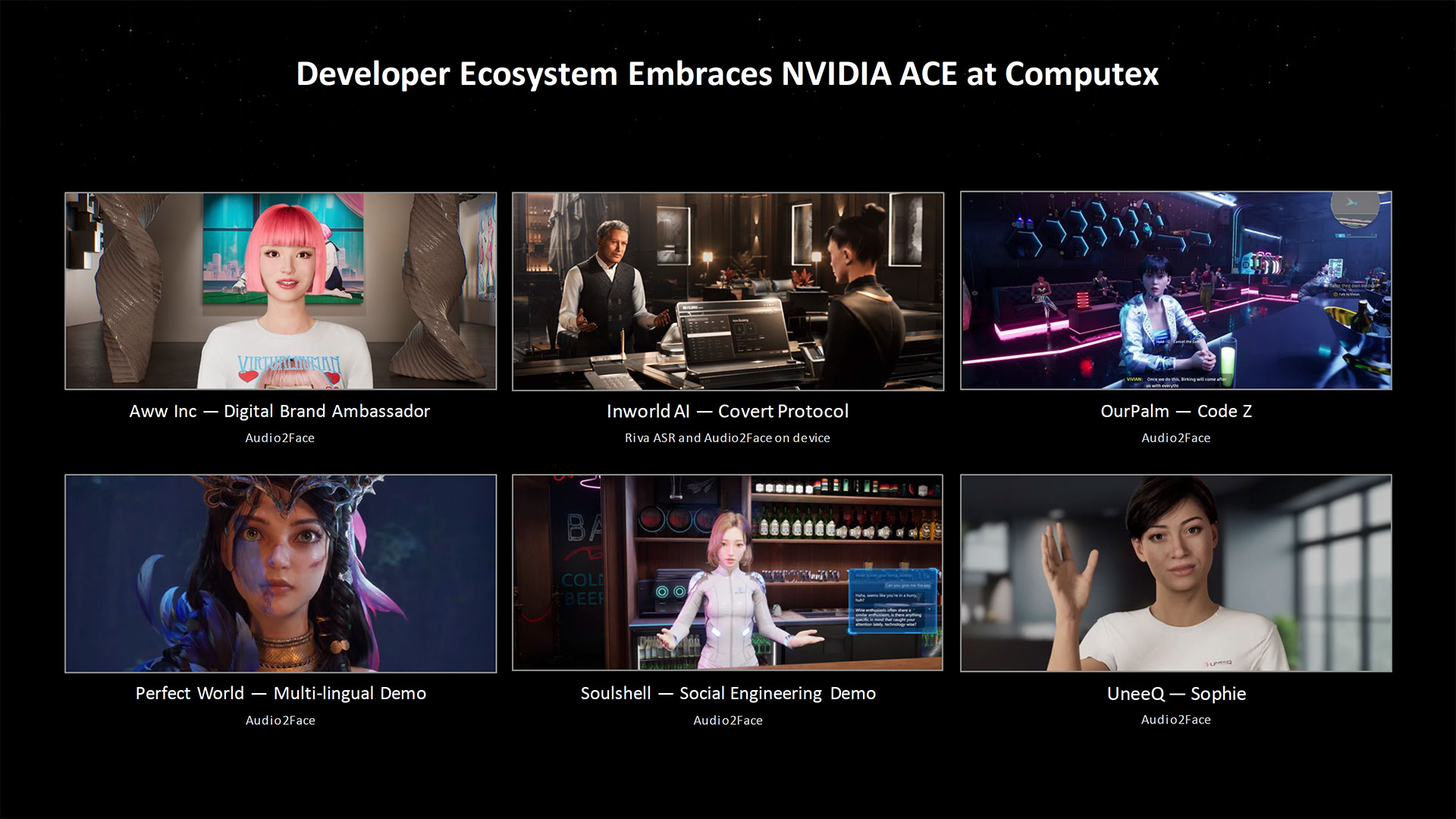

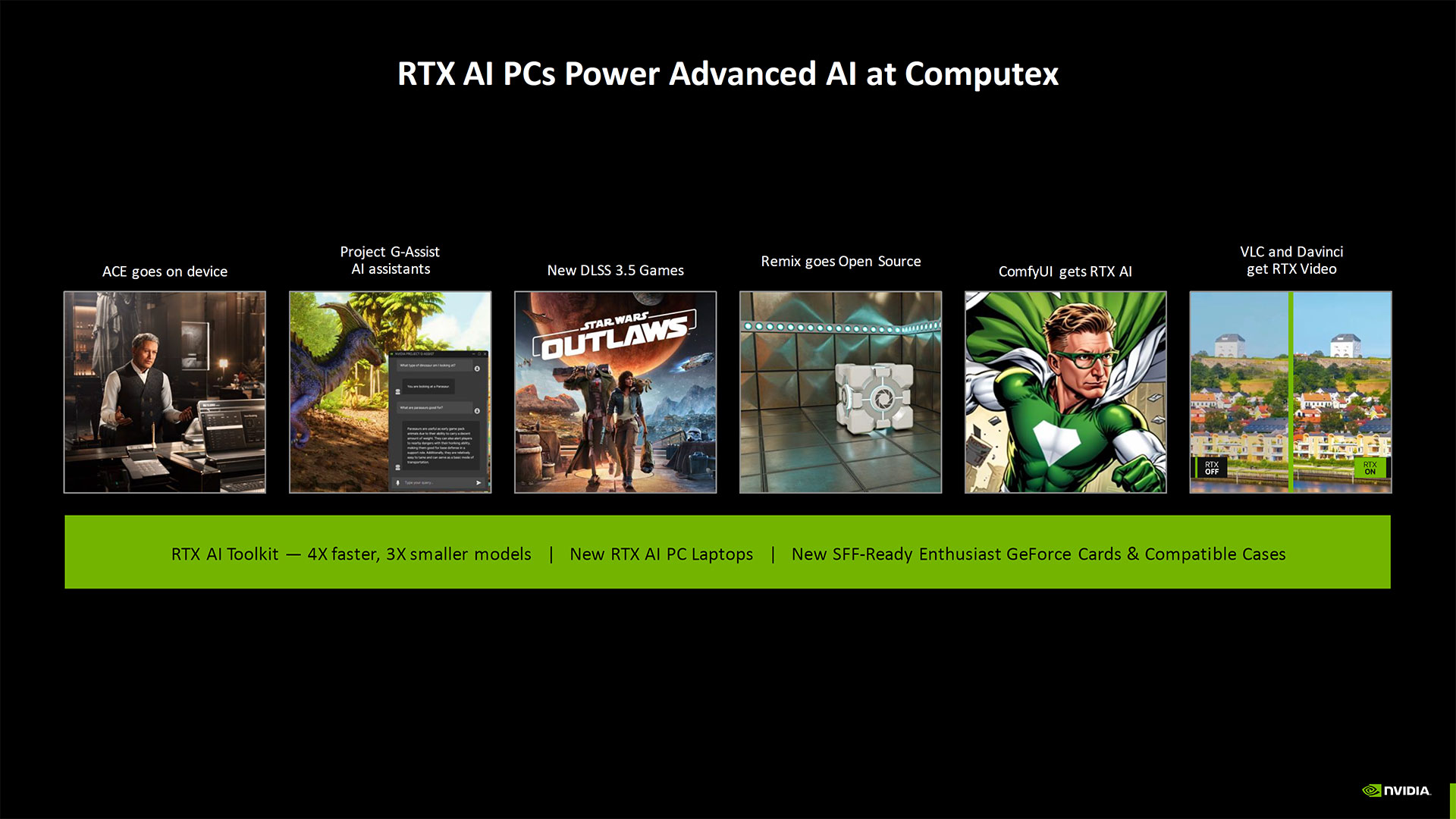

There's a lot of AI news coming from Nvidia, in fact. Some of it is just updates on things we've heard about before, like the latest demos of ACE (Avatar Cloud Engine), which has been going through various iterations for more than a year. Nvidia also wanted to let everyone know that there will be Windows Copilot+ capable laptops with RTX 40-series GPUs in the near future, powered, somewhat ironically, by rival chips from AMD and Intel. But hey, there's still potential for a Windows AI laptop running an Arm processor with an Nvidia GPU to ship sometime next year.

So yes, that's the gist of the announcement: Laptops using AMD's upcoming Zen 5 "Strix Point" processors, aka Ryzen AI CPUs, will be available with Nvidia RTX 40-series GPUs. Let's also spoil the surprise by saying that there will also be, at some point later this year, Copilot+ laptops equipped with Intel's Lunar Lake processors and RTX 40-series GPUs. And after that, once the Blackwell RTX 50-series GPUs begin shipping and eventually come to laptops, we'll see Copilot+ PCs with those as-yet-unannounced chips as well.

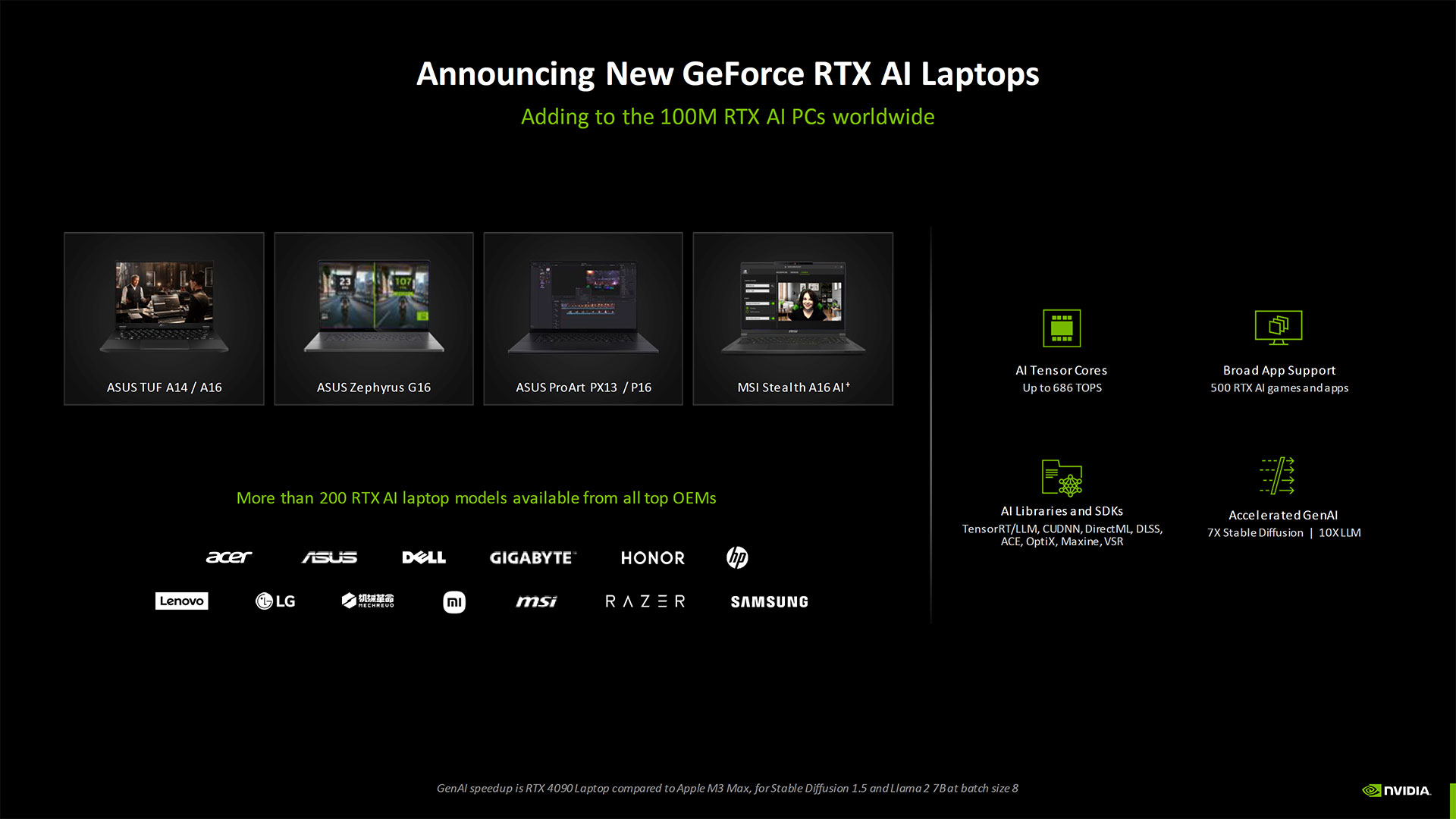

Nvidia provided the above images of five laptops (four from Asus and one from MSI), which will likely be some of the first Copilot+ laptops to have RTX GPUs. It also has a new term, "RTX AI laptop," which basically means any laptop shipped in the past year or so with an RTX 40-series dedicated graphics card, ranging from the RTX 4050 Laptop GPU up through the RTX 4090 Laptop GPU. Every major Windows laptop manufacturer has such a system, and so there are "more than 200 RTX AI laptop models available" already.

What's interesting is that in an earlier briefing, Nvidia showed all five of these laptops as "RTX AI | Copilot+" in a presentation. The final version of the slide (shown below) dropped any mention of Copilot+, as apparently Nvidia wants to focus more on the RTX AI laptop branding.

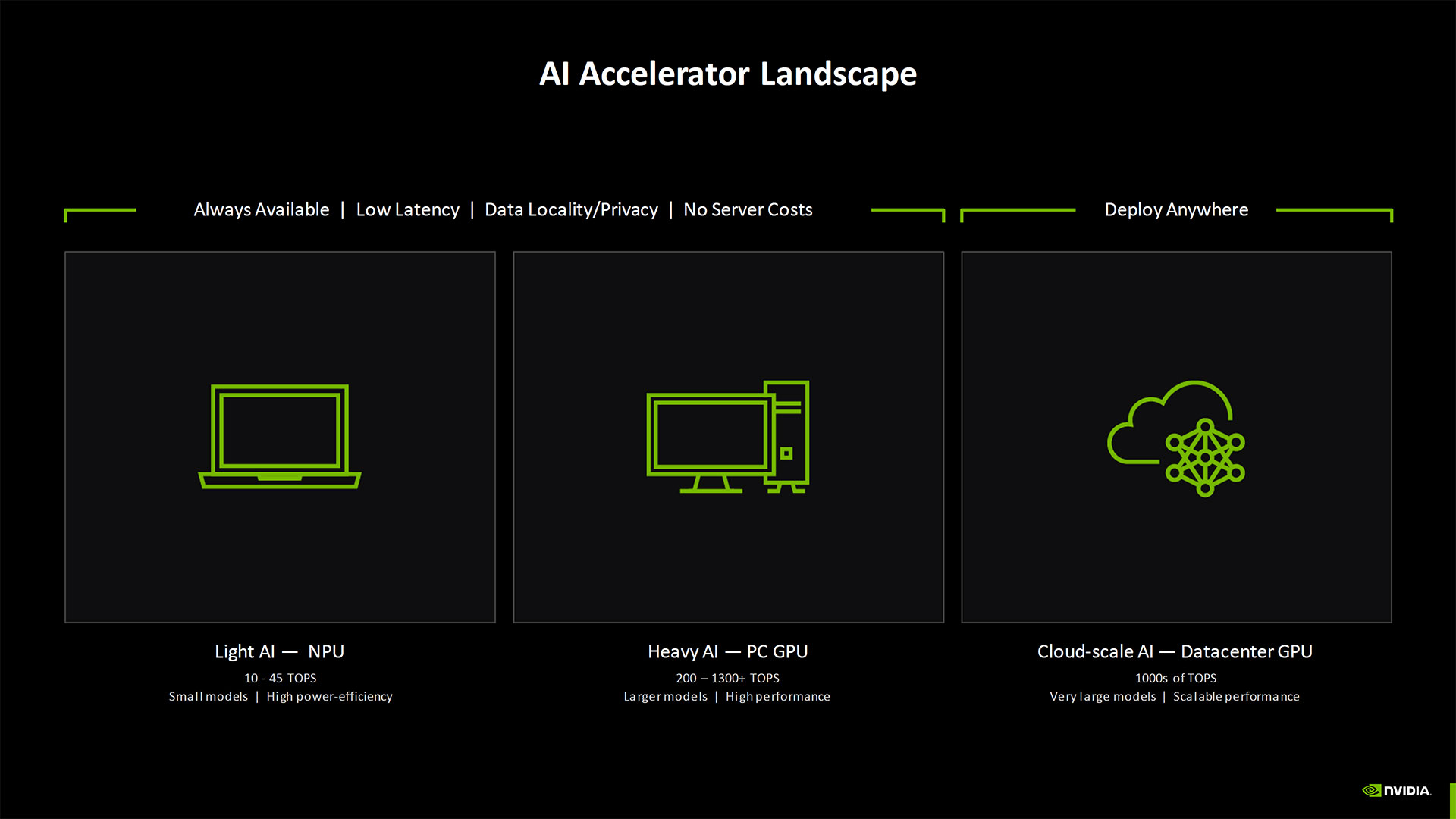

You can understand Nvidia's side of things. Here we have "AI PCs" that provide anywhere from 10 to 45 teraops (TOPS) of INT8 compute running on an NPU (Neural Processing Unit). But Nvidia's RTX 40-series GPUs have already been far exceeding that level of performance for nearly two years now, and the previous generation RTX 30-series GPUs also offered plenty of TOPS (though Nvidia is now focused more on the future).

The current 40-series GPUs range in AI TOPS from around 200 TOPS on the RTX 4050 Laptop GPU up to more than 1300 TOPS for the desktop RTX 4090. For reference, the RTX 30-series ranged from around 23 TOPS (mobile RTX 3050 at base clock) to as high as 320 TOPS on the RTX 3090 Ti, and double those numbers for sparse operations. Even the RTX 20-series launched back in 2018 delivered potentially hundreds of TOPS of compute, ranging from 59 TOPS on the RTX 2060 laptop GPU up to 215 TOPS on the RTX 2080 Ti.

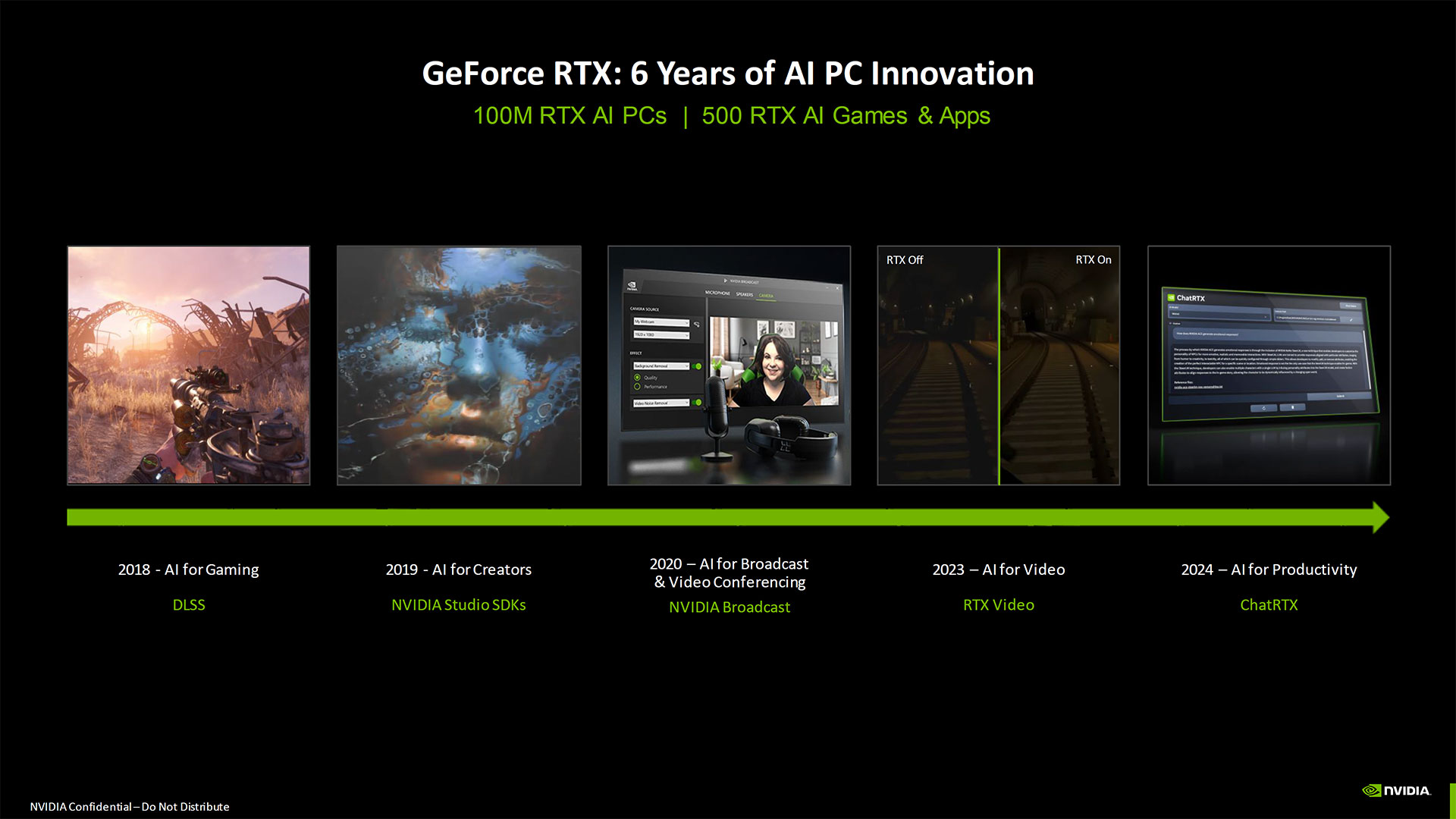

Which means, if you were to use Nvidia RTX GPUs for AI, we've had higher than Copilot+ levels of compute performance on tap for at least the past six years. We just weren't really doing much with it, other than running DLSS in games — and Nvidia Broadcast for video and audio cleanup. And Nvidia has plenty of other AI-related tech still in the works.
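As a rough sanity check on that comparison, the short Python sketch below divides the approximate TOPS figures quoted above by 45 TOPS, the upper NPU figure cited earlier. The names `GPU_TOPS`, `NPU_TOPS`, and `ratio_to_npu` are hypothetical, the choice of 45 as the baseline is an assumption made for illustration, and the inputs are the article's approximate numbers rather than measured results.

```python
# Approximate INT8 TOPS figures quoted in the article (not measurements).
GPU_TOPS = {
    "RTX 2060 Laptop": 59,
    "RTX 2080 Ti": 215,
    "RTX 3050 Laptop (base clock)": 23,
    "RTX 3090 Ti": 320,
    "RTX 4050 Laptop": 200,
    "RTX 4090 (desktop)": 1300,
}

# Assumed baseline: the upper end of the 10 to 45 TOPS NPU range.
NPU_TOPS = 45


def ratio_to_npu(gpu_tops: float, npu_tops: float = NPU_TOPS) -> float:
    """Return how many times the NPU baseline a GPU's TOPS figure is."""
    return gpu_tops / npu_tops


if __name__ == "__main__":
    for name, tops in GPU_TOPS.items():
        print(f"{name}: {tops} TOPS, {ratio_to_npu(tops):.1f}x the NPU baseline")
```

On these figures, every GPU listed except the mobile RTX 3050 at base clock lands above the assumed 45 TOPS baseline.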

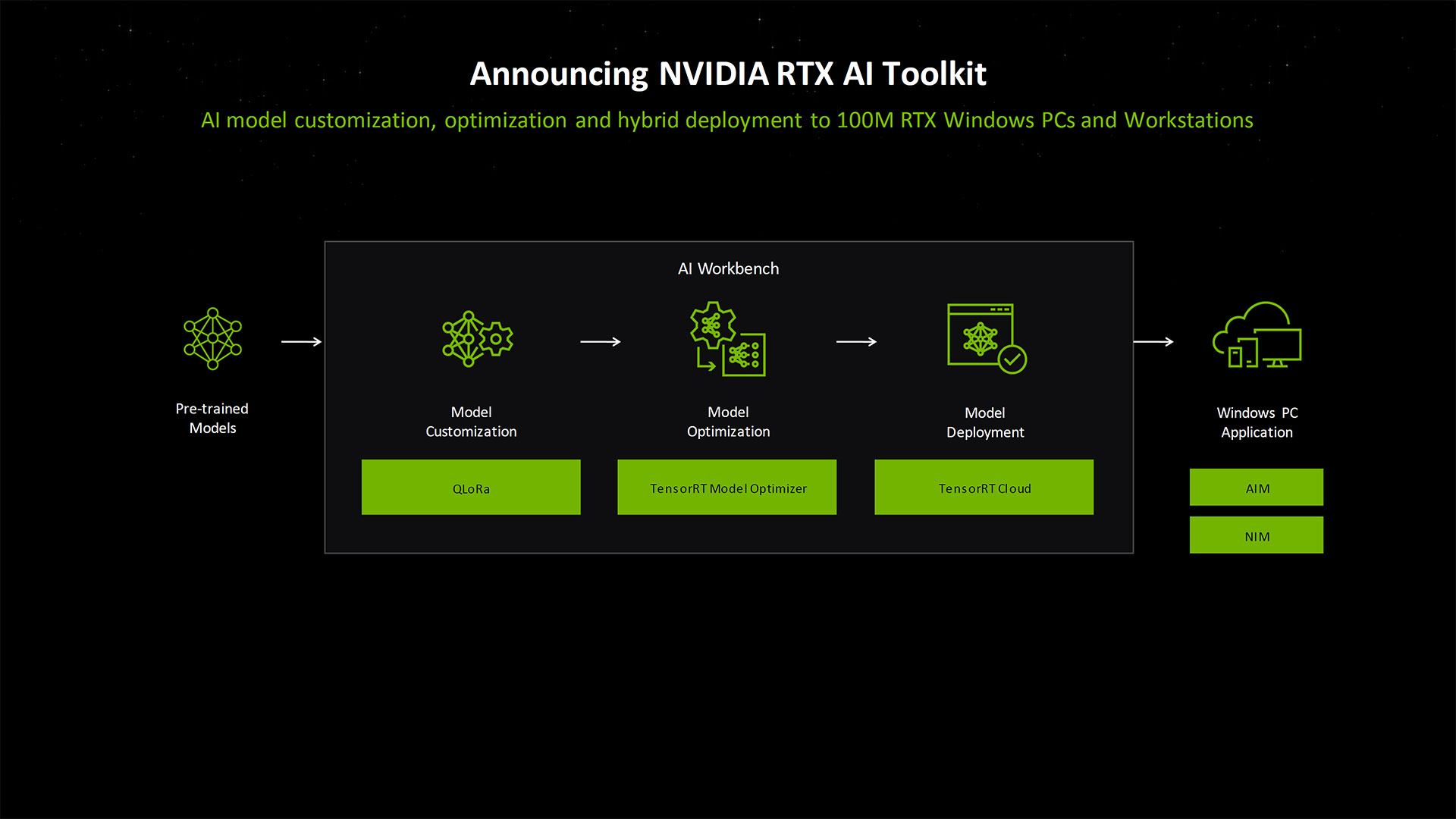

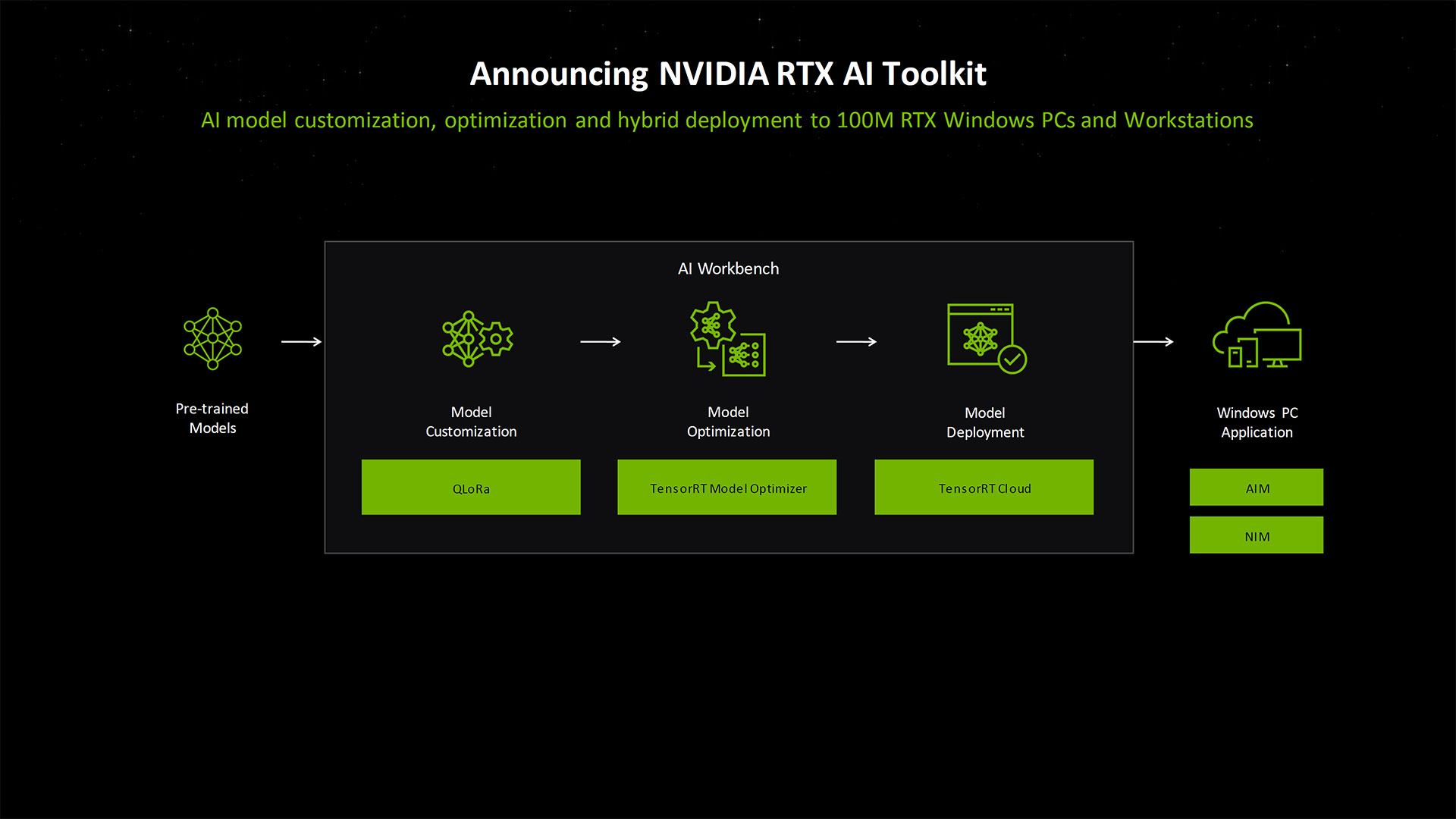

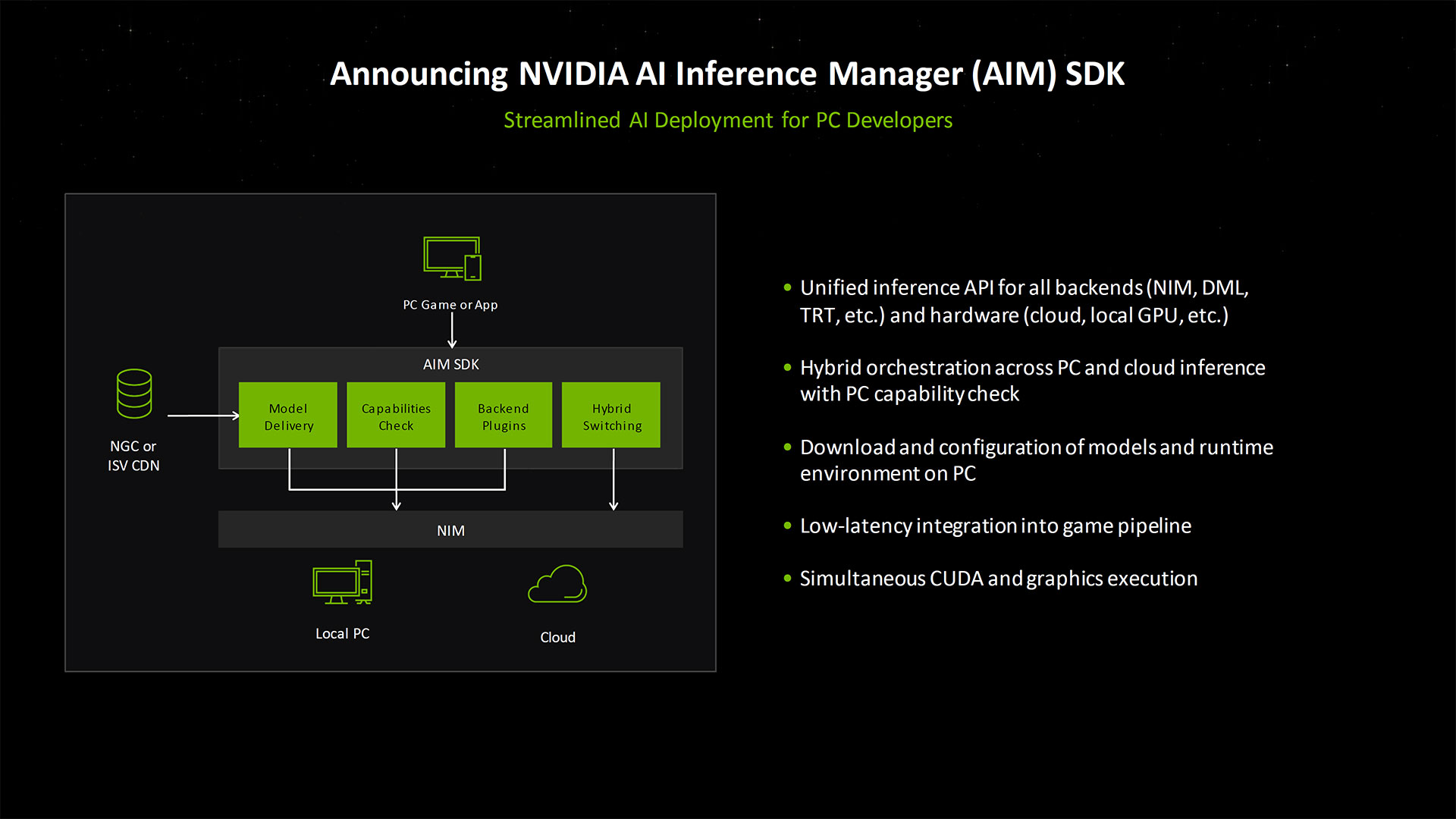

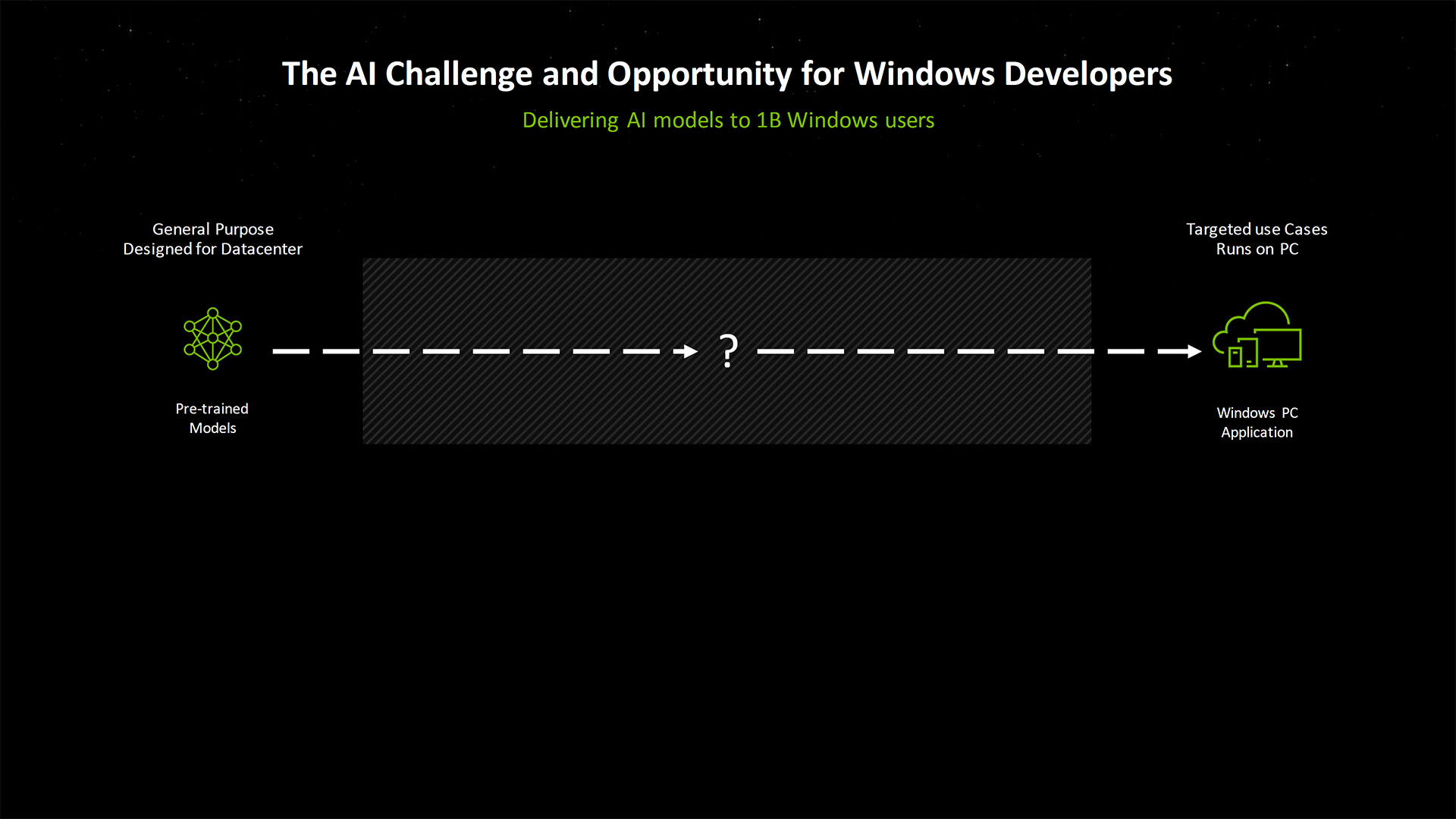

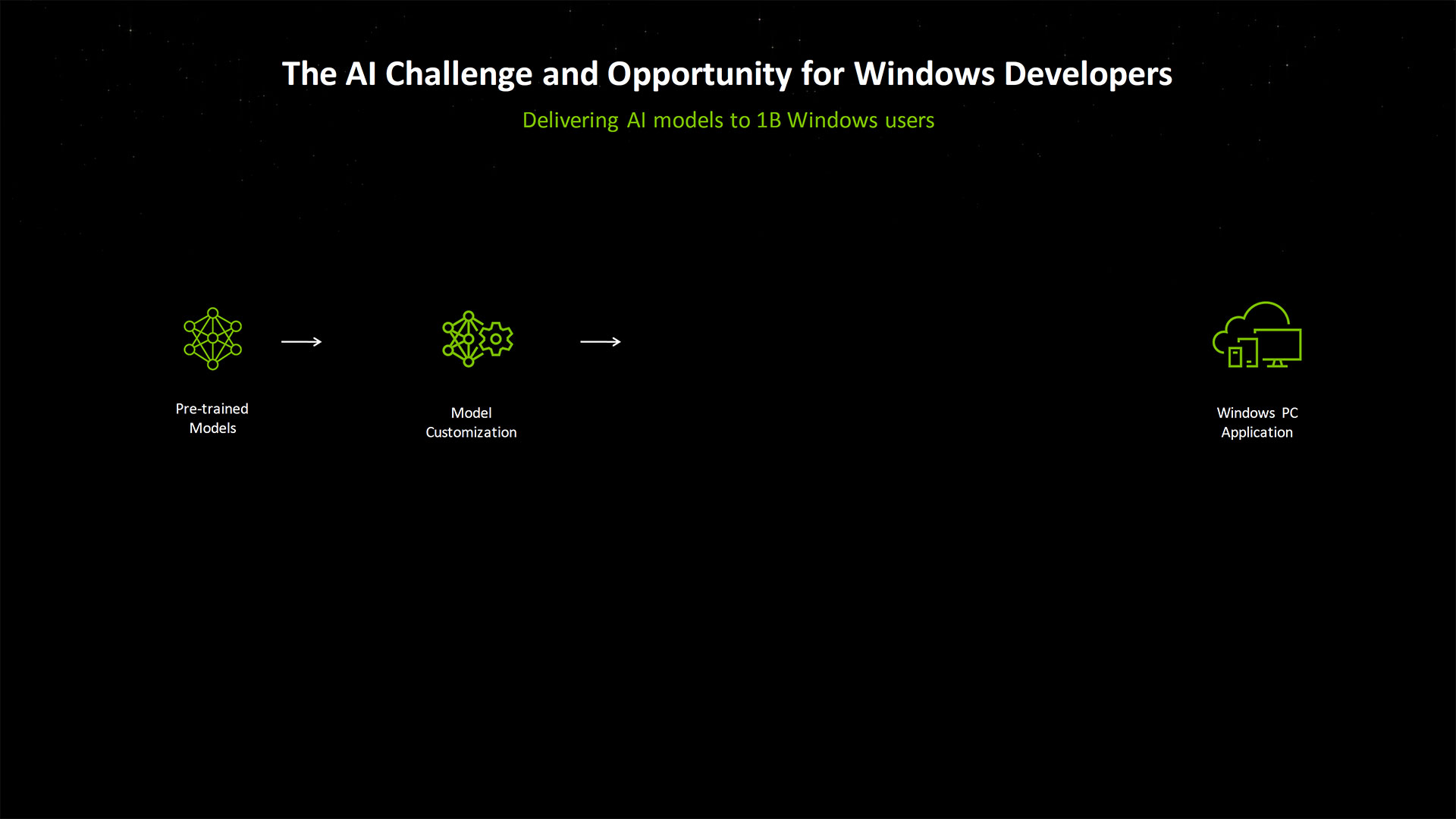

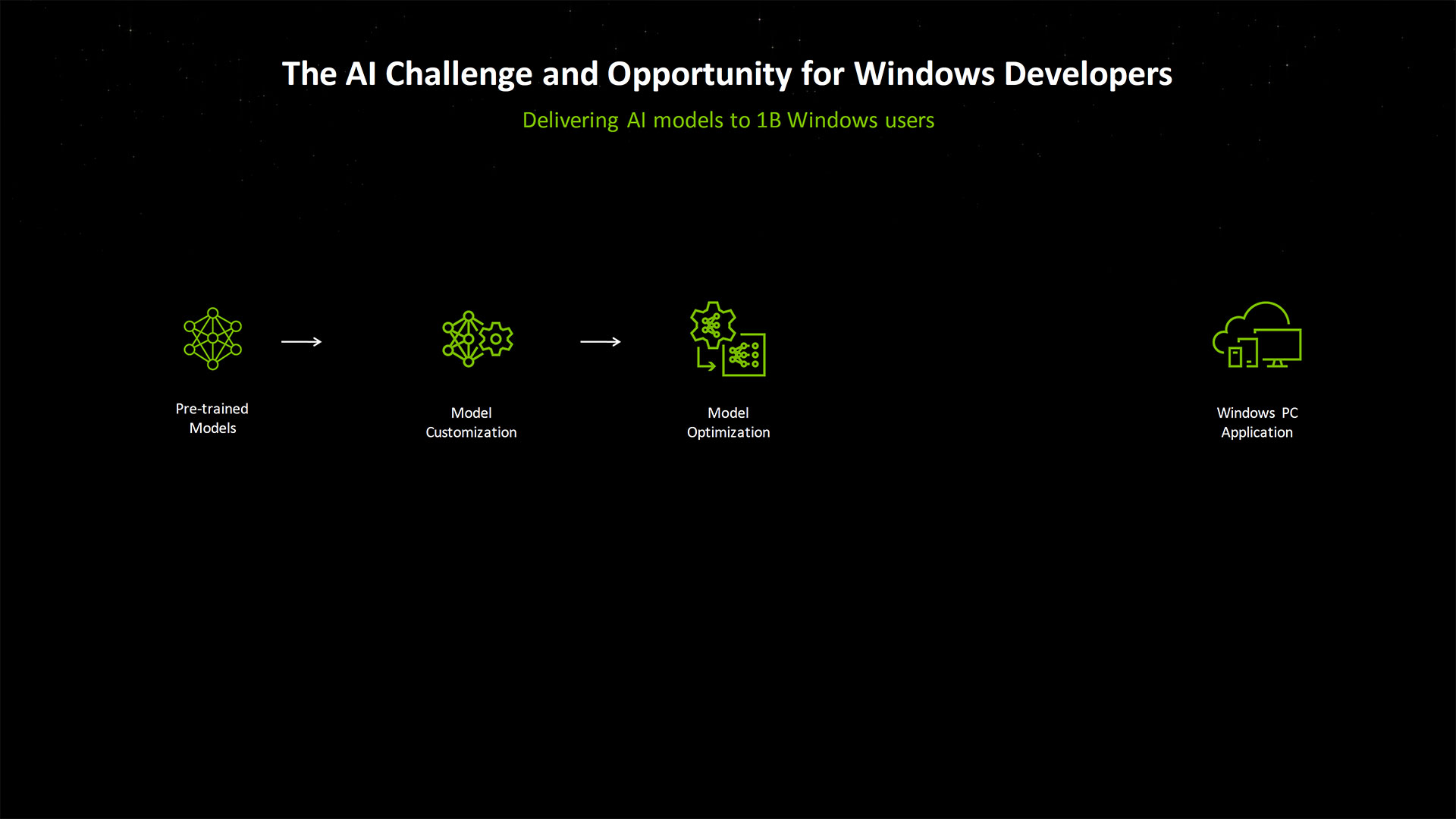

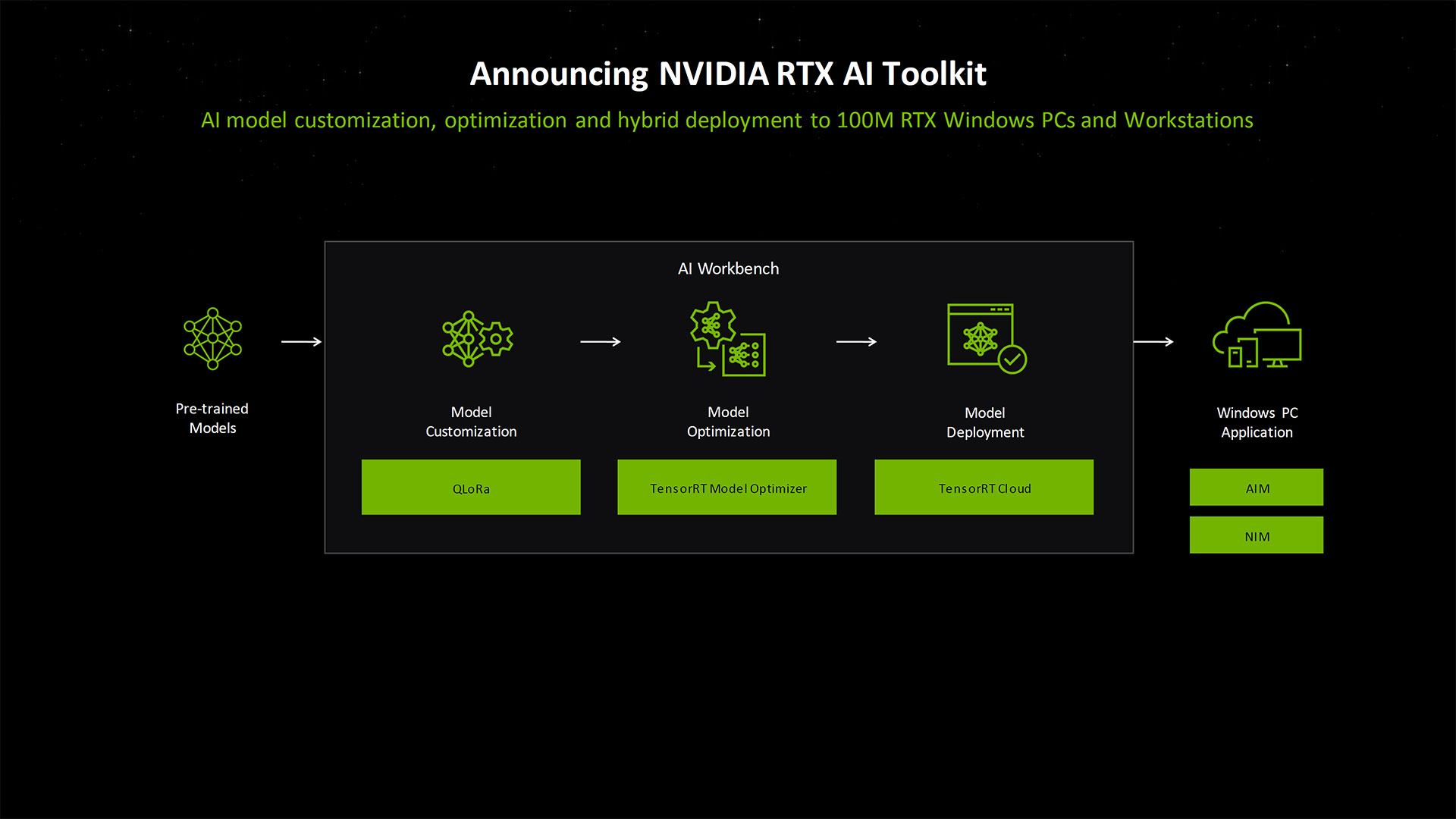

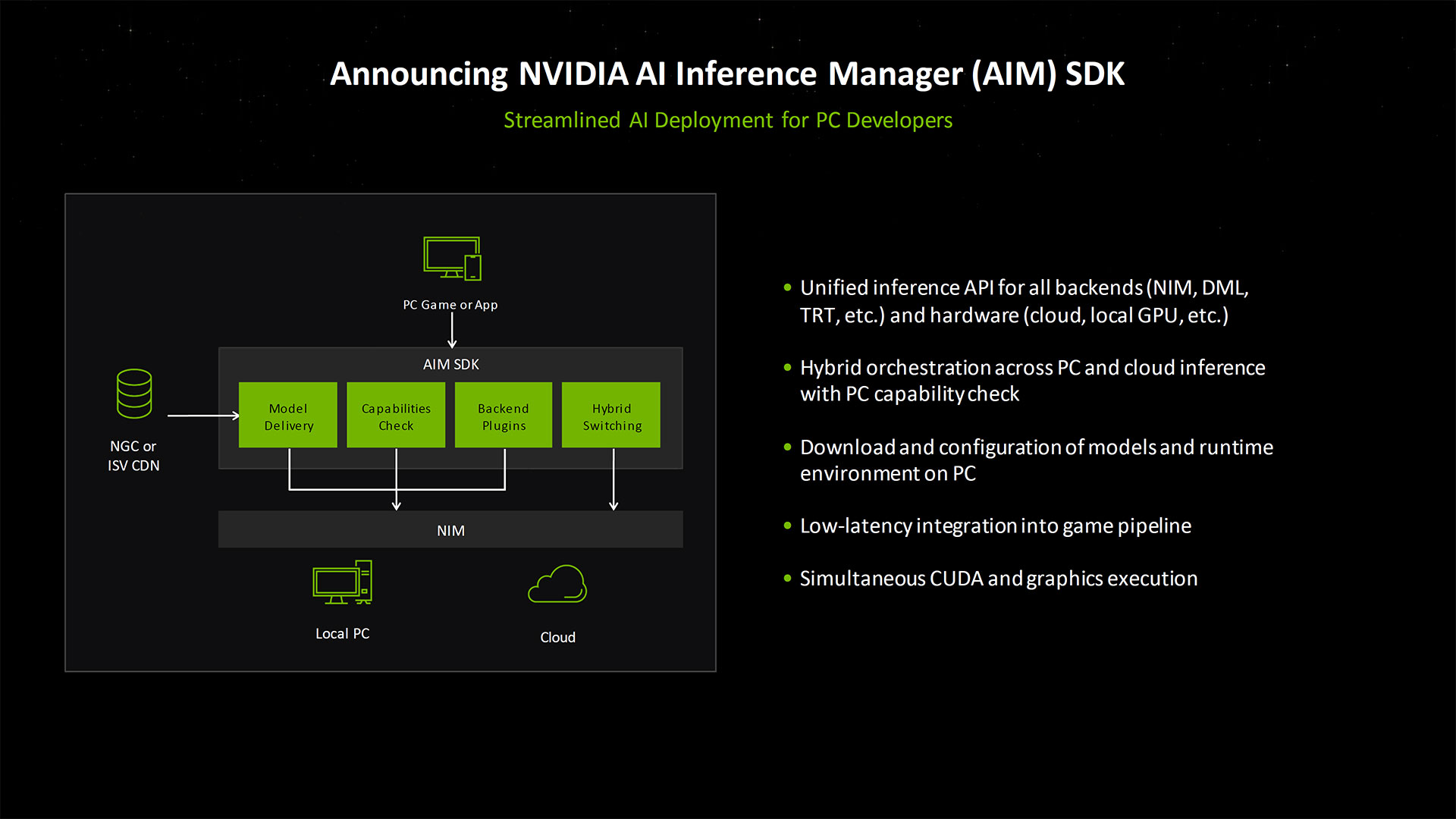

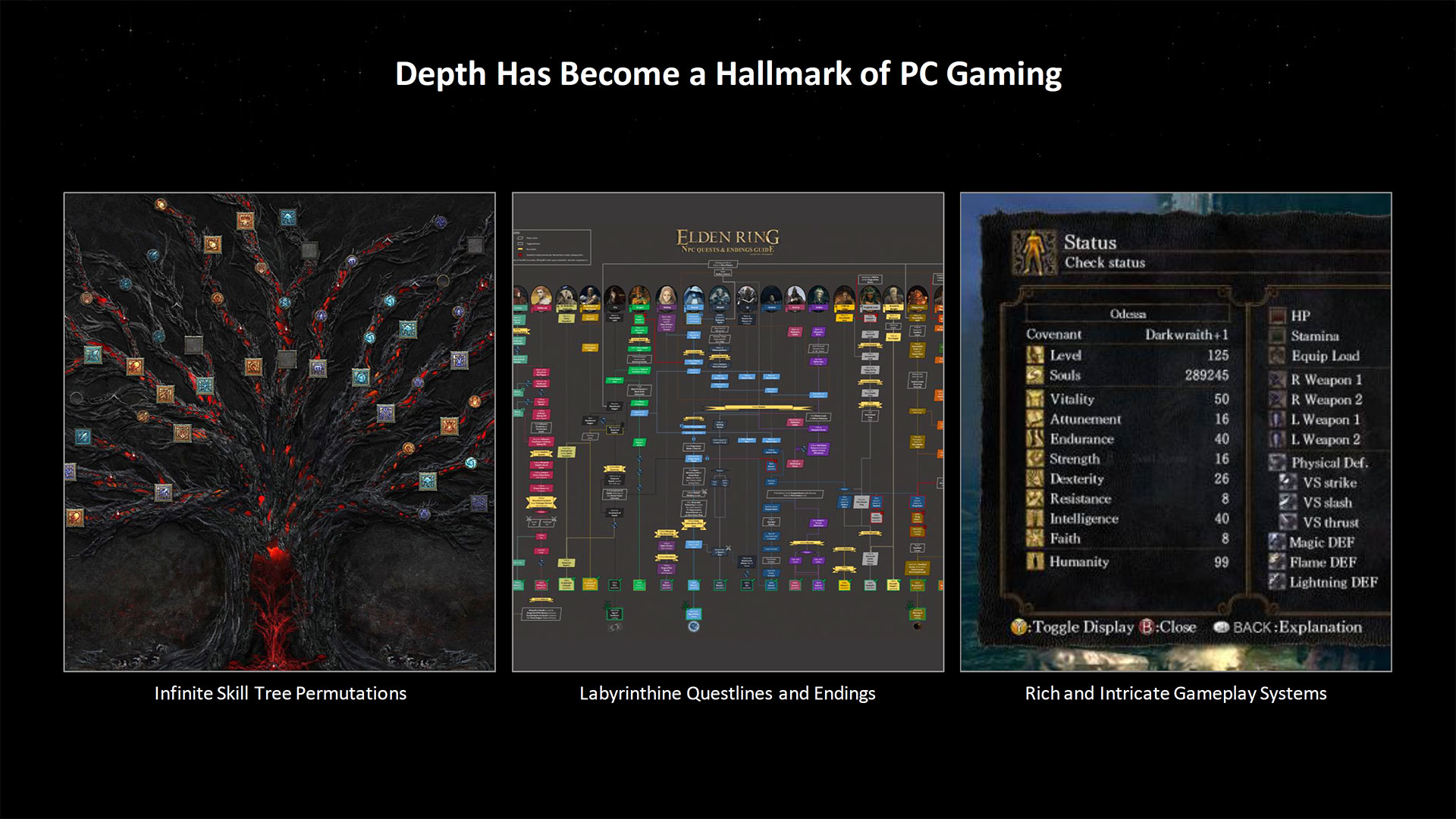

Also announced today is the new Nvidia RTX AI Toolkit, which helps developers with AI model tuning and customization all the way through to model deployment. The toolkit can potentially be used to power NPCs in games and other applications. We've seen examples of this with the ACE demos mentioned above, where the first conversational NPC demo was shown at last year's Computex. It's been through multiple refinements now, with multiple NPCs, and Nvidia sees ways to go even further.

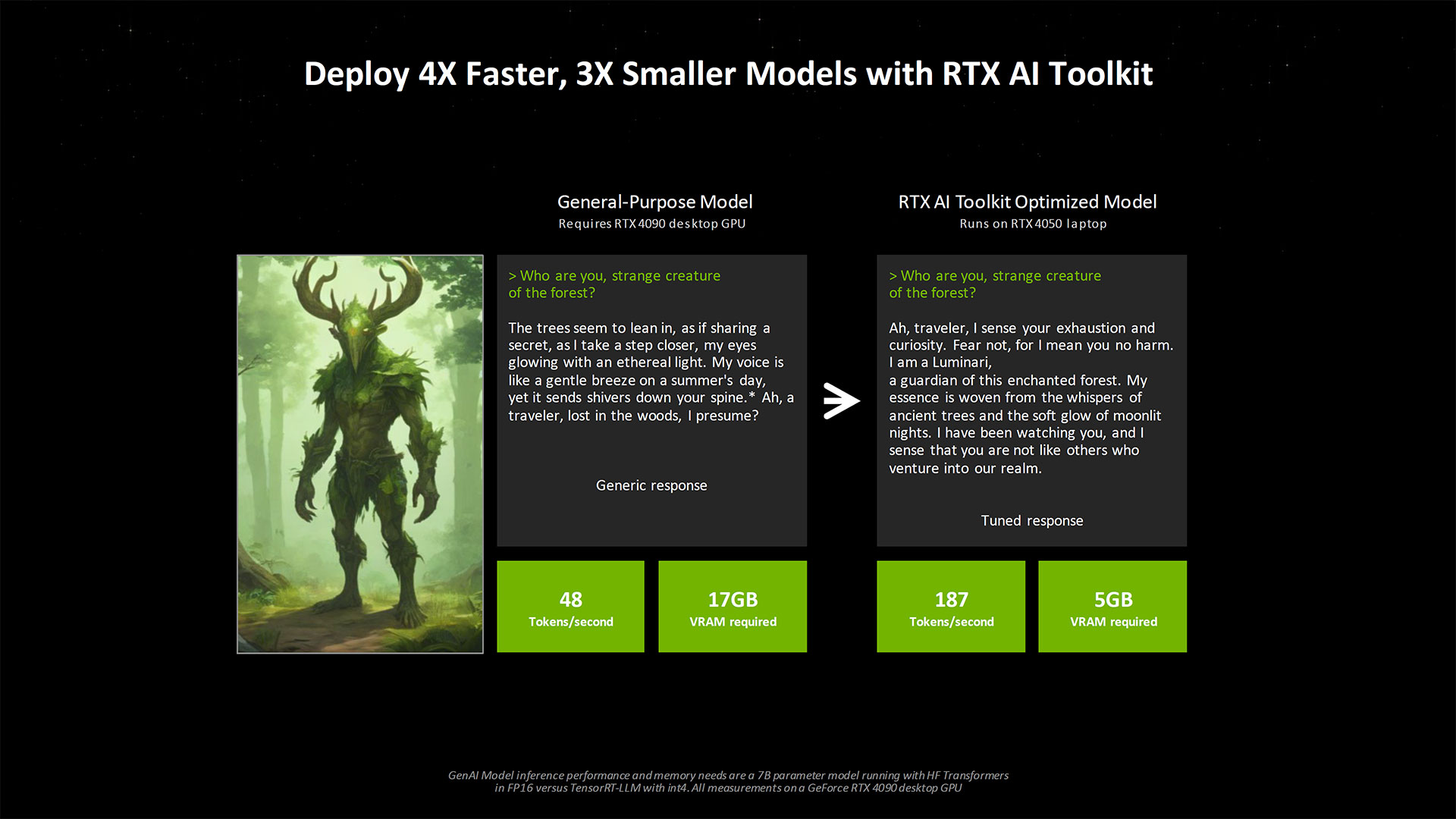

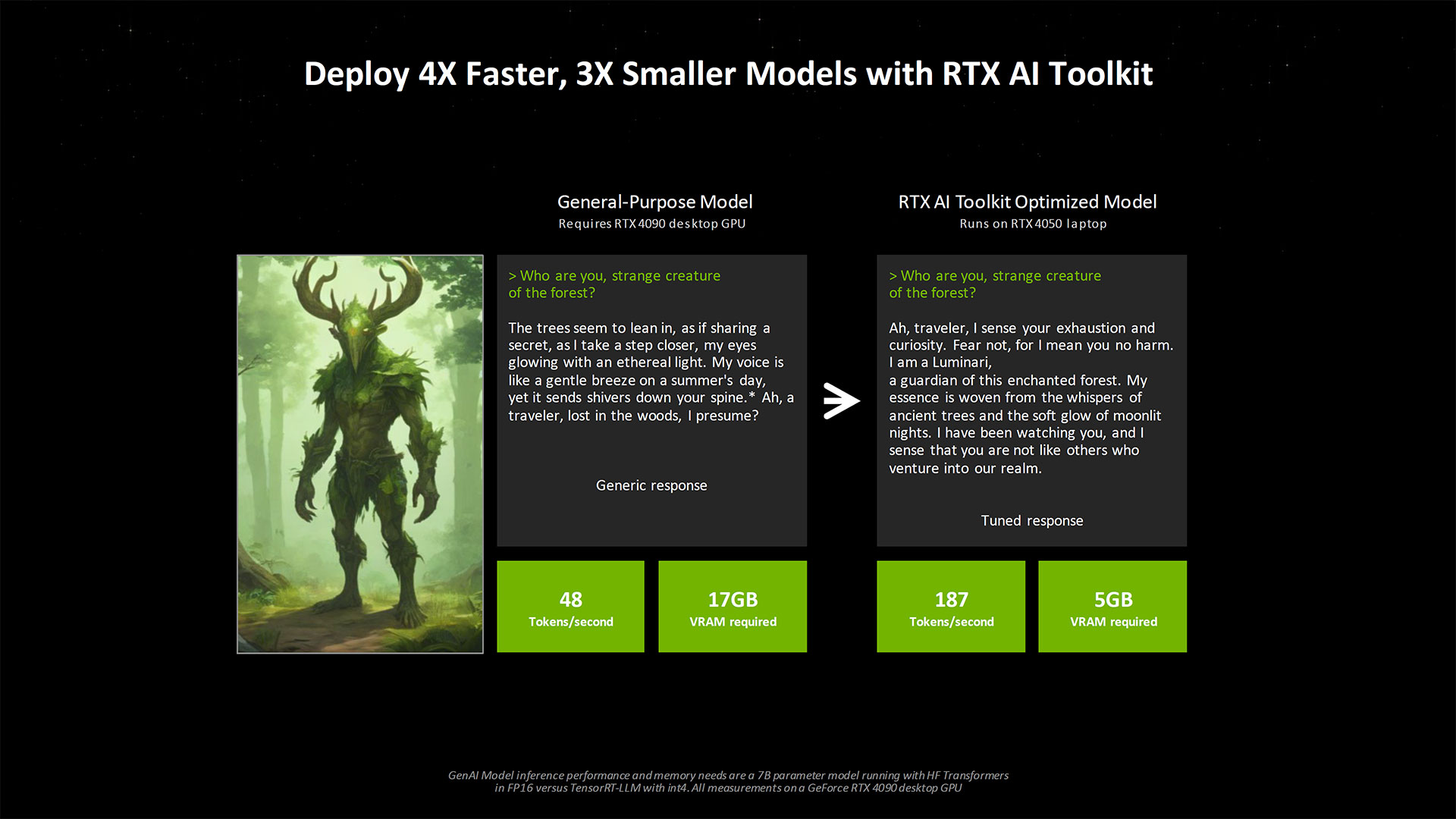

One example given was a general-purpose LLM with 7 billion parameters powering an NPC, which provided the usual rather generic responses. It also required an RTX 4090 to run the model, using 17GB of VRAM, and only generated 48 tokens per second. Using the RTX AI Toolkit to create an optimized model, Nvidia says developers can get tuned responses that are far more relevant to a game world, and the tuned model only needed 5GB of VRAM and spit out 187 tokens per second: nearly four times the speed with less than one third the memory requirement. More importantly perhaps, the tuned model could run on an RTX 4050 laptop GPU, which only has 6GB of VRAM.
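The arithmetic behind those ratios can be sketched in a few lines of Python. The function names here are hypothetical, and `fits_in_vram` is a deliberately simplistic assumption: it only compares the quoted model footprint against total VRAM and ignores whatever memory the game itself would need.

```python
def speedup(baseline_tps: float, tuned_tps: float) -> float:
    """Throughput of the tuned model relative to the baseline model."""
    return tuned_tps / baseline_tps


def memory_fraction(baseline_gb: float, tuned_gb: float) -> float:
    """VRAM used by the tuned model as a fraction of the baseline's."""
    return tuned_gb / baseline_gb


def fits_in_vram(model_gb: float, vram_gb: float) -> bool:
    """Naive check (assumption): the model fits if it is no larger than total VRAM."""
    return model_gb <= vram_gb


if __name__ == "__main__":
    # Figures quoted in the article: 17GB at 48 tokens/s vs. 5GB at 187 tokens/s.
    print(f"Speedup: {speedup(48, 187):.2f}x")
    print(f"Memory fraction: {memory_fraction(17, 5):.2f}")
    print(f"Tuned model fits in 6GB: {fits_in_vram(5, 6)}")
    print(f"Baseline model fits in 6GB: {fits_in_vram(17, 6)}")
```

With the quoted figures this works out to roughly 3.9 times the throughput at about 29% of the memory, which is where "nearly four times" and "one third" come from.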

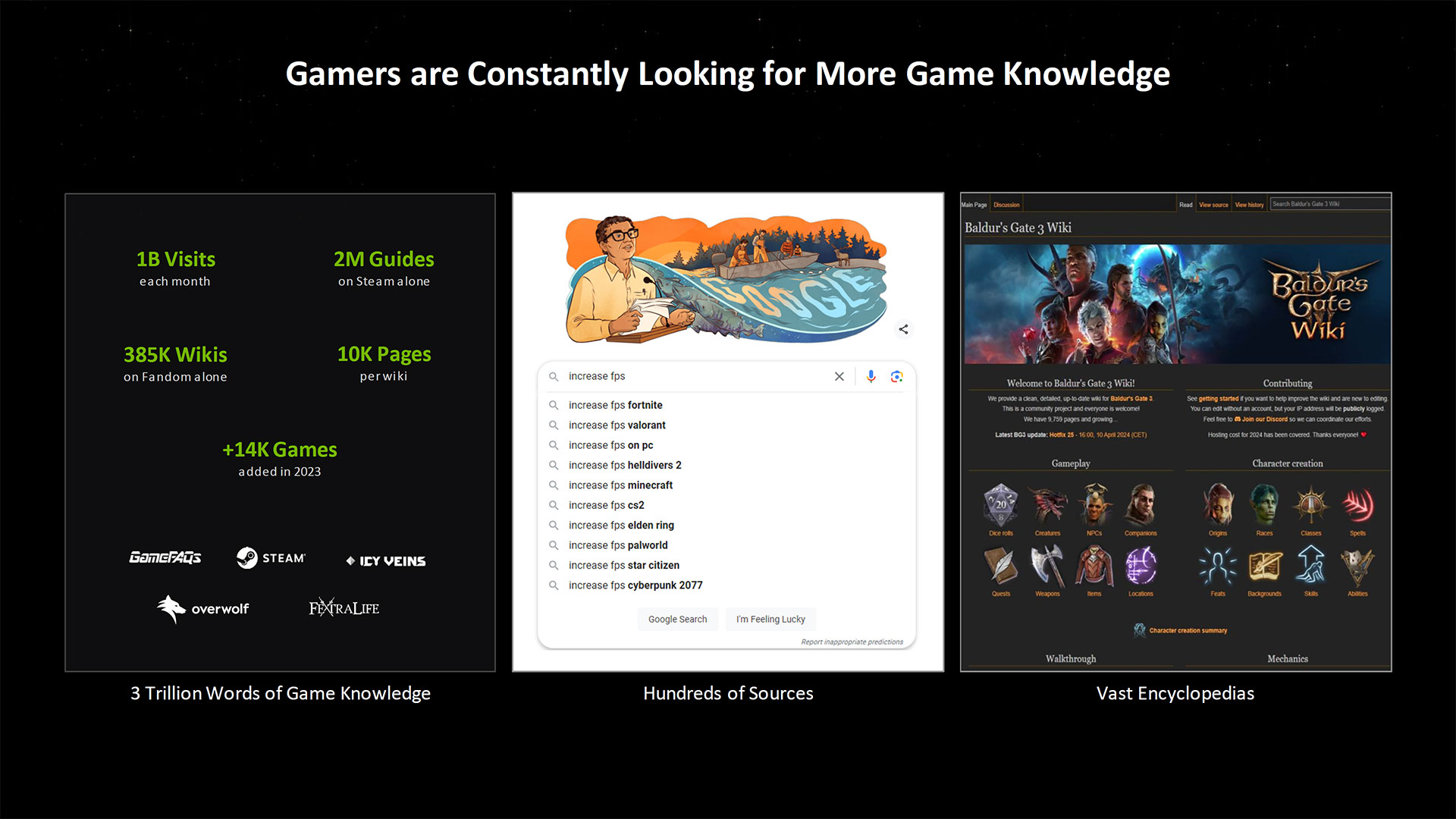

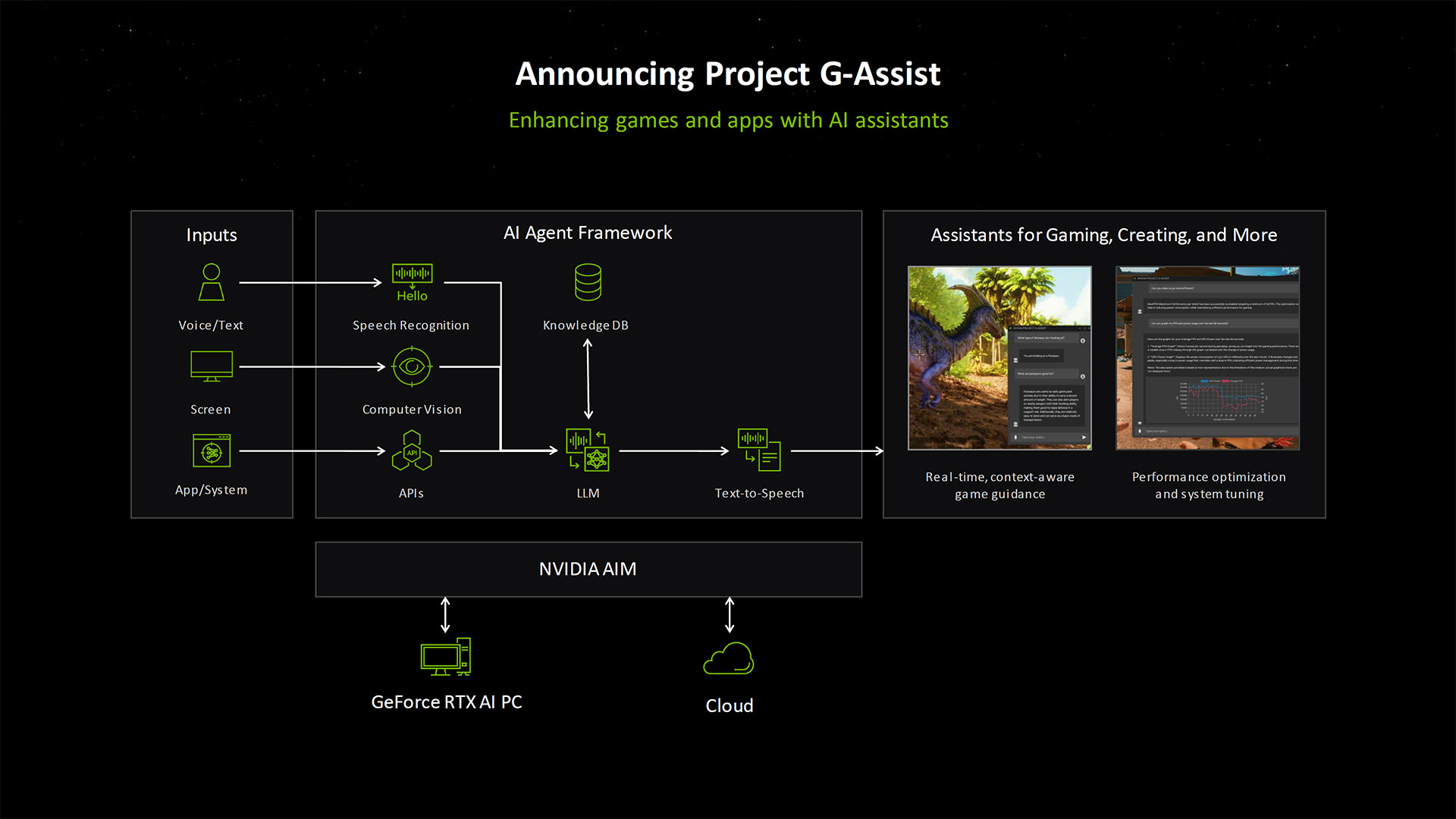

Nvidia also showed a tech demo of how all of these interrelated AI technologies could come together to provide ready access to game information with its Project G-Assist. We've covered that in more detail separately.

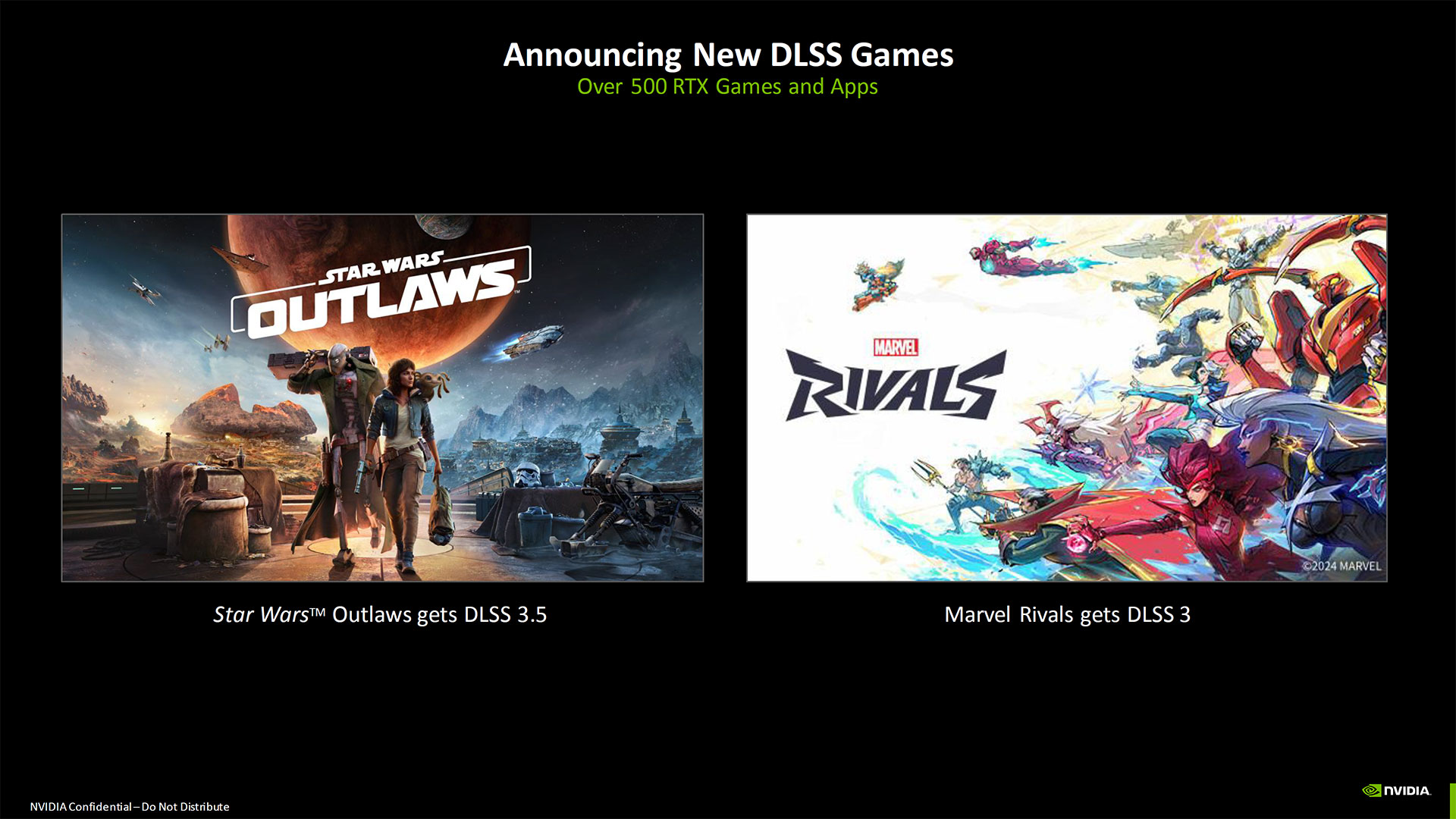

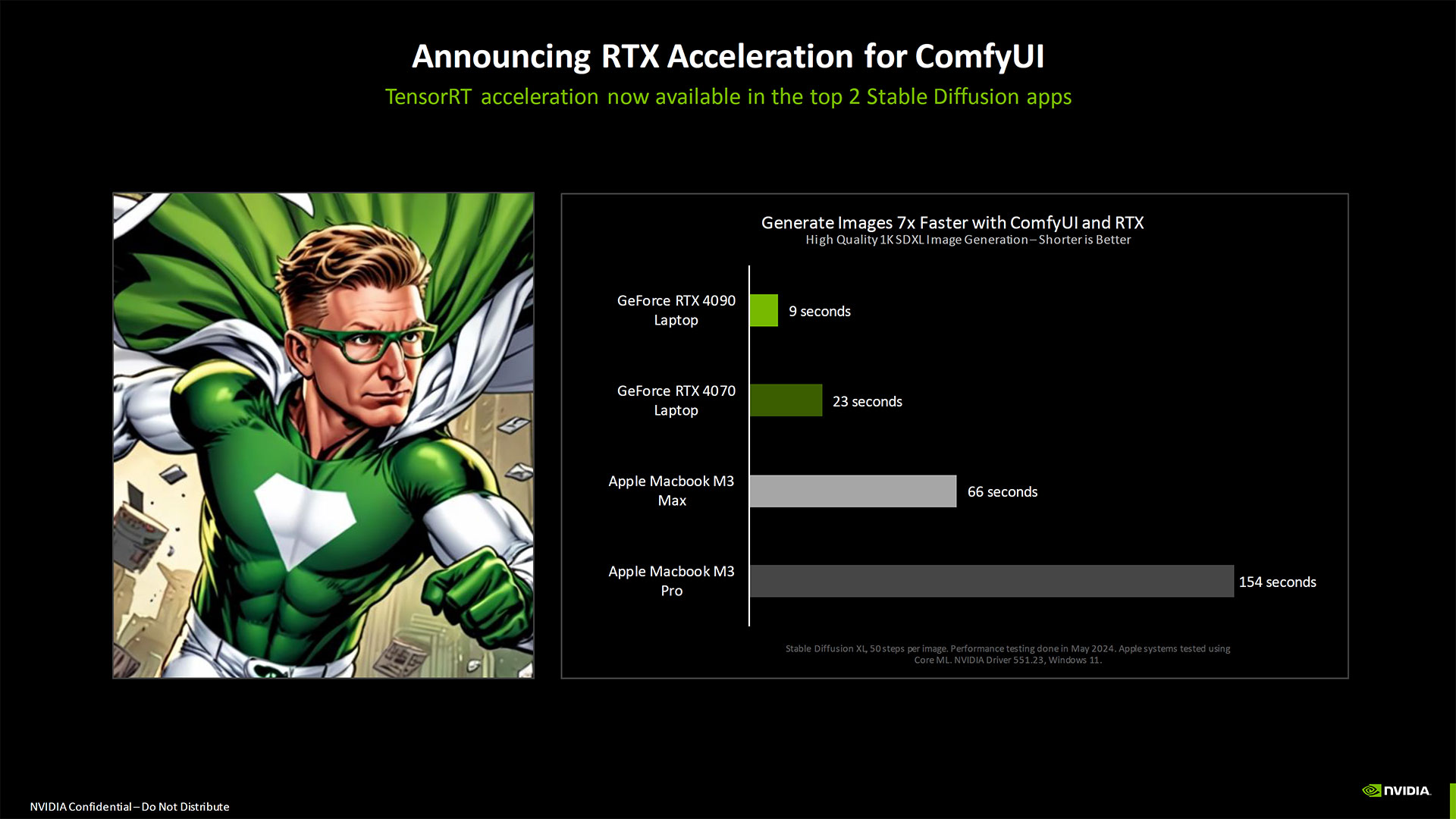

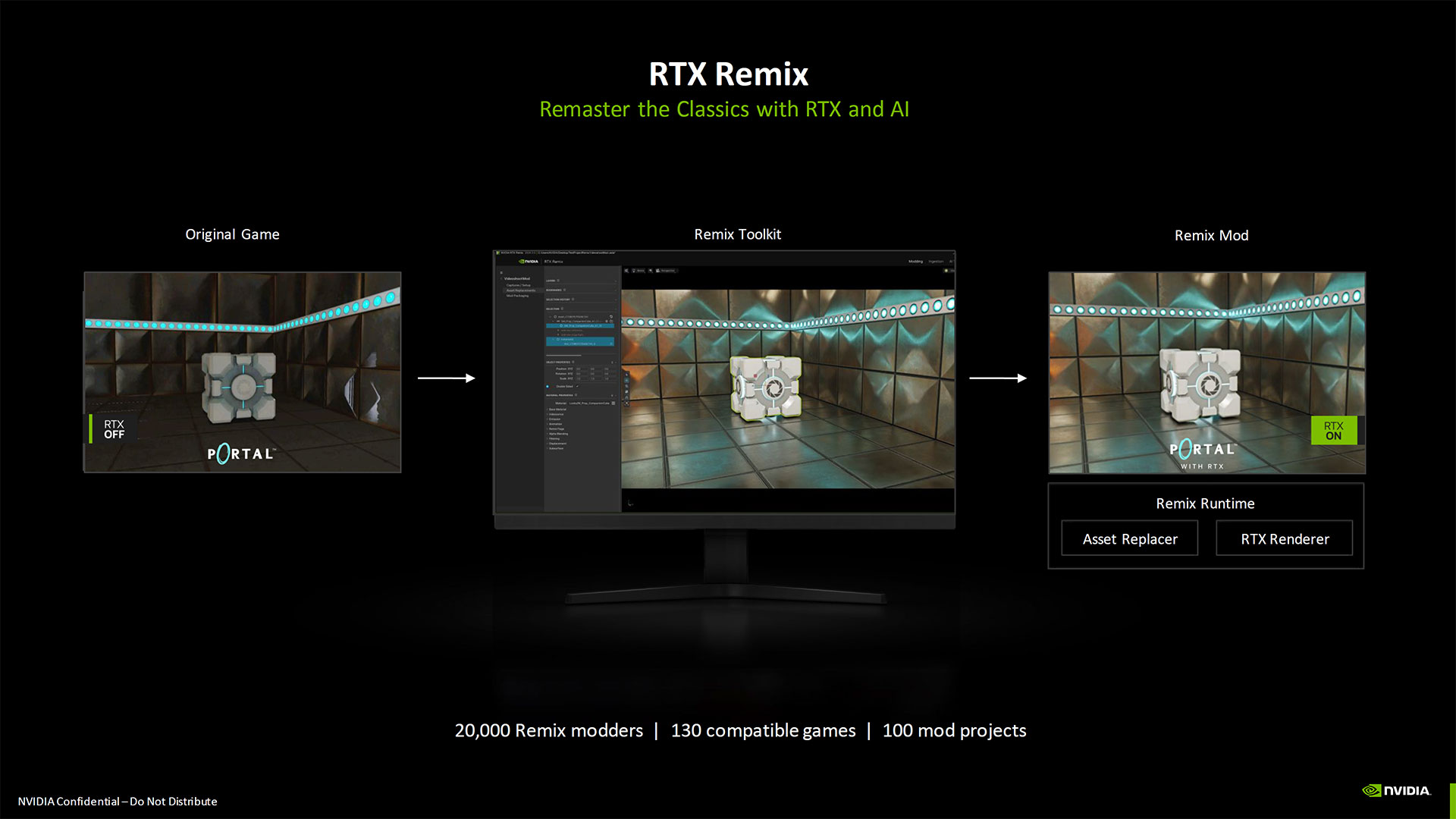

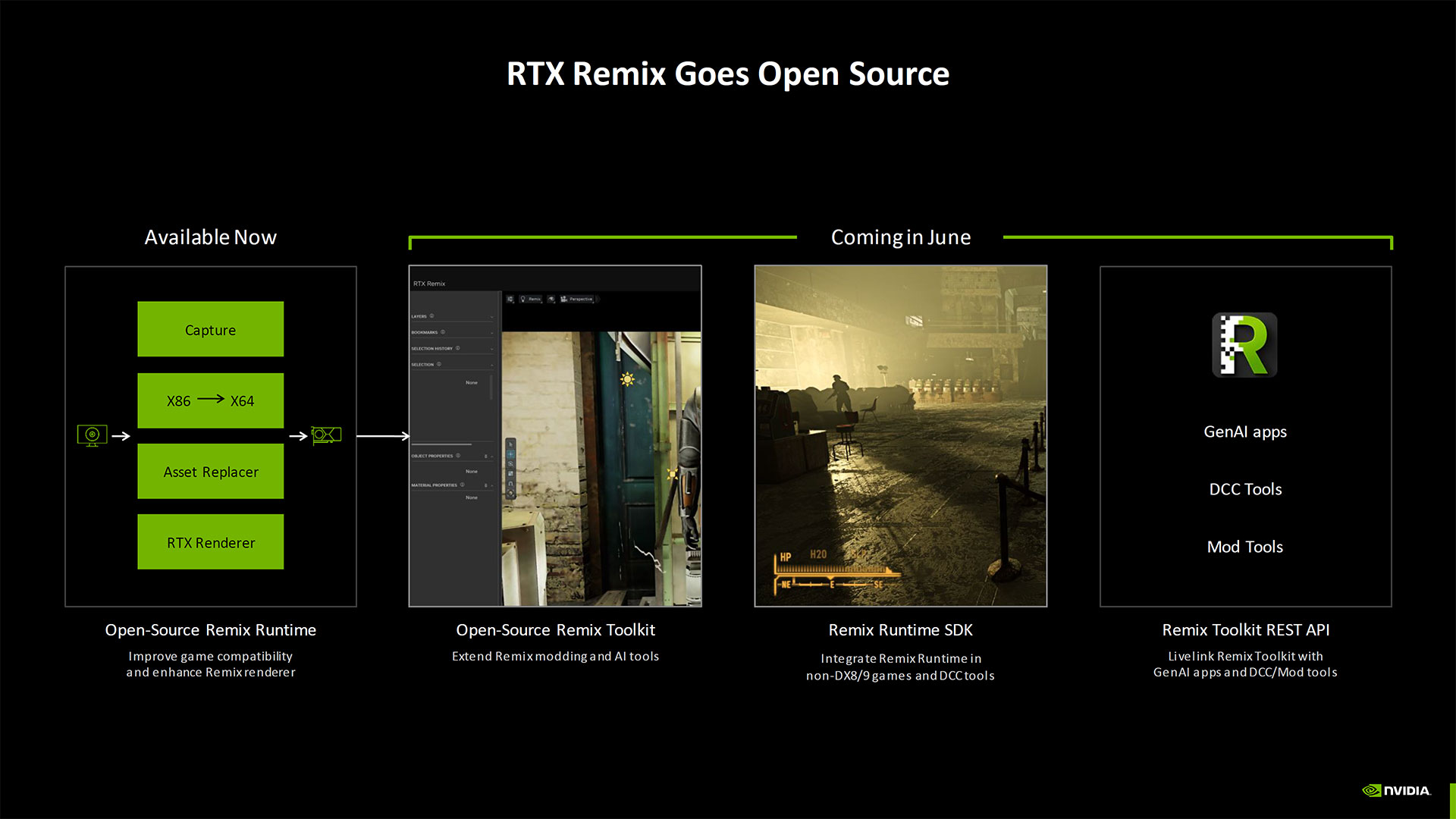

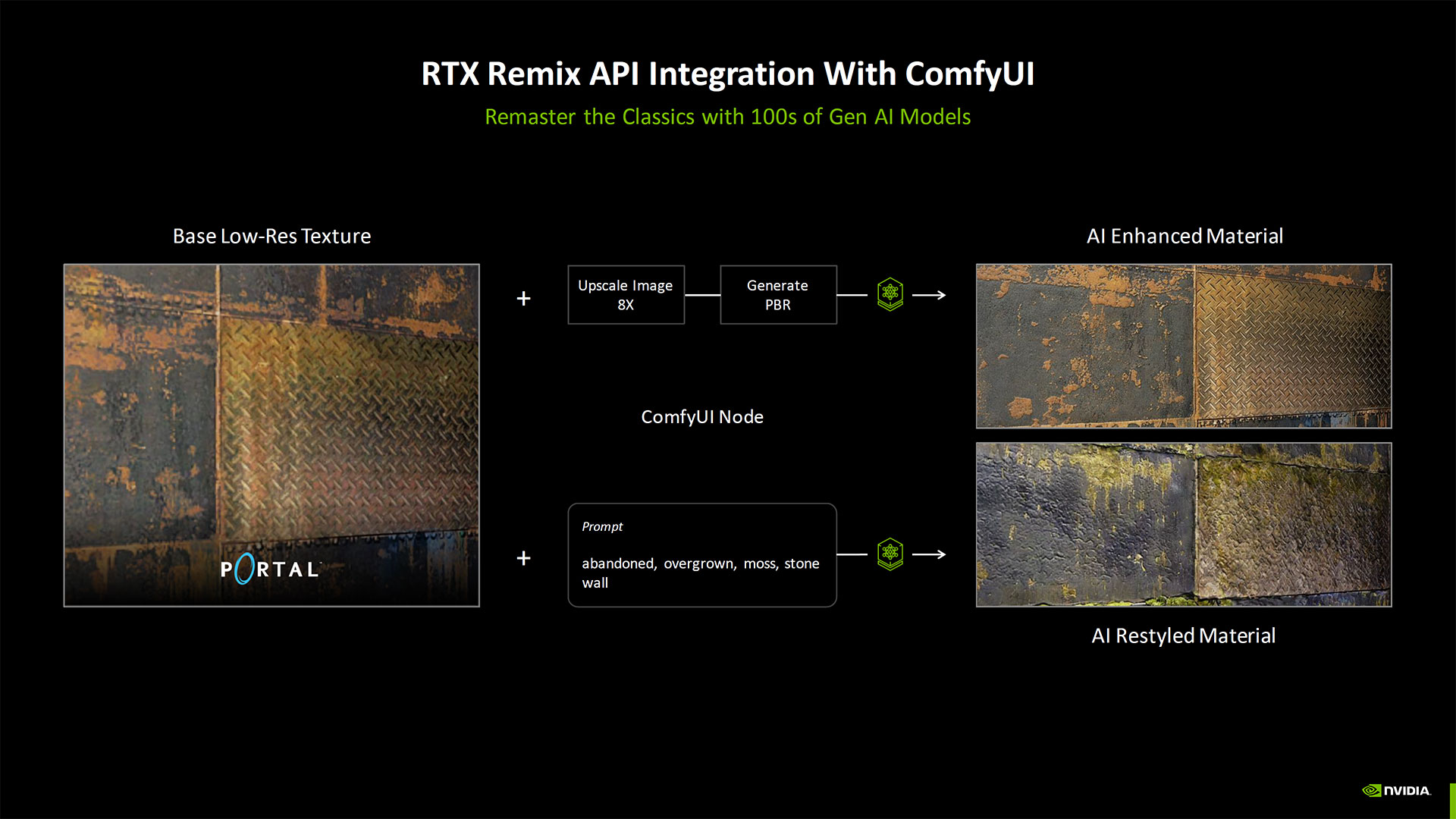

There was plenty more about AI that Nvidia talked about, as you can imagine. RTX TensorRT acceleration is now available in ComfyUI, another popular Stable Diffusion image generation tool. The ComfyUI integration is also coming to RTX Remix, so that modders can use it to quickly enhance textures on old games. You can watch the full Computex presentation, and we have the full slide deck below for reference.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


Alvar "Miles" Udell

Once Microsoft drops Copilot for Microsoft 365 from a $20 a month addition to included in the price, then I'll get interested in GPU/NPU accelerated features.

KnightShadey

Admin said: The first Windows Copilot implementations have snubbed Nvidia, but that will change with a future runtime.

So say nVidia 🙄 , but then immediately removed any reference to Copilot+ from their material and keep referring to ' a form of Copilot' . 🧐 Which could be anything (the 7 flavours of Copilot including a version of Copilot Pro for all we know), but definitely/obviously NOT Copilot+ that is in the current spotlight and only available to Qualcomm, AMD, and intel being a given. This is more nV NEEDING that spotlight for investor interest/dollars from folks who don't know Ai from AR. 🤑

It's the Computex equivalent of a paper launch at CES to have people delay purchase while nVidia plays catch-up. That's why they had their paper launch the day before AMD's, because if it were tonight people would have much tougher questions about this supposed coming Copilot for nV solution. Up until last night they could ride on the coat-tails of AMD & intel's 'coming soon' Copilot+ platforms, that will work with/without nVidia hardware, but not vice versa.

Yes, they rock the Server and GPU Ai space, and have the most/bestest software tools for developers out there, but that is not what Copilot+ is. And nVidia sees their stranglehold on the tools of the trade slipping a small bit with these other players carving a chunk, so they make a noise as if they are also involved in one of the big growth areas for consumers they missed (still dwarfed by corporate Ai revenue... for now, but what leads what in the sector, users or tools?).

They will maintain leadership for a long while, but any cracks of sunlight worry those same investors... "where is nV in this Copilot stuff?" same as those asking the same silly questions 2 weeks ago about AMD & intel when Qualcomm launched. Doom & Gloom for them because they weren't on the Day 1 announcement.... which also prompted nV to launch their 'Basic' reply about Copilot PCs, because can't not be in the news when Ai is mentioned.

Admittedly the Strix platform (especially if Halo is anywhere near expected) seems like a great platform to build from WITH a powerful nV GPU (although 4070 is just 'OK' but needs more than 8GB to be anything other than a helper to Copilot), which would allow you to use CPU/iGPU + NPU + GPU Ai tasks simultaneously (according to AMD and nV's comments) while giving you the ability to develop for all 3 levels of Ai power, and also allow for multi-tasking (like Ai security running in low-level background, Productivity Ai crunching a report/presentation/etc., and the powerful GPU available for the occasional Ai generated content, all on device). Granted the memory requirements are more in line with portable workstation / desktop replacement class laptops than the mid-range of yesterday's 13-16" thin&light-ish offerings (not ultra portable, but not really HX other than in name). 🤔

For the tasks THAT basic, that Strix, Lunar Lake and SDX-Elite are going to be doing via Copilot+, why would M$ want to muddy the waters with unknown CPU? + ? GPU = Copilot Asterix* / Windows CoPilotRT2? It seems to be counter to the point of standardization and focusing development within a specific framework. M$ should view WinRT as a perfect example of that: don't make Windows adapt to the subsets, make the subset adapt to Windows. Now ARM adapts to Windows, not vice versa. Same for CoPilotRT*: wasting resources for what ends up being a temporary nV roadbump to poison the well. Especially if nV is aiming to make its own Copilot+ level ARM CPU/APU by the time any of this collaboration could bear fruit.

Making Copilot Asterix for nVidia also seems like mucking with your luxury car's infotainment system because it's not available in the V12 track version of your vehicle. Two different ecosystems that shouldn't be held up by the other. To me it's similar to the M1-4 situation for Apple, you don't need to totally rework the iPad or Laptop environments to make them work, but it would be nice to ADD the full features as a secondary focus, but remember the primary role of each. An iPad running iPadOS that can boot into MAC OS is far better than ruining the iPad by making it full MacOS or iOS by adding terrible multi-tasking and calling is some showy name... err.... well, perhaps lesson learned? 🤔

nV GPUs already have full access to models for both desktops and laptops... but no one is buying a thin and light RTX 4050/RX7500 to do that serious work, rather than just get their feet wet. So why would M$ change the environment for that now for weak laptops, when they didn't do it for much more powerful desktops?

As for the discussion of power and nVidia's prior generations, it highlights one of their challenges: look at the RTX 4050's Ai throughput, similar to the RTX 2080 Ti's, then compare the price & power consumption. Sure, nV's argument is that they are currently orders of magnitude better than the first Copilot+ PCs, but the AMD CPU+NPU is currently ~100 TOPS even before Strix Halo with more CPU cores, more NPU Ai tiles and double the memory bandwidth. That's 1st gen Copilot+ hardware, so why worry about nV (or AMD) GPUs for this specific portion rather than make it so the CPU+NPU Copilot+ can work with any GPU to offload heavy workloads?

More resources put into scheduling etc. in Windows likely results in better... well... results, than just wasting resources on a Copilot*Asterix for nVidia. Yes, make Ai GPU development tools (from all 3 IHVs) work better on Windows, similar to Linux, and make GPU TOPS available to more apps on Windows, BUT leave Copilot+ to its specific role. That's far better use of Windows dev time and better for users as a whole, rather than making an RT version of Copilot for nVidia.

That all Nvidia can say is that they are 'developing/working with M$ on an API' seems like that's pretty late to get something for this year that's anything more than a tack-on window(s) dressing.

Sure the GPUs can crunch the workloads, but as has been mentioned... so could the previous GTX and RX series GPUs and those didn't get any love from M$ when they had the chance; adding it now (or more realistically just saying they will add it , then dropping CopilotRT* 'development' when their own ARM CPU comes out next year) seems more like a pure PR paper effort rather than anything that could replicate Copilot+ in the area it would be best at, low-level/power always-on fluffy ' convenience-Ai' akin to the return of Clippy... but perhaps useful this time. 📎 😜

nVidia just needs to be comfortable with being good at 8 out of 10 things and not try to spoil the other 1 or 2 just because no one is talking about them for 10 seconds. 🤨

Murissokah

jalyst said: ^Summary? :unsure::grinning:

Here's a quick summary by ChatGPT (Powered by Nvidia):

The text critiques Nvidia's recent announcements and strategies around AI and Copilot+. Here’s a summary:

Nvidia's Announcement and Strategy:

Nvidia initially mentioned "Copilot+" but quickly shifted to referring to "a form of Copilot," creating ambiguity about their specific offering.

Copilot+ is currently exclusive to Qualcomm, AMD, and Intel, not Nvidia.

Nvidia's move appears to be aimed at maintaining investor interest and market presence amidst growing AI competition.

Market Position and Comparisons:

The announcement is likened to a "paper launch," intended to delay consumer purchases while Nvidia catches up with competitors.

Nvidia is seen leveraging the hype around AMD and Intel’s Copilot+ platforms, which can operate independently of Nvidia hardware.

Nvidia’s Current Strengths and Concerns:

Nvidia dominates the server and GPU AI market with superior developer tools, but Copilot+ represents a consumer growth area they are missing.

Investors are concerned about Nvidia's absence in the Copilot+ arena, prompting a response from Nvidia to stay relevant in AI discussions.

Strix Platform and Future Prospects:

Nvidia's Strix platform, combined with powerful GPUs, could support diverse AI tasks.

The platform requires substantial memory, making it more suitable for high-end laptops rather than mid-range models.

Microsoft’s Role and Standardization:

There's skepticism about Microsoft adapting to Nvidia’s requirements for a specific Copilot version.

The preference is for a standardized development framework, avoiding temporary or niche adaptations.

Long-term Implications:

Nvidia’s efforts may seem more like a PR move rather than a substantive contribution to Copilot+.

Nvidia should focus on its core strengths and not try to overshadow competitors in every AI segment.

In essence, Nvidia is seen as trying to maintain relevance and investor confidence amidst AI advancements by other companies, but their strategy and announcements might be more about perception than practical, immediate contributions to the AI landscape.

KnightShadey

Murissokah said: Here's a quick summary by ChatGPT (Powered by Nvidia): ...

LOL! Not really quick or much compression on that summary (including stating it's a summary twice). 😜

For you youngins, scientific notation...errr... summary trick... Read the opening statement/hypothesis and conclusion, skipping the middle representing supporting data. 🤔😉

"So say nVidia 🙄 , but then immediately removed any reference to Copilot+ from their material and keep referring to ' a form of Copilot' . 🧐 Which could be anything (the 7 flavours of Copilot including a version of Copilot Pro for all we know), but definitely/obviously NOT Copilot+ that is in the current spotlight and only available to Qualcomm, AMD, and intel being a given. This is more nV NEEDING that spotlight for investor interest/dollars from folks who don't know Ai from AR. ... nVidia just needs to be comfortable with being good at 8 out of 10 things and not try to spoil the other 1 or 2 just because no one is talking about them for 10 seconds. 🤨"

Murissokah

KnightShadey said: ...LOL! Not really quick or much compression on that summary (including stating it's a summary twice)...

First one was me, GPT text starts on the second paragraph.

KnightShadey said: For you youngins...

I'll take it as a compliment as I'm not that young. Here on the site I'm 13 years your senior.

But yeah, this was all in good sport. Your text was quite long for a forum comment, so in good spirit of AI bullshit and in answer to the previous request I fed it to ChatGPT.

KnightShadey

Murissokah said: I'll take it as a compliment as I'm not that young. Here on the site I'm 13 years your senior.

Not really, I was here much earlier than that, just not under this name (no longer have access to that email). Back then long posts were more common, even evolving into buyer's guides. 😉

Much of the old guard is gone (including Cleeve moving on to AMD), but a few familiar faces remain.

Murissokah said: But yeah, this was all in good sport. Your text was quite long for a forum comment, so in good spirit of AI bullshit and in answer to the previous request I fed it to ChatGPT.

That's how it was received, just poking fun in return. 😜

According to the Genius Ai in my microwave, when I asked about nV and Copilot, it declared it was beep Beep BEEP, likely due to foul language filter. 🤪

Still better than your appliance trying to kill you I guess. 🤔🤣