Intel has processed 30,000 wafers with High-NA EUV chipmaking tool

Two systems in use.

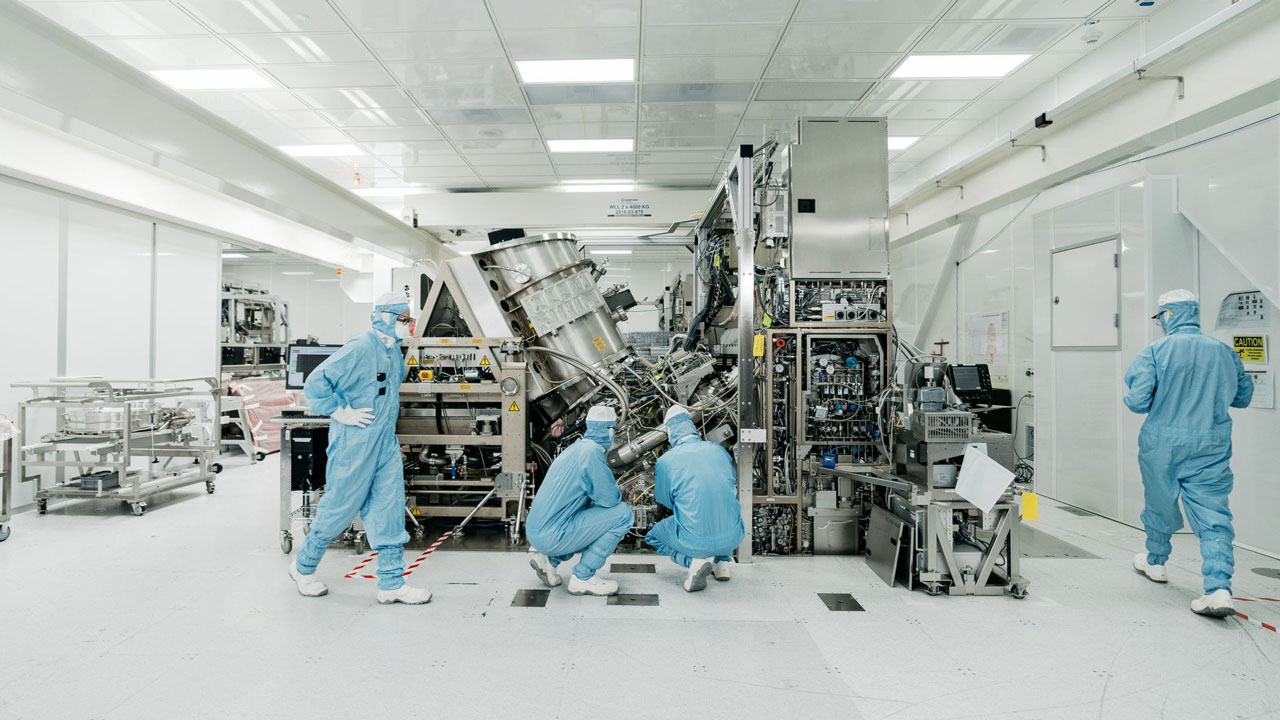

Intel has started using two leading-edge ASML High-NA Twinscan EXE:5000 EUV lithography tools, the company revealed on Monday at an industry conference, Reuters reports. The company uses these systems for research and development purposes, and so far, Intel has processed tens of thousands of wafers using them.

Intel installed two High-NA EUV lithography tools from ASML at its D1 development fab near Hillsboro, Oregon, last year and has since processed as many as 30,000 wafers on them in a single quarter, Intel engineer Steve Carson revealed at the SPIE Advanced Lithography + Patterning conference. Intel was the first leading chipmaker to receive High-NA EUV machines (which are believed to cost €350 million each) and plans to use them to produce its 14A (1.4nm-class) chips several years down the road.

Adopting an all-new manufacturing tool ahead of competitors is important, as it enables Intel to develop various aspects of High-NA EUV manufacturing (such as glass for photomasks, pellicles for photomasks, and chemicals) that could eventually become industry standards. Also, ASML is poised to refine its Twinscan EXE High-NA EUV tools based on feedback from Intel engineers, which could give the American giant an edge over its competitors in time.

Processing 30,000 wafers in a quarter is far below what commercial-grade systems can do. However, the number is massive for R&D use, which shows how serious Intel is about becoming the leading chipmaker in the High-NA EUV era.

Although ASML considers its Twinscan EXE:5000 High-NA EUV lithography tools to be pre-production tools not designed for high-volume manufacturing, Intel has reportedly said these systems are “more reliable than earlier models.” Still, the report does not specify whether ASML’s Twinscan EXE:5000 is more reliable than the company’s pre-production Twinscan NXE:3300 tool from 2013, which was used to develop the existing EUV ecosystem, or than the production-grade Twinscan NXE:3600D and NXE:3800E that are used for high-volume manufacturing (HVM) today. Considering that ASML uses similar light sources in its NXE and EXE machines, the EXE:5000 may indeed be very reliable.

ASML’s Twinscan EXE High-NA EUV lithography tools can achieve a resolution down to 8nm with a single exposure, a substantial improvement over Low-NA EUV systems, which offer 13.5nm resolution with a single exposure. While current-generation Low-NA EUV tools can still achieve 8nm resolution with double patterning, this lengthens the production cycle and can affect yields. High-NA EUV tools also halve the exposure field compared to Low-NA EUV systems, which requires chip developers to alter their designs. Given the costs and peculiarities of High-NA EUV litho systems, each chipmaker has its own strategy for adopting them. Intel clearly wants to be the first adopter, whereas TSMC is a little more cautious.
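The resolution gap follows from the Rayleigh criterion, CD = k1 · λ / NA, where λ is the 13.5nm EUV wavelength and NA is the numerical aperture (0.33 for Low-NA tools, 0.55 for High-NA). The Python sketch below assumes a process factor k1 of 0.33, chosen only because it reproduces the article's 13.5nm Low-NA figure; real k1 values depend on the process. It also compares the standard 26 mm × 33 mm full field with the 26 mm × 16.5 mm half field of High-NA tools.

```python
# Rayleigh-criterion sketch: CD = k1 * wavelength / NA.
# Assumption: K1 = 0.33 for both tool generations, picked only because it
# reproduces the article's 13.5nm Low-NA figure; real k1 depends on the process.
EUV_WAVELENGTH_NM = 13.5
K1 = 0.33


def critical_dimension_nm(na: float, k1: float = K1) -> float:
    """Estimate the smallest printable feature (nm) for a given numerical aperture."""
    return k1 * EUV_WAVELENGTH_NM / na


LOW_NA, HIGH_NA = 0.33, 0.55
low_na_cd = critical_dimension_nm(LOW_NA)    # ~13.5 nm
high_na_cd = critical_dimension_nm(HIGH_NA)  # ~8.1 nm

# Exposure fields in millimetres: full field vs. the High-NA half field.
LOW_NA_FIELD_MM = (26.0, 33.0)
HIGH_NA_FIELD_MM = (26.0, 16.5)
field_ratio = (HIGH_NA_FIELD_MM[0] * HIGH_NA_FIELD_MM[1]) / (
    LOW_NA_FIELD_MM[0] * LOW_NA_FIELD_MM[1]
)  # 0.5

print(f"Low-NA CD:  {low_na_cd:.1f} nm")
print(f"High-NA CD: {high_na_cd:.1f} nm")
print(f"High-NA field area vs. Low-NA: {field_ratio:.2f}")
```

Because the estimate scales as 1/NA, the shrink factor (0.33 / 0.55 = 0.6) is the same whatever k1 is assumed.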


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user

The article said: "Processing 30,000 wafers in a quarter is far below what commercial-grade systems can do. However, the number is massive for R&D usage"

Can such a number really be just for node development? Or does this imply they're already using it for some low-volume production?

I can sort of see why you might need lots of test wafers for node development. For instance, if you want to sweep different parameter spaces. Then, as the node finalizes, I guess cell library design and testing needs to happen. Still, it seems like a lot of wafers to handle, especially if you're doing any sort of detailed analysis of them.

jkflipflop98

Oh yeah, that's pretty much normal. All the different modules have their own pet projects and issues to iron out. The diffusion guys might be trying a new atomic layer deposition, and the etch guys are trying some new acid/base chemistry for an undercut, while the planar guys are trying out some new pad materials. Then we need fleets of optical inspection tools backed up by hundreds of scanning and transmission electron scopes to double check it all. It adds up quick.

why_wolf

bit_user said: "Can such a number really be just for node development? Or does this imply they're already using it for some low-volume production?"

I guess it depends on what a "regular" amount of test wafers is? So this could be a nothing article, or it could mean Intel is putting in serious hours on R&D to get back ahead and is performing tests back to back.

DS426

I also had the initial shock of thinking 30K wafers seems high when used just for R&D, but I suppose thousands of unique wafers come about really quickly when several variations exist at many of the different stages. For example, if 4x6x8x4x7 possibilities were used across those 5 stages/characteristics, that would consume 5,376 wafers. Add one more stage with 5 possibilities (different materials, chemicals, time, etc.) and you get 26,880, pretty close to 30K wafers. :O
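The arithmetic above is a full-factorial count: one wafer per combination of settings, so the total is the product of the number of options at each stage. The Python sketch below uses the comment's hypothetical stage counts and assumes exactly one wafer per combination; real experiments often use fractional designs that test only a subset of combinations.

```python
import itertools
import math

# Hypothetical options per process stage, taken from the comment's example.
levels = [4, 6, 8, 4, 7]

# A full-factorial experiment runs one wafer per combination of options.
full_factorial = math.prod(levels)
print(full_factorial)  # 5376

# Enumerating every combination explicitly gives the same count.
combos = list(itertools.product(*(range(n) for n in levels)))
assert len(combos) == full_factorial

# Adding one more stage with 5 options multiplies the total by 5.
print(math.prod(levels + [5]))  # 26880
```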

bit_user

DS426 said: "I also had the initial shock of thinking 30K wafers seems high when used just for R&D, but I suppose thousands of unique wafers come about really quickly when several variations exist at many of the different stages. For example, if 4x6x8x4x7 possibilities were used across those 5 stages/characteristics, that would consume 5,376 wafers."

Yeah, but I'm sure they wouldn't do uniformly sampled parameter sweeps. Probably something more like a binary search or gradient descent.
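To illustrate why an adaptive search can need far fewer wafers than a uniform sweep, here is a minimal Python sketch of a binary search over one process setting. It assumes a single parameter, a noise-free pass/fail result that is monotone in the setting, and one hypothetical test wafer per probe; gradient descent is not shown, and real process development is considerably messier.

```python
from typing import Callable, Tuple


def bisect_threshold(levels: int, passes: Callable[[int], bool]) -> Tuple[int, int]:
    """Return (lowest passing setting, wafers used) via binary search.

    Assumes the pass/fail result is monotone in the setting index and that
    the highest setting (levels - 1) passes. Each probe costs one wafer.
    """
    lo, hi = 0, levels - 1
    wafers_used = 0
    while lo < hi:
        mid = (lo + hi) // 2
        wafers_used += 1  # one hypothetical test wafer per probe
        if passes(mid):
            hi = mid      # threshold is at or below mid
        else:
            lo = mid + 1  # threshold is above mid
    return lo, wafers_used


# Hypothetical example: 64 candidate settings, true threshold at index 37.
threshold, used = bisect_threshold(64, lambda i: i >= 37)
print(threshold, used)  # 37 6
```

A uniform sweep of the same 64 settings would use 64 wafers; the search finds the threshold with 6.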

If the materials & processing costs are like $10k each, that'd add up to $300M, which doesn't seem way out of line with what I imagine node development costs are running. If that many were fabbed over a single year, it'd be about 82 per day or 5 per hour (assuming equipment is available 67% of the time).
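The back-of-the-envelope figures above can be reproduced in a few lines of Python. Every input is the comment's own guess (cost per wafer, one year of fabbing, 67% equipment availability), not a number reported by Intel.

```python
# Inputs are the comment's guesses, not reported figures.
WAFERS = 30_000
COST_PER_WAFER_USD = 10_000
AVAILABILITY = 0.67  # fraction of hours the equipment is assumed usable
DAYS_PER_YEAR = 365

total_cost_usd = WAFERS * COST_PER_WAFER_USD
wafers_per_day = WAFERS / DAYS_PER_YEAR
# Spread each day's wafers over only the hours the tools are available.
wafers_per_hour = wafers_per_day / (24 * AVAILABILITY)

print(f"Total cost: ${total_cost_usd / 1e6:.0f}M")  # $300M
print(f"Per day:    {wafers_per_day:.0f}")           # 82
print(f"Per hour:   {wafers_per_hour:.1f}")          # 5.1
```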

thestryker

bit_user said: "Can such a number really be just for node development? Or does this imply they're already using it for some low-volume production?"

Keep in mind the first one completed installation in Apr 2024 and the second in Oct, so that's a lot of time, relatively speaking. Given that 18A was also originally supposed to be a High-NA node, I wouldn't be surprised if they were able to hit the ground running and process wafers quickly. I can only imagine the process engineers salivating at the possibility of seeing what they can do with the node on better machines. If the only thing they were doing was 14A prep, I can't imagine this many would have been run through yet.

Depending on how quickly ASML can get the EXE:5200 to market, I could even see one of the advanced 18A nodes being run on High-NA, since 14A is still a ways out.

edit: Just read the source article, and they claim the 30000 is in a single quarter, which does seem like a high number, but perhaps that's just an indicator of how rapidly development is progressing. I imagine we'll find out more in Apr when they do their foundry event.

dalek1234

We need an insider to tell us what the yield rate is.

If these are wafers for R&D only, it could also mean that there is a high defect rate, and Intel is frantically trying different things to improve the yield, hence the high wafer count.

Or it could be a bunch of different reasons, like others have stated. Basically, the article doesn't have enough detail to be of any use.

bit_user

dalek1234 said: "We need an insider to tell us what the yield rate is."

This isn't for 18A, but maybe 14A is far enough along that they're already starting to focus on yield improvements.

dalek1234 said: "If these are wafers for R&D only, it could also mean that there is a high defect rate, and Intel is frantically trying different things to improve the yield, hence the high wafer count."

The article says this was an announcement Intel made at an industry conference. If it were a bad sign, I think they wouldn't have told that to a bunch of experts who'd be best-placed to understand that.

dalek1234 said: "Or it could be a bunch of different reasons, like others have stated. Basically, the article doesn't have enough detail to be of any use."

I think Intel is trying to tell us their development is proceeding at a rapid pace. I agree that it's pretty useless, at least to you and me.

I'm mostly impressed at the processing rate, which as @thestryker pointed out, was 30k per 3 months = 329 per day. If it were going 24/7, that's 13.7 per hour which is almost like printing paper. Even with 2 machines going, that's a lot faster than I thought it'd be.
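The rate arithmetic above, extended to a per-tool figure, can be sketched in Python. It assumes 24/7 operation with no downtime and an even split of the 30,000 wafers between Intel's two EXE:5000 systems; any downtime would push the real hourly rate higher.

```python
WAFERS = 30_000
DAYS_PER_QUARTER = 365 / 4  # 91.25 days
TOOLS = 2                   # Intel's two EXE:5000 systems

wafers_per_day = WAFERS / DAYS_PER_QUARTER   # ~328.8
wafers_per_hour = wafers_per_day / 24        # ~13.7, assuming 24/7 operation
per_tool_per_hour = wafers_per_hour / TOOLS  # ~6.8, assuming an even split

print(f"{wafers_per_day:.0f} per day, {wafers_per_hour:.1f} per hour, "
      f"{per_tool_per_hour:.1f} per tool per hour")
```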