AMD Launches Milan-X With 3D V-Cache, EPYC 7773X With 768MB L3 Cache for $8,800

More cache for more cash

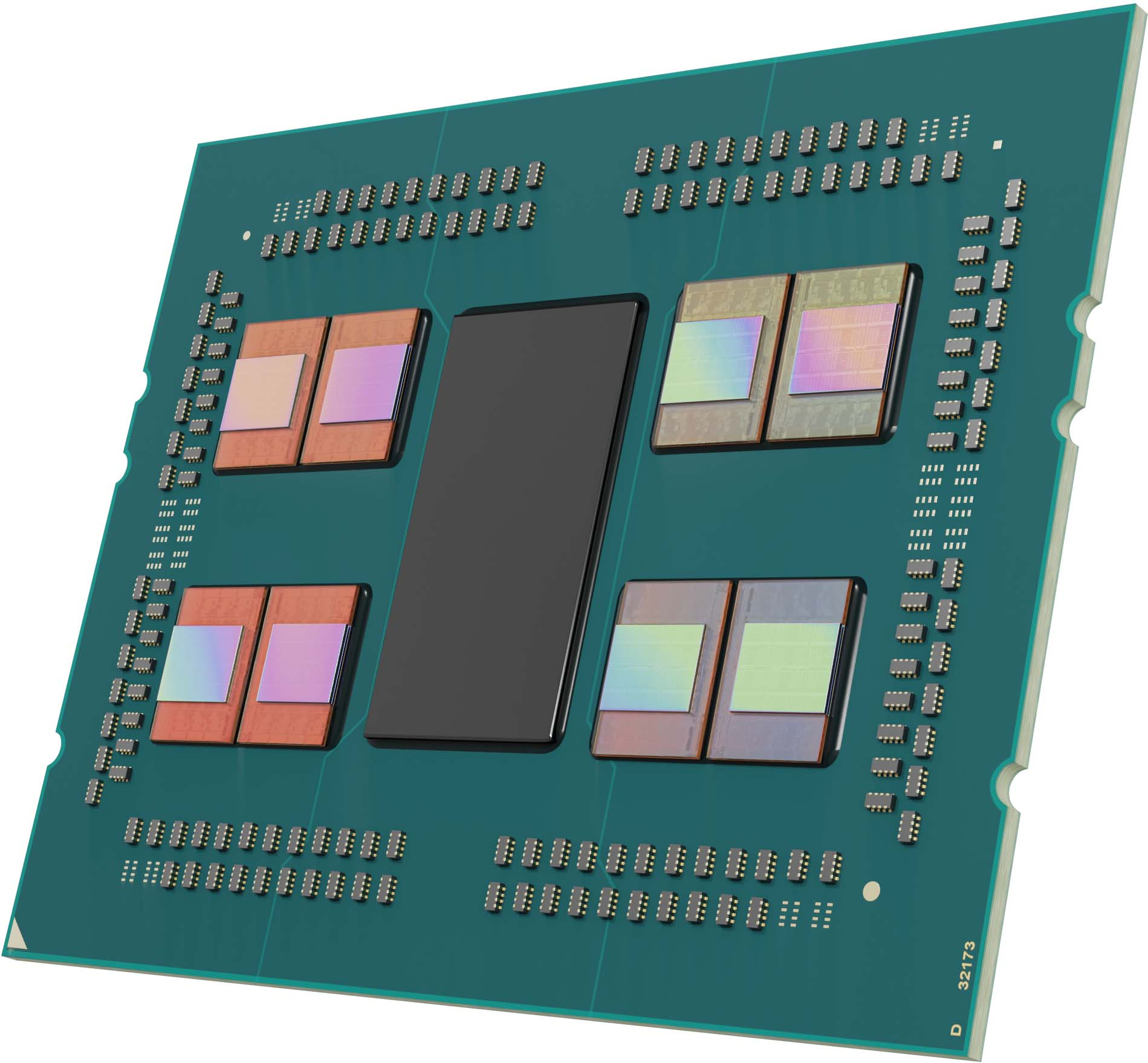

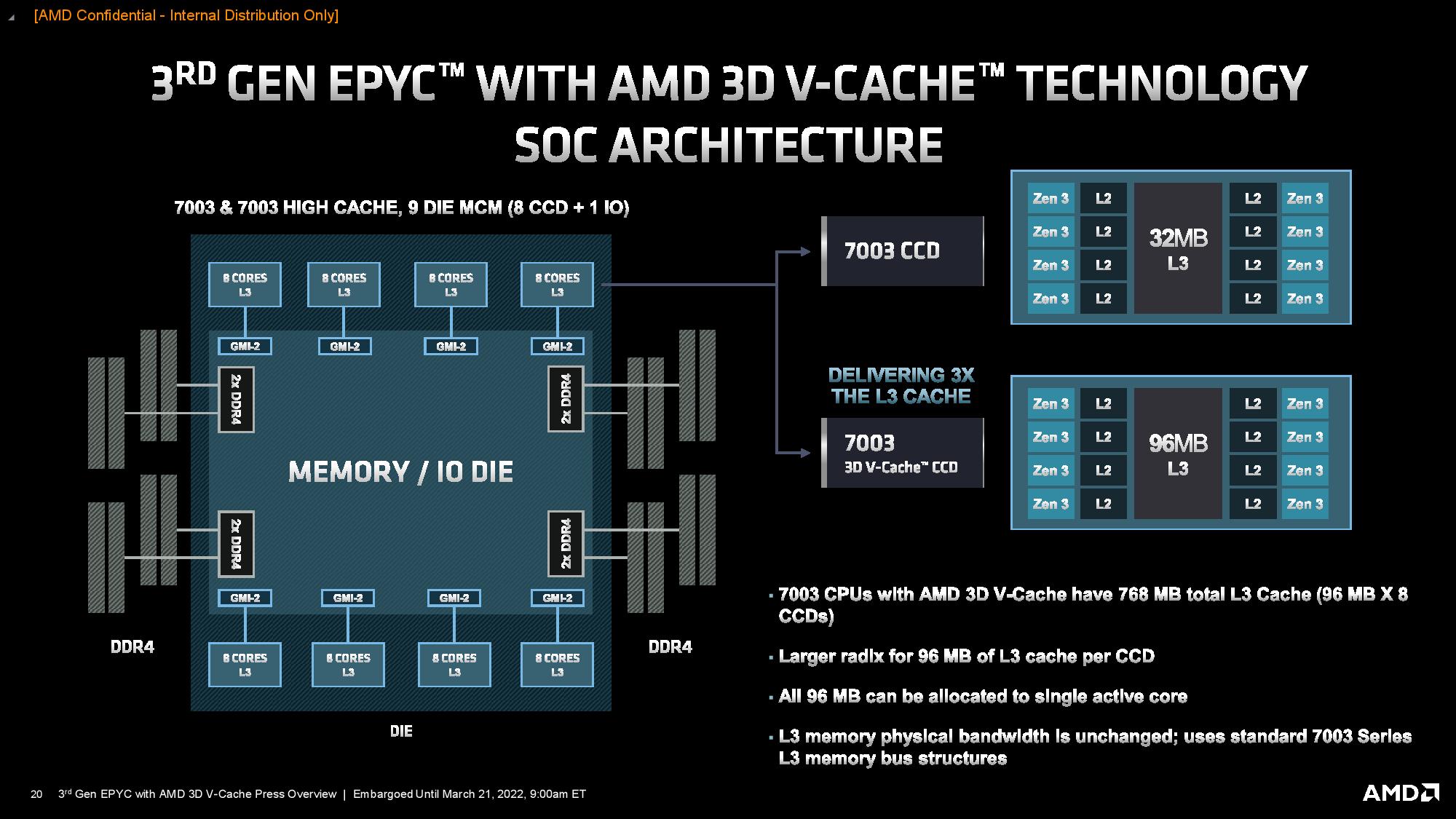

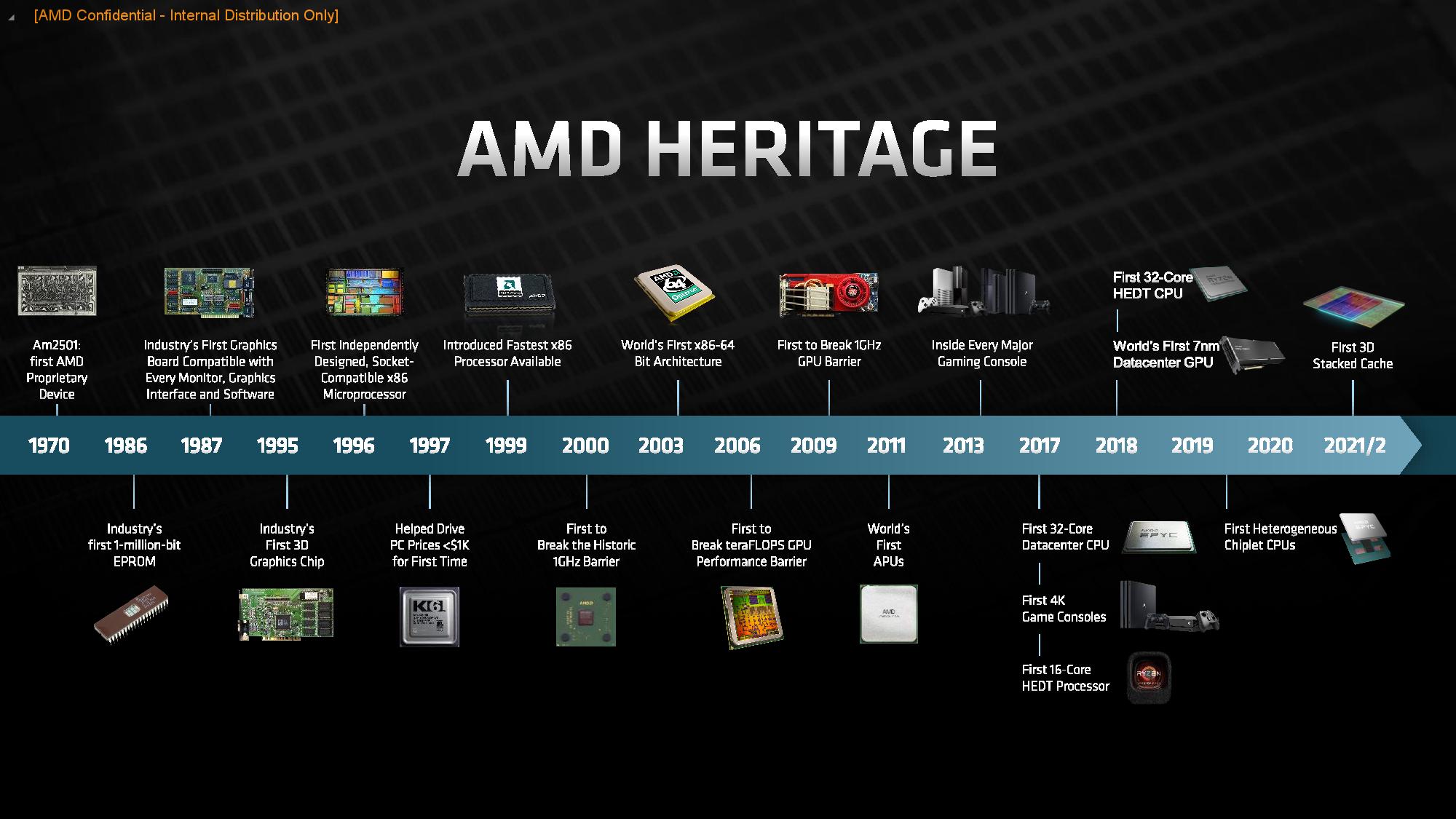

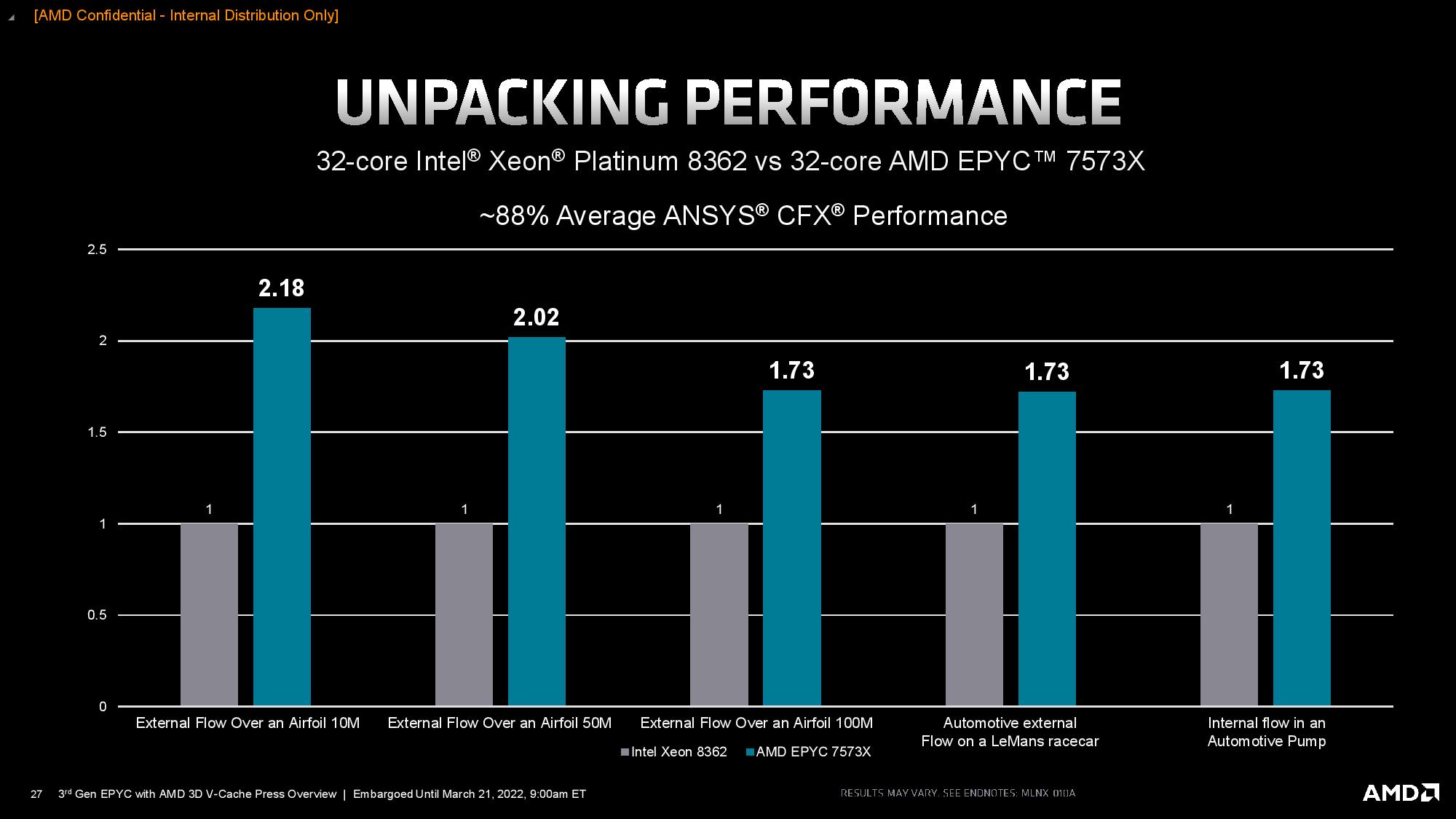

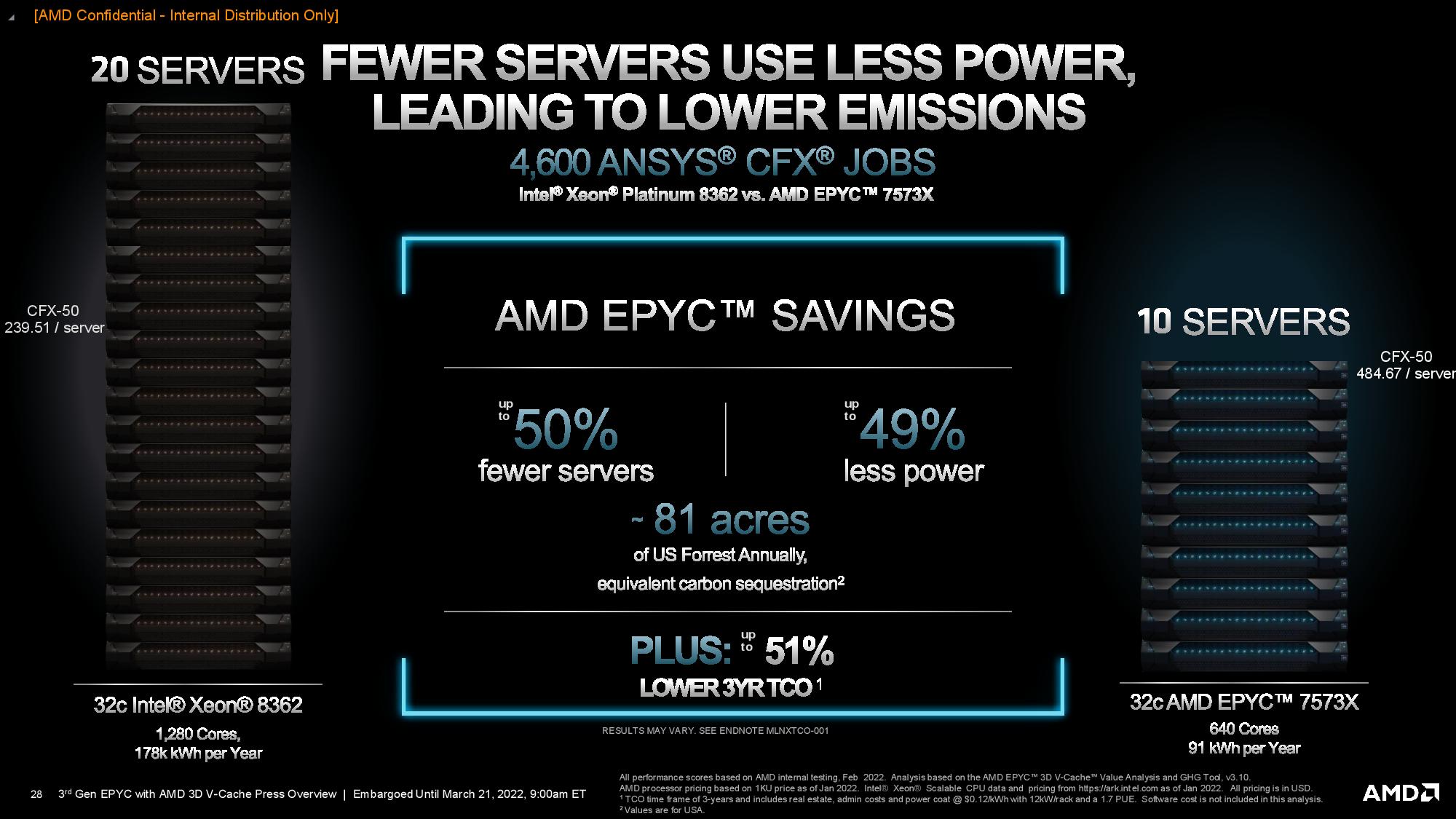

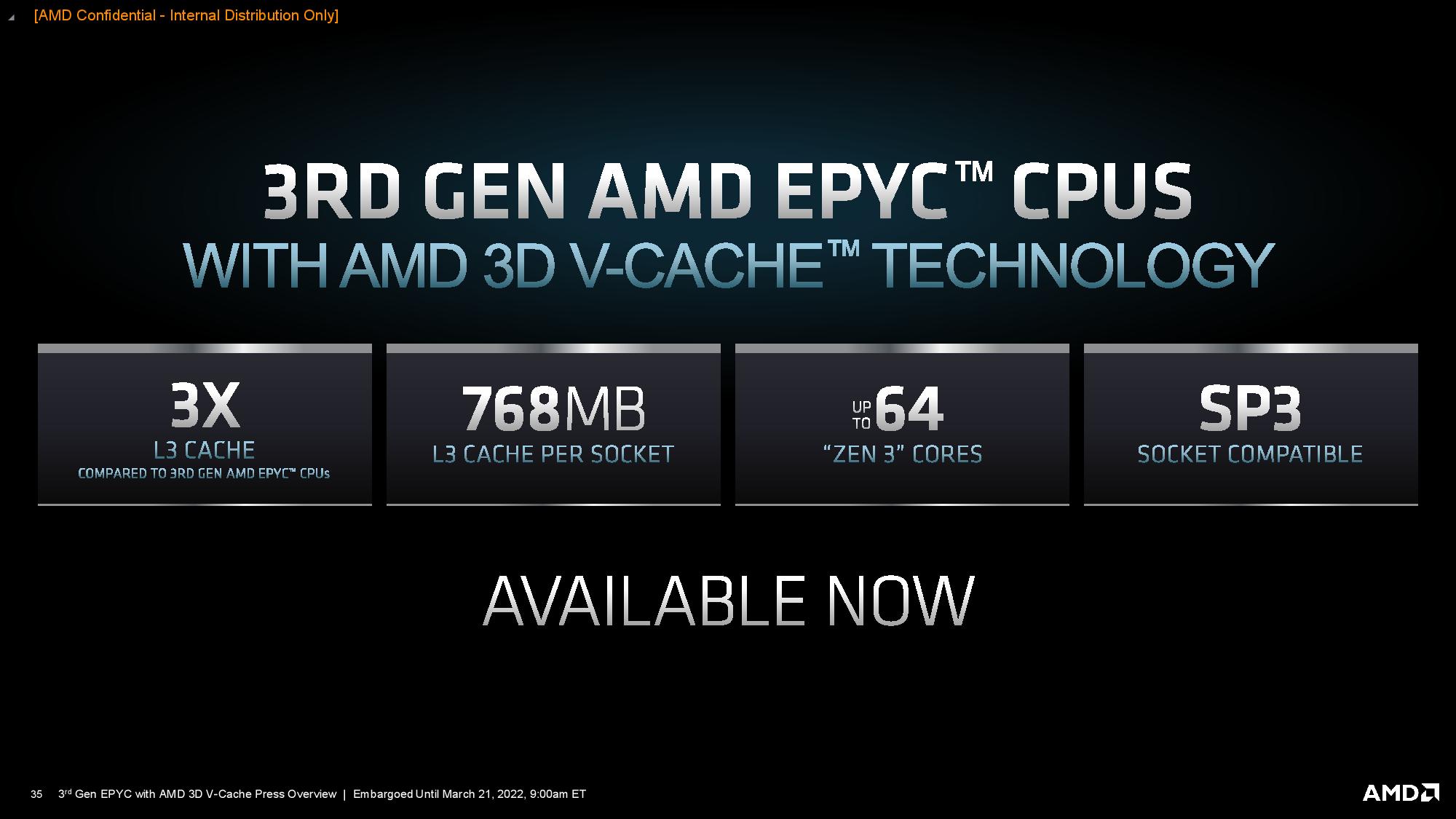

AMD fully unveiled its lineup of third-gen EPYC 7003 "Milan-X" processors with 3D V-Cache today as it announced the general availability of the world's first chips to ship with a 3D-stacked cache, thus tripling the amount of L3 cache per chip. AMD claims the new chips offer up to an 88% performance improvement in select technical computing workloads, headlined by the $8,800 64-core 128-thread EPYC 7773X that comes with a once-unthinkable 768MB of L3 cache. That means a two-socket server can now house up to 1.5 gigabytes of L3 cache. AMD also revealed a few new details around its 3D V-Cache technology that is also coming to its consumer-oriented Ryzen 7 5800X3D chips next month.

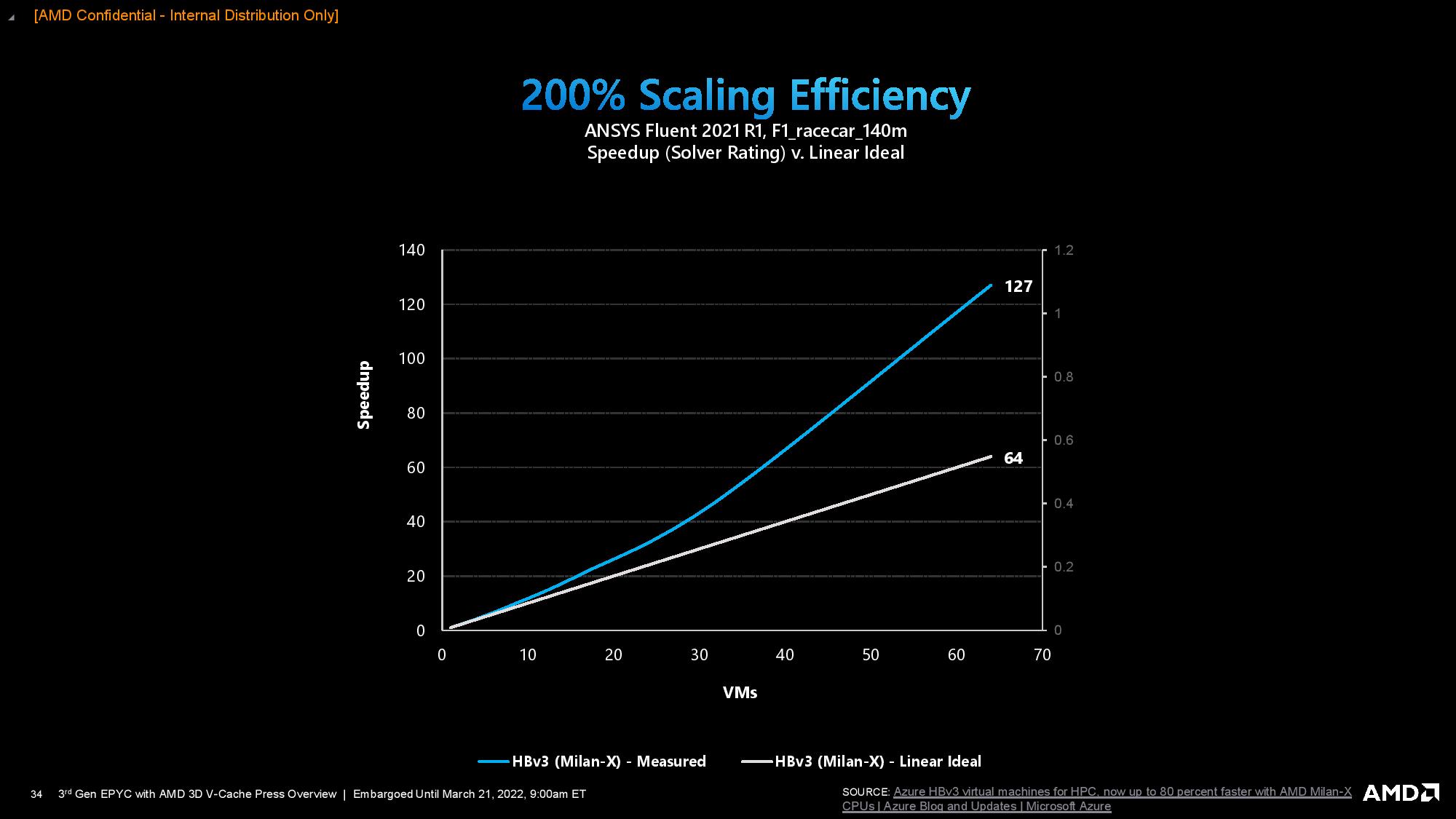

AMD has already shipped Milan-X processors to hyperscalers, OEMs, and system integrators (SIs). Microsoft has also shared a plethora of benchmarks from the Milan-X-powered HBv3 VMs now available on Microsoft Azure, largely confirming AMD's performance claims.

New today is pricing for the chips. As the table below shows, Milan-X carries an upcharge ranging from $910 (+11.5%) for the 64-core EPYC 7773X to $2,620 (+167%) for the 16-core EPYC 7373X, at least compared to the standard general-purpose models. However, Milan-X targets the high-performance segment, so the frequency-optimized "F" series Milan chips are better comparables. Here we see that Milan-X is roughly 20% more expensive than those parts.
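As a quick check on those percentages, the short Python sketch below recomputes each Milan-X part's upcharge from the 1KU prices in the specification table below. Pairing each X chip with a general-purpose and an F-series model of the same core count is our own framing, and the `premium` helper is purely illustrative.

```python
# Illustrative sketch: recompute Milan-X price premiums from the 1KU prices
# listed in the specification table. The pairings are our own framing.
PRICES_USD = {
    "EPYC 7773X": 8800, "EPYC 7763": 7890,
    "EPYC 7573X": 5950, "EPYC 7543": 3761, "EPYC 75F3": 4860,
    "EPYC 7473X": 3900, "EPYC 7443": 2010, "EPYC 74F3": 2900,
    "EPYC 7373X": 4185, "EPYC 7343": 1565, "EPYC 73F3": 3521,
}

# (Milan-X part, general-purpose counterpart, F-series counterpart or None)
PAIRS = [
    ("EPYC 7773X", "EPYC 7763", None),  # the table lists no 64-core F-series part
    ("EPYC 7573X", "EPYC 7543", "EPYC 75F3"),
    ("EPYC 7473X", "EPYC 7443", "EPYC 74F3"),
    ("EPYC 7373X", "EPYC 7343", "EPYC 73F3"),
]


def premium(x_part: str, other: str) -> tuple[int, float]:
    """Return (dollar upcharge, percent upcharge) of x_part over other."""
    delta = PRICES_USD[x_part] - PRICES_USD[other]
    return delta, 100.0 * delta / PRICES_USD[other]


if __name__ == "__main__":
    for x_part, gp_part, f_part in PAIRS:
        dollars, pct = premium(x_part, gp_part)
        line = f"{x_part}: +${dollars:,} (+{pct:.1f}%) vs {gp_part}"
        if f_part is not None:
            f_dollars, f_pct = premium(x_part, f_part)
            line += f", +${f_dollars:,} (+{f_pct:.1f}%) vs {f_part}"
        print(line)
```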

Intel doesn't have any directly comparable Xeon models, but that will change when the Sapphire Rapids chips with HBM2E arrive later this year. Intel claims those chips will be twice as fast as Milan-X in some workloads. Naturally, we'll have to see how that plays out in our labs.

AMD EPYC 7003 Milan-X Specifications and Pricing

| Processor | Price (1KU) | Cores/Threads | Base/Boost Clock (GHz) | L3 Cache (L3 + 3D V-Cache) | TDP | cTDP |
| --- | --- | --- | --- | --- | --- | --- |
| EPYC 7773X | $8,800 | 64 / 128 | 2.2 / 3.5 | 768MB | 280W | 225-280W |
| EPYC 7763 | $7,890 | 64 / 128 | 2.45 / 3.5 | 256MB | 280W | 225-280W |
| EPYC 7573X | $5,950 | 32 / 64 | 2.8 / 3.6 | 768MB | 280W | 225-280W |
| EPYC 7543 | $3,761 | 32 / 64 | 2.8 / 3.7 | 256MB | 225W | 225-240W |
| EPYC 75F3 | $4,860 | 32 / 64 | 2.95 / 4.0 | 256MB | 280W | 225-280W |
| EPYC 7473X | $3,900 | 24 / 48 | 2.8 / 3.7 | 768MB | 240W | 225-280W |
| EPYC 7443 | $2,010 | 24 / 48 | 2.85 / 4.0 | 128MB | 200W | 165-200W |
| EPYC 74F3 | $2,900 | 24 / 48 | 3.2 / 4.0 | 256MB | 240W | 225-240W |
| EPYC 7373X | $4,185 | 16 / 32 | 3.05 / 3.8 | 768MB | 240W | 225-280W |
| EPYC 7343 | $1,565 | 16 / 32 | 3.2 / 3.9 | 128MB | 190W | 165-200W |
| EPYC 73F3 | $3,521 | 16 / 32 | 3.5 / 4.0 | 256MB | 240W | 225-240W |

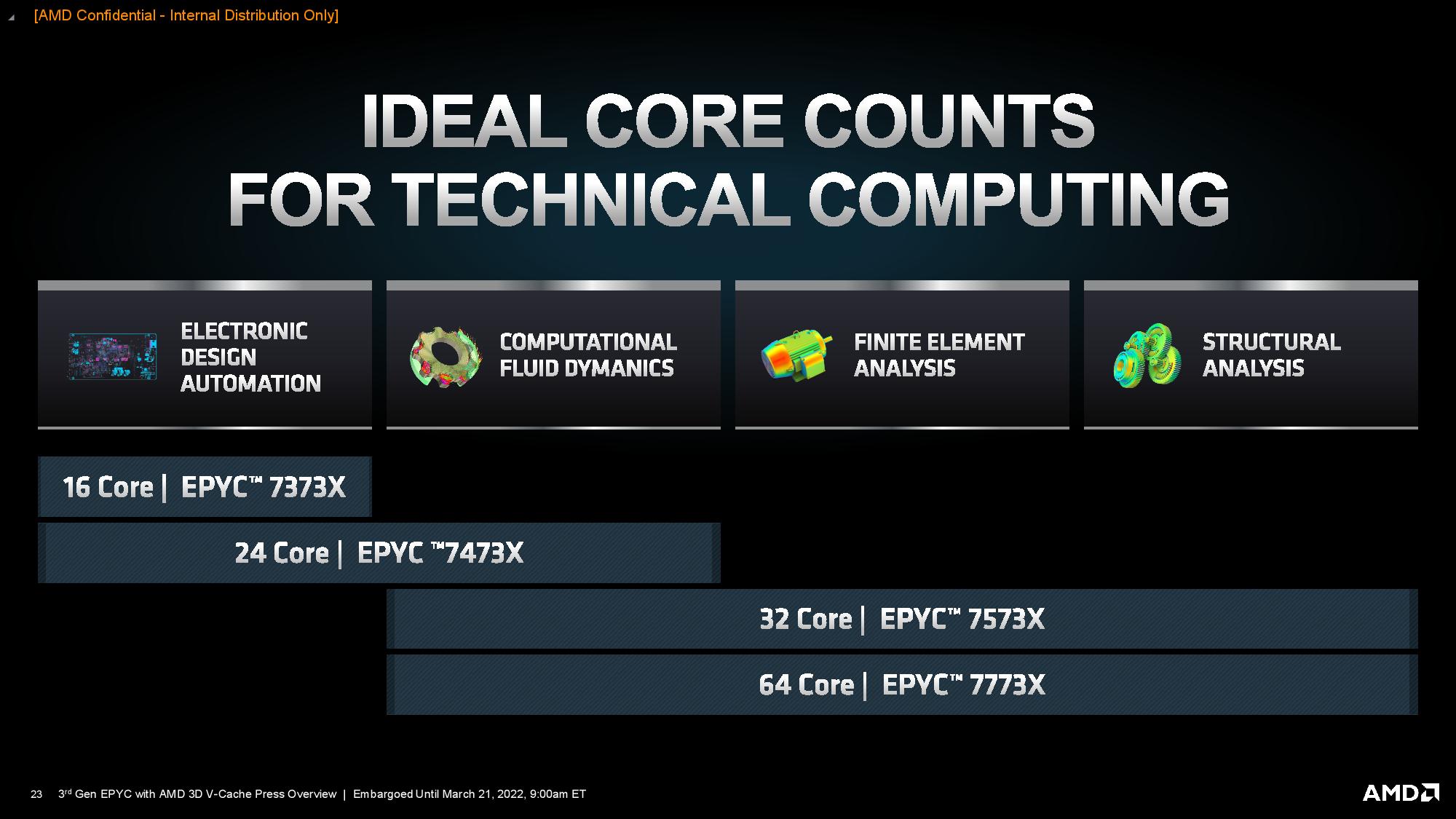

After the requisite BIOS update, these chips drop into existing servers with the SP3 socket. The 3D V-Cache technology can deliver stunning performance improvements in some workloads, but those gains don't apply to every type of application. As such, AMD's limited selection of four chips, denoted by an 'X' suffix, represents a group of carefully selected core counts to satisfy particular technical workload requirements.

Workloads that benefit the most from 3D V-Cache tend to be sensitive to L3 cache capacity, with high rates of L3 capacity misses (the working set is too large to fit in the cache) or L3 conflict misses (too many frequently used lines map to the same cache set and evict one another). Workloads that are least likely to benefit tend to already have high cache hit rates, have high rates of L3 coherency misses (data is frequently shared between cores), or use cached data only once rather than repeatedly.
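To make the capacity-miss and conflict-miss distinction concrete, here is a toy Python cache simulator. It is a minimal sketch, assuming a set-associative cache with pure LRU replacement and cache lines addressed by small integers; the class and function names are hypothetical, and real L3 caches differ in size, set indexing, and replacement policy.

```python
from collections import OrderedDict


class ToyLRUCache:
    """Hypothetical set-associative cache with LRU replacement (illustration only).

    Addresses are cache-line numbers; line % num_sets selects the set.
    """

    def __init__(self, num_sets: int, ways: int) -> None:
        self.num_sets = num_sets
        self.ways = ways
        self.sets = [OrderedDict() for _ in range(num_sets)]
        self.misses = 0

    def access(self, line: int) -> None:
        lru_set = self.sets[line % self.num_sets]
        if line in lru_set:
            lru_set.move_to_end(line)  # hit: mark as most recently used
            return
        self.misses += 1
        if len(lru_set) >= self.ways:
            lru_set.popitem(last=False)  # set full: evict the least recently used line
        lru_set[line] = True


def miss_rate(num_sets: int, ways: int, trace: list[int]) -> float:
    cache = ToyLRUCache(num_sets, ways)
    for line in trace:
        cache.access(line)
    return cache.misses / len(trace)


if __name__ == "__main__":
    # Capacity misses: a 64-line working set swept ten times.
    sweep = list(range(64)) * 10
    print(miss_rate(4, 8, sweep))   # 32-line cache: every access misses -> 1.0
    print(miss_rate(4, 24, sweep))  # 96-line cache (3x): only first-touch misses -> 0.1

    # Conflict misses: three lines that all map to set 0.
    thrash = [0, 4, 8] * 10
    print(miss_rate(4, 2, thrash))  # 8-line cache, but only 2 ways per set -> 1.0
    print(miss_rate(4, 3, thrash))  # one more way per set -> 0.1
```

In the conflict case, the 2-way cache has room for eight lines yet misses on every access, because all three lines compete for the same set; tripling capacity in the sweep case removes every miss after the first pass.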

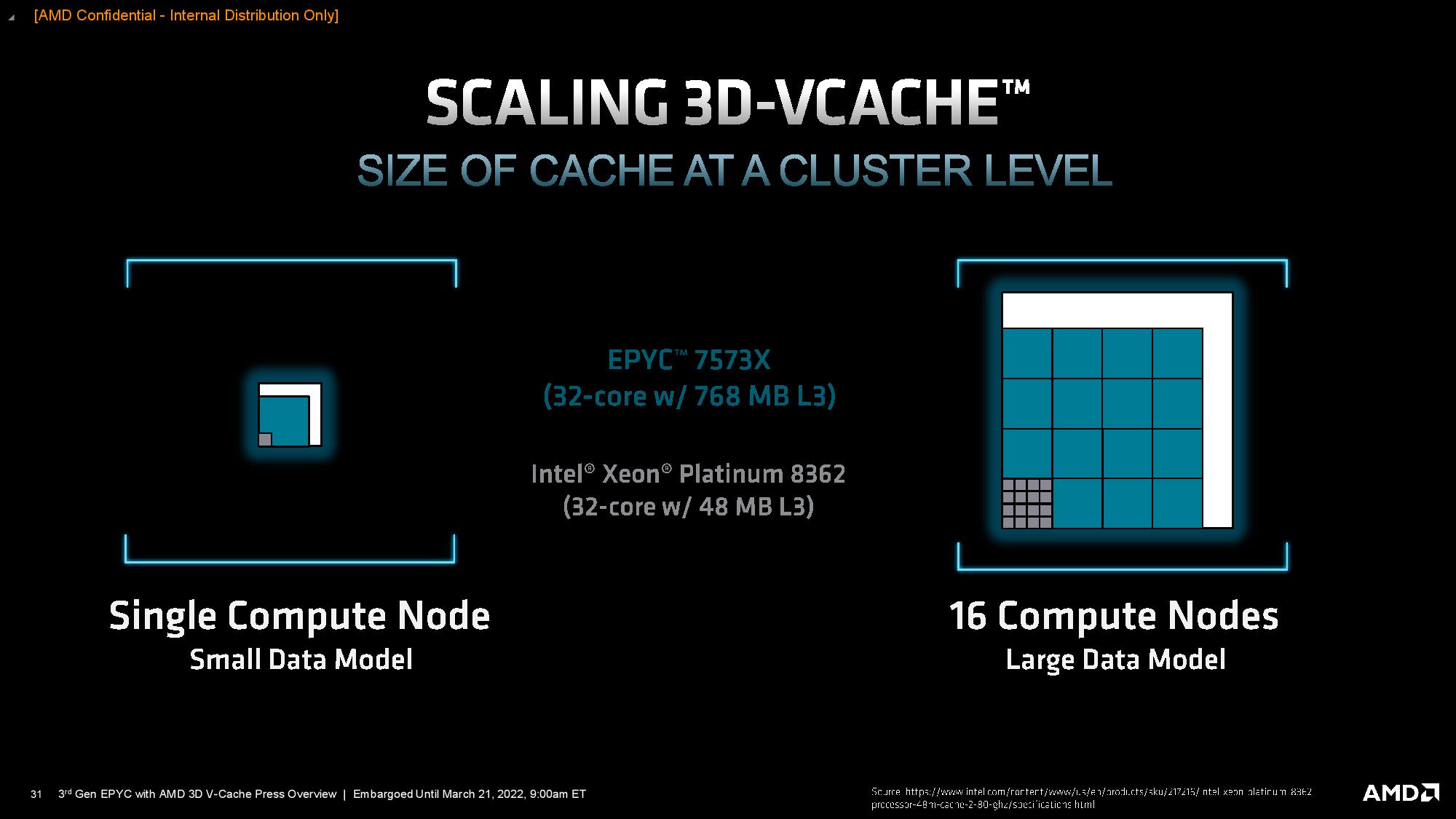

All four Milan-X models come with eight active core compute dies (CCDs) to deliver the full 768MB of L3 cache, even on the smaller SKUs. This makes sense given that each core has access to the full 96MB of L3 cache on its CCD, so even the lowest-end 16-core model can fully leverage the cache for applications that don't rely on heavy parallelization.
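The capacity math behind those figures is simple enough to spell out. The Python sketch below adds the 32MB of on-die L3 and 64MB of stacked SRAM per CCD, multiplies by eight CCDs, and shows how many cores share each CCD's 96MB on each Milan-X SKU. The even split of cores across CCDs is our assumption, and the per-core "share" is just capacity divided by cores on that CCD, since any single core can still use all 96MB.

```python
# Illustrative arithmetic for Milan-X L3 capacity (sizes in MB).
BASE_L3_PER_CCD = 32   # on-die L3 in each Zen 3 CCD
VCACHE_PER_CCD = 64    # stacked 3D V-Cache SRAM on each CCD
CCDS_PER_CHIP = 8      # every Milan-X SKU keeps all eight CCDs active

L3_PER_CCD = BASE_L3_PER_CCD + VCACHE_PER_CCD   # 96 MB
L3_PER_CHIP = CCDS_PER_CHIP * L3_PER_CCD        # 768 MB
L3_TWO_SOCKET = 2 * L3_PER_CHIP                 # 1,536 MB, about 1.5 GB


def l3_share_per_core(cores: int) -> float:
    """MB of CCD-local L3 per core, assuming cores are spread evenly over the CCDs."""
    cores_per_ccd = cores // CCDS_PER_CHIP
    return L3_PER_CCD / cores_per_ccd


if __name__ == "__main__":
    print(L3_PER_CCD, L3_PER_CHIP, L3_TWO_SOCKET)  # 96 768 1536
    for cores in (16, 24, 32, 64):
        print(f"{cores} cores: {cores // CCDS_PER_CHIP} per CCD, "
              f"{l3_share_per_core(cores):.0f} MB of L3 each")
```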

We expected some clock speed tradeoffs due to the increased cache and the thermal/power challenges associated with this type of design, but the impact is quite muted compared to the standard general-purpose EPYC models. For instance, the EPYC 7773X has a 250 MHz lower base clock than the 7763 but an identical 3.5 GHz boost. The other SKUs see 50 to 300 MHz declines in base and/or boost clocks, but these declines aren't as pronounced as some feared.

The clock rate adjustments are more noticeable when we zoom out to compare to the frequency-optimized F-series parts, with up to a 450 MHz decline in base frequency and a 400 MHz reduction in boost clocks. However, these adjustments vary by model. AMD's goal here is to deliver increased performance via the larger L3 caches, thus offsetting the frequency adjustments and offering more overall performance for workloads that benefit. AMD also wanted to keep the processors within the same TDP envelope as other EPYC 7003 chips to ensure compatibility with existing EPYC systems.
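The F-series clock differences can be tabulated directly from the specification table. This Python sketch is illustrative only; the `clock_delta_mhz` helper and the pairing of each X part with a same-core-count F-series model are our own choices.

```python
# Base/boost clocks in GHz, copied from the specification table.
CLOCKS_GHZ = {
    "EPYC 7573X": (2.8, 3.6), "EPYC 75F3": (2.95, 4.0),
    "EPYC 7473X": (2.8, 3.7), "EPYC 74F3": (3.2, 4.0),
    "EPYC 7373X": (3.05, 3.8), "EPYC 73F3": (3.5, 4.0),
}


def clock_delta_mhz(x_part: str, other: str) -> tuple[int, int]:
    """Return (base, boost) clock differences in MHz; negative means x_part is slower."""
    (x_base, x_boost), (o_base, o_boost) = CLOCKS_GHZ[x_part], CLOCKS_GHZ[other]
    # round() absorbs binary floating-point error, e.g. 2.8 - 3.2 is not exactly -0.4
    return round((x_base - o_base) * 1000), round((x_boost - o_boost) * 1000)


if __name__ == "__main__":
    for x_part, f_part in [("EPYC 7573X", "EPYC 75F3"),
                           ("EPYC 7473X", "EPYC 74F3"),
                           ("EPYC 7373X", "EPYC 73F3")]:
        base, boost = clock_delta_mhz(x_part, f_part)
        print(f"{x_part} vs {f_part}: base {base:+d} MHz, boost {boost:+d} MHz")
```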

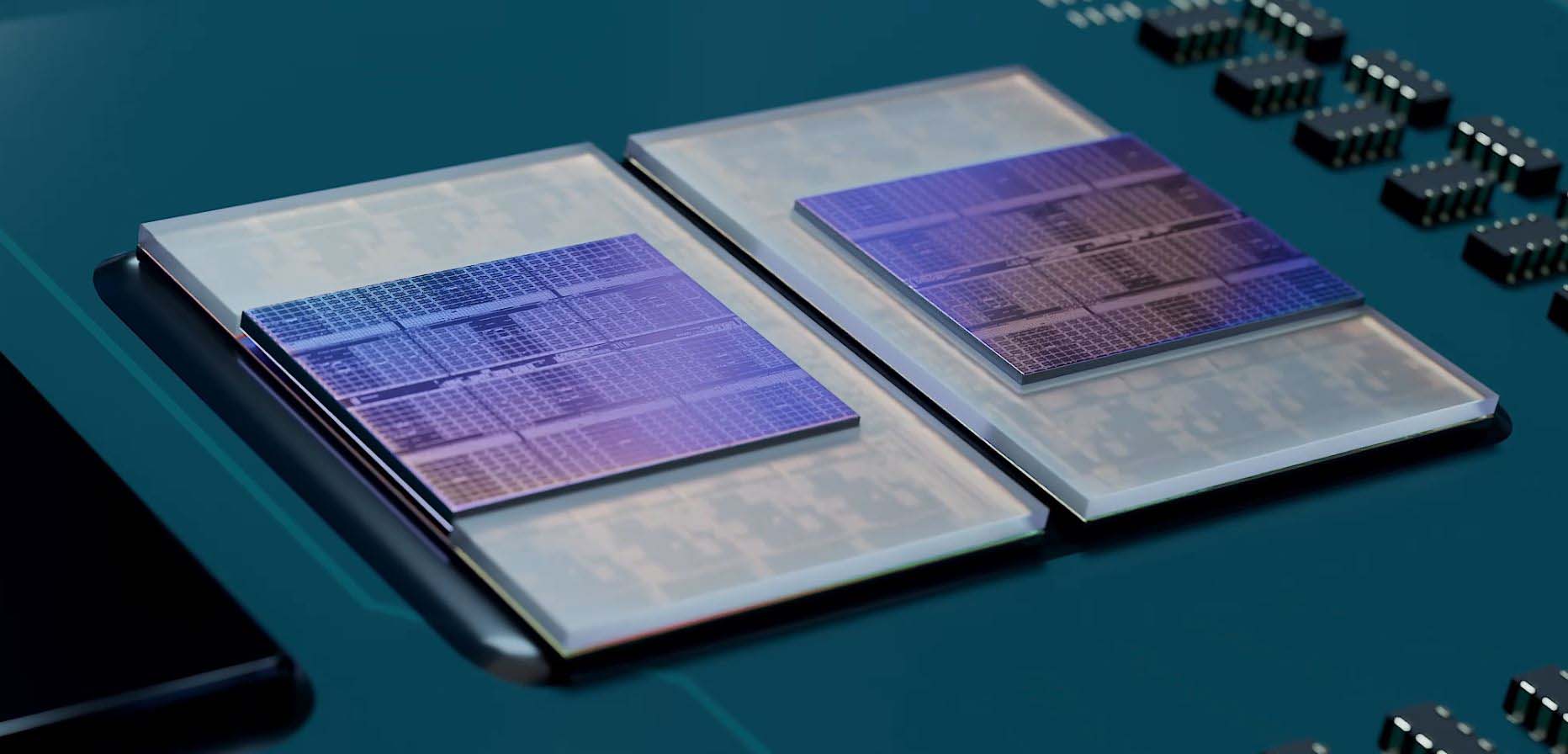

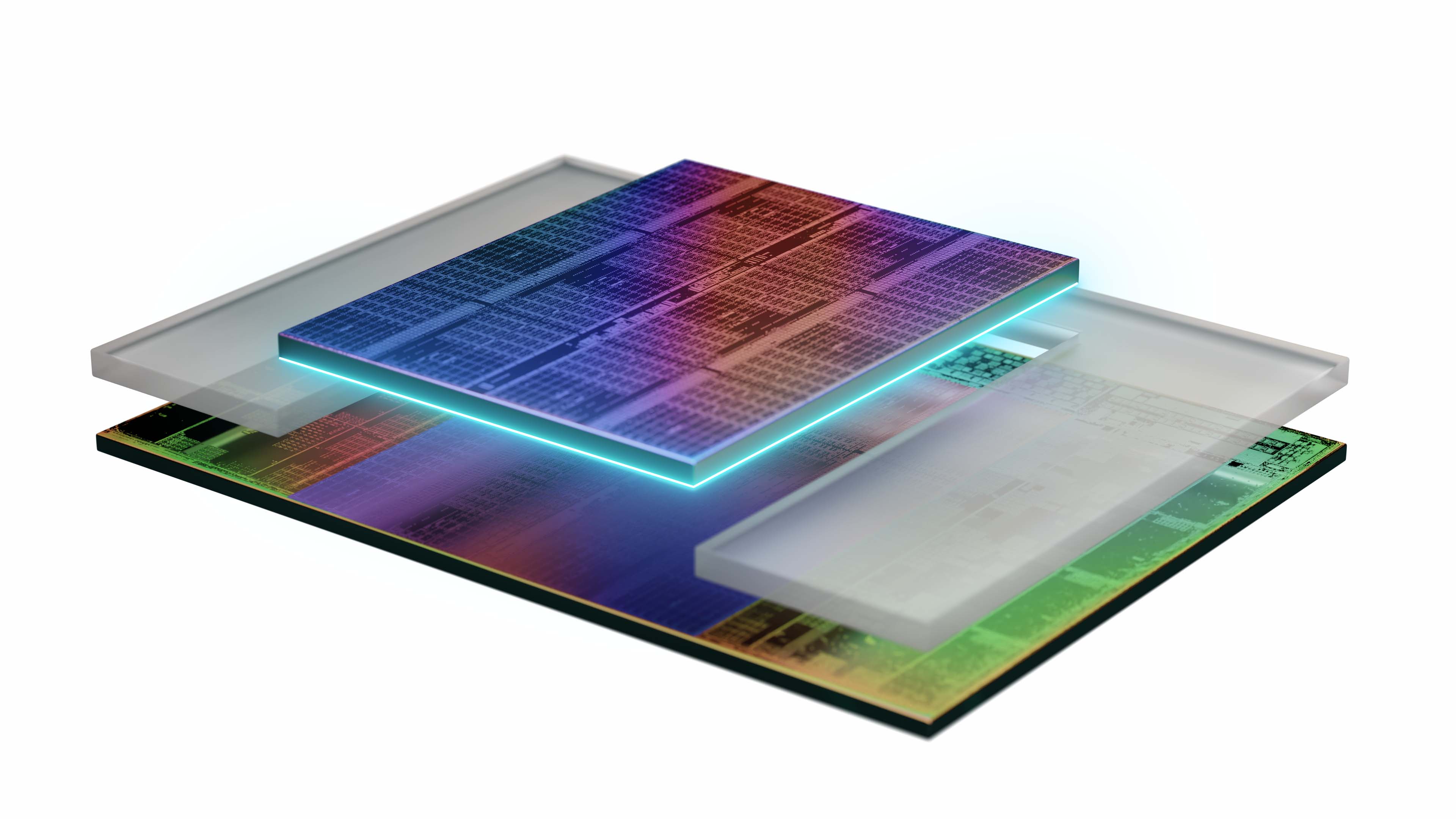

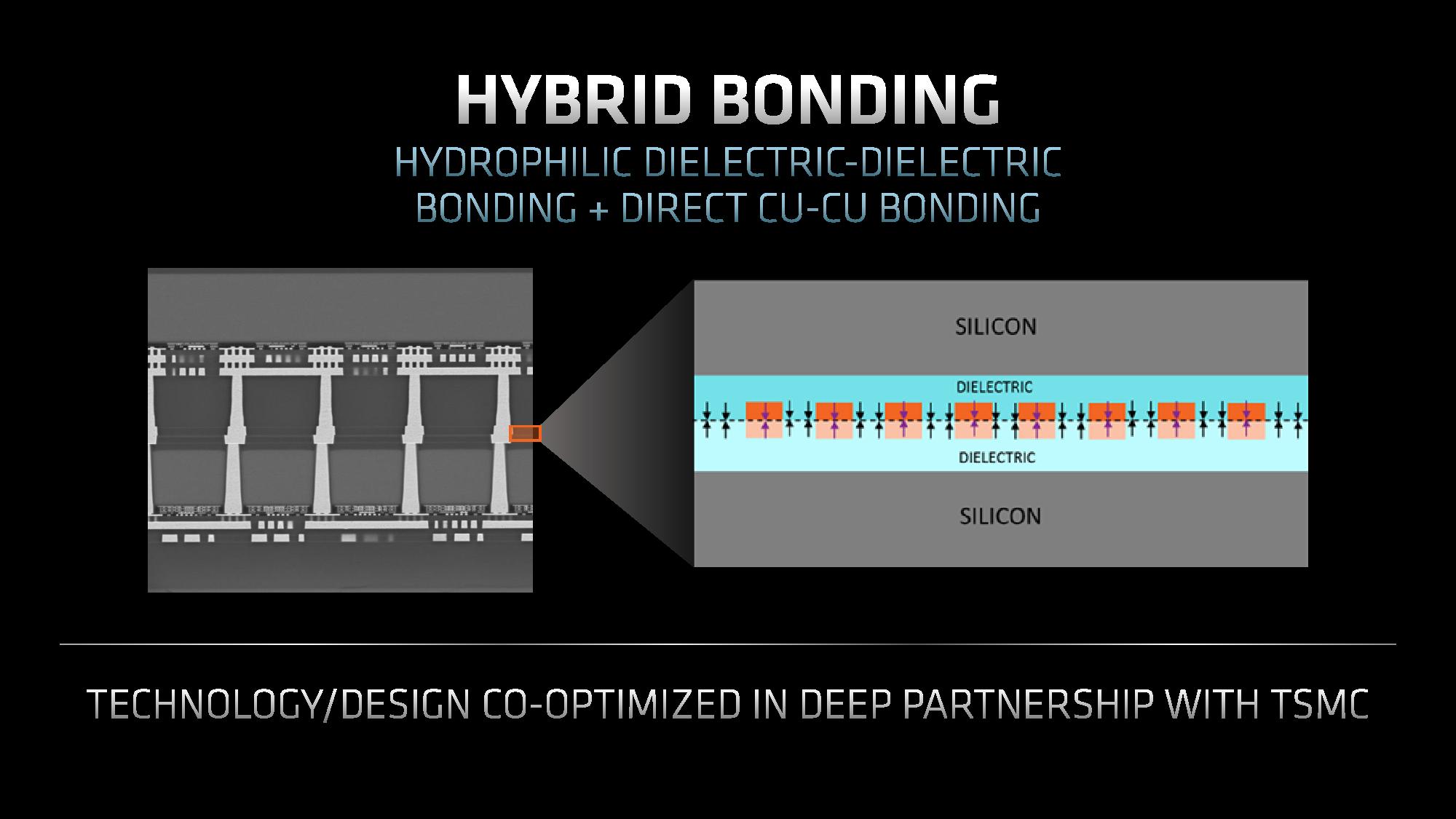

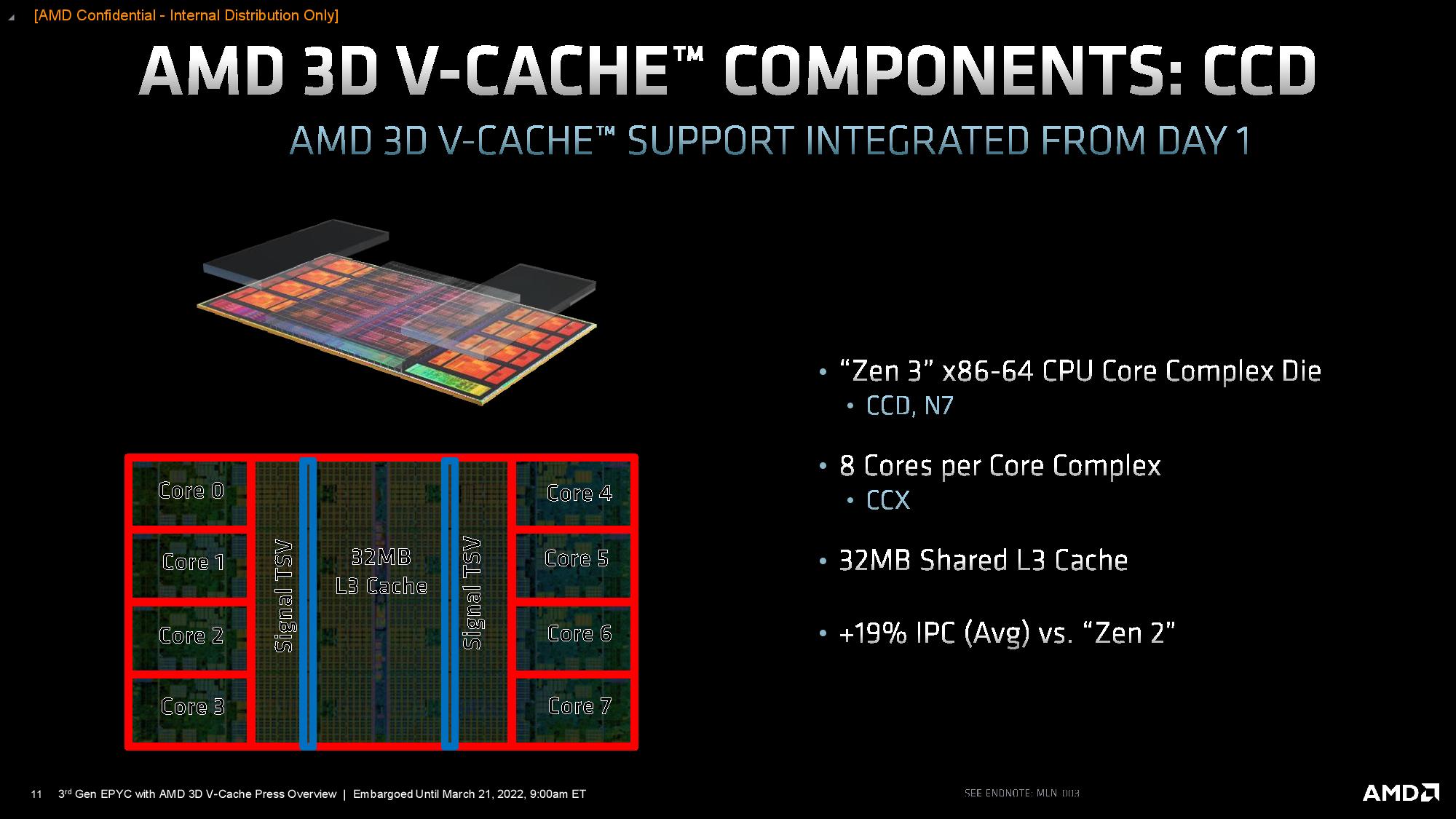

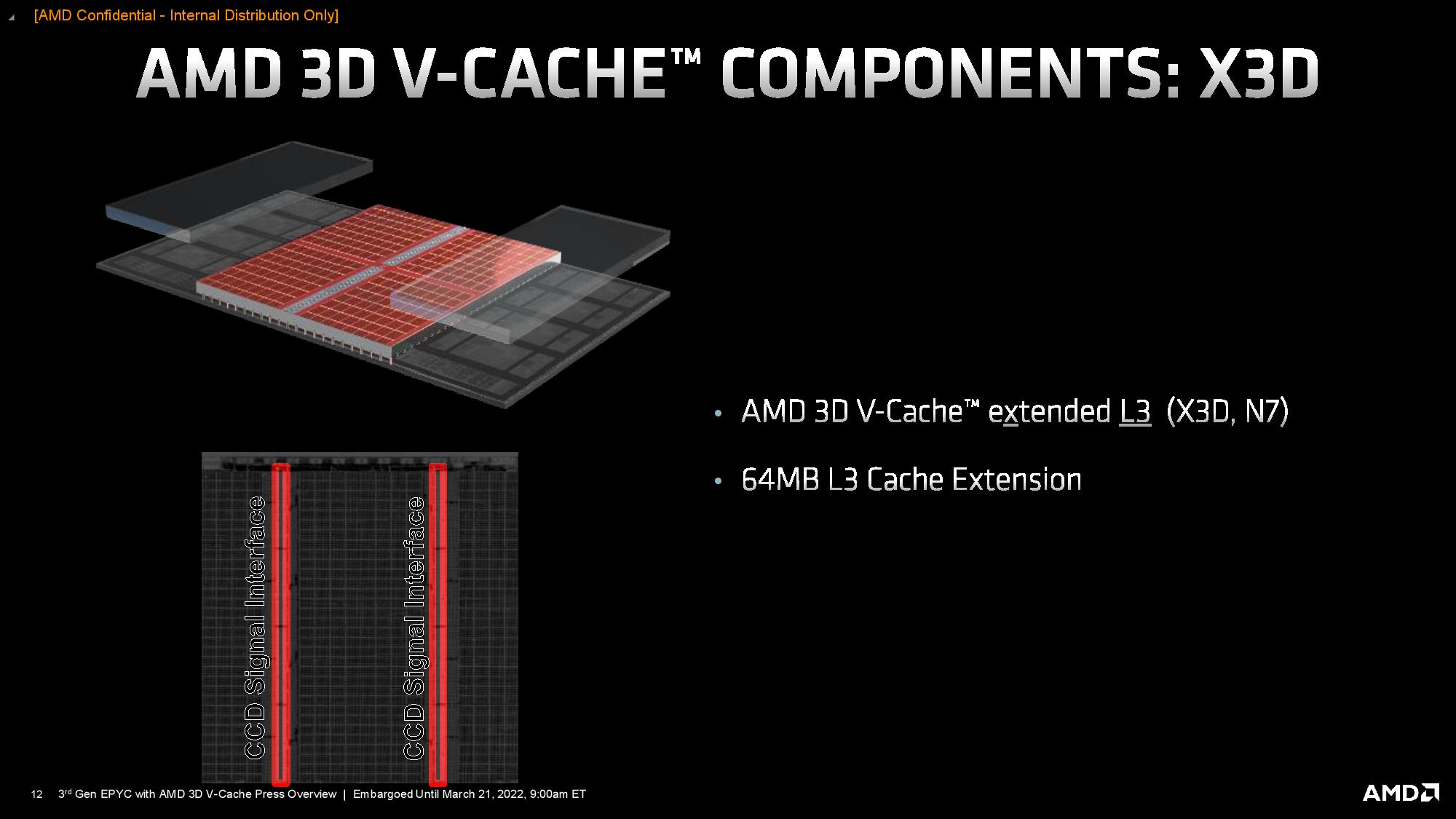

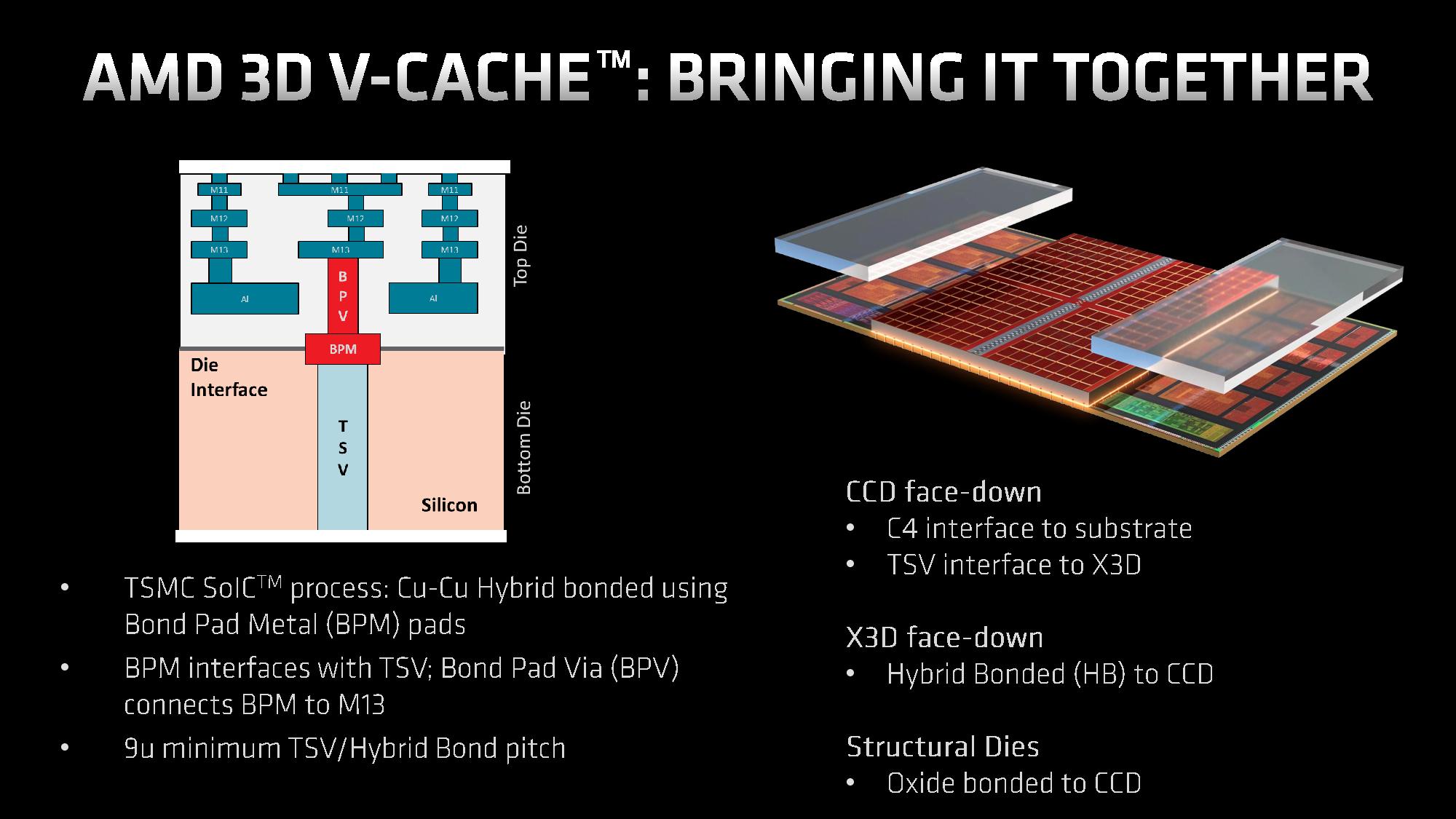

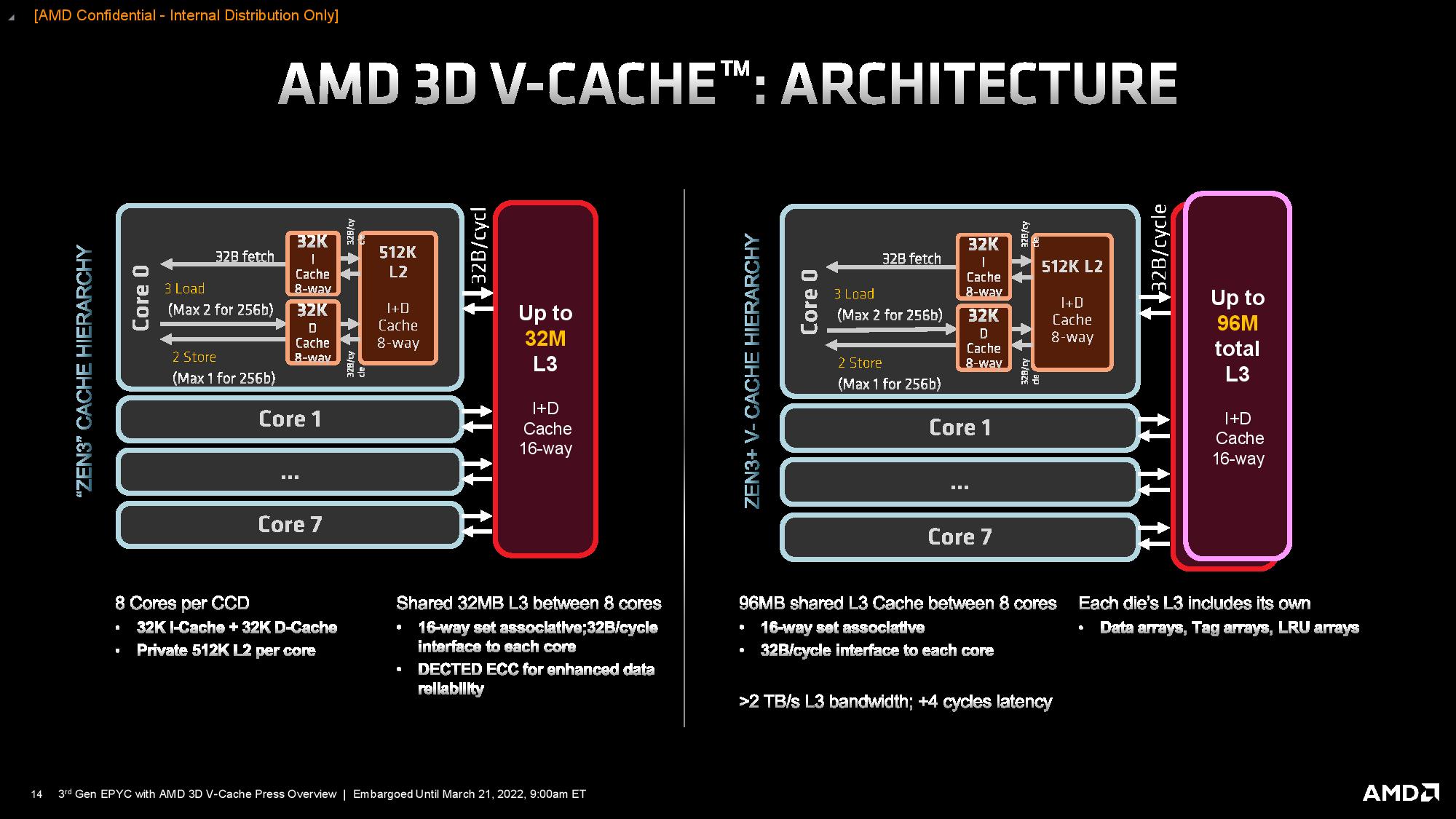

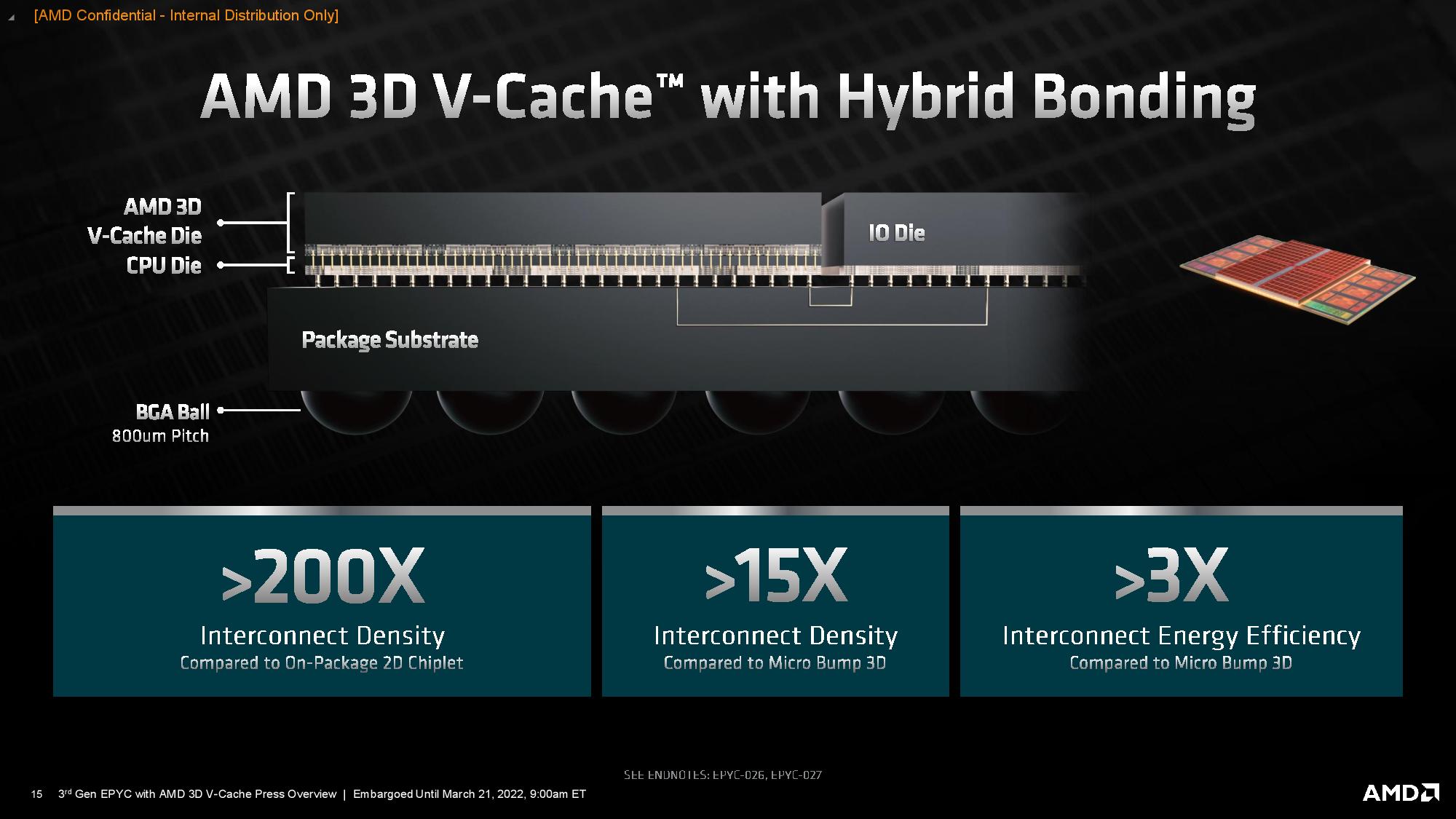

As you can see throughout the above album, AMD also shared more details about its 3D V-Cache tech. As a quick refresher, 3D V-Cache uses a novel hybrid bonding technique to fuse an additional 64MB of 7nm SRAM cache vertically atop the Zen 3 compute chiplet, thus tripling the amount of L3 cache per die. We've covered the technology in more depth in our earlier deep dives.

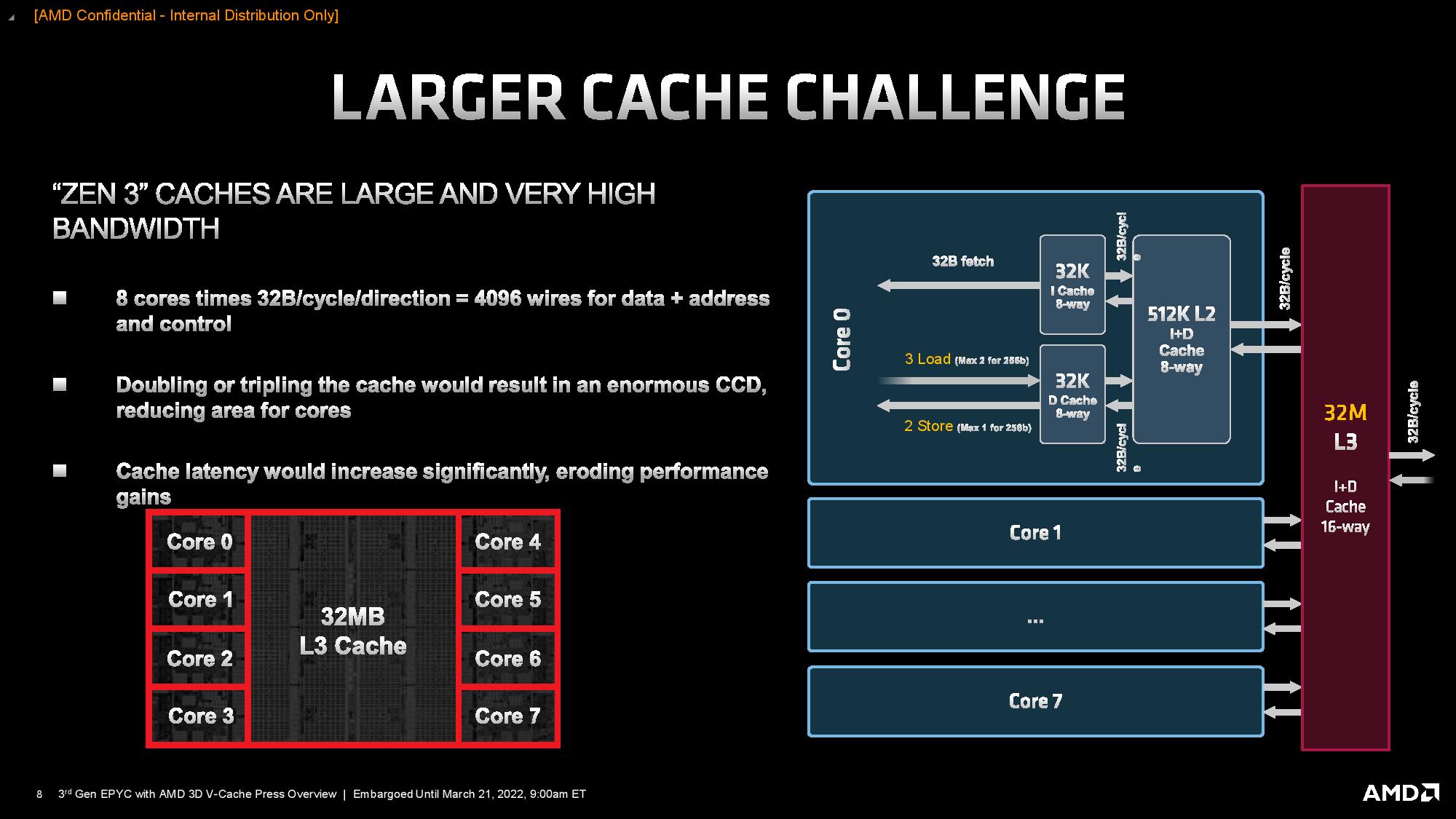

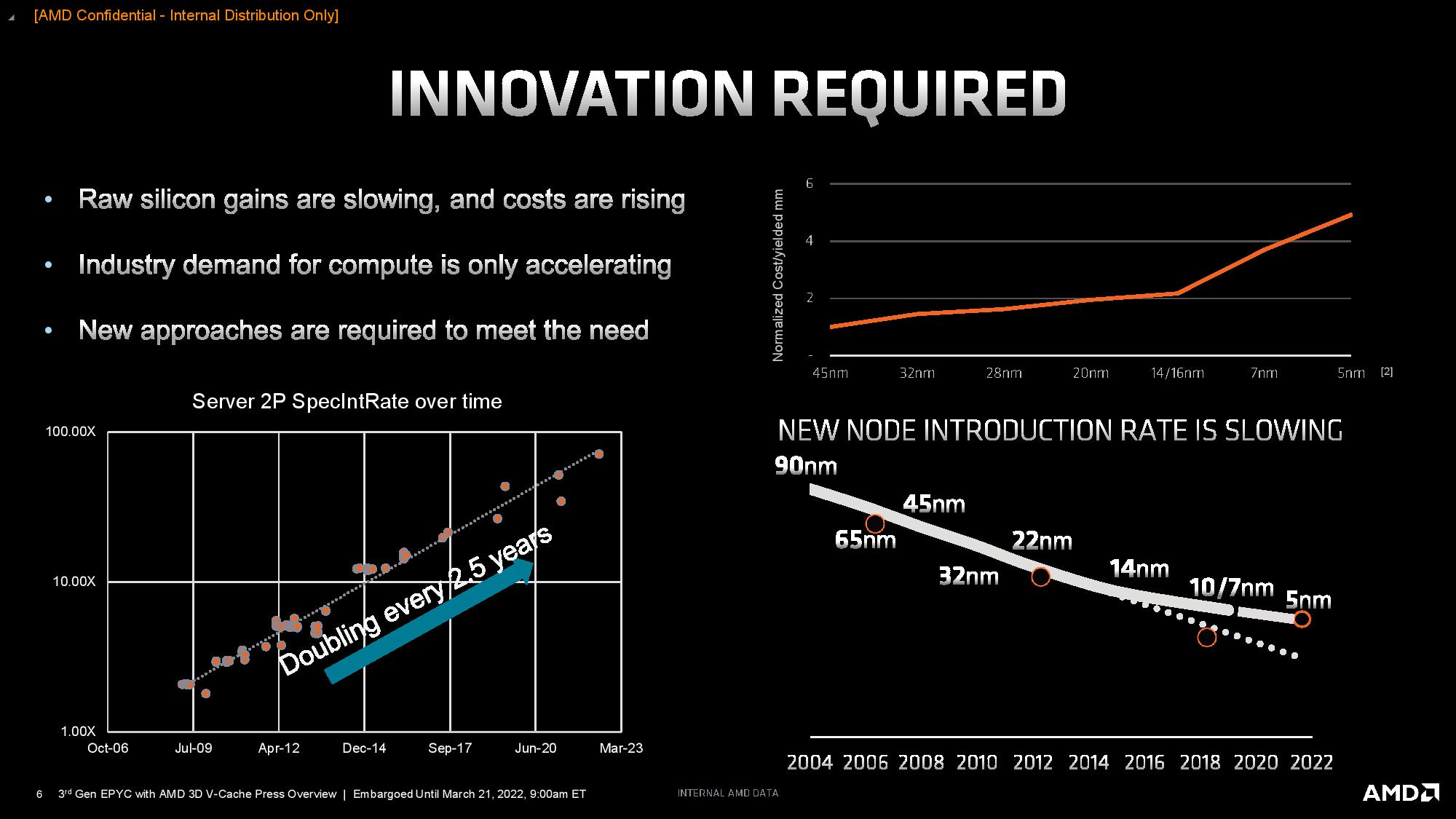

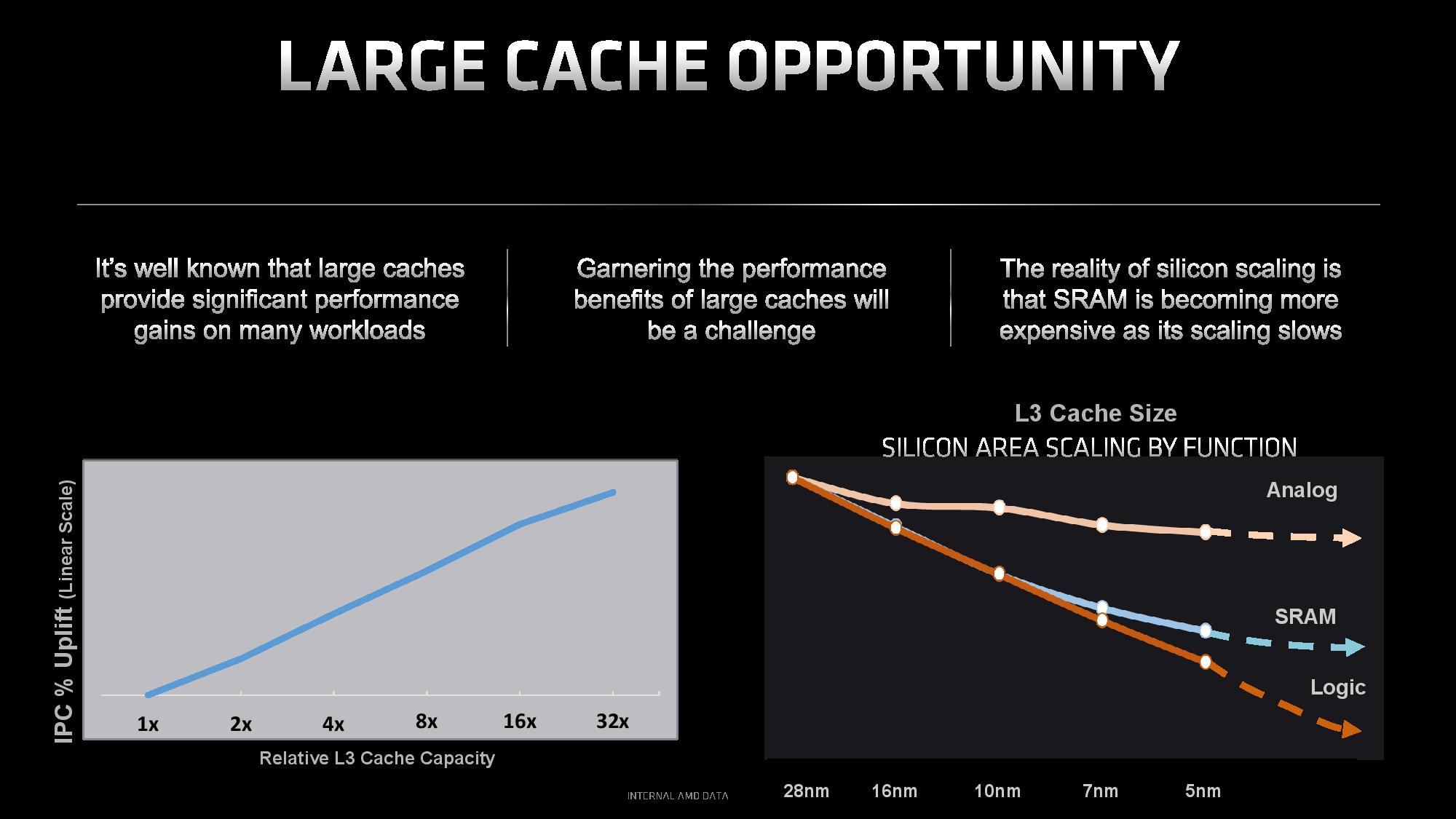

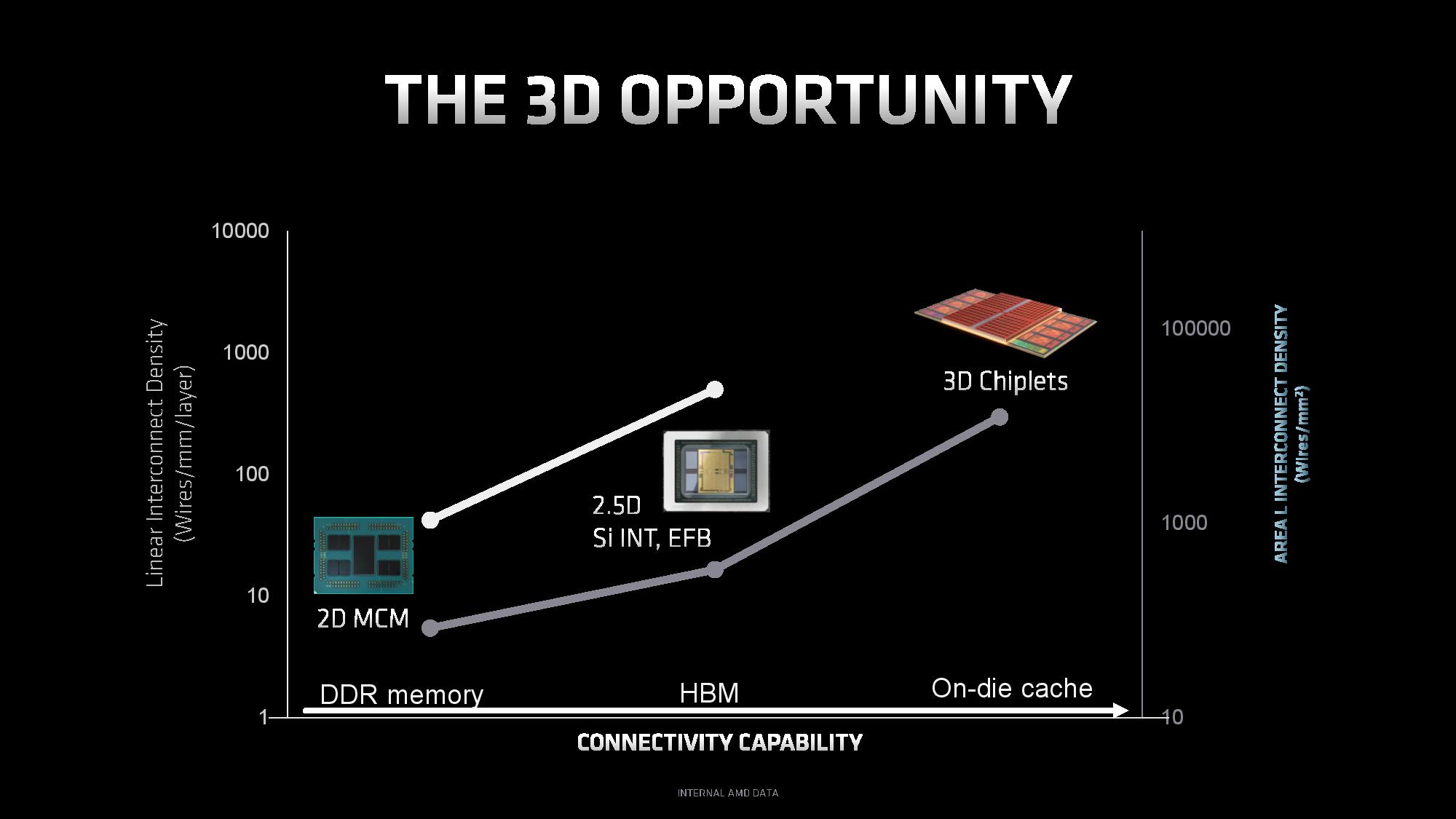

Several factors influenced AMD's decision to use 3D-stacked SRAM, but key among them is that SRAM density isn't scaling as fast as logic density. As a result, caches now consume a higher percentage of die area than before, but without delivering meaningful capacity increases. Furthermore, expanding the cache laterally would incur higher latency due to longer wire lengths and eat into the available die area that AMD could use for cores. Additionally, adding another SRAM chiplet in a 2D layout isn't feasible due to the latency and bandwidth impact.

To address those issues, AMD stacks the additional SRAM directly on top of the center of the compute die, where the existing L3 resides. This L3-on-L3 stacking allows the lower die to deliver power and communicate through two rows of TSV connections that extend upwards into the bottom of the L3 cache chiplet. These connections go vertically into the upper die and fan out, which actually reduces the distance data has to travel, and thus the number of cycles needed for traversal, compared to a standard planar (2D) cache expansion. As a result, the L3 chiplet provides the same 2 TB/s of peak throughput as the on-die L3 cache with a latency penalty of only four cycles.

The L3 cache chiplet spans the same amount of area as the L3 cache on the CCD underneath, but it has twice the capacity. That's partially because the additional L3 cache slice is somewhat 'dumb' — all the control circuitry resides on the base die, which helps reduce the latency overhead. AMD also uses a density-optimized version of 7nm that's specialized for SRAM. The L3 chiplet is also thinner than the base die (13 metal layers).

AMD produces all of its Zen 3 silicon with TSVs, so all of its Zen 3 silicon supports a 3D V-Cache configuration. However, the TSVs aren't exposed unless they're needed. For 3D V-Cache models, AMD slightly thins the base die, both to expose the TSV connections and to maintain the same overall package thickness (Z-height) as the existing models.

The lack of control circuitry in the L3 chiplet also maximizes capacity and allows AMD to selectively 'light up' only the portions of the cache that are being accessed, reducing, and in some cases eliminating, the power overhead of tripling the L3 cache capacity. In addition, the larger cache raises L3 hit rates and so reduces trips to main memory, which relieves bandwidth pressure on main memory, lowers average access latency, and improves application performance on multiple fronts. Fewer trips to main memory also reduce overall power consumption.
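The trade-off between a slightly slower L3 and fewer trips to main memory can be sketched with the textbook average-memory-access-time (AMAT) formula: AMAT = hit time + miss rate × miss penalty. The cycle counts and miss rates below are hypothetical, chosen only to illustrate the shape of the trade-off; they are not AMD figures or measurements. The only number taken from the text is the four-cycle latency penalty.

```python
def amat(hit_cycles: float, miss_rate: float, miss_penalty_cycles: float) -> float:
    """Average memory access time (cycles) = hit time + miss rate * miss penalty."""
    return hit_cycles + miss_rate * miss_penalty_cycles


# Hypothetical inputs for illustration only (not AMD data).
L3_HIT_CYCLES = 50          # assumed baseline L3 hit latency
VCACHE_EXTRA_CYCLES = 4     # the four-cycle penalty described in the text
DRAM_PENALTY_CYCLES = 300   # assumed cost of going to main memory
BASE_MISS_RATE = 0.50       # assumed L3 miss rate with 32 MB per CCD
VCACHE_MISS_RATE = 0.25     # assumed L3 miss rate with 96 MB per CCD

baseline = amat(L3_HIT_CYCLES, BASE_MISS_RATE, DRAM_PENALTY_CYCLES)
with_vcache = amat(L3_HIT_CYCLES + VCACHE_EXTRA_CYCLES, VCACHE_MISS_RATE,
                   DRAM_PENALTY_CYCLES)

# The bigger cache wins whenever the miss-rate drop times the DRAM penalty
# exceeds the extra hit latency, i.e. a drop of more than 4/300 (about 1.3 points).
break_even_drop = VCACHE_EXTRA_CYCLES / DRAM_PENALTY_CYCLES

if __name__ == "__main__":
    print(f"baseline AMAT: {baseline:.0f} cycles")          # 200
    print(f"with V-Cache AMAT: {with_vcache:.0f} cycles")   # 129
    print(f"break-even miss-rate drop: {break_even_drop:.2%}")
```

Under these assumed numbers, halving the miss rate also halves the number of DRAM accesses, which is where the bandwidth and power savings described above come from.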

The L3 cache chiplet consumes significantly less power per square millimeter than the CPU cores. Still, vertical stacking does increase power density, so it's best to keep the stacked die away from the heat-generating cores on either side of the chiplet. However, stacking only over the L3 region would leave a protruding die on top of the CCD, so AMD uses a single silicon shim that wraps around three sides of the L3 chiplet to create an even surface for the heat spreader that sits on top. Silicon is an excellent thermal conductor, so the shim allows heat to transfer from the cores up to the heat spreader.

Previous renderings of the design have shown two distinct silicon shims and appeared to show the L3 cache die spanning from one side of the die to the other. However, AMD's materials for the Milan-X launch clearly show one long shim that covers the compute die and a thin portion on the edge of the die that isn't covered by the L3 cache chiplet. This thin expanse of the bottom die includes I/O functions that the chiplet uses to communicate with the I/O die.

AMD says no software modifications are required to leverage the increased cache capacity, though it is working with several partners to create certified software packages. Those packages might see further performance optimizations, too.

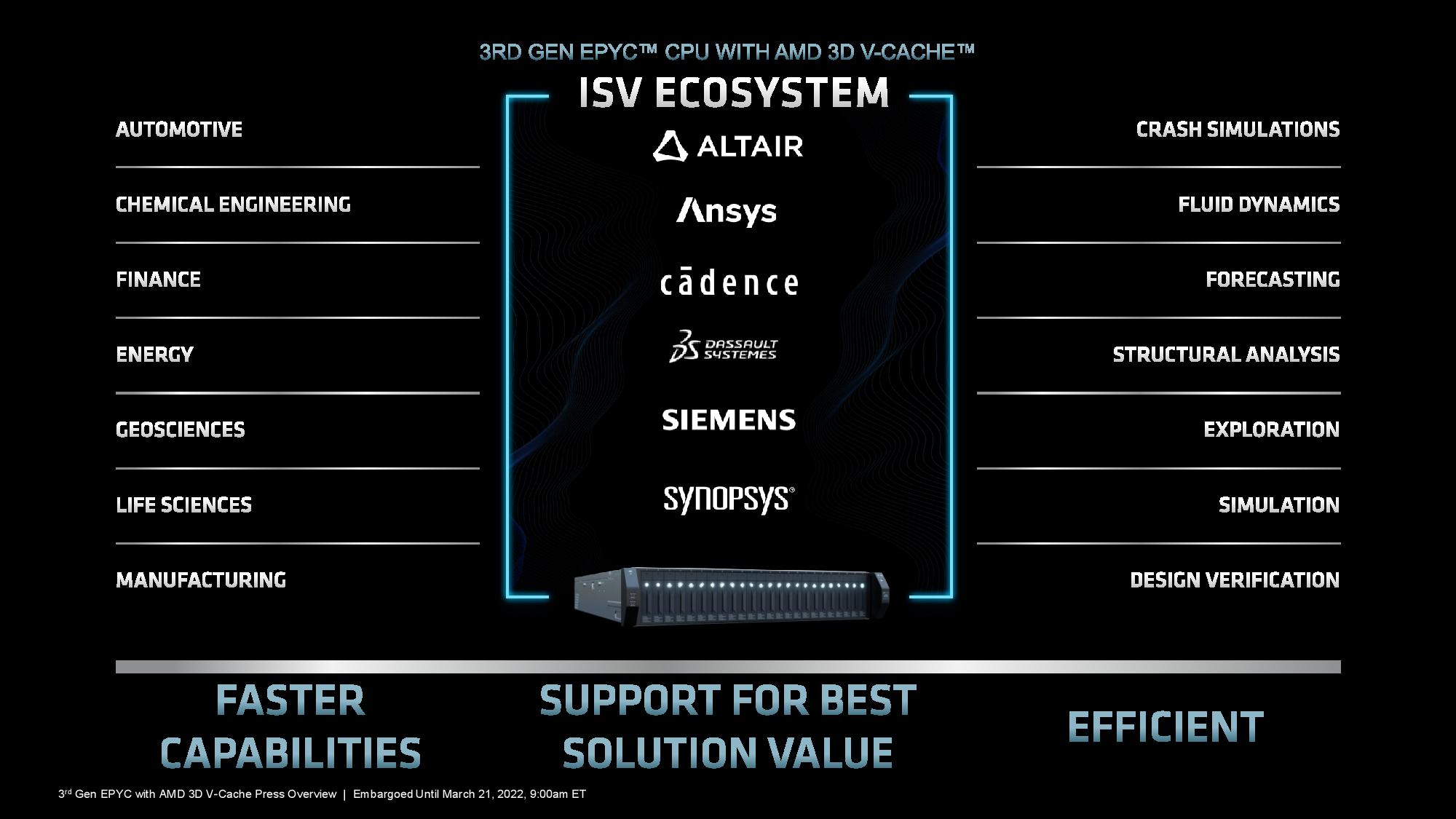

The 16- and 24-core Milan-X models are particularly well-suited for electronic design automation (EDA) software, which tends to be lightly threaded. However, this expensive software also tends to be licensed on a per-core basis, so having two potential models allows customers to select their optimum configuration. Meanwhile, the 24-, 32- and 64-core models are well suited for more demanding threaded tasks, like computational fluid dynamics (CFD), finite element analysis (FEA), and structural analysis. Again, the range of available core counts allows configuration based upon licensing models.

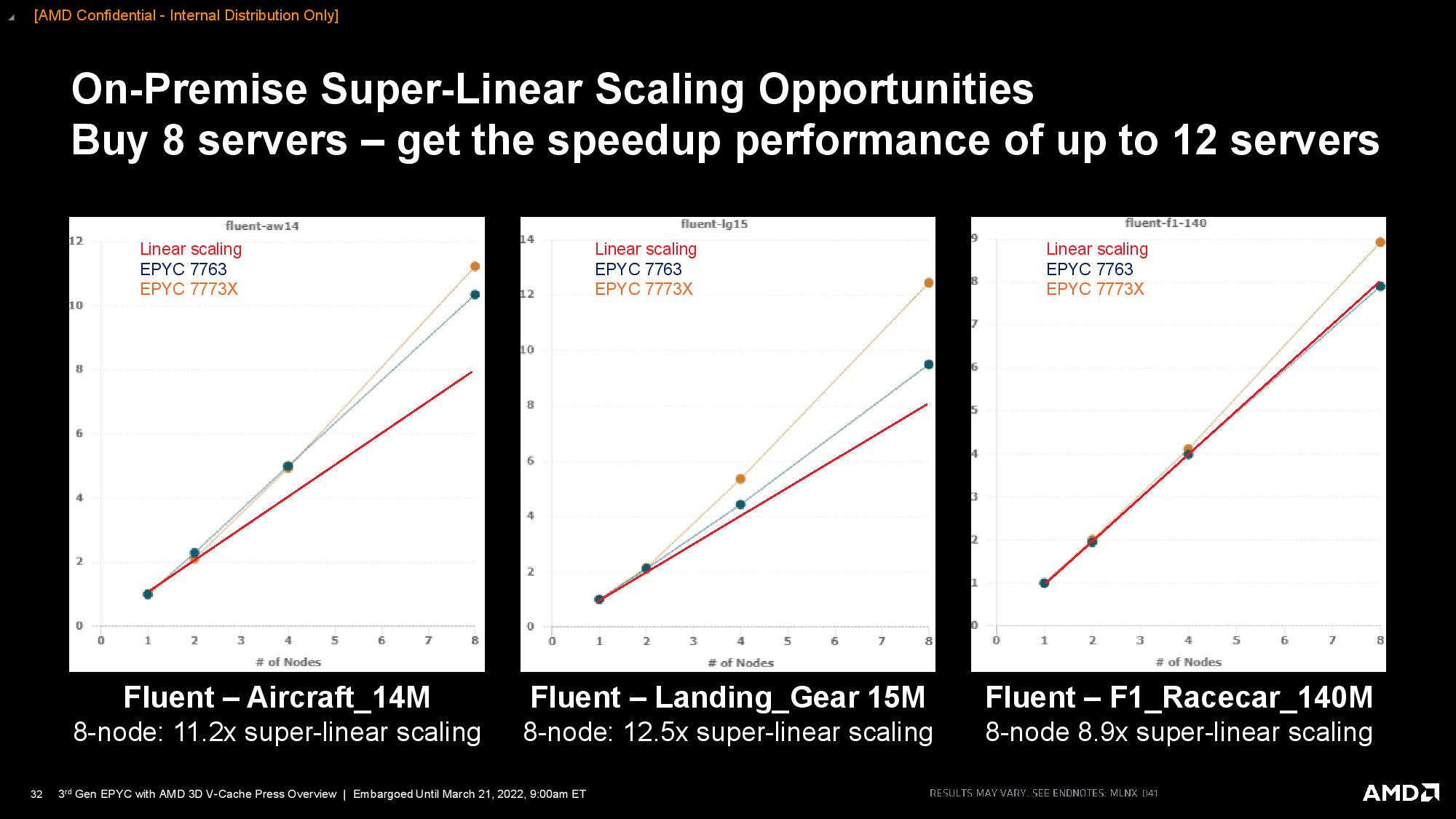

AMD provided a spate of its own internally derived benchmarks, but as with all vendor-provided test data, you should approach it with caution. We've included the test notes at the end of the above album.

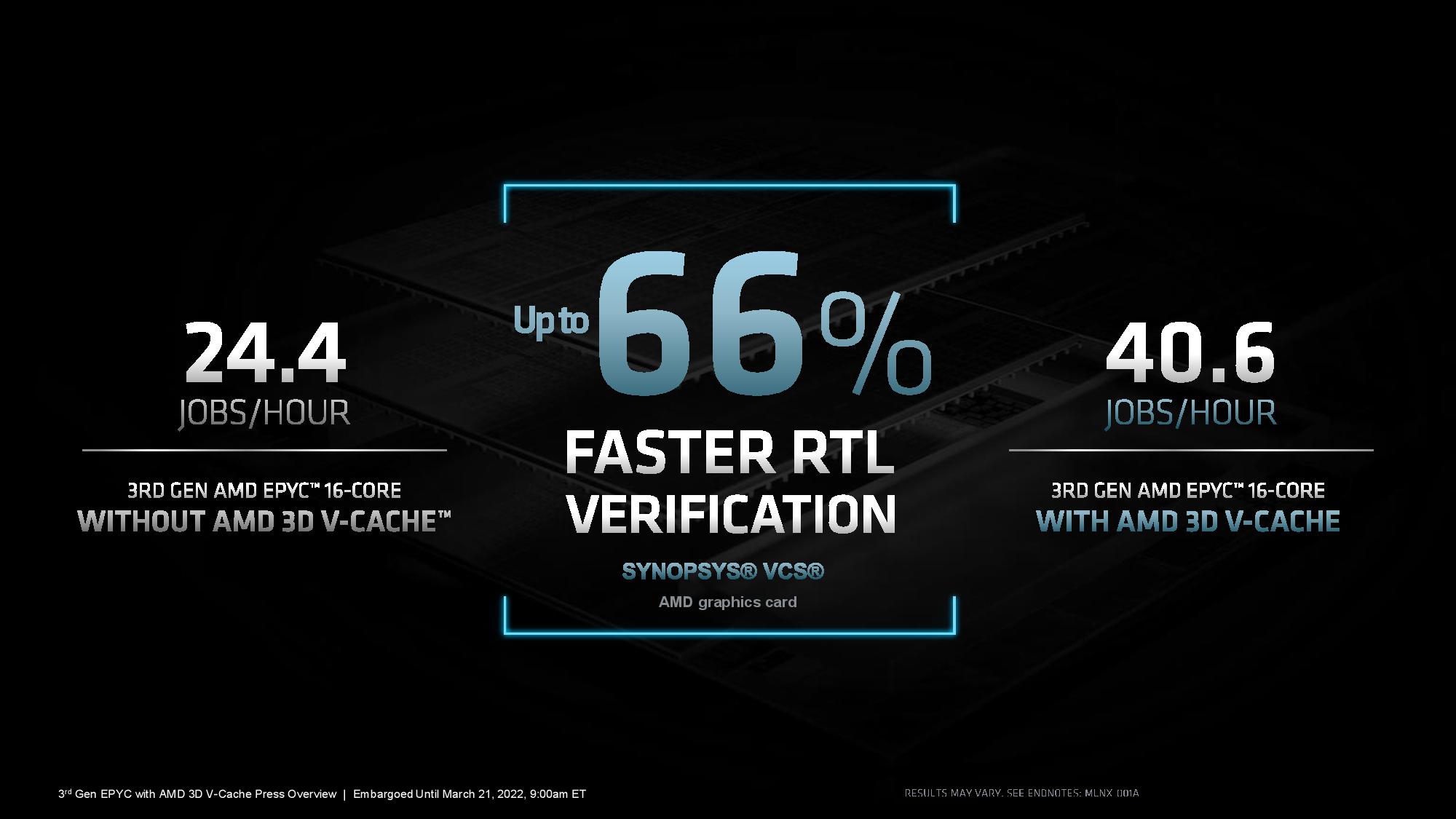

AMD's benchmarks include a 66% gain for the 16-core Milan-X over a standard Milan model, and the results are just as impressive against Intel's Xeon.

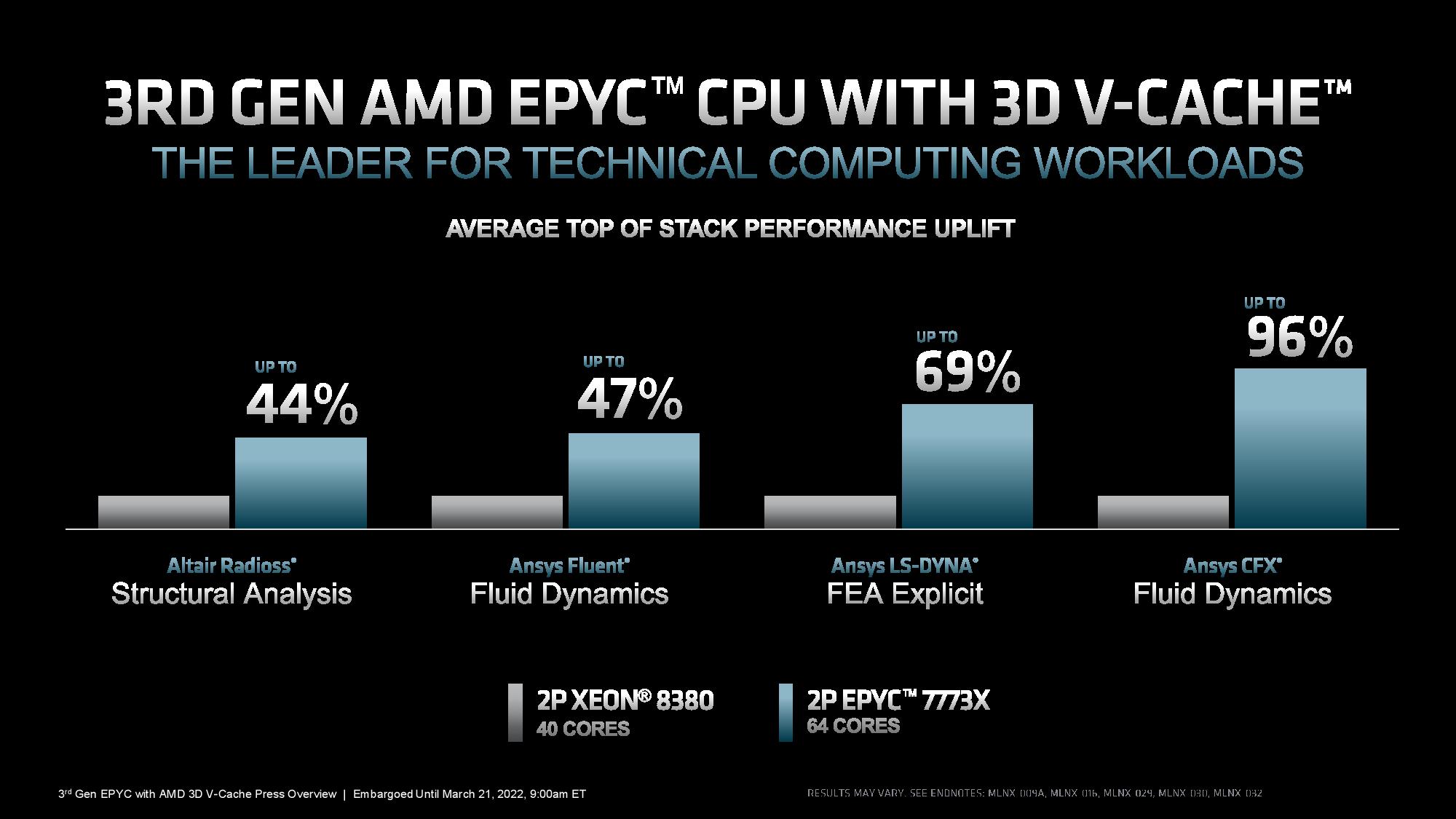

Turning to highly threaded workloads, AMD claims that a dual-socket server with its flagship 64-core 7773X chips delivers 44% to 96% more performance than a two-socket Intel Xeon 8380 system (40-core chips) in a selection of structural analysis, fluid dynamics, and FEA workloads.

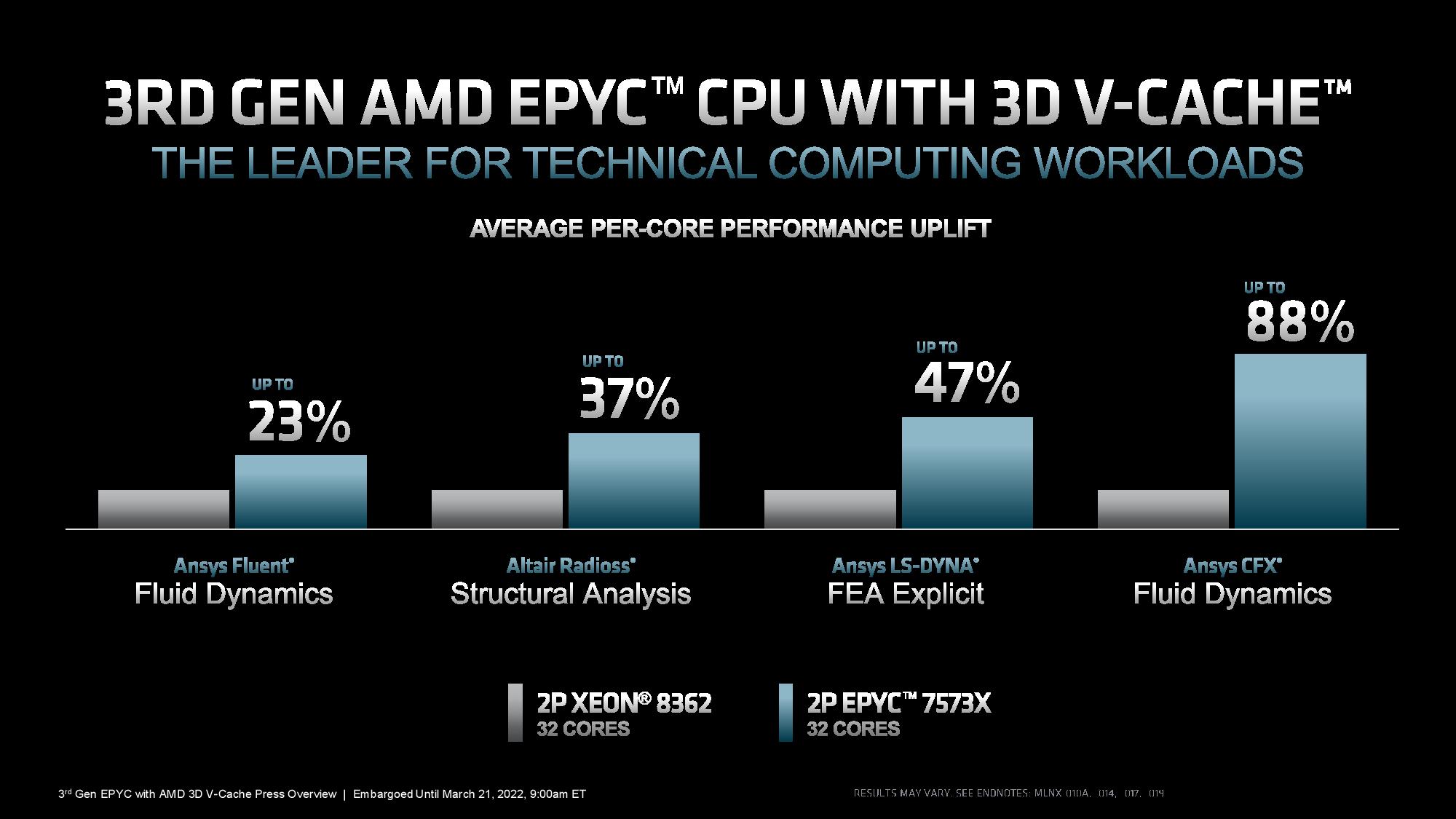

You would expect the 128-core EPYC server to beat the 80-core Intel server, so AMD also provided a core-for-core comparison with those same applications. Here, AMD's 32-core 7573X squares off against Intel's 32-core Xeon 8382 and beats it by 23% to 88% in the same benchmarks.
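A rough way to see why the core-matched comparison matters is to divide each system-level speedup by the core-count ratio. This Python sketch does exactly that naive normalization; it is our own illustration, not AMD's methodology, and it ignores clock speeds, memory bandwidth, and how well each application scales with core count.

```python
def per_core_relative(speedup_pct: float, amd_cores: int, intel_cores: int) -> float:
    """Naive per-core performance ratio: (1 + speedup) divided by the core-count ratio."""
    return (1 + speedup_pct / 100) / (amd_cores / intel_cores)


if __name__ == "__main__":
    # Two 64-core EPYC 7773X chips (128 cores) vs two 40-core Xeon 8380s (80 cores).
    for pct in (44, 96):
        print(f"+{pct}% system-level -> {per_core_relative(pct, 128, 80):.2f}x per core")
    # Core-matched comparison: 32 cores vs 32 cores, so the core-count ratio is 1.
    for pct in (23, 88):
        print(f"+{pct}% system-level -> {per_core_relative(pct, 32, 32):.2f}x per core")
```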

AMD has an impressive roster of ISV partners running the gamut of the types of applications that work best with the 3D V-Cache architecture and also has plenty of support from hardware vendors, like Supermicro, Dell, Lenovo, HPE, Gigabyte, and QCT, among others.

The Milan-X processors are available worldwide today from retailers, and systems are also available at OEMs. Additionally, Milan-X is available through Microsoft Azure's HBv3 VMs.

Gamers will get their hands on the Ryzen 7 5800X3D, the first gaming chip with 3D V-Cache technology, next month.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


-Fran- Good to know these are baking well. Gives me hope on what to expect out of the 5800X3D part. I'm still not that thrilled with it, but I'll remain cautiously optimistic.

Also, a 1K jump in price for about 50% extra performance on top dog CPUs is not bad. Even if companies scoff at those price increases, I think it's good AMD is not trying to milk them dry right off the bat. Especially when these are direct replacements of Milan CPUs/SoCs, which were already direct replacements for their predecessors (IIRC). A good value proposition for HPE. Maybe?

Anyway, good on AMD. I just hope they don't go full stupid.

Regards.

artk2219

-Fran- said: Also, a 1K jump in price for about 50% extra performance on top dog CPUs is not bad.

Keep in mind that extra 1K is tray pricing; OEMs may get it for significantly less in volume. It still doesn't mean they won't try to pass that increase on to the consumer, though :LOL:.

waltc3 No reason to look at AMD's examples. Here's a 3rd-party test suite that is very impressive... almost feel sorry for Intel... almost... ;)

https://www.phoronix.com/scan.php?page=article&item=amd-epyc-7773x-linux&num=1

jeremyj_83

waltc3 said: No reason to look at AMD's examples. Here's a 3rd-party test suite that is very impressive... almost feel sorry for Intel... almost... ;)

How about MS ripping out most of their pretty new Milan-based HBv3 nodes and replacing them with Milan-X instead.

PiranhaTech There's a good chance that cache is just the start. SoC parts, maybe more computational chiplets, etc.

waltc3

jeremyj_83 said: How about MS ripping out most of their pretty new Milan based HBv3 nodes and replacing them with Milan-X instead.

I know... Unreal... ;)

Alvar "Miles" Udell It will be interesting to see a 7763 vs 7773X comparison. $1000 price premium, multiplied by tens or hundreds, adds up quickly.

hotaru251

Alvar Miles Udell said: adds up quickly.

Yes, but those companies that NEED that many make more $ off of using them, to the point they don't care.

There is a reason the entire EPYC line sells so well. Yes, they're costly, but they are also worth it to the ppl they are aimed at.

time/performance = $.

-Fran- Even with that price hike, they're still cheaper than the Intel alternative so...

Regards.