Intel Drops Xe LP Graphics Specs: Tiger Lake GPU Has 2x Speeds

Your next Intel notebook might actually be good enough for gaming.

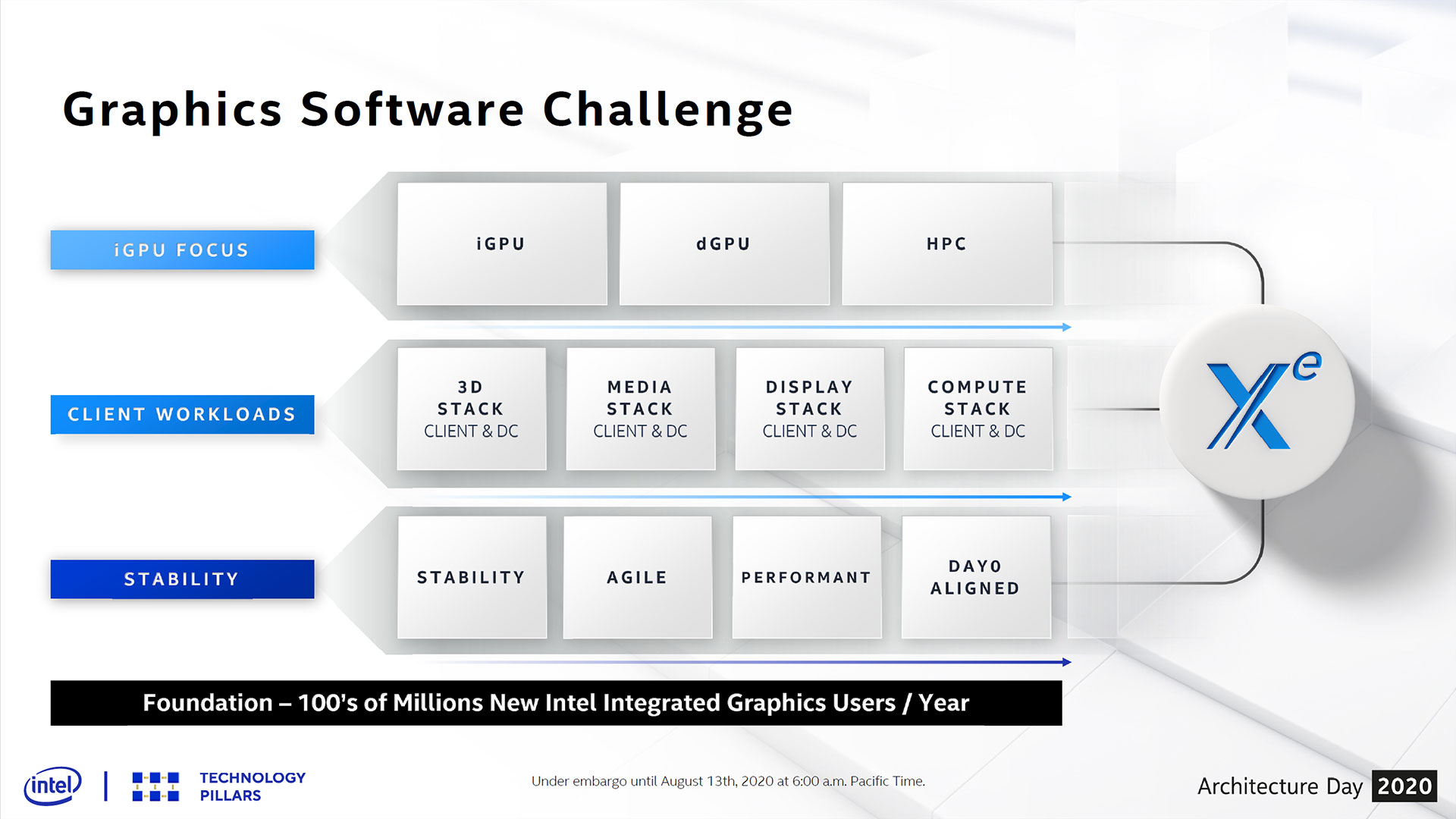

Laptops powered by Intel's next-generation Tiger Lake processors are coming soon, with much-improved graphics performance. Tiger Lake's integrated GPUs will use Intel's long-awaited Xe LP Graphics, the low power variant of Team Blue's latest graphics architecture — Gen12 if you're counting. It's been a couple of years in the making, and Intel's aspirations to enter (or re-enter) the discrete graphics card market all build off of the Xe architecture. In 2018, Intel announced its intention to create a brand new architecture that would scale from client workloads to the data center — "from teraflops to petaflops."

There will be multiple implementations of Xe, and we've covered each separately: everything we know about Xe Graphics covers the high-level overview, Xe HP and Xe HPC are for the data center, and the newly announced Intel Xe HPG is a GPU for enthusiasts and gamers who want a compelling alternative to AMD and Nvidia GPUs. But let's focus on Xe LP, the integrated and entry-level graphics solution that's primarily for laptops and mobile solutions, built on Intel's 10nm SuperFin process.

Improving graphics performance can be done in a variety of ways, and Xe LP specifically targets lower power devices. We may see higher-power implementations of Xe LP, which Intel isn't discussing yet, but the primary target will be 10W-30W — and that's power shared with the CPU. Building a GPU that can perform well in such a low power envelope is very different from building a massive 250W (or more!) chip for desktops. Intel has worked to deliver three things with the Xe LP architecture: more FLOPS (floating point operations per second), more performance per FLOP, and less power required per FLOP.

The first is pretty straightforward. Put more computational resources into a design and you can do more work. The second deals with the efficiency of that work. We've frequently noted that the various GPU and CPU architectures are not equal. As an example, today's AMD RX 5700 XT is rated at 10.1 TFLOPS, while Nvidia's RTX 2070 Super is rated at 9.1 TFLOPS (both using boost clocks). In theory, the RX 5700 XT has an 11% performance advantage, but look at our GPU hierarchy and you'll see that across a suite of games and test settings, the 2070 Super ends up 5% faster. It basically comes down to IPC, or instructions per clock: How much real work can you get out of an architecture?
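To put a rough number on "performance per FLOP," one simple approach is to divide measured relative performance by rated TFLOPS. The sketch below uses only the figures quoted above; the function name and the choice of the RX 5700 XT as the 1.00 baseline are illustrative assumptions, not a standard benchmark metric.

```python
def perf_per_tflop(relative_perf: float, rated_tflops: float) -> float:
    """Measured relative performance delivered per rated TFLOP."""
    return relative_perf / rated_tflops


# Figures from the text: the RX 5700 XT is the 1.00 baseline, and the
# RTX 2070 Super ends up about 5% faster across the game suite.
rx_5700_xt = perf_per_tflop(1.00, 10.1)
rtx_2070_super = perf_per_tflop(1.05, 9.1)

paper_advantage = 10.1 / 9.1 - 1                  # AMD's lead on paper
efficiency_gap = rtx_2070_super / rx_5700_xt - 1  # Nvidia's lead per TFLOP

print(f"Paper TFLOPS advantage (RX 5700 XT): {paper_advantage:.1%}")
print(f"Perf-per-TFLOP advantage (RTX 2070 Super): {efficiency_gap:.1%}")
```

With these inputs the paper advantage works out to about 11%, while the 2070 Super extracts roughly 16.5% more game performance from each rated TFLOP.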

Raw computational power that's difficult to utilize can be wasteful, however, and that's what the third design goal addresses: Use less power per FLOP. Again, looking at previous GPUs, AMD's RX Vega 64 is rated to deliver up to 12.7 TFLOPS while the competing Nvidia GTX 1080 sits at just 8.9 TFLOPS. In practice (and four years later, running a modern gaming suite), the RX Vega 64 is about 7% faster than the GTX 1080. The problem is that, looking at real GPU power use, Vega 64 uses an average of 298W compared to 181W for GTX 1080. (The TDPs in this instance are actually fairly accurate, which isn't always the case.) For a desktop, the extra 117W may not be the end of the world, but in a thin and light laptop, every watt matters.
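The same comparison can be expressed as performance per watt. This is a minimal sketch using the relative performance and average power figures quoted above; the helper name is hypothetical and the GTX 1080 is arbitrarily treated as the 1.00 baseline.

```python
def perf_per_watt(relative_perf: float, avg_watts: float) -> float:
    """Relative gaming performance delivered per watt of measured GPU power."""
    return relative_perf / avg_watts


# Figures from the text: Vega 64 is about 7% faster but averages 298W,
# while the GTX 1080 (the 1.00 baseline here) averages 181W.
vega_64 = perf_per_watt(1.07, 298)
gtx_1080 = perf_per_watt(1.00, 181)

efficiency_advantage = gtx_1080 / vega_64 - 1

print(f"GTX 1080 perf-per-watt advantage: {efficiency_advantage:.1%}")
```

On these numbers the GTX 1080 delivers roughly 54% more performance per watt, which is the kind of gap that matters far more in a 15W laptop than in a desktop tower.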

Intel says overall efficiency improvements allow Xe LP to beat the previous Gen11 graphics (used in Ice Lake mobile CPUs) across the entire spectrum of performance. It can run at higher frequencies, but more importantly it can reach those higher frequencies and performance at the same voltage. Alternatively, it can provide the same performance as Gen11 while using lower voltages, which equates to less power.

Obviously there are still some constraints — Xe LP can't necessarily do everything at the same time. That will depend on product implementation, so a 10W or 15W part likely won't sustain maximum clocks due to the power limit. We saw that when we tested Ice Lake integrated graphics as well: bumping the TDP limit from 15W to 25W allowed Gen11 graphics to run about 35% faster. Regardless, Xe LP has a more efficient architecture that should prove very beneficial to laptops in particular. Let's dig into what Intel has changed relative to Gen11, and what we can expect in terms of features and performance.

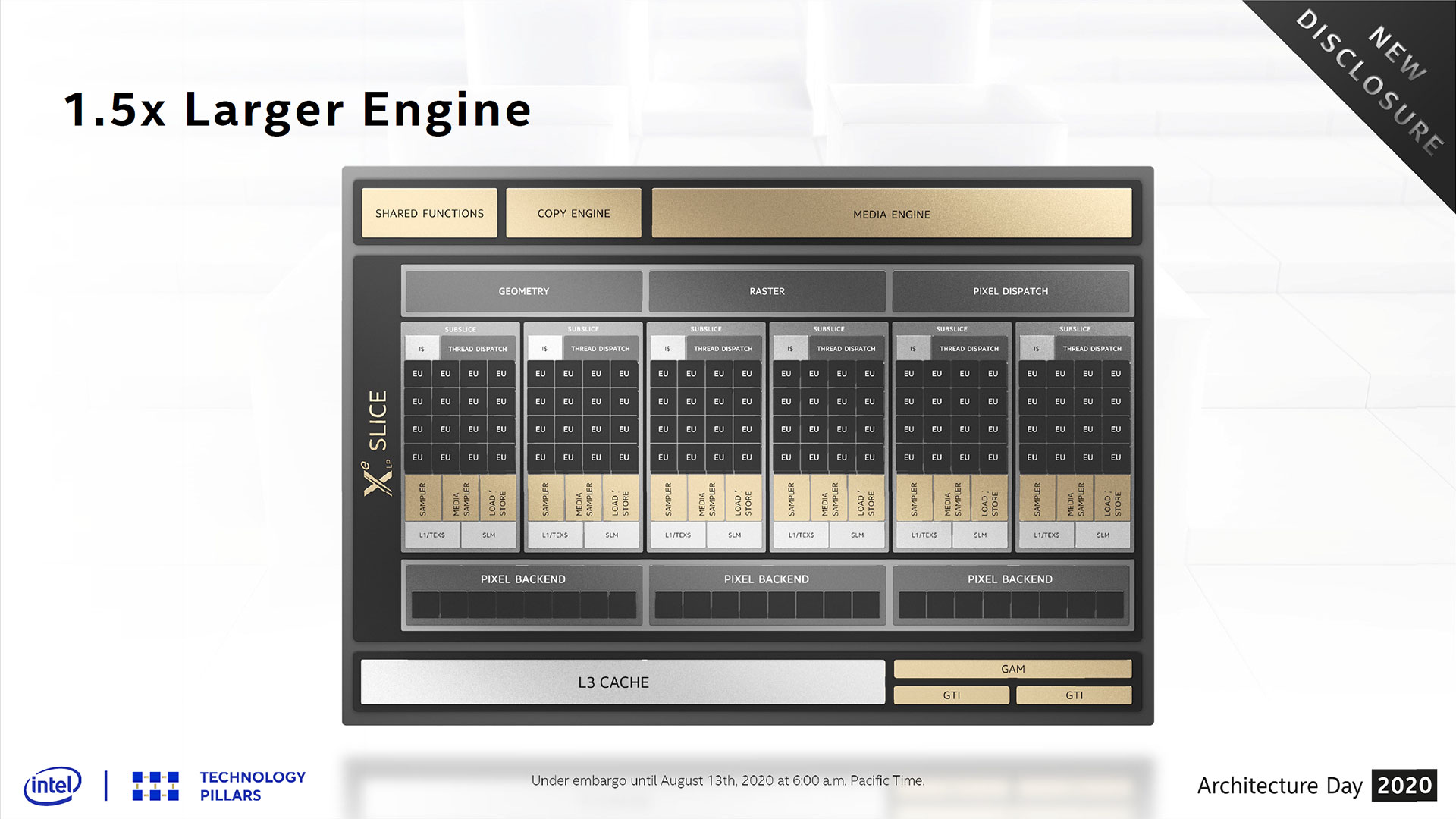

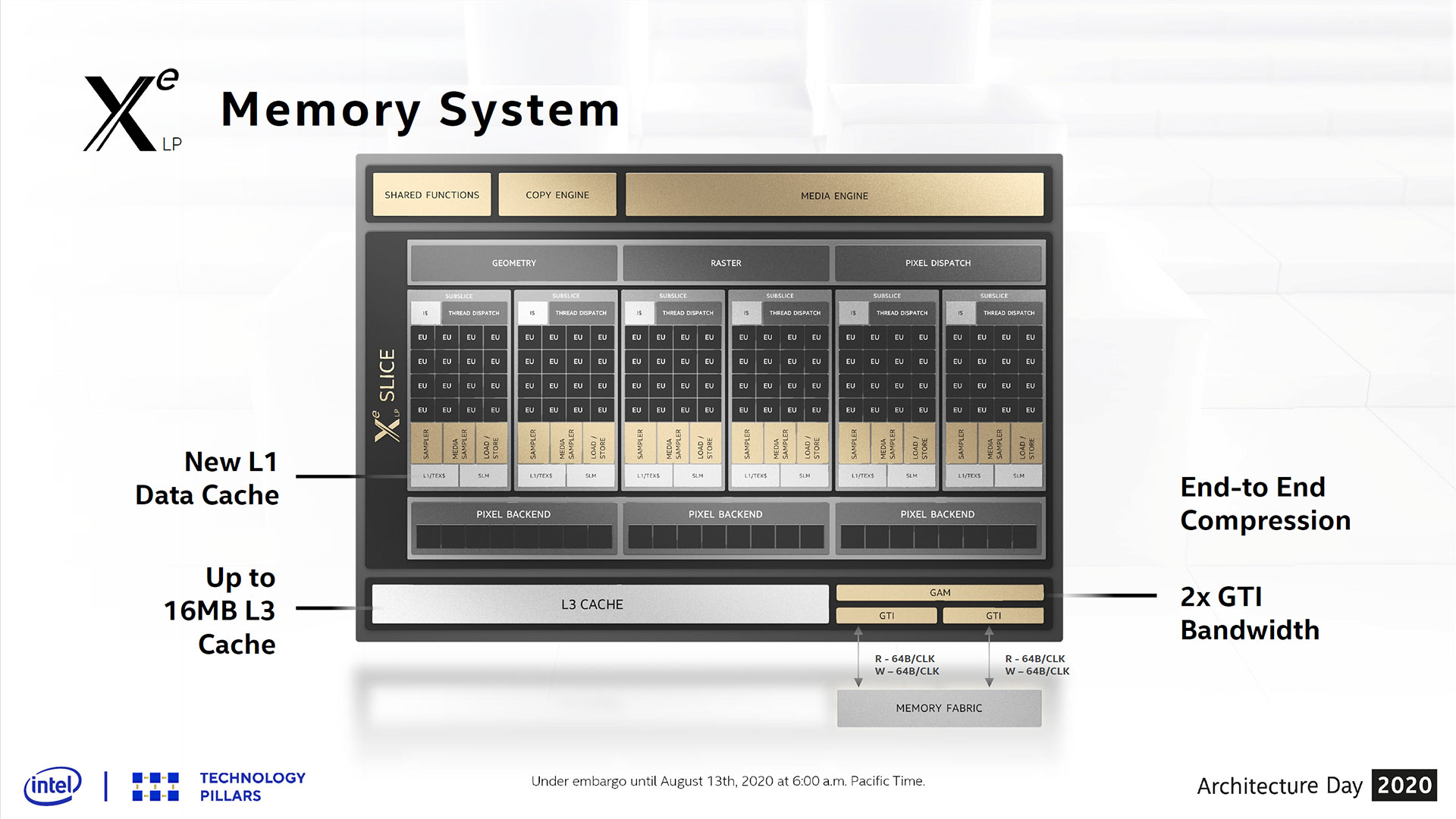

The biggest change is immediately obvious when looking at the block diagram. Xe LP packs 50% more EUs (Execution Units) into the maximum configuration. Ice Lake's Gen11 Graphics topped out at 64 EUs, so there's an immediate and substantial increase in computational resources. Combine that with higher clock frequencies and there should be many workloads where Xe LP is roughly twice as fast as Gen11. How much of that comes from the larger engine and higher frequencies vs. architecture enhancements isn't entirely clear, however. It's one of the things we're looking forward to testing once we get actual hardware.
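As a back-of-the-envelope check on the "roughly twice as fast" figure, the sketch below asks how much extra clock speed would be needed on top of the EU increase. It assumes performance scales linearly with EU count times clock speed, which real workloads rarely do, and it ignores any architectural gains; the function name is hypothetical.

```python
def required_clock_ratio(target_speedup: float, eu_ratio: float) -> float:
    """Clock-speed multiplier needed to reach target_speedup, assuming
    performance scales linearly with EU count times clock speed."""
    return target_speedup / eu_ratio


gen11_eus = 64
xe_lp_eus = 96  # 50% more EUs than Gen11's maximum configuration

eu_ratio = xe_lp_eus / gen11_eus
clock_ratio = required_clock_ratio(2.0, eu_ratio)

print(f"EU ratio: {eu_ratio:.2f}x")
print(f"Clock ratio needed for 2x: {clock_ratio:.2f}x")
```

Under that simplified model, the wider engine alone gets to 1.5x, and clocks about one third higher would cover the rest. Anything Intel gains from the architecture itself lowers the clock increase required.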

And by hardware, we mean the upcoming Tiger Lake CPUs that are going into laptops. Intel's DG1 graphics cards do exist, and they're available for use in a form of 'early access' cloud testing environment (via Intel's Dev Cloud). Some form of Xe LP dedicated GPU is still supposedly in the works, but we're not super interested in a budget dedicated GPU — not that it won't potentially have some good use cases, but based on current rumors it will be better as a media solution rather than a gaming solution. Intel will also have dedicated graphics cards powered by Xe HPG, but as noted earlier we've covered that elsewhere.

Right now, it looks like many of the Xe LP architectural changes relative to Gen11 are about improving efficiency, the all-important performance-per-watt metric. 50% more EUs and higher clocks would do absolutely nothing for performance if it also meant substantially higher power requirements. Intel isn't saying we'll see double the performance of Gen11 in every comparison, but Xe LP will definitely be able to achieve higher performance within any given power envelope.

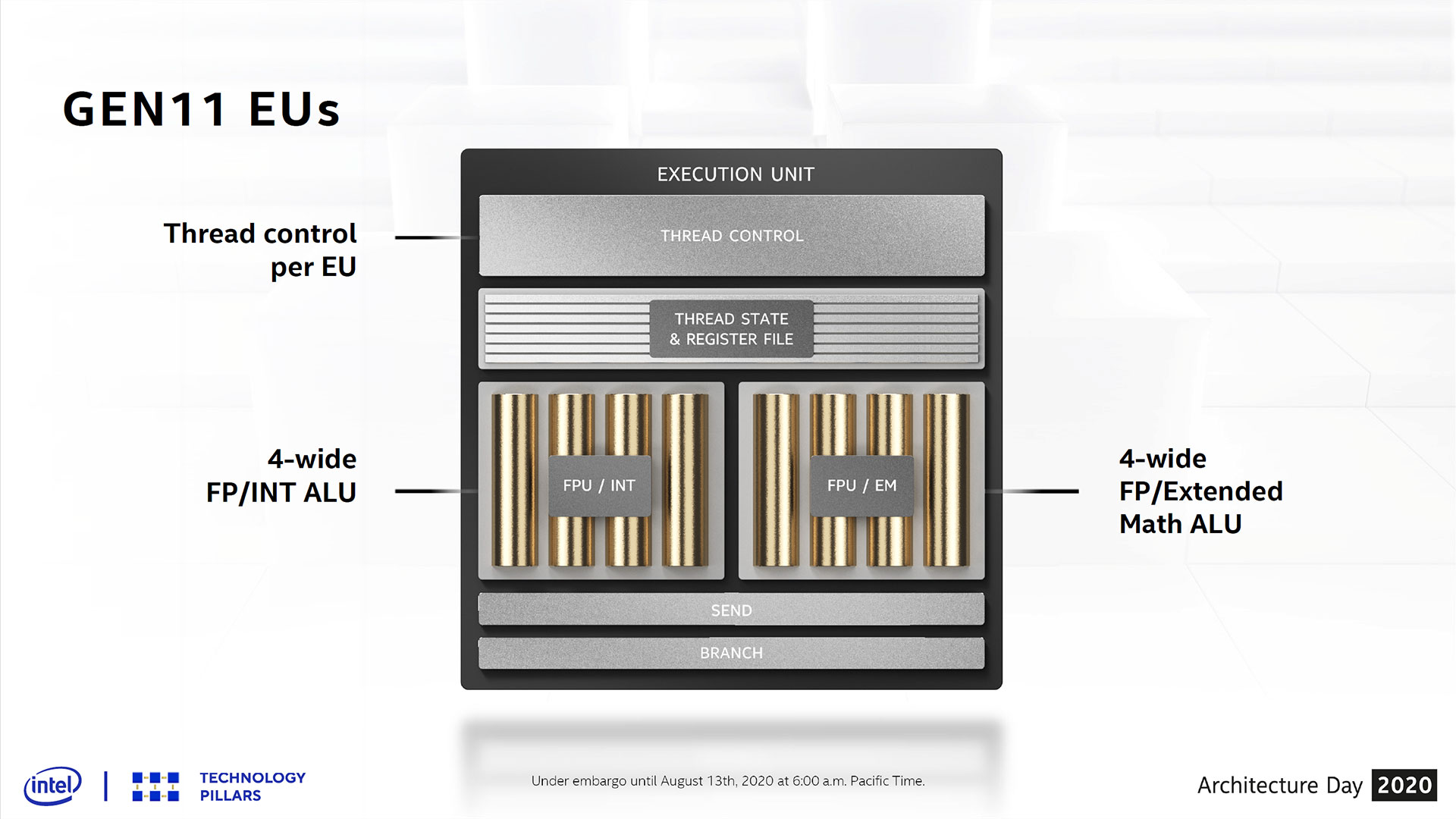

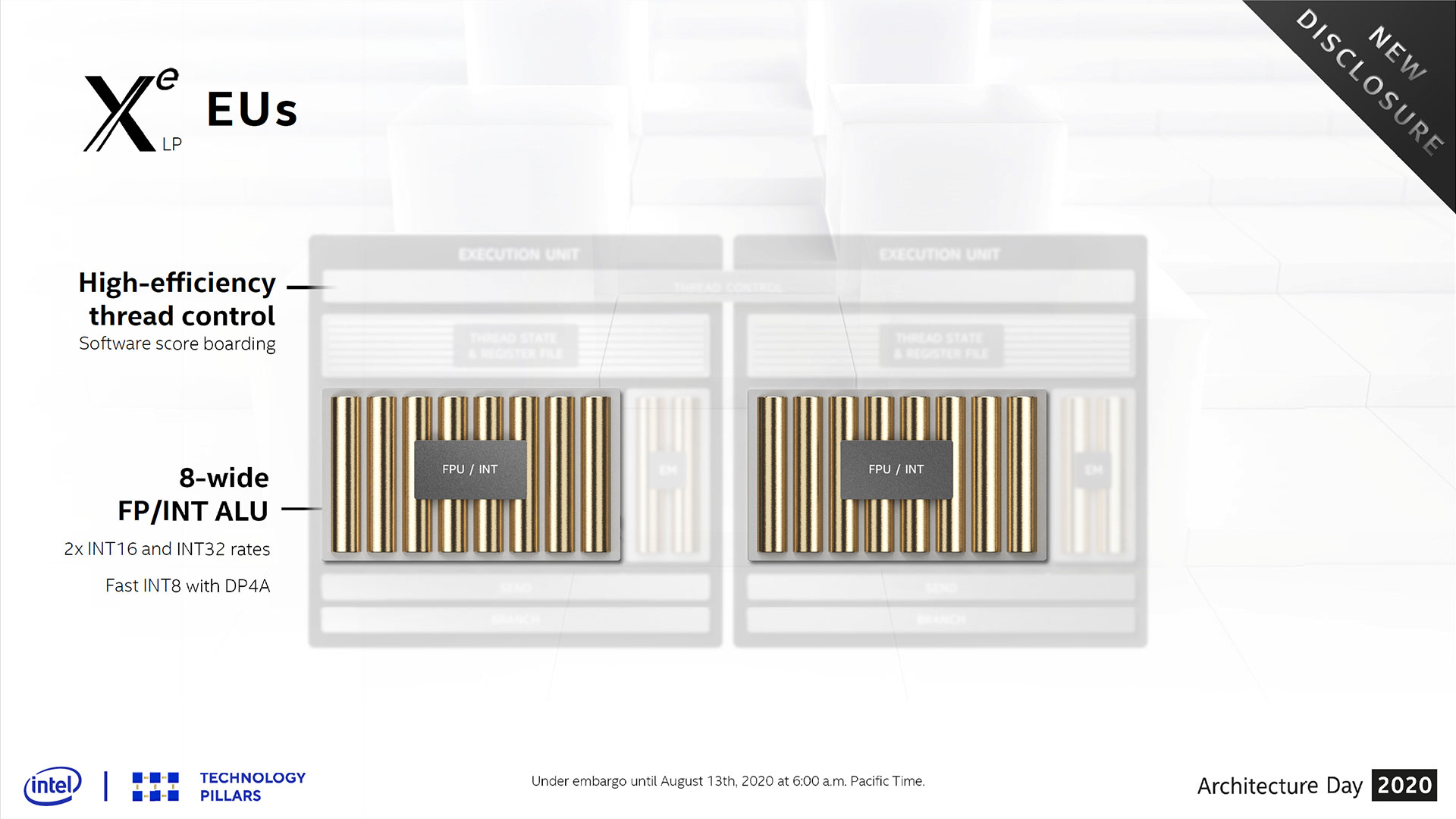

Besides having 50% more EUs, Intel has reworked a lot of the underlying architecture. Gen11 Graphics had two ALUs (arithmetic logic units) that were each 4-wide, one with support for FP/INT (floating-point and integer) and the other for FP/EM (enhanced math — things like trigonometric functions, logarithms, square roots, and other complex math). Each EU had its own thread controller, thread state and register file, send, and branch units. For Xe Graphics, each EU now has a single 8-wide vector unit that can do FP/INT, plus a separate EM unit that can run concurrently with the FP/INT pipeline. Two EUs also share a single thread controller, which helps to save some die area and power.

Fundamentally, Intel's GPUs have often been pretty narrow. Nvidia's current architectures break workloads up into vector instructions that are basically 32-wide, and one of AMD's big updates with Navi was to go from GCN's 64-wide wavefronts to dual 32-wide wavefronts. Having 4-wide EUs by comparison is very narrow, and moving to 8-wide should improve efficiency. I do wonder if Xe HP/HPG will move to 16-wide or even 32-wide, given their higher performance targets.

Including FP and INT across all eight ALU pipelines also means integer performance has doubled relative to Gen11. Intel also added support for 2x INT16 calculations, and 4x INT8 calculations (16-bit and 8-bit integers, respectively). These are mostly useful for inference applications, where higher precision isn't always necessary. This is actually a reversal of a reduction in INT computational resources made with Gen11, but as software has changed Intel felt adding additional INT computation back into the GPU was the right move. This is somewhat akin to what Nvidia did in adding concurrent FP and INT support to its Turing architecture (though Xe LP doesn't support concurrent INT + FP).
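To make the integer throughput claims concrete, here is a rough per-clock tally. It assumes the "2x" and "4x" figures mean packed operations per lane relative to the 32-bit rate, and that Gen11 had four INT-capable lanes per EU as described above; both readings are assumptions, and the names are illustrative only.

```python
# Packed-operation multipliers relative to a 32-bit integer op per lane
# (assumed interpretation of the "2x INT16" and "4x INT8" figures).
PACKED_RATE = {"INT32": 1, "INT16": 2, "INT8": 4}


def int_ops_per_clock(eus: int, lanes_per_eu: int, packed_rate: int) -> int:
    """Peak integer operations issued per clock across the whole GPU."""
    return eus * lanes_per_eu * packed_rate


# Xe LP: 96 EUs, each with an 8-wide FP/INT vector unit.
xe_lp = {fmt: int_ops_per_clock(96, 8, rate) for fmt, rate in PACKED_RATE.items()}

# Gen11: 64 EUs, with only one of the two 4-wide ALUs handling INT.
gen11_int32 = int_ops_per_clock(64, 4, 1)

for fmt, ops in xe_lp.items():
    print(f"Xe LP {fmt}: {ops} ops/clock")
print(f"Gen11 INT32: {gen11_int32} ops/clock")
```

Per EU, the INT32 rate doubles (8 lanes versus 4). Across the full chip, the extra EUs push the peak INT32 rate to three times Gen11's before clocks are considered.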

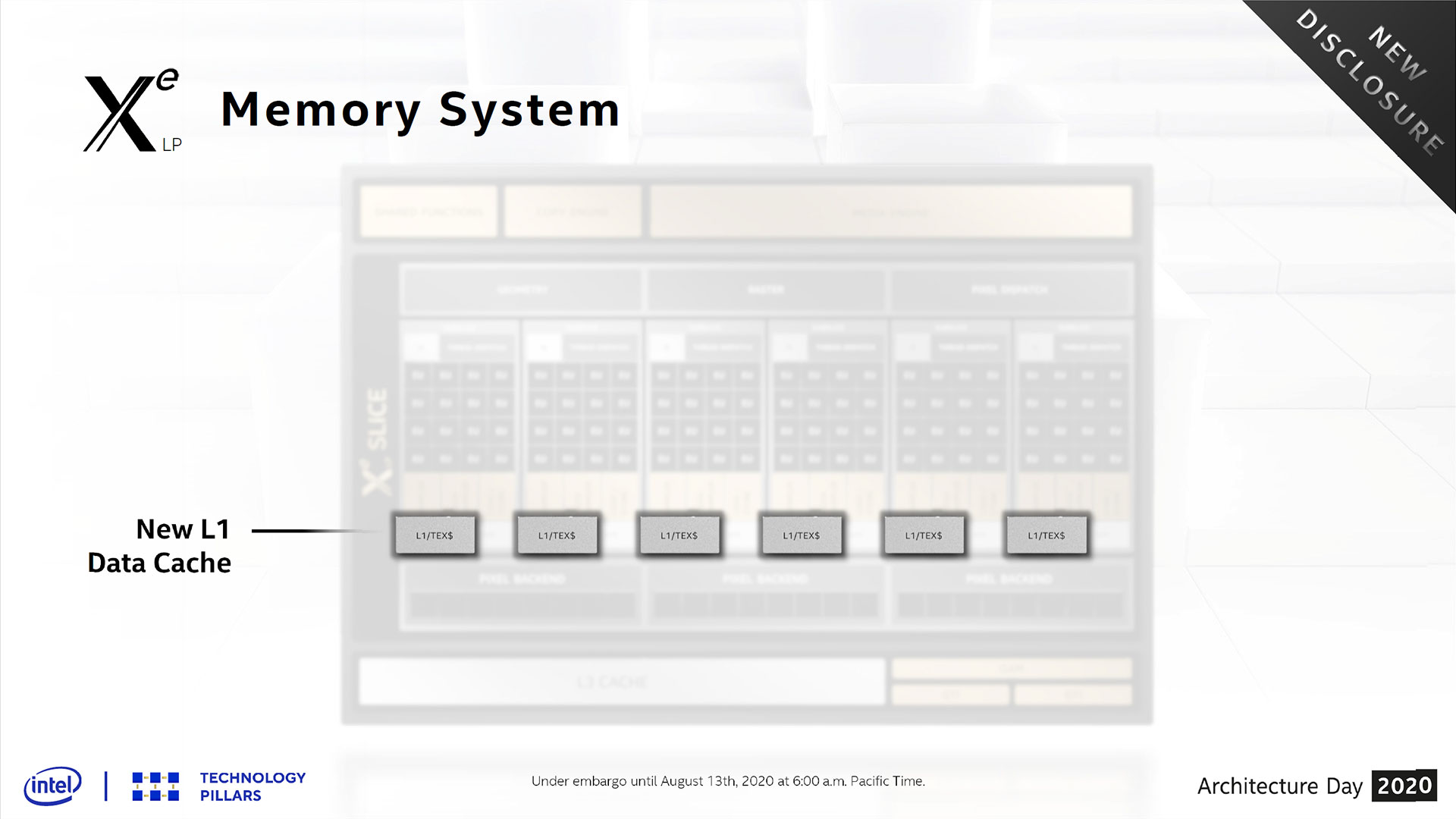

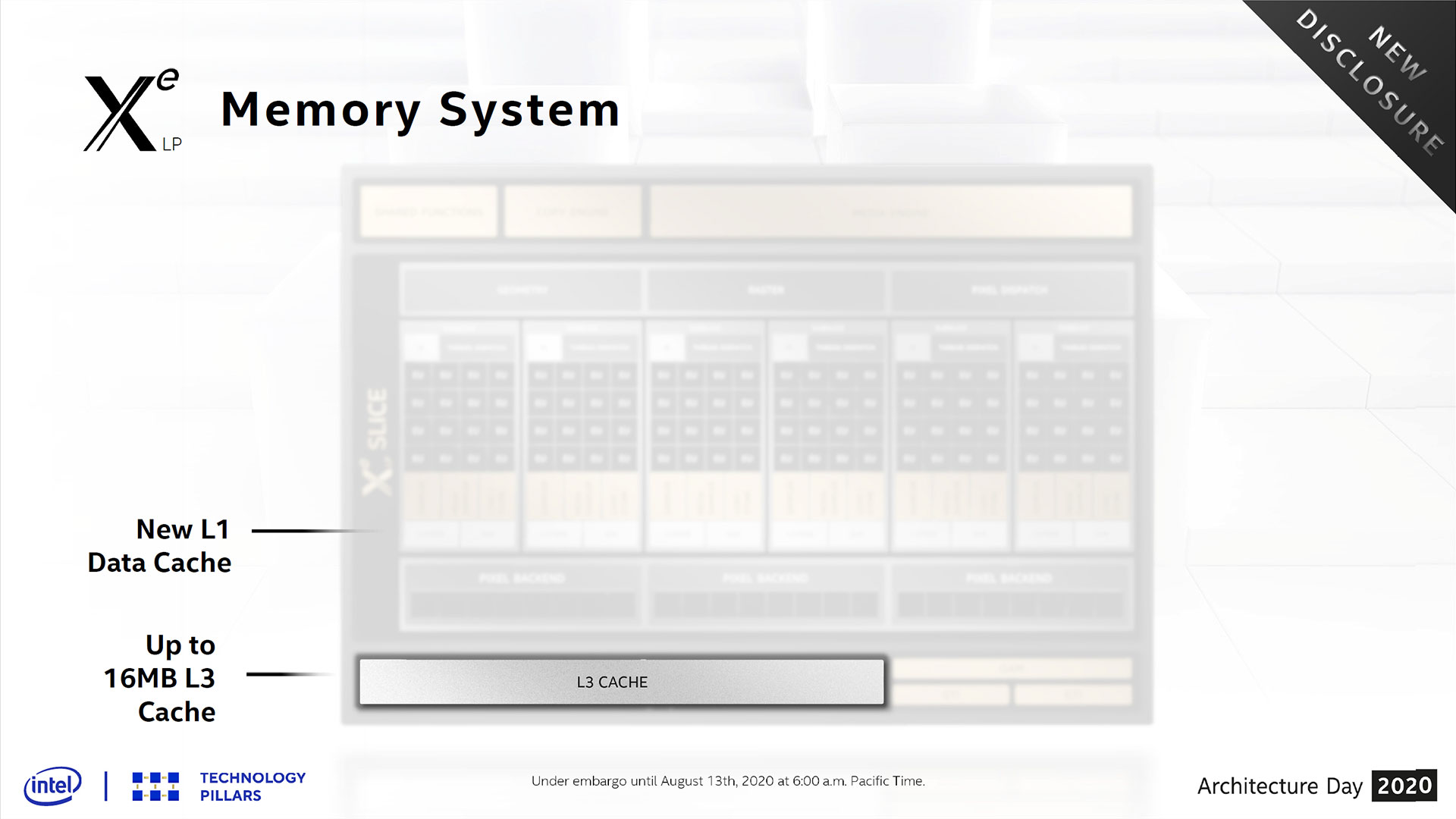

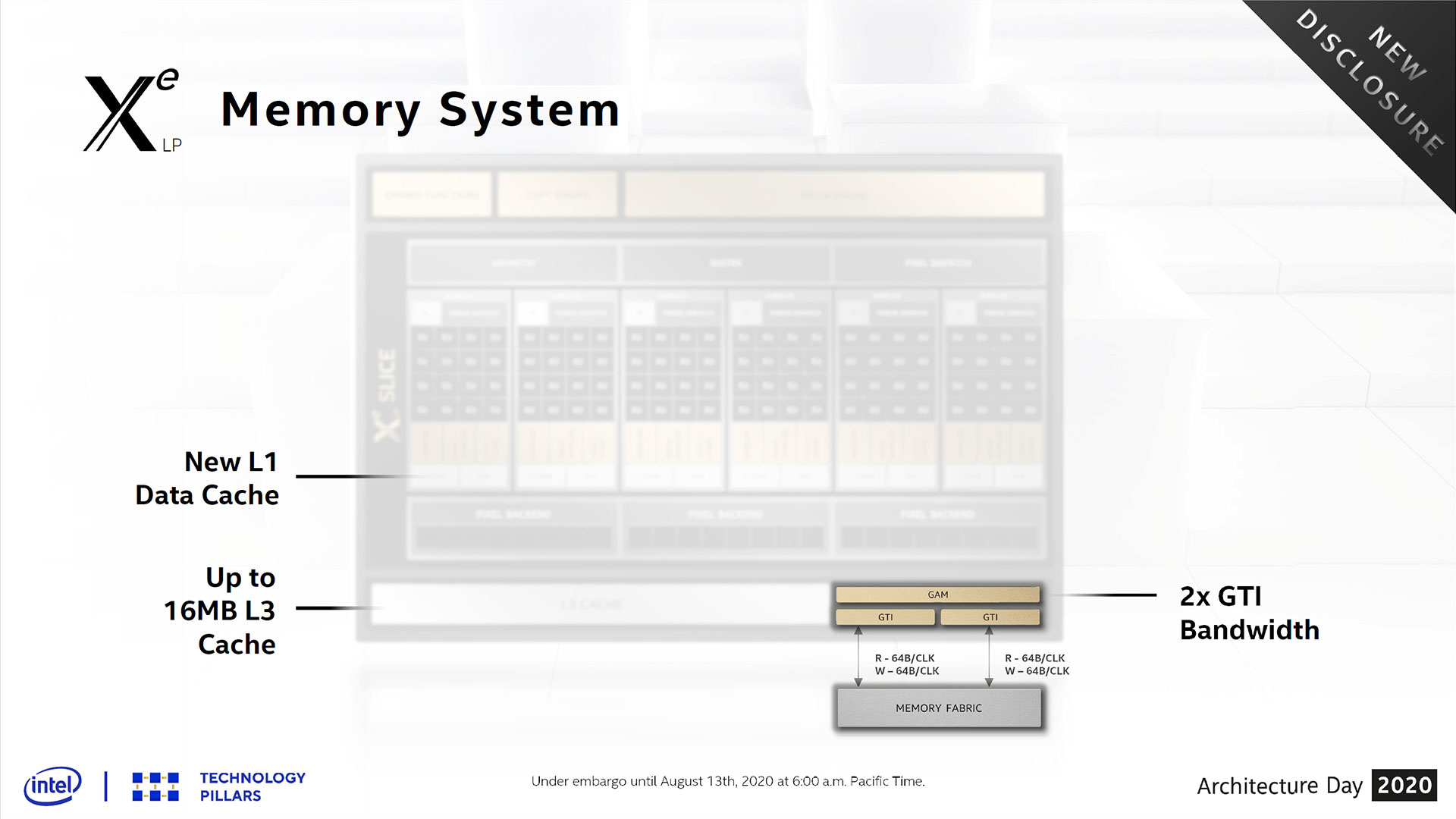

New to Xe LP is an L1 cache, which reduces latency and helps lower the demands on the L3 cache. Previously, Gen11 had caching in the texture subsystem, but moving to a more general L1 cache design allows for more flexibility. Maximum L3 cache size has also been doubled to 16MB, but that's perhaps partly because Ice Lake only had up to 8MB L3; if there were higher core count desktop variants of Ice Lake, they would have had larger L3 caches as well. Worth noting is that in the Tiger Lake disclosures, Intel also revealed that the integrated Xe LP has a 3.8MB L3 cache, while the CPU has up to a 12MB cache that functions as an LLC (last level cache) relative to the GPU. The graphics interface to the LLC and system memory has been doubled as well, with two 64 bytes-per-clock channels. Again, more bandwidth and lower latencies should improve graphics performance quite a bit.

Let's also quickly note that Intel's DG1 chip is obviously different from the integrated Xe LP solution in Tiger Lake. Intel hasn't disclosed the full specs, though reports say DG1 test cards have 3GB of GDDR6 and 96 EUs. Since DG1 doesn't include a CPU, other aspects of the chip could be changed, including the L3 cache size. We'll have to wait and see what happens with DG1 and Xe LP dedicated solutions in the future.

Finally, Xe LP supports end-to-end compression of data. We asked for additional details of what this means, and Intel's David Blythe said this is more than the delta color compression used by AMD and Nvidia. With end-to-end compression, decompression events are rare if not completely removed from the workflow for most use cases, allowing for compressed data from source to sink. This improves the bandwidth and power characteristics for more inter-operational scenarios that are becoming prevalent, including game-streaming (render and video encode), video chat recording (camera and video encode) and more. Intel's end-to-end compression is the first solution to unify compression across all APIs and virtually all engines.

So not only does Xe LP potentially have more memory bandwidth, with support for up to LPDDR4x-4267 and LP5-5400 (in Tiger Lake), but it will make better use of that bandwidth. A dual-channel DDR4-3200 system memory configuration 'only' provides 51.2 GBps of bandwidth, but that might actually be enough to keep Xe LP fed with the data it needs.
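Peak memory bandwidth follows directly from the transfer rate and the bus width. The sketch below assumes a 128-bit total memory interface for every configuration (two 64-bit DDR4 channels, or the equivalent width for the LPDDR options); the helper name and that bus-width assumption are illustrative, not confirmed Tiger Lake specifications.

```python
def peak_bandwidth_gbps(mega_transfers_per_s: int, bus_width_bits: int = 128) -> float:
    """Theoretical peak bandwidth in GB/s: transfers per second x bytes per transfer."""
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


# Memory types named in the text, all assumed to use a 128-bit interface.
configs = {
    "DDR4-3200": 3200,
    "LPDDR4x-4267": 4267,
    "LPDDR5-5400": 5400,
}

for name, rate in configs.items():
    print(f"{name}: {peak_bandwidth_gbps(rate):.1f} GB/s")
```

Under that assumption, DDR4-3200 tops out at 51.2 GB/s, LPDDR4x-4267 at about 68.3 GB/s, and LPDDR5-5400 at 86.4 GB/s, all of it shared between the CPU cores and the GPU.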

Bigger, faster, better — that's Xe LP in a nutshell. If you're wondering why it's not even bigger, though, it goes back to the power targets. Intel hasn't confirmed the maximum TDP configurations for Xe LP, but we'd expect Tiger Lake to follow a similar path to Ice Lake. Looking at the Ice Lake product stack, there were TDPs ranging from 9W to 28W, with boost power exceeding that for short periods of time. Even at 28W, I suspect Gen11 Graphics was starting to run into power constraints, so Intel focused on improving performance per watt rather than just adding more EUs.

There are also die size considerations, but fundamentally I don't think a 128 or larger EU count integrated GPU is feasible. AMD's Renoir uses Vega 8, which is similar in many ways to Xe. AMD has 512 shader cores (ALUs), each capable of one FMA instruction per clock (two FLOPS), running at up to 2.1 GHz. Xe LP has 768 ALUs, each capable of one FMA, probably running at around 1.5 GHz. The two GPUs certainly aren't the same, but they end up at roughly the same space: 2.1 TFLOPS compute, give or take.
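The TFLOPS comparison above is simple arithmetic: ALU count, times two FLOPS per FMA, times clock speed. Here is a minimal sketch using the figures in the text; the 1.5 GHz Xe LP clock is the article's own estimate rather than a confirmed specification, and the function name is hypothetical.

```python
def peak_tflops(alus: int, clock_ghz: float, flops_per_alu_per_clock: int = 2) -> float:
    """Theoretical FP32 throughput: each ALU retires one FMA (2 FLOPS) per clock."""
    return alus * flops_per_alu_per_clock * clock_ghz / 1000


vega_8 = peak_tflops(alus=512, clock_ghz=2.1)
xe_lp = peak_tflops(alus=768, clock_ghz=1.5)  # 96 EUs x 8 lanes, estimated clock

print(f"Renoir Vega 8: {vega_8:.2f} TFLOPS")
print(f"Xe LP (estimated): {xe_lp:.2f} TFLOPS")
```

That yields roughly 2.15 TFLOPS for Vega 8 and 2.30 TFLOPS for Xe LP at the estimated clock, so the wider but slower Intel design and the narrower but faster AMD design land in the same neighborhood.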

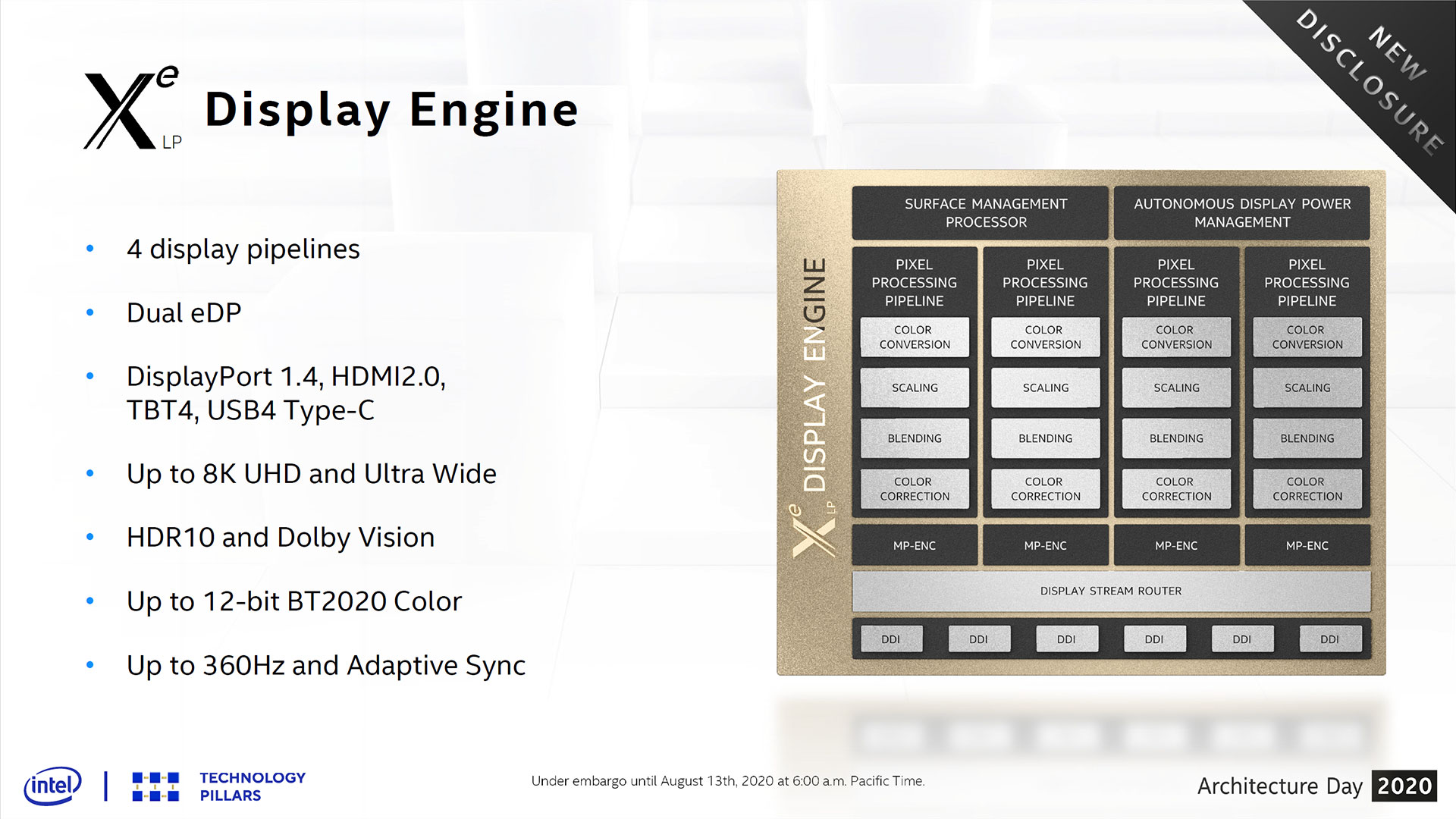

There are other changes with Xe LP as well. The Xe LP Display Engine supports up to four displays, with DisplayPort 1.4b and HDMI 2.0 capabilities. That's enough for 4K 60Hz HDR with DisplayPort, or 8K if you're willing to use DSC (Display Stream Compression). HDMI is limited to 4K60, though I suspect that won't matter much for laptop users. I'm still trying to get GPUs that can drive 4K displays at higher refresh rates, so I'm not champing at the bit for 8K just yet. Still, DisplayPort 2.0 and HDMI 2.1 have both been standardized for over a year, so it's a bit of a letdown that Intel didn't support them. Adaptive Sync is also supported at refresh rates of up to 360 Hz (on DisplayPort; presumably 240 Hz on HDMI).
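The display limits come down to link bandwidth. The sketch below compares the uncompressed pixel data rate of a few modes against the usable data rates of HDMI 2.0 and DisplayPort 1.4 after 8b/10b encoding. It deliberately ignores blanking intervals and audio, so real-world requirements are somewhat higher; the function names are illustrative.

```python
# Usable data rates in Gbps after 8b/10b encoding overhead.
LINK_DATA_RATE_GBPS = {
    "HDMI 2.0": 18.0 * 8 / 10,         # 18 Gbps TMDS -> 14.4 Gbps
    "DisplayPort 1.4": 32.4 * 8 / 10,  # 4 lanes x 8.1 Gbps -> 25.92 Gbps
}


def video_gbps(width: int, height: int, hz: int, bits_per_pixel: int) -> float:
    """Uncompressed pixel data rate in Gbps, ignoring blanking intervals."""
    return width * height * hz * bits_per_pixel / 1e9


def fits(link: str, width: int, height: int, hz: int, bits_per_pixel: int) -> bool:
    """True if the uncompressed mode fits within the link's usable data rate."""
    return video_gbps(width, height, hz, bits_per_pixel) <= LINK_DATA_RATE_GBPS[link]


modes = {
    "4K60 8-bit": (3840, 2160, 60, 24),
    "4K60 10-bit HDR": (3840, 2160, 60, 30),
    "8K60 8-bit": (7680, 4320, 60, 24),
}

for name, mode in modes.items():
    for link in LINK_DATA_RATE_GBPS:
        verdict = "fits" if fits(link, *mode) else "needs compression or subsampling"
        print(f"{name} on {link}: {video_gbps(*mode):.2f} Gbps, {verdict}")
```

Even with blanking ignored, uncompressed 8K60 needs nearly 48 Gbps, well beyond what DisplayPort 1.4 can carry without DSC and far beyond HDMI 2.0.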

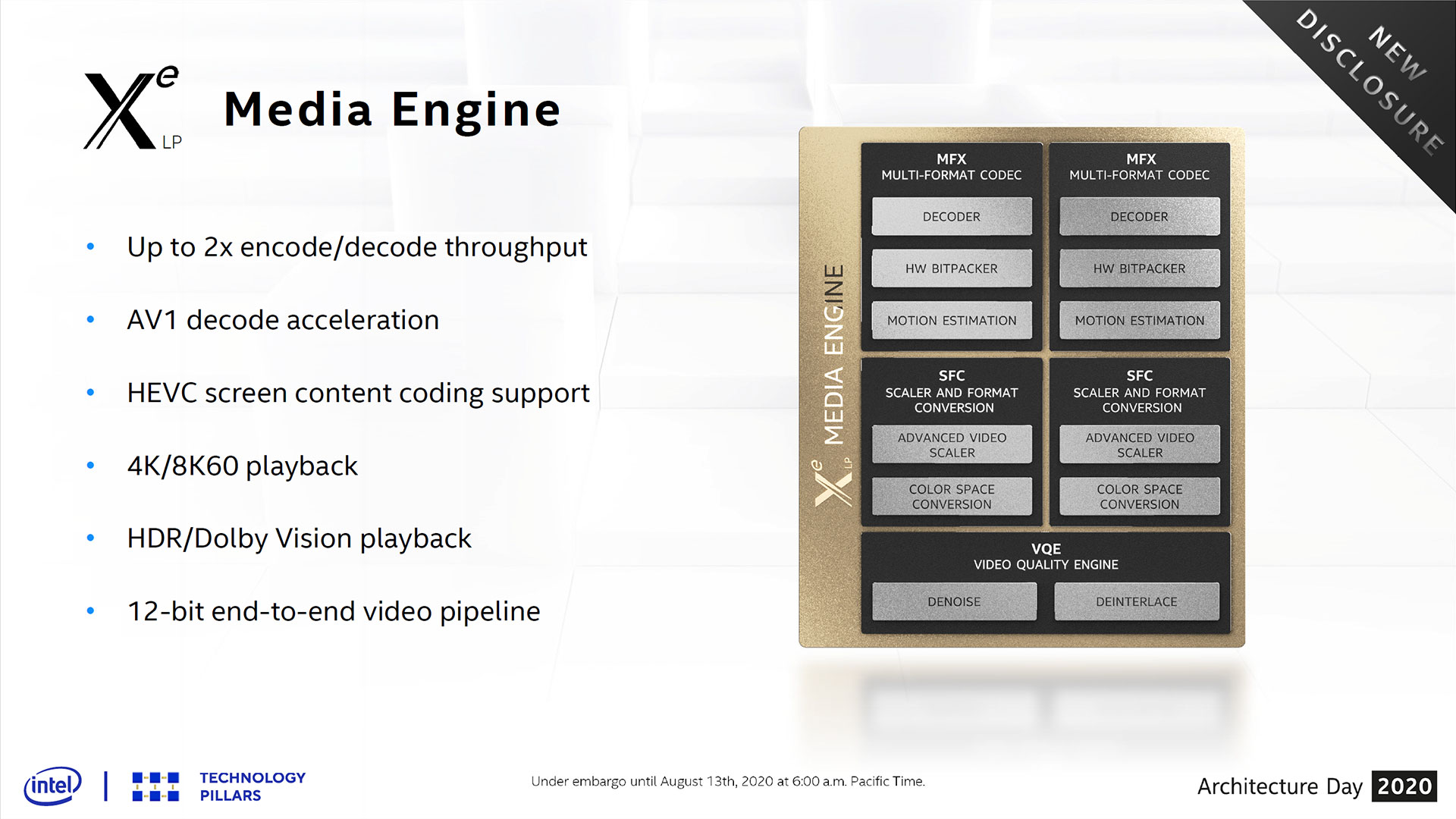

Next, the updated Xe LP Media Engine has twice the encode/decode throughput of Gen11, and it adds full AV1 decode acceleration (something missing from Gen11). With support for up to four 4K60 displays, Intel has upgraded the Media Engine so that even a lowly Xe LP solution can drive 4K60 HEVC content to all four outputs. Xe LP can also decode up to seven 4K60 HEVC streams concurrently, and encode or transcode up to three 4K60 HEVC streams (at better than CPU-based encoding quality).

Thanks to the media streaming prowess, Intel also plans to launch an SG1 (Server Graphics 1) product that includes four Xe LP chips and focuses on data center media applications, leveraging the enhanced media capabilities of Xe. That would potentially handle decoding up to 28 4K60 streams, or encoding/transcoding 12 such streams.

Finally, Intel demonstrated a Tiger Lake laptop running an OpenVINO workload that leverages GPU compute as well as DL Boost to upscale images via an AI algorithm. In this particular test, the Tiger Lake laptop was more than twice as fast as an Ice Lake laptop. How that translates to other applications remains to be seen.

Of course none of the Xe LP enhancements will mean much to gamers if Intel's drivers still have problems. Things have certainly improved over the past year or two, and in my recent testing of integrated graphics on an Ice Lake Gen11 laptop, I'm happy to say that all nine of the test games worked — and all but one of them hit 30 fps at 720p with a 25W TDP applied. By way of comparison, only three of the nine games broke 30 fps on Gen9.5 graphics (UHD Graphics 630 in the Coffee Lake Refresh), and not a single game managed 30 fps with a Core i7-4770K (Gen7 HD Graphics 4600).

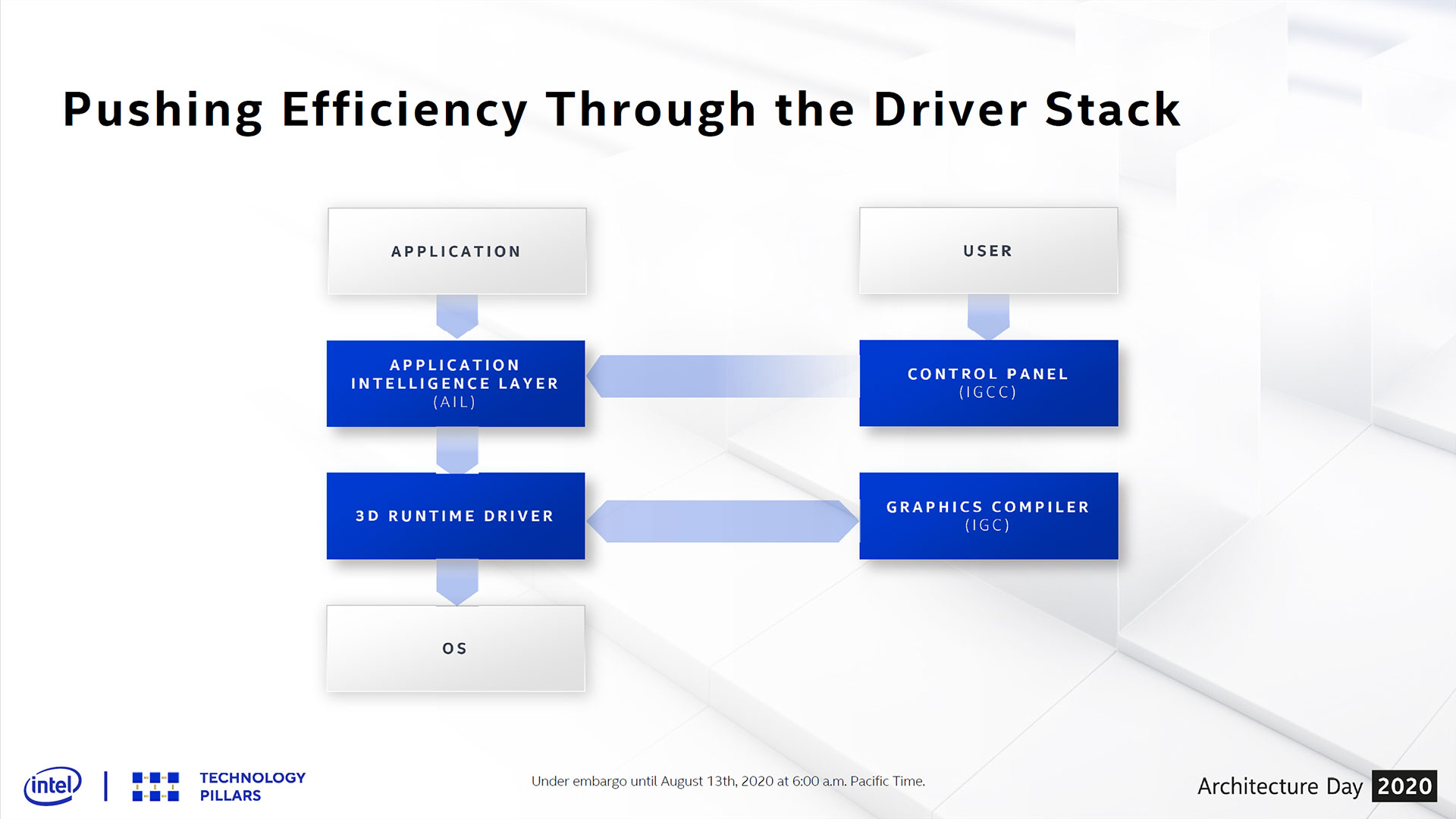

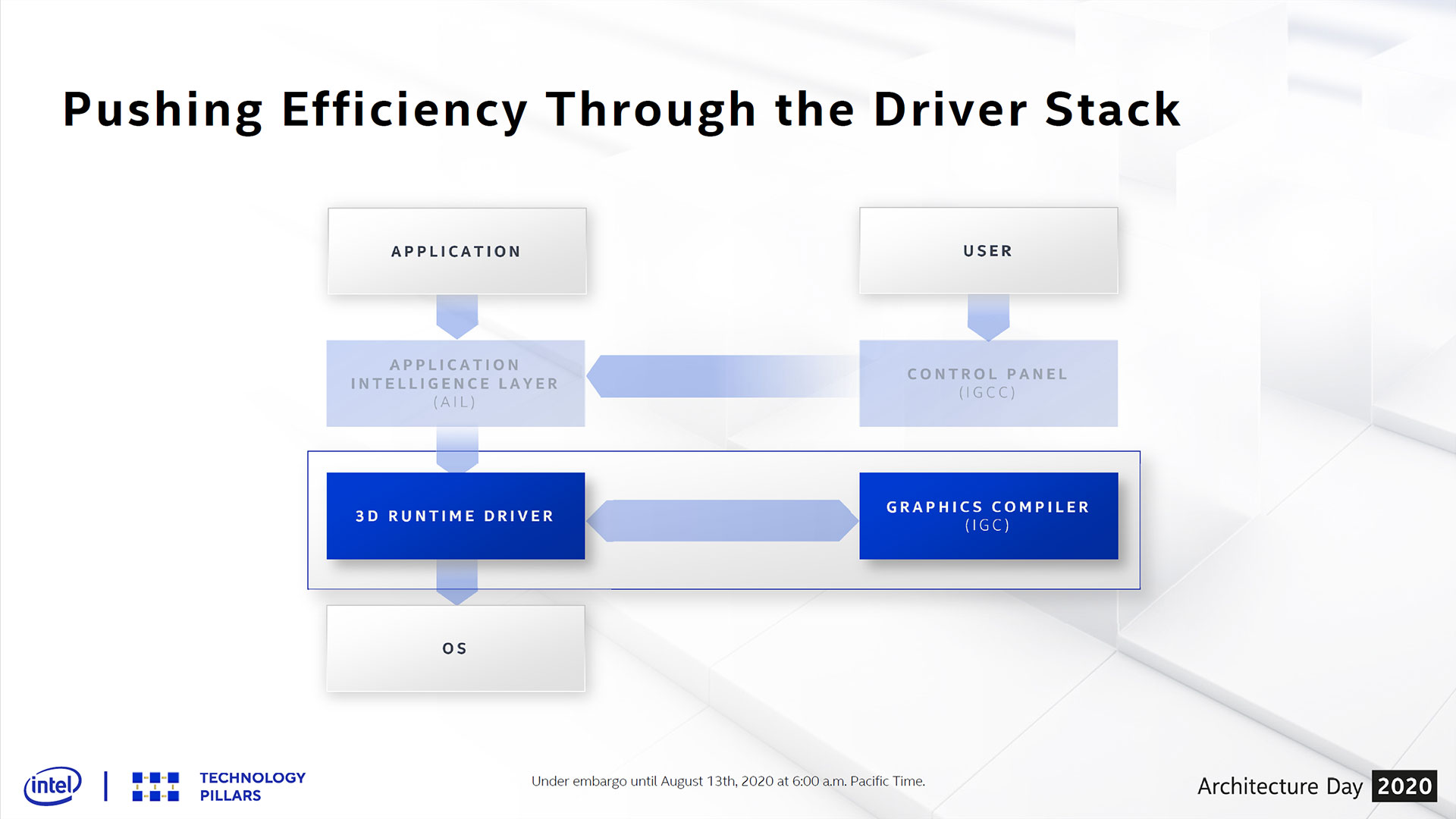

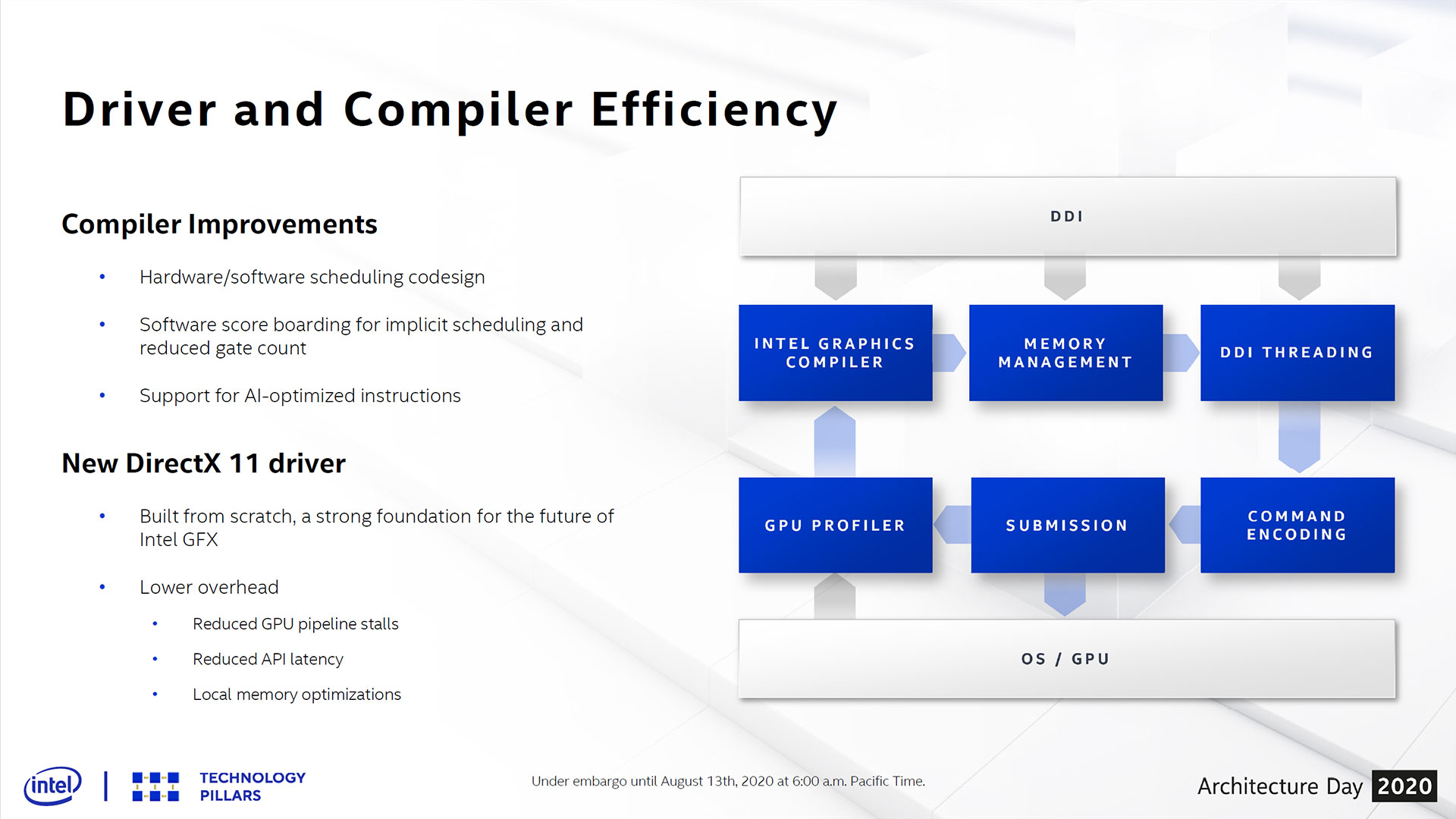

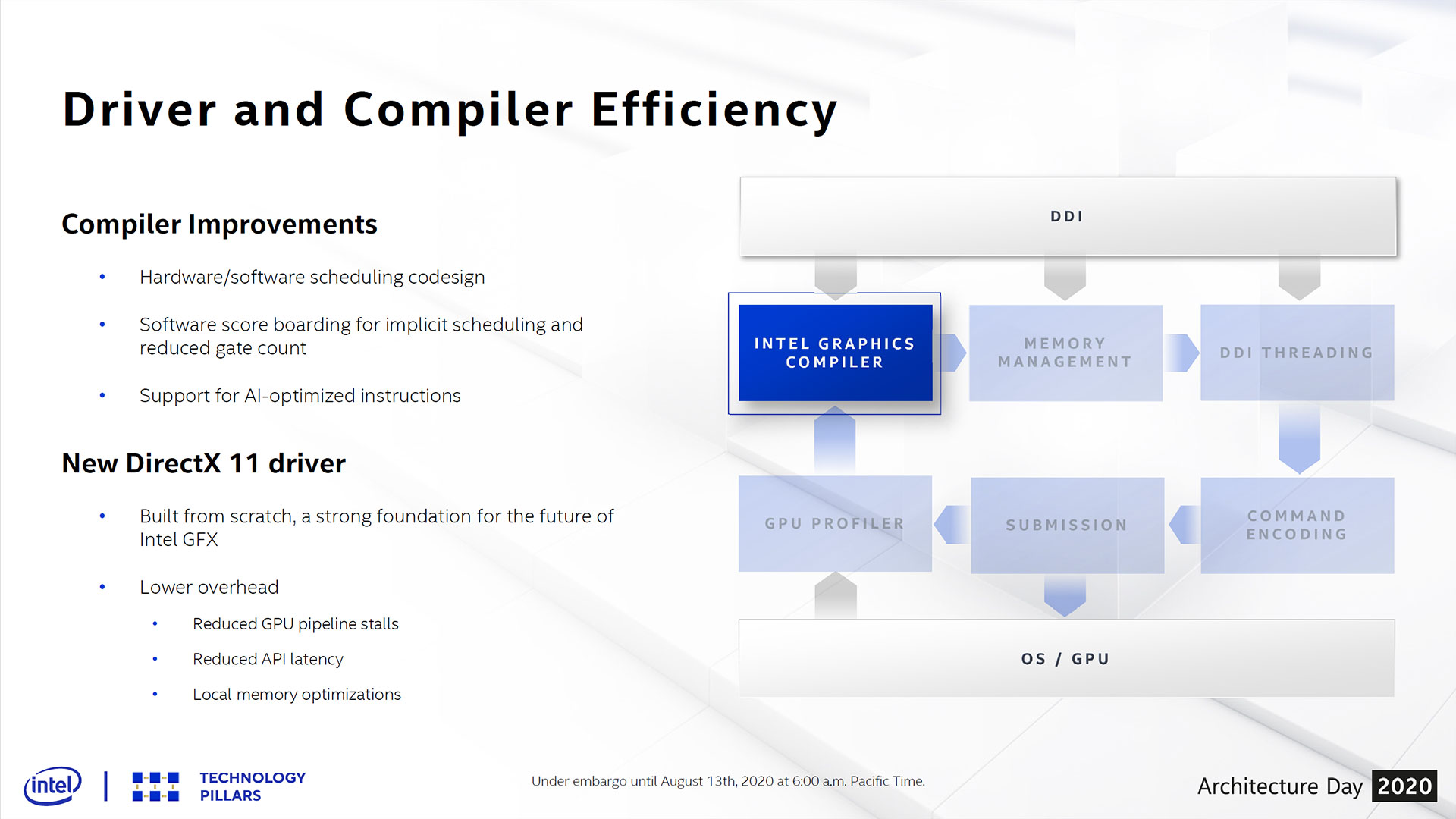

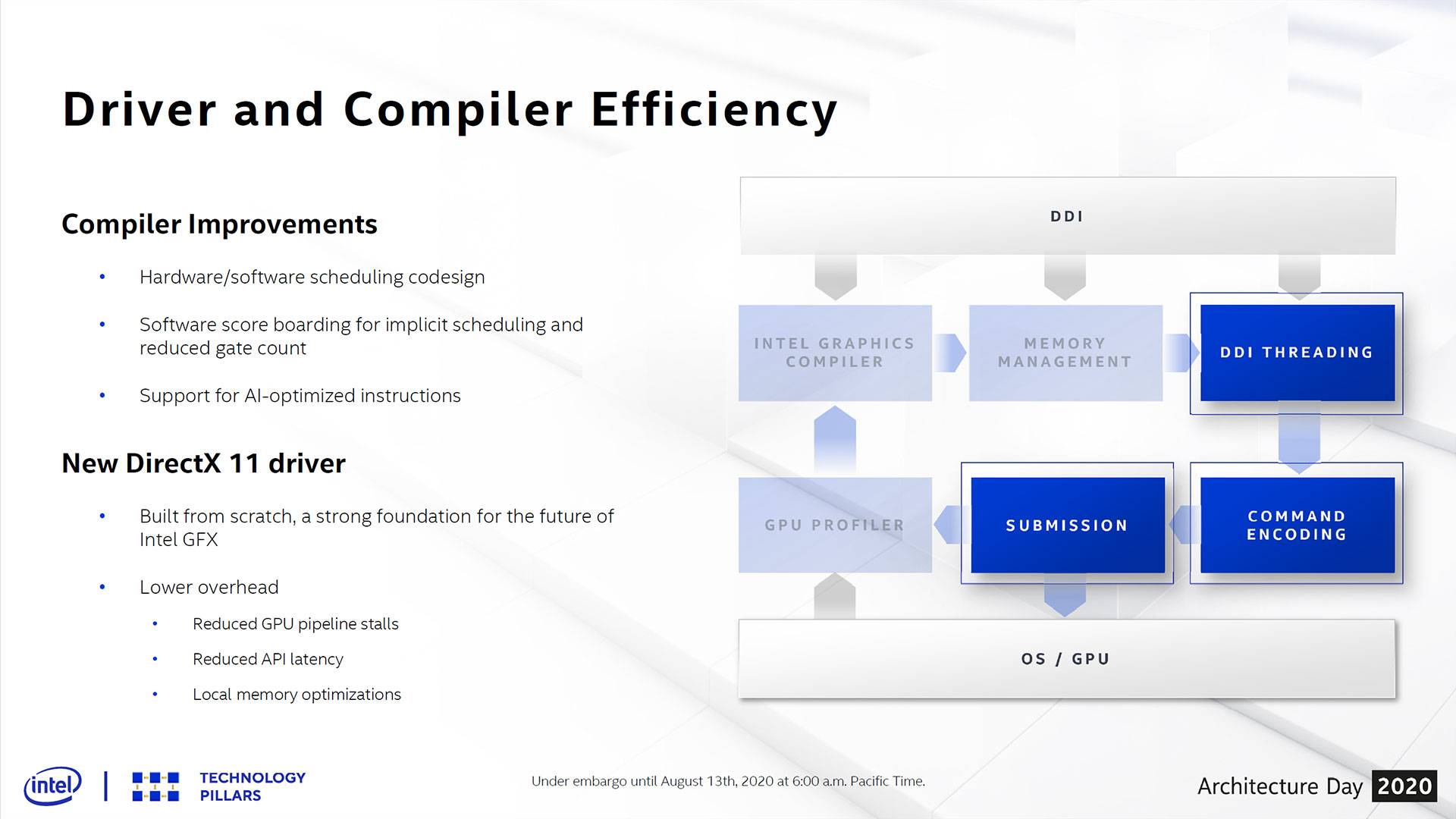

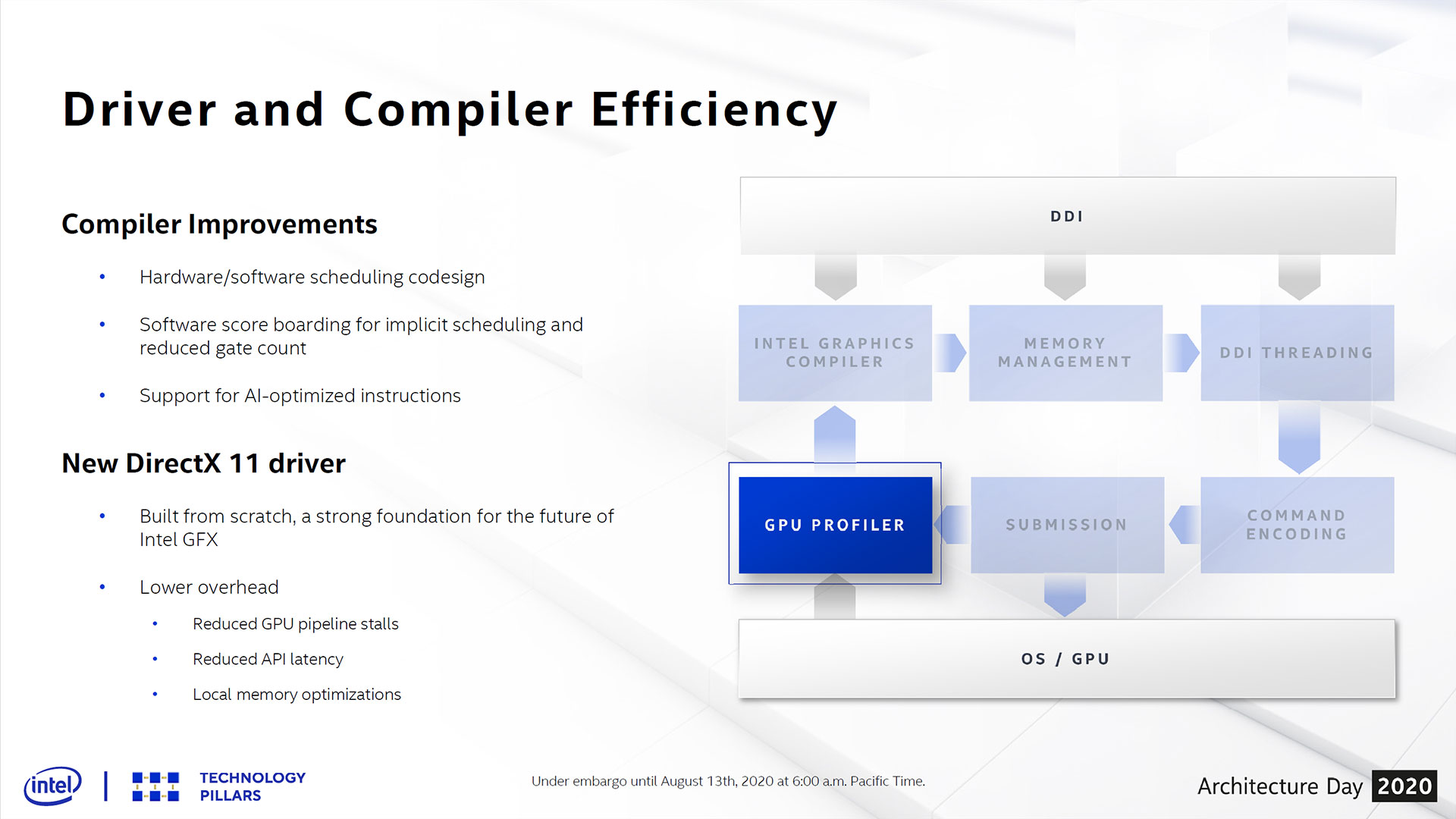

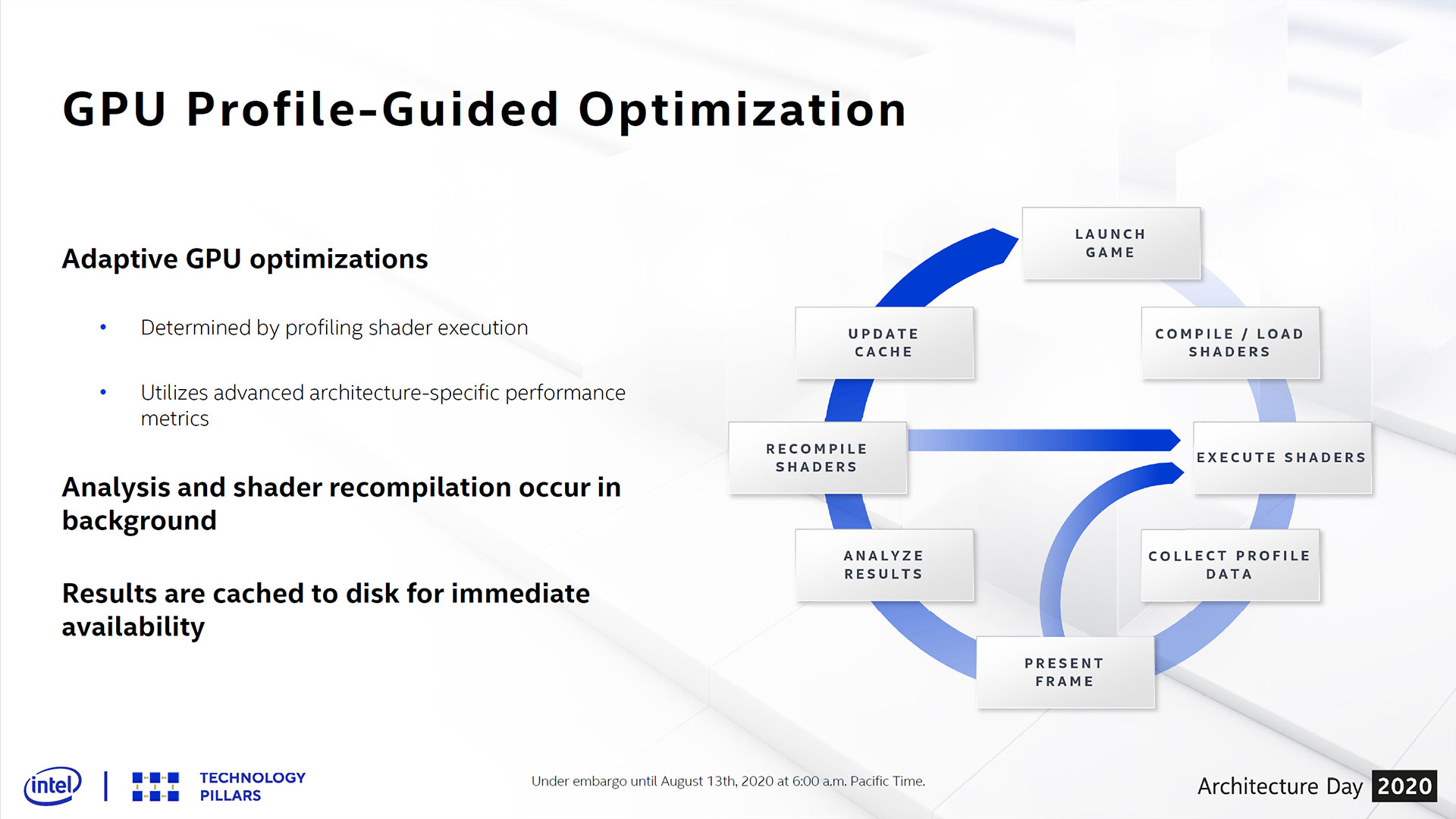

Which isn't to say that Intel doesn't still have work to do with its drivers. New games come out on a regular basis, and ensuring they'll run properly is a continuing challenge for any GPU company. Intel has already made changes with its driver team, and additional enhancements are coming with Xe LP. One change is moving more of the shader compiler work to software rather than hardware, which Intel says improves its optimization opportunities and reduces the complexity of the hardware.

Intel also has a new DirectX 11 driver coming, supposedly built from the ground up. That's a bit odd, considering the number of new games that don't use DirectX 11 (Doom Eternal, Red Dead Redemption 2, Death Stranding, Horizon Zero Dawn, and more). Still, DX11 is a popular API thanks to its ease of support. With the next generation consoles both adding support for ray tracing, however, future games are far more likely to use either DirectX 12 or Vulkan RT. One or the other is required for ray tracing, so we expect more game engines to switch to being fully DX12 or Vulkan RT.

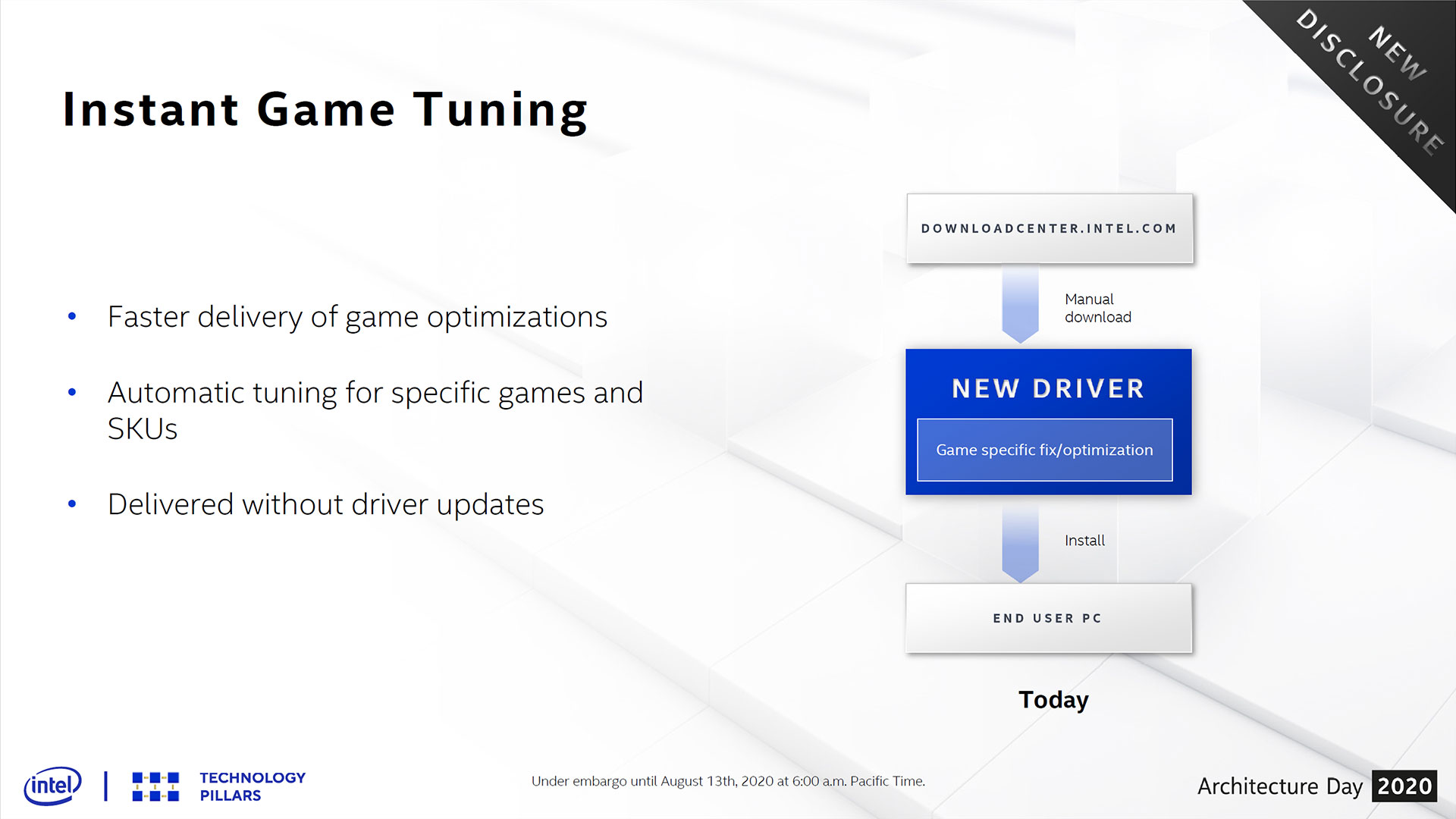

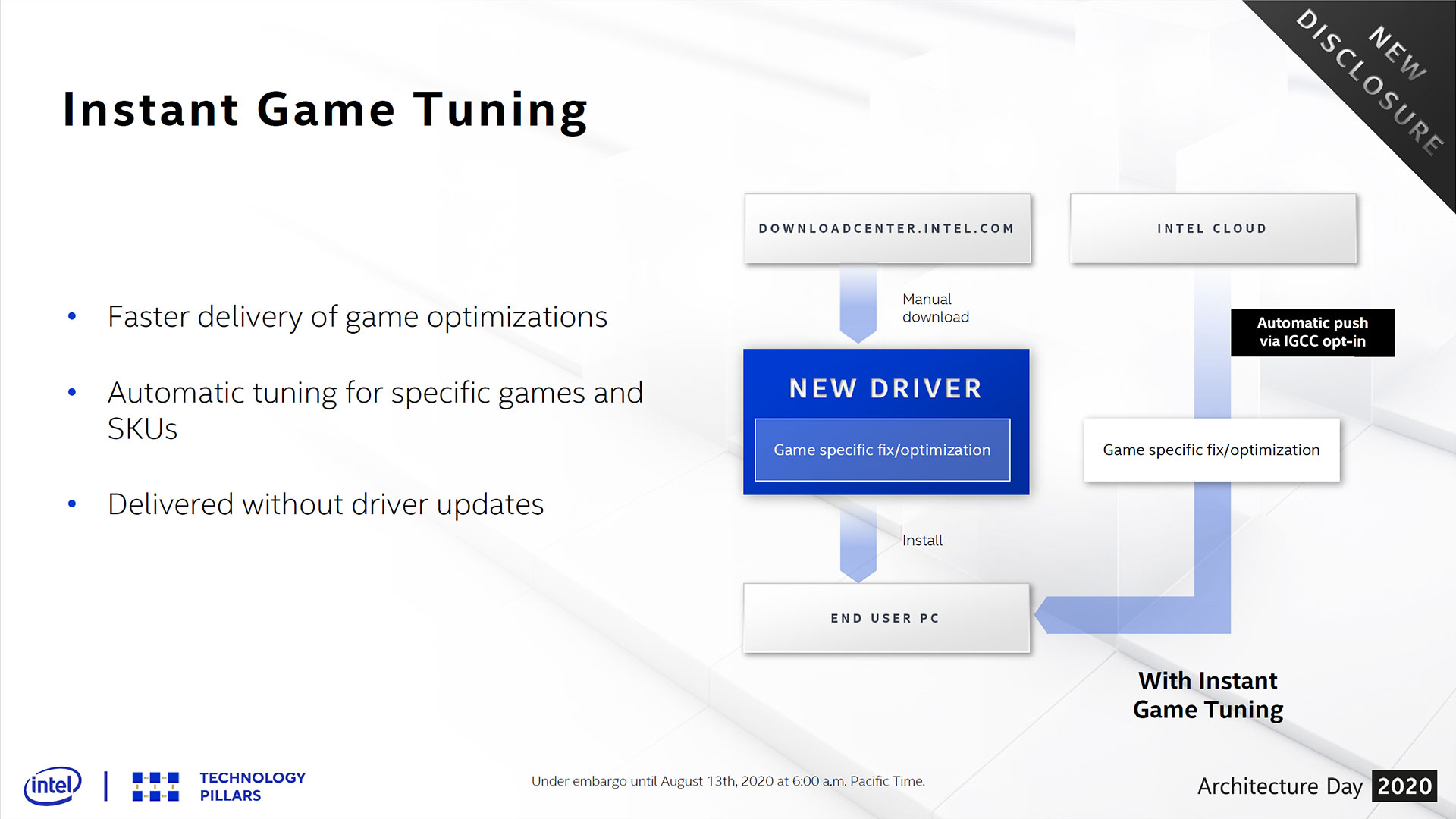

Another interesting aspect of Intel's driver strategy — and something that might be beneficial for AMD and Nvidia to copy — is "instant game tuning." The idea is to deliver updated profiles and optimizations without requiring end users to download and install a completely new driver. That should make updates go much faster and hopefully improve performance and compatibility, though we'll have to see the feature in action for a while before we can assess whether it truly works or not.

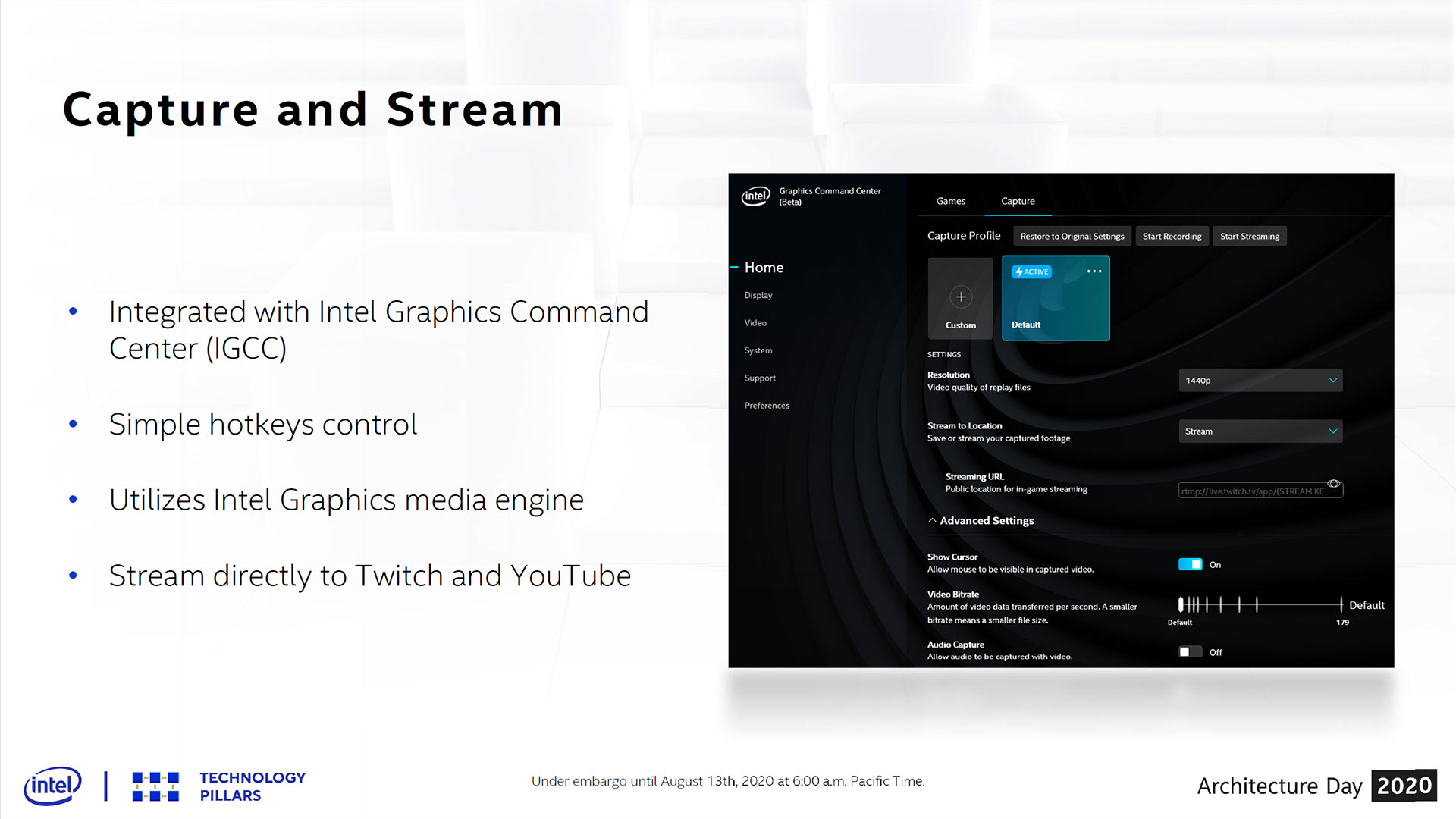

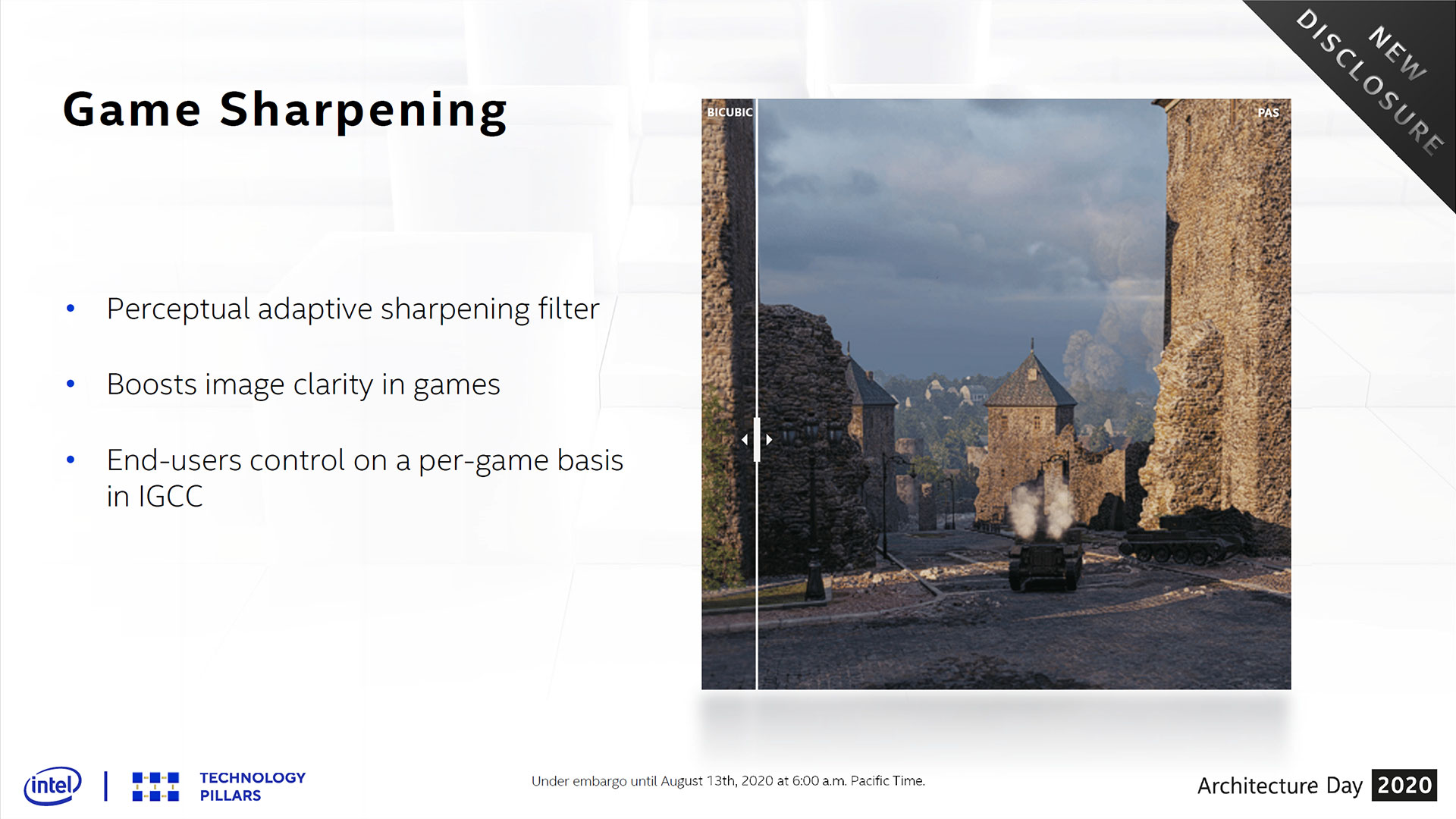

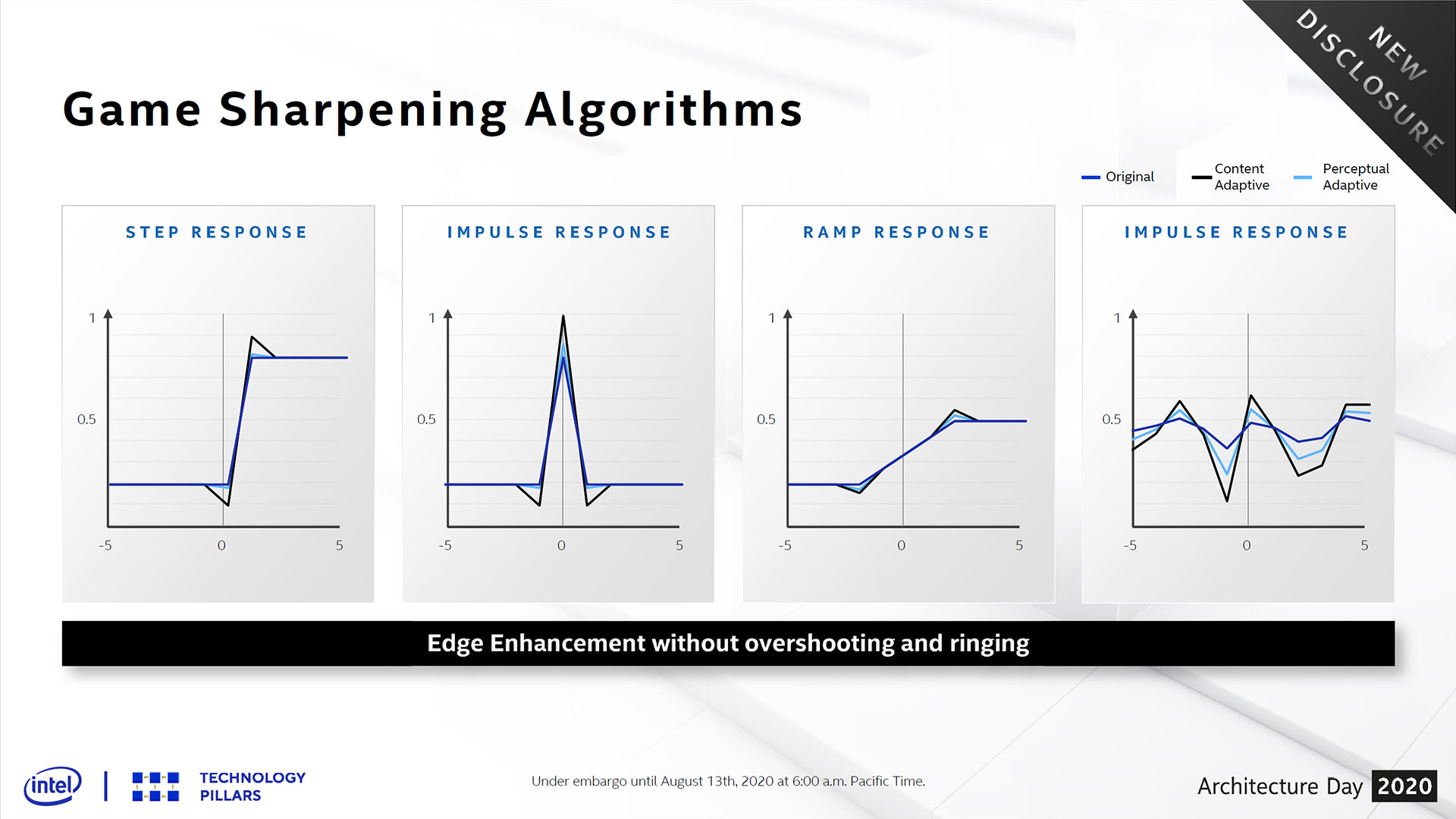

Intel is also adding game streaming and game sharpening algorithms to its drivers, both of which should be low overhead. This is Intel catching up with AMD and Nvidia in terms of standard GPU and driver features, but they're necessary features in today's gaming environment.

Closing Thoughts

Again, all of this applies primarily to Intel's Xe LP Graphics solutions. Serious gamers will be far more interested in what Intel has to offer with its Xe HPG solution, but we don't have nearly as many details on what's happening there. It should be much faster than Xe LP while benefiting from all of the other Xe enhancements discussed here, but we won't know how it performs until 2021.

As for Tiger Lake and Xe LP, all indications are that Intel will launch the new CPUs on September 2. How soon after the launch will we see the laptops? That depends on Intel's partners, but with back to (virtual) school season in full swing, we expect to see plenty of options available for purchase. I'm really hoping to be able to snag a reasonably priced model with a 28W TDP, which should prove fairly capable as a thin and light solution that can still play games. You can be sure we'll benchmark Xe LP as soon as we can get our hands on Tiger Lake.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

JarredWaltonGPU: It's going to be very interesting to see how Tiger Lake Xe LP stacks up to AMD's Renoir in the coming months. I suspect Renoir will maintain a CPU cores advantage, and a modest lead on GPU performance, but if Intel can do what it's claiming, this will easily be the most compelling Intel GPU ever. Fingers crossed.

watzupken: AMD Renoir will surely have a core count advantage since Tiger Lake is pretty much confirmed to cap out at 4c/8t. Graphic wise, we should see a good improvement with Xe graphics. However I feel if the performance is close to the enhanced Vega 8, then there is not much of an incentive for people to consider a Tiger Lake based laptop over a Renoir base laptop.

shrapnel_indie: It will probably be a premium part, pushing Iris to mid-tier... Exact and real-world performance has yet to be seen though. As pointed out, paper specs don't tell the whole story unless comparing the exact same architecture. (but, even then...)

spongiemaster:

watzupken said: However I feel if the performance is close to the enhanced Vega 8, then there is not much of an incentive for people to consider a Tiger Lake based laptop over a Renoir base laptop.

The reason to go with Intel is because the OEM's will produce a wider variety of models including more premium models than they will for AMD. So you're more likely to find a model that exactly fits your needs.

JarredWaltonGPU:

spongiemaster said: The reason to go with Intel is because the OEM's will produce a wider variety of models including more premium models than they will for AMD. So you're more likely to find a model that exactly fits your needs.

Sad but true. There are very few Renoir laptops available right now, compared to Intel models. I would love a great 15-inch with an IPS 2.5K display, not 4K or 1080p. The LG Gram 17-inch is probably as close as I can find to my ideal specs, outside of the CPU and GPU. One of those with Renoir 8-core (Ryzen 7 or 9) would be awesome, but then price would probably be in the $1,500 range as well, which is more than I want to spend.