Intel Unveils Xe DG1 Mobile Graphics in Discrete Graphics Card for Developers

Intel pulls back the covers on its Xe DG1 processors in a discrete graphics card for developers.

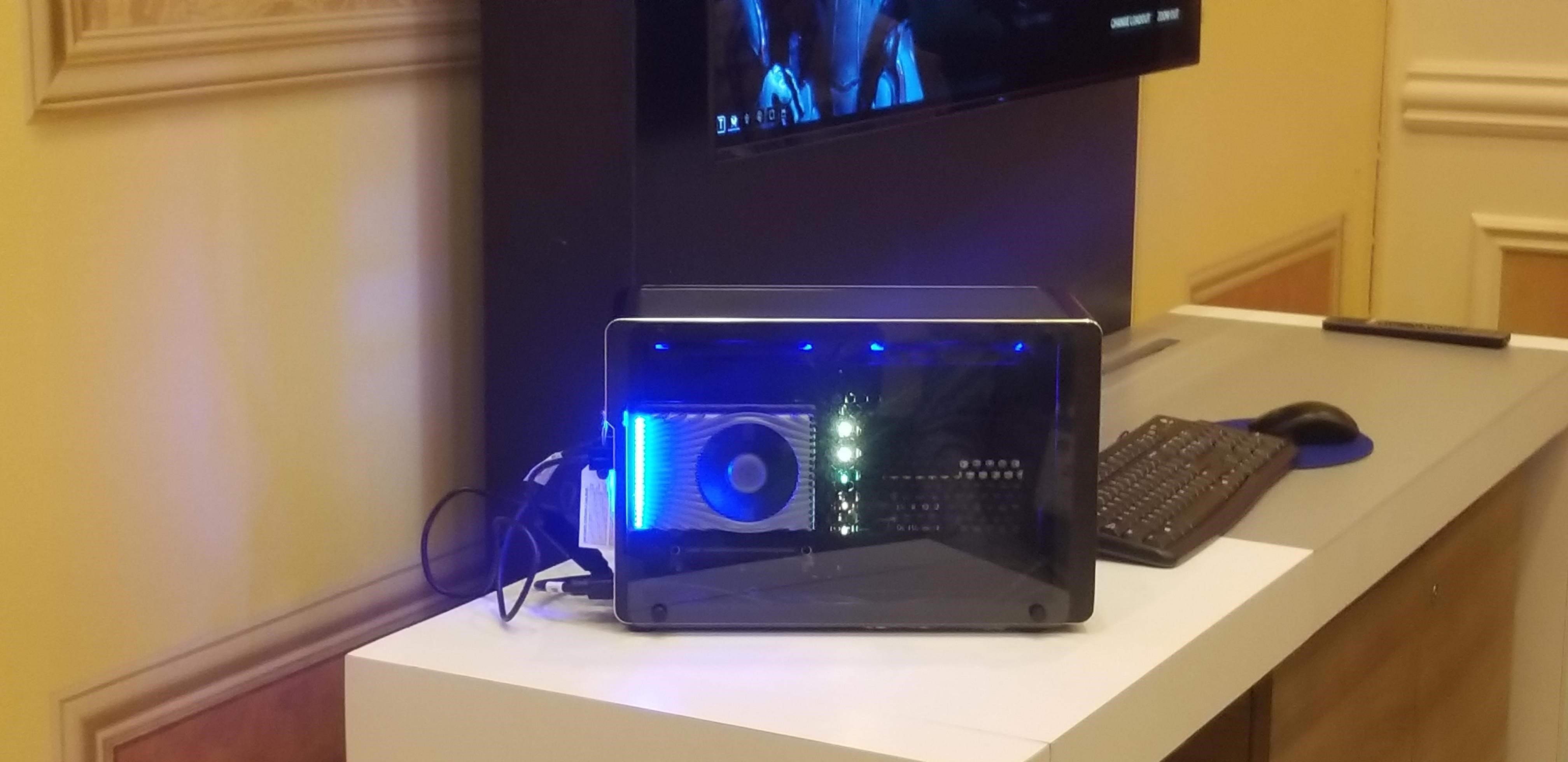

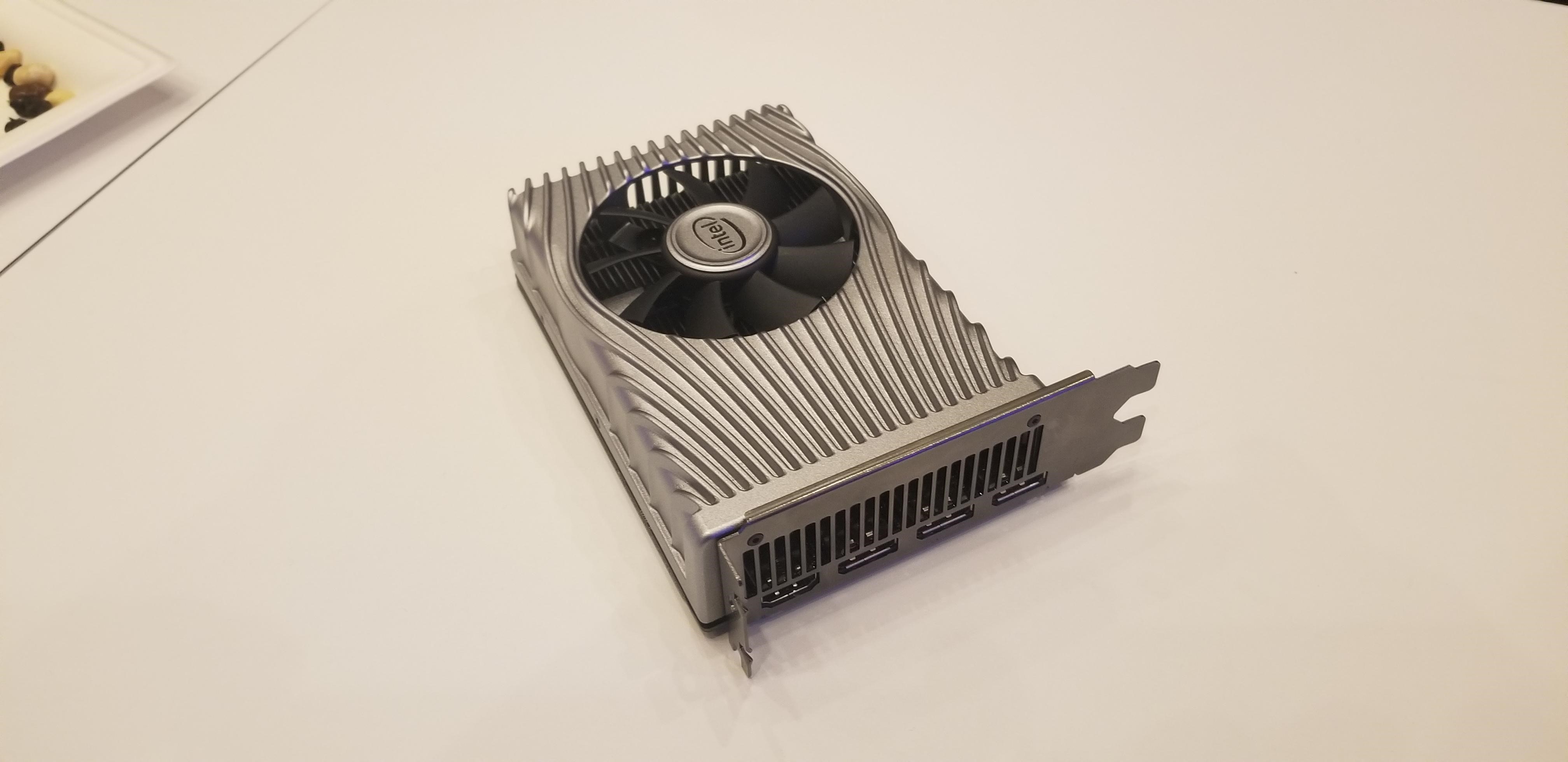

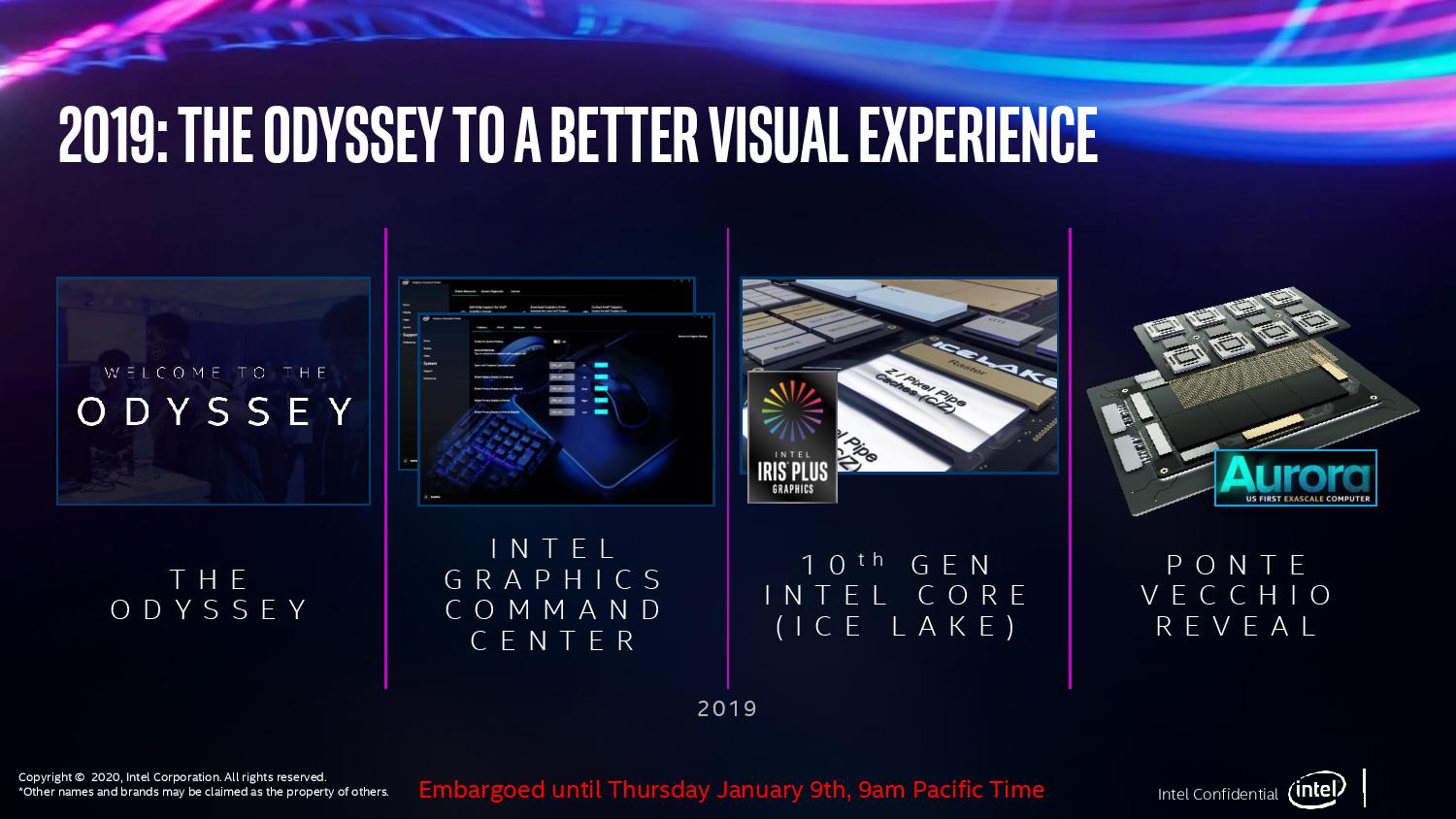

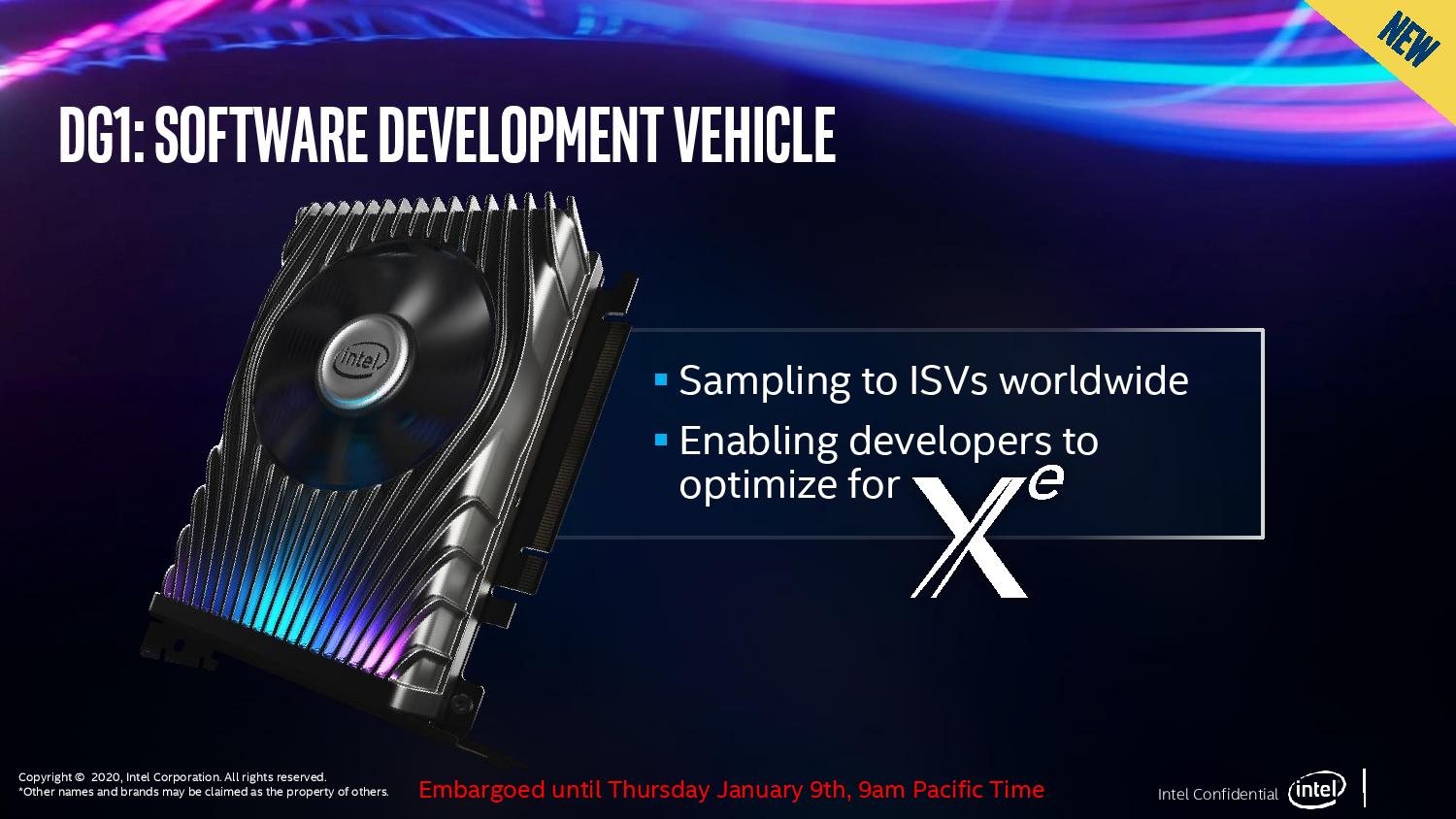

Intel revealed the first details of its DG1 discrete graphics here at CES 2020, but chose to peel the covers back slowly, with a few details sprinkled into a pre-brief and then an onstage demo of a discrete laptop graphics chip inside a Tiger Lake-powered notebook. Today the company pulled the covers back further as it revealed the new DG1 development kit and its DG1 mobile graphics in a standard graphics card form factor, which the company seeds to independent software vendors (ISVs) to help them optimize for its new graphics architecture. It also demoed the card running Warframe at 1080p.

Intel says the new Gen12 architecture is a major step forward, claiming it is four times faster than the Gen9.5 graphics architecture inside its current-gen processors. Given that this is a scalable design, that could prove impressive when ported over to larger chips.

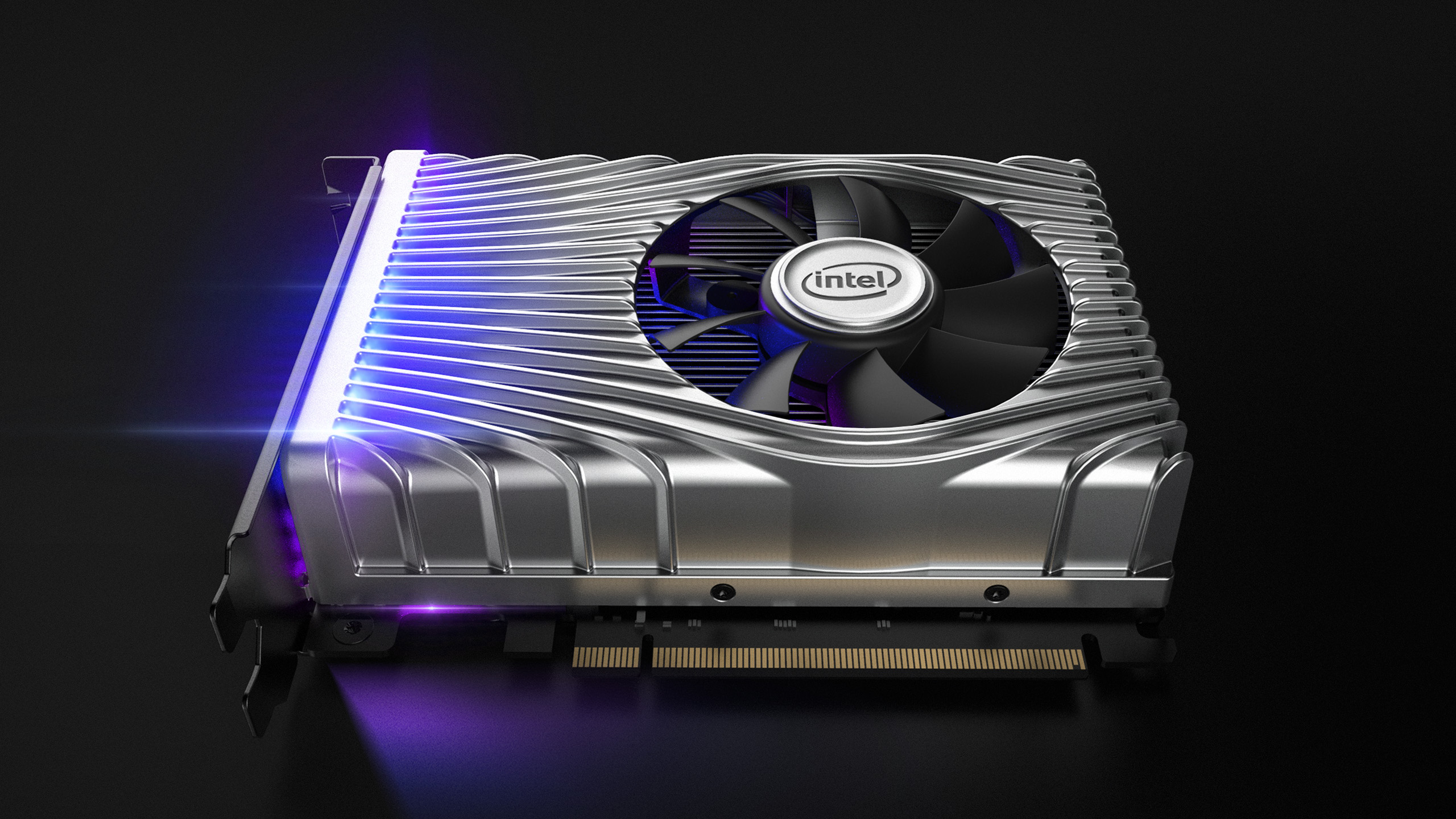

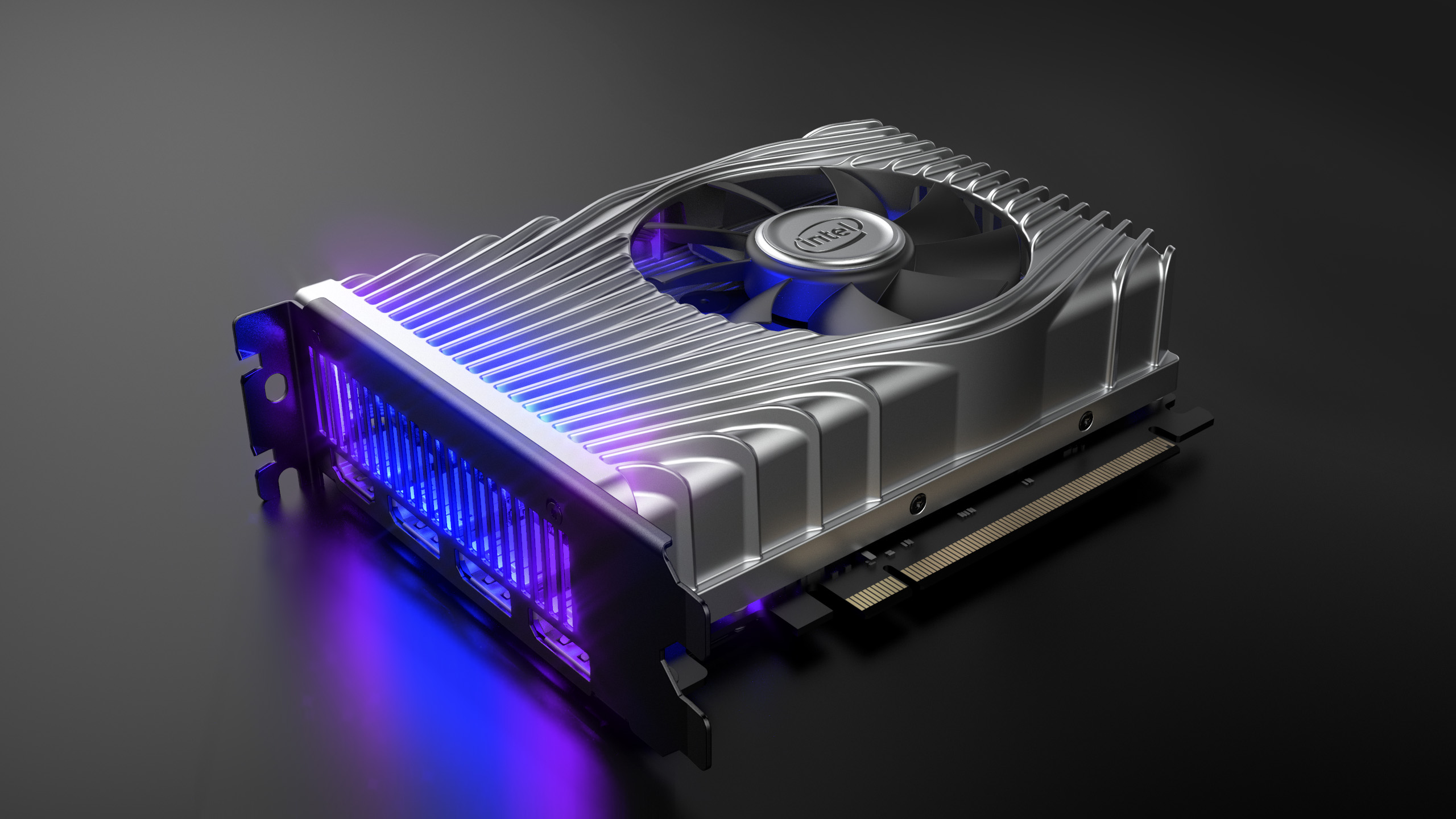

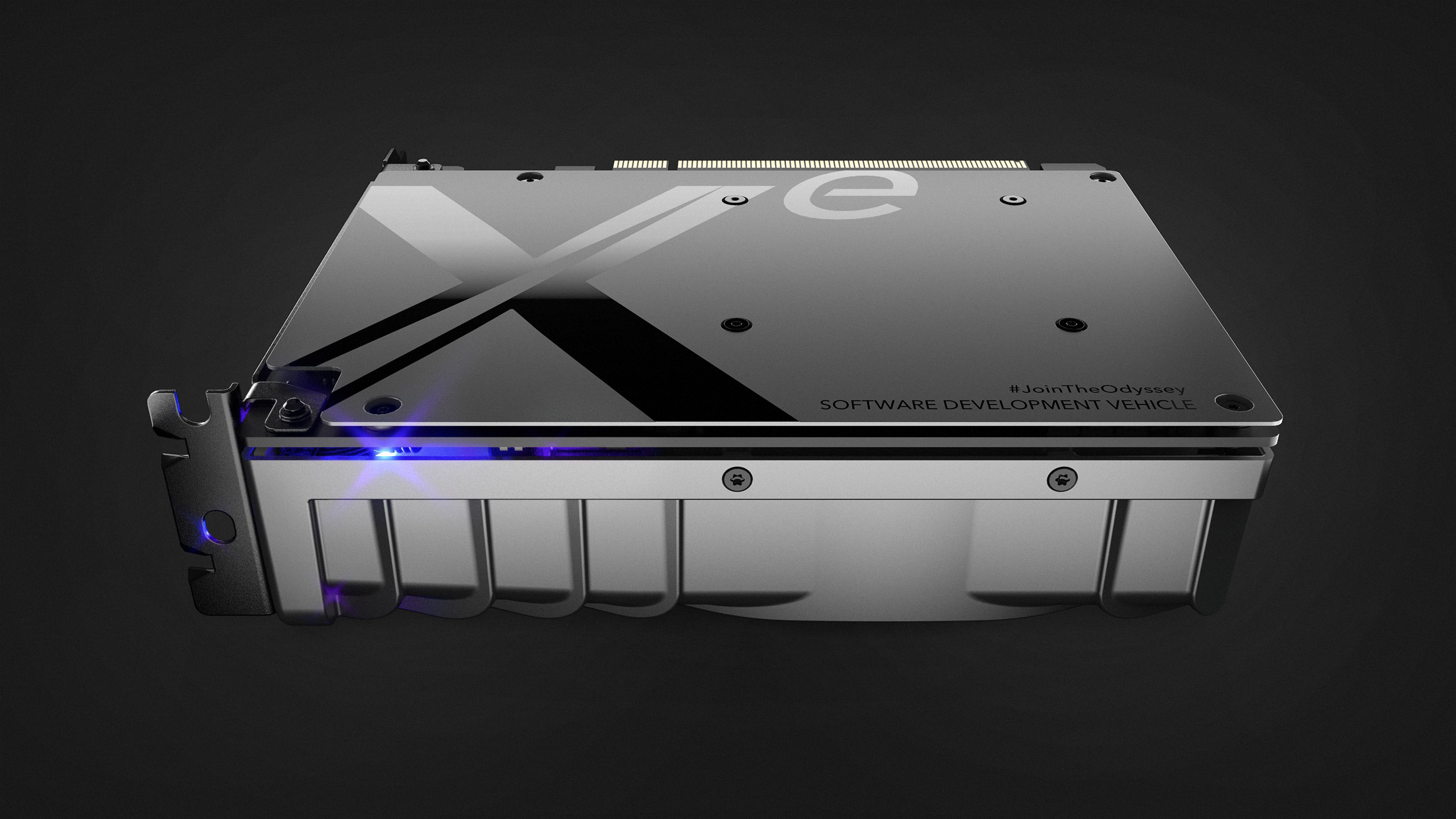

Intel is adamant that this current card is simply a vehicle to speed testing of its mobile GPU, but the Xe architecture will eventually arrive in some form as a discrete graphics card. It certainly looks an awful lot like an actual discrete graphics card, and even comes with addressable RGB lighting that Intel had set to its favorite shade of blue. When asked why Intel would go to this much trouble to create such an elaborate design for a development card, the company said it wants to show off its first discrete graphics unit in style and get feedback from the community.

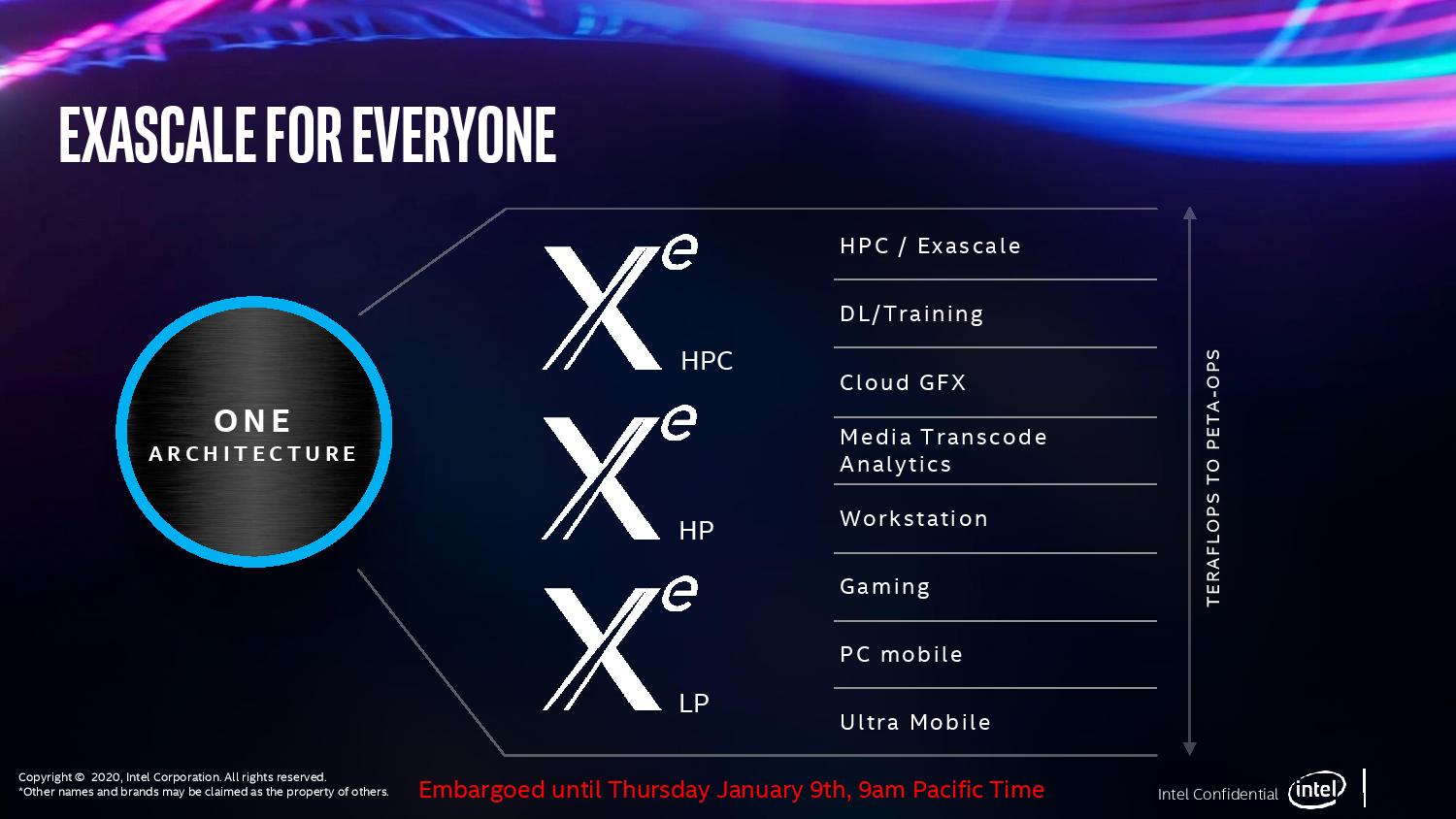

While Intel's first reveal of the new discrete mobile graphics unit was disappointing for some, particularly because it didn't come in the form of a graphics card for desktop PCs, it foreshadows Intel's imminent assault on the stomping grounds of AMD and Nvidia. It takes time to build out a full stack, and these are just the first steps on Intel's long journey to deliver a new end-to-end family of graphics solutions that spans from integrated graphics up to gaming cards and the data center.

From Intel's prior disclosures, we know that the Xe architecture will span across three segments: Xe-LP for low-power chips like the DG1 that will land in ultra-mobile products, Xe-HP for high performance variants, and Xe-HPC for chips destined for the high performance computing market.

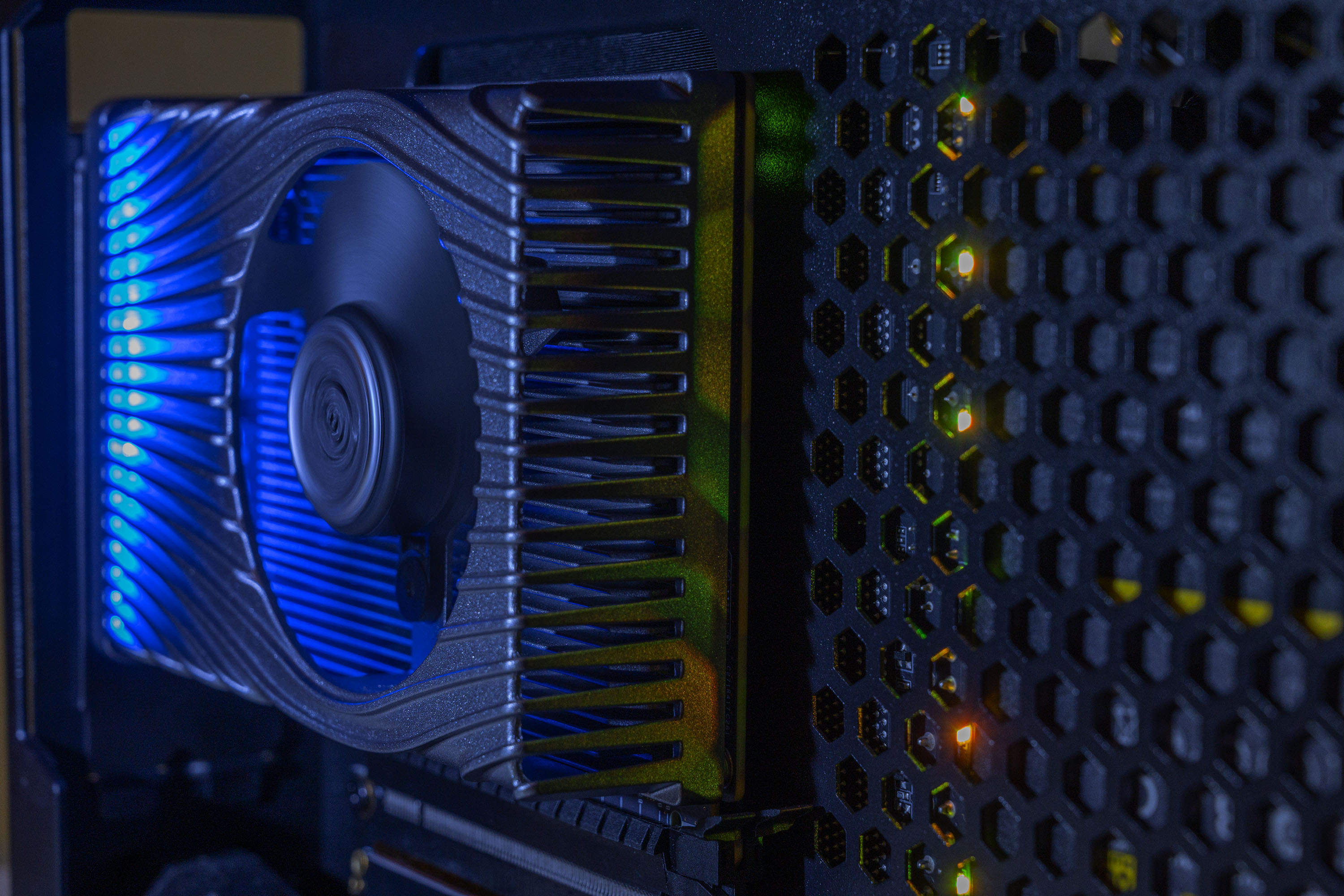

The development card, which houses Intel's DG1 graphics chip, is surprisingly heavy given that this is an Xe-LP model and likely consumes less than 25W, though Intel won't confirm any hard power details. The underlying DG1 test chip obviously doesn't consume more than 75W, which is evidenced by the lack of a PCIe auxiliary power connector (like an eight-pin), meaning this card pulls all of its power from the PCIe slot. The PCIe interface is x16-capable, but we aren't sure if it uses all 16 lanes, or if it has PCIe 3.0 or 4.0.
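The power reasoning above can be sketched in a few lines. This is a minimal illustration that assumes the commonly cited PCIe limits of 75W for an x16 slot, 75W for a six-pin connector, and 150W for an eight-pin connector; the function and constant names are our own, and none of this reflects measured DG1 power draw.

```python
# Commonly cited PCIe power limits in watts (assumed here, not measured).
SLOT_X16_WATTS = 75
AUX_CONNECTOR_WATTS = {"6-pin": 75, "8-pin": 150}


def max_board_power(aux_connectors=()):
    """Return the most power a card may draw from the slot plus aux connectors."""
    return SLOT_X16_WATTS + sum(AUX_CONNECTOR_WATTS[c] for c in aux_connectors)


# No auxiliary connector, as on the DG1 development card: slot power only.
print(max_board_power())
# For comparison, a card with a single eight-pin connector.
print(max_board_power(["8-pin"]))
```

With no auxiliary connectors in the list, the ceiling is the slot limit alone, which is why the missing connector caps the card at 75W.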

Here we see one HDMI port and three DisplayPort outputs, which implies the chip can support a minimum of four screens. The hefty outer shroud, which appears to be metallic, covers a thinner heatsink inside that runs the length of the card and is cooled by the fan.


Intel didn't show the actual DG1 silicon, or provide any technical details, like architectural design points, die size, or transistor counts. Intel also didn't verify the process node, though it is largely thought to be 10nm+. We also don't know what type of memory, or how much, feeds the graphics processor.

Here we see the metal backplate that covers the rear of the card. Intel emblazoned the backplate with Xe branding and "Software Development Vehicle."

We also see exhaust ports on the rear I/O bracket, but air can also flow through the front edge of the card via its slotted grill.

Intel currently samples the graphics card to ISVs via its developer kit, which is pretty much a standard Coffee Lake-based computer that comes in a small chassis with glass panels on either side. While Intel demoed DG1 in a Tiger Lake system at its press conference, the company said the GPU works with other processors as well. With the graphics card under power, we can see a row of LEDs that line the rear of the shroud near the I/O plate, and we're told these LEDs are addressable. Naturally, Intel had them set to a shade of blue.

Intel demoed the card running Warframe at 1080p with unspecified quality settings. Warframe is a third-person shooter that isn't very graphically demanding: The game merely requires a "DirectX 10-capable graphics card." Intel cautions that this is early silicon, but the game appeared to run slowly, with visible hitching and tearing during both the demo and our own brief hands-on. At times, the game appeared to use some type of foveated rendering technique, like variable rate shading (VRS), but that's hard to confirm given our limited time and the single title.

The DG1 is rumored to come with 96 EUs, and given the surfacing of drivers designed to enable multiple graphics units to work in tandem, it's possible it could work in an SLI-type implementation with the integrated graphics in Intel's processors, enabling powerful solutions. That would make sense because, as we learned with the Ponte Vecchio architecture announcements, Xe's inherent design principles appear to be based on a multi-chiplet arrangement. Intel hasn't verified either of those speculations but did mention that the card supports dynamic tuning to provide balanced power delivery. This technology sounds similar to AMD's new SmartShift technology unveiled here at CES that allows mobile platforms to shift power to the component under heaviest use, be it the CPU or GPU, to boost overall performance. Intel already has the underpinnings of this technology to manage processor thermals in low-power devices, but now it will extend that framework to the GPU, too.
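To make the power-sharing idea concrete, here is a toy sketch of splitting one shared budget between a CPU and a GPU in proportion to how busy each is. It is not Intel's dynamic tuning or AMD's SmartShift algorithm, neither of which has been detailed; the function name, the per-component floor, and the proportional rule are all assumptions made for illustration.

```python
def split_power_budget(total_watts, cpu_load, gpu_load, floor_watts=5.0):
    """Split a shared budget between CPU and GPU in proportion to their load.

    cpu_load and gpu_load are utilizations in [0, 1]. floor_watts is a
    hypothetical minimum that each component always keeps.
    """
    if total_watts < 2 * floor_watts:
        raise ValueError("budget too small to cover both floors")
    spare = total_watts - 2 * floor_watts
    total_load = cpu_load + gpu_load
    # With no load at all, split the spare budget evenly.
    cpu_share = 0.5 if total_load == 0 else cpu_load / total_load
    cpu_watts = floor_watts + spare * cpu_share
    return cpu_watts, total_watts - cpu_watts


# A GPU-heavy moment: most of the spare budget shifts to the GPU.
cpu_w, gpu_w = split_power_budget(30.0, cpu_load=0.2, gpu_load=0.8)
print(f"CPU: {cpu_w:.1f} W, GPU: {gpu_w:.1f} W")
```

A real implementation would also have to respect thermal limits and react over time, which this sketch ignores.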

Intel currently has a graphics install base of over a billion users, which comes courtesy of the integrated graphics chips in its CPUs that make Intel the world's largest GPU manufacturer. The company also has an IP war chest (at one point, it owned more graphics patents than the other vendors combined) to do battle. Intel pointed out that DG1 has "fabulous" media display engines, supports the latest codecs, and has "superfast" QuickSync capabilities. Intel also previously disclosed that the graphics engine supports INT8 for faster AI processing. That capability will enable exciting new levels of performance for applications, like creative and productivity apps, designed to use INT8.
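For readers unfamiliar with INT8, the sketch below shows the general idea behind it: floating-point values are mapped to 8-bit integers with a scale factor, the multiply-accumulate work is done on integers, and the result is rescaled once at the end. This is a generic symmetric quantization scheme written in plain Python for illustration; the function names are our own, and it says nothing about how DG1 exposes INT8 to software.

```python
def quantize_int8(values):
    """Map floats to the int8 range with one symmetric scale; return (ints, scale)."""
    peak = max(abs(v) for v in values)
    scale = peak / 127 if peak else 1.0
    ints = [max(-127, min(127, round(v / scale))) for v in values]
    return ints, scale


def int8_dot(a, b):
    """Approximate a float dot product using integer multiply-accumulate."""
    qa, scale_a = quantize_int8(a)
    qb, scale_b = quantize_int8(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer accumulation
    return acc * scale_a * scale_b  # rescale once at the end


a = [0.5, -1.0, 0.25]
b = [1.0, 0.5, -2.0]
print("float result:", sum(x * y for x, y in zip(a, b)))
print("int8 result: ", int8_dot(a, b))
```

The integer result is close to, but not exactly, the floating-point one. That small loss of precision is the trade-off for cheaper arithmetic.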

However, the company faces an uphill battle in getting developers to optimize for its new architecture, particularly because the software community is notoriously slow to adapt to new hardware designs. To be successful, Intel has to lay the groundwork and provide both hardware and software tools to developers to speed the process. Remember, Nvidia, the dominant graphics manufacturer, has more software engineers than hardware engineers, so the importance of having mature drivers, software, and development tools can't be overstated.

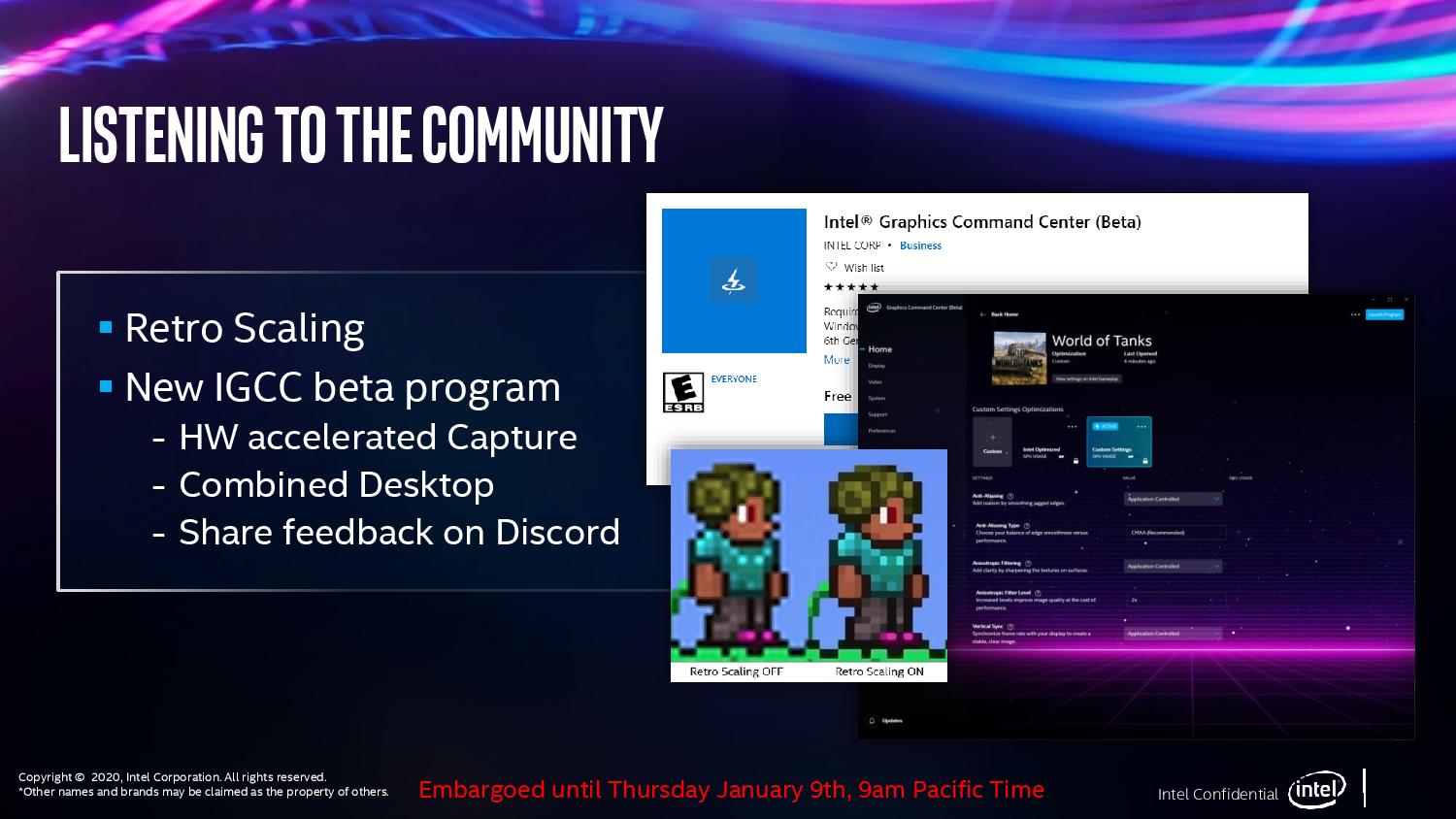

Intel has also taken initial steps to ramp up its graphics drivers by moving to a faster cadence of day-zero driver updates for its integrated graphics, and deploying its Graphics Command Center, which is a new user interface for Intel's control panel that allows you to alter several key aspects of your integrated graphics. We also know from our recent exclusive Intel overclocking lab tour that the company is already integrating the Xe graphics cards into its one-click overclocking software and other utilities. All of these efforts are designed to lay the groundwork for the eventual arrival of the more powerful models.

Intel is also engaging the enthusiast community through its far-ranging "Join the Odyssey" program, which is an outreach program designed to keep enthusiasts up to date on the latest developments through an Intel Gaming Access newsletter, outreach events, and even a beta program. The information-sharing goes both ways, though, as the company also plans to use the program to gather feedback from gamers to help guide its design decisions. As one example, the company recently added support for retro scaling in response to feedback gathered through the program.

Thoughts

So, it's actually happening. Even though Intel announced its new Xe graphics project seemingly eons ago, and even though we've seen plenty of evidence the silicon is real, the vestiges of Intel's failed Larrabee project hang thick in the air, serving as a reminder that even the world's largest semiconductor producer can fail in its attempt to penetrate a market that hasn't had any meaningful competition outside of the AMD and Nvidia duopoly in 20 years.

But now it's real. The arrival of actual working silicon, even though we can't see the chip and have no information on the key specs, lends credibility to Intel's efforts and shows that it is serious about fleshing out the full stack of Xe graphics solutions for the gaming market. To help speed the uptake, Intel will also help ISVs with its own team of ISV engineers and developer teams.

The company has already gotten the stamp of approval from the Department of Energy, the world's leading supercomputing organization, for its Ponte Vecchio graphics cards that will debut in the Aurora supercomputer. That design is far more complex than what we'd see in the consumer space, but it undoubtedly pulls in many of the same architectural components we'll see on Intel's future graphics cards.

And that sharing can go both ways: True to Intel's new six-pillar strategy, Intel's Ponte Vecchio uses all of the company's next-gen tech, like intricate interconnects and 3D chip stacking via Foveros, along with innovative new features like Rambo Cache. That gives the company an incredibly vast technology canvas to paint a new picture of the desktop GPU, but as always, it all boils down to process technology and software support.

As we know, software is often the biggest challenge, but Intel has certainly invested heavily to ensure its new graphics initiative has experience at the helm by recruiting top talent from several companies, like Raja Koduri and Jim Keller. Intel's developer outreach is a crucial step in the process of bringing up its graphics silicon, but it could be tough sledding, particularly if its architecture diverges significantly from traditional designs. Only time will tell how long this effort will take, and complexity is going to be a huge factor in uptake.

Intel has also amassed an amazing array of companies as it seeks to move into lucrative new markets that span from the edge to the cloud, but its success in all of these areas hinges on one central factor that has proven problematic: Process technology.

Intel's transition to the 10nm process has been painful, and many speculate that it still isn't suitable for economical high-volume manufacturing of large dies, so even though GPUs are well-suited to absorb high defect rates due to their repeating structures, using multiple small dies would be wise. Unfortunately, moving to a multi-chip arrangement as the key underlying design would be a first for a mainstream GPU, which could slow game developer optimizations. We've already seen dual-GPU systems die out over time, so transparently implementing a multi-chip architecture is going to be key.
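The case for small dies can be illustrated with a simple Poisson yield model, in which the fraction of defect-free dies falls off exponentially with die area. This is a textbook simplification, not Intel's yield data: the defect density below is a made-up value, the die sizes are arbitrary, and the model ignores the harvesting of partially defective GPU dies mentioned above.

```python
import math


def poisson_yield(die_area_mm2, defects_per_mm2):
    """Fraction of dies with zero defects under a simple Poisson model."""
    return math.exp(-die_area_mm2 * defects_per_mm2)


DEFECT_DENSITY = 0.005  # hypothetical defects per mm^2, not an Intel figure

big = poisson_yield(400, DEFECT_DENSITY)    # one large 400 mm^2 die
small = poisson_yield(100, DEFECT_DENSITY)  # one 100 mm^2 chiplet
print(f"400 mm^2 die yield:     {big:.1%}")
print(f"100 mm^2 chiplet yield: {small:.1%}")
```

Under these assumptions, a far larger share of the small dies comes out defect-free, so building a big GPU from several known-good chiplets wastes less silicon than betting on one large die.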

We don't know all of the details of the underlying hardware yet, but it is clear that Intel is moving quickly to get its silicon validated and in the hands of ISVs. We expect to learn more as the company moves closer to the launch of its Tiger Lake laptop processors, which are expected to debut this year.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

bigdragon

Warframe is a 7 year old game. If Xe DG1 struggles with Warframe, then I don't want to see how it handles something modern like Monster Hunter World or Forza Horizon 4. I'd like to see more competition in the GPU space, but I'm not seeing any reason to take Intel seriously yet. Too much marketing; not enough performance.

Get it together, Intel!

Giroro

"An executive told us to put integrated graphics into a dedicated GPU, and nobody knows why"

-A lead engineer at intel, probably

Really, though, What do you think the chances are that DG1 die is just a faulty CPU?

jimmysmitty

bigdragon said: Warframe is a 7 year old game. If Xe DG1 struggles with Warframe, then I don't want to see how it handles something modern like Monster Hunter World or Forza Horizon 4. I'd like to see more competition in the GPU space, but I'm not seeing any reason to take Intel seriously yet. Too much marketing; not enough performance.

Get it together, Intel!

Considering it's a mobile GPU being shown off and the information TH put in here, it's too early to judge it for the desktop market. But if it's as powerful as they claim it to be, we could see a decent iGPU from Intel to compete with AMD.

Giroro said: "An executive told us to put integrated graphics into a dedicated GPU, and nobody knows why"

-A lead engineer at intel, probably

Really, though, What do you think the chances are that DG1 die is just a faulty CPU?

I doubt it.

Considering that Intel is planning a discrete GPU for the desktop market and an HPC variant, they are probably already developing standalone GPU dies for testing.

grimfox

"We've already seen dual-GPU systems die out over time, so transparently implementing a multi-chip architecture is going to be key."

I disagree with this comparison. I think there are some pretty stark differences between what Intel is doing today compared to what nV and ATI were doing back in the day. From the ground up, Intel is designing this thing to be a multi-chiplet-based device. I half expect the same driver that runs a meager 24EU device to work on the 96EU or even a 384EU device. I think the fact that the first place they are deploying this design is a supercomputer lends further evidence that the quantity of chiplets has little effect on the firmware. In a supercomputer they could have 1000s of these devices networked together operating as a single massive processing unit.

There are certainly going to be limitations but I doubt they will be as painful as they were strapping two full chips together.

Pat Flynn

bigdragon said: Warframe is a 7 year old game. If Xe DG1 struggles with Warframe, then I don't want to see how it handles something modern like Monster Hunter World or Forza Horizon 4. I'd like to see more competition in the GPU space, but I'm not seeing any reason to take Intel seriously yet. Too much marketing; not enough performance.

Get it together, Intel!

As was stated in the article, this is early silicon. This means the drivers have an enormous amount of optimizations and bug fixes to be done, and the silicon likely needs to be tweaked once it's ready for the market. The developers of the games/game engines also need to optimize for this hardware, so again, more software work to be done. Also, being that this is a sub-75W card, don't expect screaming performance.

jimmysmitty

grimfox said: I disagree with this comparison. I think there are some pretty stark differences between what Intel is doing today compared to what nV and ATI were doing back in the day. From the ground up, Intel is designing this thing to be a multi-chiplet-based device. I half expect the same driver that runs a meager 24EU device to work on the 96EU or even a 384EU device. I think the fact that the first place they are deploying this design is a supercomputer lends further evidence that the quantity of chiplets has little effect on the firmware. In a supercomputer they could have 1000s of these devices networked together operating as a single massive processing unit.

There are certainly going to be limitations but I doubt they will be as painful as they were strapping two full chips together.

Honest truth is we should be screaming for Intel to have a competitive GPU. We have had a duopoly for so long that AMD and Nvidia have been guilty of working together to price fix.

Having a third option to put pressure on the other two is a great thing. AMD alone has been unable to really get prices down. Maybe Intel will be able to.

mcgge1360

Giroro said: "An executive told us to put integrated graphics into a dedicated GPU, and nobody knows why"

-A lead engineer at intel, probably

Really, though, What do you think the chances are that DG1 die is just a faulty CPU?

That's actually not a bad idea. Could be making graphics cards for dirt-cheap for builds that need an iGPU but don't need anything for gaming (cough cough Ryzen). Dead CPUs would be scrap anyways, so no cost there, strap on a $10 heat sink and $3 PCB and boop, you got a GPU capable of DX12 and 3/4 monitors for the price of a few coffees.

bit_user

Giroro said: "An executive told us to put integrated graphics into a dedicated GPU, and nobody knows why"

-A lead engineer at intel, probably

AMD's RX 550 has only 8 CUs, whereas their APUs have up to 11. So, it's not unprecedented to have a dGPU that's the same size or smaller than an iGPU.

Giroro said: Really, though, What do you think the chances are that DG1 die is just a faulty CPU?

No, that would be silly. Not least, because the dGPU will want GDDR5 or better, which the CPU doesn't support. The RX 550 I mentioned supports GDDR5, giving it 112 GB/sec of memory bandwidth, which is about 3x as fast as the DDR4 that 1st gen Ryzen APUs of the day could support. On bandwidth-bound workloads, that would give it an edge over a comparable iGPU.

Also, for security reasons, some of the iGPU's registers & memory might not be accessible from an external PCIe entity.

bit_user

mcgge1360 said: Dead CPUs would be scrap anyways, so no cost there,

Defects that would kill the entire CPU would probably be in places like the ring bus, memory controller, PCIe interface, or other common areas that would also compromise the iGPU's functionality.

It would be very unlikely to have defects in so many cores, especially if the iGPU is then somehow unaffected (or minimally so).

bit_user

jimmysmitty said: We have had a duopoly for so long that AMD and Nvidia have been guilty of working together to price fix.

Source?