Deepfake Yourself with Nvidia Maxine's Video Chat Tools

It's October now, which means it's mask season. Or, it would be if this whole year weren't mask season. At any rate, Nvidia seems to be getting in on the holiday cheer with its newly announced Nvidia Maxine video streaming platform, which (among other features) essentially lets you video chat while wearing a deepfake-style mask of...yourself. Maybe the result wasn't intended to be spooky, but judging by Nvidia's video demonstrations, Maxine is a perfect fit for October.

Maxine actually comes with a bunch of features, but the one that first caught my eye was its new AI-assisted video compression tool. Have you ever wanted to deepfake your own face? Or turn it into a virtual chat avatar that you then animate with something like FaceRig, the tool that's so common among virtual YouTubers like Kizuna AI? Because that's essentially what this tool does, all with the end goal of reducing bandwidth and (maybe) improving video streaming quality.

Rather than constantly sending video data to whoever you're chatting with, this new video compression tool sends them a static picture of your face, then reads the movements of your lips, eyes, cheeks and other key facial features to animate that picture on the other end using AI. Nvidia gives an example of a video stream using nearly 100KB per frame vs. an AI-compressed stream using just 0.12KB per frame, a roughly 800X reduction in size. The result is a mostly realistic depiction of what you actually look like talking, but with much less data being sent over the network. Emphasis on "mostly."
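To put Nvidia's per-frame numbers in more familiar terms, here's a quick back-of-the-envelope calculation. It's only a sketch: it uses the approximate 100KB and 0.12KB per-frame figures quoted above and assumes a 30 fps call, which is our assumption rather than a figure from Nvidia.

```python
# Back-of-the-envelope bandwidth comparison using the approximate per-frame
# sizes Nvidia quoted. The 30 fps frame rate is an assumption, not Nvidia's figure.

FPS = 30  # assumed frame rate for a typical video call


def compression_ratio(conventional_kb: float, ai_kb: float) -> float:
    """How many times smaller the AI-compressed frame is than the conventional one."""
    return conventional_kb / ai_kb


def bitrate_kbps(kb_per_frame: float, fps: int = FPS) -> float:
    """Convert kilobytes per frame into kilobits per second (1 byte = 8 bits)."""
    return kb_per_frame * 8 * fps


conventional = 100.0  # "nearly 100KB per frame" for the regular video stream
ai = 0.12             # "just 0.12KB per frame" for the AI-compressed stream

print(f"ratio: {compression_ratio(conventional, ai):.0f}x")
print(f"conventional: {bitrate_kbps(conventional):.0f} kbps")
print(f"AI-compressed: {bitrate_kbps(ai):.1f} kbps")
```

The quoted figures work out to roughly 833X, or about 24,000 kbps versus under 30 kbps at 30 fps. Keep in mind this ignores the one-time cost of sending the initial reference picture of your face.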

Because the compression tool isn't actually sending video but is instead animating a static picture, it has to make some guesses, which results in things like blurry teeth, fuzzy edges and an animatronic-style feel on some motions. It's up to you whether a lower bandwidth cost is worth some uncanny valley imagery, but Nvidia's example video does feel a little like watching an alien wearing a skin suit.

Assuming those kinks get worked out, it still feels odd that we could eventually live in a future where video chats use computer-generated facsimiles of our own faces...which we would operate using actual video of those same faces. And, like deepfakes, this raises questions about potential impersonation. Could I send someone a picture of Tim Cook and just map my facial movements to his face? But given that this is currently being positioned as a developer-focused tool rather than a consumer-facing one, companies might consider the tradeoff in realism worth the performance gains.

Of course, Maxine doesn't stop just at recreating your face. It's also promising AI-powered 'enhancements,' like Face Re-animation. The concept here is pretty simple. Say you're focusing your eyes on a certain corner of your monitor screen, or tilting your head off to the side so you can look at a second monitor. Much like the AI video compression outlined above, Face Re-animation will use a still reference image and your facial movement data to adjust how you look on camera so that you appear to be looking directly at the screen, with your eyes focused on its center.

Nvidia's example video shows that this still has a ways to go, as the re-animated face is distinctly lower quality than the input data and stutters a bit as it moves to the center. It also comes with the same uncanny valley quality as the AI video compression tool. But assuming this all gets worked out, I could see something like this being helpful for workers who need to multitask during meetings, or even students dealing with overly aggressive virtual learning software punishing them for not looking directly at the screen.


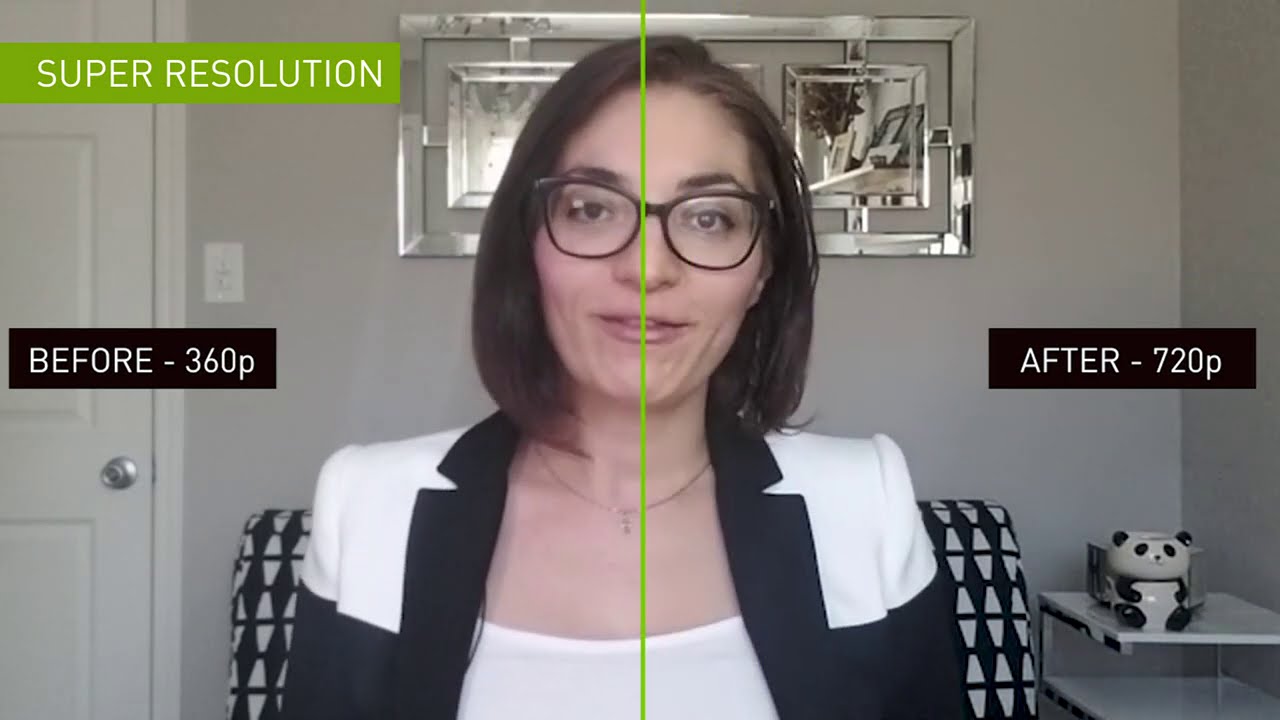

On the less unsettling end of the spectrum, Maxine also promises AI-assisted video upscaling, which could help those who don't have the best webcams, as well as features similar to RTX Voice's noise reduction and Nvidia Broadcast's auto-frame. Nvidia's demo video also briefly shows off tools for live language translation and for mapping facial movements to cartoon avatars, which might help offset the uncanny valley nature of Maxine's AI compression and Face Re-animation tools. We currently don't know much about these features, but they seem like they'd be genuinely helpful whether or not someone is a developer.

For now, Nvidia Maxine isn't coming straight to consumers. Instead, Nvidia is offering third-party firms free cloud access to it, which they can then use to improve their own software. That's probably good: while running these tools locally on your own RTX card could improve performance, keeping them in the cloud will make them more accessible to the average person and go further toward normalizing them. Still, communications firm Avaya is the only partner that has announced it's using Maxine so far, so don't expect to see these features popping up in your Zoom calls anytime soon.

All jokes aside, as work-from-home continues to be the new normal across plenty of industries, it's not surprising to see companies like Nvidia step up to try to make these spaces easier and more professional. Even if it means they have to walk through the uncanny valley first.

Michelle Ehrhardt is an editor at Tom's Hardware. She's been following tech since her family got a Gateway running Windows 95, and is now on her third custom-built system. Her work has been published in publications like Paste, The Atlantic, and Kill Screen, just to name a few. She also holds a master's degree in game design from NYU.

w_barath This is going to be awesome for video games, specifically for RPG/MMO for both conversation assets, and for players to have conversations with each other in character.

ProfQuatermass The only trouble is, most of us move about and display objects and our hands.

Then there is the cost of owning this technology at both ends of a call....