Analysis: PhysX On Systems With AMD Graphics Cards

Rarely does an issue divide the gaming community like PhysX has. We go deep into explaining CPU- and GPU-based PhysX processing, run PhysX with a Radeon card from AMD, and put some of today's most misleading headlines about PhysX under our microscope.

CPU PhysX: The x87 Story

CPU PhysX and Old Commands

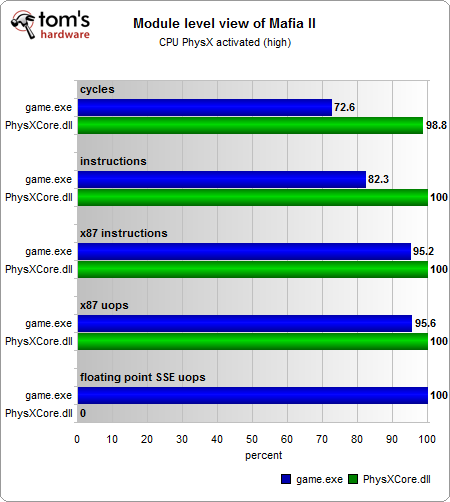

In an interesting article at Real World Technologies, David Kanter used Intel’s VTune to analyze CPU-based PhysX. The results showed loads of x87 instructions and x87 micro-operations.

- Explanation: x87 is the small part of the x86 architecture’s instruction set that is used for floating-point calculations. It is a so-called instruction set extension, implemented in hardware to solve common numerical tasks faster (sine and cosine calculations, for example). Since the introduction of the SSE2 instruction set, the x87 extension has lost much of its former importance. It remains important, however, for calculations that require a 64-bit mantissa, which is only possible with the 80-bit-wide x87 registers.
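To make the mantissa point concrete, here is a minimal Python sketch. Python's own floats are 64-bit doubles with a 53-bit mantissa, so the 64-bit mantissa of the x87 extended format is only emulated here with integer arithmetic. The helper `round_to_mantissa` is a hypothetical name introduced for illustration; it is not part of any library or of PhysX.

```python
import sys


def round_to_mantissa(value: int, bits: int) -> int:
    """Round a non-negative integer to the nearest value that fits in
    `bits` significant binary digits (ties go to the even neighbor)."""
    if value < 0 or bits < 1:
        raise ValueError("value must be >= 0 and bits must be >= 1")
    excess = value.bit_length() - bits
    if excess <= 0:
        return value  # already fits, nothing is lost
    quotient, remainder = divmod(value, 1 << excess)
    half = 1 << (excess - 1)
    # Round up when past the halfway point, or exactly halfway and odd.
    if remainder > half or (remainder == half and quotient & 1):
        quotient += 1
    return quotient << excess


# An integer that needs 54 significant bits: too wide for a double,
# but comfortably inside a 64-bit mantissa.
n = 2**53 + 1

print("double mantissa bits:", sys.float_info.mant_dig)
print("exact value:         ", n)
print("53-bit mantissa:     ", round_to_mantissa(n, 53))
print("64-bit mantissa:     ", round_to_mantissa(n, 64))
print("Python float agrees: ", int(float(n)) == round_to_mantissa(n, 53))
```

With a 53-bit mantissa the trailing 1 is rounded away, while the emulated 64-bit mantissa keeps the value exact. That extra headroom is the only thing the wider x87 format offers over SSE2 doubles.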

David speculated that optimizing PhysX code using the more modern and faster SSE2 instruction set extension instead of x87 might make it run more efficiently. His assessment hinted at 1.3 to 2 times better performance. He also carefully noted that Nvidia would have nothing to gain from such optimizations, considering the company’s focus on people using its GPUs.

We reproduced these findings using Mafia II instead of Cryostasis, switching back to our old Intel-based test rig because VTune unfortunately would not work with our AMD CPU.

Assessment

Our own measurements fully confirm Kanter's results. However, the predicted performance increase from merely changing the compiler options is smaller than the headlines from SemiAccurate might indicate. Testing with the Bullet Benchmark only showed a difference of 10% to 20% between the x87- and SSE2-compiled files. This might seem like a big increase on paper, but in practice it’s rather marginal, especially if PhysX only runs on one CPU core. If the game wasn’t playable before, this little performance boost isn’t going to change much.
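A rough back-of-the-envelope sketch shows why a faster physics path changes so little on screen. It assumes a simple model in which each frame's time splits into a physics part and everything else, and only the physics part gets faster. The base frame rate of 20 fps and the 50% physics share are purely hypothetical values chosen for illustration, not measurements from our tests, and `frame_rate_after_speedup` is a made-up helper name. The speedup factors are the ones quoted above: 1.1 to 1.2 from the Bullet test, 1.3 to 2.0 from Kanter's estimate.

```python
def frame_rate_after_speedup(base_fps: float,
                             physics_share: float,
                             physics_speedup: float) -> float:
    """Estimate the new frame rate when only the physics portion of
    each frame gets faster (a simple Amdahl's-law style model)."""
    frame_time = 1.0 / base_fps
    physics_time = frame_time * physics_share
    other_time = frame_time - physics_time
    return 1.0 / (other_time + physics_time / physics_speedup)


# Hypothetical scenario: an unplayable 20 fps, with half of every
# frame spent on CPU physics. Both numbers are assumptions.
BASE_FPS = 20.0
PHYSICS_SHARE = 0.5

for speedup in (1.1, 1.2, 1.3, 2.0):
    fps = frame_rate_after_speedup(BASE_FPS, PHYSICS_SHARE, speedup)
    print(f"physics {speedup:.1f}x faster -> {fps:.1f} fps")
```

Under these assumptions, a 10% to 20% faster physics path lifts 20 fps to roughly 21 or 22 fps, and even a doubling only reaches about 26.7 fps. The smaller the physics share of the frame, the smaller the gain.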

Nvidia wants to give a certain impression by enabling the SSE2 setting by default in its SDK 3.0. But ultimately it’s still up to developers to decide how and to what extent SSE2 will be used. The story above shows that there’s still potential for performance improvements, but also that some news headlines are a bit sensationalistic. Still, even after putting things in perspective, it’s obvious that Nvidia is making a business decision here, rather than doing what would be best for performance overall.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


eyefinity So it's basically what everybody in the know already knew - nVidia is holding back progress in order to line their own pockets.

Emperus Is it 'PhysX by Nvidia' or 'PhysX for Nvidia'..!! It's a pity to read those lines wherein it says that Nvidia is holding back performance when a non-Nvidia primary card is detected..

It looks like the increase in CPU utilization with CPU PhysX is only 154%, which could be 1 thread plus synchronization overhead with the main rendering threads.


eyefinity The article could barely spell it out more clearly.

Everyone could be enjoying cpu based Physics, making use of their otherwise idle cores.

The problem is, nVidia doesn't want that. They have a proprietary solution which slows down their own cards, and AMD cards even more, making theirs seem better. On top of that, they throw money at games devs so they don't include better cpu physics.

Everybody loses except nVidia. This is not unusual behaviour for them; they are doing it with tessellation now too - slowing down their own cards because it slows down AMD cards even more, when there is a better solution that doesn't hurt anybody.

They are a pure scumbag company.

rohitbaran In short, a good config to enjoy PhysX requires selling an arm or a leg, and the game developers and nVidia keep screwing the users to save their money and propagate their business interests respectively.

iam2thecrowe The world needs OpenCL physics NOW! Also, while this is an informative article, it would be good to see which single nVidia cards make games using PhysX playable. Will a single GTS 450 cut it? Probably not. That way budget gamers can make a more informed choice, as there's no point choosing nVidia for PhysX and finding it doesn't run well anyway on mid-range cards, so they could have just bought an ATI card and been better off.

archange Believe it or not, this morning I was determined to look into this same problem, since I just upgraded from an 8800 GTS 512 to an HD 6850. :O

Thank you, Tom's, thank you Igor Wallossek for making it easy!

You just made my day: a big thumbs up!

jamesedgeuk2000 What about people with dedicated PPUs? I have 8800 GTX SLI and an Ageia PhysX card, where do I come into it?

skokie2 What the article fails to mention (and if what I see is real, it's much more predatory) is that simply having onboard AMD graphics, even if it's disabled in the BIOS, stops PhysX working. This is simply outrageous. My main hope is that AMD finally gets better at Linux drivers so my next card does not need to be nVidia. I will vote with my feet... so long as there is another name on the slip :( Sad state of graphics generally, and it's been getting worse since AMD bought ATI.. it was then that this game started... nVidia just takes it up a notch.