Nvidia's SLI Technology In 2015: What You Need To Know

SLI Scaling In Synthetic Benchmarks

We've all seen plenty of SLI scaling benchmarks, where the analysis focused on some piece of test data, such as "SLI scaling in game x at preset y is z%". Unfortunately, reality is more complex than that, and almost all of those single-number tests don't tell you the whole story. Without some sort of context on what is limiting scaling, it's almost impossible to truly understand the technology's potential.

Together, let's explore SLI scaling in more detail starting with simple synthetic benchmarks. Later, we'll move on to real-world games.

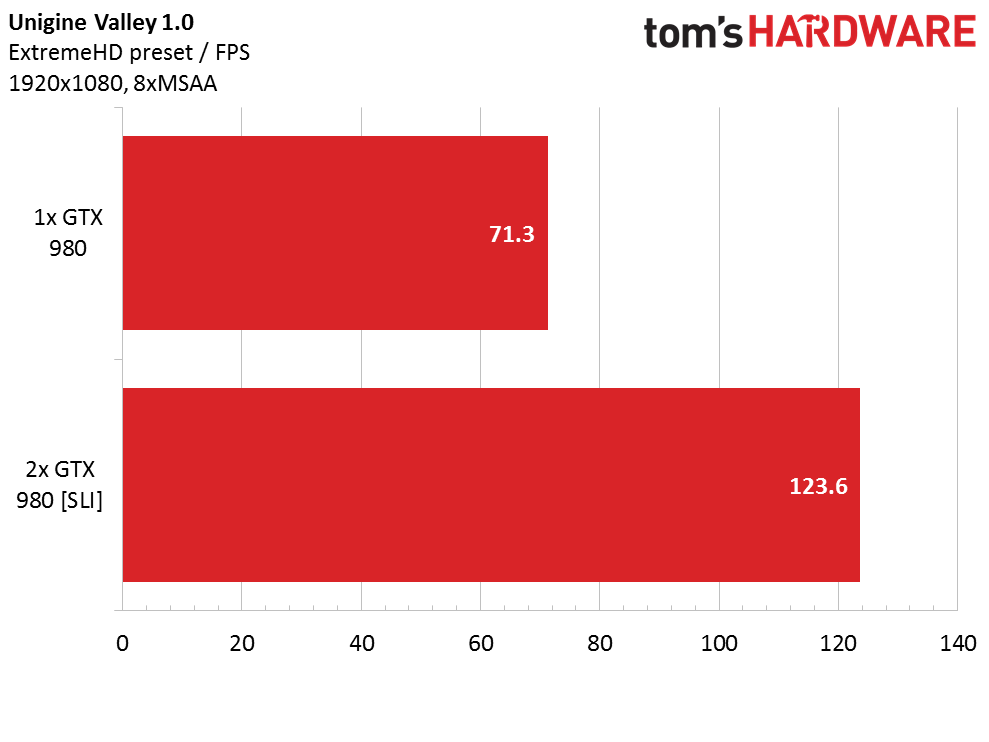

Unigine Valley's Extreme HD preset runs at 1080p with 8x MSAA. As we'll see from testing other settings, the system's bottleneck shifts from the GPUs to the CPU above about 150 FPS, which explains the apparent scaling of "only" 73% in this scenario.

What's really happening is that scaling for most scenes is actually close to 100%, while some of the highest-FPS sequences are CPU-limited with SLI active. This leads to a lower average for that benchmark.
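To make that averaging effect concrete, here is a minimal sketch. The per-scene frame rates are hypothetical and are not our measured data. It assumes equal-length scenes, perfect 2x GPU scaling, and a hard CPU ceiling at the roughly 150 FPS mentioned above. The function names are ours, chosen for illustration only.

```python
def scaling_percent(single_fps, sli_fps):
    """SLI gain over a single GPU, in percent (100% = ideal for two GPUs)."""
    return (sli_fps / single_fps - 1.0) * 100.0


def simulate_run(scene_fps_single, cpu_cap_fps, gpu_count=2):
    """Model a benchmark run made of equal-length scenes.

    Each scene scales perfectly with gpu_count until it hits the CPU cap.
    Returns (average single-GPU FPS, average SLI FPS, overall scaling %).
    """
    single = [min(f, cpu_cap_fps) for f in scene_fps_single]
    sli = [min(f * gpu_count, cpu_cap_fps) for f in scene_fps_single]
    avg_single = sum(single) / len(single)
    avg_sli = sum(sli) / len(sli)
    return avg_single, avg_sli, scaling_percent(avg_single, avg_sli)


# Hypothetical single-GPU frame rates for four scenes (assumption, not measured).
scenes = [60.0, 70.0, 80.0, 100.0]
avg_single, avg_sli, overall = simulate_run(scenes, cpu_cap_fps=150.0)

print(f"single: {avg_single:.1f} FPS, SLI: {avg_sli:.1f} FPS, scaling: {overall:.1f}%")
```

In this toy run, the two slower scenes double cleanly while the two faster ones hit the ceiling, so the averaged figure lands well below 100% even though the GPUs themselves scale perfectly.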

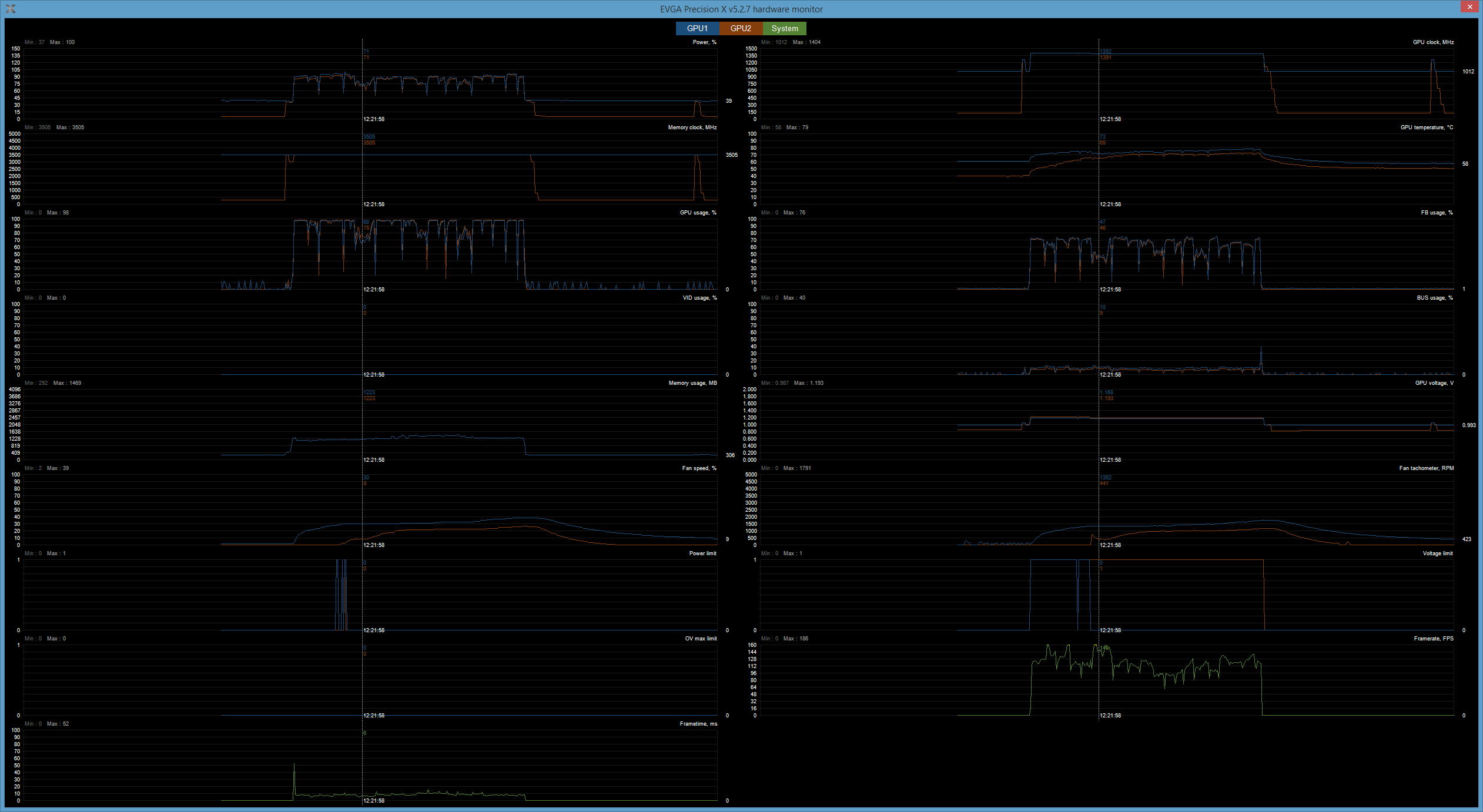

Notice that, at about 12:21 PM in the data, very high frame rates (above 150 FPS) are coupled with decreased GPU core utilization. That's our evidence that the bottleneck is shifting (in this case, to the CPU). Second, despite the 980's lower memory bandwidth, the video memory controller is never a bottleneck in this test; it hovers at 80% utilization. Third, look at how low the PCIe link utilization is, even though the link is only eight lanes wide. We're reporting about 10% there, though Nvidia considers that metric inaccurate and does not use it internally.
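The same check can be applied to a logged trace: flag samples where the frame rate is high but GPU core utilization has dropped. The sketch below uses made-up sample values, not readings from our chart. The 150 FPS floor comes from the text above; the 90% utilization ceiling is an assumption picked for illustration. A flagged sample only shows that the GPU is not the limiter. Attributing the limit to the CPU is an inference.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    timestamp: str
    fps: float
    gpu_util: float  # GPU core utilization, in percent


def likely_not_gpu_limited(samples, fps_floor=150.0, util_ceiling=90.0):
    """Return samples with high FPS but a GPU core that is not saturated."""
    return [s for s in samples if s.fps > fps_floor and s.gpu_util < util_ceiling]


# Hypothetical log entries (illustrative only, not measured data).
log = [
    Sample("12:20:58", 118.0, 98.0),
    Sample("12:21:04", 162.0, 81.0),
    Sample("12:21:10", 171.0, 76.0),
    Sample("12:21:16", 124.0, 97.0),
]

flagged = likely_not_gpu_limited(log)
for s in flagged:
    print(f"{s.timestamp}: {s.fps:.0f} FPS at {s.gpu_util:.0f}% GPU core utilization")
```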

Now for more demanding resolutions...

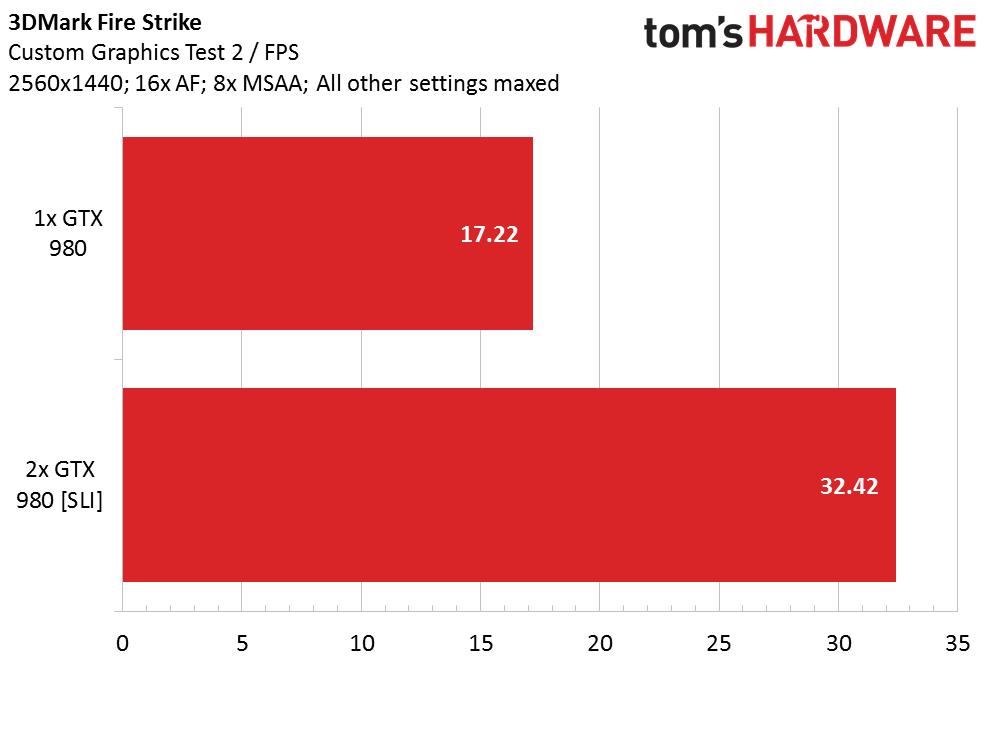

In order to properly assess SLI scaling, we want to give the GPUs a more taxing workload. How about the Custom Graphics Test 2 of 3DMark Fire Strike at 1440p with 16x AF, 8x MSAA and all of the eye candy turned all the way up? In this scenario, we see closer-to-ideal 88% scaling.

Result links are here (SLI) and here (no SLI).

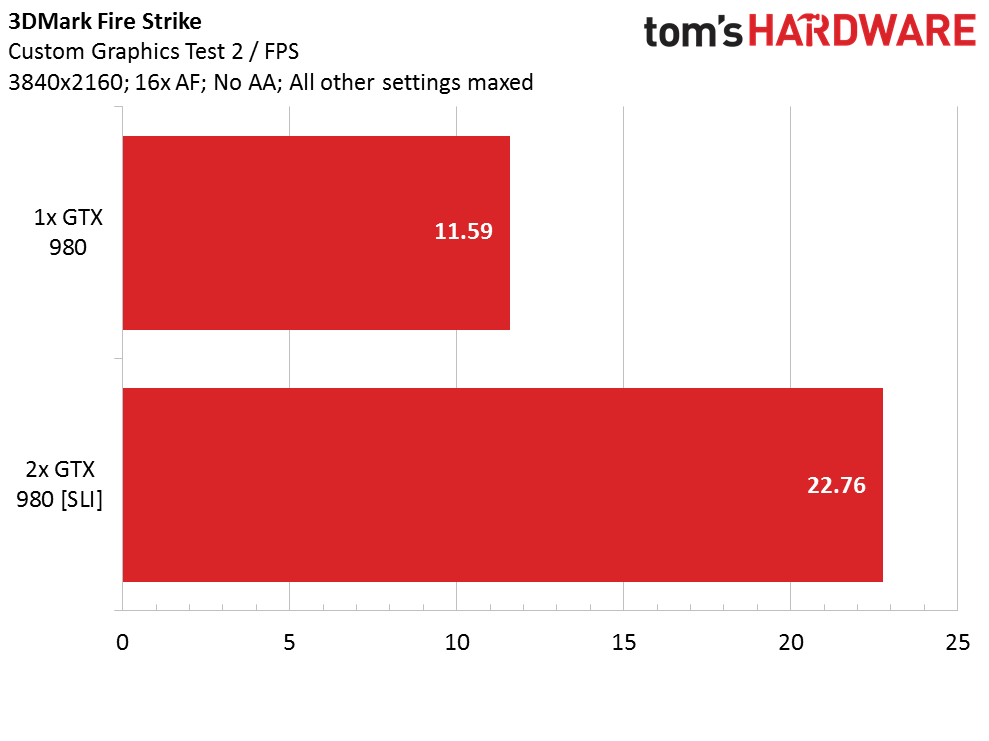

It's time to really punish those GPUs by turning the resolution up to 2160p (also known as 4K) with 16x AF, no AA and all of the detail settings maximized. Scaling is an almost theoretically ideal 96%, though in this extreme test we don't observe acceptable frame rates, even with two GeForce GTX 980s in SLI.

Note that custom runs of Fire Strike do not produce a numerical "points" score. They should be interpreted as relative to each other.

PaulBags: Nice article. Looking forward to comparing this with a DX12 + SLI article when it happens, to see how much it changes the SLI game, since CPUs will be less likely to bottleneck.

Do you think we'd see 1080p monitors with 200 Hz+ in the future? Would it even make a difference to the human eye?

none12345: They really need to redesign the way multi-GPU works. Something is really wrong when 2+ GPUs don't work half the time, or have higher latency than 1 GPU. The fact that this has persisted for like 15 years now is an utter shame. SLI profiles and all the bugs and BS that come with SLI need to be fixed. A game shouldn't even be able to tell how many GPUs there are, and it certainly shouldn't be buggy on 2+ GPUs but not on 1.

I also believe that alternating frames is utter crap. The fact that this has become the go-to standard is a travesty. I don't care for fake FPS at the expense of consistent frames or increased latency. If one card produces 60 FPS in a game, I would much rather have 2 cards produce 90 FPS with both of them working on the same frame at the same time than have 2 cards produce 120 FPS alternating frames.

The only time 2 GPUs should not be working on the same frame is 3D or VR, where you need 2 angles of the same scene generated each frame. Then yeah, have the cards work separately on their own perspective of the scene.

PaulBags: Considering DX12 with optimised command queues & proper CPU scaling is still to come later in the year, I'd hate to imagine how long until drivers are universal & unambiguous to SLI.

cats_Paw: The article is very nice.

However, if I need to buy 2 980s to run a VR set or a 4K display, I'll just wait till the prices are more mainstream.

I mean, in order to have a good SLI 980 rig you need a lot of spare cash, not to mention buying a 4K display (those that are actually any good cost a fortune), a CPU that won't bottleneck the GPUs, etc...

Too rich for my blood. I'd rather stay on 1080p until those technologies are not only proven to be the next standard, but content is widely available.

For me, the right moment to upgrade my Q6600 will be after DX12 comes out, so I can see real performance tests on new platforms.

Luay: I thought 2K (1440p) resolutions were enough to take a load off an i5 and put it onto two high-end Maxwell cards in SLI, and now you show that the i7 is bottlenecking at that resolution?

I had my eye on the two Acer monitors, the curved 34" 21:9 75 Hz IPS and the 27" 144 Hz IPS, either one really for a future build, but this piece of info tells me my i5 will be a problem.

Could it be that Intel CPUs are stagnated in performance compared to GPUs, due to lack of competition?

Is there a way around this bottleneck at 1440p? Overclocking, upgrading to Haswell-E, or waiting for Skylake?

loki1944: Really wish they would have made 4GB 780 Tis. The overclock on those 980s is a 370 MHz higher core clock and 337 MHz higher memory clock than my 780 Tis, and it barely beats them in Fire Strike by a measly 888 points. While SLI is great 99% of the time, there are still AAA games out there that don't work with it, or worse, are better off with SLI disabled, such as Watch Dogs and Warband. I would definitely be interested in a dual-GPU Titan X card or even 980 (less interested in the latter) because right now my Nvidia options for SLI on an mATX single PCIe slot board are limited to the scarce and overpriced Titan Z or the underwhelming Mars 760X2.

baracubra: I feel like it would be beneficial to clarify on the statement that "you really need two *identical* cards to run in SLI."

While true from a certain perspective, it should be clarified that you need 2 of the same number designation. As in two 980's or two 970's. I fear that new system builders will hold off from going SLI because they can't find the same *brand* of card or think they can't mix an OC 970 with a stock 970 (you can, but they will perform at the lower card's level).

PS. I run two 670's just fine (one stock EVGA and one OC Zotac)

jtd871: I'd have appreciated a bit of the in-depth "how" rather than the "what". For example, some discussion about multi-GPU needing a separate physical bridge and/or communicating via the PCIe lanes, and the limitations of each method (theoretical and practical bandwidth and how likely this channel is to be saturated depending on resolution or workload). I know that it would take some effort, but has anybody ever hacked a SLI bridge to observe the actual traffic load (similar to your custom PCIe riser to measure power)? It's flattering that you assume knowledge on the part of your audience, but some basic information would have made this piece more well-rounded and foundational for your upcoming comparison with AMD's performance and implementation.

mechan (quoting baracubra):

> I feel like it would be beneficial to clarify on the statement that "you really need two *identical* cards to run in SLI."
>
> While true from a certain perspective, it should be clarified that you need 2 of the same number designation. As in two 980's or two 970's. I fear that new system builders will hold off from going SLI because they can't find the same *brand* of card or think they can't mix an OC 970 with a stock 970 (you can, but they will perform at the lower card's level).
>
> PS. I run two 670's just fine (one stock EVGA and one OC Zotac)

What you say -was- true with 6xx class cards. With 9xx class cards, requirements for the cards to be identical have become much more stringent!