Nvidia's SLI Technology In 2015: What You Need To Know

Micro-Stuttering: Is It Real?

You've probably heard the term micro-stuttering used to describe an artifact experienced by owners of certain multi-GPU configurations. In short, it's caused by the rendering of frames at short but irregular time intervals, resulting in sustained high average FPS, but gameplay that still doesn't feel smooth.

The most common cause of stuttering is turning on v-sync when your hardware can't maintain a stable 60 FPS. The same applies to games that forcibly enable v-sync, the best example of which is Skyrim. This phenomenon has nothing to do with SLI, but it manifests in SLI-based systems as well. In those cases, the output jumps between 30 and 60 FPS in order to maintain synchronization with the screen refresh, meaning some frames are displayed once while others appear twice. The result is perceived stuttering. The workarounds are Nvidia's Adaptive V-Sync setting in the graphics control panel, which causes some tearing, or V-Sync (smooth), which prevents tearing but limits the frame rate to 30 FPS. Of course, if you own a newer G-Sync-capable display, enabling that feature circumvents the problem altogether.
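The 30/60 FPS jumping can be illustrated with a minimal Python sketch. It assumes a simplified double-buffered v-sync model of our own (the GPU starts the next frame right after a buffer flip, and a finished frame can only be shown at a screen refresh); the function name `vsync_display_intervals` is hypothetical and this is not how any particular driver is implemented.

```python
import math

REFRESH_HZ = 60
REFRESH_MS = 1000.0 / REFRESH_HZ  # time between screen refreshes, ~16.67 ms

def vsync_display_intervals(render_times_ms):
    """Gap between successive displayed frames under double-buffered v-sync.

    Simplified, hypothetical model: rendering of each frame starts right after
    the previous buffer flip, and a finished frame can only appear at a refresh.
    """
    intervals = []
    for t in render_times_ms:
        # A frame that misses a refresh waits for the next one, so the gap
        # between displayed frames rounds up to whole refresh periods.
        # The tiny epsilon keeps an exact 16.67 ms frame from rounding up to 2.
        refreshes = max(1, math.ceil(t / REFRESH_MS - 1e-9))
        intervals.append(refreshes * REFRESH_MS)
    return intervals

# Render times hovering just under and just over the 16.67 ms budget.
render_ms = [15.0, 18.0, 15.0, 18.0]
shown_ms = vsync_display_intervals(render_ms)
print([round(1000 / s) for s in shown_ms])  # → [60, 30, 60, 30]
```

A frame that takes 18 ms is only 1.3 ms late, yet it is held for a full extra refresh, which is why hardware hovering around 60 FPS produces the uneven 60/30 cadence described above.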

Micro-stuttering is a different phenomenon altogether. It is evident even when v-sync is disabled. The cause is variance in so-called frame times: different frames take different amounts of time to render (and display), so FPS values can be high (say, above 30 to 40 FPS) while gameplay still isn't perceived as smooth. The data defining micro-stuttering is thus the variance in frame times for a given test run. The higher the variance, the less smooth the experience. While frame times do depend on frame rates overall (100 FPS = 10 milliseconds average frame time), frame time variance expressed in relative terms does not.
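To make the distinction concrete, here is a minimal Python sketch. It assumes a simple metric of our own choosing (the mean change between consecutive frame times divided by the mean frame time); this is not necessarily the formula behind the charts in this article, and the function names are hypothetical. Both example runs average 50 FPS, but only one would feel smooth.

```python
from statistics import mean

def average_fps(frame_times_ms):
    # Average FPS is frames divided by total time, i.e. 1000 / mean frame time in ms.
    return 1000.0 / mean(frame_times_ms)

def relative_frame_time_variation(frame_times_ms):
    """Mean change between consecutive frame times, as a fraction of the mean frame time.

    An illustrative metric only; dividing by the mean makes it independent of frame rate.
    """
    deltas = [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]
    return mean(deltas) / mean(frame_times_ms)

smooth = [20.0] * 8          # every frame takes 20 ms
stutter = [10.0, 30.0] * 4   # alternating short and long frames, same 20 ms average

for name, run in (("smooth", smooth), ("stutter", stutter)):
    print(name, round(average_fps(run)), round(relative_frame_time_variation(run), 2))
# → smooth 50 0.0
# → stutter 50 1.0
```

An FPS counter reports the same number for both runs; only the frame-to-frame variation exposes the stutter.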

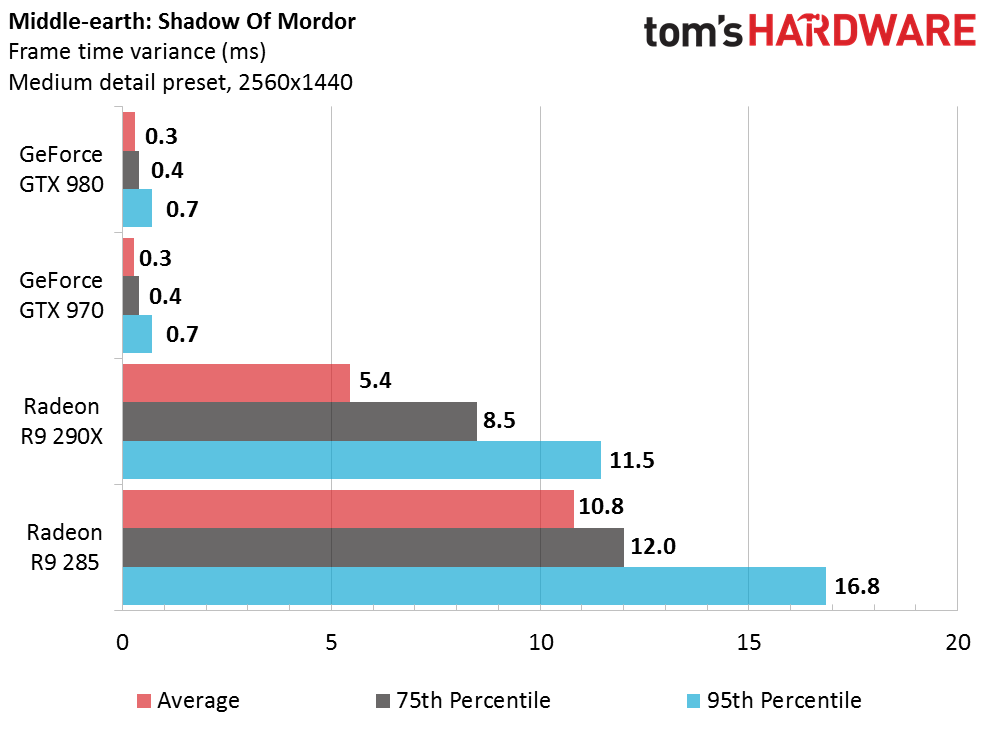

Sometimes micro-stuttering is caused by optimization issues in a game's engine, irrespective of multi-GPU configurations. Don Woligroski tested Middle-earth: Shadow of Mordor, for instance, and observed the game's issues at launch. The problems with AMD cards persisted until it was patched. The pre- and post-patch frame time variance for single cards is charted below. Clearly, there was an issue that needed to be fixed.

Any multi-GPU system faces the challenge of minimizing frame time variance while maximizing average frames per second and minimizing input lag. In the past, older combinations of hardware and software were badly hampered by micro-stuttering, and it wasn't until Nvidia and AMD made an effort to meter the rate at which frames appeared that things started getting better.
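Why metering helps, and what it costs, can be shown with a deliberately simplified Python model of two-GPU alternate frame rendering (AFR). This is a hypothetical sketch, not Nvidia's or AMD's actual implementation: `afr_completion_times` assumes each GPU takes a fixed time per frame and the second GPU starts slightly later, and `meter` simply holds each finished frame until at least a target interval has passed since the previous one was shown.

```python
def afr_completion_times(gpu_frame_ms, offset_ms, n_frames):
    """Times (ms) at which each frame finishes under two-GPU alternate frame rendering.

    Hypothetical model: each GPU needs gpu_frame_ms per frame, and GPU 1 starts
    offset_ms after GPU 0 because the CPU hands it work slightly later.
    """
    times = []
    for i in range(n_frames):
        gpu = i % 2    # even frames on GPU 0, odd frames on GPU 1
        pair = i // 2  # how many frames this GPU has already finished
        times.append(pair * gpu_frame_ms + gpu * offset_ms + gpu_frame_ms)
    return times

def meter(completion_ms, target_ms):
    """Hold each frame so it is shown no sooner than target_ms after the previous one."""
    shown = [completion_ms[0]]
    for t in completion_ms[1:]:
        # A frame can't be shown before it is finished, nor too soon after its predecessor.
        shown.append(max(t, shown[-1] + target_ms))
    return shown

def intervals(times_ms):
    return [round(b - a, 1) for a, b in zip(times_ms, times_ms[1:])]

raw = afr_completion_times(gpu_frame_ms=40.0, offset_ms=5.0, n_frames=6)
paced = meter(raw, target_ms=40.0 / 2)  # two GPUs: one frame every 20 ms on average
print(intervals(raw))    # → [5.0, 35.0, 5.0, 35.0, 5.0]
print(intervals(paced))  # → [20.0, 20.0, 20.0, 20.0, 20.0]
```

Both sequences average 50 FPS, but the unmetered one delivers frames in 5 ms/35 ms bursts. Metering evens them out by holding every second frame back 15 ms in this example, which is why it has to be balanced against input lag.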

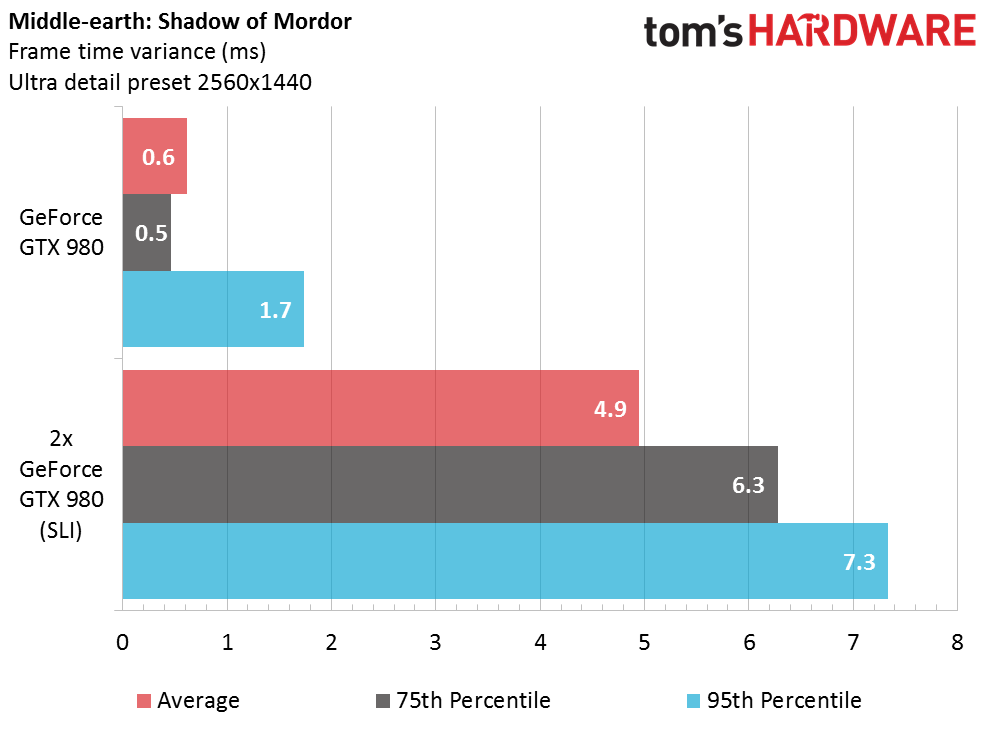

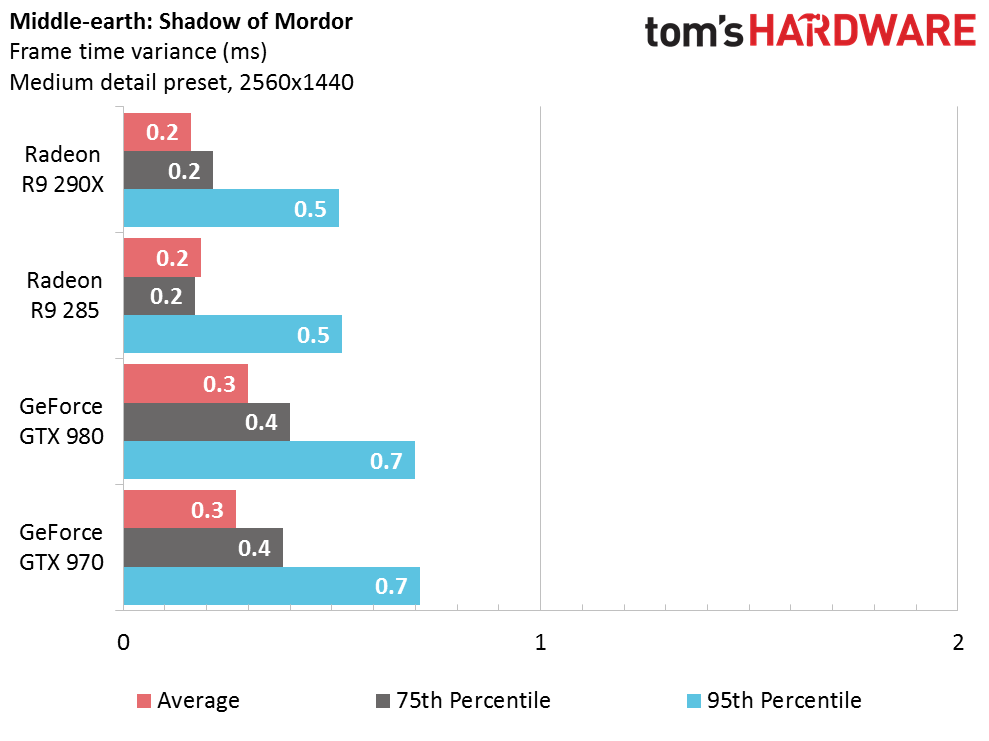

Middle-earth: Shadow of Mordor's benchmark at 1440p outputs extraordinarily consistent frame times without SLI. Even with the technology enabled, the game behaves very well.

No doubt, this is also attributable to how well Middle-earth and its associated SLI profile are optimized. Again, it took a major game patch before initial issues associated with gameplay smoothness were addressed.


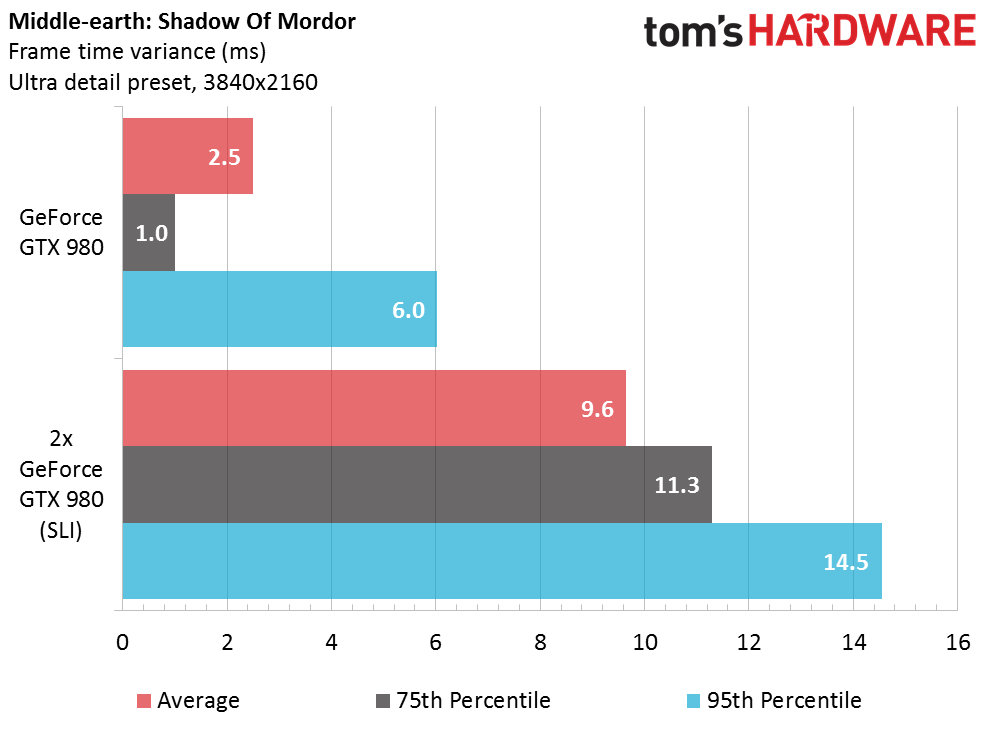

Now we'll increase this title's resolution to 2160p.

This is the first time we see less-than-ideal performance in SLI. Shadow of Mordor just doesn't feel smooth at 4K, even with SLI, despite an average frame rate that would suggest otherwise.

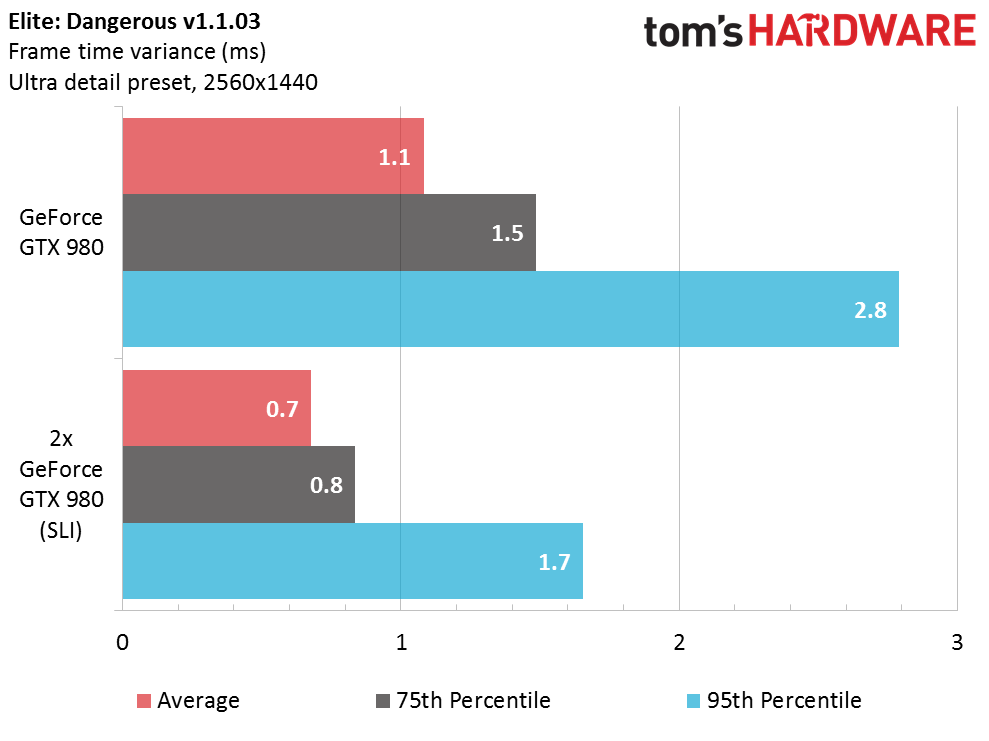

In our tests with Elite: Dangerous, frame time variance at 1440p is actually lower in SLI. This is possible because overall frame times are lower in SLI versus single-GPU mode. Frontier's fourth-generation COBRA engine appears to be really well-optimized for operation in SLI.
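The reason lower overall frame times can mean lower measured variance is that an absolute spread (in milliseconds) scales with the frame times themselves, while a relative spread does not. The Python sketch below uses hypothetical numbers and the population standard deviation as its spread measure (our choice for illustration): halving every frame time, as ideal two-way scaling would, halves the absolute spread and leaves the relative spread unchanged.

```python
from statistics import mean, pstdev

def spread(frame_times_ms):
    """Return (absolute spread in ms, spread relative to the mean frame time)."""
    sd = pstdev(frame_times_ms)           # population standard deviation, in ms
    return sd, sd / mean(frame_times_ms)  # relative spread is unitless

# Hypothetical single-GPU run: 20 ms average (50 FPS) with some jitter.
single_gpu = [20.0, 24.0, 18.0, 22.0, 16.0]
# The same jitter pattern with every frame time halved, as ideal two-way scaling would give.
sli = [t / 2 for t in single_gpu]

for name, run in (("single GPU", single_gpu), ("SLI", sli)):
    absolute, relative = spread(run)
    print(name, round(absolute, 2), round(relative, 2))
# → single GPU 2.83 0.14
# → SLI 1.41 0.14
```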

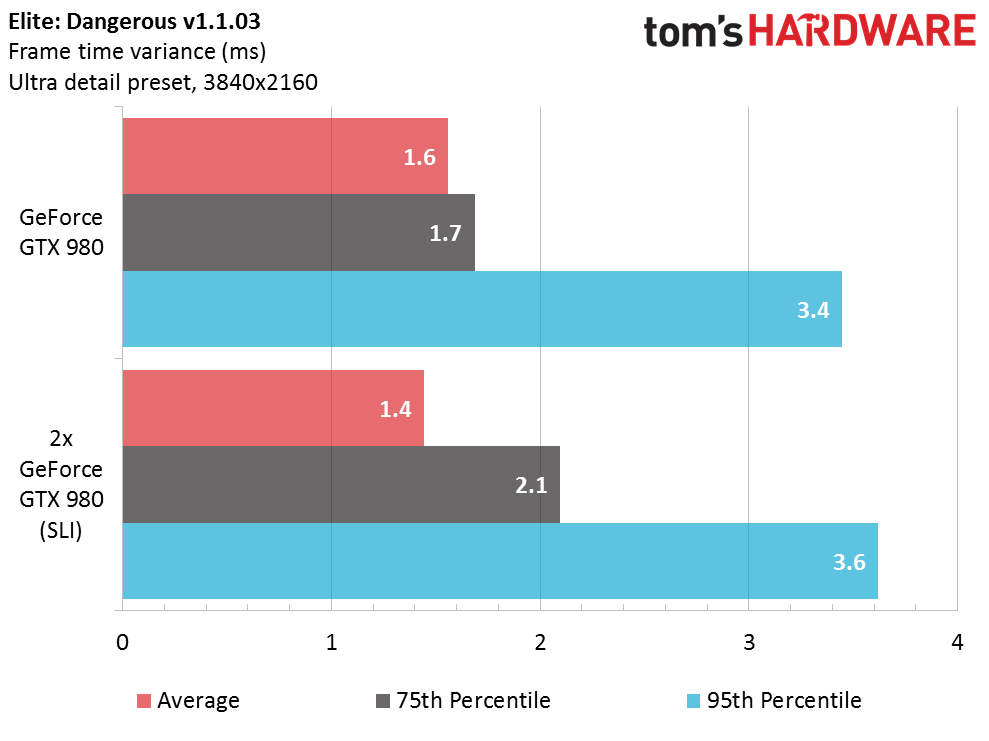

Unlike Shadow of Mordor, Elite: Dangerous at its highest-detail preset and 4K appears just as flawless in SLI as with a single GPU. Frame time variance stays well below the level we'd identify as micro-stutter.

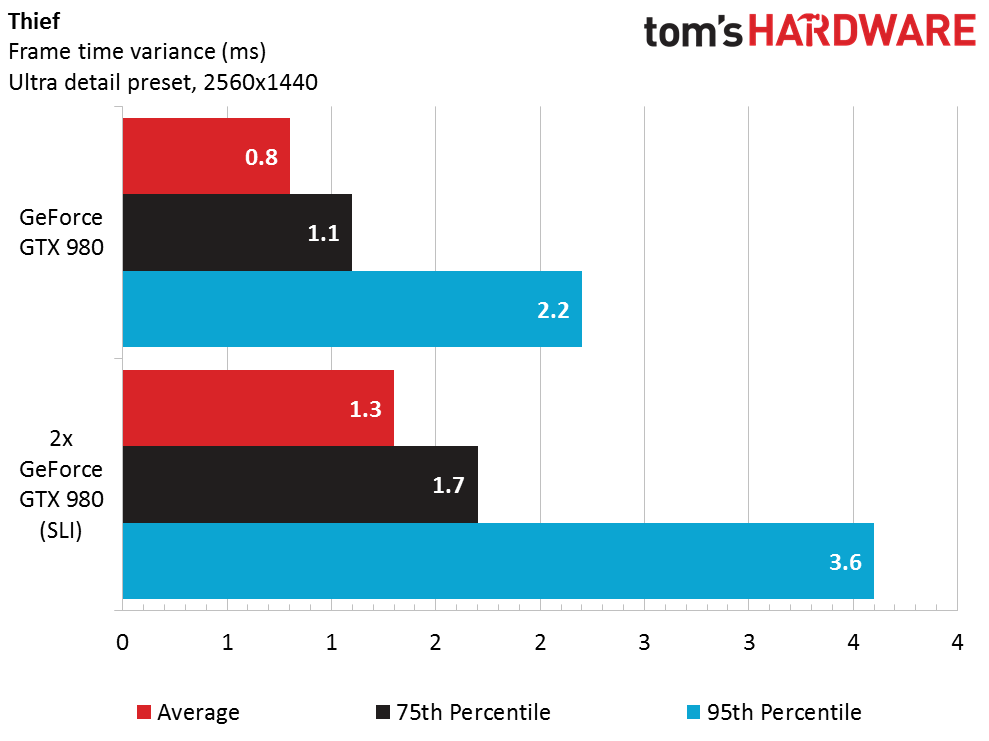

Thief also behaves extremely well in SLI at 1440p, at least as far as frame time variance is concerned.

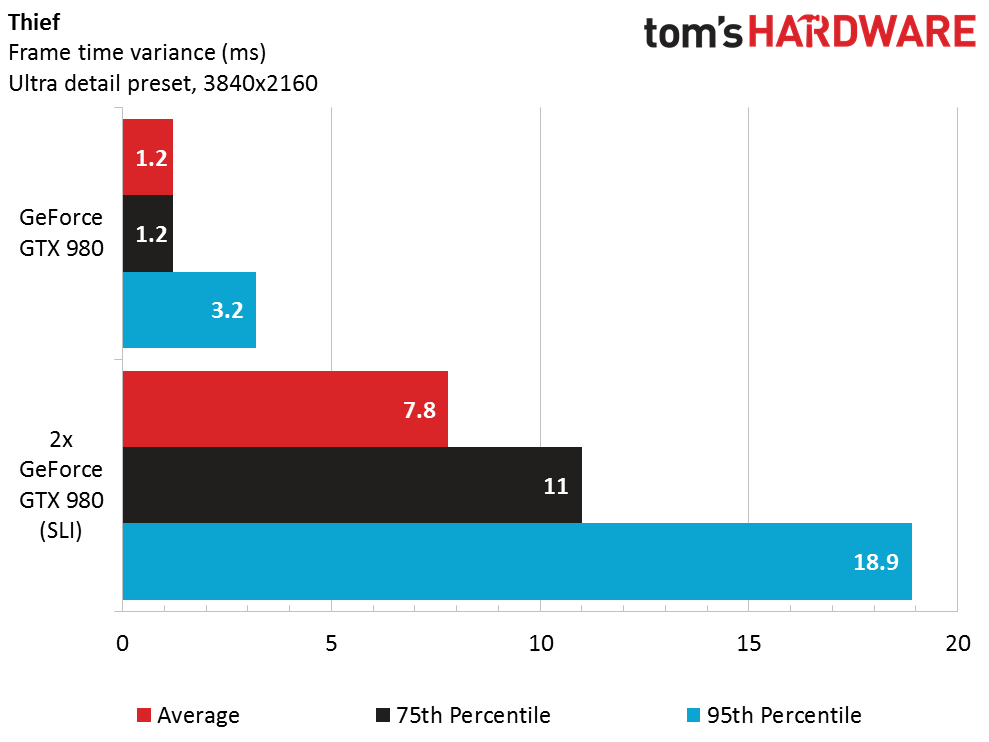

By contrast, Thief struggles at 4K, even with the power of two GeForce GTX 980s behind it. Frame time variance rises to levels where micro-stutter would be noticed.

Over the past two generations of graphics architectures, Nvidia has made a concerted effort to minimize frame time variance in SLI configurations. We didn't encounter any micro-stutter in the games we tested at 2560x1440. It's more of an issue at 4K, however, as our two 980s in SLI posted much higher variances in Middle-earth: Shadow of Mordor and Thief.


PaulBags: Nice article. Looking forward to comparing this to a DX12 + SLI article when it happens, to see how much it changes the SLI game, since CPUs will be less likely to bottleneck.

Do you think we'll see 1080p monitors with 200Hz+ refresh rates in the future? Would it even make a difference to the human eye?

none12345: They really need to redesign the way multi-GPU works. Something is really wrong when 2+ GPUs don't work half the time, or have higher latency than 1 GPU. The fact that this has persisted for something like 15 years now is an utter shame. SLI profiles and all the bugs and BS that come with SLI need to be fixed. A game shouldn't even be able to tell how many GPUs there are, and it certainly shouldn't be buggy on 2+ GPUs but not on 1.

I also believe that alternating frames is utter crap. The fact that this has become the go-to standard is a travesty. I don't care for fake FPS at the expense of consistent frames or increased latency. If one card produces 60 FPS in a game, I would much rather have 2 cards produce 90 FPS with both of them working on the same frame at the same time than have 2 cards produce 120 FPS alternating frames.

The only time 2 GPUs should not be working on the same frame is 3D or VR, where you need 2 angles of the same scene generated each frame. Then yeah, have the cards work separately on their own perspective of the scene.

PaulBags: Considering DX12 with optimised command queues and proper CPU scaling is still to come later in the year, I'd hate to imagine how long it will be until drivers are universal and unambiguous to SLI.

cats_Paw: The article is very nice.

However, if I need to buy two 980s to run a VR set or a 4K display, I'll just wait till the prices are more mainstream.

I mean, to have a good SLI 980 rig you need a lot of spare cash, not to mention a 4K display (those that are actually any good cost a fortune), a CPU that won't bottleneck the GPUs, etc...

Too rich for my blood. I'd rather stay on 1080p until those technologies are not only proven to be the next standard, but content is widely available.

For me, the right moment to upgrade my Q6600 will be after DX12 comes out, so I can see real performance tests on new platforms.

Luay: I thought 2K (1440p) resolutions were enough to take the load off an i5 and put it onto two high-end Maxwell cards in SLI, and now you show that the i7 is bottlenecking at that resolution??

I had my eye on the two Acer monitors, the curved 34" 21:9 75Hz IPS and the 27" 144Hz IPS, either one really, for a future build, but this piece of info tells me my i5 will be a problem.

Could it be that Intel CPUs have stagnated in performance compared to GPUs, due to lack of competition?

Is there a way around this bottleneck at 1440p? Overclocking, upgrading to Haswell-E, or waiting for Skylake?

loki1944: Really wish they would have made 4GB 780 Tis. The overclock on those 980s is 370MHz higher on the core and 337MHz higher on the memory than my 780 Tis, and they barely beat them in Fire Strike by a measly 888 points. While SLI is great 99% of the time, there are still AAA games out there that don't work with it, or worse, are better off with SLI disabled, such as Watch Dogs and Warband. I would definitely be interested in a dual-GPU Titan X card or even a dual 980 (less interested in the latter), because right now my Nvidia options for SLI on a mATX board with a single PCIe slot are limited to the scarce and overpriced Titan Z or the underwhelming Mars 760X2.

baracubra: I feel like it would be beneficial to clarify the statement that "you really need two *identical* cards to run in SLI."

While true from a certain perspective, it should be clarified that you need 2 of the same number designation, as in two 980's or two 970's. I fear that new system builders will hold off from going SLI because they can't find the same *brand* of card, or think they can't mix an OC 970 with a stock 970 (you can, but they will perform at the lower card's level).

PS. I run two 670's just fine (one stock EVGA and one OC Zotac).

jtd871: I'd have appreciated a bit of the in-depth "how" rather than the "what". For example, some discussion about multi-GPU needing a separate physical bridge and/or communicating via the PCIe lanes, and the limitations of each method (theoretical and practical bandwidth, and how likely this channel is to be saturated depending on resolution or workload). I know that it would take some effort, but has anybody ever hacked an SLI bridge to observe the actual traffic load (similar to your custom PCIe riser to measure power)? It's flattering that you assume knowledge on the part of your audience, but some basic information would have made this piece more well-rounded and foundational for your upcoming comparison with AMD's performance and implementation.

mechan (replying to baracubra):

> I feel like it would be beneficial to clarify on the statement that "you really need two *identical* cards to run in SLI."
>
> While true from a certain perspective, it should be clarified that you need 2 of the same number designation. As in two 980's or two 970's. I fear that new system builders will hold off from going SLI because they can't find the same *brand* of card or think they can't mix an OC 970 with a stock 970 (you can, but they will perform at the lower card's level).
>
> PS. I run two 670's just fine (one stock EVGA and one OC Zotac)

What you say -was- true with 6xx-class cards. With 9xx-class cards, the requirements for the cards to be identical have become much more stringent!