GPU vs. CPU Upgrade: Extensive Tests

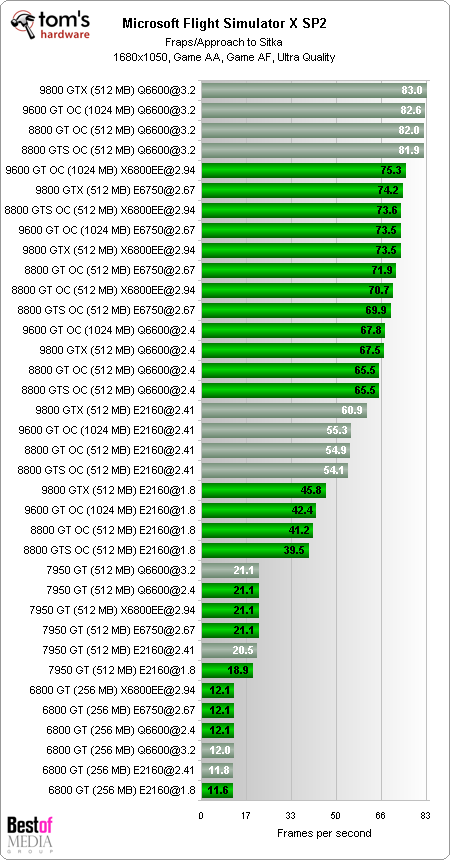

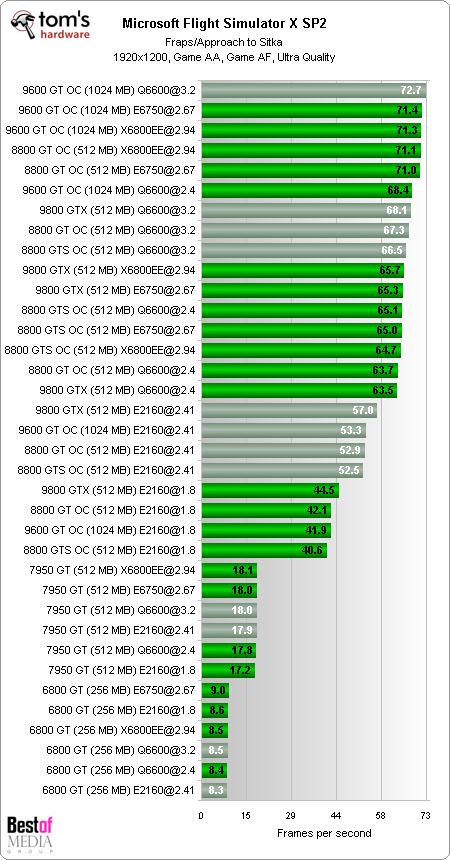

Microsoft Flight Simulator X SP2

The GeForce 6800 GT and 7950 GT support only DirectX 9 effects. In this mode, the environment is not reflected in the water, but the pixel shader simulates the waves cleanly. In DirectX 10 mode, the landscape is reflected in the water's surface, but with the ForceWare 174.74 graphics driver the pixel shader still does not produce waves.

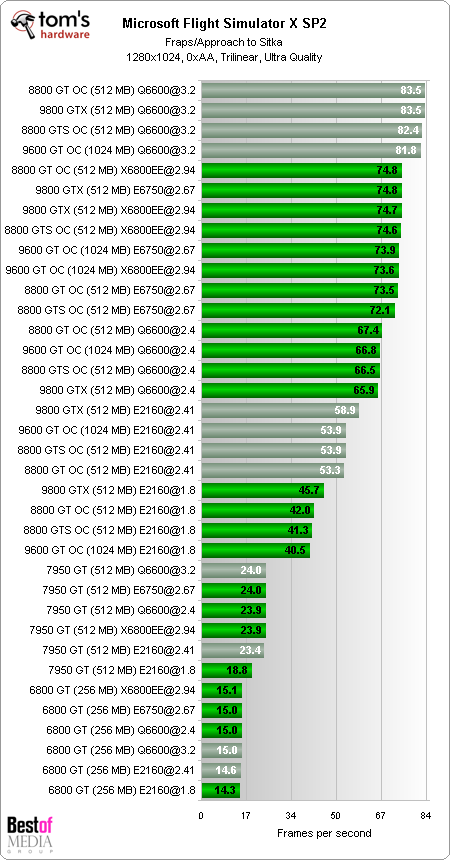

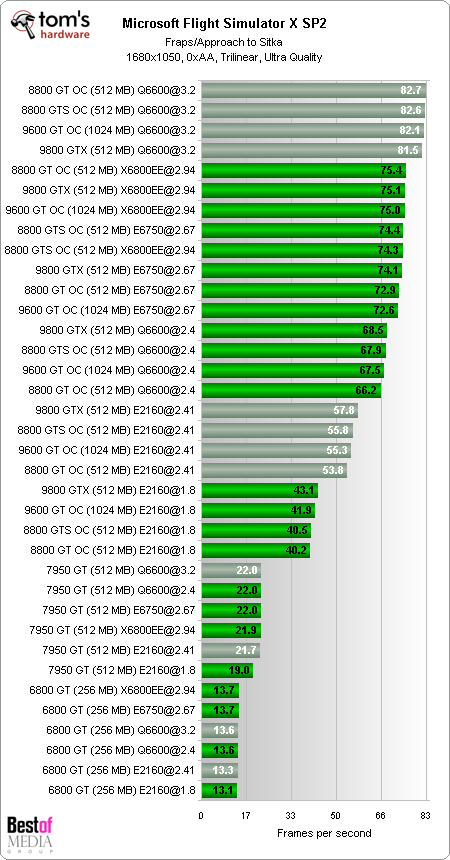

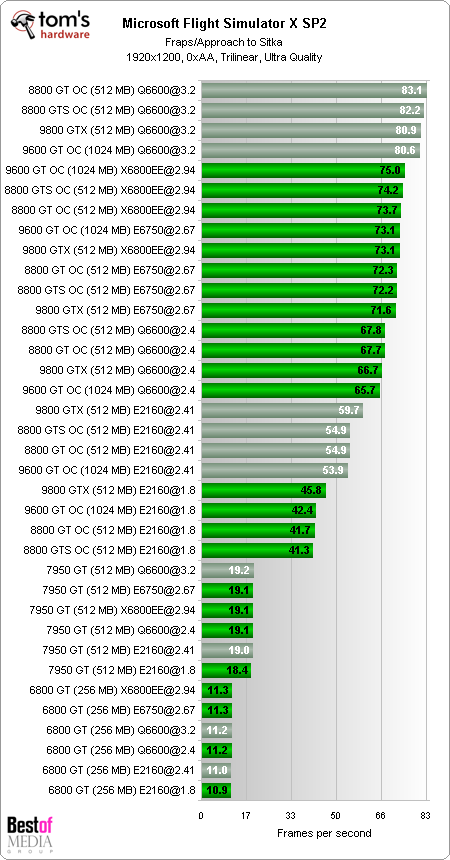

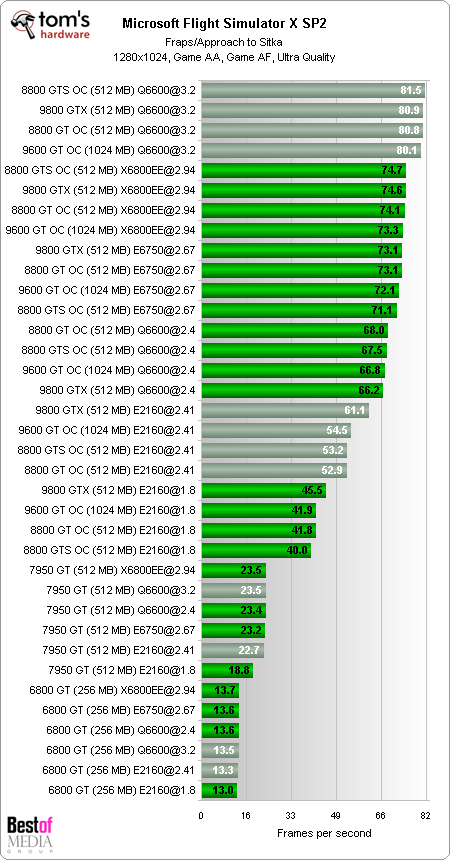

The flight simulator is very sensitive to CPU clock speed: the Q6600 at 2.4 GHz is slower than the E6750 at 2.67 GHz. Only when the Q6600 is overclocked to 3200 MHz does it take the top spot with the new G92 graphics chip. The GeForce 6800 GT barely responds to a faster processor at all, and the GeForce 7950 GT responds only slightly. The GeForce 9600 GT with 1024 MB of graphics memory achieves the best results, but only in combination with the E6750, Q6600 or X6800EE, and only at 1920x1200 with antialiasing. If the weaker E2160 is installed, the GeForce 9800 GTX with 512 MB of graphics memory wins the race.


randomizer: That would simply consume more time without really proving much. I think sticking with a single manufacturer is fine, because you see the generational differences between cards and the performance gains compared to getting a new processor. You will see the same thing with ATI cards. Pop in an X800 and watch it crumble in the wake of an HD 3870. There is no need to include ATI cards for the sake of this article.

randomizer: This has been a long-needed article IMO. Now we can post links instead of coming up with simple explanations :D

yadge: I didn't realize the new GPUs were actually that powerful. According to Tom's charts, there is no GPU that can give me double the performance of my X1950 Pro. But here, the 9600 GT was getting three times the frame rate of the 7950 GT (which is better than mine) in Call of Duty 4.

Maybe there's something wrong with the charts. I don't know. But this makes me even more excited for when I upgrade in the near future.

This article is biased from the beginning by pairing a reference graphics card from 2004 (6800 GT) with a reference CPU from 2007 (E2140).

Go back and use a Pentium 4 Prescott (2004), and then the baseline for the percentage values on page 3 will actually mean something.

randomizer, quoting yadge: "I didn't realize the new GPUs were actually that powerful. According to Tom's charts, there is no GPU that can give me double the performance of my X1950 Pro. But here, the 9600 GT was getting three times the frame rate of the 7950 GT (which is better than mine) in Call of Duty 4. Maybe there's something wrong with the charts. I don't know. But this makes me even more excited for when I upgrade in the near future."

I upgraded my X1950 Pro to a 9600 GT. It was a fantastic upgrade.

wh3resmycar, quoting scy: "This article is biased from the beginning by pairing a reference graphics card from 2004 (6800 GT) with a reference CPU from 2007 (E2140)."

Maybe it is, but it's relevant, especially for people who are stuck with those Prescotts/6800 GTs. This article reveals an upgrade path nonetheless.

randomizer: If they had used P4s, there would be so many variables in this article that it would have no direction, and that would make it pointless.

JAYDEEJOHN: Great article!!! It clears up many things. It finally shows proof that the best upgrade a gamer can make is a newer card. About the P4s, just take the clock rate, cut it in half, then compare (OK, add 10%) heheh

justjc: I know randomizer thinks we would get the same results, but would it be possible to see just a small article showing whether the same results hold for AMD processors and ATi graphics?

Firstly, we know that ATi and nVidia cards don't render graphics in the same way. Who knows, perhaps an ATi card requires more or less processor power to work at full load. And if you look at Can You Run It? for Crysis (the only one I recall using), you will see that the minimum required AMD processor is slower than the minimum required Core 2, even in clock speed.

So any chance of a small, or full scale, article throwing some ATi and AMD power into the mix?