AMD's 32GB Radeon W6800 Drives 'Outstanding' Performance

An "outstanding" rating from UserBenchmark.

AMD launched its RDNA2-based Radeon RX 6000-series graphics cards in early November, so it's about time for the company to use the latest Big Navi GPU for its Radeon Pro graphics cards aimed at CAD/CAM and DCC professionals. Indeed, the first benchmark score of AMD's yet-to-be-announced Radeon Pro W6800 with 32GB of memory was published recently.

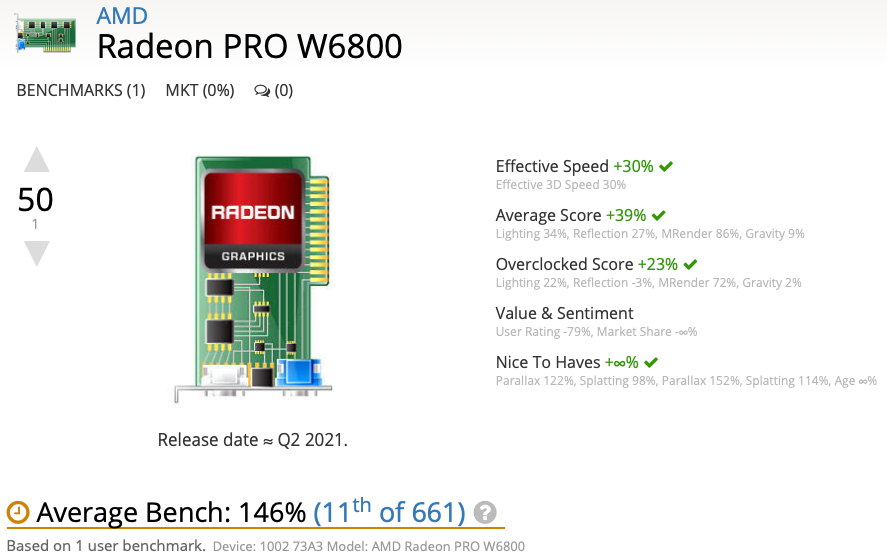

The AMD Radeon Pro W6800 32GB (1002 73A3, 1002 0E1E) scored an 'outstanding' result in UserBenchmark, hitting 146% of the average effective GPU speed. When compared to other graphics cards, 146% is between Nvidia's GeForce RTX 2080 Super (138%) and GeForce RTX 3070 (152%). Meanwhile, AMD's Radeon RX 6800 XT scores between 152% and 171%.

It's hard to make guesses about the specifications of AMD's upcoming Radeon Pro W6800 32GB graphics card based on one benchmark score, so we'll refrain from doing so. That said, it looks like the Radeon Pro W6800 32GB will offer compute performance comparable to that of the Radeon RX 6800 XT. Still, since it will have more memory onboard, it will certainly provide higher performance at high resolutions when compared to the gaming adapter.

Professional graphics cards tend to adopt the latest architectures a bit later than consumer boards, as GPU vendors need to certify their drivers with developers of professional applications, something that takes time. The leak of a Radeon Pro W6800 benchmark result indicates that AMD is getting closer to releasing its RDNA2-based cards for CAD, CAM, and DCC users.

Since UserBenchmark is a general benchmark designed for casual users, it does not really put heavy loads on GPUs, so an 'outstanding' result for the Radeon Pro W6800 32GB is not surprising. Unfortunately, UserBenchmark gives absolutely no idea about the performance of the graphics card in professional applications.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


ginthegit More subjective tests on a test suite.

Does this actually mean all that much? Wouldn't it be better to get average benchmarks from games and then make an objective rating?

docarter If Tom's Hardware believes at all in journalistic excellence, it will not publish any "ratings" from the garbage website userbench.

When comparing RX 6000 series GPUs to RTX 3000 series GPUs, the Radeon cards are consistently given lower scores despite better or equal performance to the Nvidia cards.

Gam3r01

ginthegit said: "More subjective tests on a test suite. Does this actually mean all that much? Wouldn't it be better to get average benchmarks from games and then make an objective rating?"

I don't see a point in mentioning games for a card not intended or manufactured with gameplay in mind.

That being said, it is still a pointless comparison: "Unfortunately, UserBenchmark gives absolutely no idea about performance of the graphics card in professional applications."

So let's look at a professional card in an unreliable suite for an application load it's not tested for.

jkhoward Oops, did we let that one through? Please check back later after we skew the results in NVIDIA’s favor. Thanks!

roblittler77 I hope this article is intended purely as a jab at Userbenchmark for having something made by AMD topping their charts.

An outstanding result from a single benchmark is known as an outlier, which you disregard, particularly if that benchmark is Userbenchmark.

Stop posting garbage, this article tells us nothing.

kaalus It takes just 128MB of video RAM to hold two HDR framebuffers at 4K. Versus 32MB for 1080p - an increase of 96MB. Or about 1% of RAM in a standard mid-range GPU of today.

I fail to see why people are constantly banging on about how 16GB of RAM will help with 4K gaming. Even a 128MB low-end card from 15 years ago would have plenty enough memory to display in 4K.
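The arithmetic in kaalus's comment can be checked with a short sketch. It assumes 8 bytes per pixel (a 16-bit floating-point RGBA format, which the comment does not specify), binary megabytes, and a hypothetical 8 GiB card standing in for "a standard mid-range GPU"; the function and variable names are illustrative only.

```python
MIB = 1024 * 1024  # bytes in one binary megabyte


def framebuffer_bytes(width, height, bytes_per_pixel=8, buffers=2):
    """Memory needed for `buffers` framebuffers of the given size.

    bytes_per_pixel=8 is an assumption (RGBA, 16 bits per channel).
    """
    return width * height * bytes_per_pixel * buffers


uhd_mib = framebuffer_bytes(3840, 2160) / MIB  # two 4K HDR buffers
fhd_mib = framebuffer_bytes(1920, 1080) / MIB  # two 1080p HDR buffers
extra_mib = uhd_mib - fhd_mib                  # added cost of moving to 4K

# Assumed capacity of a mid-range card: 8 GiB (not stated in the comment).
card_mib = 8 * 1024
extra_share = extra_mib / card_mib

print(f"4K:    {uhd_mib:.1f} MiB")
print(f"1080p: {fhd_mib:.1f} MiB")
print(f"extra: {extra_mib:.1f} MiB ({extra_share:.2%} of an 8 GiB card)")
```

Under those assumptions the two 4K buffers come to roughly 127 MiB and the two 1080p buffers to roughly 32 MiB, in line with the comment's rounded figures. The sketch covers framebuffers only, not textures, geometry, or other data held in video memory.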

JFKapustka Comparing mainstream performance for a specialized card like the W6800 is more than a little absurd. A CAD/CAM benchmark would be much more relevant to any potential W6800 buyers.

ginthegit

roblittler77 said: "I hope this article is intended purely as a jab at Userbenchmark for having something made by AMD topping their charts. An outstanding result from a single benchmark is known as an outlier, which you disregard, particularly if that benchmark is Userbenchmark. Stop posting garbage, this article tells us nothing."

Though my point was slightly irrelevant, this is what I meant.

ginthegit

kaalus said: "It takes just 128MB of video RAM to hold two HDR framebuffers at 4K. Versus 32MB for 1080p - an increase of 96MB. Or about 1% of RAM in a standard mid-range GPU of today. I fail to see why people are constantly banging on about how 16GB of RAM will help with 4K gaming. Even a 128MB low-end card from 15 years ago would have plenty enough memory to display in 4K."

I guess it gives more room for multiple things and also more complex algorithms.

I tend to use cards like this for electronics apps, but in reality, AMD benching high in a niche market is about the only praise AMD generally gets in THG. But these cards are fully capable as graphics cards with certain things changed on the card and the drivers.