Backblaze Annual Failure Rates for SSDs in 2022: Less Than One Percent

Excellent reliability overall, even if it's "only" for about 3,000 drives.

Backblaze, purveyor of cloud storage, has published the statistics for the 2,906 SSDs used as boot drives on its storage servers. To be clear, these aren't only boot drives, as they also read and write log files and temporary files, the former of which can sometimes generate quite a bit of wear and tear. Backblaze has been using SSDs as boot drives since 2018, and like its hard drive statistics, this report is one of the few ways to get a lot of insight into how large quantities of mostly consumer drives hold up over time.

Before we get to the stats, there are some qualifications. First, most of the SSDs that Backblaze uses aren't the latest M.2 NVMe models. They're also generally quite small in capacity, with most drives only offering 250GB of storage, plus about a third that are 500GB, and only three that are larger 2TB drives. But using lots of the same model of hardware keeps things simple when it comes to managing the hardware. Anyway, if you're hoping to see stats for popular drives that might make our list of the best SSDs, you'll be disappointed.

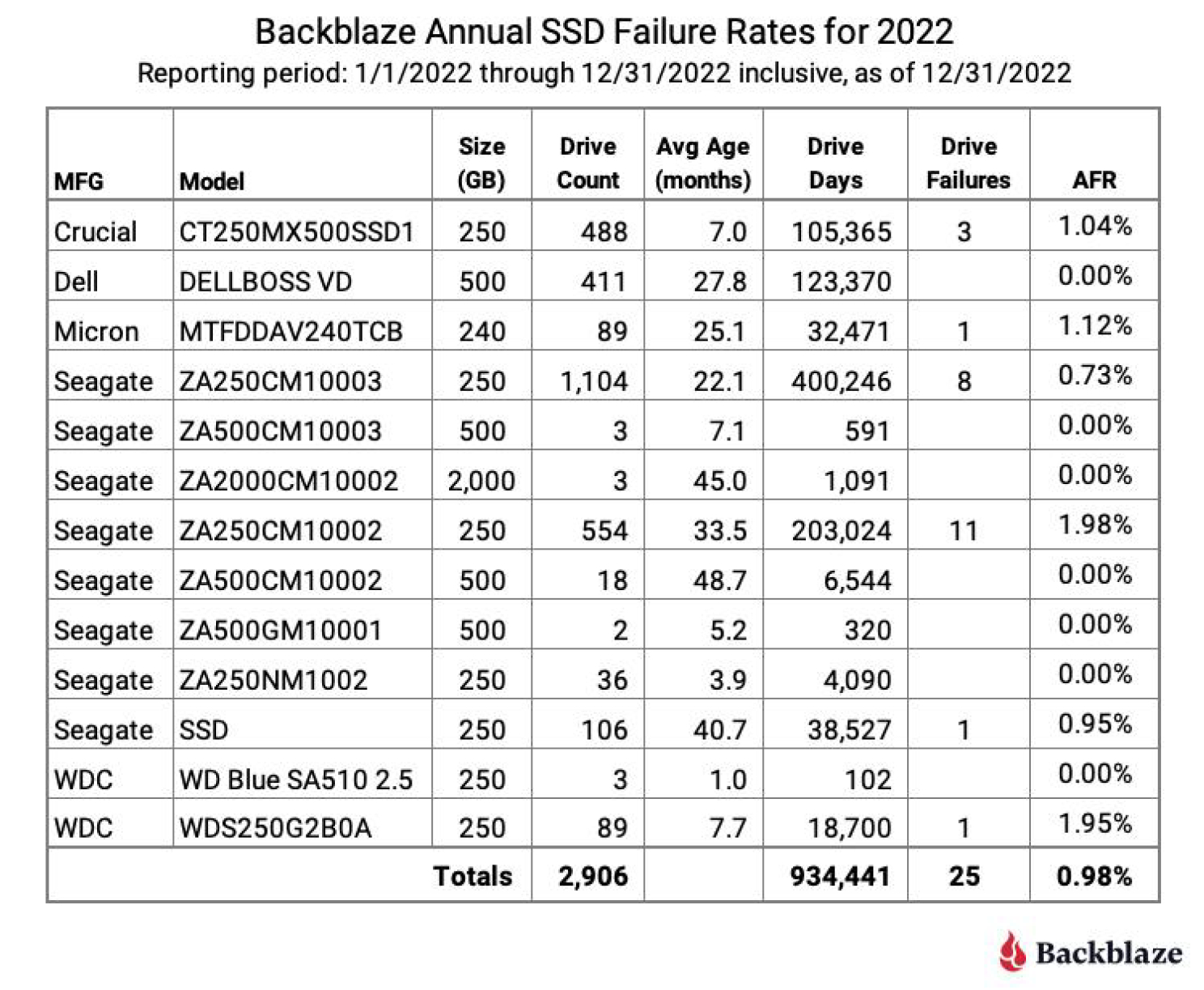

Here are the stats for the past year.

While seven of the drive models used have zero failures, only one of those has a significant number of installed drives, the Dell DELLBOSS VD (Boot Optimized Storage Solution). The other six have fewer than 40 SSDs in use, with four that are only installed in two or three servers. It's more useful to pay attention to the drives that have a large amount of use, specifically the ones with over 100,000 drive days.
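To see why zero failures on a small group of drives says so little, here is a minimal sketch that estimates the highest failure rate still consistent with a clean record. It assumes failures follow a Poisson process, which is our simplification and not something Backblaze states, and the function name is purely illustrative.

```python
import math

def afr_upper_bound_zero_failures(drive_days: float, confidence: float = 0.95) -> float:
    """Upper bound (in percent) on the annualized failure rate when zero
    failures were observed, assuming failures follow a Poisson process."""
    drive_years = drive_days / 365
    # P(0 failures) = exp(-rate * drive_years). Solve for the rate at which
    # a clean record would be seen only (1 - confidence) of the time.
    rate = -math.log(1 - confidence) / drive_years
    return rate * 100

# Assumed scenario: 40 drives that each ran for a full year.
small_fleet = afr_upper_bound_zero_failures(40 * 365)
# The 100,000 drive-day threshold mentioned in the article.
large_fleet = afr_upper_bound_zero_failures(100_000)
print(f"{small_fleet:.2f}% vs {large_fleet:.2f}%")
```

Under these assumptions, 40 drives with a spotless year could still have a true failure rate of roughly 7.5%, while 100,000 failure-free drive days would cap it at about 1.1%. That is why the models with lots of drive days are the ones worth watching.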

Those consist of the Crucial MX500 250GB, Seagate BarraCuda SSD and SSD 120, and the Dell BOSS. It's interesting to note that the average age of the Crucial MX500 drives is only seven months, even though the MX500 first became available in early 2018. Clearly, Backblaze isn't an early adopter of the latest SSDs. Still, overall the boot SSDs have an annualized failure rate below 1%.
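For reference, an annualized failure rate built on drive days can be sketched as follows. This assumes the usual definition of failures per drive-year of service, and the input numbers are hypothetical and not taken from Backblaze's tables.

```python
def annualized_failure_rate(failures: int, drive_days: float) -> float:
    """AFR in percent: failures per drive-year of service."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    return failures / drive_years * 100

# Hypothetical example: 2 failures over 100,000 drive days.
example_afr = annualized_failure_rate(failures=2, drive_days=100_000)
print(f"{example_afr:.2f}%")
```

Counting drive days instead of drives means an SSD installed for only part of the year contributes only the days it was actually in service.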

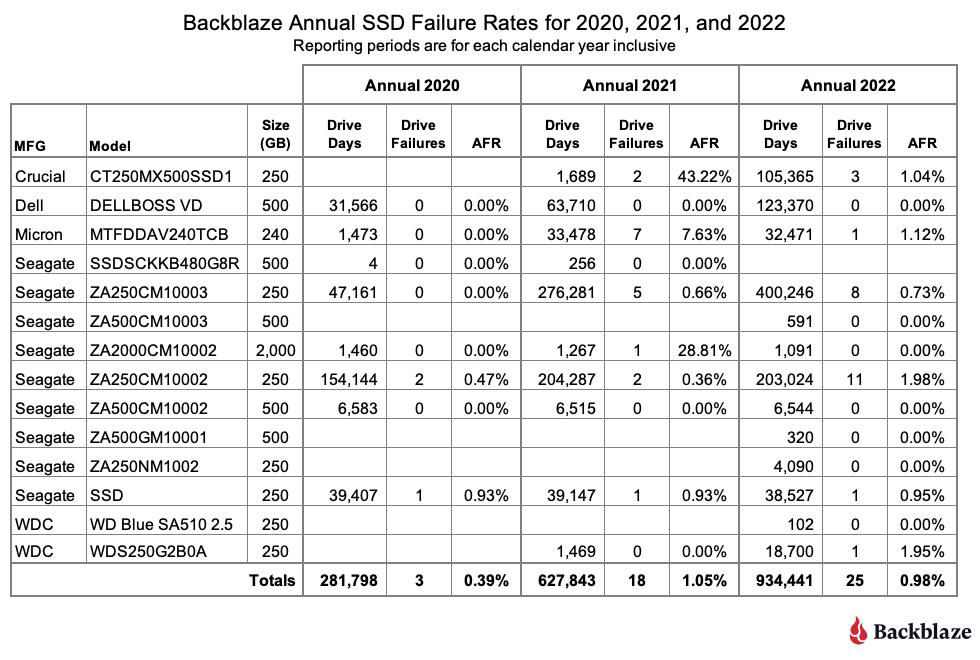

Stepping back to an even longer view of the past three years, that 1% annualized failure rate remains, with just 46 total drive failures over that time span. Backblaze also notes that after two relatively quick failures in 2021, the MX500 did much better in 2022. Seagate's older ZA250CM10002 also slipped to a 2% failure rate last year, while the newer ZA250CM10003 had more days in service and fewer failures, so it will be interesting to see if those trends continue.

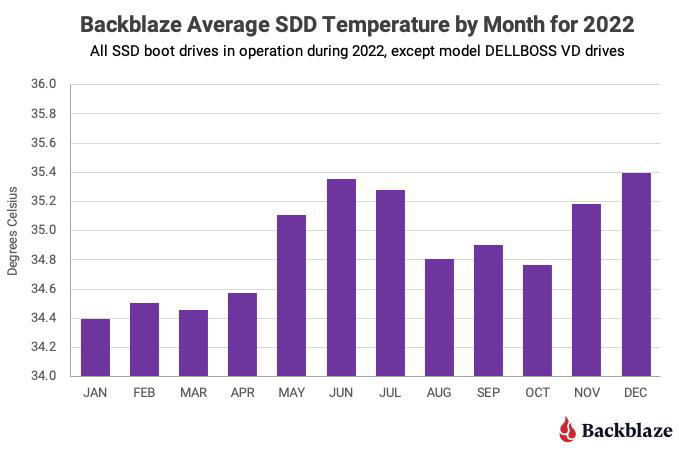

Another piece of data Backblaze looked at is SSD temperature, as reported by SMART (the Dell BOSS doesn't appear to support this). The chart isn't zero-based, so it might look like there's a decent amount of fluctuation at first glance, but in reality the drives ranged from an average of 34.4C up to 35.4C — just a 1C span.

Of course that's just the average, and there are some outliers. There were four observations of a 20C drive, and one instance of a drive at 61C, with most falling in the 25–42 degrees Celsius range. It would be nice if the bell curve seen above also correlated with failed drives in some fashion, but with only 25 total failures during the year, that was not to be — Backblaze called its resulting plot "nonsense."

Ultimately, the number of SSDs in use by Backblaze pales in comparison to the number of hard drives — check the latest Backblaze HDD report, for example, where over 290,000 drives were in use during the past year. That's because no customer data gets stored on the SSDs, so they're only for the OS, temp files, and logs. Still, data from nearly 3,000 drives is a lot more than what any of us (outside of IT people) are likely to access over the course of a year. The HDDs incidentally had an AFR of 1.37%.

Does this prove SSDs are more reliable than HDDs? Not really, and having a good backup strategy is still critical. Hopefully, in the coming years we'll see more recent M.2 SSDs make their way into Backblaze's data — we'd love to see maybe 100 or so each of some PCIe 3.0 and PCIe 4.0 drives, for example, but that of course assumes that the storage servers even support those interfaces. Given time, they almost certainly will.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


digitalgriffin:

> -Fran- said: I don't see Samsung in there bringing the score/median down! xD
>
> Regards.

I felt the NAND burn from here.

tennis2:

> -Fran- said: I don't see Samsung in there bringing the score/median down! xD
>
> Regards.

You beat me to it!

PlaneInTheSky:

It's a pointless stat for consumers, because most SSDs fail during loss of power or during changes in power.

Because servers are under constant power and have many ways to mitigate loss of power, they are not comparable to consumer PCs.

Backblaze literally says their data is ONLY applicable to comparable server environments and has no relevance to consumer devices.

You can in theory install an uninterruptible power supply behind a PC, which would give you similar power stability as a server, but it would be much cheaper to just back up on more media. Another thing is that cheap consumer UPSes use batteries, and they are just as likely to catch fire as storage failing from a power interruption.

digitalgriffin:

> PlaneInTheSky said: It's a pointless stat for consumers, because most SSDs fail during loss of power or during changes in power.
>
> Because servers are under constant power and have many ways to mitigate loss of power, they are not comparable to consumer PCs.
>
> Backblaze literally says their data is ONLY applicable to comparable server environments and has no relevance to consumer devices.
>
> You can in theory install an uninterruptible power supply behind a PC, which would give you similar power stability as a server, but it would be much cheaper to just back up on more media. Another thing is that cheap consumer UPSes use batteries, and they are just as likely to catch fire as storage failing from a power interruption.

Depends on drive and power supply. Some have capacitors which allow all write operations to complete. If you are on a UPS or laptop, you are covered there too.

That said, power loss to an SSD results in FILE CORRUPTION (data) NOT DRIVE FAILURE (physical). Physical failures affect everyone, power failure or not.

File corruption errors are what redundant filesystems like ZFS check for. They add another few layers to the block sector checksum. And NTFS drives have a transaction log, with transaction begin/end markers on table entries. If power goes out before the operation is done, the transaction end is missing, the file is assumed bad, and the table is rolled back. XFS isn't bad either. I consider it reliable enough for smaller backup arrays.
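The begin/end logging idea described in this comment can be illustrated with a toy model. This is a simplified sketch under our own assumptions (the record layout and the `recover` function are hypothetical), not the actual on-disk format of any real filesystem.

```python
from typing import Dict, List, Tuple

# Each log record is (txn_id, kind, payload); kind is "begin", "write", or "end".
LogRecord = Tuple[int, str, Tuple[str, str]]

def recover(log: List[LogRecord]) -> Dict[str, str]:
    """Replay the log, applying only transactions that reached their "end" record."""
    committed = {txn for txn, kind, _ in log if kind == "end"}
    table: Dict[str, str] = {}
    for txn, kind, payload in log:
        if kind == "write" and txn in committed:
            key, value = payload
            table[key] = value
    return table

log: List[LogRecord] = [
    (1, "begin", ("", "")),
    (1, "write", ("report.txt", "v1")),
    (1, "end", ("", "")),
    (2, "begin", ("", "")),
    (2, "write", ("report.txt", "v2")),
    # Power is lost here: transaction 2 never wrote its "end" record.
]
recovered = recover(log)
print(recovered)
```

Because transaction 2 has no end marker, recovery discards its write and the table keeps the last fully committed version of the entry.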

bit_user:

> PlaneInTheSky said: Another thing is that cheap consumer UPSes use batteries, and they are just as likely to catch fire as storage failing from a power interruption.

You mean Lithium Ion batteries? Makes me feel better about having a Lead Acid-based UPS.

dsavidsarmstrong:

Interestingly, we use SSDs on our servers in exactly the same way: as a boot and program drive and for logs, so it's encouraging to see that Backblaze came to the same conclusion.

We tried using an SSD for the data drive (PostgreSQL database tablespace) but noticed that it would hang every now and then, which we attribute to the drive's GC. And in the end it wasn't significantly faster than the HDDs, which we over-provisioned to make sure the data could be kept as much as possible in the outermost tracks.

In 23 years we've never had an HDD failure, but we replace servers and thus the drives every 4 years.

bit_user:

> dsavidsarmstrong said: We tried using an SSD for the data drive (PostgreSQL database tablespace) but noticed that it would hang every now and then, which we attribute to the drive's GC.

Which drive? Datacenter SSDs put a lot of emphasis on minimizing tail latencies. Consumer SSDs: most, not at all.

StorageReview routinely does reviews of enterprise SSDs. Here's a recent one:

https://www.storagereview.com/review/micron-9400-pro-ssd-review

> dsavidsarmstrong said: And in the end it wasn't significantly faster than the HDDs, which we over-provisioned to make sure the data could be kept as much as possible in the outermost tracks.

Now, I'm dying to know which SSD you tried. Because the most optimal data layout, on the fastest 15k RPM HDDs, is still limited to mere hundreds of IOPS. Whereas a good NVMe drive can deliver several tens of thousands at a mere QD=1.

> dsavidsarmstrong said: In 23 years we've never had an HDD failure, but we replace servers and thus the drives every 4 years.

Your server fleet consists of just 1, I take it?
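The "mere hundreds of IOPS" figure for a 15k RPM hard drive follows from simple mechanics, which can be sketched roughly as below. The 3.5 ms average seek time is an assumed value for illustration, the function name is hypothetical, and the model ignores caching and command queuing.

```python
def hdd_random_iops(rpm: int, avg_seek_ms: float) -> float:
    """Rough random-access IOPS ceiling for one spinning drive at queue depth 1."""
    # On average the platter must turn half a revolution to reach the sector.
    rotational_latency_ms = 60_000 / rpm / 2
    service_time_ms = avg_seek_ms + rotational_latency_ms
    return 1000 / service_time_ms

# Assumed 3.5 ms average seek for a 15k RPM drive.
iops_15k = hdd_random_iops(rpm=15_000, avg_seek_ms=3.5)
# Idealized layout where the heads never have to seek at all.
iops_no_seek = hdd_random_iops(rpm=15_000, avg_seek_ms=0.0)
print(f"{iops_15k:.0f} IOPS typical, {iops_no_seek:.0f} IOPS with zero seek time")
```

Even with seek time eliminated entirely, the 2 ms average rotational latency keeps this simplified model at 500 IOPS, so careful data placement on the outer tracks cannot close the gap to an SSD.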

dsavidsarmstrong:

> bit_user said: Which drive? Datacenter SSDs put a lot of emphasis on minimizing tail latencies. Consumer SSDs: most, not at all.
>
> StorageReview routinely does reviews of enterprise SSDs. Here's a recent one:
>
> https://www.storagereview.com/review/micron-9400-pro-ssd-review
>
> Now, I'm dying to know which SSD you tried. Because the most optimal data layout, on the fastest 15k RPM HDDs, is still limited to mere hundreds of IOPS. Whereas a good NVMe drive can deliver several tens of thousands at a mere QD=1.

It is a Samsung SSD bought in 2019 or 2020 IIRC. The model is a Samsung SSD 970 PRO 1TB. It has a current TBW of 48TB.

If there are a lot of updates, HDDs can update a sector in-place whereas SSDs must write a new block, and that block size tends to be large. Still, we get better performance initially with the SSD, but it slows down in heavy loads due to the occasional seconds-long hanging. The issue was mentioned in forums at the time. It might even be that the Linux driver doesn't handle trim correctly, but that's just a theory. A database restore is significantly faster on the SSD, but we don't notice much difference in the running application's general speed except for the occasional hanging. Who knows, maybe it's a defect.

> bit_user said: Your server fleet consists of just 1, I take it?

We have a main server and a backup server for the application. We recycle older servers to fulfill other server roles such as printing or archiving. My development machines are all SSDs (one SATA and two NVMe) and my backup drives are all HDDs.

bit_user:

> dsavidsarmstrong said: It is a Samsung SSD bought in 2019 or 2020 IIRC. The model is a Samsung SSD 970 PRO 1TB. It has a current TBW of 48TB.

Still, in spite of the name, it's a consumer-grade drive. Maybe pro-sumer, but definitely not server-grade.

> dsavidsarmstrong said: If there are a lot of updates, HDDs can update a sector in-place whereas SSDs must write a new block, and that block size tends to be large.

Both SSDs and HDDs have to write entire blocks. An update of a partial block or RAID stripe necessarily involves a read-modify-write operation. SSDs are much faster at that than HDDs.

> dsavidsarmstrong said: Still, we get better performance initially with the SSD, but it slows down in heavy loads due to the occasional seconds-long hanging.

It'd be interesting to know why it hangs for entire seconds, but it's well known that consumer SSDs bog down under sustained load. It's principally due to the way they use low-density storage to buffer writes. Once you fill that buffer, your write speed drops to the native speed of high-density writes.

While it seems the 970 can handle sequential writes without a dropoff, there definitely appears to be some buffering in effect for small writes:

Another issue with sustained workloads on M.2 drives is thermal throttling! And that's something that you'll definitely encounter with a heavy database workload.

Still, it's not a big enough drop to explain the hang.

> dsavidsarmstrong said: It might even be that the Linux driver doesn't handle trim correctly, but that's just a theory.

It's recommended not to mount it with the TRIM option. The preferred way to handle TRIM is to schedule fstrim to run during off-peak hours.

Whatever the specific cause of the hangs, I think the main issue is probably trying to use a consumer SSD outside of its intended usage envelope. The issue might've been compounded by misuse of the TRIM mount option.