CEVA’s NeuPro Chips Promise Up To 12.5 TOPS Embedded AI Performance

CEVA announced a family of new “AI processors,” called NeuPro, that scale in performance from 2 trillion operations per second (TOPS) to 12.5 TOPS. The chips will target a range of products from Internet of Things (IoT) devices to smartphones and connected cars.

The Rise Of AI At The Edge

Over the past couple of years, we’ve seen a growing trend of “AI chips” that could be used in embedded devices such as IoT devices, smartphones, drones, and surveillance cameras. One of their primary uses is “computer vision,” which allows devices to identify objects on the spot without connecting to the cloud, which also helps protect your privacy.

Many people might not want companies watching their homes at all times through smart surveillance cameras just so they can benefit from their devices’ object recognition capabilities, for instance. For AI to be effective, it needs to use as much of your data as possible, which is where the privacy downsides of machine learning tend to come from. Embedded AI chips can largely achieve the same capabilities without those downsides.

In the past year, we’ve also seen smartphones quickly jump in embedded AI performance from 0.6 TOPS (Apple iPhone 8/X), to 1.9 TOPS (Huawei Mate 10 Pro), to 3 TOPS (Google Pixel 2). This year, we should also see even bigger jumps in performance, as smartphone makers start to go “all-in” on embedded AI performance.

Of course, having a fast AI chip in your device won’t do much on its own unless there’s software to take advantage of it. This is why, for instance, Android 8.1 now supports the TensorFlow Lite software library and the Neural Networks API, which allow smartphone makers to give app developers access to their chips in a standardized way. CEVA also released its CEVA Deep Neural Network (CDNN) software library for its previous-generation chips back in 2016.

CEVA’s NeuPro

Last year, CEVA hinted that Apple may be using one of its last-generation AI chips in the iPhone 8 and X, but Apple never confirmed it. Either way, CEVA’s new AI chips should see a big jump in performance this year, starting at 2 TOPS and reaching up to 12.5 TOPS.

The smallest processor, called the “NP500,” comes with 512 Multiply-Accumulate (MAC) units and targets IoT, wearables, and cameras.

The NP1000 comes with twice as many units (1024) and targets mid-range smartphones, advanced driver assistance systems, industrial applications, and AR/VR headsets.

The NP2000 has 2048 MAC units and targets smartphones, surveillance cameras, robots, and drones.

The most powerful version, the NP4000, has 4096 MAC units and can be used in enterprise surveillance and autonomous driving.

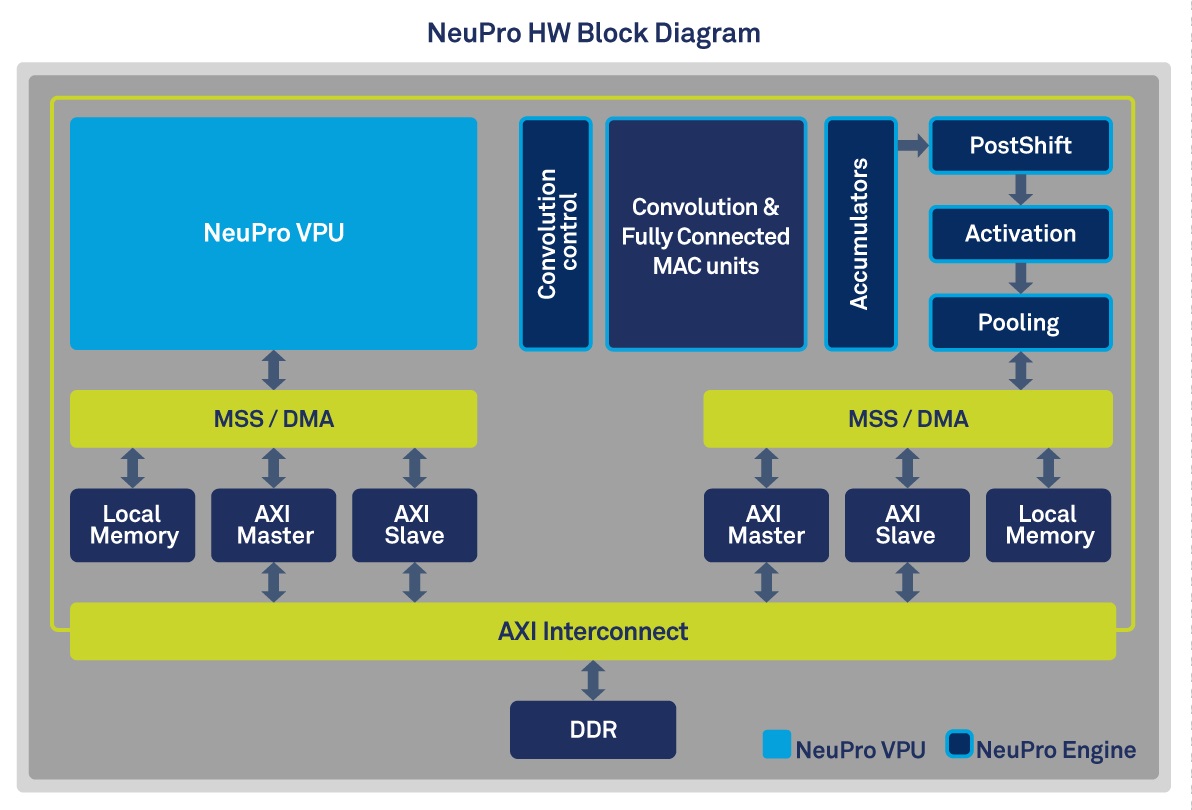

Each coprocessor contains the NeuPro engine and the NeuPro visual processing unit (VPU). The NeuPro engine is the hardwired implementation of network layers, while the VPU is a programmable vector digital signal processor (DSP), which provides software support for new AI algorithms.

NeuPro processors support both 8-bit and 16-bit computation, switching between the two in real time depending on the workload. According to CEVA, its MAC units achieve over 90% utilization, which means the AI algorithms take (almost) full advantage of the processors’ performance. The NeuPro’s design also substantially lowers RAM utilization, which should improve energy efficiency, too.
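
To put the quoted numbers in context, here is a rough back-of-the-envelope sketch in Python. It relies on assumptions that are not in CEVA’s announcement: that one MAC counts as two operations (a multiply and an add, a common convention for TOPS figures), that the 2 TOPS figure belongs to the 512-MAC NP500 and the 12.5 TOPS figure to the 4096-MAC NP4000, and that the “over 90%” utilization claim can be treated as a 0.9 lower bound. The function names are purely illustrative.

```python
# Back-of-the-envelope arithmetic on the figures quoted in the article.
# ASSUMPTION (not from CEVA): one MAC is counted as 2 operations.
OPS_PER_MAC = 2


def implied_clock_ghz(peak_tops, mac_units, ops_per_mac=OPS_PER_MAC):
    """Clock (GHz) that would yield peak_tops if every MAC fired each cycle."""
    ops_per_second = peak_tops * 1e12
    return ops_per_second / (mac_units * ops_per_mac) / 1e9


def sustained_tops(peak_tops, utilization):
    """Throughput left after scaling the peak figure by MAC utilization."""
    return peak_tops * utilization


if __name__ == "__main__":
    # ASSUMPTION: pairing of TOPS figures with specific chips (see lead-in).
    for name, tops, macs in [("NP500", 2.0, 512), ("NP4000", 12.5, 4096)]:
        clock = implied_clock_ghz(tops, macs)
        print(f"{name}: implied clock ~{clock:.2f} GHz")

    # "Over 90%" utilization, taken here as a 0.9 lower bound.
    print(f"NP4000 sustained: ~{sustained_tops(12.5, 0.9):.2f} TOPS")
```

Under these assumptions, the implied clock is an estimate derived from the headline numbers rather than a CEVA specification, and the utilization figure suggests that most of the peak throughput should be reachable in practice.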

Ilan Yona, vice president and general manager of the Vision Business Unit at CEVA, said:

“It’s abundantly clear that AI applications are trending toward processing at the edge, rather than relying on services from the cloud. The computational power required along with the low power constraints for edge processing, calls for specialized processors rather than using CPUs, GPUs or DSPs. We designed the NeuPro processors to reduce the high barriers-to-entry into the AI space in terms of both architecture and software. Our customers now have an optimized and cost-effective standard AI platform that can be utilized for a multitude of AI-based workloads and applications.”

The NeuPro chips will be available for licensing to select customers in the second quarter of 2018, and to everyone else in the third quarter of the year.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.