DDR5 Specifications Land: Up To 8400 MHz, Catering To Systems With Lots of Cores

DDR5 will be big, and very fast!

It doesn't take a genius to figure out that DDR5 is nearing launch, but details have been surprisingly scarce. JEDEC, the organization behind memory standards, still hasn't finalized the DDR5 specification, but that doesn't mean memory makers don't know what they're building. SK Hynix shared some very juicy details in its newsroom yesterday, finally giving us some concrete information to pass along.

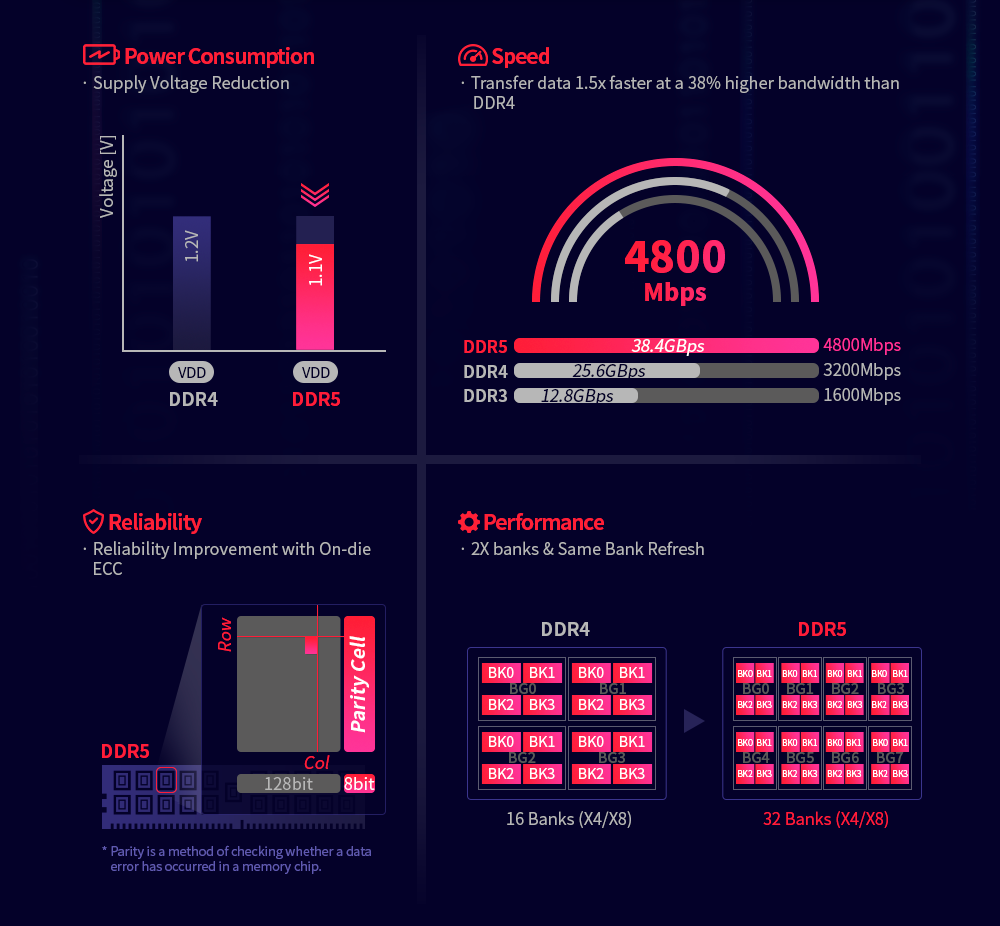

DDR4's memory bandwidth has become a major bottleneck as CPU core counts have increased dramatically over the last few years, and that's no surprise with that many cores battling over memory access. To address that need, DDR5 is built to offer significantly higher bandwidth, with data rates of up to a whopping 8400 MHz.

DDR4's specification was initially meant to top out at 3200 MHz, but developments in the memory space have made this speed easy to hit over the years, and motherboards and the memory controllers on CPUs can handle higher frequencies, too. This has led to memory modules that operate at well over 4000 MHz, with the fastest kits advertising up to 5000 MHz, though they come with rather unpalatable price tags to match.

DDR5: Faster Than (Almost) All DDR4 From The Get Go

DDR5, therefore, has a much wider range of frequencies, giving memory makers more room to grow within the specification over the coming years. SK Hynix's data suggests that the slowest DDR5 memory will run at 3200 MHz, while the fastest will reach up to 8400 MHz as developments progress. However, as a starting point, the company intends for all its DDR5 memory to run at 4800 MHz or faster, and speeds below 4800 MHz will simply serve to conserve power in efficiency-critical applications.

| Specification | DDR4 | DDR5 |
|---|---|---|
| Frequency Range | 1600 - 3200 MHz | 3200 - 8400 MHz |
| Density | 2 Gb, 4 Gb, 8 Gb, 16 Gb | 8 Gb, 16 Gb, 24 Gb, 32 Gb, 64 Gb |
| On Die ECC | No | Yes |
| Bank | 16 Banks | 32 Banks |
| Voltage | 1.2 V | 1.1 V |
| Burst Length | 8 | 16 |
| DFE | No | Yes |
| Same Bank Refresh | No | Yes |
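The frequency figures in the table can be turned into a rough peak-bandwidth estimate. The sketch below is not from SK Hynix's material: it assumes the "MHz" values are effective transfer rates (MT/s), assumes a standard 64-bit data bus per memory channel, and uses a hypothetical 64-core CPU with dual-channel memory to show how thin the per-core share can get.

```python
# Assumption: one memory channel moves 64 bits (8 bytes) per transfer, ECC bits excluded.
BUS_WIDTH_BYTES = 8


def peak_bandwidth_gbs(data_rate_mts, channels=1):
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    # MT/s * bytes per transfer = MB/s; divide by 1000 to get GB/s.
    return data_rate_mts * BUS_WIDTH_BYTES * channels / 1000


def per_core_bandwidth_gbs(data_rate_mts, channels, cores):
    """Even split of the peak bandwidth across all CPU cores."""
    return peak_bandwidth_gbs(data_rate_mts, channels) / cores


for name, rate in [("DDR4-3200", 3200), ("DDR5-4800", 4800), ("DDR5-8400", 8400)]:
    print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s per channel")

# Hypothetical example: 64 cores sharing dual-channel DDR4-3200.
print(f"{per_core_bandwidth_gbs(3200, channels=2, cores=64):.2f} GB/s per core")
```

Under these assumptions, DDR4-3200 peaks at 25.6 GB/s per channel and DDR5-8400 at 67.2 GB/s. These are theoretical ceilings, and sustained throughput in a real system will be lower.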

DDR5's increased bandwidth comes thanks to a 32-bank structure spread over 8 bank groups, compared to DDR4's 16 banks over 4 bank groups, and a burst length that has doubled from 8 to 16. The higher bank count, paired with the higher density of the modules, means that DDR5 will also support significantly higher capacities.
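One way to see what the burst length change means: the data delivered per burst is the bus width multiplied by the burst length. The sketch below is background rather than something SK Hynix's material states. In particular, the 32-bit figure reflects the DDR5 DIMM layout of two independent 32-bit subchannels, which the article does not cover, and the function name is made up for illustration.

```python
def bytes_per_burst(bus_width_bits, burst_length):
    """Bytes transferred by one burst on a bus of the given width."""
    return bus_width_bits // 8 * burst_length


# DDR4: one 64-bit channel, burst length 8.
ddr4_burst = bytes_per_burst(64, 8)

# DDR5: burst length 16 on a 32-bit subchannel (assumption: two per DIMM).
ddr5_subchannel_burst = bytes_per_burst(32, 16)

# For comparison only: burst length 16 on a full 64-bit bus.
wide_bus_burst = bytes_per_burst(64, 16)

print(ddr4_burst, ddr5_subchannel_burst, wide_bus_burst)  # 64 64 128
```

Both the DDR4 channel and the DDR5 subchannel deliver 64 bytes per burst, which matches the cache line size of most current CPUs, while a 64-bit bus with burst length 16 would deliver 128 bytes at a time.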

DDR5 also introduces a so-called 'Same Bank Refresh' function. This lets each memory bank refresh independently while the other banks remain accessible to the system. In DDR4, all the memory banks have to refresh simultaneously, forcing the CPU to wait for a brief moment while this happens. This wasn't a problem in systems with small memory pools, but as memory capacities, workloads, and CPU core counts increase, we're reaching a point where every bit of extra memory performance will make a difference.
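The difference between the two refresh schemes can be sketched with a deliberately simple toy model. Everything here is an assumption for illustration: the function name, the 100-cycle refresh duration, and the idea that the controller always has a request waiting for every bank. It follows the article's description of the feature and does not use real JEDEC timings.

```python
def idle_bus_cycles(num_banks, refresh_cycles, same_bank_refresh):
    """Count cycles with no accessible bank while every bank is refreshed once.

    Toy model: refresh_cycles is an arbitrary duration, and with same-bank
    refresh the banks simply take turns, one after another.
    """
    if same_bank_refresh:
        total_cycles = num_banks * refresh_cycles
    else:
        total_cycles = refresh_cycles

    all_banks = set(range(num_banks))
    idle = 0
    for cycle in range(total_cycles):
        if same_bank_refresh:
            # Only the bank whose turn it is refreshes during this cycle.
            refreshing = {cycle // refresh_cycles}
        else:
            # All-bank refresh: every bank is busy at the same time.
            refreshing = all_banks
        accessible = all_banks - refreshing
        if not accessible:
            idle += 1
    return idle


print(idle_bus_cycles(16, 100, same_bank_refresh=False))  # 100
print(idle_bus_cycles(32, 100, same_bank_refresh=True))   # 0
```

In this model, the all-bank scheme leaves the memory unreachable for the whole refresh, while the same-bank scheme always leaves some bank available, so a controller with requests queued for other banks never has to sit idle.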

DDR5 also comes with a new Decision Feedback Equalization (DFE) circuit, which is said to significantly increase the per-pin bandwidth on the DIMMs.


“In the 4th Industrial Revolution, which is represented by 5G, autonomous vehicle, AI, augmented reality (AR), virtual reality (VR), big data, and other applications, DDR5 DRAM can be utilized for next-gen high performance computing (HPC) and AI-based data analysis,” said Sungsoo Ryu, Head of DRAM Product Planning at SK hynix. “DDR5 will also offer a wider range of density based on 16Gb and even 24Gb monolithic die, in order to meet the needs of cloud service customers. By supporting higher density and performance scalability compared to its predecessor, DDR5 has set a firm foothold to lead the era of big data and AI. With this, SK hynix will secure a competitive edge in the premium server market while providing distinguished memory solutions to customers.”

When Will We Get This Fast Memory?

Samsung is starting mass production of its EUV DDR5 memory in 2021, and SK Hynix just announced that it will commence volume production of its DDR5 products by the end of this year.

Intel's leaked roadmap suggests that it will jump onto DDR5 in 2021 in the server space.

That being said, it's unclear whether you'll be purchasing a PC with DDR5 in 2020 or 2021. Based on the data, it's clear that the main target audience for DDR5 will be high-end systems with lots of CPU cores that need the increased bandwidth and accessibility to avoid bottlenecks. As such, it's possible that DDR5 will initially be limited to HEDT and server systems.

However, given AMD's history with its CPU sockets, the company will likely make the switch to DDR5, too, when it transitions to AM5. Nevertheless, it is unclear whether AMD will jump to a new socket with the Ryzen 4000 desktop chips (not to be confused with the just-launched mobile Ryzen 4000 lineup) or with the Ryzen 5000 chips in two years' time.

Niels Broekhuijsen is a Contributing Writer for Tom's Hardware US. He reviews cases, water cooling and PC builds.

-

N.Broekhuijsen

coolitic said: "Samsung started mass production of its EUV DDR5 memory in 2021" Uhhh, hello?

Well spotted, thanks! All fixed.

-

King_V

coolitic said: "Samsung started mass production of its EUV DDR5 memory in 2021" Uhhh, hello?

What are you complaining about??! I mean, jeez, it's like you've never even heard of the Flux Capacitor option in cars! Don't judge, maybe Niels just happens to have one! :LOL:

-

bit_user

Presumably, on-die ECC is optional? Is there also any form of parity on the data/address bus?

I also wonder how DDR5 latencies will compare with earlier memory generations (i.e. in terms of ns).

-

jimmysmitty

bit_user said: Presumably, on-die ECC is optional? Is there also any form of parity on the data/address bus? I also wonder how DDR5 latencies will compare with earlier memory generations (i.e. in terms of ns).

I would assume that like most it will go up and will be the trade off for the higher speeds. But with time we will also see higher speeds and latency to go down from original highs.

-

bit_user

jimmysmitty said: I would assume that like most it will go up and will be the trade off for the higher speeds. But with time we will also see higher speeds and latency to go down from original highs.

Here's an interesting comparison of DDR3 vs. DDR4. In their tests, the best DDR4 latencies never quite equaled the best DDR3, which is roughly as I expected.

https://www.techspot.com/news/62129-ddr3-vs-ddr4-raw-bandwidth-numbers.html

Note that two of their test systems are dual-channel, while the other two are quad-channel. Presumably, that explains the differences within the same memory speed grade. It's also interesting to see how much channel-doubling can compensate for lower memory speeds (note: 2x the channels isn't simply 2x as fast).

-

spongiemaster

Does this even matter besides in enterprise level applications? Especially on the Intel side, you're well into the who cares range of performance increases by the time you're in the mid 3000's. Moving from dual channel to quad channel also rarely results in any tangible improvements. Is there going to be a significant drop in latency?

-

Chung Leong

bit_user said: Presumably, on-die ECC is optional? Is there also any form of parity on the data/address bus?

Sounds like it's not. They mentioned how ECC is going to improve node scaling by correcting single-bit errors. Presumably too many chips would not work reliably without it.

-

bit_user

spongiemaster said: Moving from dual channel to quad channel also rarely results in any tangible improvements.

Is that still true for 8+ cores? I feel like Intel knew what they were doing, when they outfitted their server and workstation platforms with > 2 channels.

spongiemaster said: Is there going to be a significant drop in latency?

If you check the techspot link, the quad-channel setups have higher latency. Of course, they're also both 1 generation older than the 2-channel CPUs.

-

JamesSneed

To those saying faster memory isn't needed or more channels aren't needed, they surely are, especially in the enterprise. You can't keep a 64 core CPU properly fed with dual channel 3200 MHz memory, i.e. it's a huge performance killing memory bottleneck. This is also why you don't see SMT4: until memory bandwidth increases, SMT4 is pretty pointless for many workloads, as you would never keep the CPU fed unless you build in a ton of level 4 cache like the IBM Power9 chips did with 128 MiB of level 4 cache.