GeForce Now Ultimate RTX 4080 Tier Tested

The fastest game streaming yet

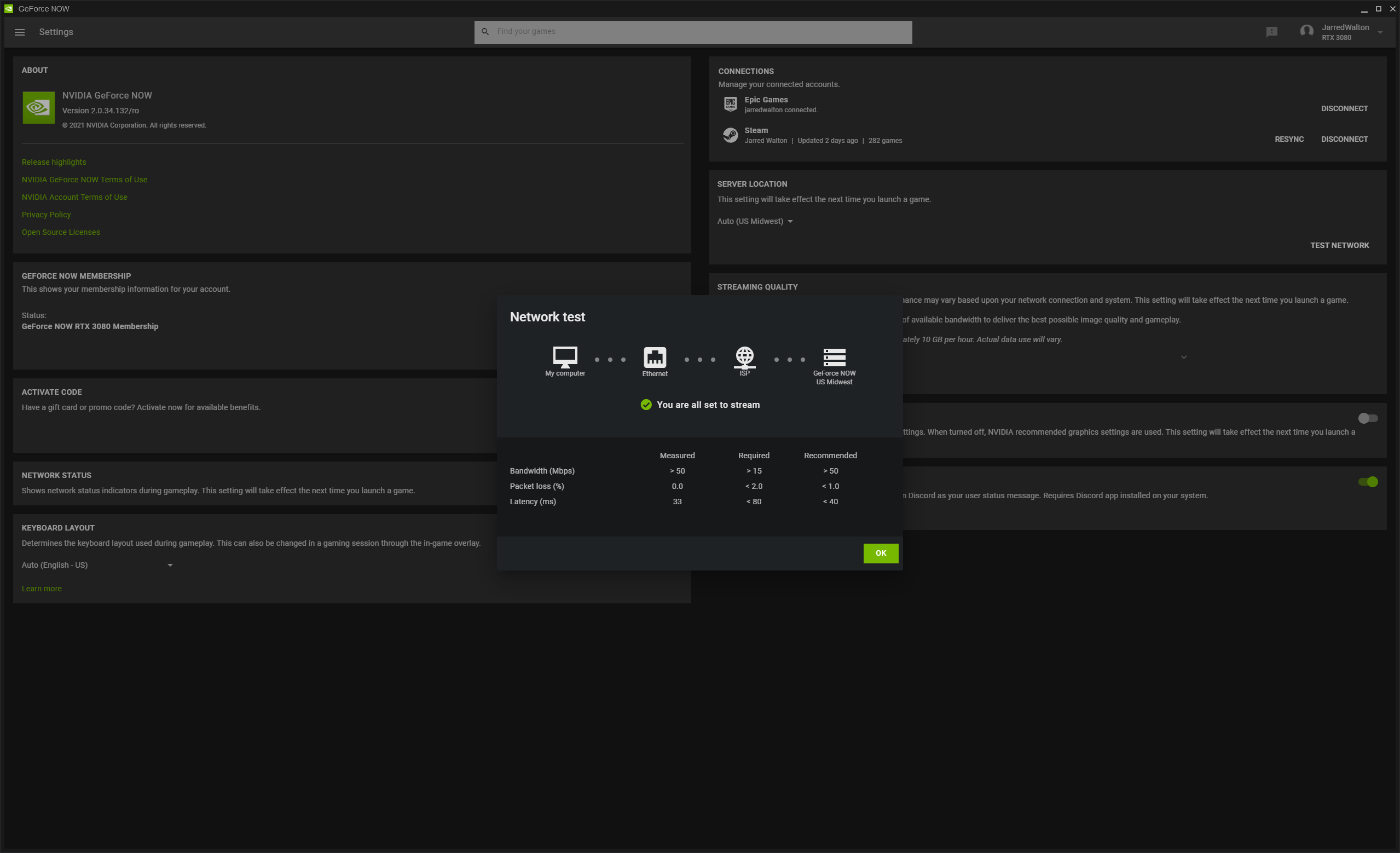

Nvidia's GeForce Now subscription game streaming service just got upgraded again. Existing users of the service at the previous RTX 3080 level will automatically switch to the new GeForce Now Ultimate tier, for the same price: $19.99 billed monthly, or $99.99 for six months. We were able to test the new hardware to see how it handles the latest games, which naturally includes support for DLSS 3 courtesy of the Ada Lovelace architecture.

What's the catch? If you're already a GFN subscriber, there is none, though we're still trying to sort out what these upgrades mean for the other tiers. We know from past testing that there were previously at least three classes of hardware: RTX 3080, RTX 2080, and RTX 2060 (possibly GTX 1080). Those are all more like fast mobile variants of said GPUs, though the exact specs differ; actual 3080/2080/2060 GPUs running locally would generally be faster.

With the introduction of the 4080 tier, does that mean the bottom 2060/1080 equivalents will be phased out and everything shifts down? We're not sure, but it's one possibility. We do know that, increasing prices notwithstanding, the RTX 4080 ranks as one of the best graphics cards. But how does the cloud variant compare?

Let's start with the streaming performance. I ran five gaming tests — Assassin's Creed Valhalla, Cyberpunk 2077, Far Cry 6, Shadow of the Tomb Raider, and Watch Dogs Legion — on both a local RTX 4080 and on the GeForce Now Ultimate RTX 4080. The actual GPUs are not the same, as the GeForce Now "4080" comes with 24GB VRAM and 18,176 CUDA cores — basically, it sounds like an RTX 6000 with half the VRAM. The Ada architecture also means the cloud 4080 supports things like DLSS 3.

Incidentally, the GeForce Now 4080 tier also uses an unspecified AMD Ryzen chip with eight cores and 16 threads (or at least that's what the end user sees). Another interesting piece of information is that, based on your tier and hardware, the streams can use H.264, HEVC, or AV1 encoding. AV1 is only available on the RTX 4080 tier and requires an end device that supports AV1 decoding (like Intel's latest integrated solutions as well as Arc). HEVC encoding has been available on the RTX 3080 tier, again with the actual codec depending on your target device.
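The codec rules described above can be sketched as a simple fallback chain. This is only an illustration of the logic as I understand it, not Nvidia's actual negotiation: the tier labels, the `pick_codec` function, and the assumptions that HEVC carries over to the RTX 4080 tier and that H.264 is the universal fallback are all mine.

```python
def pick_codec(tier: str, device_decoders: set[str]) -> str:
    """Pick a stream codec from the tier and what the client device can decode.

    `tier` uses hypothetical labels ("rtx4080", "rtx3080", or anything else
    for lower tiers). `device_decoders` lists codecs the client can decode.
    """
    # From the article: AV1 is only available on the RTX 4080 tier,
    # and only when the end device supports AV1 decoding.
    if tier == "rtx4080" and "AV1" in device_decoders:
        return "AV1"
    # From the article: HEVC has been available on the RTX 3080 tier.
    # Assumption: the RTX 4080 tier offers it as well.
    if tier in {"rtx3080", "rtx4080"} and "HEVC" in device_decoders:
        return "HEVC"
    # Assumption: H.264 works on every tier and every device.
    return "H.264"


print(pick_codec("rtx4080", {"AV1", "HEVC", "H.264"}))
print(pick_codec("rtx3080", {"AV1", "HEVC", "H.264"}))
print(pick_codec("rtx4080", {"H.264"}))
```

In this sketch, a device with AV1 decoding only benefits from it on the RTX 4080 tier; on the RTX 3080 tier the same device would fall back to HEVC.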

All of the testing was done at 4K 120 fps on GFN, running on a Samsung Odyssey Neo G8 monitor (4K 240 Hz). Game settings were basically maxed out, including ray tracing options, with DLSS Quality mode enabled where applicable. Unfortunately, none of the games with DLSS 3 Frame Generation (currently) have a built-in benchmark, so I couldn't capture any performance metrics for those games.

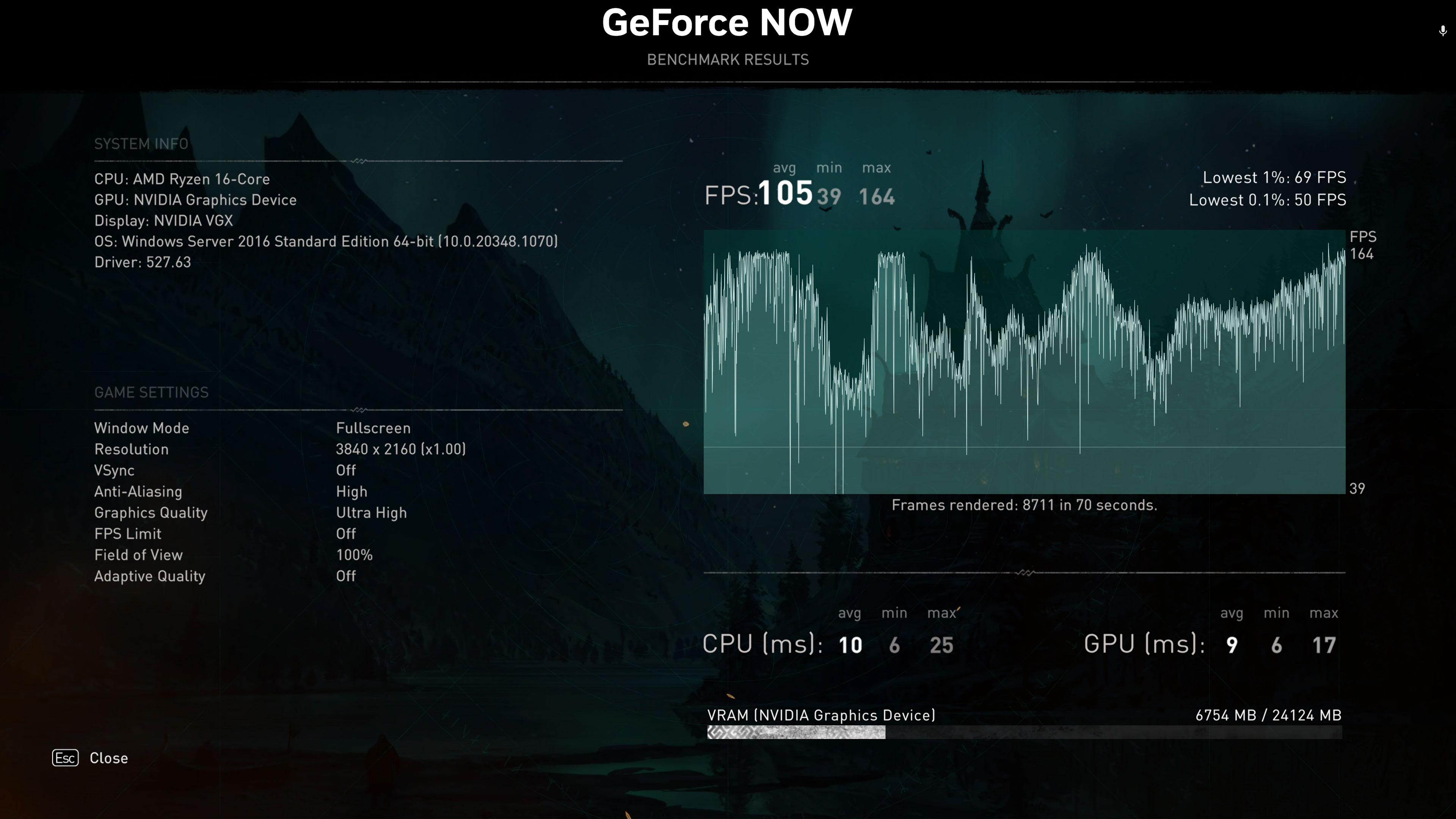

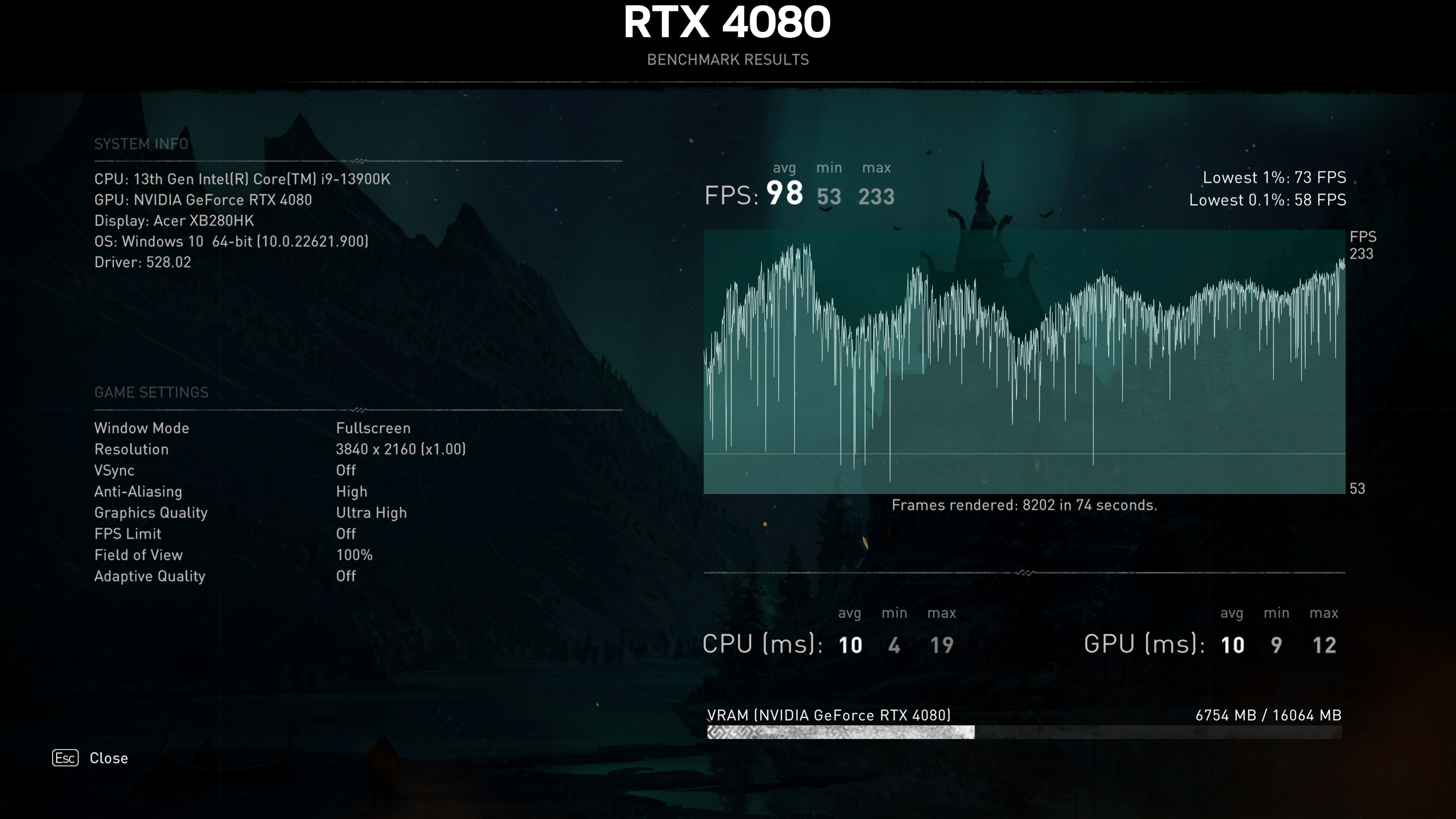

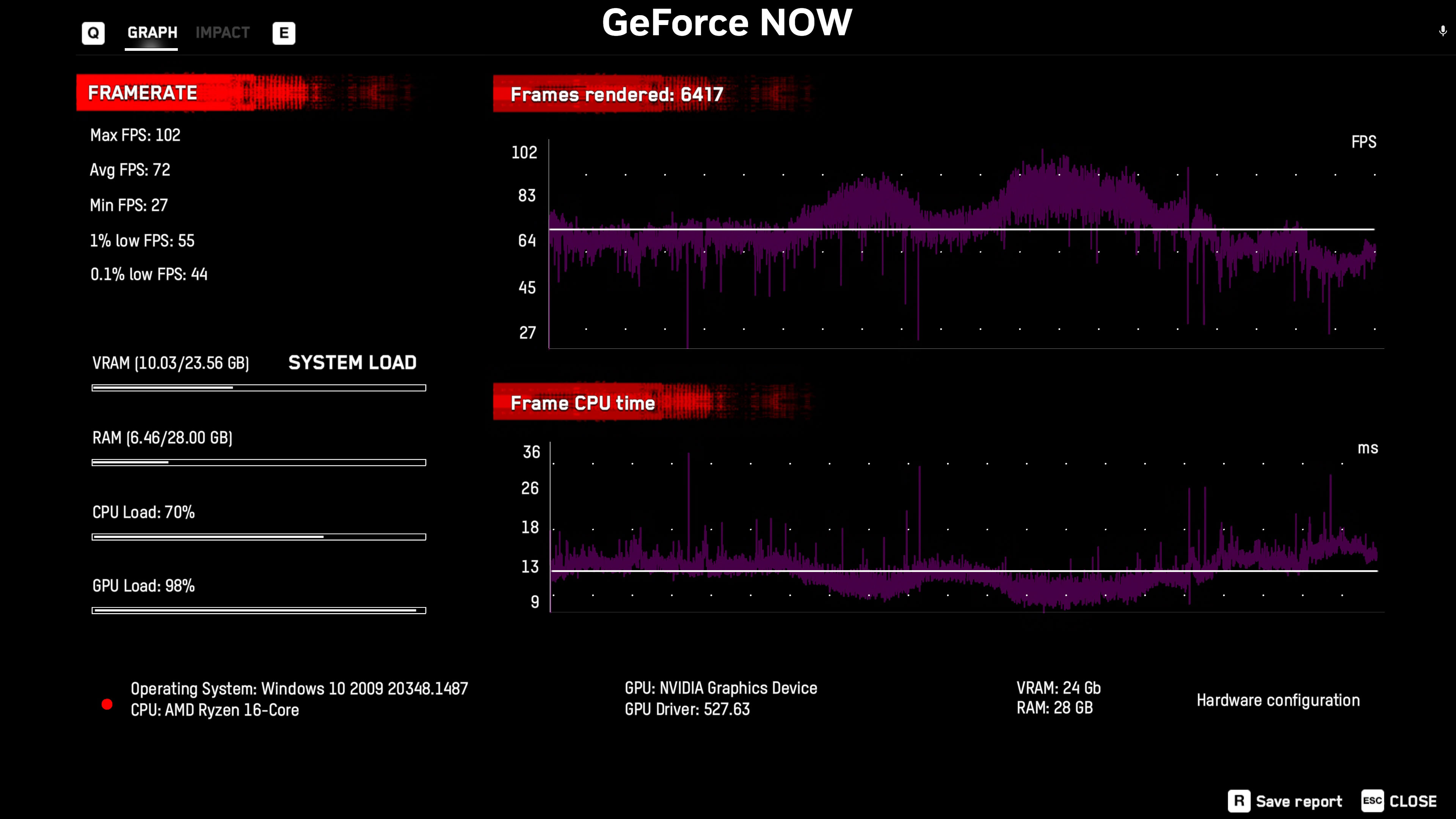

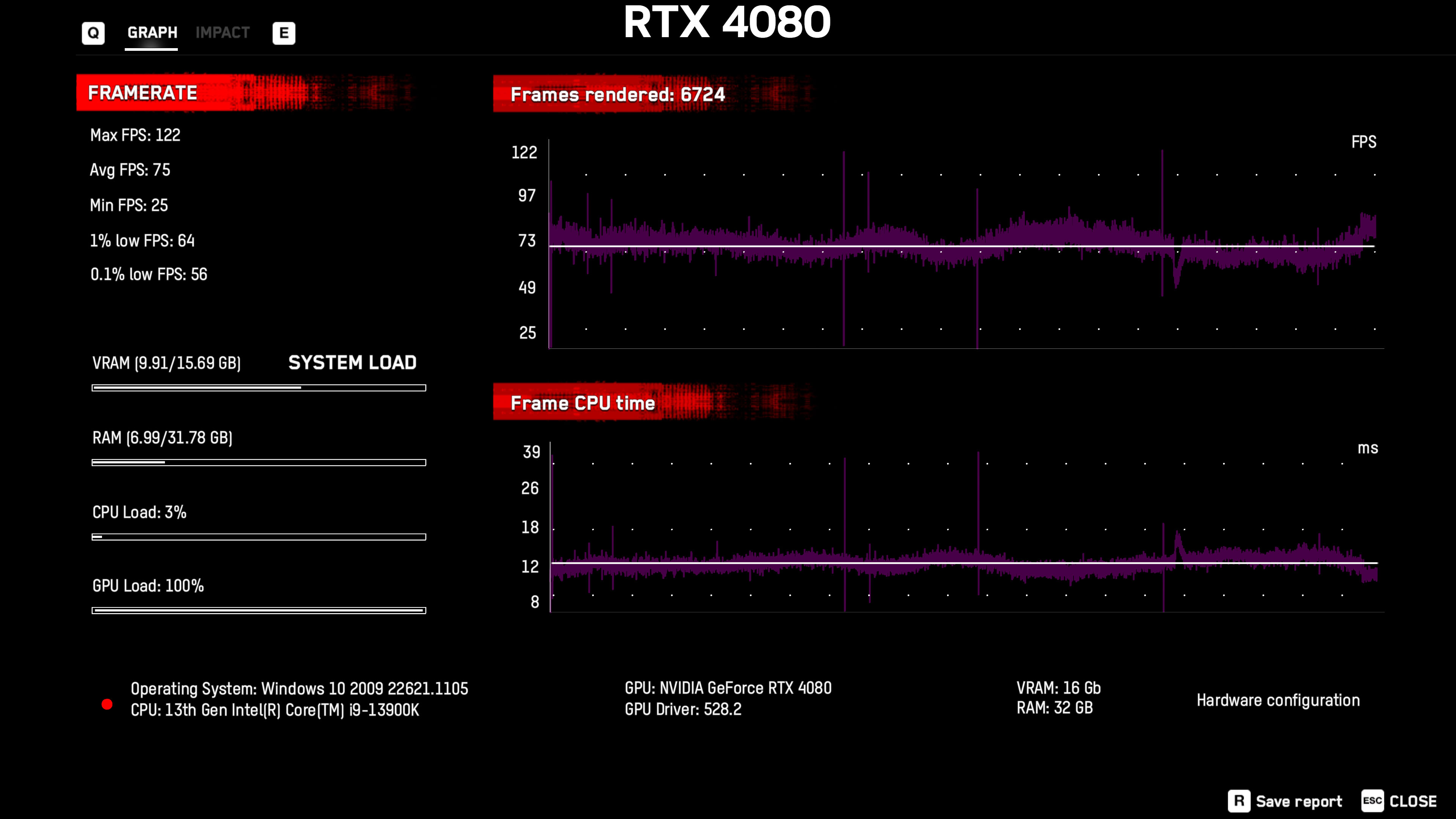

Assassin's Creed Valhalla

Assassin's Creed Valhalla is one of the games that reports an "AMD Ryzen 16-core" CPU with an "Nvidia Graphics Device" for the GPU. That's about as much detail as we could garner on what the new RTX 4080 hardware entails. We tested using the Ultra preset.

Performance on GFN came in at 105 fps, while on our Core i9-13900K test PC equipped with an actual RTX 4080 Founders Edition, we achieved... 98 fps. Wait, seriously? Yes, GeForce Now actually came out faster than a local RTX 4080 in this case, though that's not always going to happen. It could be that this particular game is simply better optimized for Ryzen CPUs as opposed to the 13900K.

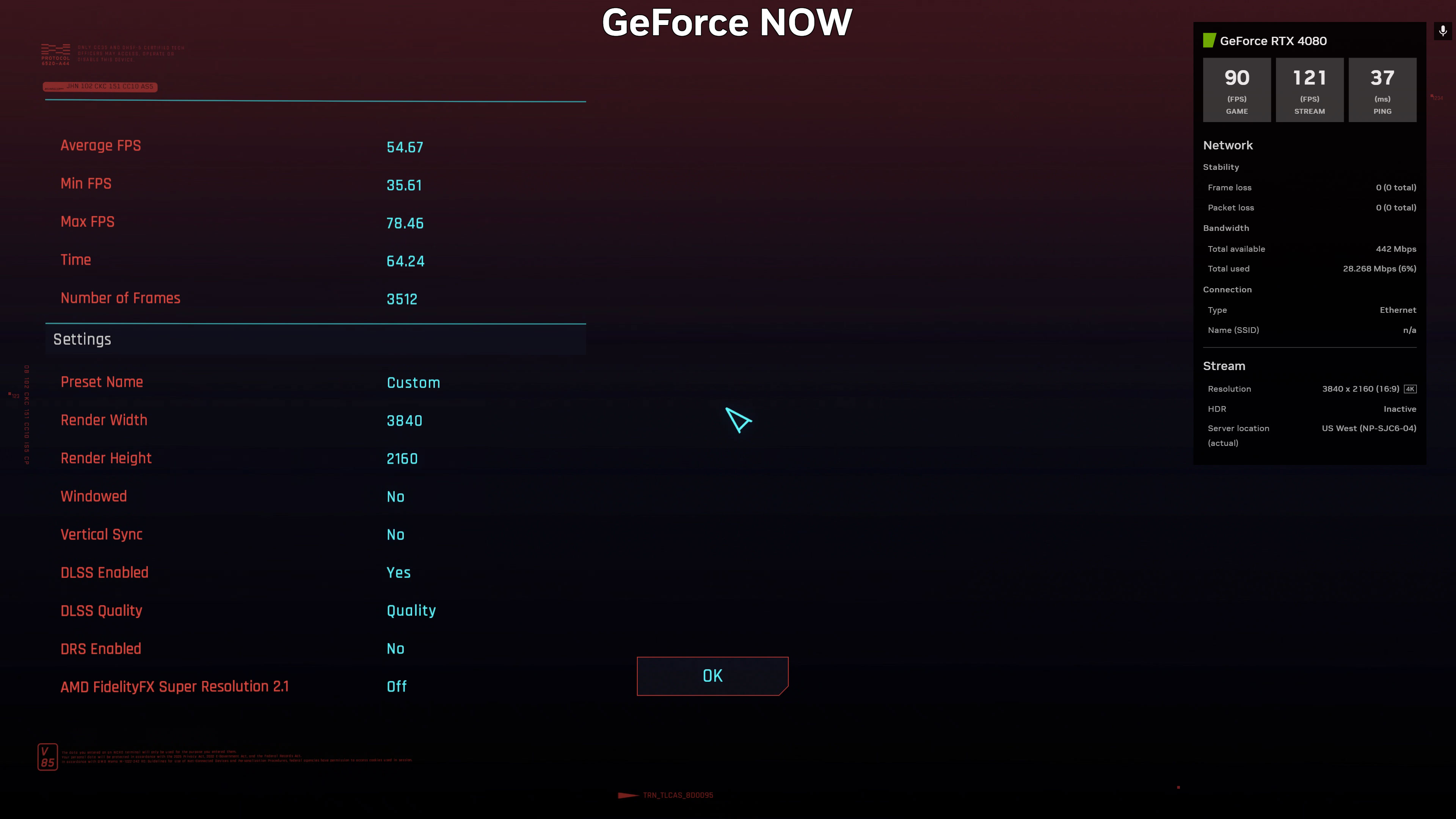

Cyberpunk 2077

It's interesting that Cyberpunk 2077 is one of the games that will get DLSS 3 support, but it's still not available in the public build over three months after the RTX 4090 launch. So we're using DLSS 2 in Quality mode with the RT-Ultra preset.

And the results are pretty dang close. The GFN cloud got 55 fps while the local RTX 4080 scored 57 fps. Most gamers wouldn't notice the difference, and of course you can change the settings to ensure you're getting more than 60 fps, with the potential to hit 120 fps once Frame Generation becomes publicly available.

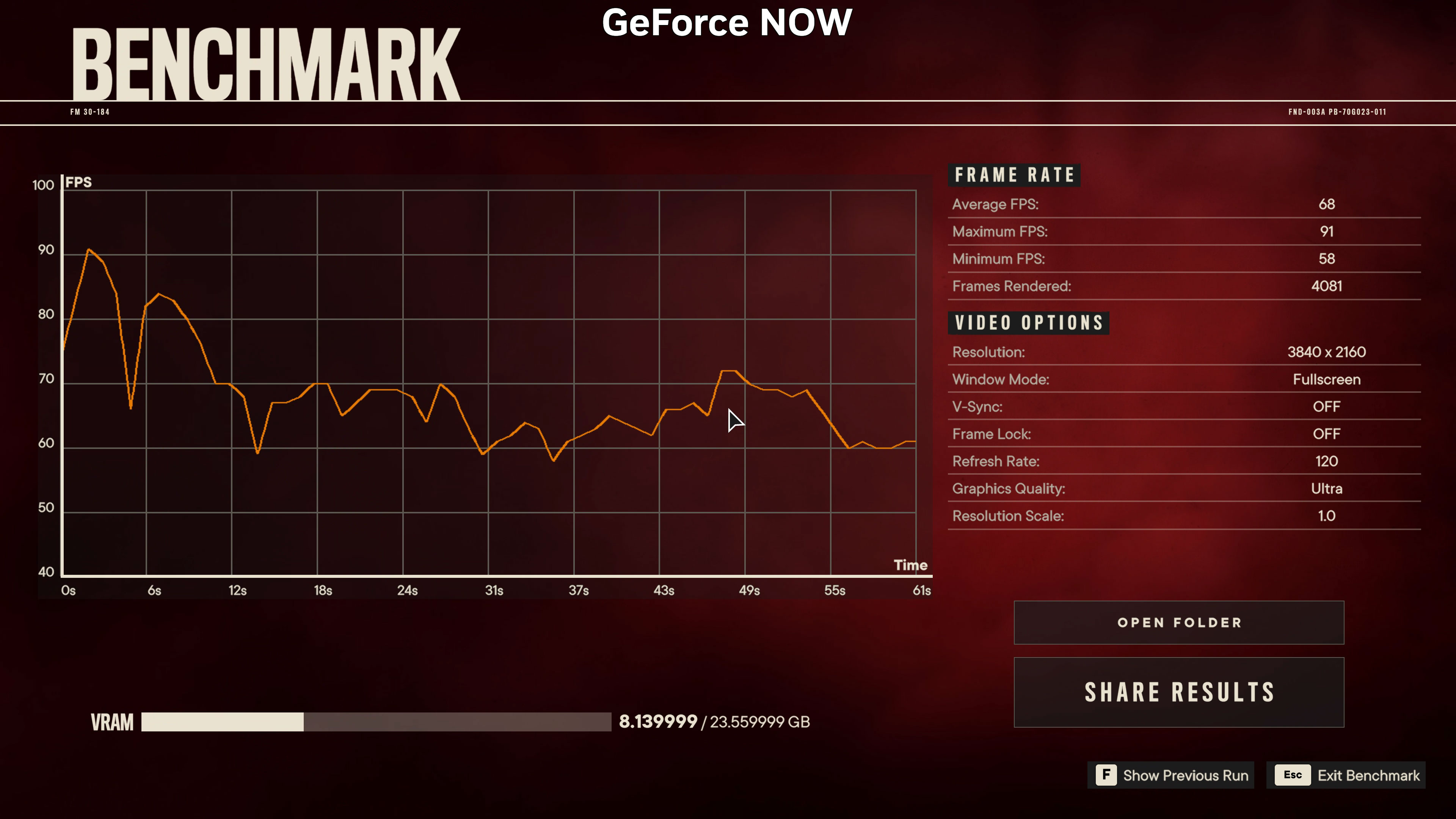

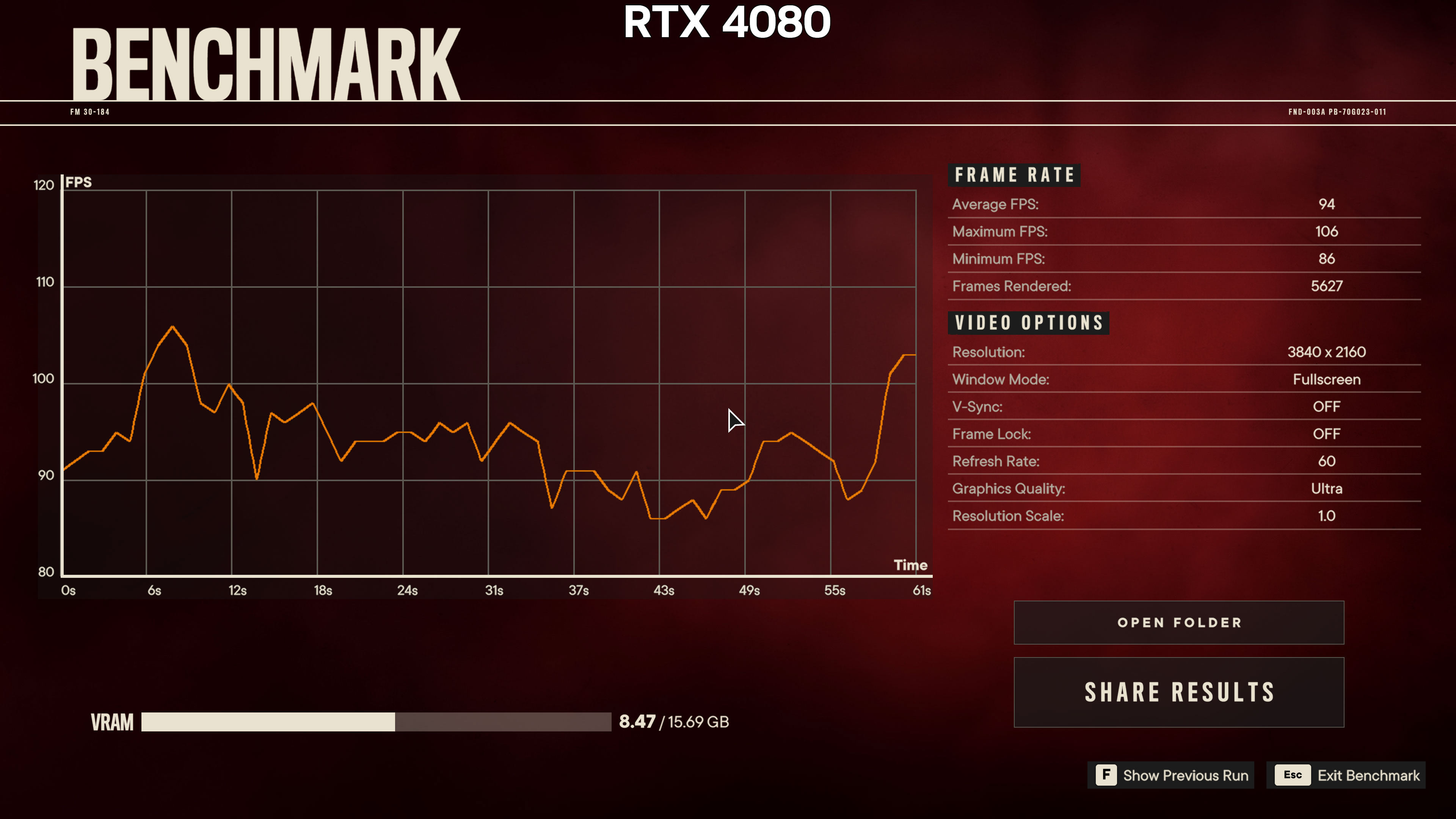

Far Cry 6

Next up is Far Cry 6, using the ultra preset, HD textures, and with both DXR (DirectX Raytracing) reflections and shadows enabled. GeForce Now's 'RTX 4080' this time managed 68 fps, while the locally running RTX 4080 scored 94 fps. That's a big difference and a complete reversal of what we saw in Assassin's Creed Valhalla.

Shadow of the Tomb Raider

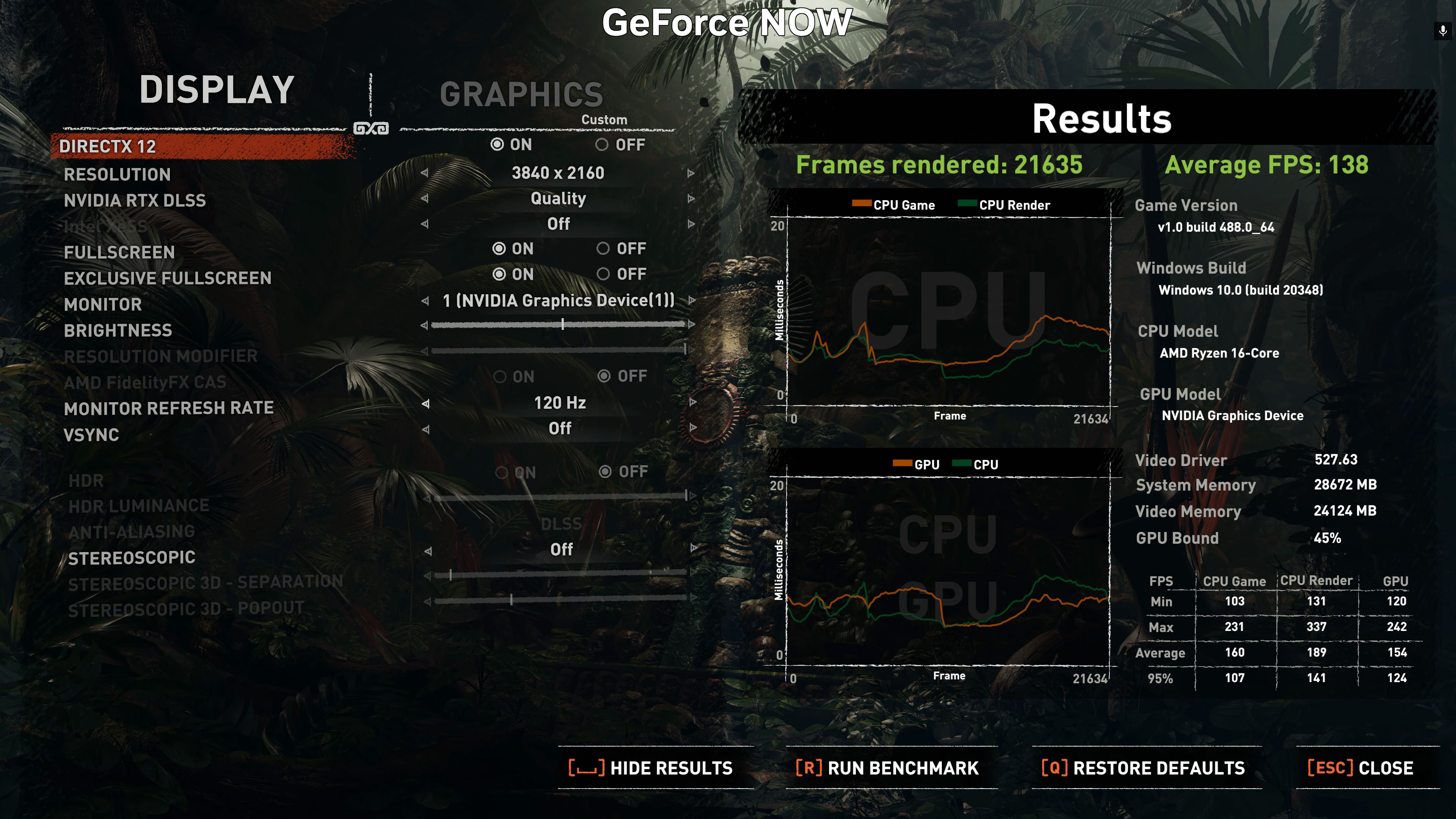

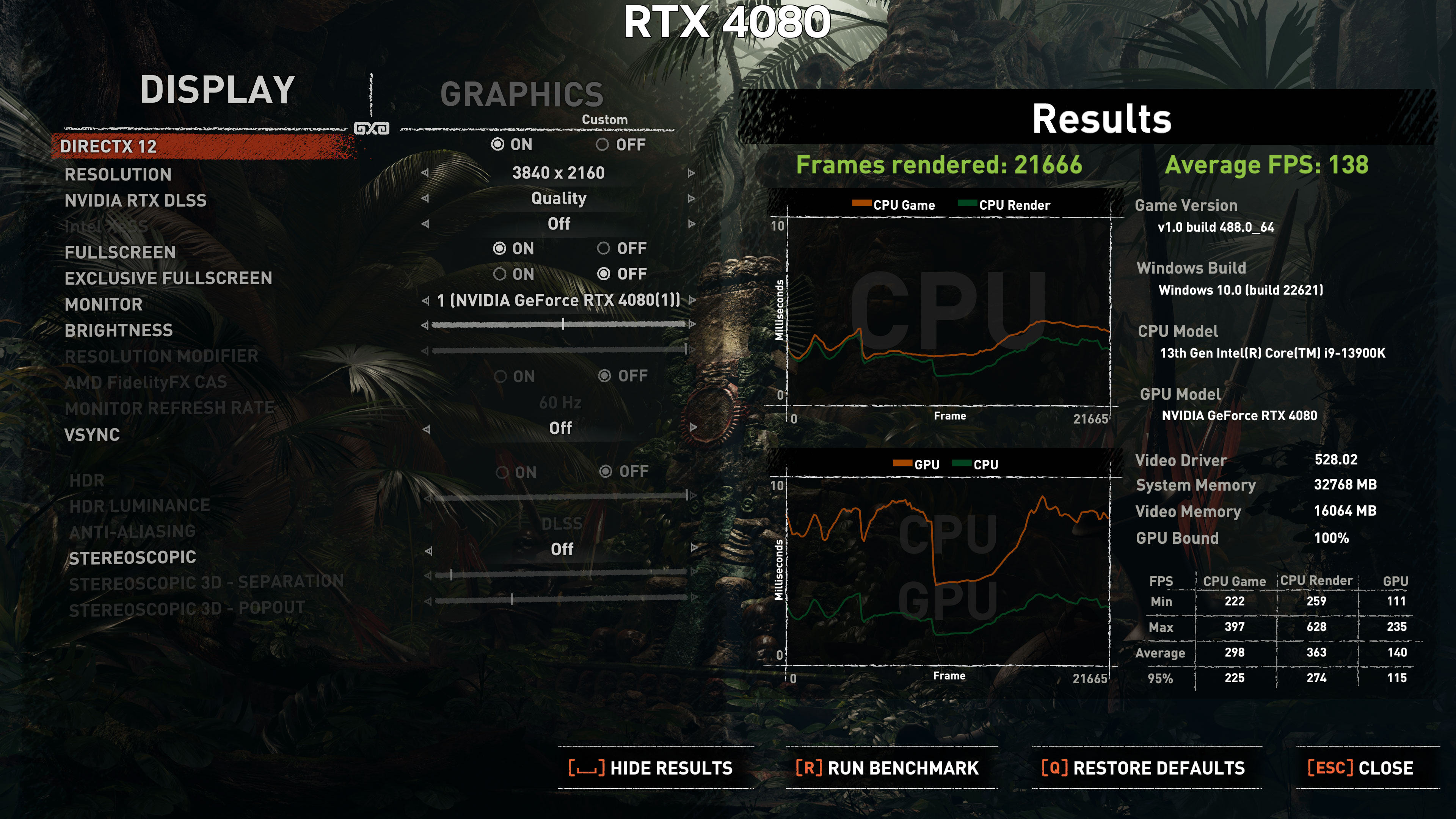

Moving on to Shadow of the Tomb Raider, we've used maxed out settings (including ray tracing and some of the other items that are normally set one step below max), and we're running with DLSS Quality upscaling. This is an older game so we'd expect rather high performance, and that's exactly what we got.

Both GeForce Now and the local RTX 4080 scored the same 138 fps average, though it's interesting to note in the details (in the bottom-right) that the numbers for the individual elements (CPU Game, CPU Render, GPU) favor the 13900K for the first two and GFN for the last. But the overall average is generally more important than the detailed breakdown.

Watch Dogs Legion

Last we have Watch Dogs Legion, which again ends up with pretty similar scores on both the RTX 4080 and GeForce Now. We're running with mostly maxed out settings (Extra object detail at 50%), including DXR reflections, with DLSS Quality mode.

The local RTX 4080 scored slightly higher, 75 fps compared to 72 fps, but that's close enough to call it a draw. Which means, out of the five games we were able to benchmark, one favored GFN, one heavily favored the local RTX 4080, and the other three were basically tied.
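For anyone who wants the gaps as percentages, here's a quick sketch that tallies the five results reported above. The fps figures are the ones from the benchmarks; the 5% cutoff for calling something a tie is my own assumption for illustration, as are the function names.

```python
# Average fps from the five built-in benchmarks: (GeForce Now, local RTX 4080).
RESULTS = {
    "Assassin's Creed Valhalla": (105, 98),
    "Cyberpunk 2077": (55, 57),
    "Far Cry 6": (68, 94),
    "Shadow of the Tomb Raider": (138, 138),
    "Watch Dogs Legion": (72, 75),
}

TIE_THRESHOLD = 0.05  # assumption: gaps smaller than 5% count as a tie


def relative_gap(gfn_fps: float, local_fps: float) -> float:
    """GFN performance relative to local; -0.10 means GFN is 10% slower."""
    return gfn_fps / local_fps - 1.0


def verdict(gap: float) -> str:
    """Classify a relative gap as a tie or a win for one side."""
    if abs(gap) < TIE_THRESHOLD:
        return "tie"
    return "GFN" if gap > 0 else "local"


for game, (gfn, local) in RESULTS.items():
    gap = relative_gap(gfn, local)
    print(f"{game}: {gap:+.1%} -> {verdict(gap)}")
```

With that threshold, Valhalla goes to GFN by about 7%, Far Cry 6 goes to the local card by nearly 28%, and the other three land within 4% of each other.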

GeForce Now Image Quality and Latency

The above gaming tests use built-in benchmarks to assess actual FPS from the various games, but what's it actually like to play on GeForce Now? That will vary somewhat based on your internet connection and latency.

In my case, I consistently got around 40 ms of latency to the West coast GeForce Now data center. Ideally, you want to be closer to the data center and get under 30 ms, but the RTX 4080 hardware wasn't available at other locations yet. Even if it had been, I live in northern Colorado, and the best ping I achieved was 36 ms to the US Central location (which didn't have 4080 hardware yet).

I've played and tested enough games that the increased latency was immediately obvious to me. It wasn't bad and certainly not unplayable, but just moving the mouse cursor around the main menus in the various games I tried was perceptibly slower. But once you get into the actual games? You quickly adapt.


I played through the prologue of Warhammer 40,000: Darktide on GeForce Now, with maxed out settings, and generally stayed above 60 fps. That's one of the games that also supports DLSS 3 Frame Generation, and while you might worry about the potentially higher latency that creates, in practice I found it felt about the same as not having FG enabled, but with a slightly smoother video frame cadence.

Without FG (but with DLSS Quality upscaling), performance tended to be just above 60 fps. Turning on FG bumped that to 100–120 fps. On a 120 Hz (or higher) display, that was enough to smooth out the overall look of the game just a bit. Again, it didn't feel any faster or more responsive, but it looked a bit smoother on the display.
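To put those numbers in perspective, here's a small sketch of the frame-time arithmetic. It takes the simplified view that Frame Generation changes how often a frame is displayed but not how often the game renders a frame from fresh input, which would be consistent with the game looking smoother without feeling more responsive. The fps figures and the roughly 40 ms ping come from the testing above; the names are purely illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


RENDERED_FPS = 60      # approximate Darktide rate without Frame Generation
DISPLAYED_FPS = 120    # upper end of the 100-120 fps seen with Frame Generation
NETWORK_PING_MS = 40   # measured latency to the data center

rendered_ms = frame_time_ms(RENDERED_FPS)
displayed_ms = frame_time_ms(DISPLAYED_FPS)

print(f"New rendered frame every {rendered_ms:.1f} ms")
print(f"New displayed frame every {displayed_ms:.1f} ms with Frame Generation")
print(f"Network ping is {NETWORK_PING_MS / rendered_ms:.1f}x one rendered frame")
```

Under these assumptions, the displayed cadence roughly halves from about 16.7 ms to about 8.3 ms, while the 40 ms ping still spans more than two rendered frames.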

What about image quality? It's the usual with game streaming. Even though the new Ultimate tier supports 4K and 120 fps, everything looks a bit soft thanks to the video compression and decompression. It's more like playing with DLSS Performance upscaling (locally) at 4K than the crisp look I'm used to seeing.

GeForce Now Bandwidth Requirements

GeForce Now requires a decent internet connection. It's not too crazy on the downstream bandwidth requirements, as even the new Ultimate tier at 4K 120 fps "only" uses about 45 Mbps, but latency is a big factor. Hotel Wi-Fi? Probably not going to cut it on anything that needs decent responsiveness. Do note that a sustained 45 Mbps works out to roughly 20GB of data per hour of play (the default Balanced setting averages closer to 16GB), so if you have a data cap, I'd skip game streaming.

Lower quality settings can reduce the bandwidth requirements. Nvidia offers three default settings: Balanced, Data Saver, and Competitive. Balanced is the default and uses about 16GB per hour, with support for 4K 120. Data Saver cuts that to just 4GB per hour, with a 720p 60 fps stream. Competitive is mostly for multiplayer esports gaming and uses a 1080p 120 fps stream, though it's now supposed to have 240 fps support as well (which seemed to be missing); it uses about 10GB per hour.
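As a rough check on these figures, the sketch below converts between a constant bitrate and hourly data use. It assumes decimal units (1GB is 8,000 megabits) and a perfectly steady stream, which real streams won't be, so treat the output as ballpark numbers. The function names are mine.

```python
def gb_per_hour(mbps: float) -> float:
    """Hourly data use at a constant bitrate (decimal units, 1 GB = 8,000 megabits)."""
    return mbps * 3600 / 8000


def average_mbps(gb_hour: float) -> float:
    """Average bitrate implied by a given hourly data use."""
    return gb_hour * 8000 / 3600


# Per-hour figures quoted above for each streaming preset.
PRESETS_GB_PER_HOUR = {"Balanced": 16, "Competitive": 10, "Data Saver": 4}

print(f"Constant 45 Mbps: {gb_per_hour(45):.2f} GB per hour")
for name, gb in PRESETS_GB_PER_HOUR.items():
    print(f"{name}: {gb} GB per hour is about {average_mbps(gb):.1f} Mbps on average")
```

A constant 45 Mbps comes to a little over 20GB per hour, so the 16GB per hour quoted for Balanced implies an average closer to 36 Mbps, with 45 Mbps presumably being nearer the peak.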

On a related note, I would avoid using wireless networking if at all possible. That will introduce additional latency and could result in a less than ideal experience. If you're on a laptop and that's your only option, sure, give it a shot, but for 4K 120 fps in particular you'll want the fastest and most stable connection possible.

GeForce Now Ultimate (RTX 4080) Availability

The GeForce Now RTX 4080 tier has officially rolled out, but it's not available at all GeForce Now installations. Nvidia maintains a page that shows where the RTX 4080 hardware is now available. At the time of writing, it's in the San Jose, Los Angeles, Dallas, and Frankfurt data centers; everything else is still on RTX 3080 or lower hardware.

Nvidia will continue upgrading its GeForce Now data centers, but there's no official timeline for how that will happen. We also don't know how many active GeForce Now subscribers there are, or how many RTX 4080 slots are available at the various data centers.

GeForce Now Ultimate Closing Thoughts

The cost of PC gaming hardware has shot up over the past few years, particularly on graphics cards. Where the RTX 3080 had a nominal launch price of $700, it mostly sold at $1,500 throughout 2021 and early 2022. Things have improved now, but the 3080 is on its way out, to be replaced by the RTX 4080 that now sits at the former RTX 3080 Ti price point: $1,200.

Performance is better than on previous generation GPUs, no doubt about that, but the value proposition is unquestionably getting worse. $1,200 is enough for a PlayStation 5, Xbox Series X and several games and accessories for each — never mind that you'd also need the rest of the PC to go along with the graphics card.

The idea of game streaming starts to look more reasonable when you consider those prices. At $100 for six months, that's six years of GeForce Now subscriptions for the price of an RTX 4080 — and during the next six years, we'll probably see three more GPU upgrades arrive (5080, 6080, 7080). You can skip all the hardware upgrade worries and get a pretty great cloud streaming experience instead, using whatever hardware you already have available.
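The math behind that comparison is simple enough to sketch. The prices are the ones quoted in this article ($1,200 for the RTX 4080, $99.99 for six months, $19.99 monthly); the sketch assumes subscription prices stay flat for the whole period, which is a big assumption, and the function name is just for illustration.

```python
GPU_PRICE = 1200.00      # RTX 4080 price cited above
SIX_MONTH_PLAN = 99.99   # GeForce Now Ultimate, six months
MONTHLY_PLAN = 19.99     # GeForce Now Ultimate, billed monthly


def years_of_service(budget: float, plan_price: float, plan_months: int) -> float:
    """Years of subscription a budget covers, counting whole billing periods only."""
    periods = budget // plan_price
    return periods * plan_months / 12


print(f"Six-month plan: {years_of_service(GPU_PRICE, SIX_MONTH_PLAN, 6):.1f} years")
print(f"Monthly plan: {years_of_service(GPU_PRICE, MONTHLY_PLAN, 1):.1f} years")
```

On the six-month plan the budget covers 12 billing periods, or six years; paying monthly, the same money lasts five.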

But the experience of GeForce Now isn't quite the same as local gaming. In some ways it's better (less heat and noise from your system). In other ways it's clearly worse: a large but still fundamentally limited selection of games, with higher latency, plus the need for a good internet connection (preferably one without data caps). At least the hardware this round seems to be pretty close in performance to an actual RTX 4080.

You can always give the free GeForce Now tier a shot, which currently provides "GTX 1080" class hardware; you can't enable RTX effects or DLSS. You're also limited to one-hour play sessions, whereas the paid tiers provide six-hour and eight-hour sessions. I've tried the free tier, and while it's not as nice as the RTX 4080 tier, it's certainly playable.

If that gets upgraded to RTX 2080 hardware, it would dramatically improve the overall experience, though Nvidia will likely keep ray tracing locked behind a paywall. And even if you're only able to get 1080p at 60 fps, that's still not bad for a game streaming service.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


DougMcC: Latency is a disaster on these services. I can't imagine playing with 40ms of bonus lag, who is buying this?

PlaneInTheSky:

"The idea of game streaming starts to look more reasonable when you consider those prices. You can skip all the hardware upgrade worries and get a pretty great cloud streaming experience instead."

The thing is, no one is doing this. When you are already short on cash, you are not going to start a subscription on top of it.

And there is no reason to go this route to begin with.

What people are doing is voting with their wallet, and simply ignoring the handful of games every year that require high-end PC.

This is why on the Steam Hardware survey, the 1650, 1050Ti and 1060 have been the most used GPU for years now. And they will be next year, and the year after that. PC sales are in the gutter.

The number of PC games actually requiring high-end hardware is actually very low. I went through last year's releases and I could only find 3 games requiring high-end hardware.

It just seems like games require high-end hardware because these games are shoved into your face by Nvidia, AMD, and the "AAA" studios making them have tons of money to get them constantly on the front page.

Here is a list of PC releases in January, only 1 game (Forspoken) requires high-end hardware. There are tons more indie games not on that list.

Instead of buying a subscription to a high-end PC in the cloud to play this 1 game, it is way easier to just ignore this game and cloud services altogether and enjoy games that have reasonable PC requirements instead.

January PC releases.

-One Piece Odyssey

-A Space for the Unbound

-Colossal Cave

-Persona 4 Golden

-Persona 3 Portable

-Forspoken

-Warlander

-Devolver Tumble Time

-OddBallers

-Shoulders of Giants

-Dead Space

-Age of Empires 2: Definitive Edition

-Season: A letter to the future

-Superfuse

magbarn: Thanks to Jensen for planning on neutering the Shield with the local version of this which is a much better experience.

AkRazor: The CPU of the GeForceNOW 4080 rig is something like an AMD Ryzen Threadripper PRO 5955WX (Zen 3). And the GPU is an Nvidia L40G Ada Lovelace. It can be checked in the Steam hardware info with the codenames.

iedynak:

DougMcC said: Latency is a disaster on these services. I can't imagine playing with 40ms of bonus lag, who is buying this?

Whaaat? I've been using GeForce Now since beta and the latency is negligible. Not only in TPP but in FPS like Fortnite.

AkRazor:

iedynak said: Whaaat? I've been using GeForce Now since beta and the latency is negligible. Not only in TPP but in FPS like Fortnite.

You're absolutely right. Latency is superb in GeForceNOW. The other guy doesn't know what he is talking about. Or maybe he has a really poor Internet connection.