GeForce Now With RTX 3080 Tested

It's not actually an RTX 3080

Nvidia just upgraded its GeForce Now subscription game streaming service with the RTX 3080 tier, which promises gamers the performance of a GeForce RTX 3080 in the cloud. Well, sort of. You see, it's actually an Nvidia A10G GPU in the cloud, which is both better and worse than an RTX 3080 from a performance and features standpoint (more on that below). The RTX 3080 is still our top pick for the best graphics cards, contingent upon actually finding one for close to the recommended price. The GeForce Now GPU also lives in the cloud, which inherently limits what you can do with it. This can be good and bad.

For example, there's no cryptocurrency mining using GeForce Now. Yay! Users are also allocated a GPU solely for their use, while the CPU gets shared between two users. Unfortunately, you're limited to games that are supported on the GeForce Now platform, and while there are many Steam, Epic, GOG, and Ubisoft games that will run on GeForce Now, there are plenty of missing titles as well: Borderlands 3 (and all the rest of the series), Dirt 5, Horizon Zero Dawn, Metro Exodus Enhanced Edition, and Red Dead Redemption 2 are just a few of the missing games. Also, anything that requires the Microsoft Store is out.

But it's not just about the games and hardware. One of the biggest barriers to PC gaming right now is the lack of graphics cards. Unless you want to pay eBay GPU prices, which still tend to be 50–100 percent higher than the official MSRPs, it's virtually impossible to buy a graphics card. That goes double for the RTX 3080. During the past couple of weeks, the average price on RTX 3080 cards purchased on eBay still sits at nearly $1,700, more than double the nominal price.

What if you could forget about the difficulty of buying a graphics card and simply rent the performance in the cloud? That's the theory behind the latest upgrades to GeForce Now. The RTX 3080 tier supports up to 2560x1440 resolution gaming, and even streams at 120 fps. Nvidia also claims improved latencies — supposedly better than playing locally on a latest generation Xbox Series X. The cost for this tier is $100 every six months.

That might seem steep, but considering an RTX 3080 might cost $1,500 or more, the same money buys seven and a half years of GeForce Now streaming. Or, you know, however long it takes for GPU prices and availability to return to normal. Theoretically, you could pay for the service for six months, or even two years, and then when the current shortages and extreme retail pricing fade away, you can upgrade your home PC.
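The break-even arithmetic is easy to reproduce. Here's a minimal sketch using the figures quoted above ($100 per six months, a $1,500 street price); the function name is purely illustrative.

```python
def years_of_streaming(gpu_price: float, fee_per_six_months: float) -> float:
    """Years of subscription that cost as much as buying the card outright."""
    yearly_fee = fee_per_six_months * 2
    return gpu_price / yearly_fee


# $1,500 card vs. $100 every six months
print(years_of_streaming(1500, 100))  # 7.5
```

Plugging in a different street price shows how quickly the comparison shifts as GPU pricing changes.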

It's not the worst idea we've ever heard, but how does the new RTX 3080 tier of GeForce Now actually perform? And how does it compare to running games locally on an RTX 3080? We set out to do some testing to see for ourselves. Let's quickly start with the basics.

GeForce Now Superpod Hardware

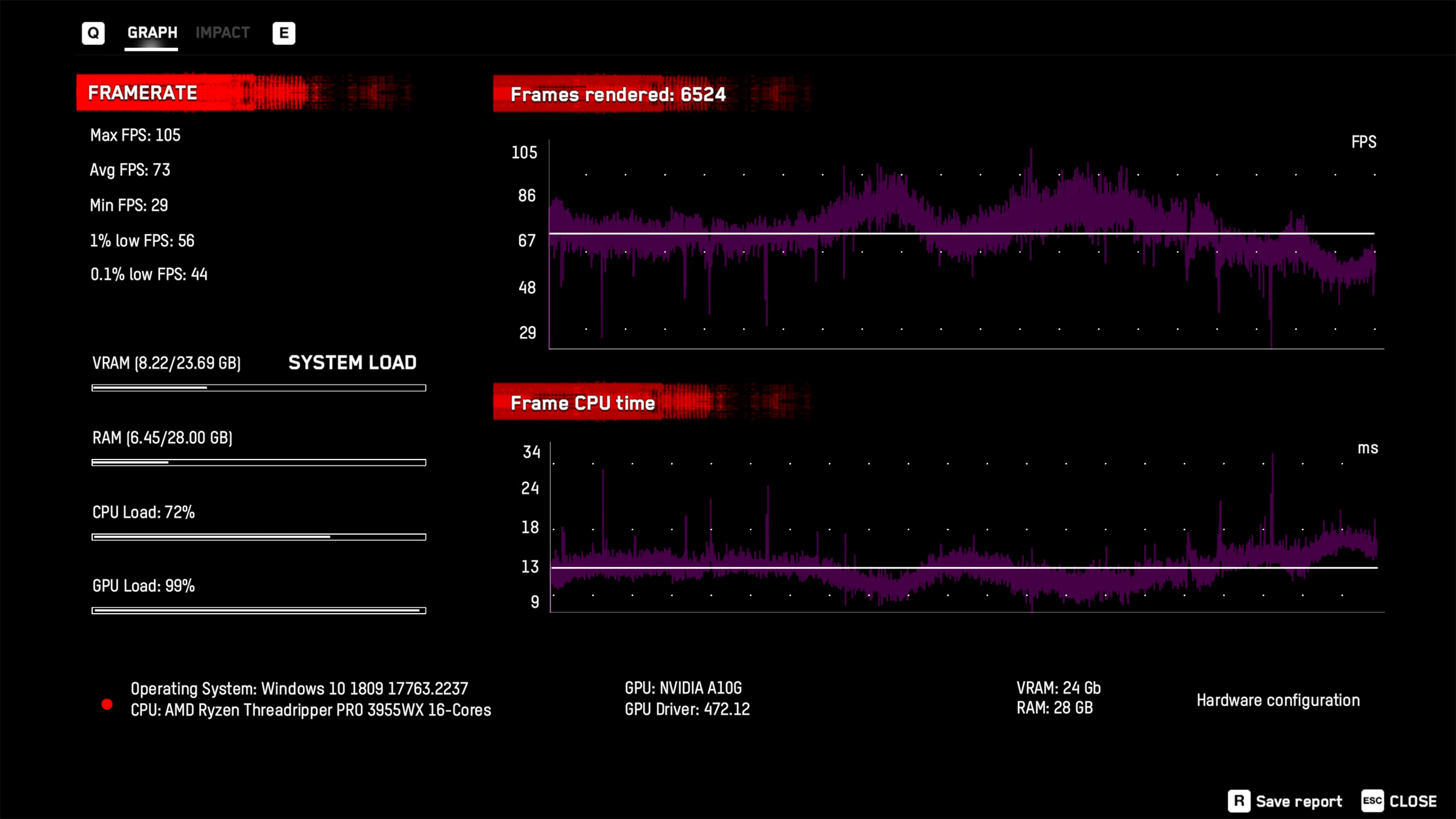

The new GeForce Now Superpods come packed with a lot of hardware. Nvidia didn't want to get into all the specifics (unfortunately for us, because we love that kind of detail), but we do know that each Superpod houses a bunch of rack-mounted servers equipped with Threadripper Pro 3955WX CPUs and Nvidia A10G graphics cards.

The Nvidia A10G isn't the same as an RTX 3080, and we assume it's basically the same core hardware as the datacenter Nvidia A10. It comes equipped with 24GB of GDDR6 memory — the same amount you'd get with an RTX 3090! Except, the VRAM runs at 12.5Gbps (probably to save power), giving you 600GBps of bandwidth. However, it has 9,216 CUDA cores where an RTX 3080 has 8,704 CUDA cores, so in theory it should be comparable to an RTX 3080: more memory, more compute, less memory bandwidth.
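For a rough sense of how those memory numbers fit together, here's a short sketch. The A10G figures come from the paragraph above; the RTX 3080 figures (320-bit bus, 19Gbps GDDR6X) are that card's published specs rather than something Nvidia told us about GeForce Now, and the function names are just for illustration.

```python
def implied_bus_width(total_gbs: float, pin_gbps: float) -> float:
    """Memory bus width (bits) implied by total bandwidth and per-pin speed."""
    return total_gbs * 8 / pin_gbps


def memory_bandwidth(width_bits: int, pin_gbps: float) -> float:
    """Total memory bandwidth in GB/s for a given bus width and per-pin speed."""
    return width_bits * pin_gbps / 8


# A10G: 600 GB/s at 12.5 Gbps per pin (figures from the article)
print(implied_bus_width(600.0, 12.5))  # 384.0

# RTX 3080 reference specs (assumption: published 320-bit, 19 Gbps figures)
rtx_3080_gbs = memory_bandwidth(320, 19.0)
print(rtx_3080_gbs)  # 760.0

# Relative compute and bandwidth, A10G vs. RTX 3080
print(round(9216 / 8704, 3))          # 1.059
print(round(600.0 / rtx_3080_gbs, 3))  # 0.789
```

In other words, about 6% more CUDA cores but roughly 21% less memory bandwidth, before accounting for clock speeds, which we don't know.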

The Threadripper Pro 3955WX is a Zen 2 processor with two 8-core compute chiplets. Nvidia allocates all of the cores and threads from one chiplet to a single user, which should improve overall performance since it keeps commonly accessed data local to that chiplet. Clock speeds are 3.9–4.3GHz on the 3955WX, but because it's the older Zen 2 architecture it's probably a bit slower for gaming purposes than many of AMD's and Intel's latest chips. Still, it shouldn't be a serious bottleneck for gaming at 1440p, especially when doing so from the cloud.

Besides the CPU and GPU, each RTX 3080 GeForce Now instance gets 28GB of DDR4-3200 memory and access to around 30TB of fast PCIe Gen4 SSD storage. When you put everything together, the total cost per GeForce Now instance has to run at least $2,000. But Nvidia doesn't expect people to use these instances 24/7, so even though users only pay $16.67 per month for unlimited streaming (limited to eight hour sessions at a time), Nvidia presumably allocates dozens of users per installed set of hardware.

We asked a bunch of other questions about the GeForce Now Superpods, but unfortunately Nvidia didn't want to provide answers or photos. These look similar to the A100 Superpods, but the individual servers are obviously quite different. Our back of the napkin math (based on Nvidia's statement that each Superpod houses 8,960 CPU cores and 11,477,760 CUDA cores, plus the above photo) is that there are 20 racks per Superpod, with each rack housing 28 servers. Each server would come equipped with a single Threadripper Pro 3955WX and two Nvidia A10G GPUs.

Except, that works out to 10,321,920 CUDA cores, so the A10G may actually look more like an RTX 3080 Ti with double the VRAM. 10,240 CUDA cores per A10G would give us 11,468,800 CUDA cores spread out over 1,120 GPUs, and the 'missing' 8,960 CUDA cores could be located in Nvidia Bluefield network devices. Or perhaps there's some other explanation, but that's all we've got for now. We do know that Nvidia says each Superpod supports "over 1,000 concurrent users," which jibes with most of the other numbers.
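Here's the napkin math written out as a quick sketch. The rack, server, and GPU counts are our guesses from above, not confirmed Nvidia figures; only the two totals come from Nvidia.

```python
# Our guessed layout (assumptions, not confirmed by Nvidia)
RACKS = 20
SERVERS_PER_RACK = 28
CORES_PER_CPU = 16       # two 8-core chiplets per CPU
GPUS_PER_SERVER = 2

# Totals stated by Nvidia
NVIDIA_CPU_CORES = 8_960
NVIDIA_CUDA_CORES = 11_477_760

servers = RACKS * SERVERS_PER_RACK       # 560
gpus = servers * GPUS_PER_SERVER         # 1120
cpu_cores = servers * CORES_PER_CPU      # 8960, matches Nvidia's CPU core count
print(servers, gpus, cpu_cores)

# Compare both candidate per-GPU core counts against Nvidia's CUDA total
for cores_per_gpu in (9_216, 10_240):
    total = gpus * cores_per_gpu
    print(cores_per_gpu, total, NVIDIA_CUDA_CORES - total)
# 9216 10321920 1155840
# 10240 11468800 8960
```

The CPU side lines up exactly, while the 9,216-core guess leaves more than a million CUDA cores unaccounted for. That's why the 10,240-core configuration looks like the better fit.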

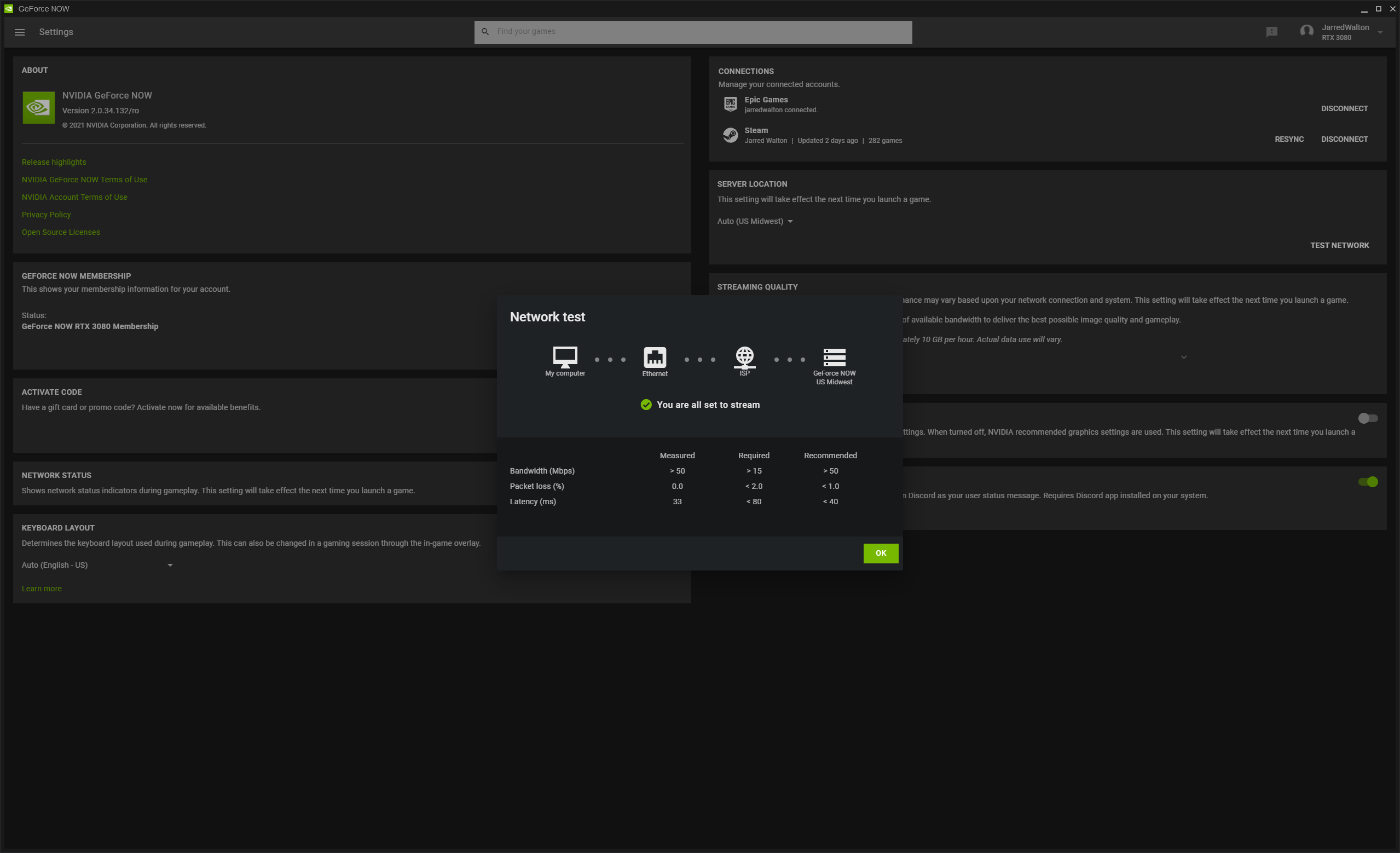

GeForce Now Bandwidth Requirements

Before we get into the comparisons, let's get this out of the way: GeForce Now, like any game streaming service, requires a decent internet connection. It's not too onerous, though, with a 30Mbps download speed as the baseline, and 50Mbps as the maximum configurable stream quality. I did some testing and found that using the default Balanced connection settings (30Mbps), actual data usage was typically in the 20–30 Mbps range, averaging around 25Mbps. That works out to about 11GB of data per hour. Using the Custom option and selecting the maximum quality (50Mbps, 2560x1440, 120 fps, and no automatic network speed adjustments), data use wasn't actually that much higher: about 30Mbps, or 13.5 GB per hour.

How you feel about that amount of data usage will depend very much on your internet provider. I used to have Comcast Xfinity, which cost me around $110 per month for the X-Fi Complete package with unlimited data, or $100 a month with a 1.2TB data cap (technically 1228GB), and charged $10 for each additional 50GB up to a maximum of $100 per month. The data cap sucked, mostly because of multiple game downloads and updates every month that could easily consume hundreds of GB of data, and my household routinely came close to that limit. Paying an extra $10 for unlimited data was an easy fix, though you'd need to check whether your provider offers unlimited data and how much it charges.

With a data cap of 1TB, which unfortunately still seems relatively common these days, that's enough for about 75 hours of GeForce Now gaming each month — assuming no one does anything else with the internet. It's basically equivalent to the amount of data used by streaming 4K movies and television. But seriously, if you have a data cap and play games a lot, or stream TV and play games, forget about game streaming services or look into upgrading to an unlimited plan first. (For the record, I moved and now have faster Internet via TDS for about $97 a month, with no data cap. Hallelujah!)
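If you want to run the numbers for your own plan, the conversion from stream bitrate to data use is straightforward. This is a small sketch using decimal units (1GB = 1,000MB) and the measured rates from my testing; the helper names are illustrative.

```python
def gb_per_hour(mbps: float) -> float:
    """Convert a stream bitrate in megabits/s to gigabytes per hour."""
    return mbps * 3600 / 8 / 1000


def hours_under_cap(cap_gb: float, mbps: float) -> float:
    """Hours of streaming that fit in a monthly data cap."""
    return cap_gb / gb_per_hour(mbps)


print(gb_per_hour(25))                    # 11.25
print(gb_per_hour(30))                    # 13.5
print(round(hours_under_cap(1000, 30)))   # 74
print(round(hours_under_cap(1000, 25)))   # 89
```

At the roughly 30Mbps I measured with maximum quality, a 1TB cap lands close to the 75 hours mentioned above, and the Balanced setting stretches that to nearly 90 hours.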

If you think 30 or 50 Mbps seems like a lot, it's good to put things into perspective. The source data for 2560x1440 at 120 fps would require 14.16 Gbps without compression. Yeah, we're not getting that level of bandwidth into our homes any time soon. We're basically looking at about a 300:1 compression ratio, using lossy video compression algorithms because that's the only way to make this work.
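The uncompressed figure can be reproduced with a few lines. Note the assumption: 32 bits per pixel is what yields 14.16 Gbps, and a 24-bit format would come out lower.

```python
def raw_gbps(width: int, height: int, fps: int, bits_per_pixel: int = 32) -> float:
    """Uncompressed video bitrate in gigabits per second."""
    return width * height * fps * bits_per_pixel / 1e9


raw = raw_gbps(2560, 1440, 120)
print(round(raw, 2))            # 14.16

# Compression ratio against the stream bitrates (Mbps)
print(round(raw * 1000 / 50))   # 283
print(round(raw * 1000 / 30))   # 472
```

So "about 300:1" corresponds to the 50Mbps maximum setting, and the ratio is even higher at the roughly 30Mbps the stream actually used in testing.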

Wired or Wireless Networking?

The next thing you need to consider is whether you'll be using wired or wireless networking — or possibly both. Nvidia recommends wired connections, which makes sense. Wireless connections are far less predictable. Despite having a strong signal (>400Mbps down and 20Mbps up), when I tried GeForce Now on a laptop I ended up with a lot of intermittent lag. Playing off a wired connection fixed the problem.

Your particular network setup and devices will play a role, however. Maybe my router isn't the best, or perhaps the wireless adapter in my laptop was to blame. Or maybe there was some other interference causing issues. If you can use a wired gigabit (or faster) Ethernet connection, that will undoubtedly work best. If not, try to stay close to your router and perhaps downgrade the resolution and framerate to 1080p at 60 fps.

GeForce Now works on Android smartphones and tablets, Chromebooks, MacBooks, and of course Windows. Some people claim to have gotten it to work on Linux as well, but that's not officially supported — and neither are iOS devices. Some of these options often lack wired connections, in which case you'd again be better off with the $50 per six month tier of GeForce Now.

GeForce Now RTX 3080 Availability

The GeForce Now RTX 3080 tier has officially rolled out, but it's not available at all GeForce Now installations. Specifically, you'll want to check this page to see if you have a reasonably close node that will work. I'm in Colorado (US Mountain region), but the US Midwest region provided a good result while the US West region basically failed the latency test. The GeForce Now client software does check for optimal region selection automatically, and you can start with the free tier of service to see what it selects.

Nvidia will continue upgrading its GeForce Now data centers with the new Superpods, but there's no indication of how quickly that will happen. Note also that, like the original GeForce Now Founders Edition, the RTX 3080 tier has a limited number of slots available. Presumably, more slots will be added over time, but Nvidia doesn't want too many people signing up for something when it doesn't have the infrastructure in place to support those users. Nvidia wouldn't tell us the ratio of users to hardware that it's using.

Bottom line: If you want the benefits of the RTX 3080 tier, you'll probably want to jump on it quickly and subscribe.

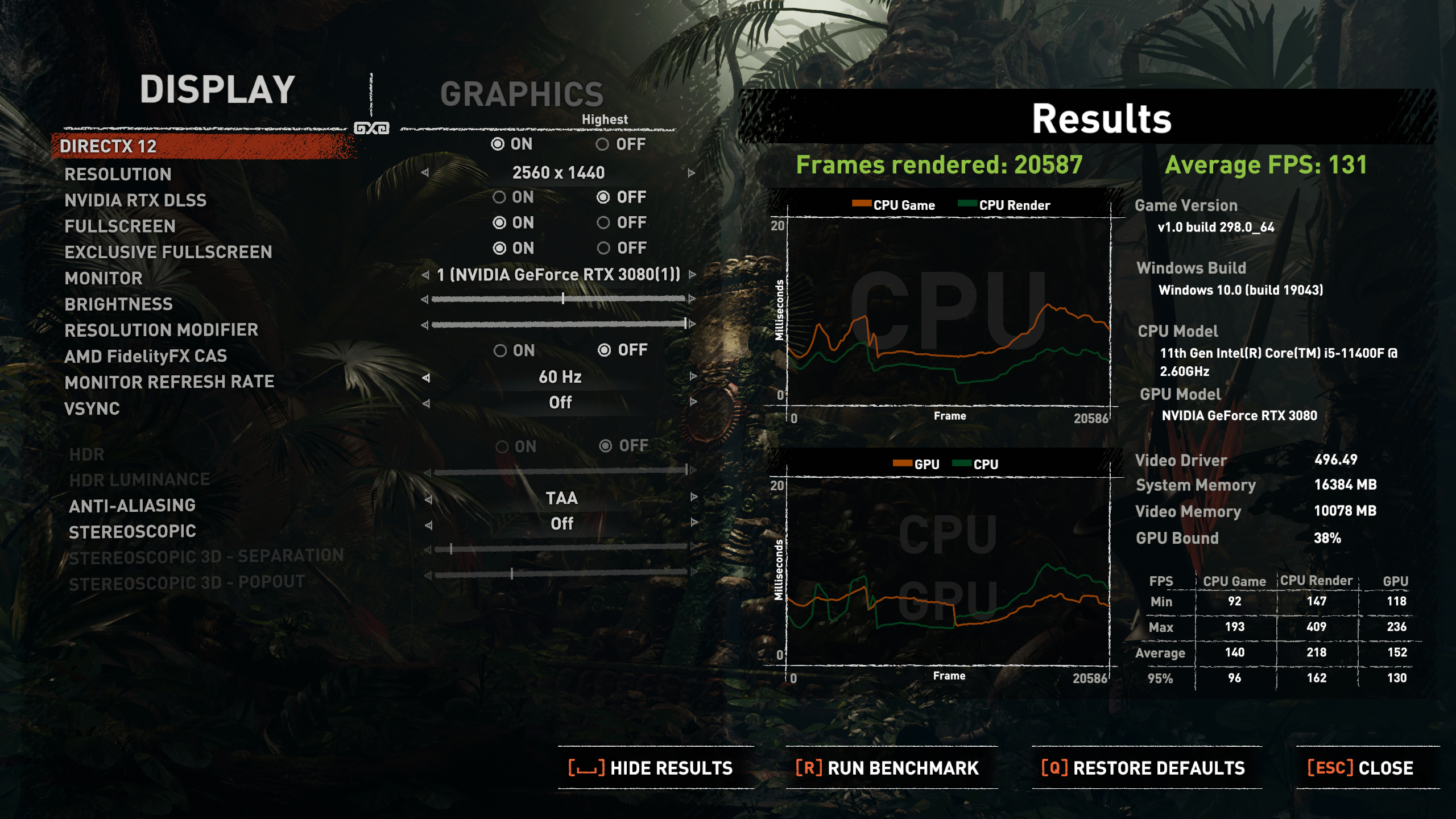

GeForce Now Test Setup

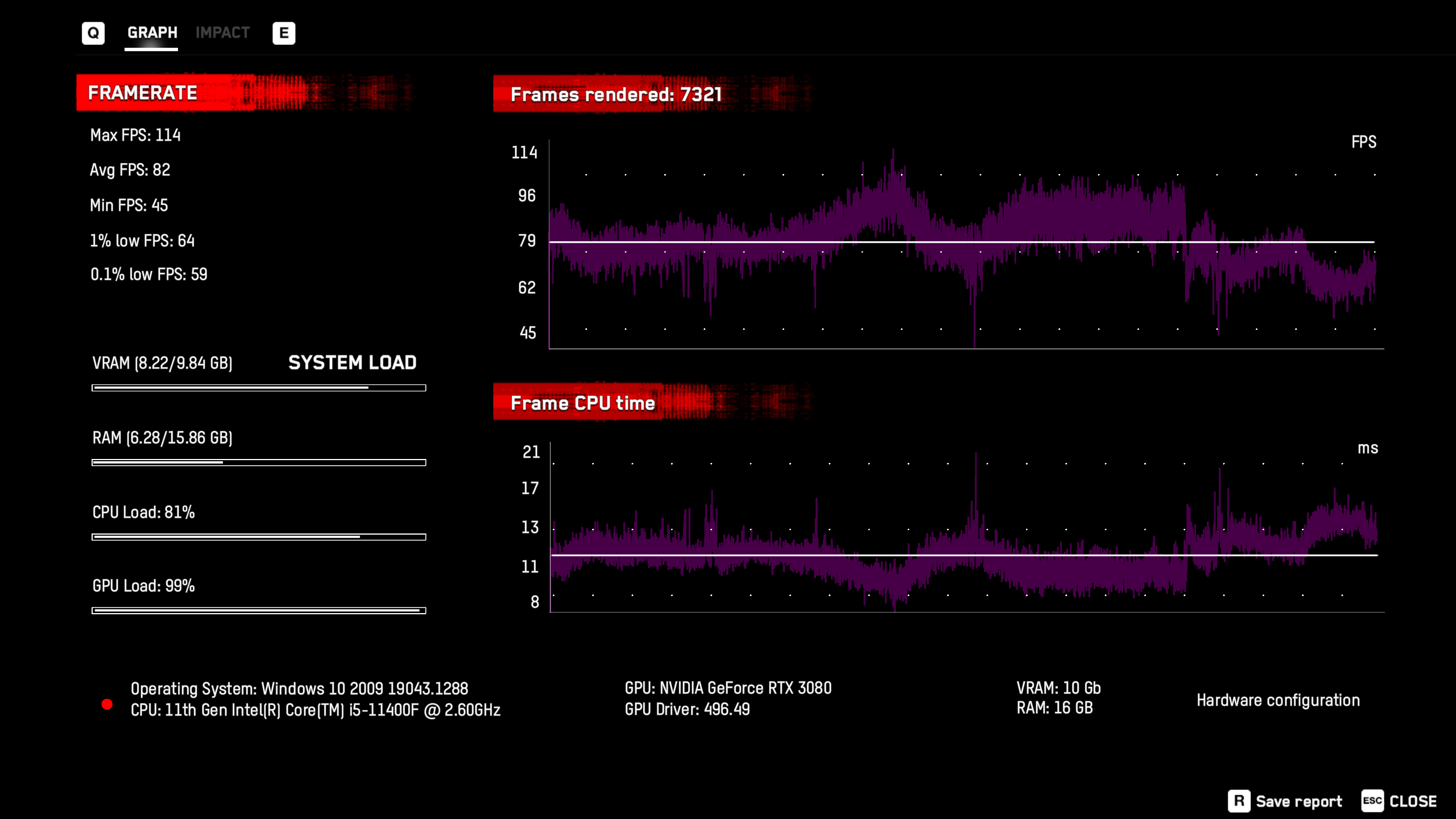

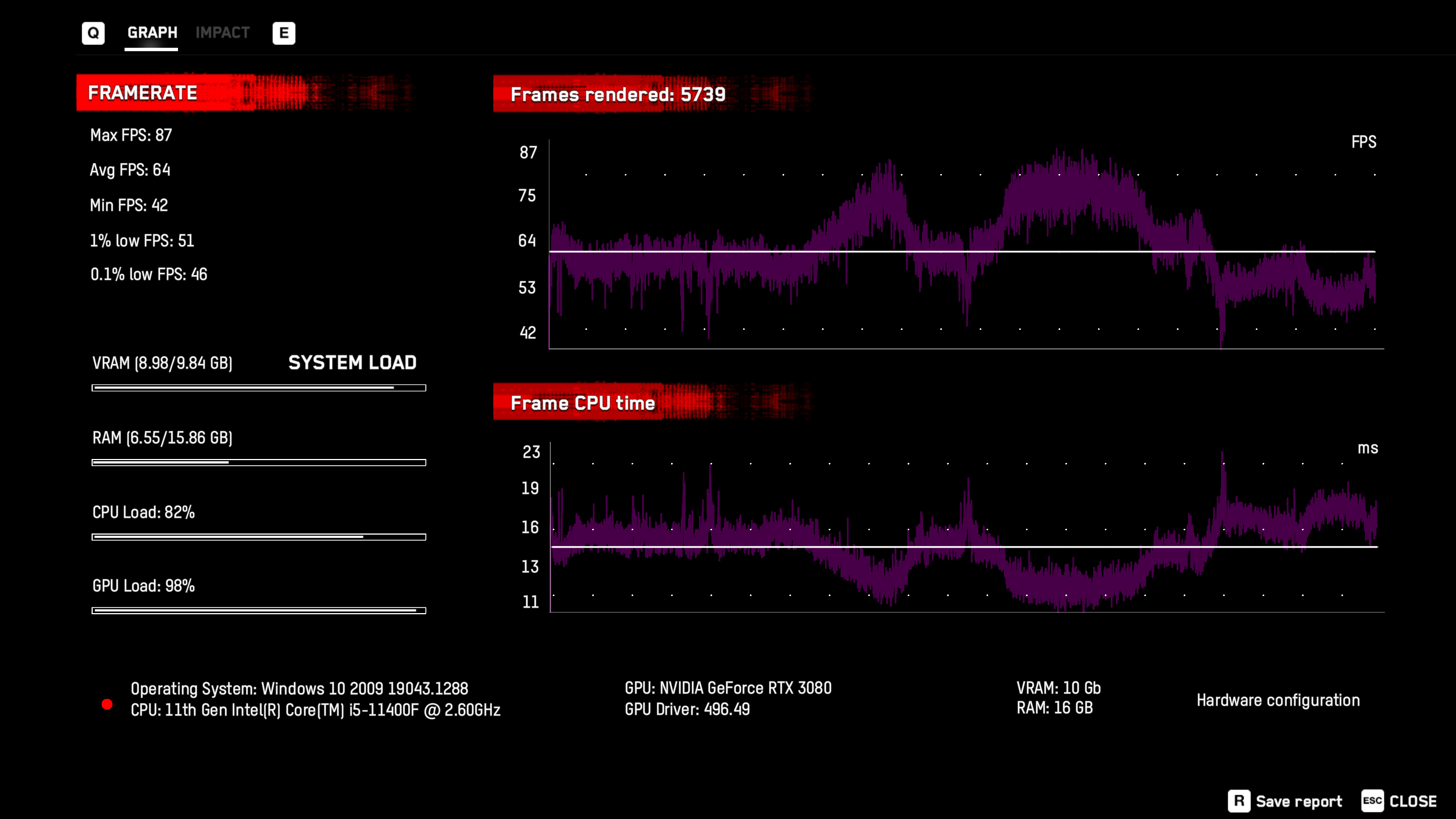

For these tests, I've used a PC equipped with a Core i5-11400F CPU, 16GB of DDR4-3200 memory, and an RTX 3080 Founders Edition for the local GPU testing. It also has SSD storage for the games, and it's connected to an Acer Predator X27 monitor (for 144Hz support). I also tested on an Ice Lake i7-1065G7 laptop with a 4K display (set to 1440p) for wireless testing, but that wasn't a pleasant experience.

My main goal here was to compare actual performance using in-game benchmarks (you can't capture frametimes for games running on GeForce Now), and also to look at image quality. I also played some games running off the service, which you can read about in the experiential gaming section below.

Note that GeForce Now RTX 3080 tier also supports 4K and 60 fps streaming, but only if you have a Shield TV. I didn't test this, and in general I'd prefer 1440p and 120 fps just because that should improve both latency and performance — 4K gaming tends to be a bit too demanding in a lot of games, even with an RTX 3080 equivalent. Nvidia hasn't enabled 4K support on PCs due to the wide disparity in video decoding hardware, but don't be surprised if that shows up somewhere down the road (with a minimum requirement of a 6th Gen Intel CPU or better).

Due to the limited selection of games available on GeForce Now (as I noted above, Borderlands 3, Dirt 5, Horizon Zero Dawn, and Red Dead Redemption 2 are all unavailable, which is sad as they all have useful built-in benchmarks), I've tested with Assassin's Creed Valhalla, Far Cry 6, Shadow of the Tomb Raider, and Watch Dogs Legion. Three of those games come from Ubisoft and ran off Ubisoft Connect, while Shadow of the Tomb Raider used Steam.

Watch Dogs Legion and Shadow of the Tomb Raider both support ray tracing effects as well, so we tested that. Unfortunately, while Far Cry 6 supports ray tracing, it uses DirectX 12 Ultimate, and that's not currently supported on Windows Server 2016. Nvidia says it's working on upgrading to Windows Server 2022, which will allow DX12 Ultimate games to work with ray tracing enabled. We manually configured GeForce Now for 2560x1440 and 120 fps, set Windows to 2560x1440 and 144Hz with G-Sync enabled, and proceeded to run some tests.

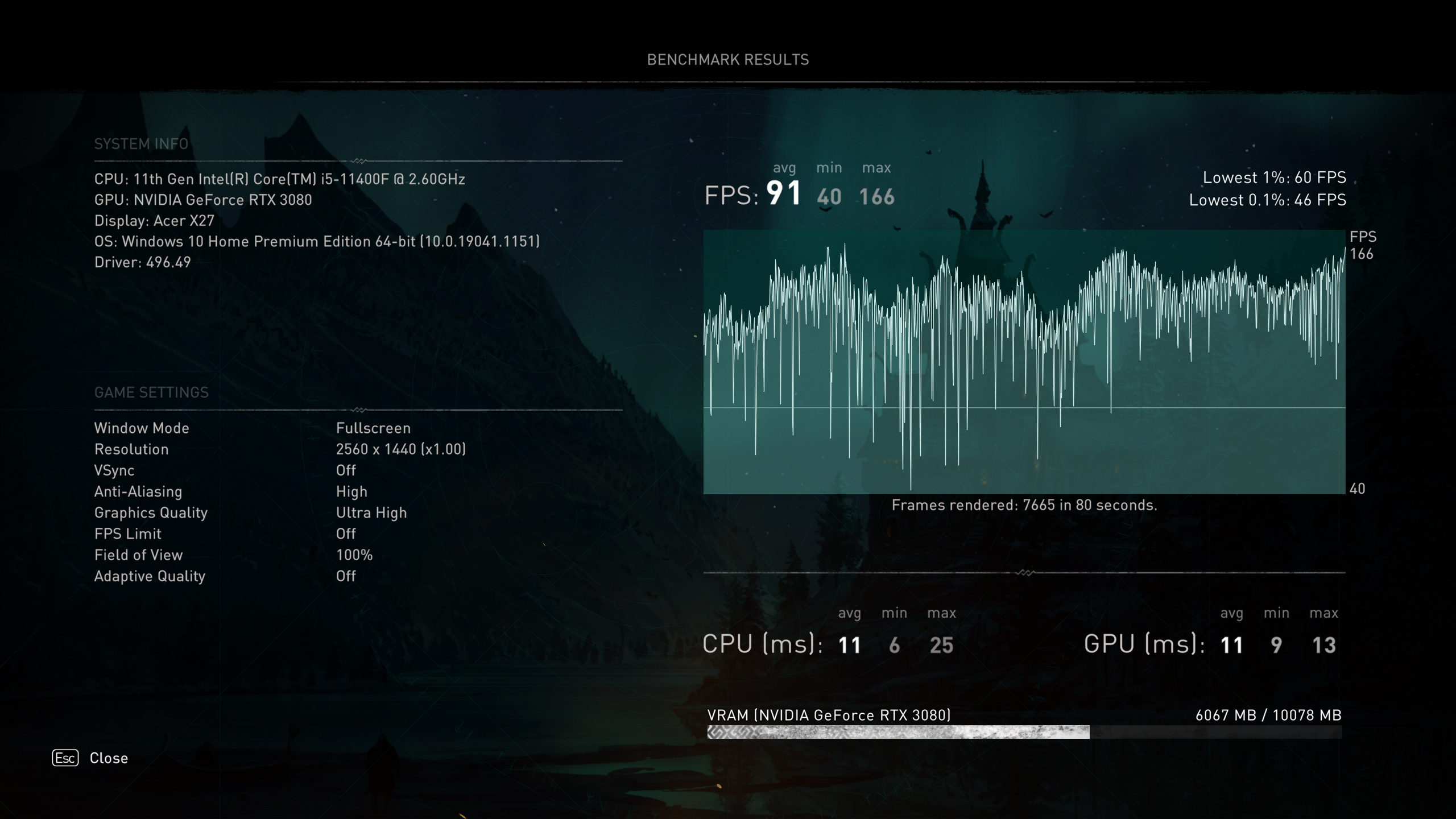

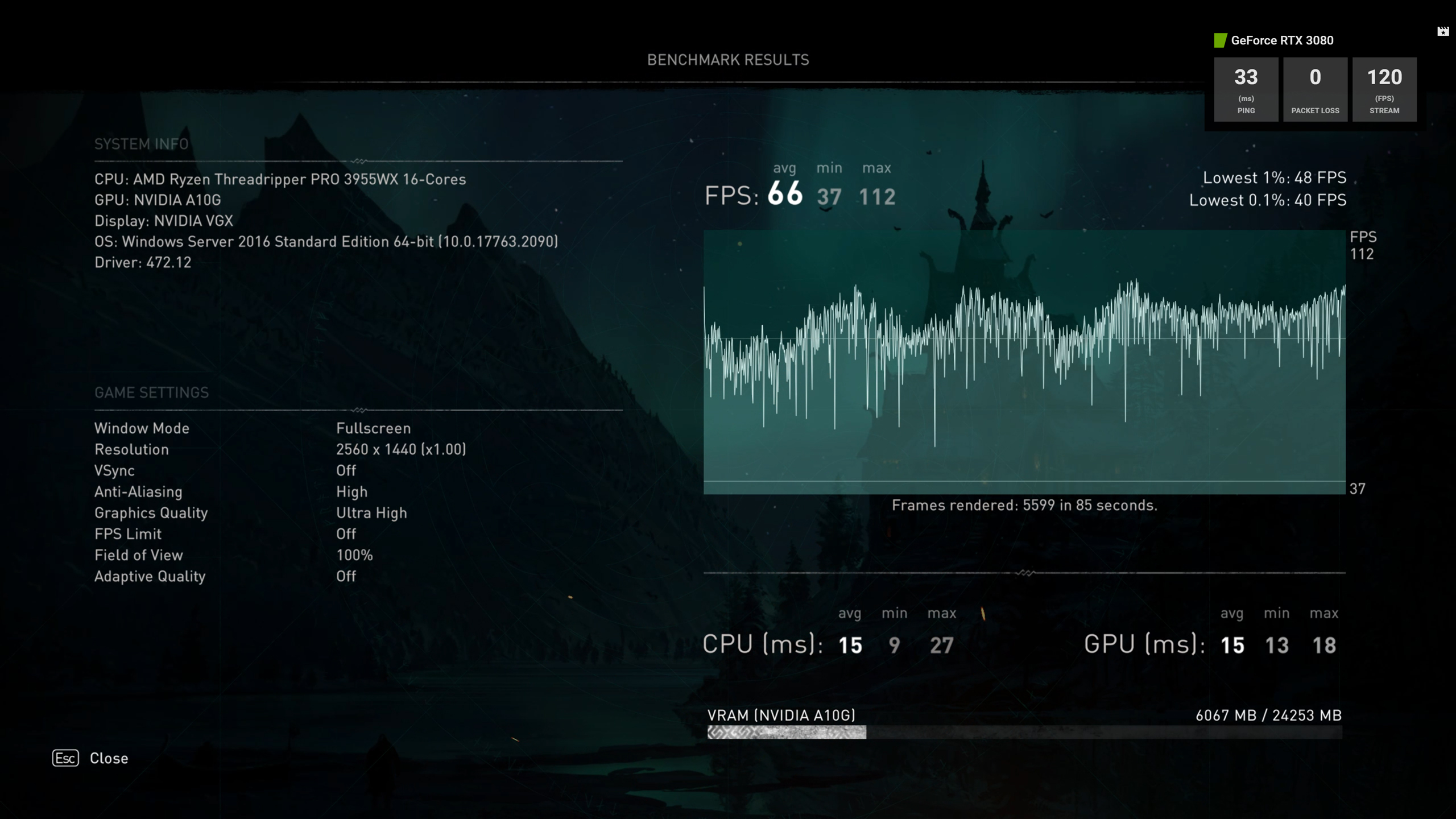

Assassin's Creed Valhalla

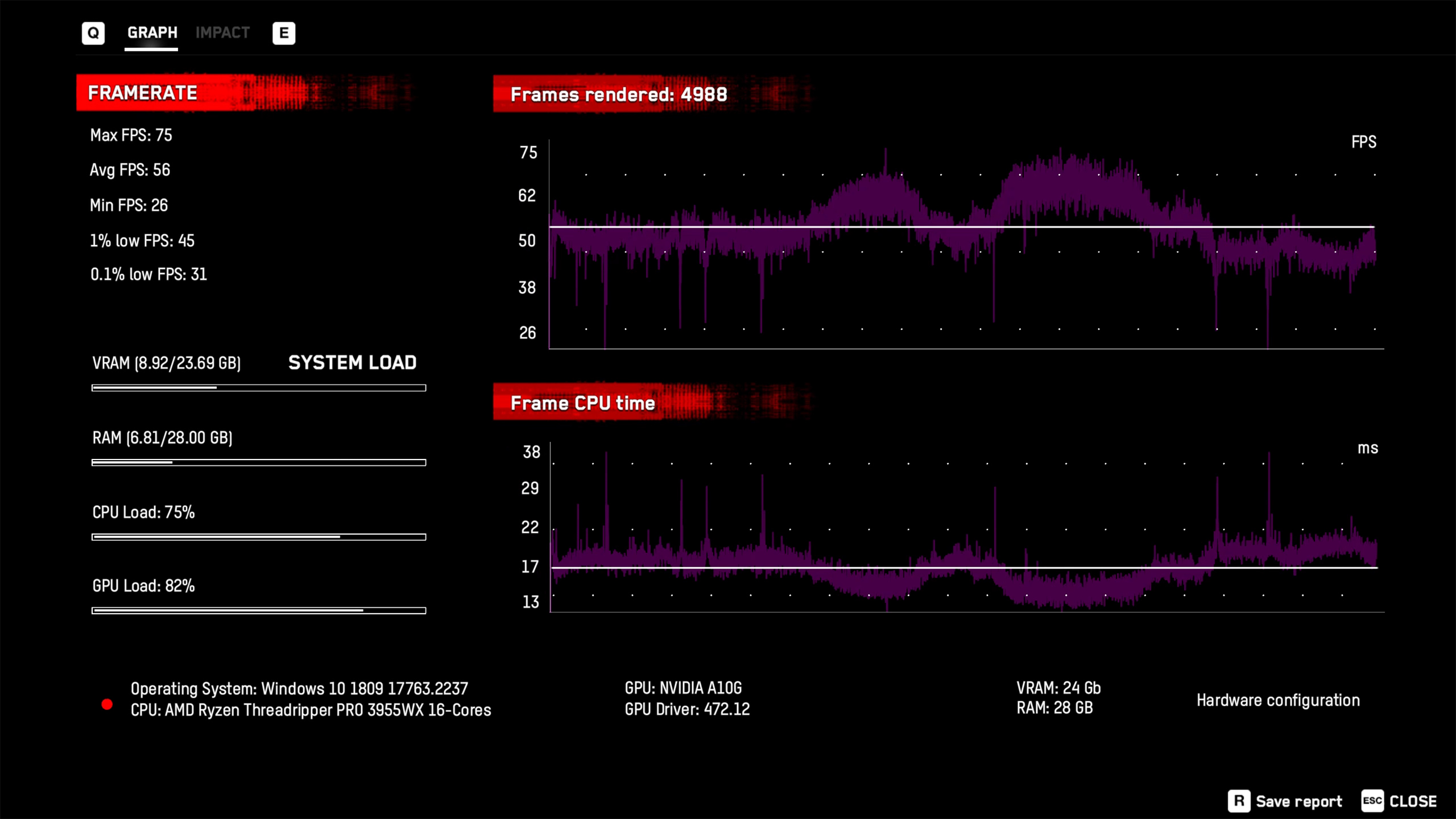

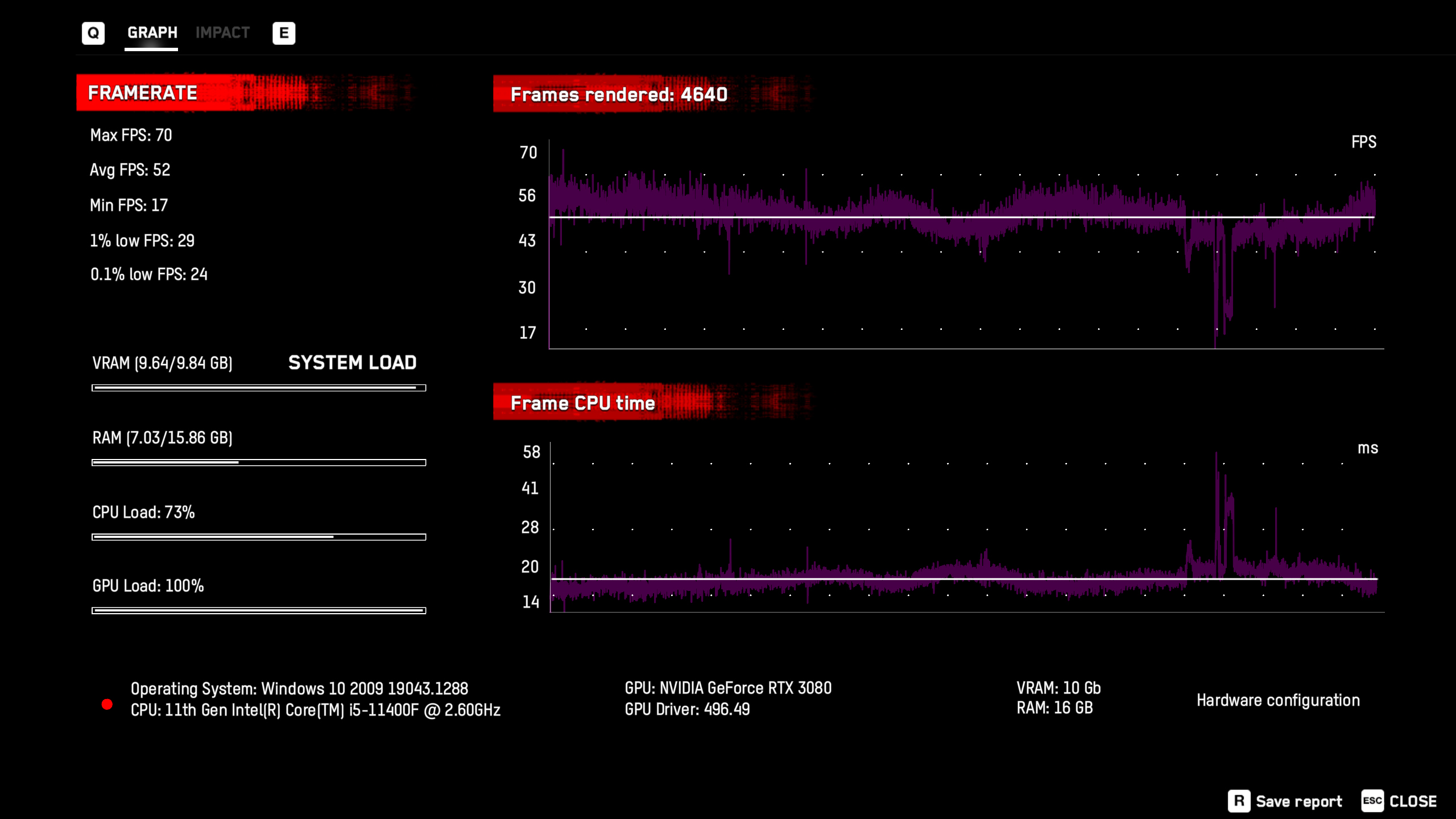

RTX 3080 local

GeForce Now streaming

In terms of performance, Assassin's Creed Valhalla establishes a pattern we'll see repeated in the other games. GeForce Now averaged 66 fps at 1440p ultra, while a local RTX 3080 delivered 91 fps, which is 38% faster. That's actually the worst result for GeForce Now, relative to the RTX 3080, that we'll see in the games we tested. We suspect it might be a CPU bottleneck, as Valhalla can be pretty demanding in that area, but it may also be due to VRAM bandwidth or some other factors.

You'll want to look at the full resolution images for the image quality comparisons, as the resized images are highly compressed and lose quite a bit of detail. Ignoring the slightly different colors and different camera position, there's clearly a loss of crispness with the GeForce Now screen captures. The text and line chart also show the loss of detail, and it basically looks like a decent upscaling filter was used on a 1080p source.

Perhaps a more apt comparison would be with something like FSR and DLSS. We recently did some in-depth comparisons of those two upscaling solutions, and at higher settings there's a lot of fuzziness that you start to notice when playing games. It's not terrible, and neither is GeForce Now, but don't expect to end up with sharp images like you'd get without compressing and streaming the frames over the internet.

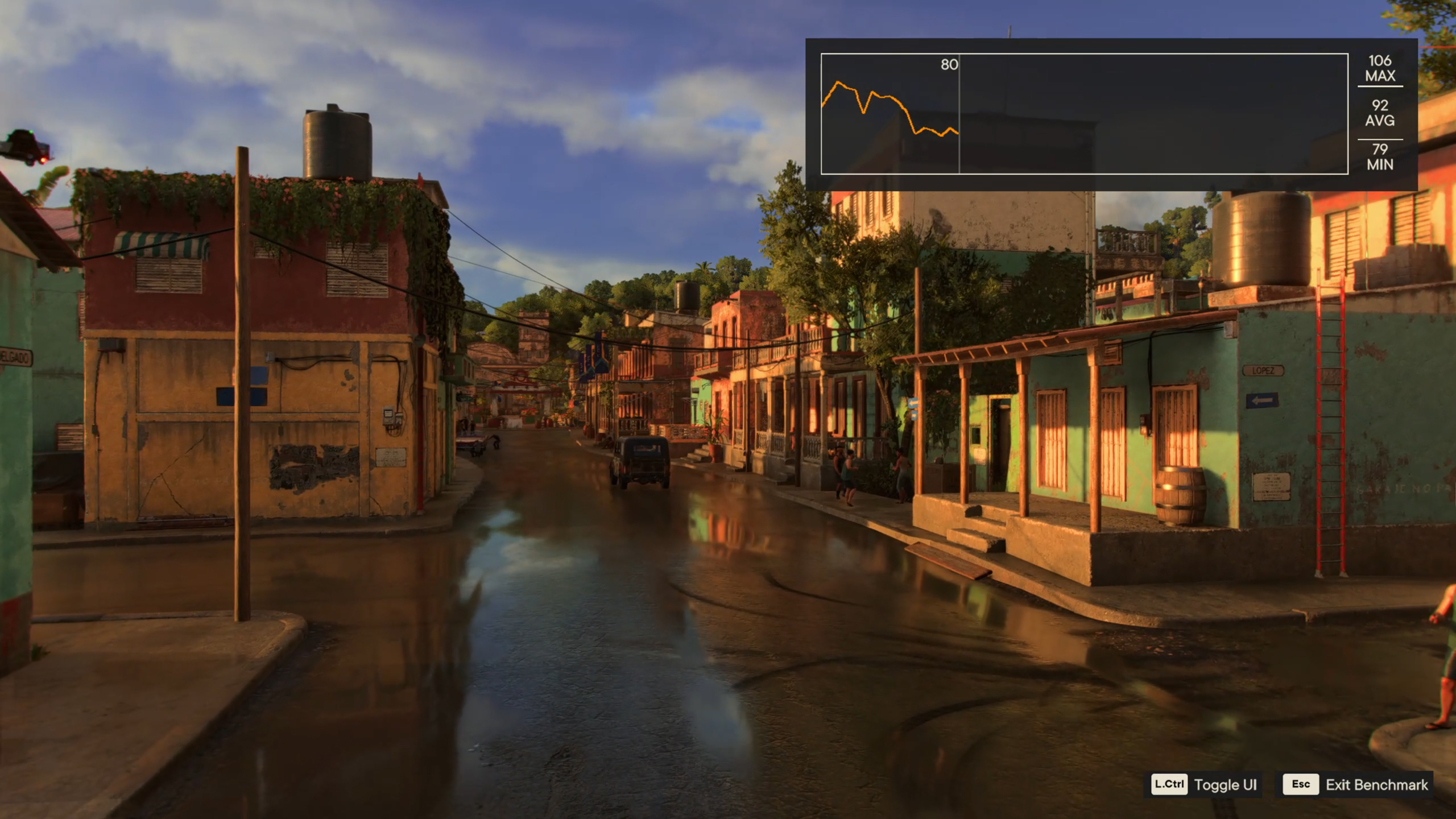

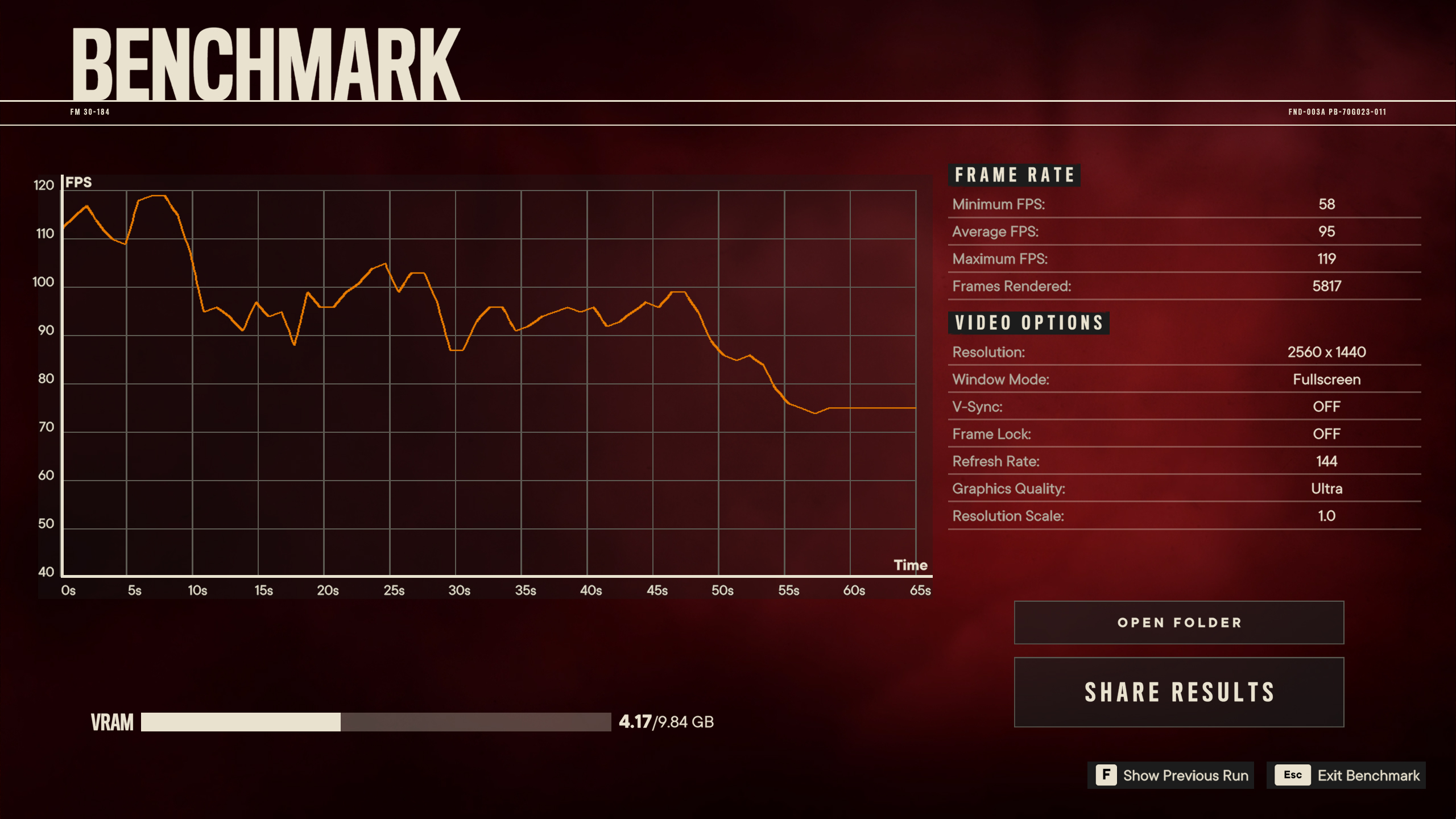

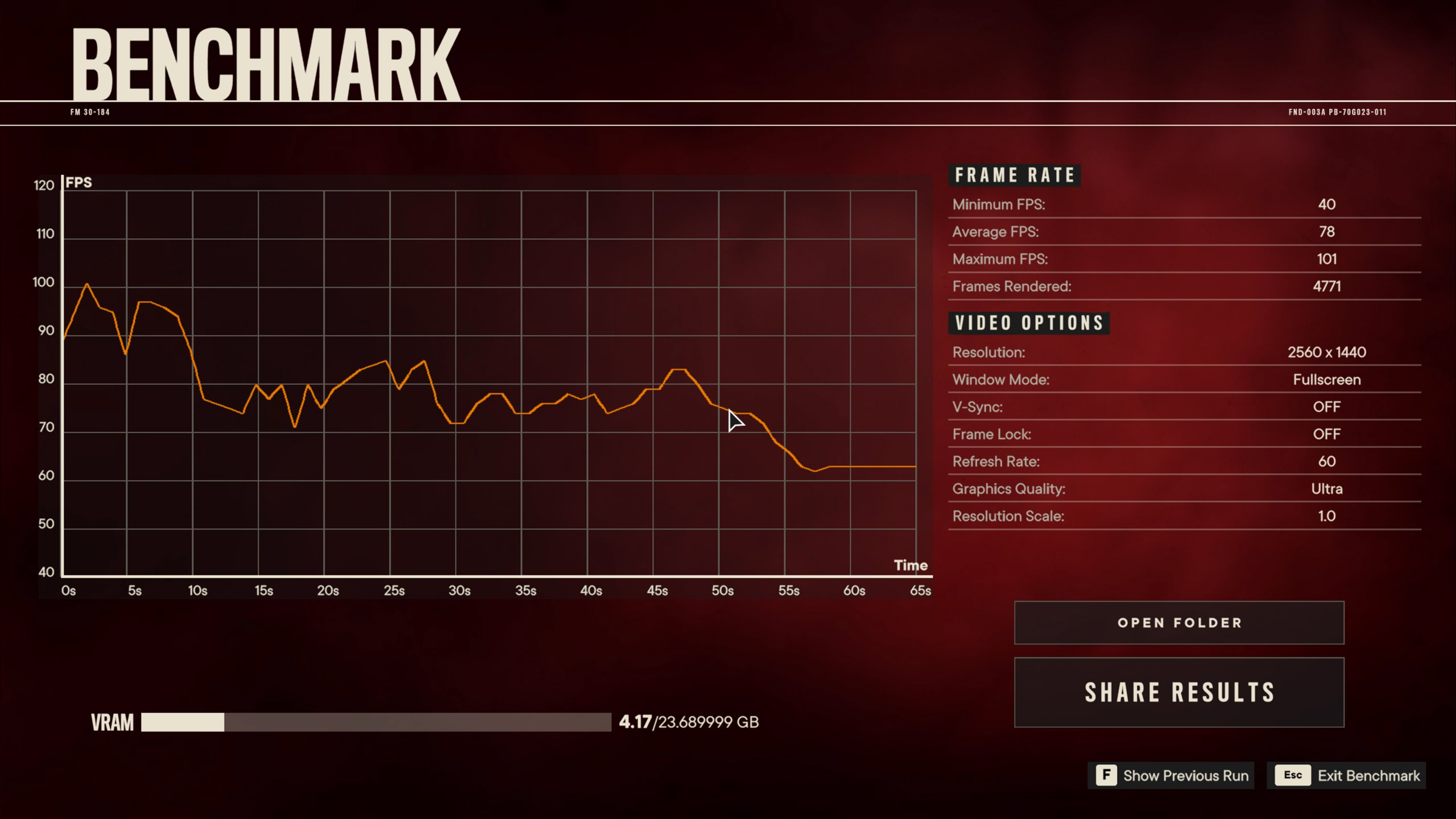

Far Cry 6

RTX 3080 local

GeForce Now streaming

Next up is Far Cry 6, which just came out earlier this month, and we ran a bunch of Far Cry 6 benchmarks. The GeForce Now version doesn't allow the use of ray tracing or HD textures, for now, so we just used the ultra preset. Performance this time was quite a bit closer, with the RTX 3080 achieving 95 fps locally while GeForce Now ran at 78 fps. That still makes the local card 22% faster, and based on our GPU benchmarks hierarchy, we'd say the RTX 3080 tier in GeForce Now performs more like an RTX 3070 than a 3080 (or maybe RTX 3070 Ti in some cases). Except you have triple the VRAM amount.

The colors and saturation of the GeForce Now screenshot are again different from the locally captured frame, which is a bit interesting. Most likely some of that comes from the video stream compression, though it could just be variations in the lighting for a particular benchmark run. We don't see these differences in the other two games, though.

As with Valhalla, the streamed version of Far Cry 6 looks a bit fuzzy around the edges. There's a loss of detail on the street, sidewalk, and walls, and you can really see the blurriness in the leaves on the trees as well as in the balcony railing (roughly in the center of the screenshot). This is all thanks to the heavy video stream compression that's required to send the data over the internet. The fact that all of this happens with relatively minimal latency (more on that below) is quite impressive, even if the image quality ultimately suffers.

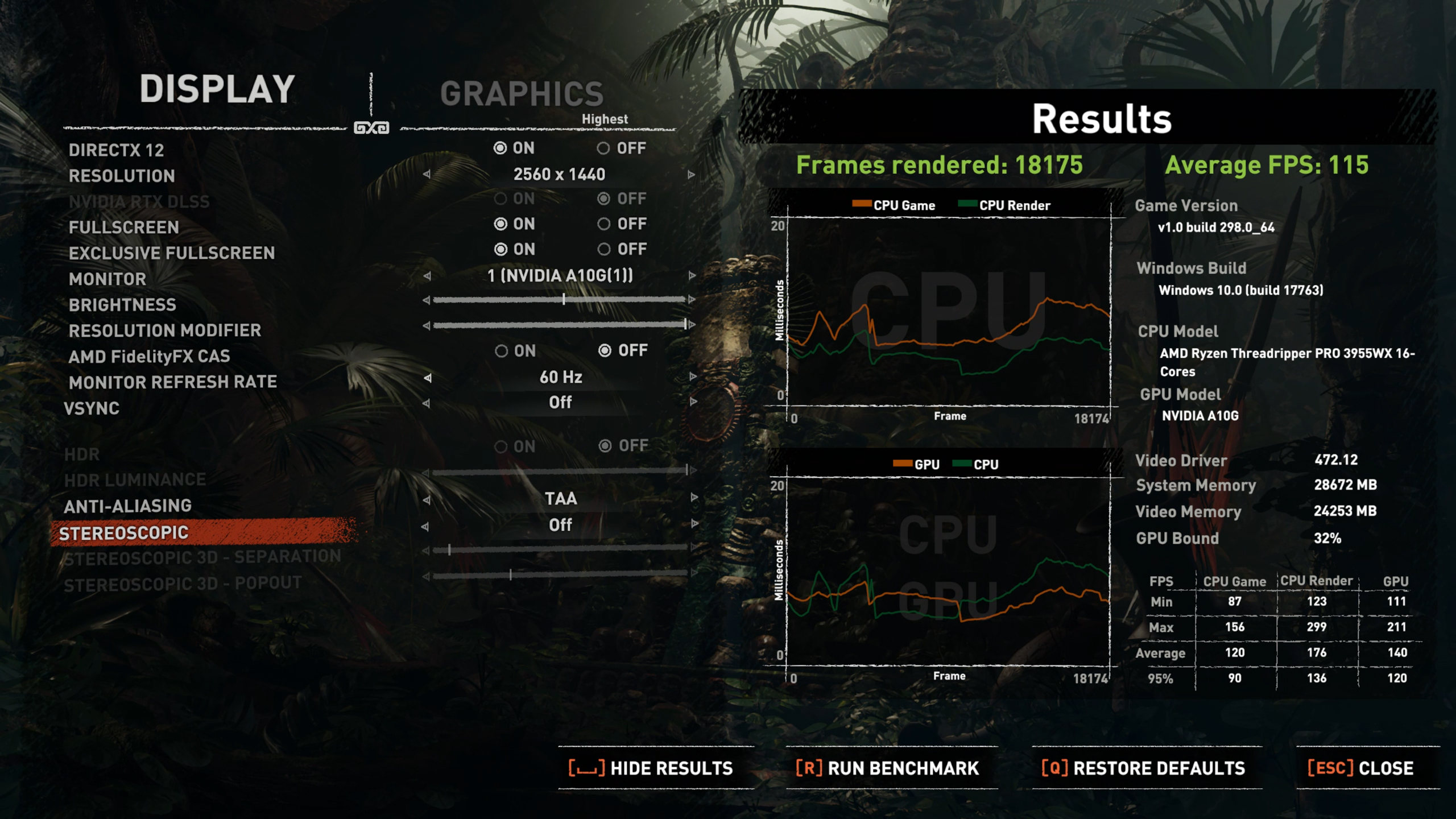

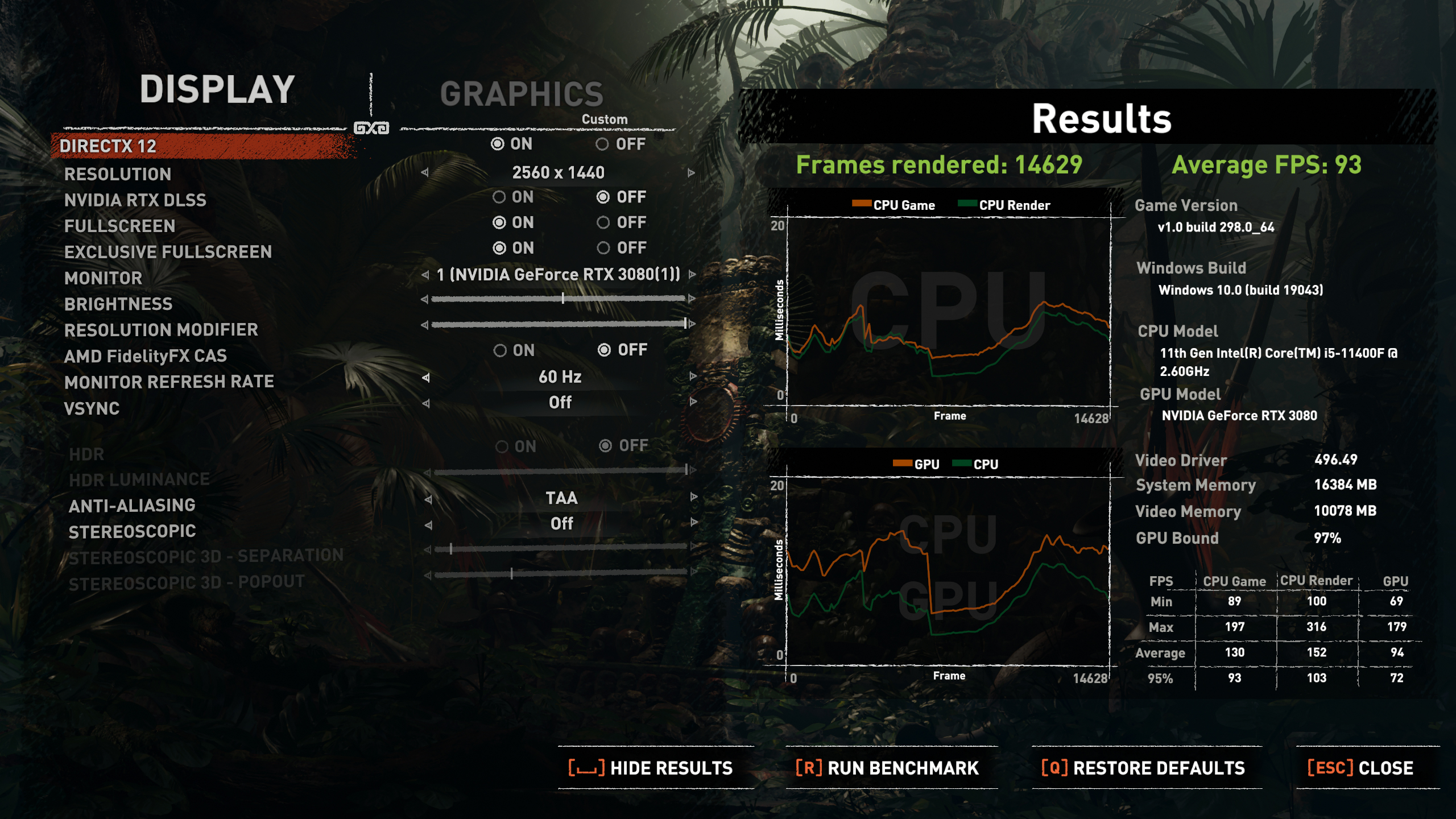

Shadow of the Tomb Raider

RTX 3080 local

GeForce Now streaming

RTX 3080 ray tracing local

GeForce Now ray tracing streaming

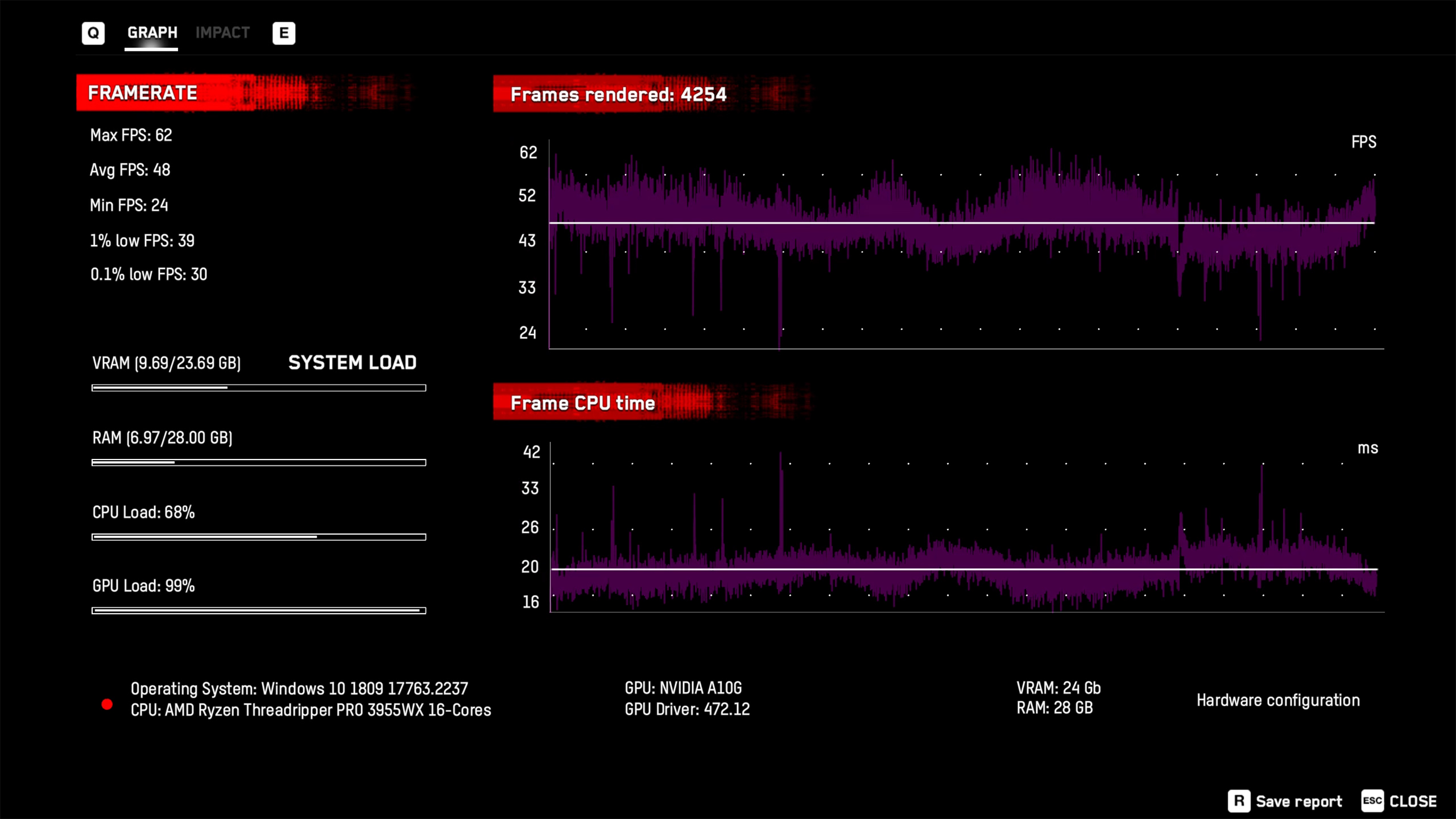

Shadow of the Tomb Raider has been around for about three years now. We've included both normal and ray traced results in our testing, though you can see how little the ray traced shadows actually matter. Basically, the shadows get a bit fuzzier for objects that are further away from the light source. Is it better? Sure, probably. Is it worth a 30% hit to performance? Nope.

The gap was smaller here than in the first two games, as the local RTX 3080 was only 16% faster than the GFN A10G. That's without ray tracing enabled, and turning on RT closes the performance gap to just 10%. We're also getting close to the 120 fps limit of GeForce Now, though we disabled vsync so it should be able to go higher than that.

In terms of image quality, the fuzziness is still present, but it's not quite as visible as before. We also don't see any color shifts this time. Also note that while these still images tend to emphasize any loss in visual fidelity, when you're running around in a game the fuzziness is far less noticeable. It's still there, and some people will inevitably get irritated by it, but it's no worse than FSR balanced mode I'd say.

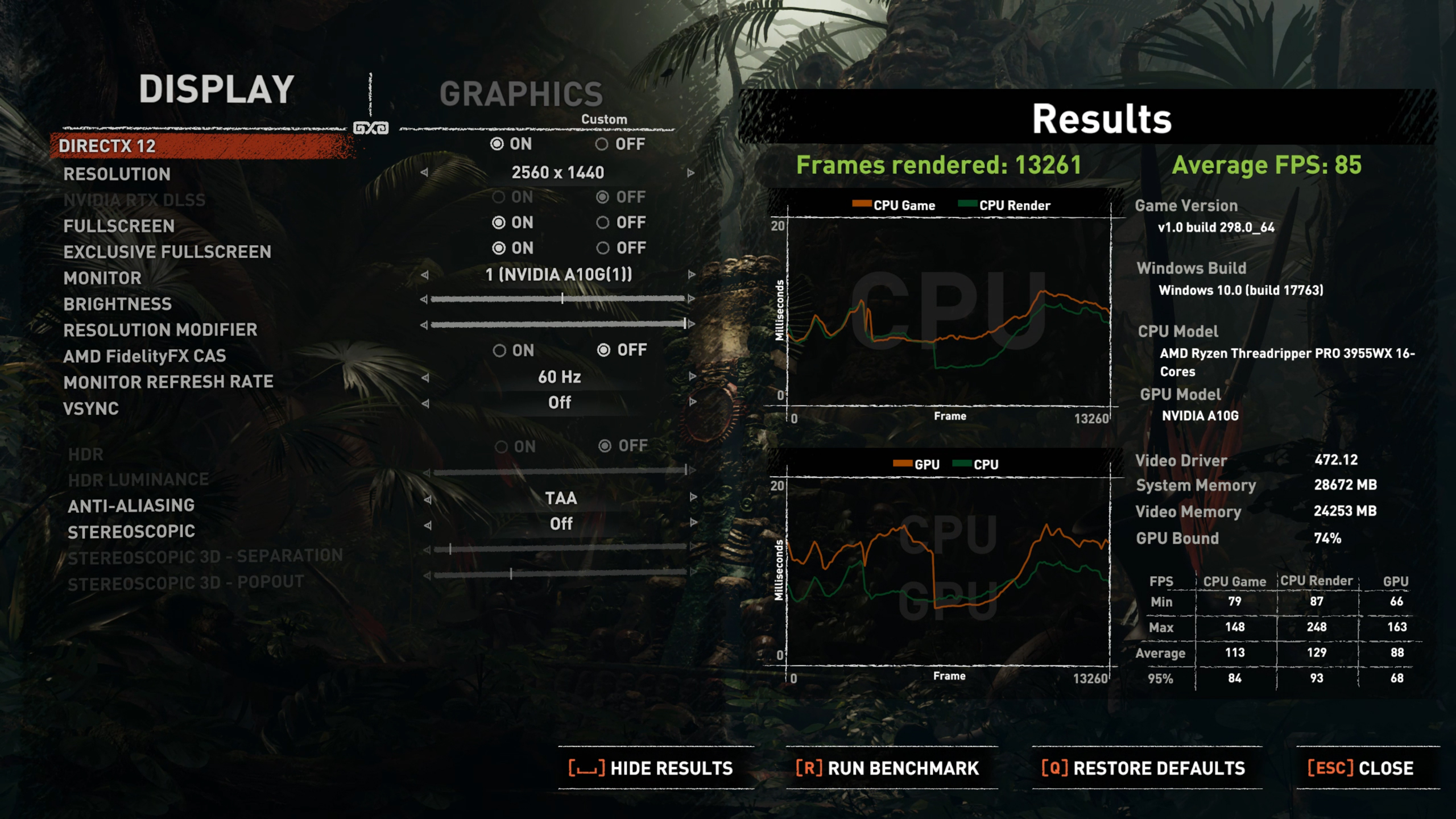

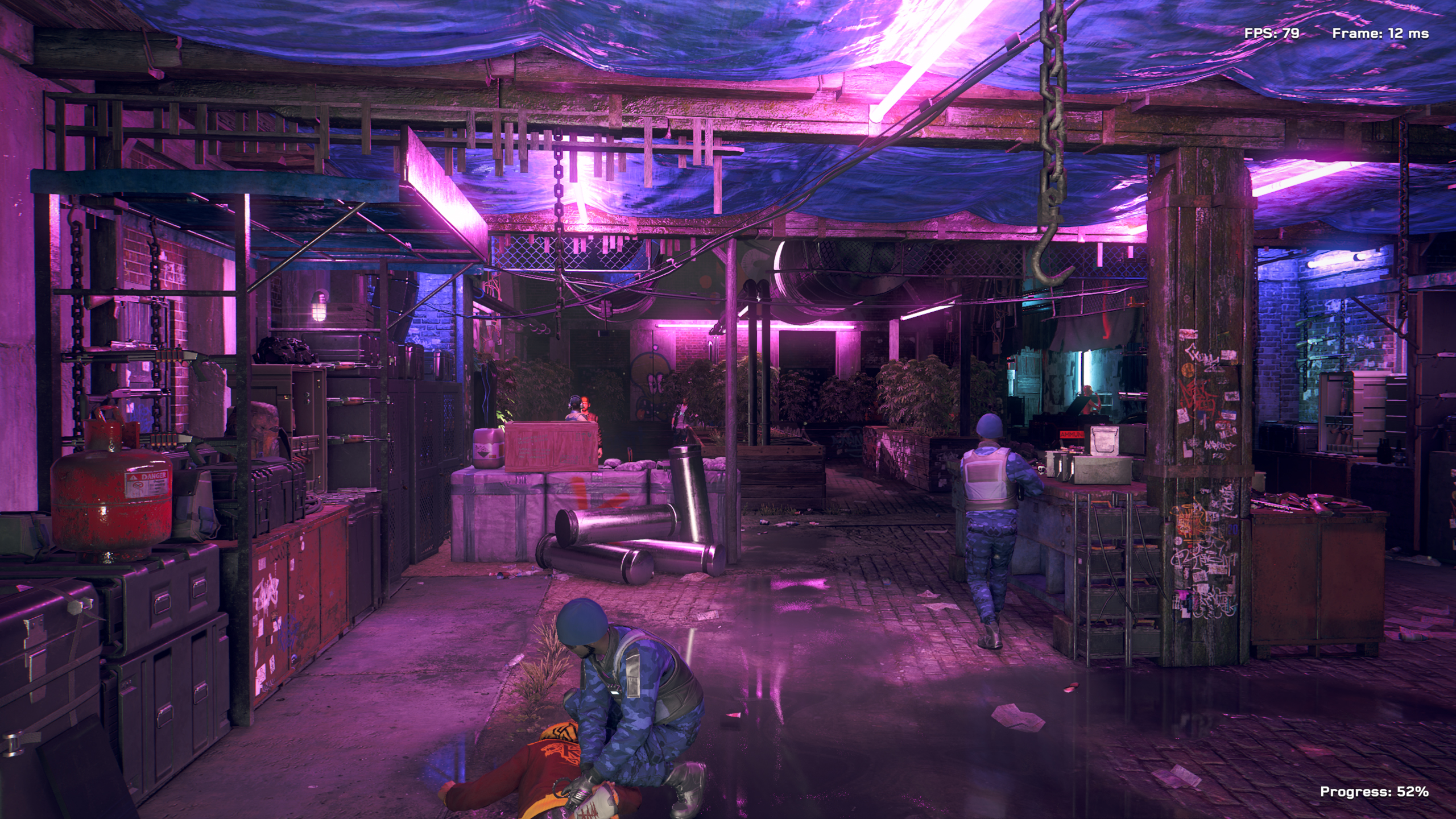

Watch Dogs Legion

RTX 3080 local

GeForce Now streaming

RTX 3080 ray tracing with DLSS Quality mode local

GeForce Now ray tracing with DLSS Quality mode streaming

RTX 3080 ray tracing without DLSS local

GeForce Now ray tracing without DLSS streaming

Our final game comparison, Watch Dogs Legion, tells a similar story to the above games. The local RTX 3080 was 12% faster without ray tracing, and 16% faster with ray tracing enabled (using DLSS Quality mode). We also tested at native resolution with ray tracing, without DLSS, and the RTX 3080 was only 8% faster (52 vs. 48 fps).
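For anyone checking the percentages, "X% faster" here means the local frame rate divided by the streamed frame rate, minus one. Here's a small sketch using only the fps pairs quoted in this article (we didn't list raw fps for every test, so only three appear):

```python
def percent_faster(local_fps: float, streamed_fps: float) -> float:
    """How much faster the local GPU is than the streamed one, in percent."""
    return (local_fps / streamed_fps - 1) * 100


# (local RTX 3080 fps, GeForce Now fps) as quoted in the text
results = {
    "Assassin's Creed Valhalla": (91, 66),
    "Far Cry 6": (95, 78),
    "Watch Dogs Legion (RT, no DLSS)": (52, 48),
}

for game, (local, streamed) in results.items():
    print(f"{game}: {percent_faster(local, streamed):.0f}% faster locally")
# Assassin's Creed Valhalla: 38% faster locally
# Far Cry 6: 22% faster locally
# Watch Dogs Legion (RT, no DLSS): 8% faster locally
```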

There's an interesting tangent here with DLSS. We tested with and without DLSS, as well as using both the quality and performance modes. With GeForce Now, changing DLSS from Quality to Performance didn't seem to affect performance at all, while on the RTX 3080 Performance mode tends to be about 15% faster. It's not clear if this is just a GeForce Now bug or if there's something else going on, but regardless, performance between the 3080 and the streaming service was pretty close.

We have two sets of image quality comparisons as well. The first (taken from the benchmark sequence) shows the same blurriness and loss of detail that we've noticed elsewhere. Perhaps that's because the game was in motion, meaning the video compression has to be more lossy to stay within its 50Mbps budget. The second set, by the lake, is taken while we're standing still, and since the video compression uses data from previous frames, it can achieve a better result in terms of details. The temporal aspect of video compression ends up being a bit like DLSS, allowing for better results when there's less stuff happening on screen.

Gaming via GeForce Now, Including the Free Tier

So far, all of the testing has been focused on performance and image quality, but what's it like to actually play games on GeForce Now? You'd think with the need to send data over the internet, collect that and use it to update the game world, and then send it all back over the wire to your home that the increase in lag would be very noticeable. The reality is that I personally found it totally playable. Disclaimer: Yeah, I'm older and wouldn't call myself a competitive gamer, but I can hold my own in most multiplayer games.

The type of game does matter, of course. Assassin's Creed Valhalla, Watch Dogs Legion, Control, and Cyberpunk 2077 all tend to be less twitch-focused and I had no complaints with any of them. In shooters like Far Cry 6, the ~40ms increase in latency felt a bit more noticeable. We didn't perform extensive latency testing, but Nvidia's claims of 40ms seemed to be accurate for our location.

Frankly, I was quite surprised at how good the experience of playing games via GeForce Now was. I've tried a few game streaming services in the past, including GeForce Now, and the latency was always far more noticeable before. Either I'm less tuned in to the latency, or things are simply better with the RTX 3080 tier — or probably a little of both. GeForce Now reported a ping latency of just 33ms on my connection, which is only two frames on a 60Hz display. That's totally fine for my needs, assuming I needed a game streaming service.

After testing the RTX 3080 GeForce Now service for the past few days, I figured I needed a second opinion on the matter, so I signed up for a free account. Wow. What a difference that made! The hardware was noticeably slower, framerates couldn't maintain a steady 60 fps, and even at 1080p, ultra quality in many games was no longer a viable option. Once I dropped to 1080p medium in Far Cry 6, though, it wasn't too bad and I found myself enjoying the game — right up until I got booted after the one hour time limit was reached.

The loss in image quality, specifically the overall fuzziness of the stream, was also much more noticeable. However, it was something I could ignore, and particularly in the midst of fights (in Assassin's Creed Valhalla, Control, Watch Dogs Legion, and Far Cry 6) I stopped thinking about image fidelity and just got lost playing games. Playing with the RTX 3080 account was far better, but even that still had some fuzziness. But it was great to be able to basically max out the settings at 1440p in the games I tried, rather than trying to tune things to make the game work okay with the free tier.

GeForce Now RTX 3080 Closing Thoughts

Nvidia has been working on a game streaming service since the original Nvidia Shield handheld. I remember playing a few games via the Grid service back in the day, streamed to the device at 720p and 30 fps. That was eight years ago. Fast forward and the free Grid beta morphed into GeForce Now, with substantially upgraded streaming quality and support for a lot more platforms.

Of course, Nvidia isn't doing all of this out of kindness to gamers. Look at what Netflix did for video streaming, and that's potentially the prize for the first real success story in game streaming. Netflix has over 200 million subscribers, paying $10 per month. The profits are staggering. The hardware side of things is just as telling.

Assuming a 30-day month as the average, that's 720 hours in a month. Realistically, it's doubtful most people will stream games for more than a few hours a day, and a lot of people may only stream 5–10 hours per week. If subscribers average 20 hours per month of streaming time on the GeForce Now RTX 3080 hardware, that would potentially be 36 people sharing one A10G GPU. Nominally, that GPU costs Nvidia about $500 and could be sold for perhaps $1,500. Or Nvidia can charge 36 people $16.67 per month to access that GPU in the cloud. That's $600 per month, give or take, for the same GPU.
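That sharing estimate is simple division, sketched below. The 20 hours per month figure is my hypothetical rather than an Nvidia number, and the result is a best case: it assumes usage is spread perfectly around the clock, which real-world peak hours won't allow.

```python
HOURS_PER_MONTH = 30 * 24        # 720 hours in a 30-day month
avg_hours_per_user = 20          # hypothetical average from the text
monthly_fee = 100 / 6            # $100 per six months, about $16.67

# Best case: users never overlap, so one GPU is busy every hour of the month
users_per_gpu = HOURS_PER_MONTH / avg_hours_per_user
revenue_per_gpu = users_per_gpu * monthly_fee

print(users_per_gpu)             # 36.0
print(round(revenue_per_gpu))    # 600
```

Even at a far less optimistic sharing ratio, the monthly revenue per GPU would add up quickly compared to a one-time sale.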

Assuming game streaming takes off, the potential upside (for Nvidia) is massive. The data center and infrastructure would all cost a lot of money as well, but long-term there's a potential revenue stream of hundreds of millions of dollars per month — if everything works out. And if it doesn't, Nvidia can convert the GeForce Now Superpods into some other form of cloud servers and lease time on them to someone other than gamers.

The biggest drawbacks right now are the missing games and the price. I don't think $100 for six months is necessarily too much to ask, and certainly $50 for six months is viable (for RTX 2080 level hardware), but do you really want yet another subscription? If you're more of a casual gamer, you can try the free plan, but the 60 minute sessions can be a bit irritating if you time out in the middle of a critical moment. Also, if you're using the free version, you get to play on a Tesla RTX T10-8, basically an RTX 2060 equivalent, except I believe it can be shared between two people. Whatever the hardware, it's much slower than the other solutions, and struggled to maintain 60 fps in Far Cry 6 even when set to medium quality 1080p. Playing the game was still okay, but you definitely want to keep settings lower.

As for the games you can play, that's the blessing and curse of GeForce Now. You have to own all the games you want to use, and they have to work with GeForce Now. Many don't, but more are added every Thursday, and at least you can take the games with you if you ever use your own PC without streaming. There are "thousands" of games available on GeForce Now, which is certainly more than you'll find on Stadia, and Google doesn't have a great reputation when it comes to keeping products around (RIP Google+, among others). GeForce Now also does periodic free trials of games, and since the games are all on cloud servers, you don't have to spend time downloading and installing them.

Fundamentally, GeForce Now is a way for Nvidia to use data center hardware to power a game streaming service. The free version gets you in the door, but at least in my testing, the latency and experience of the RTX 3080 tier was far better than the free tier. That's understandable, but it also means people might try the free version and get frustrated with it rather than upgrading to a paid tier. Either way, if you're in need of a graphics card and can't seem to find one, Nvidia has apparently put tens of thousands of them into GeForce Now for people to use. That's got to be better than having them in the hands of miners, right?

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

-Fran-

So 8 hours per day tops... This is a third of a day, effectively, making 6 months of time elapsed more like 2 months. Sure, not realistic, but that's effectively their cost-time per user.

Also, 8 hours sound... Kiddish? What's the real reason they are implementing this limit, really? To comply with China or something?

Regards.

-

JarredWaltonGPU

In reply to Yuka:

No, you've got that wrong. Eight hour sessions, at which point you get booted but can immediately launch another session.

Free Tier: ~RTX 2060 (shared by two users?), 1-hour sessions, may have to wait in a queue depending on demand.

Priority Tier: ~RTX 2080 (not shared), 6-hour sessions, still potentially limited availability but I've never had to wait. (Same as "Founders Edition" tier if you signed up for that back in the day.)

RTX 3080 Tier: ~RTX 3080 (really 3070), 8-hour sessions, supports 1440p 120fps, or 4K 60fps on a Shield TV. Limited quantity available, but we don't know what that means exactly.

-

-Fran-

In reply to JarredWaltonGPU:

OooOOoooOOh. That's better, I guess. Thanks for clearing it up.

Still, I wouldn't like to be kicked out in the middle of a quest or boss fight, but it's better than just not being able to connect at all back into the game for another 16 hours, lol.

And the "free tier" is an important mention. I didn't know that existed at all. How does that hold up?

Regards.

-

JarredWaltonGPU

In reply to Yuka:

I talk about the free tier at the end of the article. It's noticeably worse, both in image quality and settings you can run, but it's still viable. Think 1080p medium as the target, mostly. The one hour sessions can definitely feel too short. On the other hand, it does give you a hard time limit so maybe you won't waste as much time playing games instead of working? LOL

-

VforV

A year ago Jensen had an brilliant marketing idea: why don't I parade this amazing RTX 3080 deal, bang for buck dream (after the 2080 Ti fiasco) and point out how much stronger it is at "only" $700 MSRP (for those who believe in unicorns). Everyone will go nuts for that and I can go to phase 2 from there.

This is the result of phase 2 from the best BS-er in the tech industry: Mr. Jensen H.

Create the most desired carrot on a stick, parade it long enough to produce salivation than make the carrot unachievable and put all those sweet 3080 is in the Cloud to make all the muppets that want one buy nvidia's services instead, for $200/year: brilliant! (slow clap)

-

-Fran-

In reply to JarredWaltonGPU:

Ah, as per usual my bad habit of selective reading. Apologies and thanks for pointing it out again; I went and re-read that part.

In reply to VforV:

I don't think it's that elaborate of a plan, TBH. For whatever reason a lot of execs think (still) the "future is in the cloud", as if the 50s and 60s never happened. This is what the industry is trying to move towards to: you don't own anything and just rent it. It is the best way to keep revenue streams forever and harvest people for their money in the most effective of ways, so...

Anyway, point is: it's not a "Jensen" thing at all. It's just a coincidence.

Regards.

-

husker Reply

Obviously, the review can only take into account the current state of things, and it does look promising. But at what point will the data demands of multiple high volume data streams overtake what can be seamlessly handled by the "magic" of the internet? I'm not talking about Nvidia's servers, I'm talking wires between us and them. Sure, the overall theoretical capacity may be high, but are there not localized bottlenecks that could be overwhelmed if something like this became commonplace? Does the business model even take this variable into account or is the proper attitude to simply view the internet as an infinite resource that can take whatever is thrown at it and it is someone else's job to make sure that streaming graphics for games remains a high traffic priority?

In short, building a business model relying heavily on existing public infrastructure better take that infrastructure into account. -

VforV Reply

Yuka said: I don't think it's that elaborate of a plan, TBH. For whatever reason a lot of execs (still) think the "future is in the cloud", as if the 50s and 60s never happened. This is what the industry is trying to move towards: you don't own anything and just rent it. It is the best way to keep revenue streams forever and harvest people for their money in the most effective of ways, so...

Anyway, point is: it's not a "Jensen" thing at all. It's just a coincidence.

Regards.

Of course it's not just Jensen in the Cloud business, but here I'm talking about his "plan".

Even if he did not have the full plan from the beginning, he sure made it work after he saw what a big mistake it was telling people a 3080 for that performance is $700... so he "rectified" it, like this.

Also, there are no coincidences ever, just lack of information for us (our/your POV) to actually know the reasons behind things. Someone somewhere always knows more and why... -

-Fran- Reply

VforV said: Of course it's not just Jensen in the Cloud business, but here I'm talking about his "plan".

Even if he did not have the full plan from the beginning, he sure made it work after he saw what a big mistake it was telling people a 3080 for that performance is $700... so he "rectified" it, like this.

Also, there are no coincidences ever, just lack of information for us (our/your POV) to actually know the reasons behind things. Someone somewhere always knows more and why...

No, there are coincidences. Make no mistake that "dumb luck" is a thing, even in multi-billion dollar businesses.

You can think up whatever conspiracy theory you like about the time after COVID and the whole chain of events that has made the market go bonkers, but what nVidia is doing is a basic thing in capitalism: make the best out of situations that favour you. AMD has been doing it and, to a lesser degree, Intel. Every other chip manufacturer has as well.

In short, what you think is the biggest juicy evil plot in GPU history is just highly paid execs making the best possible decisions in a bad situation. That being said, my point with the previous comment is that this is something they've been doing for a while now; not just nVidia but other providers. I'm pretty sure Google, Netflix, Microsoft and Sony are also sourcing part of their cloud stuff from AMD and/or nVidia, so you can put AMD in the same bag as nVidia for this one?

Reference: https://www.amd.com/en/graphics/server-cloud-gaming

Regards. -

vinay2070 Reply

husker said: Obviously, the review can only take into account the current state of things, and it does look promising. But at what point will the data demands of multiple high volume data streams overtake what can be seamlessly handled by the "magic" of the internet? I'm not talking about Nvidia's servers, I'm talking wires between us and them. Sure, the overall theoretical capacity may be high, but are there not localized bottlenecks that could be overwhelmed if something like this became commonplace? Does the business model even take this variable into account or is the proper attitude to simply view the internet as an infinite resource that can take whatever is thrown at it and it is someone else's job to make sure that streaming graphics for games remains a high traffic priority?

In short, building a business model relying heavily on existing public infrastructure better take that infrastructure into account.

I think it would just be like watching 4K TV. There are more people watching 4K TV than people wanting to game on these servers. I am hoping that if the internet pipes can handle this TV content, then they should be able to cope with the gaming as well. I may be wrong though. Personally, I think this is a good service, but there was a very slight noticeable lag when I tried to play (in Australia). I would prefer a GPU in my cabinet and an ultrawide monitor any day over the streaming.