New Tools From Google Promise PC Quality VR On Mobile

Google is expanding its Daydream VR platform at the same time that it’s looking to both improve the quality of VR content and make developing great experiences easier.

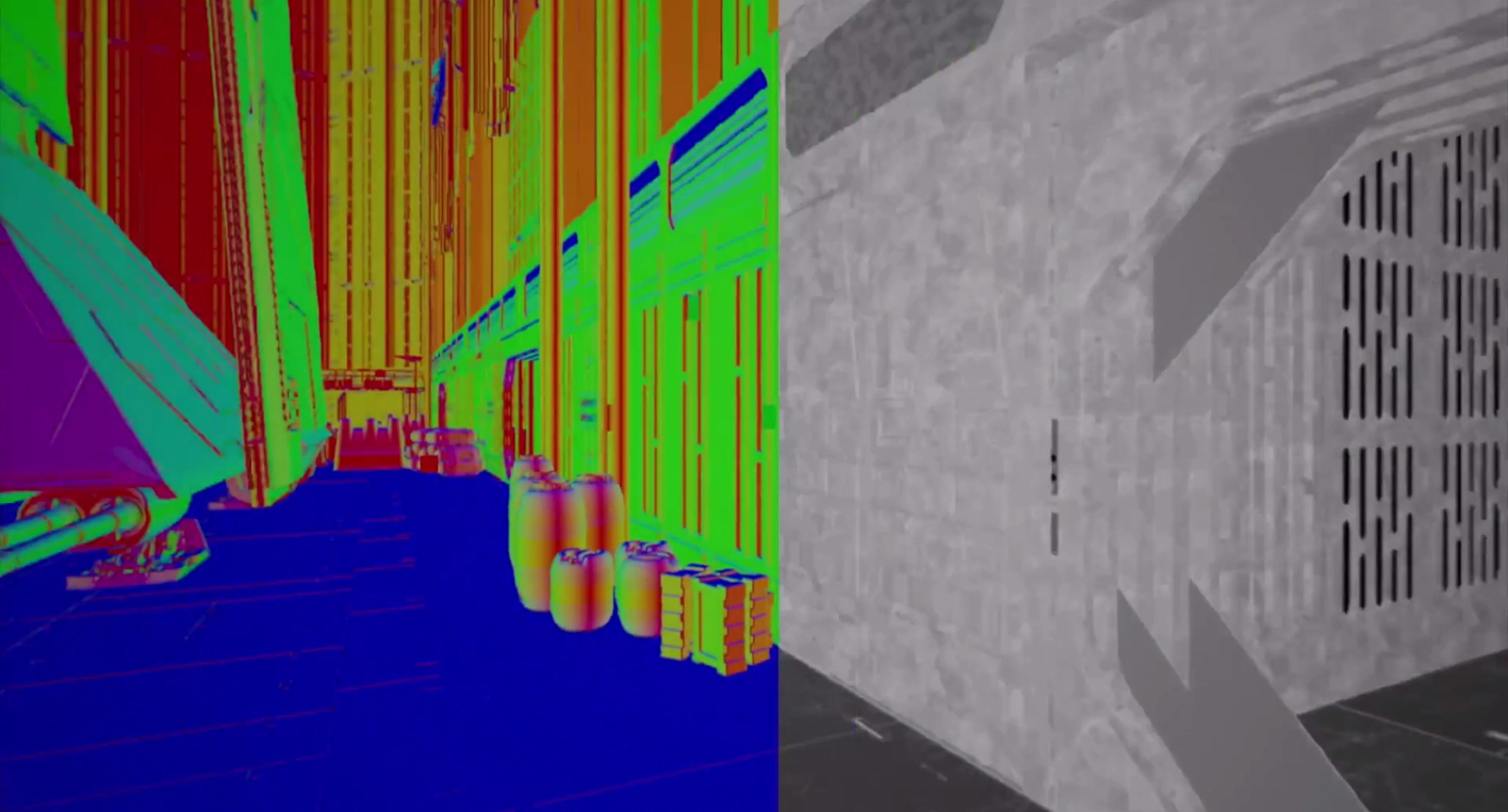

Daydream 2.0 (codenamed “Euphrates”) will include an improved rendering engine that Google has named “Seurat” (after the painter famous for pointillism). Details are scarce, but Seurat looks set to improve performance in a number of ways. The biggest change seems to be support for an advanced form of “occlusion culling.”

Occlusion culling is a technique used in computer graphics where the engine renders only the parts of a scene that are visible to the viewer. So instead of rendering an entire house, a system using occlusion culling would render only the outside walls that the player can see. Put a fence and some bushes in front of the house, and Daydream will stop rendering the parts of the house hidden by the bushes.

This reduces the total number of polygons in the scene and can improve performance.
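To make the idea concrete, here is a minimal sketch of occlusion culling in Python. It is not how Seurat or any real engine implements it: it assumes a simplified one-dimensional "screen" where every object is an opaque horizontal span with a depth, and all names and polygon counts are made up for illustration. Objects are visited front to back, and anything whose span is already fully covered by nearer objects is skipped.

```python
from dataclasses import dataclass


@dataclass
class SceneObject:
    name: str
    left: float    # left edge of the object on a 1D screen (hypothetical units)
    right: float   # right edge of the object on the same screen
    depth: float   # distance from the viewer; smaller means closer
    polygons: int  # illustrative polygon count, not real data


def is_fully_covered(obj, covered):
    """True if the object's span lies inside one already-covered span."""
    return any(lo <= obj.left and obj.right <= hi for lo, hi in covered)


def add_span(covered, left, right):
    """Add a span to the covered list, merging overlapping or touching spans."""
    merged = []
    for lo, hi in sorted(covered + [(left, right)]):
        if merged and lo <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], hi))
        else:
            merged.append((lo, hi))
    return merged


def cull_occluded(objects):
    """Return only the objects that are at least partly visible."""
    visible = []
    covered = []
    # Visit objects nearest-first so occluders are registered before
    # the things they hide are tested.
    for obj in sorted(objects, key=lambda o: o.depth):
        if is_fully_covered(obj, covered):
            continue  # hidden behind nearer objects: never sent to the GPU
        visible.append(obj)
        covered = add_span(covered, obj.left, obj.right)
    return visible


scene = [
    SceneObject("house_back_wall", 0, 8, depth=20, polygons=1500),
    SceneObject("house_front_left", 0, 4, depth=10, polygons=1000),
    SceneObject("house_front_right", 4, 8, depth=10, polygons=1000),
    SceneObject("bush", 3, 5, depth=3, polygons=500),
    SceneObject("fence", 0, 4, depth=2, polygons=200),
]

visible = cull_occluded(scene)
visible_names = [obj.name for obj in visible]
total_polygons = sum(obj.polygons for obj in scene)
visible_polygons = sum(obj.polygons for obj in visible)
print(visible_names, f"{visible_polygons} of {total_polygons} polygons drawn")
```

In this toy scene the left half of the house front and the entire back wall are skipped. A real engine has to run the same kind of test in 3D for every frame, and the cost of that test is the overhead that culling has to win back.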

Some form of occlusion culling has been part of most game engines for a while, including Unity and Unreal, but on Android the performance penalty was so great that it negated any benefit from rendering a simpler scene. In Google’s demos at I/O, though, it looks like Seurat could potentially lead to huge performance gains.

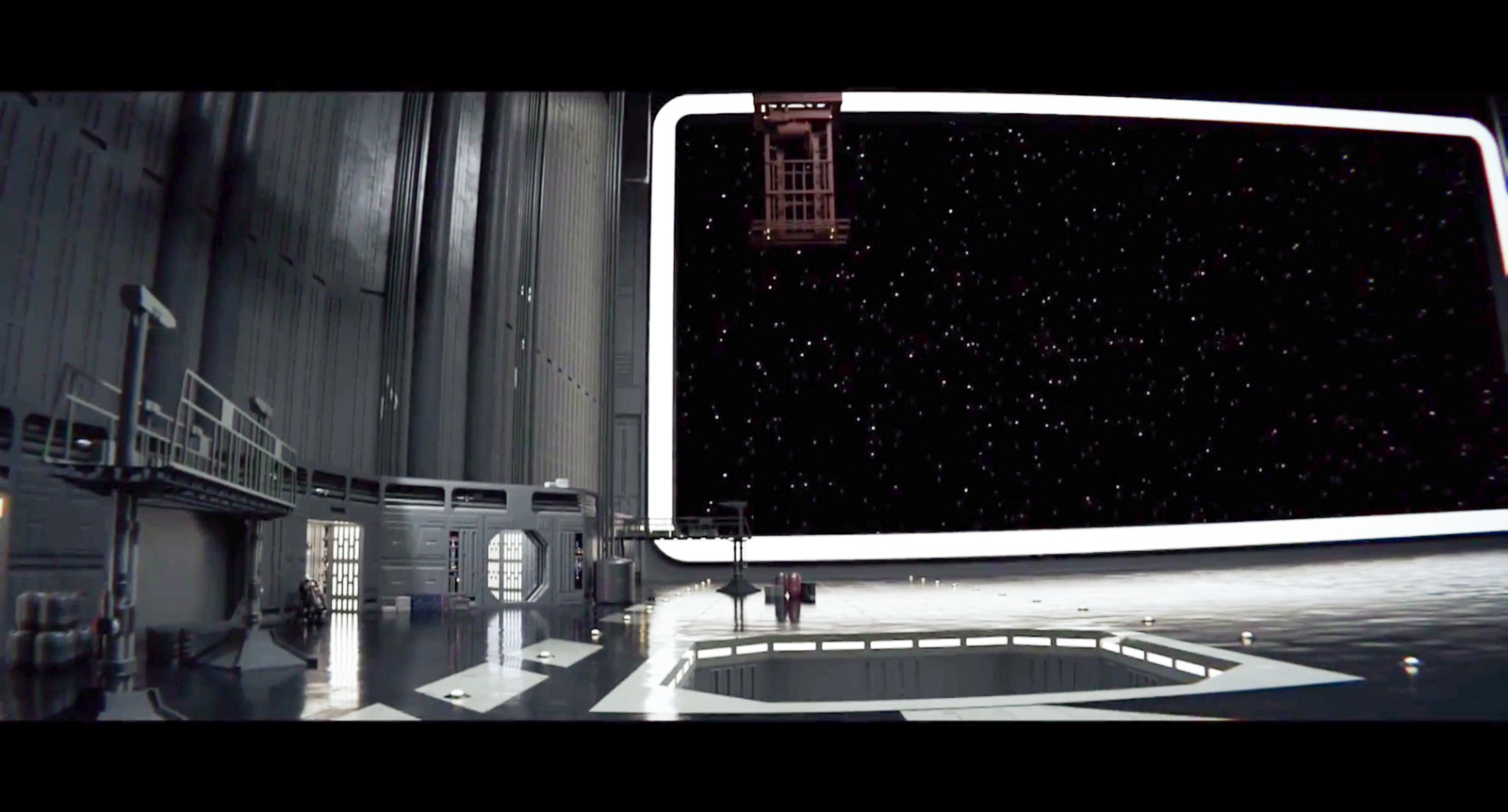

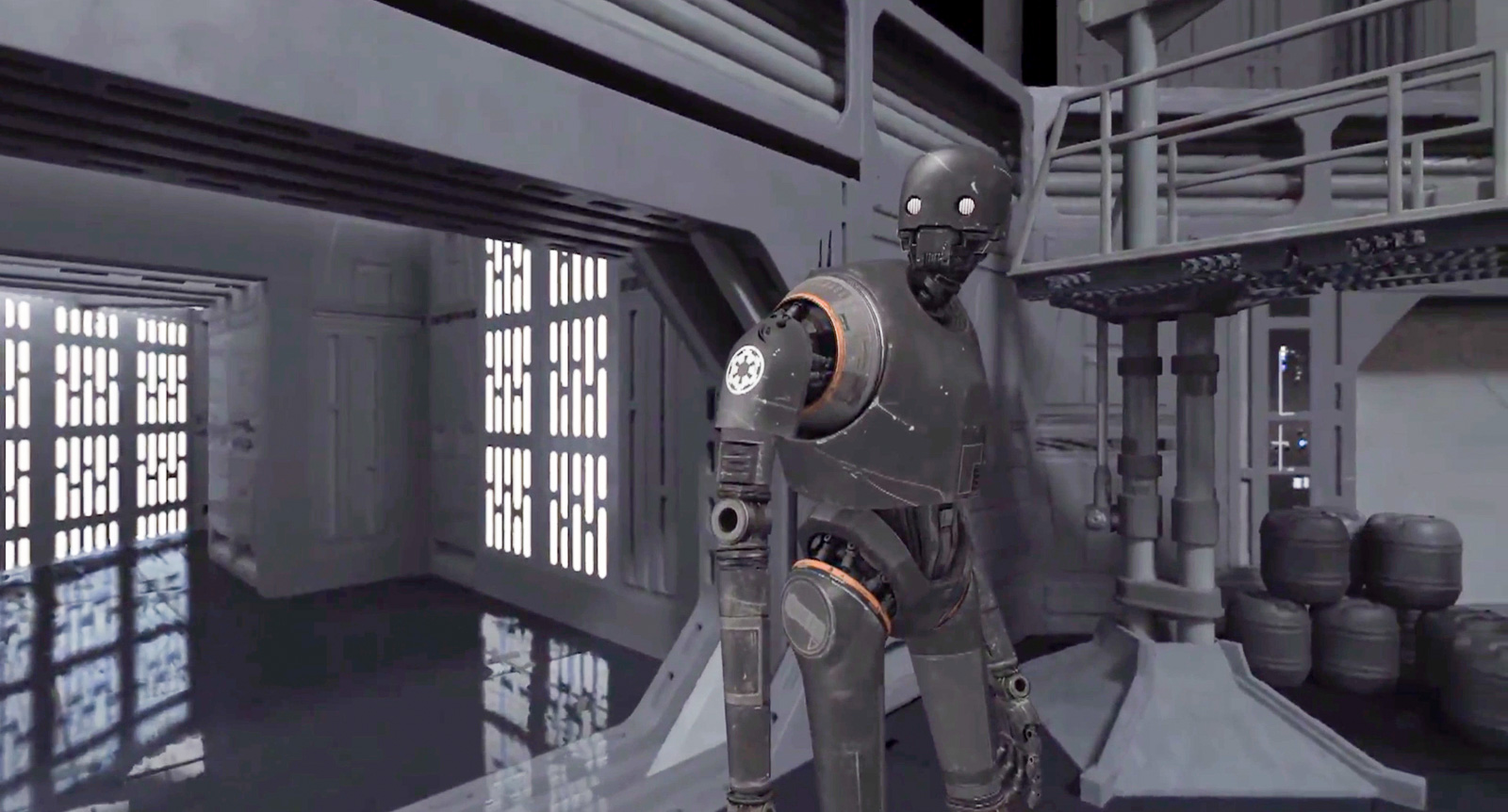

Google worked with a division of the special effects house ILM to create a high fidelity VR scene from the Star Wars movie Rogue One, and then used Seurat to make it playable on a smartphone.

The original scene was 50,000,000 polygons and 3GB of textures, and it took an hour per frame to render on one of ILM’s computers. After Google processed the scene with Seurat, it could be rendered at more than 70 frames per second on a smartphone GPU.


Seurat reduced the complexity of the scene to 50,000 polygons and 10MB of texture files. Google claims it looks as good as the original, although we’ll need to experience the content before we can make that judgement for ourselves. What's still unclear is whether this technology would work for freeform games, or only for on-rails "experiences", but more info on Seurat will be coming later this year.
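For a sense of scale, the quoted figures work out as follows. This is plain arithmetic on the numbers above, assuming 1GB = 1000MB. The frame-time comparison is only a loose lower bound, since the two renders ran on different hardware and the smartphone figure is "more than" 70 frames per second.

```python
# Figures quoted in the article.
original_polygons = 50_000_000
reduced_polygons = 50_000
original_texture_mb = 3 * 1000  # 3GB of textures, assuming 1GB = 1000MB
reduced_texture_mb = 10

polygon_ratio = original_polygons / reduced_polygons
texture_ratio = original_texture_mb / reduced_texture_mb

# An hour per frame before, at least 70 frames per second after.
original_seconds_per_frame = 60 * 60
reduced_frames_per_second = 70
frame_rate_ratio = original_seconds_per_frame * reduced_frames_per_second

print(f"Polygons reduced {polygon_ratio:,.0f}x")
print(f"Textures reduced {texture_ratio:,.0f}x")
print(f"Frames produced at least {frame_rate_ratio:,}x faster")
```

That is roughly a thousandfold cut in geometry and a three-hundredfold cut in texture data.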

Sneak Peek

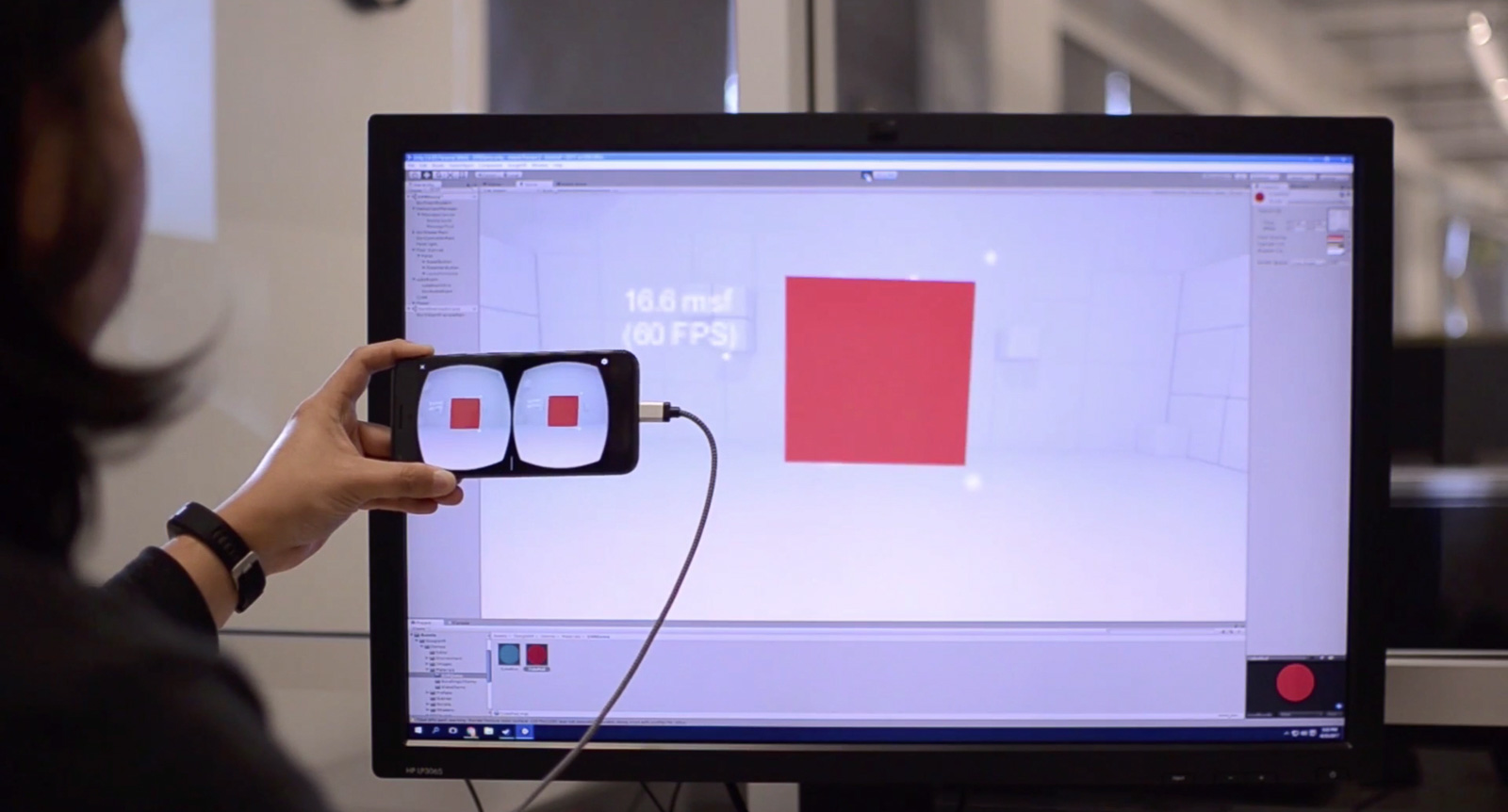

If you’re trying to create VR content, one of the biggest hurdles has traditionally been that development takes place in a 2D environment (your screen) but is viewed on a headset in a 3D projection.

You can render previews and send them to an attached VR headset, but at best you’ll have serious latency, and at worst the scene will actually take minutes or even hours to render any new change you make.

Google's Instant Preview (which shares a name with the defunct web search preview feature from four or five years ago) is a new Unity and Unreal Engine plugin with a companion Android app that tries to streamline the process. With a Daydream-ready phone connected to a computer, developers can view a real-time stereoscopic preview of their content as they create it.

According to Google, the mobile app on the phone streams sensor data on position and orientation to the computer, where the plugin uses that data to interpret the scene and sends a live preview back to the phone, which renders out the 3D scene.
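The round trip described above can be sketched as a simple loop. This is a toy simulation, not Google's plugin or its API: every name here is hypothetical, the two queues stand in for the phone-to-computer connection, and it assumes the computer does the rendering while the phone only displays the result, which Google's description does not spell out.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Pose:
    position: tuple     # head position (hypothetical units)
    yaw_degrees: float  # head orientation, simplified to a single angle


def phone_read_sensors(step):
    """Stand-in for the phone app sampling its tracking sensors."""
    return Pose(position=(0.0, 1.6, 0.0), yaw_degrees=step * 5.0)


def editor_render(pose, scene_version):
    """Stand-in for the engine plugin rendering a stereo frame for a pose."""
    return {
        "scene_version": scene_version,
        "yaw_degrees": pose.yaw_degrees,
        "eyes": ("left", "right"),
    }


phone_to_computer = deque()  # carries poses from the phone
computer_to_phone = deque()  # carries rendered frames back
displayed_frames = []        # what the developer sees in the headset

scene_version = 1
for step in range(3):
    # 1. The phone streams its current position and orientation.
    phone_to_computer.append(phone_read_sensors(step))

    # The developer edits the scene between the first and second frames.
    if step == 1:
        scene_version = 2

    # 2. The plugin renders the current state of the scene for that pose.
    pose = phone_to_computer.popleft()
    computer_to_phone.append(editor_render(pose, scene_version))

    # 3. The phone shows the frame it receives.
    displayed_frames.append(computer_to_phone.popleft())

for frame in displayed_frames:
    print(frame)
```

The point of the loop is that an edit made on the computer shows up in the very next frame on the headset, with no separate build or export step in between.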

It’s hard to overstate how useful this could be. To get a sense of what 360 or VR content creation has been like, imagine editing a photo or a video, but having to wait five minutes after every change before you can see the result. This is how most VR content has been made, and Instant Preview could do a lot to speed up development.


bit_user: "What's still unclear is whether this technology would work for freeform games, or only for on-rails 'experiences'"

This is a key point, because the downside of sophisticated visibility algorithms is often having to build costly scene graphs. So, it will be important to see how expensive it is to build & modify the scene graph.

I'm also curious to know whether their renderer is compatible or mutually-exclusive with existing frameworks like Unity, Unreal, etc. If the latter, how does its functionality compare (e.g. in terms of features like collision detection, physics, animation, etc.)?