The Week In Storage: 3D XPoint Optane And Lenovo SSDs Spotted, Google's SSDs Get Pwned, Toshiba Rising

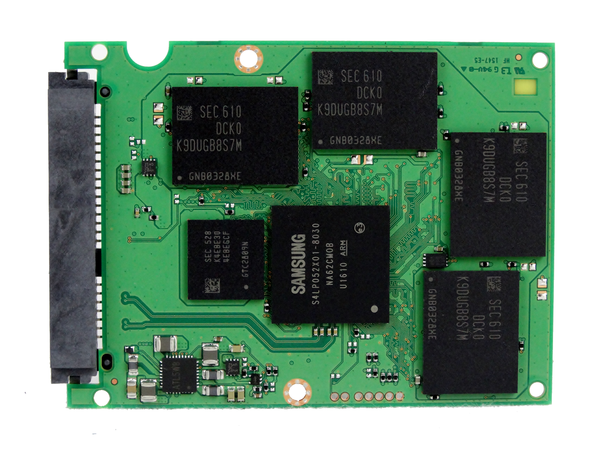

The week in storage began with Samsung's 850 Evo, which breaks through the high-density barrier with 4 TB of capacity. The right balance of performance and cost is always important, so the high capacity alone doesn't make the SSD a winner. Chris Ramseyer tested the 850 Evo to see if it would take flight, and the verdict is resoundingly positive, as long as one ignores the $1,499 price tag.

We covered the latest news on the Seagate restructuring plan as the company announced positive preliminary earnings, which sent its stock into happy territory. However, the silver cloud has a dark lining in the form of 8,100 layoffs. The restructuring involves more than cutting jobs; news also trickled out that the company is closing its plant in Havant, England, which it obtained when it bought Xyratex.

The 2nd U.S. Circuit Court of Appeals ruled that the U.S. government cannot compel Microsoft to turn over email data that it physically stores in other countries. This watershed ruling will help protect the rights of foreign citizens, as the U.S. will have to work in tandem with local authorities to issue a warrant for the data. Several countries now have data localization regulations that require all data to be stored locally due to the perception (and perhaps reality) of ever-prying U.S. government eyes.

NAS is becoming increasingly popular as we look for beefier methods to store our digital lives. The Drobo B810n 8-bay offers plenty of storage capacity in an aesthetically attractive design. The nuts and bolts of software interaction and critical capabilities are what separate the wheat from the chaff, but Drobo may have taken simplicity too far.

Of course, there are always other interesting tidbits spread out over the week, so let's take a closer look at a few of the latest.

3D XPoint Optane SSDs Spotted

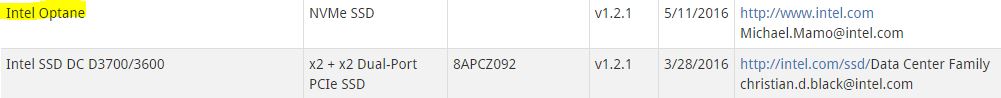

Standards bodies and interoperability labs are great places to watch if you want a glimpse of the future. The University of New Hampshire InterOperability Lab (UNH-IOL) verifies that NVMe SSDs comply with the NVMe spec. To that end, it holds regular plugfests, which bring together a multitude of vendors (20 companies in this case) to test their respective SSDs. Devices that pass the tests make it onto the UNH-IOL Integrators List, which proves the devices are up to NVMe snuff.

The list now includes the recently certified Intel Optane, Intel's 3D XPoint-powered SSD of the future. Intel and Micron tout that 3D XPoint offers 1000x the performance and endurance of NAND with 10x the density of DRAM. Intel also revealed that it helped establish the NVMe interface with its then-secret 3D XPoint in mind.


3D XPoint performance would overwhelm SAS or SATA connections, so the use of the PCIe interface is hardly surprising. However, 3D XPoint can also be used as memory DIMMs that allow the computer to address the storage as memory (hence the "Storage Class Memory" moniker). Intel disclosed that its Kaby Lake platforms would be 3D XPoint-compatible, which indicates that it will support memory mapping via the RAM slots, but OS/application support for storage class memory is rudimentary and still evolving.
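As a rough illustration of the difference between block I/O and addressing storage as memory, the Python sketch below maps a file with the standard-library `mmap` module and then updates individual bytes with plain indexing, rather than issuing block-sized read and write calls. It is only an analogy under stated assumptions: on an ordinary file and disk, the mapping is backed by the operating system's page cache, so this is not what storage class memory hardware does. With persistent memory exposed through a DAX-capable filesystem, the same load/store style of access can reach the media without the page cache. The temporary file, the 4 KiB size, and the offsets are arbitrary choices for the example.

```python
import mmap
import os
import tempfile


def demo_byte_addressable(size: int = 4096) -> bytes:
    """Map a file into memory and update individual bytes in place."""
    fd, path = tempfile.mkstemp()
    try:
        # Size the backing file first; mmap cannot map an empty file.
        os.ftruncate(fd, size)
        with mmap.mmap(fd, size) as mem:
            # Byte-granular stores via indexing: no block-sized write() call.
            mem[0:5] = b"hello"
            mem[100] = ord("!")
            # Write dirty pages back to the backing file.
            mem.flush()
        # Read back through the ordinary file API to confirm the bytes landed.
        with open(path, "rb") as f:
            data = f.read()
        return data[0:5] + data[100:101]
    finally:
        os.close(fd)
        os.remove(path)


print(demo_byte_addressable())  # b'hello!'
```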

The 3D XPoint-powered Optane SSDs are the near-term home run hitter, as they will certainly be faster than any NAND alternative (though just how much remains open to debate), and NVMe has broad compatibility.

The Optane future is nearly upon us, as the listing indicates that Intel has a fully functional and complete device ready. The Flash Memory Summit and Intel's own IDF event are approaching quickly. We suspect the full unveiling of Optane will occur at one of these two events.

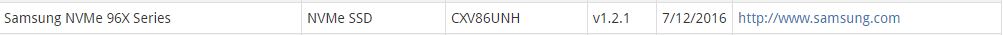

On another note, Samsung also has an "NVMe 96X Series" listed, and it carries different firmware than the OEM models we recently tested. The listing implies that we will see the consumer version of the SSD soon, which Samsung will likely unveil at its upcoming yearly event.

There is also a Lenovo Atasani M.2 SSD listed, which suggests that Lenovo is now building its own SSDs. Samsung is the traditional Lenovo SSD supplier, which makes that finding all the more interesting.

Google Pwns Its Own SSDs; Clouds Go Dark

One of the best things about the cloud is that we do not have to manage the servers and storage attached to it; we merely consume the end product with as little thought as possible. Oh, the freedom!

One of the worst things about the cloud is that we do not have any control over said infrastructure, so when an outage occurs we are powerless. Unfortunately, it is difficult to obtain accurate reports of cloud outages and disturbances, as there are no industry-standard rules and regulations that govern reporting. Some disturbances go unreported, thus giving the cloud more of a bulletproof image than it deserves.

Google is one cloud vendor that appears to be transparent about its outages. On July 10, the company reported severe problems in one of its service zones that lasted 211 minutes. The outage may not seem like a huge issue, unless your company generates revenue with, or is entirely reliant upon, Google's services.

The company stated that instances using SSDs as their root partition were likely completely unresponsive during this period and that secondary SSD volumes suffered "slightly elevated latency and errors."

The company traced the root cause to its own software: "A previously unseen software bug, triggered by the two concurrent maintenance events, meant that disk blocks which became unused as a result of the rebalance were not freed up for subsequent reuse, depleting the available SSD space in the zone until writes were rejected."
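The failure mode in Google's description, blocks released by a rebalance never returning to the free pool, can be illustrated with a toy model. The sketch below is entirely hypothetical: the `Zone` class, the 100-block zone, the 20-block volume, and the `leak` switch (standing in for the bug that the two concurrent maintenance events triggered) are inventions for illustration, not Google's code. Each rebalance copies a volume onto fresh blocks; normally the old blocks rejoin the pool, but with the simulated bug they do not, so free space shrinks until writes are rejected.

```python
class ZoneFullError(Exception):
    """Raised when the toy zone has no free blocks left for a write."""


class Zone:
    """Toy model of a shared pool of SSD blocks (hypothetical, for illustration)."""

    def __init__(self, total_blocks: int):
        self.free = set(range(total_blocks))  # blocks available for new writes
        self.volumes = {}                     # volume name -> set of blocks in use

    def allocate(self, n: int) -> set:
        if n > len(self.free):
            raise ZoneFullError("write rejected: no free blocks left in zone")
        return {self.free.pop() for _ in range(n)}

    def write_volume(self, name: str, n_blocks: int) -> None:
        self.volumes[name] = self.allocate(n_blocks)

    def rebalance(self, name: str, leak: bool) -> None:
        """Move a volume onto fresh blocks, then (normally) free the old ones."""
        old_blocks = self.volumes[name]
        self.volumes[name] = self.allocate(len(old_blocks))
        if not leak:
            self.free |= old_blocks  # correct path: old blocks rejoin the pool
        # With leak=True the old blocks are neither in use nor free: lost space.


def rebalances_until_failure(leak: bool, max_rounds: int = 100) -> int:
    """Count rebalances a 100-block zone survives before writes are rejected."""
    zone = Zone(total_blocks=100)
    zone.write_volume("vm-root", 20)
    for round_no in range(max_rounds):
        try:
            zone.rebalance("vm-root", leak=leak)
        except ZoneFullError:
            return round_no
    return max_rounds


print(rebalances_until_failure(leak=False))  # 100: space is recycled every time
print(rebalances_until_failure(leak=True))   # 4: the zone runs dry after 4 moves
```

The point of the model is that nothing is "wrong" with the data itself; the leaked blocks simply fall out of the bookkeeping, which is why the symptom is rejected writes rather than corruption.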

The details of the event are telling, at least if you understand the inner workings of SSDs. Google is one of the world's largest SSD manufacturers because many of its SSDs are actually custom units that it designs and manufactures itself. Exactly how many of the company's SSDs are custom units is a closely guarded secret, but Google builds so many SSDs that it has appeared as one of the largest SSD vendors in some SSD market reports.

SSD designs generally fall into two categories: those that control their own inner workings, and those that the host computer manages directly. Virtually every consumer SSD, such as the ones we pop into our desktops, is a self-contained device that manages its myriad functions internally and independently of the host.

Some enterprise SSDs are managed by the host, which gives the user more granularity and control. The fact that the blocks were not re-designated as open and summarily returned to use suggests that software is managing the SSDs at the block layer. This technique fits well with the software-defined nature of today's hyperscale data centers, but it also opens the door to unexpected issues, because host interactions expose the SSDs to a wider range of software- and host-imposed errors.
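To make the distinction concrete, the sketch below models the bookkeeping a self-contained (device-managed) SSD hides behind its controller: a flash translation layer (FTL) that remaps each logical block to a fresh physical page on every write and later reclaims the stale pages on its own. It is a deliberately simplified, hypothetical model (no multi-page erase blocks, wear leveling, or over-provisioning), and the `SimpleFTL` name and page counts are illustrative assumptions. In a host-managed design, equivalent reclaim logic runs in host software, which is where a failure to return freed blocks would originate.

```python
class SimpleFTL:
    """Toy device-managed flash translation layer (hypothetical, simplified).

    The host only calls read(lba) and write(lba, data); remapping and
    reclaiming of stale pages happen inside the "controller".
    """

    def __init__(self, physical_pages: int):
        self.free_pages = list(range(physical_pages))
        self.l2p = {}       # logical block address -> physical page
        self.stale = set()  # physical pages holding overwritten data
        self.media = {}     # physical page -> stored bytes

    def write(self, lba: int, data: bytes) -> None:
        if not self.free_pages:
            self.garbage_collect()  # reclaim space without host involvement
        if not self.free_pages:
            raise OSError("device full: every page holds live data")
        page = self.free_pages.pop(0)
        self.media[page] = data
        old_page = self.l2p.get(lba)
        if old_page is not None:
            self.stale.add(old_page)  # flash is not rewritten in place
        self.l2p[lba] = page

    def read(self, lba: int) -> bytes:
        return self.media[self.l2p[lba]]

    def garbage_collect(self) -> None:
        # Real SSDs erase whole multi-page blocks and relocate valid data first;
        # this sketch simply erases each stale page and returns it to the pool.
        for page in sorted(self.stale):
            del self.media[page]
            self.free_pages.append(page)
        self.stale.clear()


ftl = SimpleFTL(physical_pages=4)
for i in range(10):
    ftl.write(0, f"v{i}".encode())  # overwrite the same logical block repeatedly
print(ftl.read(0))                  # b'v9'
```

Ten overwrites fit on four physical pages only because the controller keeps recycling stale pages; the host never sees that work happening.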

In either case, Google has identified the problem and is already testing a fix on non-production machines in tandem with boosting its error monitoring capabilities to speed its reaction time. The outage serves as a reminder that we are still in the early days of the cloud, and these teething problems are a perfect example of why many companies are reluctant to be entirely reliant upon cloud services.

This Week's Storage Tidbit

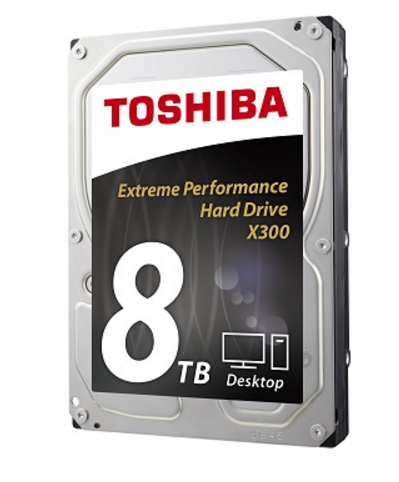

Trendfocus released its preliminary CQ2 2016 HDD market update, which revealed that Toshiba is making remarkable gains in the HDD market. Western Digital and Seagate recorded 12 and 17 percent declines (respectively) in year-over-year HDD shipments, while Toshiba chalked up a 26 percent gain. Toshiba has a much smaller presence than WDC and Seagate, so it is easier for it to post an impressive growth rate, but any growth at all in the plummeting HDD market is impressive.

There have been unconfirmed rumblings that WDC and Seagate's system efforts may have angered the OEM overlords, who are now moving to Toshiba in retaliation. Toshiba is emerging from a restructuring effort in the wake of an accounting scandal, and many speculated that the company might sell off its HDD division. The latest market reports indicate quite the turnaround for the Toshiba HDD unit, so it's a safe bet the company's HDD efforts will soldier on.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

MRFS The next SATA and SAS standards should support variable clock speeds, perhaps with presets like 6G, 8G, 12G and 16G. A second option should support the 128b/130b jumbo frames now in the PCIe 3.0 spec. By the time PCIe 4.0 becomes the current standard, "syncing" data channels with chipsets has much to recommend it. We should not be forced to buy new motherboards if our immediate need is faster storage. -

jasonf2 SATA and SAS are not, and never were, really suitable buses for the emerging ultra-low-latency storage types. While they will be necessary for spin drives for a long time, I don't see SATA or SAS being anything but a man in the middle that acts as a bottleneck for solid state. We will be seeing spin drives for years to come, but only because of cost per gig and as a mainstream/big data tech. Mainstream spin drives don't even come close to maxing out the bus as is, and probably never will. So what is the point to continued evolution of SATA? SAS I can see with large data center deployments of drives. The two need to merge into a single standard, as they are already quasi-interoperable, as a specific spec for mechanical drives. PCI, on the other hand, while one step closer to the CPU, will ultimately prove to be too far away from the CPU to address latency issues with the emerging non-volatile RAM types. That is why we are seeing these things stuck on the RAM bus, which has been playing the ultra-low-latency game for decades. The challenge there is that the addressable RAM space to date is far too small for an enthusiast/consumer terabyte drive on most machines. With all of this in mind, I fully expect that motherboard replacement will be necessary for faster storage until at least three players are competing in the NVRAM space (I only consider Intel/Micron as one at this time) and real standards on the RAM bus and NVRAM come back into play. What we have coming is another Rambus RIMM moment.

NVMe is only a temporary stopgap to address this issue. The only reason SATA was ever used for SSDs in the first place is that the manufacturers needed somewhere to plug them in, and almost all spin drives at that time were SATA. NVMe on the PCI bus was an easily implementable standard that didn't require dreaming up a whole new RAM system before any technology even needed one. This is going to kick AMD around pretty good for a little while, because it won't be cross-system operable on the RAM side, giving Intel a performance edge from a chipset feature that Intel has already told everyone is going to be proprietary. AMD machines, on the other hand, will get the NVMe implementation so that Intel/Micron can still sell drives to that market segment. I give Intel kudos on this one. With CPU performance gains flagging, they are going to have a pretty compelling performance advantage until the standards catch back up, and that will probably take a while. -

MRFS Very good answer: thanks.

There is a feasible solution, particularly for workstation users (my focus)

in a PCIe 3.0 NVMe RAID controller with x16 edge connector, 4 x U.2 ports,

and support for all modern RAID modes e.g.:

http://supremelaw.org/systems/nvme/want.ad.htm

4 @ x4 = x16

This, of course, could also be implemented with 4 x U.2 ports

integrated onto future motherboards, with real estate made

available by eliminating SATA-Express ports e.g.:

http://supremelaw.org/systems/nvme/4xU.2.and.SATA-E.jpg

This next photo shows 3 banks of 4 such U.2 ports,

built by SerialCables.com :

http://supremelaw.org/systems/nvme/A-Serial-Cables-Avago-PCIe-switch-board-for-NVMe-SSDs.jpg

Dell and HP have announced a similar topology

with x16 edge connector and 4 x M.2 drives:

http://supremelaw.org/systems/nvme/Dell.4x.M.2.PCIe.x16.version.jpg

http://supremelaw.org/systems/nvme/HP.Z.Turbo.x16.version.jpg

Kingston also announced a similar Add-In Card, but I could not find

any good photos of same.

And, Highpoint teased with this announcement, but I contacted

one of the engineers on that project and she was unable to

disclose any more details:

http://www.highpoint-tech.com/USA_new/nabshow2016.htm

RocketStor 3830A – 3x PCIe 3.0 x4 NVMe and 8x SAS/SATA PCIe 3.0 x16 lane controller;

supports NVMe RAID solution packages for Windows and Linux storage platforms.

Thanks again for your excellent response!

MRFS

-

MRFS p.s. Another way of illustrating the probable need for new motherboards

is the ceiling already imposed by Intel's DMI 3.0 link. Its upstream bandwidth

is exactly the same as a single M.2 NVMe "gum stick":

x4 lanes @ 8G / 8.125 bits per byte

(i.e. 128b/130b jumbo frame = 130 bits / 16 bytes) -

MRFS > I wish intel stops the DMI links rubbish and rely on PCIE lanes for storage ...

Indeed. We've been proposing that future DMI links

have at least x16 lanes, to match PCIe expansion slots.

That way, 4 x U.2 ports can run full-speed downstream

of that expanded DMI link.

4 @ x4 = x16

There's an elegant simplicity to that equivalence.

Plus, at PCIe 4.0, the clock increases to 16G:

x16 @ 16G / 8.125 ≈ 31.5 GB/second

That should be enough bandwidth for a while.
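The figures in these comments come from line-encoding arithmetic: with 128b/130b encoding, every 128 payload bits cost 130 bits on the wire, which is where 8.125 bits per byte (8 × 130/128) comes from. The helper below, a hypothetical function written only for this illustration, reproduces that calculation. It deliberately ignores packet and protocol overhead, so it gives an upper bound per direction rather than measured throughput; treating DMI 3.0 as a PCIe 3.0-class x4 link at 8 GT/s is the assumption behind the first example.

```python
def link_bandwidth_gbytes(lanes: int, gtransfers_per_s: float,
                          payload_bits: int, coded_bits: int) -> float:
    """Upper-bound one-direction throughput in GB/s after line-encoding overhead.

    Ignores packet/protocol overhead (headers, flow control, etc.),
    so real-world throughput is lower.
    """
    efficiency = payload_bits / coded_bits             # e.g. 128/130 for PCIe 3.0
    return lanes * gtransfers_per_s * efficiency / 8   # 8 bits per byte


# DMI 3.0 / a single x4 NVMe M.2 SSD: 4 lanes at 8 GT/s, 128b/130b
print(round(link_bandwidth_gbytes(4, 8, 128, 130), 2))    # 3.94
# PCIe 4.0 x16: 16 lanes at 16 GT/s, still 128b/130b
print(round(link_bandwidth_gbytes(16, 16, 128, 130), 2))  # 31.51
# SATA 6Gb/s: 1 lane, 8b/10b encoding
print(round(link_bandwidth_gbytes(1, 6, 8, 10), 2))       # 0.6
```

Swapping in different lane counts, clock rates, or encodings (for example 128b/132b) makes it easy to compare the interfaces discussed in this thread on the same footing.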

-

Paul Alcorn > jasonf2 said: So what is the point to continued evolution of SATA? SAS I can see with large data center deployments of drives...

We have, in fact, seen the last generation of SATA. The committee in charge of the spec has indicated that the increase in power for a faster interface is simply not tenable, and that there is no real way around it, short of creating a new spec. SATA is done; it will not move forward with new revisions, which will push us to PCIe.

I agree that the end game is the memory bus, but industry support is, and will continue to be, slow, at least until there are non-proprietary standards.

-

Paul Alcorn > MRFS said: Very good answer: thanks.

Those aren't technically U.2 ports; they are Mini-SAS HD connectors repurposed to carry PCIe. There is no problem with that, but it is noteworthy because they can also carry SAS or SATA, making a nice triple play for a single port.

Most of the M.2 x4 carrier cards, which aggregate multiple M.2 SSDs into a single device, are designed by Liqid, which is partially owned by Phison.

The Highpoint solution isn't unique and is probably built using either a PMC-Sierra (now Microsemi) or an LSI (now Broadcom) RAID-on-chip (ROC). Both companies have already announced their hardware-RAID-capable NVMe/SAS/SATA ROCs and adapters.

http://www.tomsitpro.com/articles/broadcom-nvme-raid-hba-ssd,1-3165.html

http://www.tomsitpro.com/articles/pmc-flashtec-nvme2016-nvme2032-ssd,1-2798.html

-

jasonf2 My overall point has very little to do with bandwidth itself. It is an issue of latency. More PCIe lanes won't fix this; they actually make it worse. Conventional RAM isn't just able to move large blocks of data; it can access and write them in a fraction of the time flash needs, and much faster than the PCI bus allows. The new quasi-NVRAM that Intel/Micron is bringing forward promises considerably faster access times. The further any device sits from the CPU, the more latency comes into play. So putting the PCI bus into the system as an intermediate just negates the ability of the low-latency memory to do its thing. This is why you would never see the RAM system operating on top of the PCI bus. While it might be technically possible, it would significantly bottleneck the computer. Putting NVRAM on the PCI bus is pretty much the same thing, in my opinion.

Data center and business-class work has a distinct need for data safeguarding and redundancy (i.e., RAID and crypto) and will be at least partially stuck on NVMe for a while. First-gen enthusiast class probably isn't going to have RAID in the controller, but it will be very fast on the RAM bus. On the other hand, I would not be at all surprised if later Xeon implementations with an enhanced memory controller end up with at least some RAID-like capability, possibly with crypto. (I don't think RAID will apply here, as Redundant Array of Inexpensive Disks will be more like Redundant Array of Very Expensive Memory Modules.) Any additional controllers and buses put between this and RAM itself that aren't necessary are just bottlenecks. Anything PCI just throws an IRQ polling request into the mix, along with some memory-to-PCI interface controller, to slow things down. Think homogeneous RAM and storage memory-type hybrids that have no need to move large memory blocks into RAM cache from slow storage, because storage is directly addressed by the CPU.

This has some caveats with XPoint, due to it still having a limited write cycle count. But mixing static RAM and XPoint in a hybrid situation is going to really change things. No matter what, though, motherboard and memory replacement will not be optional for future upgrades at this level, just like RAM is today. Again, kudos to Intel. -

MRFS > the increase in power for a faster interface is simply not tenable

In my professional opinion, that's BS.

Here's why:

We now have 12G SAS and 10G USB 3.1.

The latter also implements a 128b/132b jumbo frame.

The PCIe 4.0 spec calls for a 16G clock.

Well, if a motherboard trace can oscillate at 16G,

a flexible wire can also oscillate at 16G. DUUH!!

I think that "committee" is full of themselves.

They crowded behind SATA-Express and

now they refuse to admit it's DOA.