Move Over GPUs: Startup's Chip Claims to Do Deep Learning Inference Better

Habana Labs, a startup that came out of “stealth mode” this week, announced a custom chip that it says delivers much higher machine learning inference performance than GPUs.

Habana Goya Specifications

According to the startup, its Goya chip is designed from scratch for deep learning inference, unlike GPUs or other types of chips that have been repurposed for this task. The chip’s die is composed of eight VLIW Tensor Processing Cores (TPCs), each with its own local memory as well as access to shared memory. External memory is accessed through a DDR4 interface. The processor supports the FP32, INT32, INT16, INT8, UINT32, UINT16 and UINT8 data types.

The Goya chip supports all the major machine learning software frameworks, including TensorFlow, MXNet, Caffe2, Microsoft Cognitive Toolkit, PyTorch and the Open Neural Network Exchange Format (ONNX). After a trained neural network model is loaded, the chip converts it to an internal format that is better optimized for its hardware.

Models for vision, neural machine translation, sentiment analysis and recommender systems have been executed on the Goya chip, and Habana said that the processor should handle all sorts of inference workloads and application domains.

Goya Performance

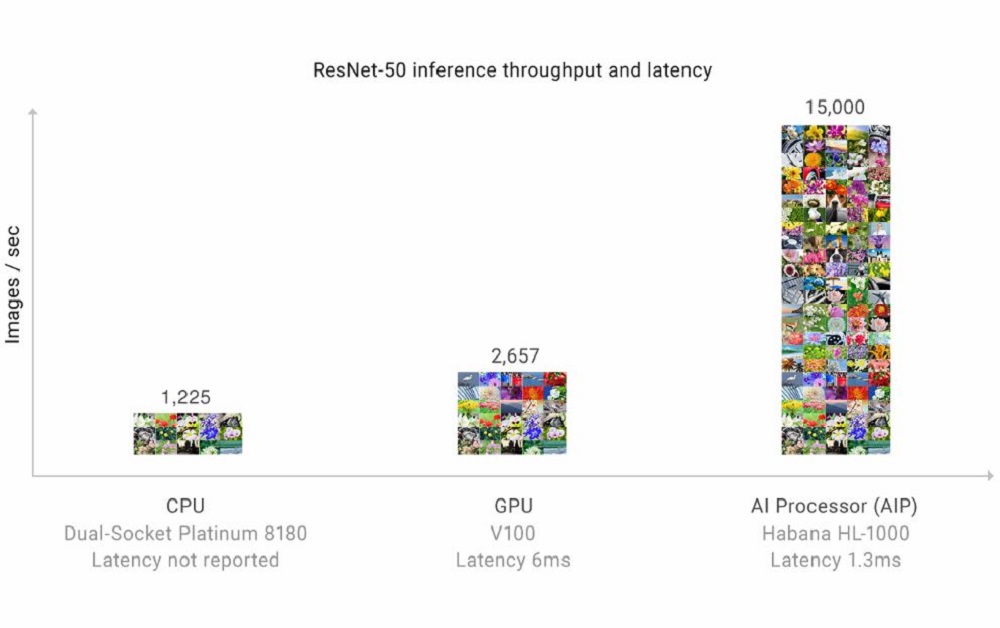

Habana says the Goya chip processes 15,000 ResNet-50 images/second at a batch size of 10 with a latency of 1.3ms, while drawing only 100W. In comparison, Nvidia’s V100 GPU reached 2,657 images/second.

A dual-socket Xeon 8180 system managed even less: 1,225 images/second. According to Habana, when using a batch size of one, the Goya chip can handle 8,500 ResNet-50 images/second with a latency of 0.27ms.
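To put the quoted figures side by side, the short Python sketch below computes the throughput ratios, Goya's images per second per watt, and the number of batches that would have to be in flight at once for the quoted throughput and latency to be consistent. All inputs are the numbers quoted above. The function and variable names are illustrative, and the in-flight estimate is only an application of Little's law, not something Habana has stated about its pipeline.

```python
# Figures quoted in the article (ResNet-50 inference, images/second).
THROUGHPUT = {
    "Goya (batch 10)": 15_000,
    "Goya (batch 1)": 8_500,
    "Nvidia V100": 2_657,
    "2x Xeon 8180": 1_225,
}
GOYA_POWER_W = 100  # the only power figure quoted in the article


def speedup(a: str, b: str) -> float:
    """Throughput of system a divided by throughput of system b."""
    return THROUGHPUT[a] / THROUGHPUT[b]


def images_per_watt(images_per_s: float, watts: float) -> float:
    """Throughput normalized by power draw."""
    return images_per_s / watts


def implied_batches_in_flight(images_per_s: float, batch_size: int,
                              latency_s: float) -> float:
    """Little's law: items in flight = throughput * latency.

    Dividing by the batch size gives the number of batches that must
    overlap for the quoted throughput and latency to both hold.
    """
    return images_per_s * latency_s / batch_size


if __name__ == "__main__":
    print(f"Goya vs V100: {speedup('Goya (batch 10)', 'Nvidia V100'):.2f}x")
    print(f"Goya vs Xeon: {speedup('Goya (batch 10)', '2x Xeon 8180'):.2f}x")
    print(f"V100 vs Xeon: {speedup('Nvidia V100', '2x Xeon 8180'):.2f}x")
    print(f"Goya images/s/W: "
          f"{images_per_watt(THROUGHPUT['Goya (batch 10)'], GOYA_POWER_W):.0f}")
    print(f"Batches in flight (batch 10): "
          f"{implied_batches_in_flight(15_000, 10, 1.3e-3):.2f}")
    print(f"Batches in flight (batch 1): "
          f"{implied_batches_in_flight(8_500, 1, 0.27e-3):.2f}")
```

On these numbers Goya comes out at roughly 5.6 times the V100 figure and about 12 times the Xeon figure, and both Goya results imply about two batches being processed concurrently.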

This level of inference performance comes from the chip’s architecture, mixed-format quantization, a proprietary graph compiler and software-based memory management.
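Habana has not published how its mixed-format quantization works, so the sketch below is not its method. It shows generic symmetric per-tensor INT8 quantization in plain Python, purely to illustrate what mapping FP32 values onto one of the chip's integer data types involves and where the rounding error comes from. The function names and sample weights are made up for the example.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to the INT8 range.

    The largest magnitude in the tensor maps to 127; every other value
    is scaled by the same factor and rounded to the nearest integer.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map INT8 values back to approximate floats."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only to show the effect of rounding.
weights = [0.82, -1.27, 0.003, 0.41, -0.096]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

if __name__ == "__main__":
    print("scale:", scale)
    print("int8 :", q)
    print("max abs error:", max_error)
```

The rounding error per value is bounded by half the scale, so small weights such as 0.003 collapse to zero. Whether that costs accuracy in practice depends on the network, which is why mixing formats (keeping some tensors in wider types) is a common mitigation.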


Habana intends to reveal a deep learning training chip, called Gaudi, to pair with its Goya inference processor. Gaudi will use the same VLIW core as Goya and will be software-compatible with it. The 16nm Gaudi chip will start sampling in Q2 2019.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

-

bit_user Something seems wrong with their benchmark, if a V100 only rates 2x as fast as Intel Xeon. I'm skeptical even 56 Xeon cores would be that fast.

Anyhow, V100 is old news. Turing is yet 2x to 4x faster, still. -

alextheblue

21334433 said: Something seems wrong with their benchmark, if a V100 only rates 2x as fast as Intel Xeon. I'm skeptical even 56 Xeon cores would be that fast. Anyhow, V100 is old news. Turing is yet 2x to 4x faster, still.

I can't help but think this wouldn't be a match for Nvidia's tensor cores if they (Nvidia) built a chip that was basically a big 100W Tensor block. But maybe I'm wrong. As of today though this Habana design does have the best performance in that power envelope. Of course, that's all just on paper. -

WINTERLORD so my next motherboard will have one of these for realtime raytracing. kinda makes me wonder now what amd will offer in the future. -

bit_user

21334801 said: As of today though this Habana design does have the best performance in that power envelope. Of course, that's all just on paper.

You're declaring a winner based on just one benchmark and so little other information?

Anyway, according to this, Nvidia claims 6,275 images/sec with a single V100:

https://images.nvidia.com/content/pdf/inference-technical-overview.pdf

But that really doesn't tell us how well their architecture performs on different types of networks. The fact that it seems to rely on internal memory means it probably hits a brick wall, as you increase network size and complexity. -

bit_user Okay, looking at that Nvidia doc some more, I think I know what Habana did. They probably found the latency which was the sweet spot for their chip, and then looked at the closest V100 result below that.

However, what that means is if you increase the batch size to where V100 does well, you're probably past the point where the Goya's internal memory is exhausted.

AFAIK, I don't think 6 ms is some kind of magic latency number, for the cloud (where these chips would be used). So, it's probably better to compare Goya's best throughput against the V100's best throughput.

But we're overlooking something. Goya is clearly using integer arithmetic to hit that number, while the V100 is using fp16. What they don't say is the hit you take on accuracy from using their 8-bit approximation. And if you're going to use 8-bit, then you can use Nvidia's new TU102 and probably get nearly the same performance (should be about 2x of the V100's fp16 throughput, according to Nvidia's numbers). -

bit_user

21334801 said: I can't help but think this wouldn't be a match for Nvidia's tensor cores if they (Nvidia) built a chip that was basically a big 100W Tensor block.

First, Nvidia's GPUs rely on the CUDA cores to drive the Tensor cores. I'm not sure how many CUDA cores you can remove before impacting Tensor core performance, but the answer might be that they're already optimally balanced. That means all you could really remove is the fp64 support and the graphics blocks. That said, I keep wondering if you couldn't use the texture units for on-the-fly weight decompression.

The CUDA cores are also valuable for implementing various layer types that can't simply be modeled with tensor products. -
