Intel Gen12 Graphics Linux Patches Reveal New Display Feature for Tiger Lake (Update)

Update, 9/7/19, 4:38 a.m. PT: Some extra information about Gen12 has come out. According to a GitHub merge request, Gen12 will bring one of the biggest ISA updates in the history of the Gen architecture, along with the removal of hardware-guaranteed data coherency between register reads and writes: “Gen12 is planned to include one of the most in-depth reworks of the Intel EU ISA since the original i965. The encoding of almost every instruction field, hardware opcode and register type needs to be updated in this merge request. But probably the most invasive change is the removal of the register scoreboard logic from the hardware, which means that the EU will no longer guarantee data coherency between register reads and writes, and will require the compiler to synchronize dependent instructions anytime there is a potential data hazard.” Twitter user @miktdt also noted that Gen12 will double the number of EUs per subslice from 8 to 16, which likely helps with scaling up the architecture.
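The merge request's point about compiler-side synchronization can be illustrated with a toy dependency check. The sketch below is not Intel's ISA or the actual compiler pass: the `Instr` record, the register names and `find_hazards` are hypothetical, and the check is deliberately conservative (it compares every pair of instructions). It only shows the kinds of hazards a compiler would have to find and synchronize once the hardware scoreboard is gone.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Instr:
    """Hypothetical instruction: one optional destination, any number of sources."""
    name: str
    dst: Optional[str]
    srcs: Tuple[str, ...] = ()


def find_hazards(program: List[Instr]) -> List[Tuple[int, int, str]]:
    """Return (earlier_index, later_index, kind) for every register hazard.

    Conservative on purpose: every earlier instruction is checked against
    every later one, without tracking intervening writes.
    """
    hazards = []
    for j, later in enumerate(program):
        for i in range(j):
            earlier = program[i]
            # Read-after-write: later reads what earlier writes.
            if earlier.dst is not None and earlier.dst in later.srcs:
                hazards.append((i, j, "RAW"))
            # Write-after-write: both write the same register.
            if earlier.dst is not None and earlier.dst == later.dst:
                hazards.append((i, j, "WAW"))
            # Write-after-read: later overwrites what earlier still reads.
            if later.dst is not None and later.dst in earlier.srcs:
                hazards.append((i, j, "WAR"))
    return hazards


# Made-up four-instruction program using made-up register names.
program = [
    Instr("mul", "r2", ("r0", "r1")),
    Instr("add", "r3", ("r2", "r4")),  # reads r2 written by mul
    Instr("mov", "r0", ("r5",)),       # overwrites r0 that mul reads
    Instr("mov", "r3", ("r6",)),       # overwrites r3 written by add
]
hazards = find_hazards(program)
```

With a hardware scoreboard, the EU would stall on these dependencies by itself; without one, each reported pair is a place where the compiler would need to insert some form of synchronization.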

Original Article, 9/6/19, 9:34 a.m. PT:

Some information about the upcoming Gen12 (aka Xe) graphics architecture from Intel has surfaced via recent Linux kernel patches. In particular, Gen12 will have a new display feature called the Display State Buffer. This engine would improve Gen12 context switching.

Phoronix reported on the patches on Thursday. The patches provide clues about the new Display State Buffer (DSB) feature of the Gen12 graphics architecture, which will find its way to Tiger Lake (and possibly Rocket Lake) and the Xe discrete graphics cards in 2020. In the patches, DSB is generically described as a hardware capability that will be introduced in the Gen12 display controller. This engine will only be used for some specific scenarios for which it will deliver performance improvements, and after completion of its work, it will be disabled again.

Some additional (technical) documentation of the feature is available, but the benefits of the DSB are described as follows: “[It] helps to reduce loading time and CPU activity, thereby making the context switch faster.” In other words, it is a new engine that offloads some work from the CPU and helps to improve context switching time.

Of course, the bigger picture here is the enablement for Gen12 that has been going on in the Linux kernel (similar to Gen11), which is especially of interest given that it will mark the first graphics architecture from Intel to get released as a discrete GPU. To that end, Phoronix reported in June that the first Tiger Lake graphics driver support was added to the kernel, with more batches in August.

Tiger Lake and Gen12 Graphics: What we know so far

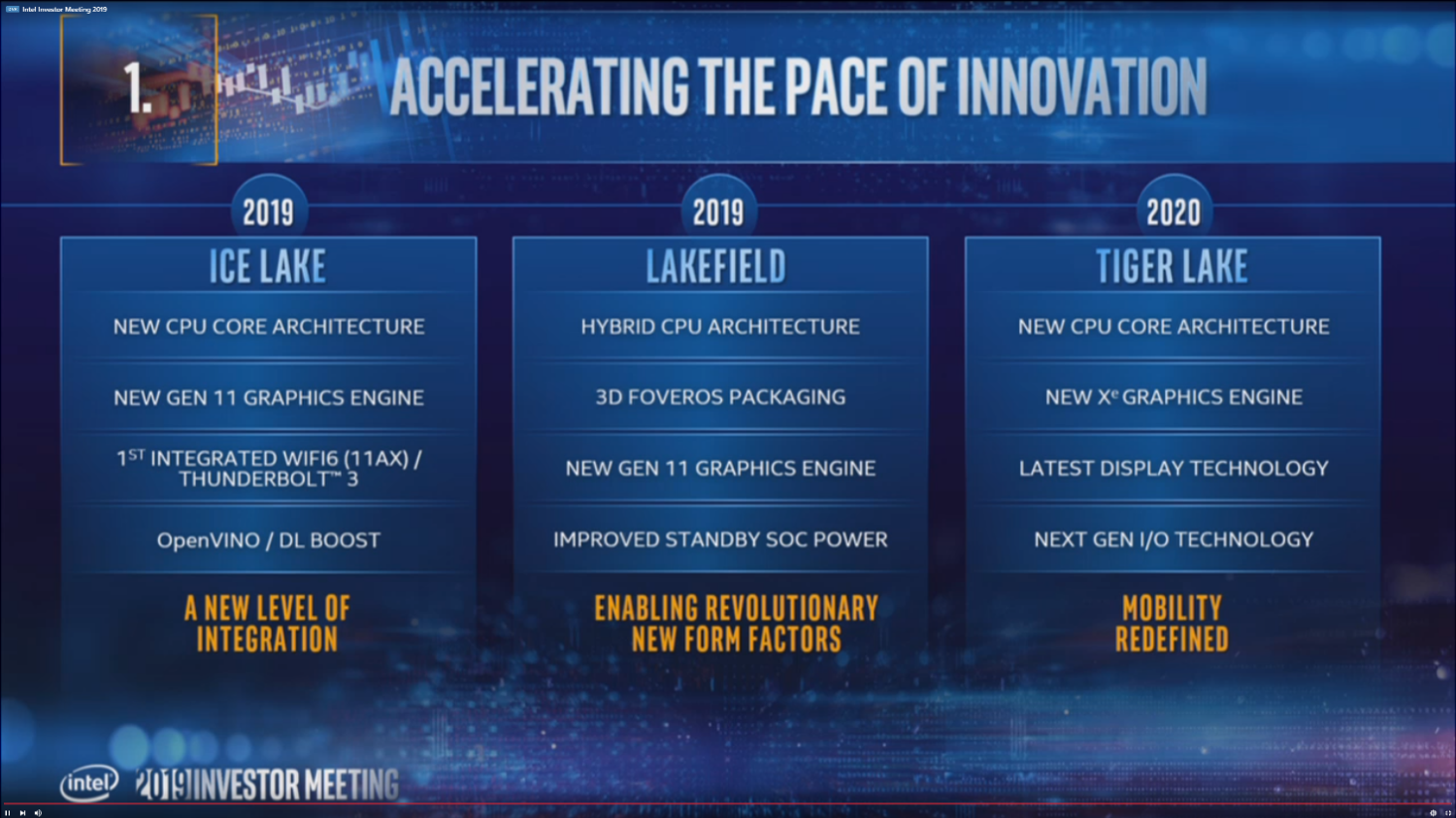

With the first 10th Gen (10nm) Ice Lake laptops nearly getting into customers’ hands after almost a year of disclosures, Intel has already provided some initial information about what to expect for the 11th-Gen processors next year, codenamed Tiger Lake (with Rocket Lake on 14nm still in the rumor mill). Ice Lake focused on integration and a strong CPU and GPU update, and with the ‘mobility redefined’ tag line, Tiger Lake looks to be another 10nm product solely for the mobile market.


On the CPU side, Tiger Lake will incorporate the latest Willow Cove architecture. Intel has said that it will feature a redesigned cache, transistor optimizations for higher frequency (possibly 10nm++), and further security features.

While the company has been teasing its Xe discrete graphics cards for even longer than it has talked about Ice Lake, details remain scarce. Intel said it had split the Gen12 (aka Xe) architecture into two microarchitectures, one that is client optimized and another that is data center optimized, intending to scale from teraflops to petaflops. According to a couple of leaks from 2018, the Arctic Sound GPU would consist of a multi-chip package (MCP) with 2-4 dies (likely using EMIB for packaging) and was targeted for qualification in the first half of next year. The leak also stated that Tiger Lake would incorporate power management from Lakefield.

The MCP rumor is also corroborated by some recent information from an Intel graphics driver, with the (Discrete Graphics) DG2 family coming in variants of what is presumably 128, 256 and 512 execution units (EUs). This could indicate one, two and four chiplet configurations of a 128EU die. Ice Lake’s integrated graphics (IGP) has 64EUs, and the small print from Intel’s Tiger Lake performance numbers revealed that it would have 96EUs.

A GPU with 512EUs would have in the neighborhood of 10 TFLOPS, which does not look sufficient to compete with 2020 GPU offerings from AMD and NVIDIA in the high-end space. However, not all the gaps are filled yet. A summary chart posted by @KOMACHI_ENSAKA talks about three variants of Gen12:

- Gen12 (LP) in DG1, Lakefield-R, Ryefield, Tiger Lake, Rocket Lake and Alder Lake (the successor of Tiger Lake)

- Gen12.5 (HP) in Arctic Sound

- Gen12.7 (HP) in DG2

How those differ is still unclear. To speculate, the regular Gen12 probably refers simply to the integrated graphics in Tiger Lake and other products. However, the existence of DG1 and information about Rocket Lake could indicate that Intel has also put this IP in a discrete chiplet. This chiplet could then serve as the graphics for Rocket Lake by packaging it together via EMIB. If we assume Arctic Sound is the mainstream GPU, then Gen12.5 would refer to the client optimized version and Gen12.7 to the data center optimized version of Xe. In that case, the number of EUs Intel intends to offer to the gaming community remains unknown.
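As a rough sanity check on the roughly 10 TFLOPS figure cited above for a 512EU part, peak FP32 throughput can be estimated from EU count and clock speed. This is only a sketch: it assumes 8 FP32 lanes per EU with a fused multiply-add counted as two operations, as in earlier Gen EUs, and a hypothetical 1.2 GHz clock. Neither number has been confirmed for Gen12.

```python
def peak_fp32_tflops(eus, clock_ghz, lanes_per_eu=8, flops_per_lane=2):
    """Estimate peak FP32 throughput in TFLOPS.

    lanes_per_eu and flops_per_lane are assumptions carried over from
    earlier Gen EUs (8 FP32 lanes, FMA counted as 2 FLOPs per lane).
    """
    flops_per_clock = eus * lanes_per_eu * flops_per_lane
    gflops = flops_per_clock * clock_ghz  # GHz times FLOPs per clock gives GFLOPS
    return gflops / 1000.0


# Assumed 1.2 GHz clock for every configuration (not a confirmed spec).
ASSUMED_CLOCK_GHZ = 1.2
estimates = {eus: peak_fp32_tflops(eus, ASSUMED_CLOCK_GHZ) for eus in (96, 128, 256, 512)}

for eus, tflops in estimates.items():
    print(f"{eus:>3} EUs at {ASSUMED_CLOCK_GHZ} GHz: about {tflops:.2f} TFLOPS")
```

Under these assumptions the 512EU configuration lands just under 10 TFLOPS, and the estimate scales linearly with both EU count and clock, so a higher-clocked part would move the figure accordingly.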

Moving to the display, it remains to be seen if the Display State Buffer is what Intel referred to with the ‘latest display technology’ bullet point, or if the DSB is just one of multiple new display improvements. Tiger Lake will also feature next-gen I/O, likely referring to PCIe 4.0.

Given the timing of Ice Lake and Comet Lake, Tiger Lake is likely set for launch in the second half of next year.

Display Improvements in Gen11 Graphics Engine

With display being one of the key pillars of Tiger Lake, it is worth recapping the big changes in Gen11’s display block (we covered the graphics side of Gen11 previously).

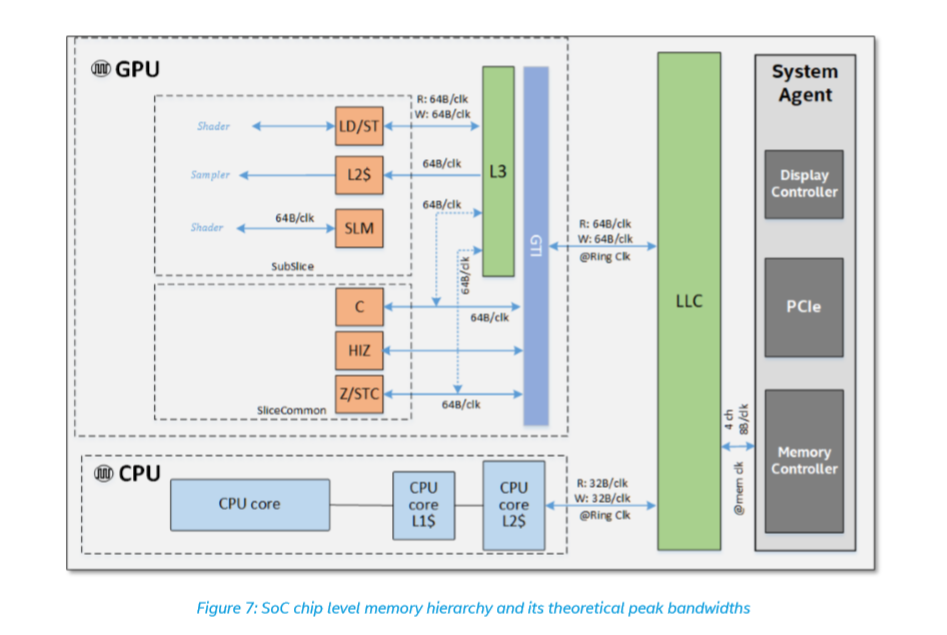

As the name implies, the display controller controls what is displayed on the screen. In Ice Lake’s Gen11, it is part of the system agent, and it received some hefty improvements. The Gen11 display engine introduced support for Adaptive-Sync (variable display refresh rate technology) as well as HDR and a wider color gamut. The Gen11 platform also integrated the USB Type-C subsystem: the display controller has specific outputs for Type-C and can also target the Thunderbolt controller.

Intel also introduced some features for power management, most notably Panel Self Refresh (PSR), a technology first introduced in the smartphone realm. With PSR, a copy of the last frame is stored in a small frame buffer in the display. When the screen is static, the panel refreshes from the locally stored image, which allows the display controller to go to a low power state. As another power-saving feature, Intel added a buffer to the display controller into which pixels for the screen are fetched. This allows the display engine to concentrate its memory accesses into a burst and shut down the rest of the display controller in the meantime. This is effectively a form of race to halt, reminiscent of the duty cycle Intel introduced in Broadwell’s Gen8 graphics engine.

Lastly, on the performance side, in response to the increasing monitor resolutions, the display controller now has a two-pixel-per-clock pipeline (instead of one). This reduces the required clock rate of the display controller by 50%, effectively trading die area for power efficiency (since transistors are more efficient at lower clocks as voltage is reduced). Additionally, the pipeline has also gained in precision in response to HDR and wider color gamut displays. The Gen11 controller now also supports a compressed memory format generated by the graphics engine to reduce bandwidth.
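The 50% figure follows directly from the pixel rate a display needs. The sketch below works through the arithmetic for a 3840x2160 panel at 60 Hz. The function name and the choice to ignore blanking intervals by default are simplifying assumptions, so the absolute numbers are lower bounds and not actual Gen11 clock specifications.

```python
def required_clock_mhz(width, height, refresh_hz, pixels_per_clock, blanking_overhead=0.0):
    """Minimum pipeline clock (MHz) needed to push every pixel of every frame.

    blanking_overhead is a fractional allowance for blanking intervals
    (0.0 ignores them, which is a simplification).
    """
    pixel_rate = width * height * refresh_hz * (1.0 + blanking_overhead)
    return pixel_rate / pixels_per_clock / 1e6


# Same 4K60 panel, one-pixel vs two-pixel-per-clock pipeline.
one_ppc = required_clock_mhz(3840, 2160, 60, pixels_per_clock=1)
two_ppc = required_clock_mhz(3840, 2160, 60, pixels_per_clock=2)

print(f"1 pixel/clock:  {one_ppc:.1f} MHz")
print(f"2 pixels/clock: {two_ppc:.1f} MHz")
```

Because dynamic power scales roughly with frequency times voltage squared, halving the clock also leaves room to lower the voltage, which is where most of the efficiency gain described above would come from.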

Comments

bit_user

> Tiger Lake will also feature next-gen I/O, likely referring to PCIe 4.0.

I'm guessing that's referring to Thunderbolt 3, DisplayPort 2.0, and maybe HDMI 2.1.

None of the roadmap leaks or info on LGA-1200 mention any client CPUs having PCIe 4.0 this year or next.

Unolocogringo

This is also not Intel's first foray into the discrete graphics market. The 740 was dismal.

bit_user

> Unolocogringo said: This is also not Intel's first foray into the discrete graphics market. The 740 was dismal.

That was 20 years ago!

AMD still made uncompetitive CPUs as recently as 3 years ago, and look at them now!

It's a lot more informative to look at the strides Intel has made in their integrated graphics. They're hardly starting from zero, this time around.

NightHawkRMX

They are late to the PCIe 4.0 party. It's not even remotely surprising they would try to incorporate it.

> bit_user said: None of the roadmap leaks or info on LGA-1200 mention any client CPUs having PCIe 4.0 this year or next.

And at one point their roadmaps also showed Intel would be manufacturing 7nm nearly 3 years ago. And we're still on 14nm for the desktop with just mediocre 10nm mobile processors...

https://www.anandtech.com/show/13405/intel-10nm-cannon-lake-and-core-i3-8121u-deep-dive-review/2

At the end of the day, they are projections. They can be, and usually are, wrong, especially when they look many months or years in the future, just like the slide above.

bit_user

> remixislandmusic said: And at one point their roadmaps also showed Intel would be manufacturing 7nm nearly 3 years ago. And we're still on 14nm for the desktop with just mediocre 10nm mobile processors...

It's understandable when they under-deliver on their roadmap promises, because technology is actually hard, sometimes.

However, what you're saying is that they're going to over-deliver on their roadmap promises. It could happen, but I don't think they've had a track record of doing that.

> remixislandmusic said: At the end of the day, they are projections. They can be, and usually are, wrong, especially when they look many months or years in the future, just like the slide above.

They're not projections as in stock market or weather forecasts - these are their plans that they're communicating to partners and customers! And work that's not planned doesn't usually get done. Furthermore, features that their partners weren't expecting wouldn't necessarily get enabled or properly supported.

NightHawkRMX

> bit_user said: They're not projections as in stock market or weather forecasts - these are their plans that they're communicating to partners and customers! And work that's not planned doesn't usually get done.

Correct. I'm just reiterating Intel.

It says in that corner of the slide pictured, "projected".

Unolocogringo

> bit_user said: That was 20 years ago!
>
> AMD still made uncompetitive CPUs as recently as 3 years ago, and look at them now!
>
> It's a lot more informative to look at the strides Intel has made in their integrated graphics. They're hardly starting from zero, this time around.

They also started project Larrabee, but no consumer devices were sold.

So I did not count it.

Just stating that this is their third attempt to enter the discrete GPU market; the first 2 attempts were complete failures.

The article states this is their first.

bit_user

> Unolocogringo said: They also started project Larrabee, but no consumer devices were sold.
>
> So I did not count it.

Yeah, forcing x86 into GPUs was an exercise in trying to fit a square peg into a round hole.

> Unolocogringo said: Just stating that this is their third attempt to enter the discrete GPU market; the first 2 attempts were complete failures.

To be fair, there were a lot of 3D graphics chips, back then - S3, 3D Labs, Matrox, Rendition, Tseng, Cirrus Logic, Number Nine, PowerVR, 3DFX, and of course ATI and Nvidia. Plus, even a few more I'm forgetting. Most weren't very good. Even Nvidia's NV1 could be described as a failure.

From the sound of it, the i740 was far from the worst. Perhaps it just didn't meet with the level of success that Intel was used to. Being a late entrant to a crowded market surely didn't help.

http://www.vintage3d.org/i740.php#sthash.e4kIOqFj.MxbFM9tE.dpbs

> Unolocogringo said: The article states this is their first.

Ah, I had missed that.

GetSmart

> bit_user said: To be fair, there were a lot of 3D graphics chips, back then - S3, 3D Labs, Matrox, Rendition, Tseng, Cirrus Logic, Number Nine, PowerVR, 3DFX, and of course ATI and Nvidia. Plus, even a few more I'm forgetting. Most weren't very good. Even Nvidia's NV1 could be described as a failure.
>
> From the sound of it, the i740 was far from the worst. Perhaps it just didn't meet with the level of success that Intel was used to. Being a late entrant to a crowded market surely didn't help.
>
> http://www.vintage3d.org/i740.php#sthash.e4kIOqFj.MxbFM9tE.dpbs
>
> Ah, I had missed that.

And Intel's i740 was not the last Intel discrete GPU in that era; there was also the Intel i752, which never made it to mass market. As for the worst, probably Cirrus Logic Laguna3D, which despite using RDRAM only lasted a single generation. Others, like the Trident 3DImage series, were very buggy, and NEC PowerVR had quirky rendering. Companies like Tseng, along with Weitek and Avance Logic (Realtek), never made it into the 3D acceleration segment. Also, NVidia's NV1 quadratic texturing technology made its way into Sega's Saturn console.

kinggremlin

Hasn't it been rumored for a while that Intel would skip PCIE 4.0 and go straight to 5.0 which is only a year behind 4.0? With so little benefit of 4.0 right now to most of the market, would seem a waste of time to implement it when it will already be outdated a year later without ever having been any real use.