Microsoft Uses Boiling Liquid to Cool Data Center Servers

Boiling Liquid promises to be better in every way compared to air cooling

It probably won't make the list of best CPU coolers for end users anytime soon, but Microsoft's data center servers could be getting a massive thermal management upgrade in the near future. The software giant is testing a radical new cooling technology based on boiling liquid, known as two-phase immersion cooling, which promises higher performance, better reliability, and lower maintenance costs than the air cooling systems data centers use today.

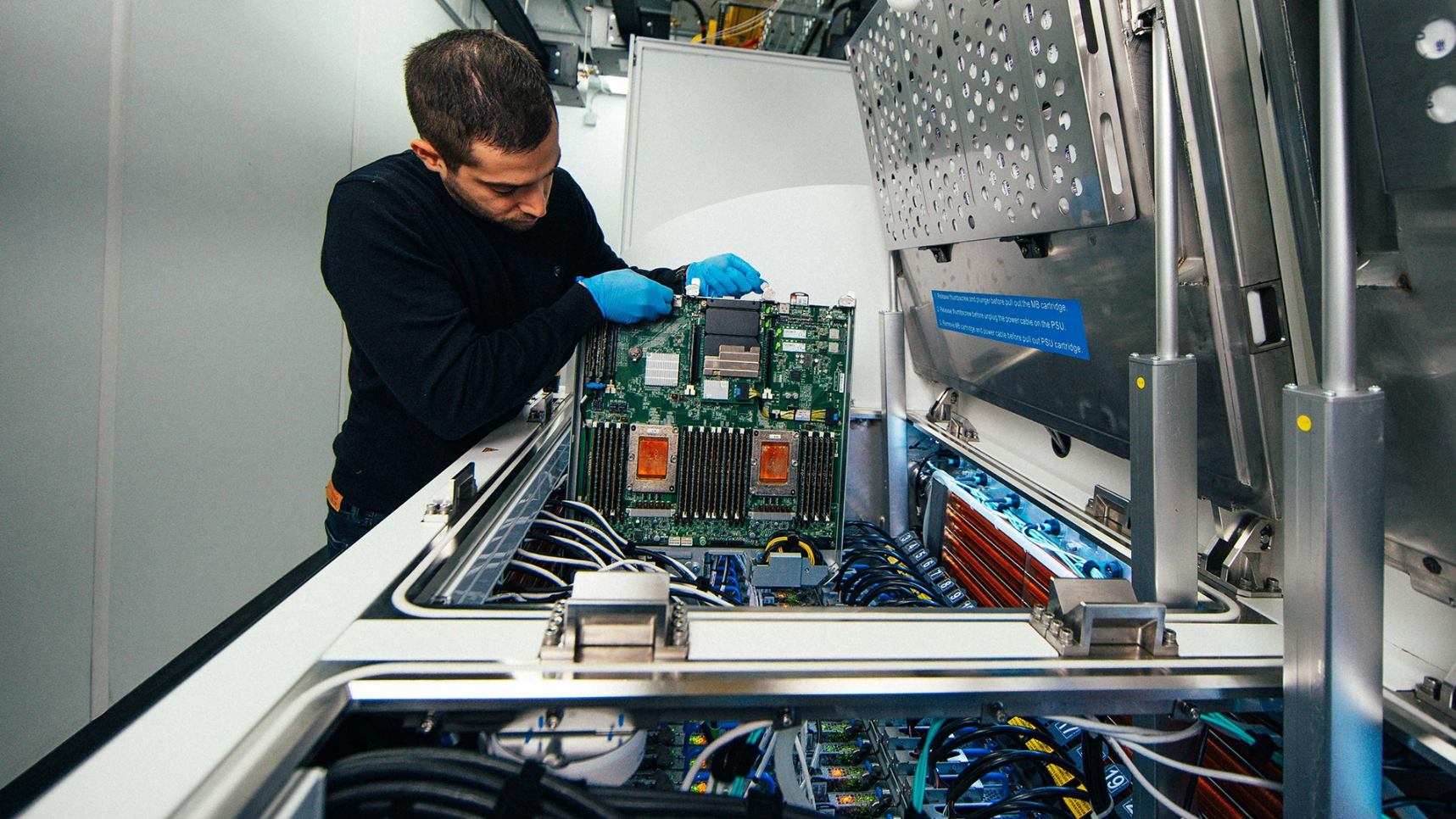

Servers equipped with this prototype cooling system look much like mineral oil PCs, if you've ever seen one: dozens of server blades are packed tightly together in a tank, fully submerged in boiling liquid. The liquid is, of course, non-conductive, so the servers can operate safely while immersed.

The liquid is a special fluid of undisclosed composition that boils at 122 degrees Fahrenheit (50 degrees Celsius), 90 degrees Fahrenheit lower than the boiling point of water. That low boiling point is what drives heat away from critical components: the fluid boils at temperatures the chips readily reach under load, and turning liquid into vapor absorbs a large amount of heat. The vapor then rises to the surface, where it contacts cooled condensers in the tank's lid, condenses back into liquid, and returns to the bath.

Effectively, this system is one gigantic vapor chamber. Both rely on phase change: a fluid evaporates where it absorbs heat from system components and condenses where it gives that heat up, whether at a heatsink or, in this case, a condenser.
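To see how phase change carries heat, here is a rough back-of-the-envelope sketch in Python. It assumes, purely for illustration, a latent heat of vaporization of about 100 kJ/kg, roughly the range of engineered dielectric fluids; Microsoft has not published its fluid's properties, and the `vapor_mass_rate` helper is hypothetical. It estimates how many grams of fluid per second a chip must boil off to shed its heat.

```python
# Rough estimate of how much fluid a chip boils off in a two-phase immersion tank.
# ASSUMPTION: ~100 kJ/kg latent heat of vaporization. This is an illustrative
# value, not a published figure for Microsoft's fluid.
ASSUMED_LATENT_HEAT_J_PER_KG = 100_000.0


def vapor_mass_rate(heat_watts: float,
                    latent_heat_j_per_kg: float = ASSUMED_LATENT_HEAT_J_PER_KG) -> float:
    """Return the mass of fluid (kg/s) that must vaporize to carry away
    heat_watts, assuming all of the heat goes into boiling the fluid
    (no sensible heating of the liquid)."""
    if latent_heat_j_per_kg <= 0:
        raise ValueError("latent heat must be positive")
    return heat_watts / latent_heat_j_per_kg


# A 300 W server CPU under the assumed latent heat.
rate_kg_s = vapor_mass_rate(300.0)
print(f"{rate_kg_s * 1000:.1f} g of fluid vaporized per second")  # 3.0 g ...
```

In steady state the condenser has to turn that same mass of vapor back into liquid every second, so the fluid is recycled rather than consumed.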

Death of Moore's Law Is to Blame

Microsoft says it is developing such a radically new cooling technology because of the rising power and heat demands of computer components, which are only going to get worse.

The software giant blames the death of Moore's Law for this: transistors on computer chips have become so small that they are approaching the atomic scale. Soon, it will be physically impossible to shrink transistors any further on a new process node.

To compensate, chipmakers have had to increase power consumption significantly to keep CPU performance climbing, largely by adding more and more cores to each CPU.

Microsoft notes that CPUs have increased from 150 watts to more than 300 watts per chip, and GPUs have increased to more than 700 watts per chip on average. Bear in mind that Microsoft is talking about server components, not consumer desktops, where even the best CPUs and best graphics cards tend to draw less power than that.
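A quick calculation shows what those per-chip figures add up to in a single machine. The sketch below uses a hypothetical server with two CPUs and eight GPUs (not a configuration Microsoft has described) and treats the quoted numbers as lower bounds; the `chip_heat_watts` helper is illustrative only.

```python
# Per-chip power figures Microsoft cites for server components (watts).
OLD_CPU_WATTS = 150
CPU_WATTS = 300   # "more than 300 watts", so treated as a lower bound
GPU_WATTS = 700   # "more than 700 watts", also a lower bound


def chip_heat_watts(num_cpus: int, num_gpus: int) -> int:
    """Lower-bound heat load (W) from CPUs and GPUs alone. Ignores memory,
    storage, networking and power-supply losses."""
    if num_cpus < 0 or num_gpus < 0:
        raise ValueError("chip counts must be non-negative")
    return num_cpus * CPU_WATTS + num_gpus * GPU_WATTS


print(CPU_WATTS / OLD_CPU_WATTS)  # 2.0: per-CPU power has at least doubled
# HYPOTHETICAL configuration: two CPUs and eight GPUs.
print(chip_heat_watts(2, 8))      # 6200
```

Every one of those watts ends up as heat that the cooling system has to remove, which is the load Microsoft expects air cooling to struggle with.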

As server components become more and more power-hungry, Microsoft believes this new liquid cooling solution will be necessary to keep server infrastructure costs down.

Boiling Liquid Is Optimized For Low Maintenance

Microsoft took inspiration from its data center server clusters operating on the seabed when developing the new cooling technology.

A few years ago, Microsoft launched Project Natick, a massive effort to put data centers underwater and use the surrounding seawater for cooling.

To do this, the server chambers were filled with dry nitrogen instead of oxygen-bearing air, and used cooling fans, a heat exchanger, and a specialized plumbing system that piped seawater through the cooling system.

What Microsoft learned was just how reliable these sealed, liquid-cooled servers could be: the servers on the seafloor experienced one-eighth the failure rate of replica servers on land with traditional air cooling.

Analysis indicates that the absence of humidity and of oxygen's corrosive effects was responsible for these servers' improved reliability.

Microsoft hopes its boiling liquid technology will have the same effects. If so, we could see a revolution in the data center world, with servers that are smaller and much more reliable. The new cooling system could also improve server performance.

Perhaps the boost algorithms found on Intel's and AMD's desktop platforms could be adopted in the server world, letting processors automatically hit higher clocks when they detect more thermal headroom.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

tennis2: I've seen this before... years ago.

Pretty sure this is it.

https://www.anandtech.com/show/12522/immersion-server-liquid-cooling-zte-makes-a-splash-at-mwc

ex_bubblehead: Old news. Cray was using a similar system decades ago to cool the XMP's/YMP's. They used freon, which bubbled like crazy when the CPU was running.

hotaru251: Der8auer also worked with a company at a show to show this type of stuff off (it was on consumer hardware).

Just hope that it becomes popular enough in servers to make it to mainstream users (as there are perks of having ur stuff submerged in liquid).

kanewolf, replying to ex_bubblehead:

The big difference between what Microsoft is doing and what Cray did is the closed loop that MS is doing. Crays had huge liquid-to-liquid heat exchangers that shed heat into conventional chilled-water systems. They were not known for energy efficiency :)

OriginFree, quoting gg83: "The enclosure looks like a giant computer pressure-cooker"

Exactly. Now what they need to do is to somehow attach a generator (like geothermal power generators) and they could have regenerative computing where the heat actually generates power to generate more heat.

Well, till the cooling system somehow gets a blue screen and melts down like a nuclear reactor.

coloradoleo76, quoting the article: "Both cooling systems rely on chemical reactions to bring heat from system components to cooling chambers, whether that be heatsinks or, in this case, a condenser."

Phase change is not a chemical reaction.

hotaru.hino, replying to OriginFree:

That does sound like a neat idea to reuse the waste heat from hardware. If they could pressurize the boiled medium, that could spin some turbines.