Nvidia's GTC 2015 Keynote: Titan X, Digits DevBox, Pascal, Drive PX, And Lots Of Deep Learning

At the opening keynote of GTC 2015, Nvidia Co-Founder and CEO Jen-Hsun Huang made several announcements, all of them related to deep learning and neural networks.

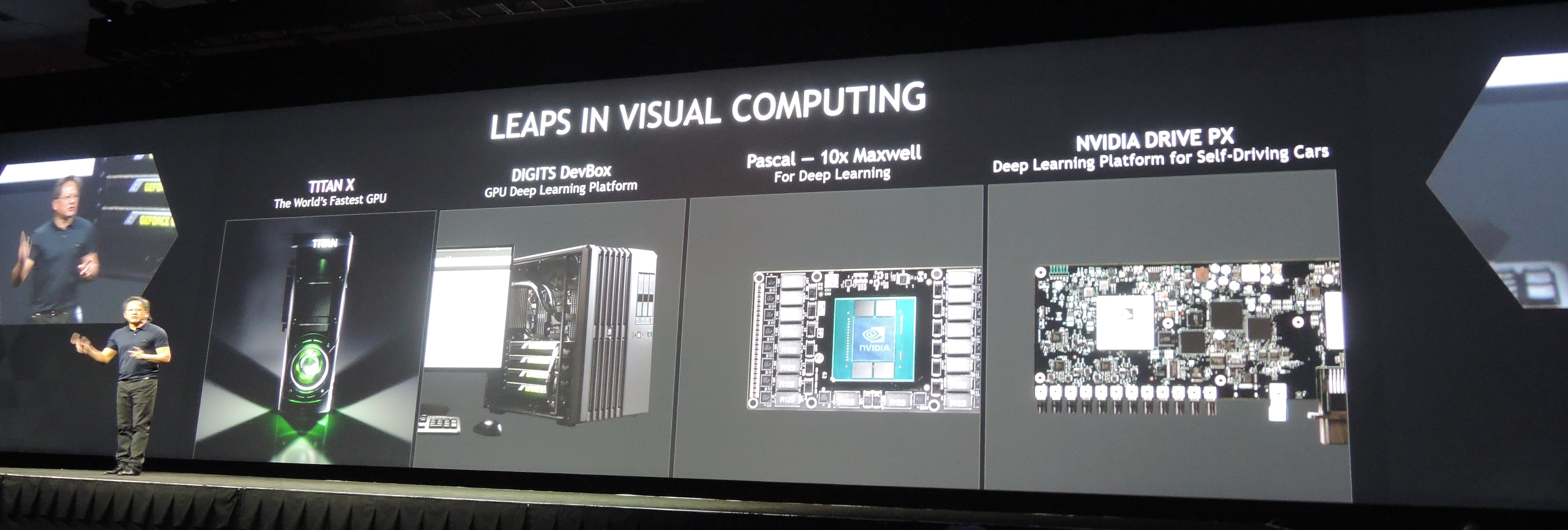

As at every past GTC, Nvidia's CEO Jen-Hsun opened the conference with a keynote, wearing a brown leather jacket. To kick things off, he mentioned that there were going to be four new announcements. These were "A New GPU and Deep Learning," "A Very Fast Box and Deep Learning," "Roadmap Reveal and Deep Learning," and "Self Driving Cars and Deep Learning." See a trend?

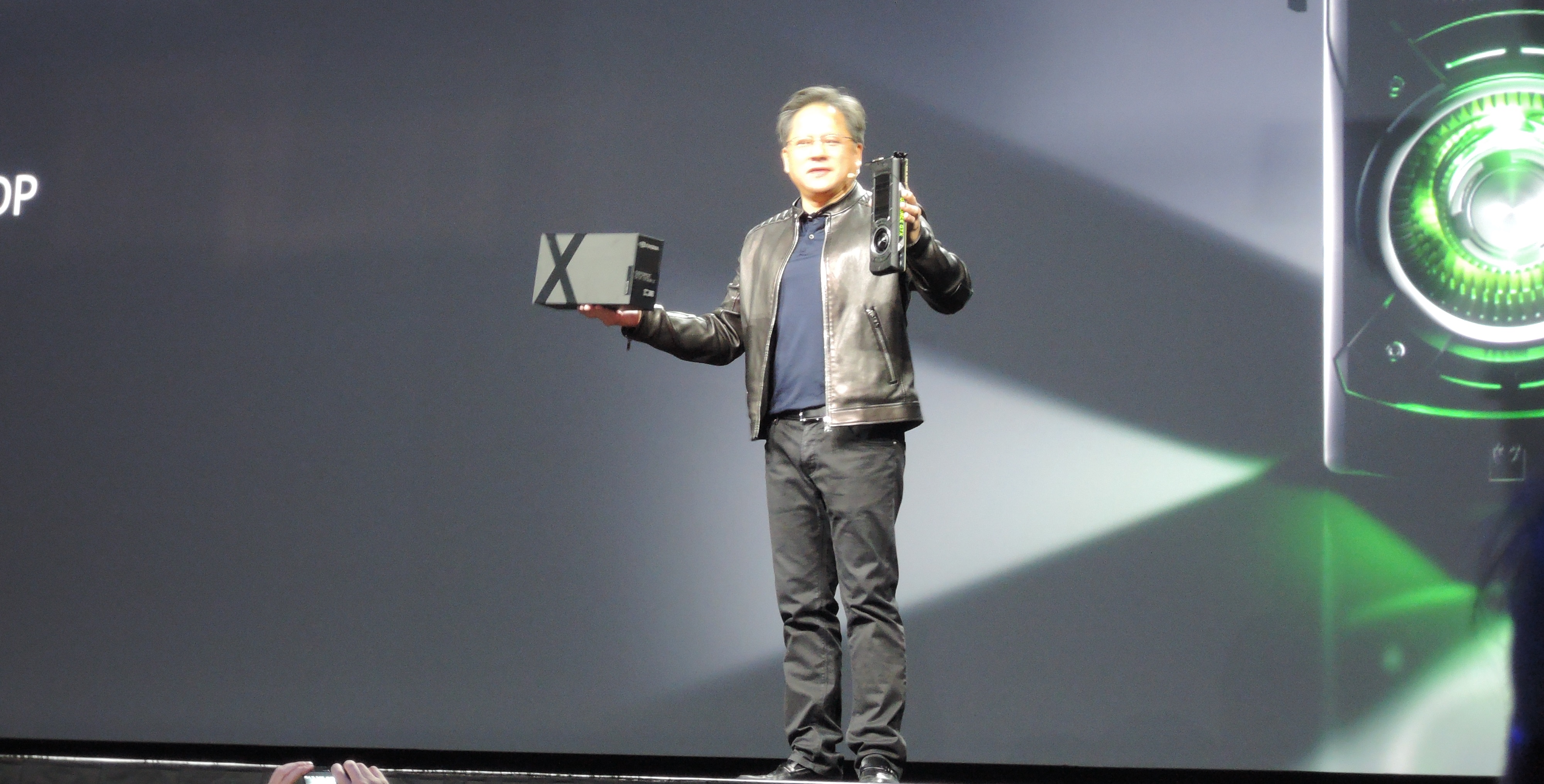

The GeForce GTX Titan X

The "New GPU" was the GTX Titan X, which was revealed two weeks ago at GDC. We already knew that the card has eight billion transistors and a Maxwell GPU, but we didn't know that it carries a grand total of 3072 CUDA cores. Single-precision (SP) floating point performance sits at a staggering 7.0 TFlops, and double-precision (DP) floating point performance sits at 0.2 TFlops. Clearly this card is aimed at folks looking for SP performance, as Nvidia also told us that those looking for DP performance should look at the Titan Z. (Our full review of the Titan X is here.)

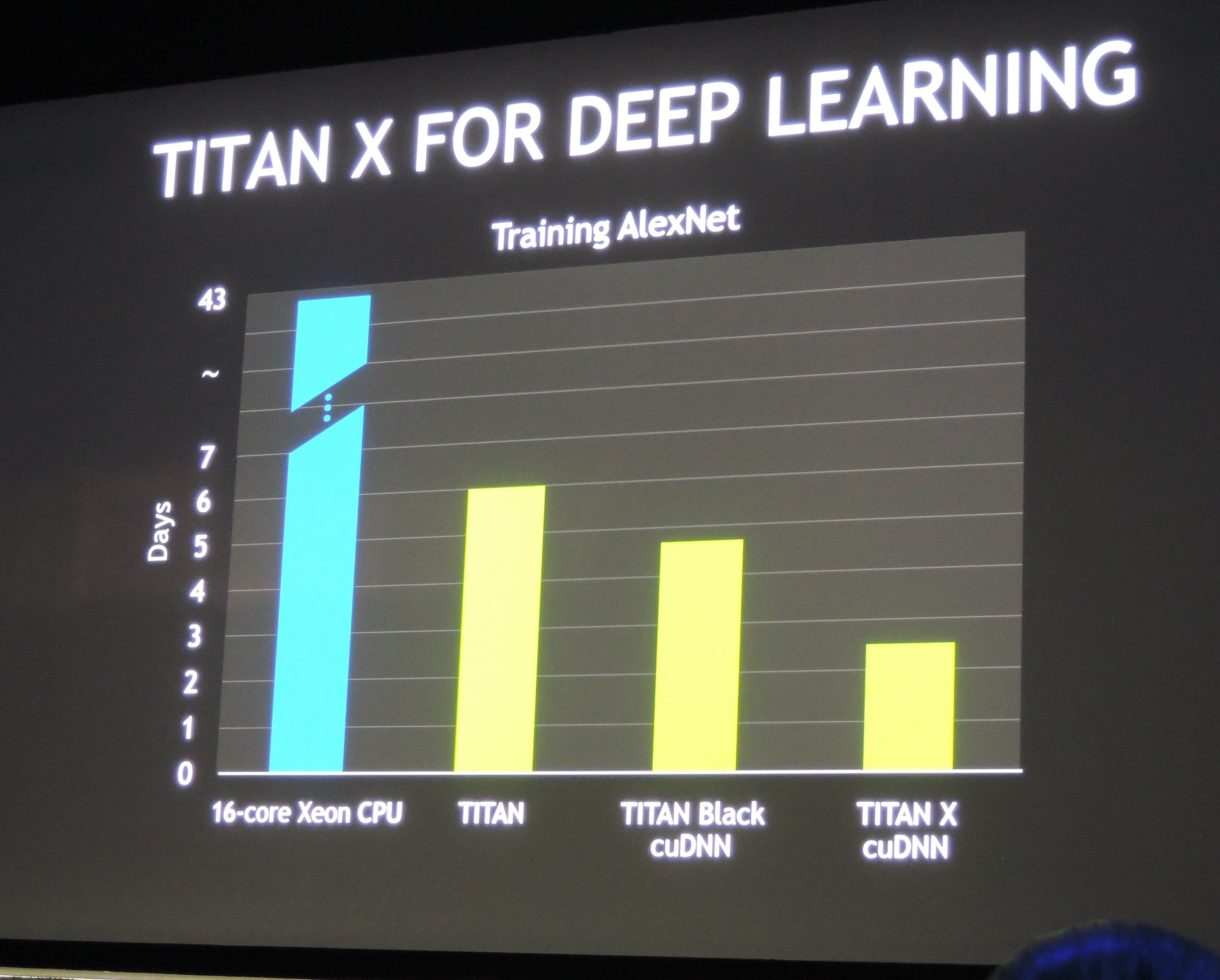

What followed was a demonstration of how much faster the Titan X really is. Forget about the Unreal Engine 4 Kite demo; what's really interesting is the advancement in its ability to train a neural net using deep learning. According to Jen-Hsun, on a modern-day 16-core Xeon CPU, you'd need 43 days in order to train AlexNet, and on the original Titan it would take about a week. On the GTX Titan X, it took a mere three and a half days.

Jen-Hsun also announced that the GTX Titan X will carry a price tag of $999.

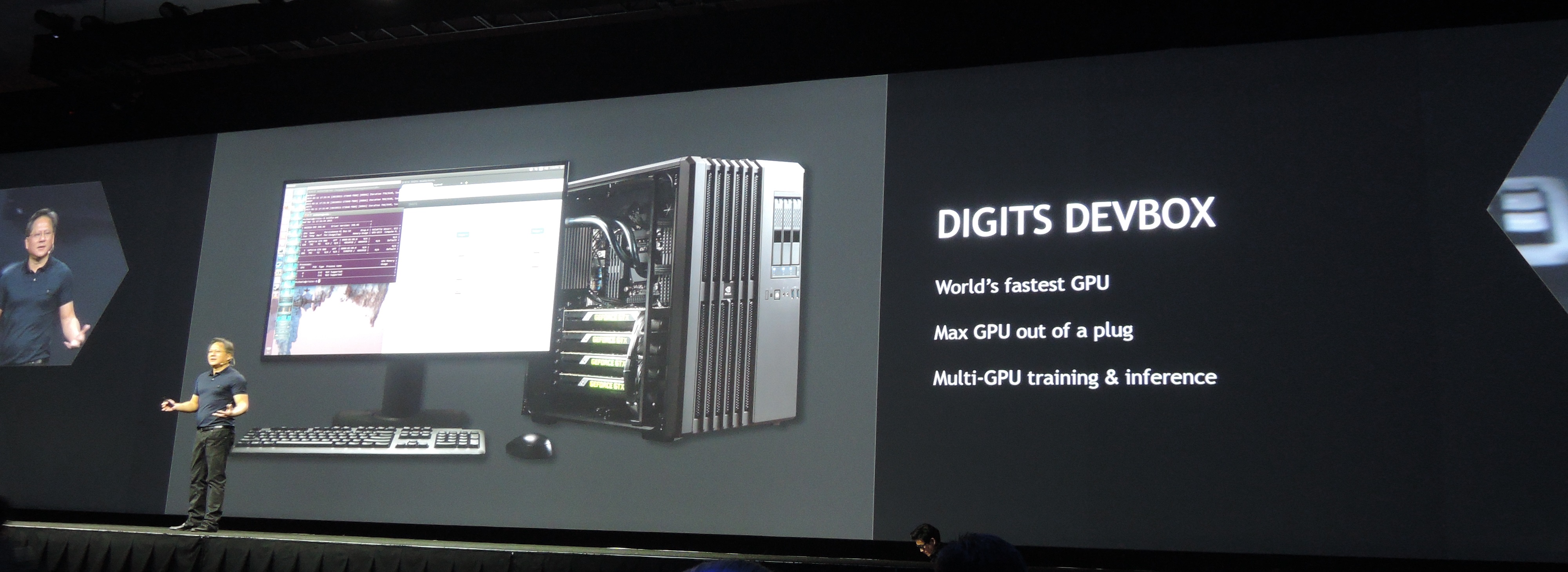

A Very Fast Box

He's a funny guy, Jen-Hsun, because throughout this entire part of the keynote, at no single point did he call this very fast box a PC, or a system, or anything of the like. It was simply a box.

The Very Fast Box is the DIGITS DevBox, which is basically a pre-built system that comes with Linux pre-installed along with the DIGITS software. The DIGITS software is a suite that you can use to set up neural networks and train them using (you guessed it) deep learning.

Inside the DIGITS DevBox, buyers will find four GTX Titan X graphics cards along with the remainder of the system. The exact specifications were not revealed. What we do know is that it uses a 1300 W PSU, and Jen-Hsun boasted that this is the most computational power you can draw out of a wall socket. He also emphasized that in the year 2000, reaching 1 TFlop of SP performance would have required a million watts of power, whereas the DIGITS DevBox manages 28 TFlops of SP compute on its GPUs off of just 1300 W.
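The 28 TFlops figure and the efficiency comparison can be checked with some quick arithmetic. The sketch below uses only the numbers quoted above; treating the 1300 W PSU rating as the box's power draw is an assumption made for illustration (the real draw was not given), and the function names are hypothetical.

```python
# Figures quoted in the keynote, as reported above.
TITAN_X_SP_TFLOPS = 7.0                  # single-precision throughput per Titan X
GPUS_IN_DEVBOX = 4
DEVBOX_WATTS = 1300.0                    # PSU rating, used as a stand-in for power draw (assumption)
YEAR_2000_WATTS_PER_TFLOP = 1_000_000.0  # Jen-Hsun's year-2000 comparison


def devbox_sp_tflops() -> float:
    """Combined single-precision throughput of the four GPUs."""
    return TITAN_X_SP_TFLOPS * GPUS_IN_DEVBOX


def gflops_per_watt(tflops: float, watts: float) -> float:
    """Convert TFlops to GFlops and divide by power in watts."""
    return tflops * 1000.0 / watts


devbox_efficiency = gflops_per_watt(devbox_sp_tflops(), DEVBOX_WATTS)
year_2000_efficiency = gflops_per_watt(1.0, YEAR_2000_WATTS_PER_TFLOP)

print(f"DevBox GPUs: {devbox_sp_tflops():.0f} TFlops SP")
print(f"DevBox:      {devbox_efficiency:.1f} GFlops per watt")
print(f"Year 2000:   {year_2000_efficiency:.3f} GFlops per watt")
print(f"Improvement: roughly {devbox_efficiency / year_2000_efficiency:,.0f}x")
```

Note that this counts only GPU throughput against the whole system's power budget, so it is a rough comparison at best.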


The DIGITS DevBox will be available in May and will sell for $15,000. Obviously, you're paying for far more than just the hardware.

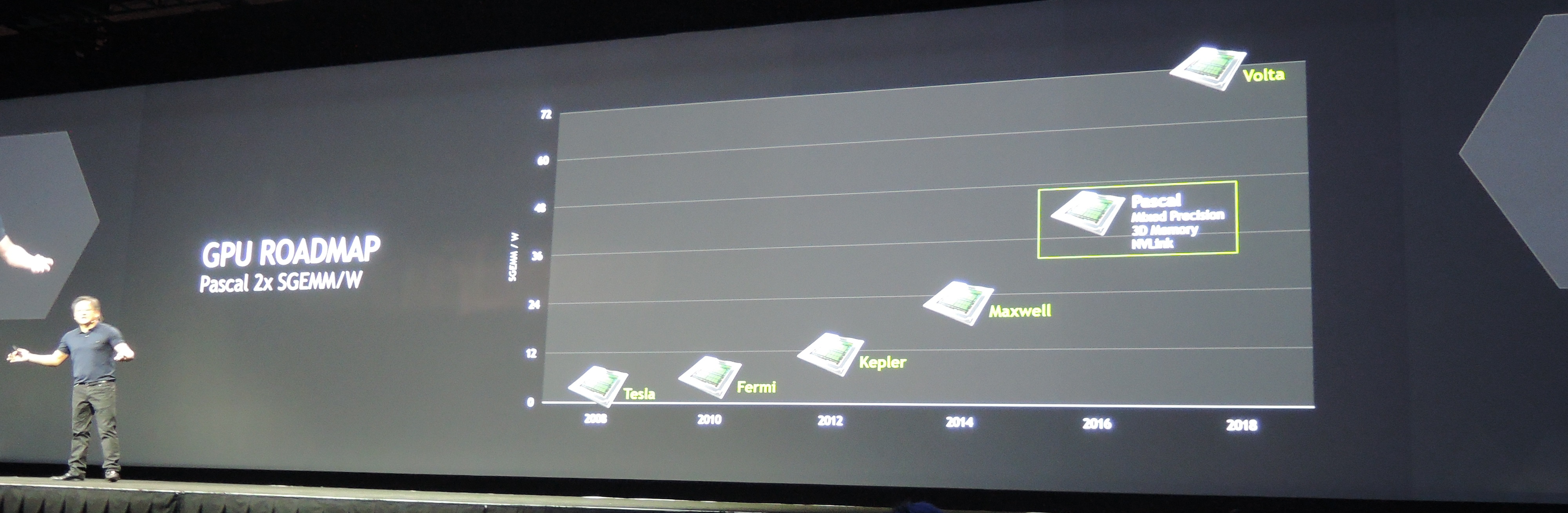

Nvidia GPUs Down The Road

The third announcement was Nvidia's roadmap. We've just moved from the Kepler architecture to Maxwell, and the biggest difference we're seeing is that Maxwell is simply a more efficient GPU architecture that's capable of doing more with less. Regarding new features, however, it doesn't actually bring that much to the table. Not compared to what Pascal will, at least.

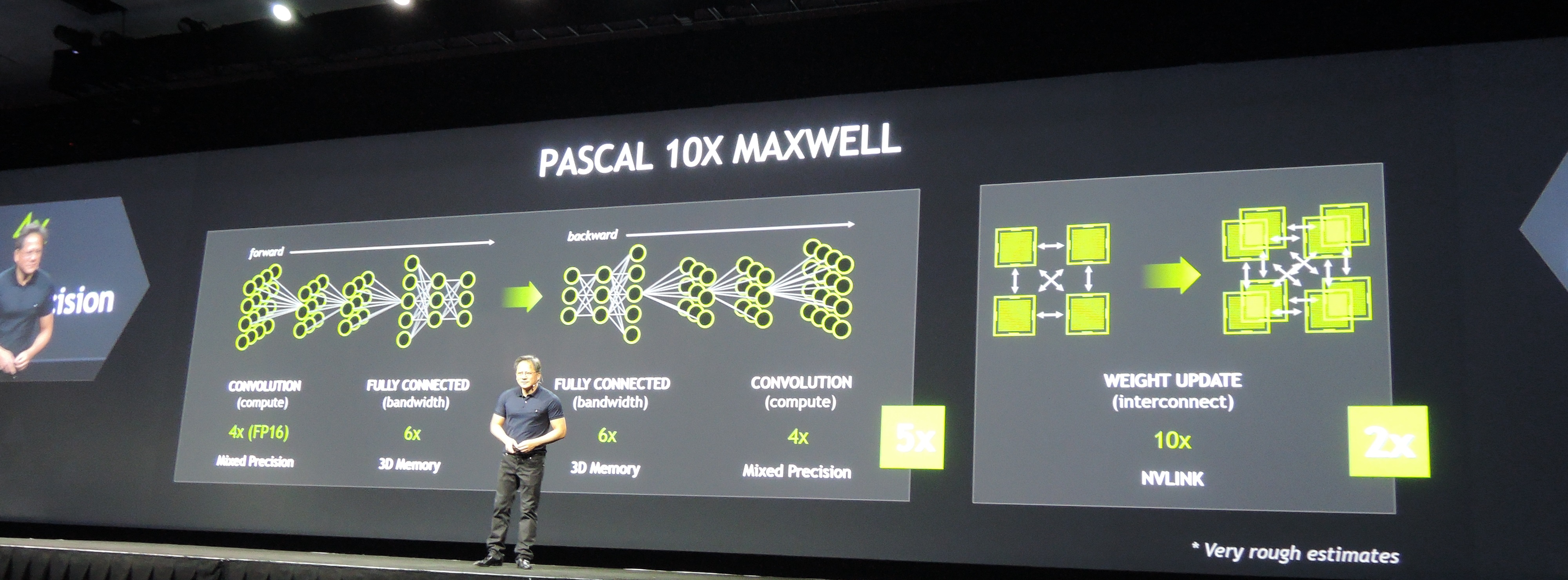

Nvidia's Pascal architecture will bring three new key features: Mixed Precision performance, 3D Memory, and NVLink.

Mixed Precision computation basically allows you to mix single- and double-precision values in the same computation, retaining accuracy while improving performance. The double-precision values are there to retain precision, while the single-precision values mixed in with them improve performance.
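To get a feel for the general idea, here is a minimal sketch in plain Python that sums single-precision inputs two ways: once with a single-precision accumulator, and once with a double-precision accumulator. This is not Nvidia's implementation and says nothing about how Pascal hardware works; the helper names are hypothetical, and single precision is emulated by rounding through the standard `struct` module.

```python
import struct


def to_single(x: float) -> float:
    """Round a Python float (double precision) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def sum_single(values):
    """Accumulate entirely in single precision: round after every addition."""
    total = 0.0
    for v in values:
        total = to_single(total + v)
    return total


def sum_mixed(values):
    """Single-precision inputs, but a double-precision accumulator."""
    total = 0.0
    for v in values:
        total += v
    return total


# One million copies of 0.1, each stored as a single-precision value.
values = [to_single(0.1)] * 1_000_000
reference = to_single(0.1) * len(values)

print(f"reference:          {reference:.4f}")
print(f"single accumulator: {sum_single(values):.4f}")
print(f"mixed accumulator:  {sum_mixed(values):.4f}")
```

The inputs take half the storage of doubles in both cases, but only the version with the double-precision accumulator stays close to the reference; the all-single version drifts noticeably because rounding error builds up with every addition.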

Perhaps more interesting to consumers, however, is the 3D memory. It sounds a bit like what Samsung is doing with its 3D V-NAND, where it stacks the memory in a third dimension in order to increase data densities. The purpose of 3D memory in GPUs isn't the same, however. On today's GPUs, adding more memory doesn't increase bandwidth, but stacking the memory makes that possible. Today's GPU memory caps out at a bandwidth of about 350 GB/s, and Jen-Hsun indicated that Pascal's GPUs would have memory operating at around 750 GB/s.

To top it off, Jen-Hsun briefly explained NVLink. NVLink will allow you to link many more GPUs together than we can today, and all these GPUs will be able to communicate with each other much faster than with SLI. Instead of being limited to pairing up to four GPUs together with SLI, NVLink should allow you to run up to 64 GPUs together.

Jen-Hsun indicated that when you combine the mixed precision, 3D memory, and NVLink, systems with Pascal GPUs can perform ten times faster than Maxwell. Of course, he also humorously pointed to the small text in the corner of the slide that read "*Very rough estimates." For single-GPU performance, it's still unclear how much faster they will be. What we do know is that Nvidia is targeting twice the performance per watt offered by Maxwell.

Self-Driving Cars and Elon Musk

The last announcement is about the Nvidia Drive PX system. This system was revealed earlier this year, at CES, which we covered in detail here. What we didn't know, however, is when it would be available or what it would cost.

The Drive PX system is what Nvidia describes as a miniature supercomputer that can drive your car. It packs 2.1 TFlops of computational performance, which it gets from its two Tegra X1 SoCs. It can feed off information from 12 individual cameras at up to 184 frames per second.

To process the data, it uses, as you have probably already figured, a neural network. Inside its AlexNet network are 630 million individual connections, and the Drive PX can process up to 116 billion of these per second.
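These two figures line up with the 184 frames per second quoted earlier. The short calculation below assumes that processing one frame means evaluating every connection in the network exactly once; that is our reading of the numbers, not something Nvidia spelled out, and the names are hypothetical.

```python
# Figures quoted in the keynote, as reported above.
CONNECTIONS_PER_FRAME = 630e6    # connections in the AlexNet network
CONNECTIONS_PER_SECOND = 116e9   # connections the Drive PX can process each second


def frames_per_second(connections_per_second: float, connections_per_frame: float) -> float:
    """Frames per second if each frame requires one full pass over every connection (assumption)."""
    return connections_per_second / connections_per_frame


fps = frames_per_second(CONNECTIONS_PER_SECOND, CONNECTIONS_PER_FRAME)
print(f"Roughly {fps:.0f} frames per second")
```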

The benefit of this kind of system may seem questionable at first, but it is really quite easy to explain. Today's ADAS features, like adaptive cruise control and lane assist, won't actually make any behavioral decisions for you. The adaptive cruise control will slow you down when the car in front of you does, and the lane assist will ensure you don't swerve out of your lane.

When such a system expects an accident to happen, the best it will do is brake for you. A self-driving car using a properly trained neural network should react the way a human would under the right circumstances, but much faster. Instead of braking to prevent an accident, for example, it may just decide to change lanes and drive on.

The Nvidia Drive PX system will be available in May 2015 as a developer's kit and will set you back $10,000. That's a lot of money for the hardware, but you mustn't forget who the target audience is. As a devkit, this isn't a device that will sell in massive quantities, and Nvidia has a lot of research costs to make up.

At the end of the keynote, Tesla's Elon Musk also showed up for a brief chat with Jen-Hsun. They exchanged a number of thoughts regarding the future of self-driving cars, with topics such as the legislation behind it, how long it will take to replace the entire planet's fleet of cars with either self-driving or electric cars (the answer is 20 years), and the security preventing self-driving cars from being hacked.

One interesting thought that Musk mentioned is that artificial intelligence could possibly be more dangerous than nuclear weapons. He wasn't worried about self-driving cars, however, as they are a very narrow form of artificial intelligence. He believes that one day we'll look at self-driving cars like we look at elevators today. You'll call it, it will pick you up and take you where you want to go, and nobody needs to operate it. He even envisions a day when driving a car may be outlawed, because no human should have control over a two-ton death machine.

And lastly, Elon Musk drives himself half the time, and he keeps his Model S in Insane mode.

What to Expect From GTC 2015

From the keynote one thing is clear: GTC 2015 is going to be all about deep learning. Over the course of the next few days, we'll be attending various sessions at the event to really learn what deep learning is, how it works, and what it can be used for, so that hopefully we can bring this knowledge to you.

Beyond deep learning, there will of course be many other applications for GPUs. There are numerous consumer-geared applications present at GTC, but one thing to keep in mind about the show is that it is very developer-oriented. Lots of new ideas and concepts are presented at the conference, some of which have the potential to become something, and some of which don't.

Stay tuned for more GTC coverage over the coming days.


Niels Broekhuijsen is a Contributing Writer for Tom's Hardware US. He reviews cases, water cooling and PC builds.