PCIe 4.0 Card Hosts 21 M.2 SSDs: Up To 168TB, 31 GB/s

Apex Storage, a newcomer to the storage game, has launched the X21, an add-in card (AIC) that accommodates up to 21 PCIe 4.0 M.2 SSDs. With the X21, consumers can use the best SSDs to build configurations with capacities of up to 168TB and speeds of up to 31 GBps.

Apex Storage is headquartered in Utah and focuses on AIC products. Mike Spicer and Henry Hill appear to be the team behind the company. You may have heard of Spicer: he kickstarted the Storage Scaler expansion card in 2021, which houses up to 16 M.2 SATA drives. The X21, the only product listed on Apex Storage's website, appears to be a beefed-up version of the original Storage Scaler. Strangely, though, Apex Storage opted for PCIe 4.0 on the X21 even though PCIe 5.0 SSDs are already available on the retail market.

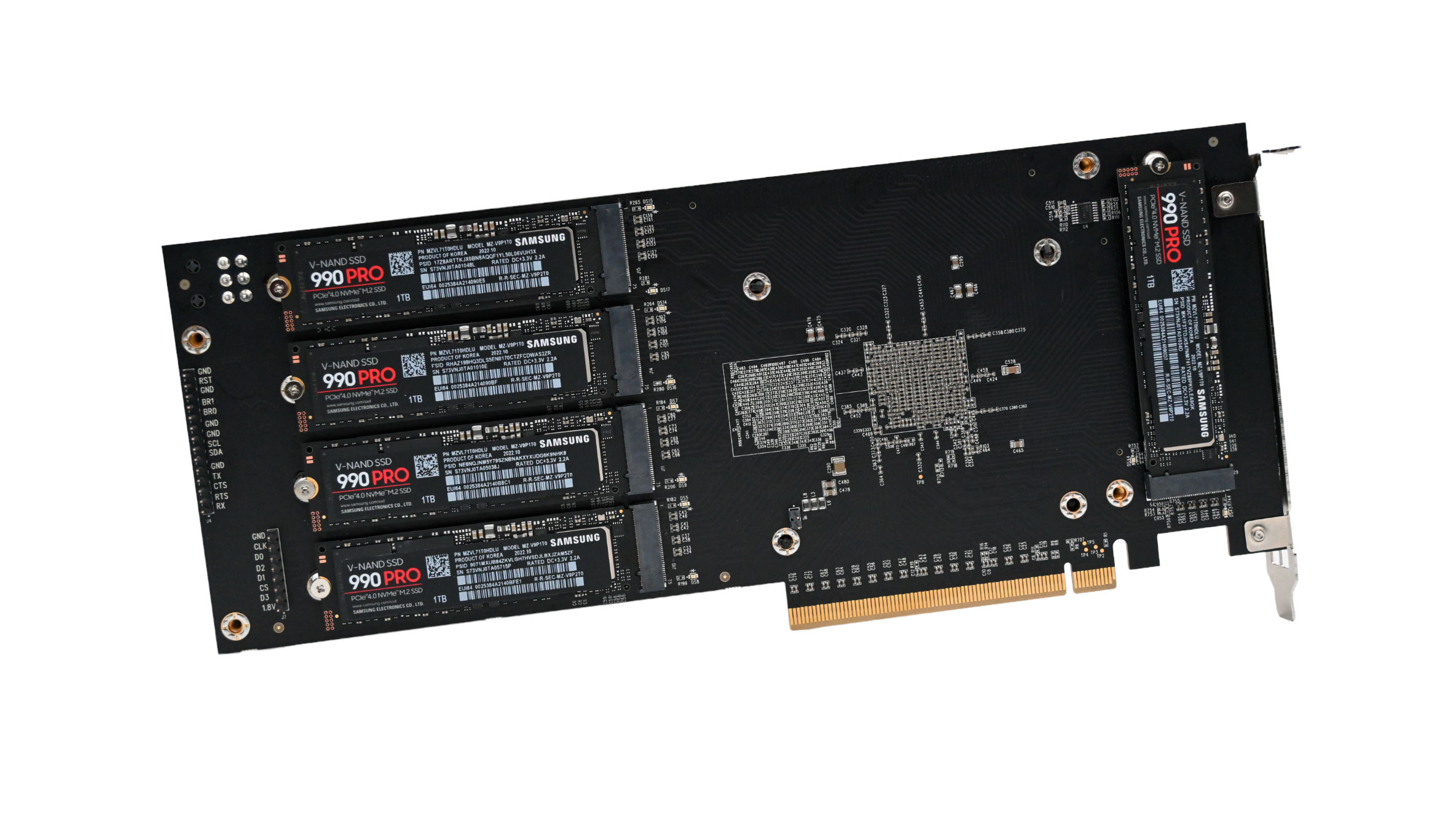

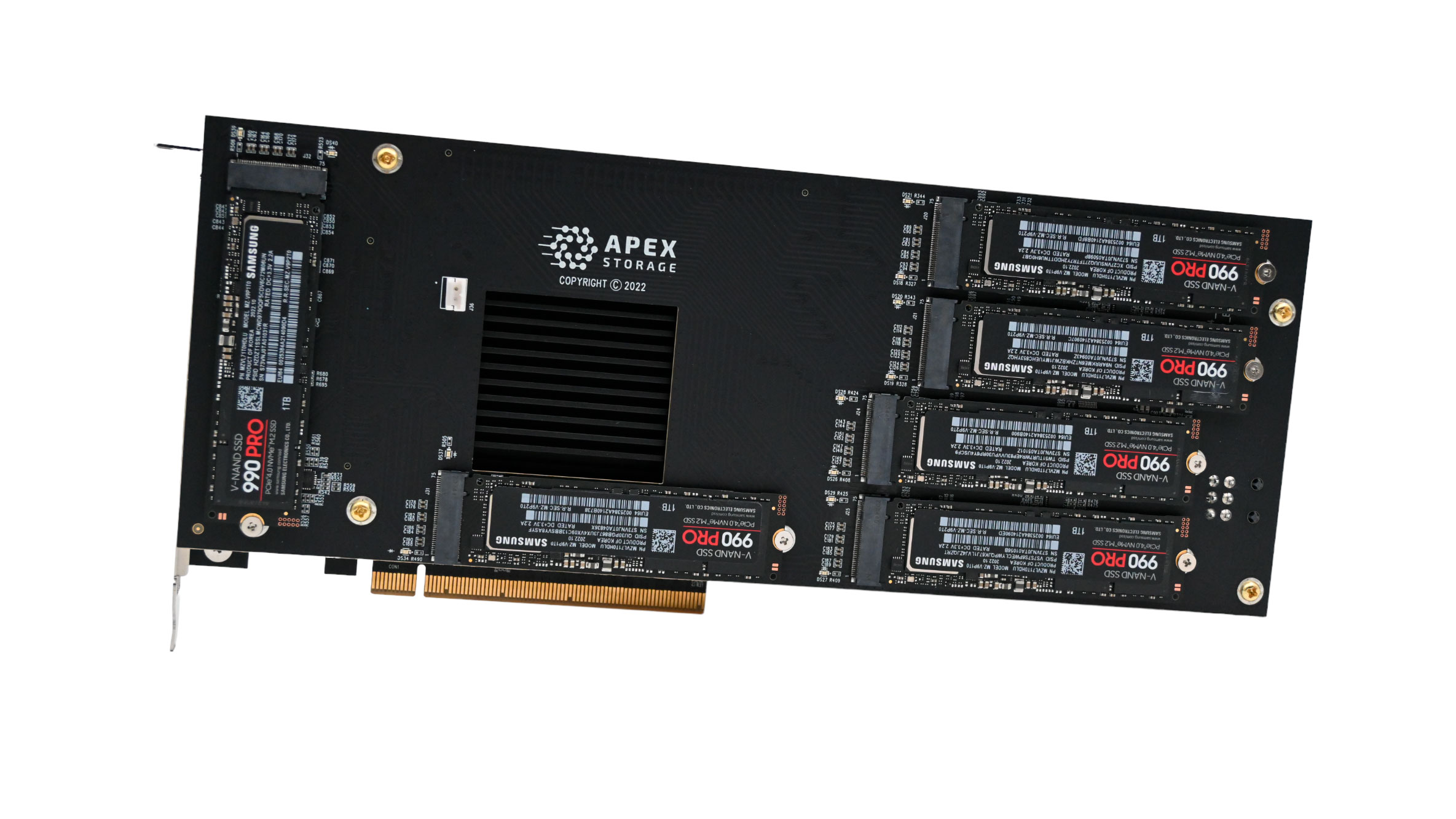

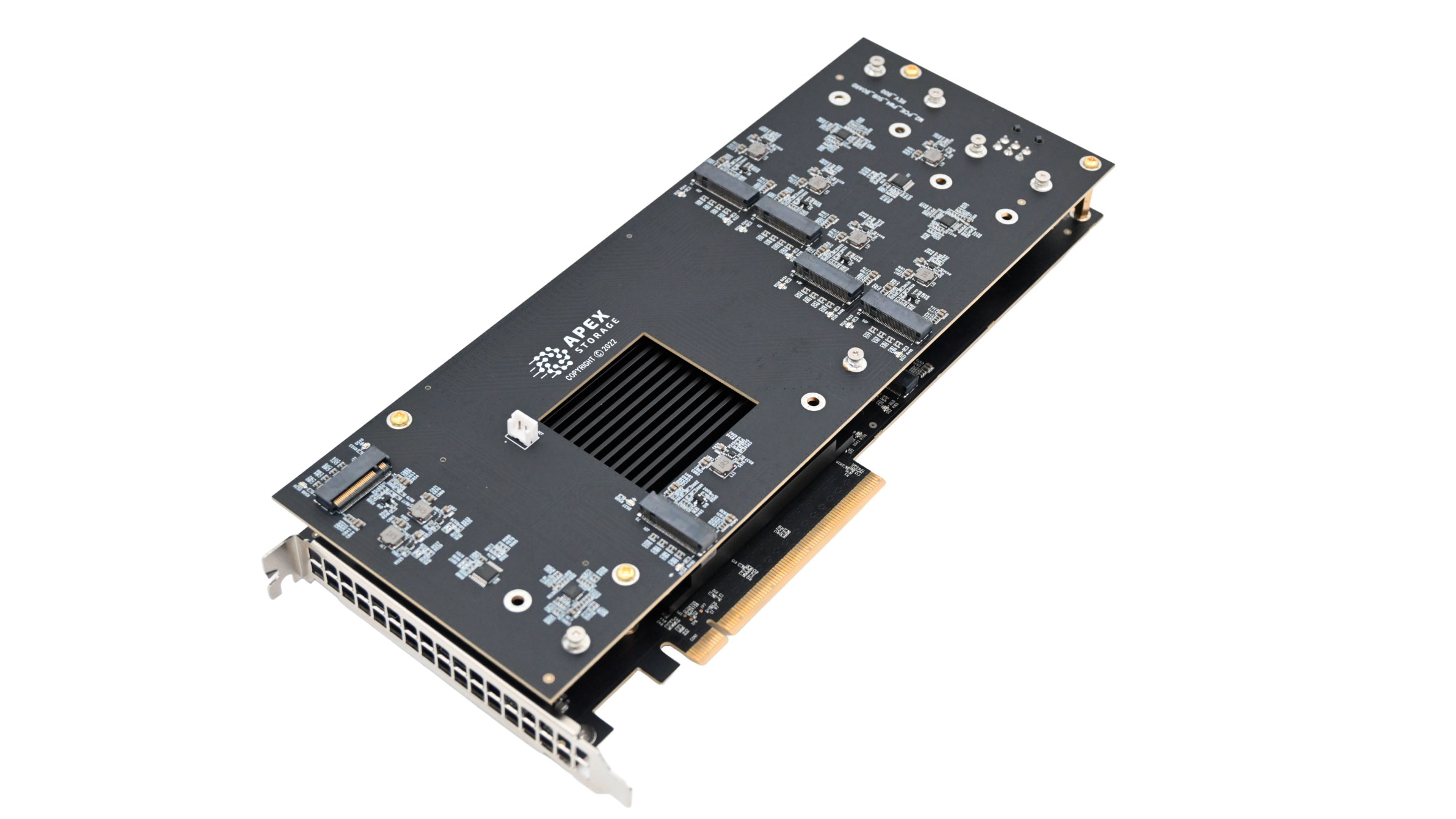

The X21 sticks to a double-width, full-height, full-length (FHFL) form factor with a single-slot PCI design. The AIC measures 274.2mm long and communicates through a standard PCIe 4.0 x16 expansion slot. It's backward compatible with PCIe 3.0, but performance takes a significant hit, since a PCIe 3.0 x16 link offers half the bandwidth. Apex Storage essentially bonded two PCBs in a sandwich, which is why the X21 can handle up to 21 M.2 SSDs, putting rival options like the Sabrent Rocket 4 Plus Destroyer 2 to shame. The concept certainly takes us on a trip down memory lane: if you're old enough, you'll recall that Nvidia used the same approach for the prehistoric GeForce 7900 GX2.
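The headline 31 GBps figure lines up with the theoretical ceiling of a PCIe 4.0 x16 link, and the PCIe 3.0 penalty follows from the same arithmetic. Below is a minimal sketch, assuming 128b/130b line encoding (used by both PCIe 3.0 and 4.0) and ignoring packet and flow-control overhead, so real-world throughput will be somewhat lower. The function name `link_gb_per_s` is illustrative, not anything Apex Storage publishes.

```python
# Approximate one-direction PCIe link bandwidth from transfer rate and encoding.
# Protocol overhead above the physical layer (TLP headers, flow control) is
# ignored, so these are upper bounds.

GT_PER_S = {"3.0": 8.0, "4.0": 16.0}  # giga-transfers per second, per lane
ENCODING = 128 / 130                  # payload bits per transmitted bit (128b/130b)

def link_gb_per_s(gen: str, lanes: int) -> float:
    """Return the approximate bandwidth of a PCIe link in GB/s (one direction)."""
    bits_per_s = GT_PER_S[gen] * 1e9 * ENCODING * lanes
    return bits_per_s / 8 / 1e9  # bits -> bytes -> gigabytes

for gen in ("3.0", "4.0"):
    print(f"PCIe {gen} x16: {link_gb_per_s(gen, 16):.2f} GB/s")
```

This yields roughly 15.75 GB/s for PCIe 3.0 x16 and 31.51 GB/s for PCIe 4.0 x16, which is why a PCIe 3.0 slot would roughly halve the X21's peak sequential throughput.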

The X21's interior houses 10 PCIe 4.0 M.2 slots and a massive heatsink covering an unspecified controller. We suspect a PCIe switch sits under the heatsink and is likely what makes the X21 tick. The remaining 11 PCIe 4.0 M.2 slots are located on the outer sides of the PCBs.

Given the vast number of SSDs the X21 can support, the AIC can't draw all of its power from the expansion slot alone. Apex Storage therefore implemented two regular 6-pin PCIe power connectors for auxiliary power, a configuration that allows for up to 225W. Although the X21 uses a passive cooling design, the manufacturer recommends a minimum airflow of 400 LFM (linear feet per minute) for optimal operation.
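The 225W figure is the sum of the standard limits: 75W from an x16 slot plus 75W from each 6-pin connector. A rough sketch of the budget, assuming those standard limits and an even split across a fully populated card; it is an upper bound per drive, because the switch and other on-board components also draw from the same budget.

```python
# Rough power budget for a fully populated X21.
# Assumes the standard 75 W limit for an x16 slot and 75 W per 6-pin connector.
SLOT_W = 75
SIX_PIN_W = 75
SIX_PIN_CONNECTORS = 2
DRIVES = 21

total_w = SLOT_W + SIX_PIN_CONNECTORS * SIX_PIN_W
# Even split across drives; ignores the switch chip and other components,
# so the real per-drive headroom is lower than this.
per_drive_w = total_w / DRIVES

print(f"Total budget: {total_w} W")
print(f"Average per drive (upper bound): {per_drive_w:.1f} W")
```

That works out to about 10.7W per drive on average, which leaves little room for power-hungry drives and helps explain the airflow requirement.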

The X21 photographs show the AIC populated with Samsung 990 Pro SSDs; the card only supports M.2 2280 drives. It also accepts Intel's Optane M.2 SSDs, like the H20, although there aren't many Optane drives floating around now that Intel has axed its Optane business. Apex Storage doesn't reveal the X21's inner details. However, the manufacturer confirmed that the X21 offers 100 PCIe lanes, suggesting the presence of a PCIe switch.
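One plausible reading of the 100-lane figure: a switch with an x16 upstream port to the host and an x4 downstream port for each of the 21 M.2 slots. This topology is an assumption, since Apex Storage hasn't published it; the sketch below only checks that the numbers are consistent and shows how heavily the host link would be oversubscribed.

```python
# Hypothetical lane accounting for a PCIe switch on the X21.
# Assumed topology (not confirmed by Apex Storage): one x16 upstream port
# to the host, plus one x4 downstream port per M.2 slot.
UPSTREAM_LANES = 16
DRIVES = 21
LANES_PER_DRIVE = 4

downstream = DRIVES * LANES_PER_DRIVE
total = UPSTREAM_LANES + downstream
# Ratio of drive-side lanes to host-side lanes.
oversubscription = downstream / UPSTREAM_LANES

print(f"Downstream lanes: {downstream}")
print(f"Total switch lanes: {total}")
print(f"Oversubscription: {oversubscription:.2f}:1")
```

Under this assumption, 84 drive-side lanes plus 16 host-side lanes add up to exactly 100, and the host link is oversubscribed about 5.25:1. That means the x16 slot, not the SSDs, caps sequential throughput when every drive is busy.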

In a single-card configuration, the X21 delivers sequential read and write speeds of up to 30.5 GBps and 28.5 GBps, respectively, along with random performance of 7.5 million IOPS for reads and 6.2 million IOPS for writes. The X21 shines even more in a multi-card setup: according to Apex Storage, consumers can get up to 107 GBps sequential reads and 80 GBps sequential writes. Random performance also gets a substantial boost, to over 20 million IOPS for reads and 10 million IOPS for writes. The AIC has an average read and write access latency of 79µs and 52µs, respectively.
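To put the single-card figures in context, the sketch below spreads Apex Storage's quoted numbers evenly across 21 drives and compares the sequential figures with the roughly 31.5 GB/s theoretical ceiling of a PCIe 4.0 x16 link. The even split is a simplifying assumption for illustration; the vendor hasn't said how load is distributed.

```python
# Spread Apex Storage's single-card figures evenly across 21 drives and
# compare sequential throughput with the PCIe 4.0 x16 ceiling.
DRIVES = 21
SEQ_READ_GBPS = 30.5
SEQ_WRITE_GBPS = 28.5
RAND_READ_IOPS = 7.5e6
RAND_WRITE_IOPS = 6.2e6
# PCIe 4.0: 16 GT/s per lane, 128b/130b encoding, 16 lanes, bits -> bytes.
LINK_GBPS = 16 * 128 / 130 * 16 / 8

for label, value in [("seq read", SEQ_READ_GBPS), ("seq write", SEQ_WRITE_GBPS)]:
    print(f"{label}: {value / DRIVES:.2f} GB/s per drive, "
          f"{value / LINK_GBPS:.0%} of the x16 link")

for label, value in [("rand read", RAND_READ_IOPS), ("rand write", RAND_WRITE_IOPS)]:
    print(f"{label}: {value / DRIVES:,.0f} IOPS per drive")
```

The 30.5 GBps read figure works out to roughly 1.45 GB/s per drive and about 97% of the link's theoretical ceiling. In other words, the single card is link-bound, which is consistent with the much higher multi-card numbers.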


With 8TB SSDs, such as the Corsair MP400 or the Sabrent Rocket Q, the X21 can provide up to 168TB on a single card. It also supports higher-capacity drives: once 16TB M.2 SSDs hit the market, consumers could have up to 336TB of storage on the X21. As for the feature set, the X21 supports RAID configurations in Windows and Linux environments, although Apex Storage didn't specify which RAID levels. The X21 also touts "enterprise-grade reliability," NVMe 2.0 support, advanced ECC, data protection, and error recovery.
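The capacity figures are simply 21 times the drive size. Drives are marketed in decimal terabytes, while many operating systems report binary tebibytes (TiB), so the sketch below shows both. The helper name `raw_capacity` is illustrative.

```python
# Raw capacity of a fully populated X21, in decimal TB (as drives are
# marketed) and binary TiB (as many operating systems report it).
DRIVES = 21

def raw_capacity(drive_tb: float) -> tuple[float, float]:
    """Return (TB, TiB) for DRIVES drives of drive_tb terabytes each."""
    total_tb = DRIVES * drive_tb
    total_tib = total_tb * 1e12 / 2**40  # 1 TiB = 2**40 bytes
    return total_tb, total_tib

for size in (8, 16):
    tb, tib = raw_capacity(size)
    print(f"{DRIVES} x {size} TB = {tb:.0f} TB ({tib:.1f} TiB)")
```

So the 168TB configuration would show up as roughly 152.8 TiB before any RAID or file-system overhead.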

Apex Storage didn't reveal the pricing or availability for the X21.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

-

btmedic04 It makes sense why they chose PCIe 4.0. I couldn't imagine trying to cool 21 PCIe 5.0 NVMe drives.

TechieTwo

> btmedic04 said: It makes sense why they chose PCIe 4.0. I couldn't imagine trying to cool 21 PCIe 5.0 NVMe drives.

PCIe 5.0 SSDs do not need the big bulky heatsinks being offered. That is a marketing gimmick. The flat heatsink used on PCIe 4.0 SSDs and supplied by most mobo makers is all that is typically required on a PCIe 5.0 SSD, unless some company is doing a very poor job of implementing PCIe 5.0 on its SSD.

domih I wish them good luck selling this thing.

8TB NVMe are around $1,000, so 21 of them means $21,000. Plus the unknown price of the card.

Given the large size, you'll probably want to use them in RAID10. Minus the FS overhead, you end up with 75TB usable, which is nice in itself.

IMHO, you're better off going with a data center server where you can hot-swap an NVMe drive, probably with an LED telling you which one is faulty. No disassembly, no shutdown. But probably more $$$ though.

But pretty good for people who want a trophy PC, to go with the trophy car(s) and the trophy girlfriend(s).
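domih's 75TB estimate is close to what a two-copy RAID 10 layout yields once units and file-system overhead are taken into account. A minimal sketch, assuming a Linux md-style RAID 10 with two copies of every block (that layout accepts an odd drive count such as 21) and identical 8TB drives; the helper name `raid10_usable_tb` is hypothetical.

```python
# Usable capacity with two copies of every block (RAID 10 style),
# before file-system overhead. Assumes all drives are the same size.
def raid10_usable_tb(drives: int, drive_tb: float, copies: int = 2) -> float:
    """Return usable decimal TB for a mirrored-stripe array."""
    return drives * drive_tb / copies

usable_tb = raid10_usable_tb(21, 8)
usable_tib = usable_tb * 1e12 / 2**40  # convert decimal TB to binary TiB
print(f"{usable_tb:.0f} TB usable ({usable_tib:.1f} TiB)")
```

Half of 168TB is 84TB, or about 76.4 TiB as an operating system would report it, which lands near 75TB after file-system overhead.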

USAFRet

> domih said: I wish them good luck selling this thing.

In what way do you think this device is useful for a consumer level PC?

twotwotwo Are there limits to PCIe bifurcation? I know there are cards that split an x16 slot into four x4 links; could you do 8 SSDs connected as x2, or 16 as x1?

It throws away a lot of bandwidth (you probably wouldn't fill it with the fastest SSDs), you still need enough board area to hold all the SSDs, and trace-length matching or a maximum trace length, combined with a layout holding lots of M.2 sticks, may complicate things.

But if it is possible, it still gets you the capacity without PLX chips or similar.
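The bandwidth trade-off twotwotwo describes can be quantified. The sketch below splits a PCIe 4.0 x16 slot evenly by bifurcation (no switch) and shows how many drives fit and what each one gets. Whether a given CPU, chipset, and firmware actually support x2 or x1 splits varies by platform and is not assumed here; the numbers are theoretical ceilings that ignore protocol overhead.

```python
# Per-drive bandwidth if a PCIe 4.0 x16 slot is split evenly by bifurcation.
# Platform support for x2/x1 splits varies; these are theoretical ceilings.
LANE_GBPS = 16 * 128 / 130 / 8  # one PCIe 4.0 lane, one direction, in GB/s
SLOT_LANES = 16

for width in (4, 2, 1):
    drives = SLOT_LANES // width
    print(f"{drives:2d} drives at x{width}: {width * LANE_GBPS:.2f} GB/s each")
```

At x1, each of 16 drives would be capped near 1.97 GB/s, a fraction of what a fast PCIe 4.0 SSD can deliver at x4, but total slot bandwidth stays the same and no switch is needed.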

Geef For something this big, I imagine the noise from Back to the Future when Marty flips the switch to turn on the speaker at the beginning of the movie.

Turn on your computer and the lights dim for a second.

:p:p

domih

> USAFRet said: In what way do you think this device is useful for a consumer level PC?

As sub-text of my OP, not in many ways.

For a server, having to disassemble the card when you need to replace an NVMe drive is a no-no.

Amdlova Whoever spent US$60,000 on a RED camera needs that.

The last time I saw a RED working, we got 1.5TB of data from small takes. :) 336TB of data might hold a year of work...

TheOtherOne

> With the X21, consumers can use the best SSDs to build configurations with capacities of up to "168GB" and experience speeds up to 31 GBps.

I think there's a minor typo in the last line of first paragraph! Shouldn't it be 168 "TB"? 🤔