Tested: Default Windows VBS Setting Slows Games Up to 10%, Even on RTX 4090

Virtualization Based Security (VBS) has a big impact on frame rates.

Remember back when Windows 11 launched and there was concern about how enabling Virtualization Based Security (VBS) and Hypervisor-Enforced Code Integrity (HVCI) by default might impact performance? There was a lot of noise made, benchmarks were run... and then we all moved on. Flash forward to 2023, and I recently discovered that sometime in the past few months, VBS had been turned on for the PC I use for the GPU benchmarks hierarchy. That could have been the result of mucking about with Windows Subsystem for Linux while trying to get Stable Diffusion benchmarks on various GPUs. (We have an article on how to disable VBS, should you want to.)

Correction (3/22/23): An earlier version of this article incorrectly stated that a Windows update had enabled VBS on my PC. However, we have since learned that Windows updates do not enable VBS, so it must have been something else I installed or enabled. Whatever the case, most new PCs ship with VBS on and clean Windows 11 installs have it enabled also.

This defaulting to VBS on, everywhere, worried me, because I'm already in the middle of retesting all the pertinent graphics cards for the 2023 version of the GPU hierarchy, on a new testbed that includes a Core i9-13900K CPU, 32GB of DDR5-6600 G.Skill memory, and a Sabrent Rocket 4 Plus-G 4TB M.2 SSD. Needless to say, you don't put together best-in-class parts only to run extra features that can hurt performance.

Except... I did. When I put together the new testbed for the coming year back in November, just before the RTX 4080 and RX 7900 XTX/XT launches, I was under a time crunch. I got Windows 11 installed and updated, downloaded the rather massive 1.5TB of games that I use for testing onto the SSD, and got to work — all with VBS enabled. Having now caught my breath and with a bit of extra time, I belatedly realized my error, if you can call it that.

So I set about testing, and retesting, performance of the fastest graphics card, the GeForce RTX 4090, with and without VBS enabled. After all, we're now two new CPU generations beyond what we had at the Windows 11 launch, and with faster CPUs and new architectures, perhaps VBS has even less of an impact than before. At the same time, we're also using new GPUs that deliver substantially more performance than the RTX 3090, the fastest GPU back in 2021, and that could make CPU bottlenecks and extras like VBS more of a hindrance than before.

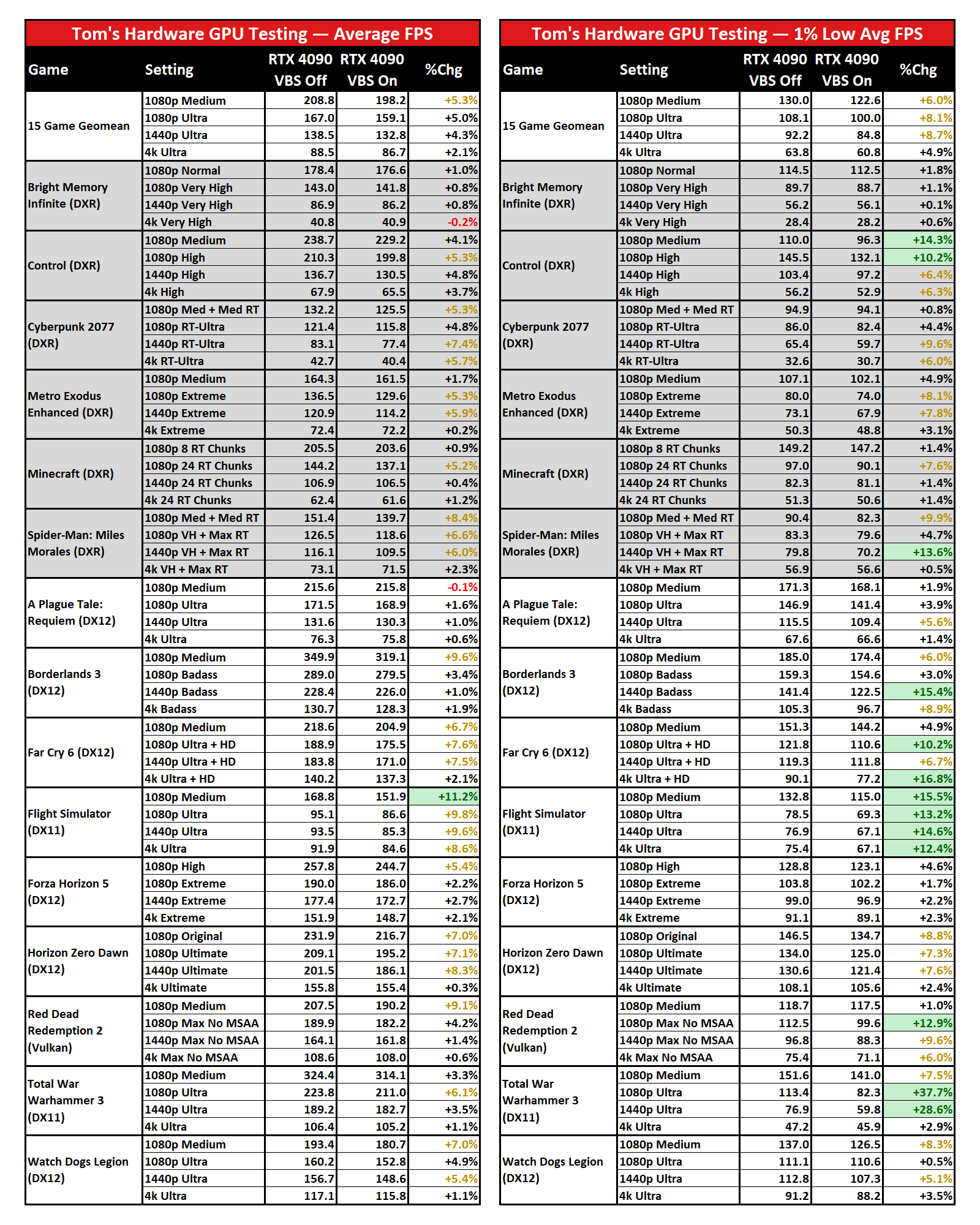

You can see our test PC hardware, using Nvidia's 528.49 drivers (which have now been superseded, thrice). Let's get straight to the results, with our updated test suite and settings that consist of a battery of 15 games, at four different settings/resolution combinations. We're going to summarize things in a table, split into average FPS on the left and 1% low FPS (the average FPS of the bottom 1% of frametimes) on the right.
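For readers who want to reproduce the two metrics from their own frametime captures, here is a minimal Python sketch. It assumes a list of per-frame times in milliseconds and treats the "bottom 1%" as the slowest 1% of frames, with the 1% low computed as frames divided by time over that slice. The function name `fps_summary` and the sample data are made up for illustration, and other tools may define 1% lows slightly differently.

```python
def fps_summary(frametimes_ms):
    """Return (average FPS, 1% low FPS) for a list of per-frame times in ms."""
    if not frametimes_ms:
        raise ValueError("need at least one frametime")
    # Average FPS: total frames divided by total elapsed seconds.
    avg_fps = 1000.0 * len(frametimes_ms) / sum(frametimes_ms)
    # The "bottom 1%" are the slowest frames, i.e. the longest frametimes.
    slowest_first = sorted(frametimes_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    # Assumption: 1% low = FPS over just those slow frames (frames / time).
    low_fps = 1000.0 * len(worst) / sum(worst)
    return avg_fps, low_fps


# Made-up capture: 990 frames at 10 ms plus 10 stutter frames at 25 ms.
sample = [10.0] * 990 + [25.0] * 10
avg, low = fps_summary(sample)
print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps")
# prints "average: 98.5 fps, 1% low: 40.0 fps"
```

The made-up capture shows why the two numbers are reported separately: a handful of stutter frames barely moves the average but drags the 1% low far below it.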

To be clear, all of the testing was done on the same PC, over a period of a few days. No game updates were applied, no new drivers were installed, etc. to keep things as apples-to-apples as possible. The one change was to disable VBS (because it was on initially, the Windows 11 default).

Each test was run multiple times to ensure consistency of results, which does bring up the one discrepancy: Total War: Warhammer 3 performance is all over the place right now. I don't recall that being the case in the past, but sometime in February or perhaps early March, things seem to have changed for the worse. I'm still investigating the cause, and I'm not sure if it's the game, my system, or something else.

Taking the high-level view of things, perhaps it doesn't look too bad. Disabling VBS improved performance by up to 5% overall, and that dropped to just 2% at 4K ultra. And if you're running this level of gaming hardware, we think you're probably also hoping to run 4K ultra. But even at our highest possible settings, there are still some noteworthy exceptions.

The biggest improvement overall comes in Microsoft Flight Simulator, which makes sense as that game tends to be very CPU limited even with the fastest possible processors. Turning off VBS consistently improved performance in our RTX 4090 testing by around 10%, and the 1% lows increased by as much as 15%.
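One thing worth spelling out is that "gain from turning VBS off" and "loss from leaving VBS on" are not the same percentage, because they use different baselines. The Python sketch below works through that arithmetic using the Flight Simulator figures a reader quotes in the comments (151.9 fps and 168.8 fps). The helper names are hypothetical, and this is just an illustration of the math, not a record of how the results were tabulated.

```python
def percent_gain(slower_fps, faster_fps):
    """Improvement relative to the slower (VBS on) result."""
    return (faster_fps / slower_fps - 1.0) * 100.0


def percent_loss(slower_fps, faster_fps):
    """Slowdown relative to the faster (VBS off) result."""
    return (1.0 - slower_fps / faster_fps) * 100.0


# Flight Simulator figures as quoted by a reader in the comments.
vbs_on, vbs_off = 151.9, 168.8
print(f"gain from disabling VBS: {percent_gain(vbs_on, vbs_off):.1f}%")
print(f"loss from enabling VBS:  {percent_loss(vbs_on, vbs_off):.1f}%")
# prints 11.1% for the gain and 10.0% for the loss
```

The same pair of numbers can therefore be described as an 11% gain or a 10% loss, depending on which result you treat as the baseline.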

Not coincidentally, Flight Simulator is also one of the games that absolutely loves AMD's large 3D V-Cache on the Ryzen 9 7950X3D. Our CPU tests use a different, less demanding test sequence, but even there the AMD chips with large caches are anywhere from about 20% (Ryzen 7 5800X3D) to 40% (7900X3D) faster than the Core i9-13900K. Perhaps VBS would have less of an impact on AMD's X3D CPUs, but I didn't have access to one of those for testing.

Another game that tends to bump into CPU bottlenecks at lower settings is Far Cry 6, and it also saw pretty consistent 5% or higher increases in performance — noticeable in benchmarks, but less so in actual gaming. Interestingly, Cyberpunk 2077 with ray tracing enabled also still saw about 5% higher performance. That's perhaps because the work of building the BVH structures for ray tracing calculations happens on the CPU; many of the other ray tracing games also showed 5% or higher increases.

What about games where VBS didn't matter much if at all? Bright Memory Infinite (the standalone benchmark, not the full game) showed almost no change, and Minecraft only showed a modest improvement at 1080p with our more taxing settings (24 RT render chunk distance). A Plague Tale: Requiem, Borderlands 3, Forza Horizon 5, and Red Dead Redemption 2 also showed less impact, though in some cases the minimum FPS may have changed more.

(And again, I'm not really saying anything about Total War: Warhammer 3 as performance fluctuated far too much. Even after more than 20 runs each, with and without VBS, there was no clear typical result. Instead of a bell curve, the results fell into three clumps at the low, mid, and high range, with the 1% lows showing even less consistency. Removing TWW3 from our geometric mean only changes the 1% low delta by less than two percent, though, so I left it in.)
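To illustrate how a single noisy title affects a geometric mean, here is a small Python sketch. The per-game ratios are hypothetical placeholders (VBS off divided by VBS on), not our measured data, and `geomean` is a helper written for this example. Note that the ratio of two geometric means equals the geometric mean of the per-game ratios, so working with ratios directly gives the same overall delta.

```python
import math


def geomean(values):
    """Geometric mean of positive numbers, computed via logarithms."""
    values = list(values)
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical VBS-off / VBS-on ratios of 1% low FPS; NOT the article's data.
ratios = {"Game A": 1.10, "Game B": 1.05, "Game C": 1.02, "Noisy game": 1.20}

with_all = geomean(ratios.values())
without_noisy = geomean(v for name, v in ratios.items() if name != "Noisy game")

print(f"overall delta with every game:  {(with_all - 1) * 100:.1f}%")
print(f"overall delta without outlier:  {(without_noisy - 1) * 100:.1f}%")
```

With only four placeholder games the outlier has an outsized effect; in a 15-game suite, each title contributes just one fifteenth of the log average, which is why dropping one erratic game shifts the overall figure only slightly.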

The biggest deltas are generally at 1080p, and it didn't seem to matter much whether we were running "medium" or "ultra" settings. That's probably because ultra settings often hit the CPU harder for other calculations, so it's not just a case of higher resolution textures or shadows.

But the question remains: to VBS or not to VBS? Especially for my GPU testing. The good news is that it's pretty much a never-ending process, since new drivers and game patches seem to routinely invalidate older results. I could switch at some point to having VBS off, and maybe I will. But that retesting is also the bane of GPU benchmarks.

Windows VBS: The Bottom Line

So, should you leave VBS on or turn it off? It's not quite that clear cut of a question and answer. The actual security benefits, particularly for a home desktop that doesn't go anywhere, are probably minimal. And if you're serious about squeezing every last bit of performance out of your hardware — via improved cooling, overclocking, and buying more expensive hardware — losing 5% just to some obscure "security benefits" probably isn't worth doing, so you could disable VBS.

Still, having VBS turned on is now the default for new Windows installations. So you can argue that Microsoft at least thinks it's important and it should be left on. However, the fact that Microsoft also has instructions on how to go about disabling it indicates the performance impact can be very real.

It's also worth noting that the 5 to 10 percent drop in performance remains consistent with what we measured way back in 2021 when Windows 11 first launched. Nearly two years of upgraded hardware later, sporting some of the most potent components money can buy, and we're still looking at a 5% loss on average in gaming performance. For a top-tier gaming setup, that's almost as much as the performance gain from a traditional CPU architecture update, though Raptor Lake and Zen 4 provided much bigger increases than in the past.

For a lot of people, particularly those with less extreme hardware, the performance penalty while gaming will more likely fall into the low single digit percentage points. But if you're trying to set a performance record, it could certainly hold you back. And now we're left wondering what new vulnerabilities and security mitigations will come next, and how much those may hurt performance. Progress isn't always in a single direction, unfortunately.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


Viking2121 Yeah, I knew this hurt FPS long time ago, but I forgot all about it when I reinstalled windows, turning it back off lol

JarredWaltonGPU

Viking2121 said: Yeah, I knew this hurt FPS long time ago, but I forgot all about it when I reinstalled windows, turning it back off lol

This was me with the 13900K. New test build, totally forgot about VBS. Which is really why this article exists. I freaked out and thought, "OMG how much performance am I losing because of VBS!?" Thankfully, everything was tested on the 13900K with it enabled, so it's still "fair" in that sense. But I'm left trying to decide if I should retest the various GPUs with VBS disabled, or stick with the Microsoft default of having it on? Decisions, decisions...

hotaru.hino

flashflood101 said: It's incredibly irresponsible to promote disabling important security features.

Security is all about risk management. We have to know what these features do in detail, how they impact our system usage, and the probability of scenarios in which not having this security feature enabled or available would be a problem. For example, you might say it's incredibly dumb to run Windows XP because of how insecure it is now. Except... just don't plug it into the internet (or a network that has free access to the internet) and nearly all of that risk goes away.

In the case of businesses and whatnot, some may not deploy security patches immediately, or at least not to every system they have. If the security patch creates other issues, that could bring down the entire business, and they would have to roll back the patch anyway.

Otherwise blindly saying every security feature should be used may as well be telling everyone they need to get on Windows 11 right now (and if they don't have supported hardware, they need to buy it) and saying they can stay on 10 is irresponsible.

Alvar "Miles" Udell I would say that with a 5% average FPS difference at 1920x1080 and 2% difference at 3840x2160, that's a negligible and expected difference between a prepared benchmark machine and typical PC, especially if you look at the actual performance differences and see that the most affected game, MS Flight Sim, goes from 151.9 to 168.8 fps, you're not jumping into any new performance tiers, you're still a solid 144hz.

I think anyone using a system costing the better part of $3000 would be more concerned about getting just 40FPS in Cyberpunk 4K than going from 319FPS to 350FPS in Borderlands at FHD.

Elusive Ruse Thanks Jarred, this was a timely headsup! Also I'm glad to see you're diligent about squeezing every bit of performance out of your setup.

PlaneInTheSky

flashflood101 said: It's incredibly irresponsible to promote disabling important security features.

I've been reading about what this feature does for the last 30 minutes and I have to agree.

This should not be disabled.

This is the windows hypervisor, it is a critical part of Windows their security system. It abstracts running processes from the core OS and private user data.

Not only does it protect you from viruses, malware, etc, it also protects you from programs that are not viruses, from accessing your files, even in offline mode.

mhmarefat

PlaneInTheSky said: It abstracts running processes from the core OS and private user data.

WOW! Windows users can now go to sleep with the ease of mind that their private data is safe at the trustworthy hands of Microsoft. A small performance regression (on a 13900k no less.. not sure how severe the penalty would be on lower end CPUs that normal people use) is the price to pay for guranteed private user data protection (Microsoft's red line).

Nothing to see here.. move along people.