Intel fires back at AMD's AI benchmarks, shares results claiming current-gen Xeon chips are faster at AI than AMD's next-gen 128-core EPYC Turin

The AI flame war heats up.

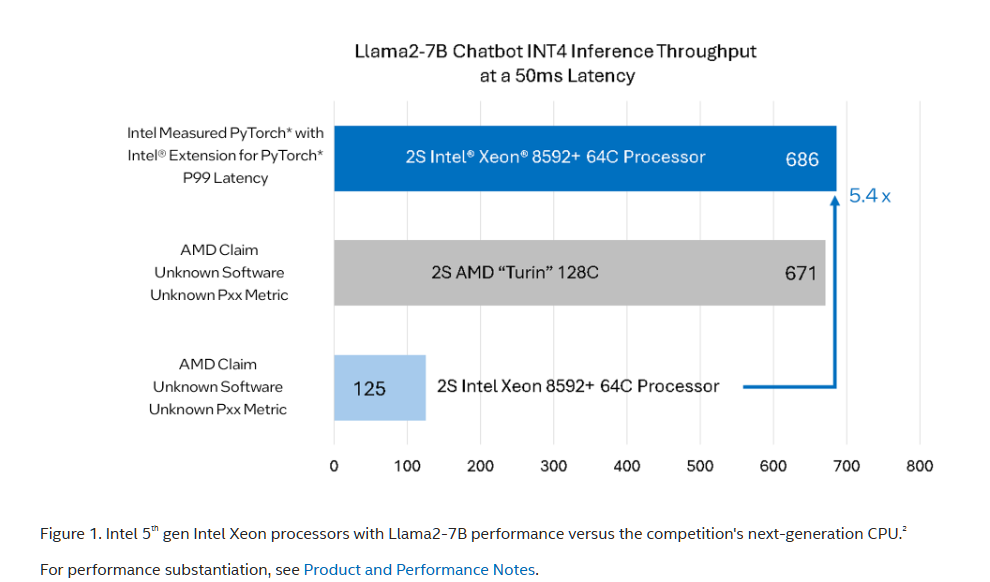

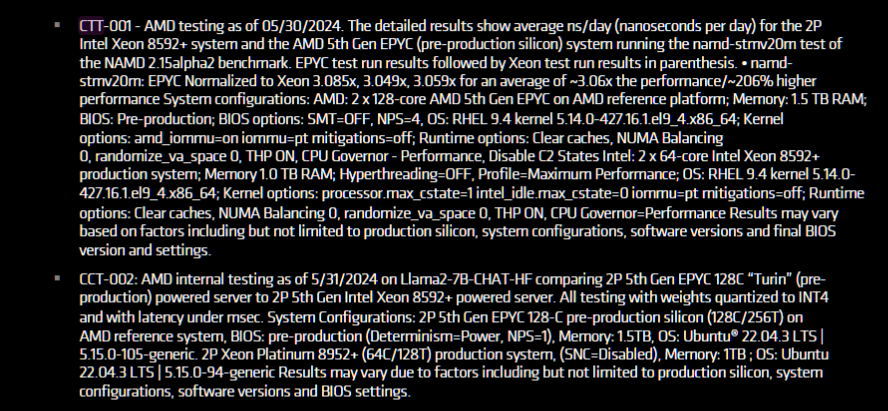

Intel is firing back at the AI benchmarks AMD shared during its Computex keynote, which claimed that AMD's Zen 5-based EPYC Turin is 5.4 times faster than Intel's Xeon chips in AI workloads. Intel published a blog post today highlighting the performance of its current-gen Xeon processors in its own benchmarks, claiming that its currently shipping fifth-gen Xeon chips are faster than AMD's upcoming 3nm EPYC Turin processors, which will arrive in the second half of 2024. Intel says AMD's benchmarks are an 'inaccurate representation' of Xeon's performance and shared its own results to dispute AMD's claims.

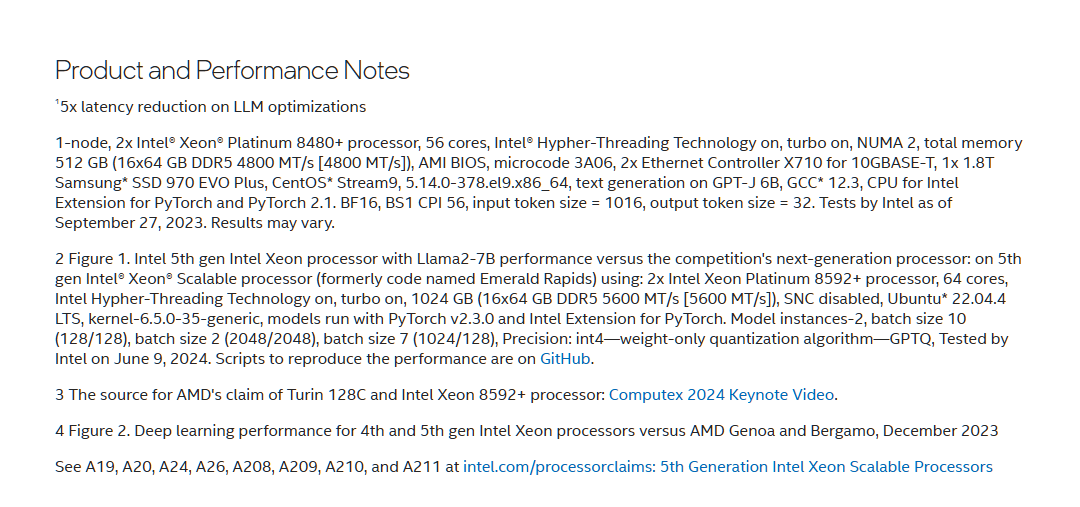

As always, we should approach vendor-provided benchmark results with caution and pay close attention to the test configurations. We've included Intel's test notes in the above album. These chips were all tested in dual-socket servers.

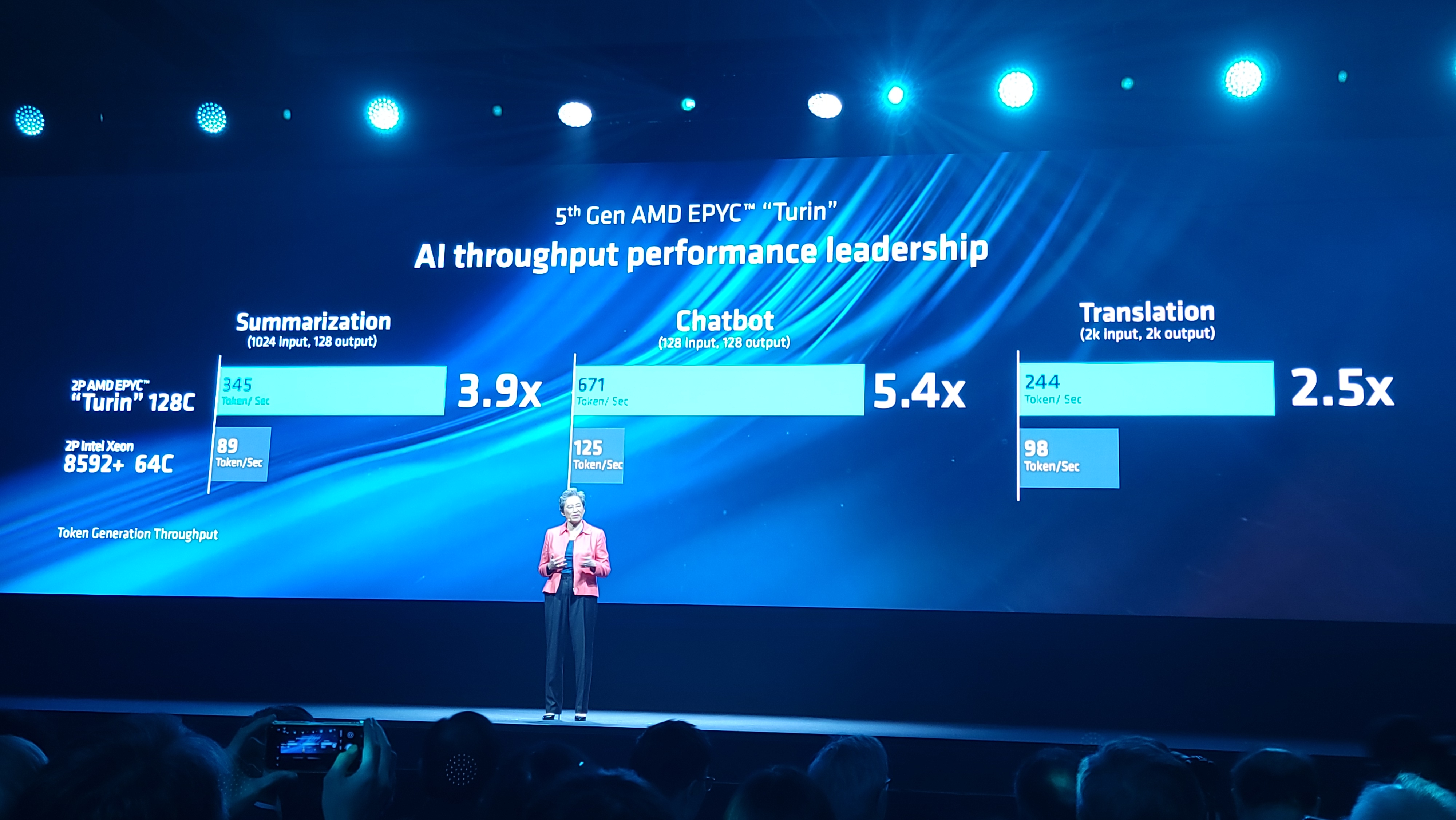

AMD's benchmarks showed a range of advantages over Xeon, but the Llama2-7B chatbot results highlighted the most extreme win, showing a 5.4x advantage for the 128-core Turin (256 cores total) over Intel's 64-core Emerald Rapids Xeon 8592+ (128 cores total).
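Because the two systems differ in core count, it can help to divide the core-count ratio out of the headline number. The snippet below is a back-of-the-envelope sketch only: the function name is hypothetical, and it assumes throughput scales linearly with cores, which real inference workloads rarely do.

```python
def per_core_speedup(total_speedup: float, cores_a: int, cores_b: int) -> float:
    """Speedup of system A over system B after dividing out the core-count ratio.

    Assumes (simplistically) that throughput scales linearly with core count.
    """
    return total_speedup / (cores_a / cores_b)


# Figures from AMD's claim: dual-socket servers, 128-core Turin vs. 64-core Xeon 8592+.
turin_cores = 2 * 128   # 256 cores total
xeon_cores = 2 * 64     # 128 cores total
claimed_speedup = 5.4   # AMD's Llama2-7B chatbot claim

print(f"{per_core_speedup(claimed_speedup, turin_cores, xeon_cores):.2f}x per core")
# prints "2.70x per core"
```

In other words, under AMD's own numbers roughly half of the claimed advantage would come from having twice as many cores.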

Intel's own internal results are in turn 5.4x faster than the Xeon results in AMD's benchmarks, thus giving the currently shipping 64-core Xeon an advantage over AMD's future 128-core model. That is an impressive claim and quite the swing in performance.

Intel says AMD didn't share the details of the software it used for its benchmarks or the SLAs required for the test, and we can't find a listing of the batch sizes used (AMD test notes below). Regardless, Intel says AMD's results don't match its own internal benchmarking with widely available open-source software (Intel Extension for PyTorch). Intel assumed a "stringent" 50ms P99 latency constraint for its benchmark and used the same INT4 data type.
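To make the latency constraint concrete, here is a minimal sketch of how a P99 check against a 50ms limit could be computed. The helper names and the sample latencies are hypothetical and are not taken from Intel's or AMD's test harnesses; the nearest-rank percentile method is one common choice among several.

```python
import math


def percentile_nearest_rank(samples, pct):
    """Return the pct-th percentile of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def meets_sla(latencies_ms, limit_ms=50.0, pct=99):
    """True if the pct-th percentile latency is within the limit."""
    return percentile_nearest_rank(latencies_ms, pct) <= limit_ms


# Hypothetical per-token latencies in milliseconds (illustrative values only).
one_outlier = [30.0] * 98 + [48.0, 120.0]
two_outliers = [30.0] * 97 + [48.0, 120.0, 120.0]

print(meets_sla(one_outlier))   # True: P99 is 48.0 ms
print(meets_sla(two_outliers))  # False: P99 is 120.0 ms
```

A constraint like this matters for comparisons because throughput is usually reported as the highest rate a system sustains while still passing the check, so two tests with different limits or batch sizes are not directly comparable.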

If this benchmark represents true performance, the likely source of the disparity is Intel's support for AMX (Advanced Matrix Extensions). These matrix math instructions boost performance in AI workloads tremendously, and it isn't clear if AMD employed AMX when it tested Intel's chip. Notably, AMX supports BF16 and INT8, so the software engine typically converts the INT4 weights into larger data types to drive them through the AMX engine. AMD's current-gen chips don't support native matrix math operations, and it isn't clear if Turin does either.
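As a rough illustration of what converting INT4 weights into a larger data type involves, the sketch below unpacks two signed 4-bit values from each byte into ordinary integers that fit in INT8. The function name and the packing order (low nibble first) are assumptions made for the example; this is not how Intel Extension for PyTorch or any specific engine is confirmed to store or unpack weights.

```python
def unpack_int4(packed: bytes) -> list[int]:
    """Unpack two signed 4-bit values per byte into ints in the range [-8, 7].

    Assumes low nibble first and two's-complement encoding (an illustrative
    convention, not a documented layout of any particular library).
    """
    values = []
    for byte in packed:
        for nibble in (byte & 0x0F, byte >> 4):
            # Sign-extend: nibbles 8..15 represent -8..-1.
            values.append(nibble - 16 if nibble >= 8 else nibble)
    return values


weights = unpack_int4(bytes([0x1F, 0x87]))
print(weights)  # [-1, 1, 7, -8]

# Every unpacked value fits in a signed 8-bit integer, the type AMX can consume.
assert all(-128 <= w <= 127 for w in weights)
```

The widened values take twice the register space of the packed form, which is one reason INT4 mainly saves memory capacity and bandwidth rather than compute on hardware whose matrix units operate on INT8 or BF16.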

AMD's Computex benchmarks also included Turin wins over fifth-gen Xeon in AI-driven summarization and translation workloads, claiming 3.9x and 2.5x advantages, respectively. Again, Intel begs to differ, with its own results showing 2.3x and 1.2x higher performance than AMD attained with the Xeon 8592+.

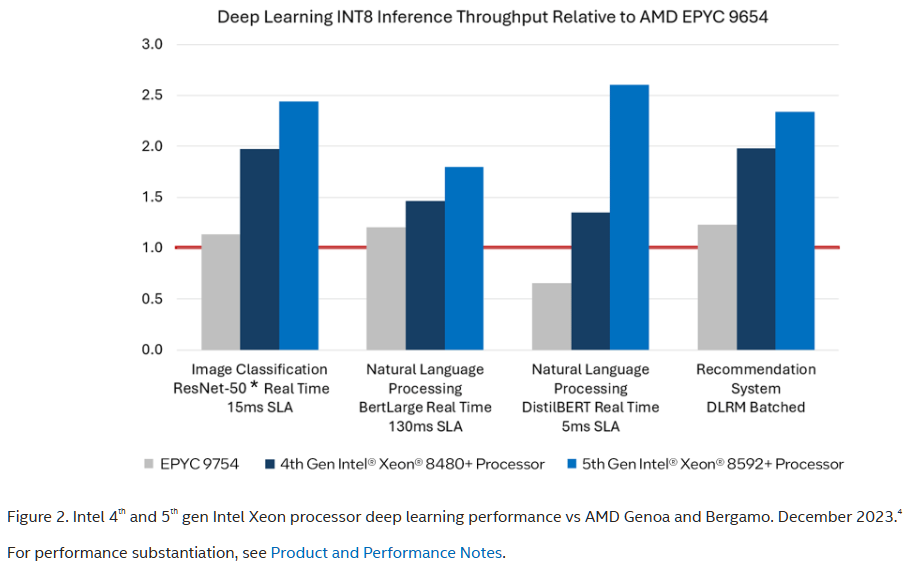

Intel doesn't align its benchmark results against AMD's Turin claims for this chart (we included AMD's claims above). Instead, it chose to compare to AMD's shipping 96-core EPYC 9654 processors and shows performance gains as a relative percentage against that chip. AMD's test notes (last slide above) don't indicate which models it used for its summarization and translation workloads, so it doesn't seem possible to work out the exact relative performance to Turin in these workloads. That said, it does seem that Turin would still come out on top in these benchmarks, albeit by a slimmer margin.
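The "slimmer margin" reasoning can be sketched numerically by dividing AMD's claimed Turin advantage by the uplift Intel reports for the same Xeon. This is a rough estimate under a strong assumption, namely that both vendors ran comparable models and settings, which the test notes do not confirm; the variable and function names are hypothetical.

```python
# AMD's claimed Turin advantage over the Xeon 8592+ (from its Computex slides).
amd_claimed_advantage = {"summarization": 3.9, "translation": 2.5}

# How much higher Intel's own Xeon 8592+ results are than the ones AMD attained.
intel_reported_uplift = {"summarization": 2.3, "translation": 1.2}


def implied_turin_advantage(amd_claim: float, intel_uplift: float) -> float:
    """Turin's advantage if the Xeon baseline is raised by Intel's reported uplift."""
    return amd_claim / intel_uplift


for workload, claim in amd_claimed_advantage.items():
    adjusted = implied_turin_advantage(claim, intel_reported_uplift[workload])
    print(f"{workload}: {adjusted:.2f}x")
# prints:
# summarization: 1.70x
# translation: 2.08x
```

Both adjusted ratios stay above 1.0, which is consistent with Turin keeping a lead in these two workloads, but the figures should be read as an illustration rather than a measurement.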

Notably, Intel claims that even its last-gen Xeons are faster than AMD's current-gen EPYC Genoa and that its newer fifth-gen chips are up to 2.5x faster than Genoa.

Intel's blog points out that its even newer Granite Rapids Xeon 6 chips, not benchmarked here, support up to 2.3x the memory bandwidth of the current-gen chips used here, a byproduct of moving from eight memory channels to 12 along with support for bandwidth-boosting MCR DIMMs. As such, it expects even higher performance in these workloads from its soon-to-launch chips. Intel's newer chips also have up to 128 cores, which should help Intel's performance relative to Turin, as these comparisons are with 64-core models. Notably, Intel didn't provide a counter to AMD's claim that Turin is 3.1x faster in NAMD molecular dynamics workloads.
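The bandwidth claim can be sanity-checked with simple peak-bandwidth arithmetic. The transfer rates below (DDR5-5600 for the current chips and 8800 MT/s MCR DIMMs for Xeon 6) are assumptions that do not appear in the article, and the helper name is hypothetical; the sketch computes theoretical per-socket peaks, not measured bandwidth.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int,
                       bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s for 64-bit (8-byte) channels."""
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


# Assumed configurations (not stated in the article):
current_gen = peak_bandwidth_gbs(channels=8, mega_transfers_per_s=5600)
xeon_6 = peak_bandwidth_gbs(channels=12, mega_transfers_per_s=8800)

print(f"current-gen: {current_gen:.1f} GB/s")        # 358.4 GB/s
print(f"Xeon 6:      {xeon_6:.1f} GB/s")             # 844.8 GB/s
print(f"ratio:       {xeon_6 / current_gen:.2f}x")   # 2.36x
print(f"from channels alone: {12 / 8:.1f}x")         # 1.5x
```

Under these assumptions, the extra channels account for a 1.5x gain and the faster DIMMs supply the rest, landing in the neighborhood of the figure Intel cites.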

Thoughts

The AI benchmark wars are heating up as Intel and AMD vie for a leadership position in AI workloads that run on the CPU. It's harder than ever to take vendor-provided benchmarks at face value — not that we ever did anyway. That said, we do expect to see clearly defined benchmark configurations with vendor-provided benchmarks, and AMD's Turin test notes don't meet that bar. To be clear, Intel also doesn't fully describe its comparative test platforms at times, so both vendors could improve here.

Notably, Intel does submit its CPU and Gaudi test results to the publicly available industry-accepted MLPerf database to allow for easily verifiable AI benchmark results, whereas AMD has yet to submit any benchmarks for comparison.

We expect the tit-for-tat benchmarking to only intensify as both companies launch their newest chips, but we'll also put these systems to the test ourselves to suss out the differences. We currently have Intel's Xeon 6 chips under the benchmarking microscope; stay tuned for that.

In the meantime, we've contacted AMD for comment on Intel's counterclaims and will update you when we hear back. Optimizations for AI workloads are becoming a critical factor, so much so that it's quite easy to see massive gains, or losses, in relative performance. Even on GPUs, we've seen performance improvements over time of 100% or more. Clearly, this won't be the last we see of comparisons between tuned and untuned configurations.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

Tech0000 I think Paul is right in his analysis of the discrepancy: "If this benchmark represents true performance, the likely disparity here is Intel's support for AMX (Advanced Matrix Extensions) math extensions. These math functions boost performance in AI workloads tremendously, and it isn't clear if AMD employed AMX when it tested Intel's chip."

The hugely dominating computational and data complexity in AI is the matrix multiplications that are performed repeatedly in both forward and backward passes. Any direct silicon implementation of matrix multiplication that reduces computational latency, increases computational throughput, AND reduces data bandwidth requirements and latency will greatly improve AI performance. AMX does that, and not by a small amount either. Given the fundamental laws in complexity theory, if you apply AMX machine instructions intelligently, there is no theoretical possibility a scalar or vector based solution can compete (all else equal: # CPUs, # cores, etc.). If you want to dive deeper (i.e. completely geek out) into the understanding of AMX and how it can be applied to AI, I suggest reading Intel 64 and IA-32 Architectures Optimization Reference Manual: Volume 1 chapter 20. -

TinyFatMan Who remembers the “war” between Lotus 1-2-3 (Lotus Software) and Quattro Pro (Borland)?

And even more, who remembers the winner? A clue. You probably use it today. Long live the monopolies.

Smile

Intel vs AMD… And ARM Meanwhile…

Intel, AMD (and Microsoft) continuing to ignore 4 billion consumers, smartphone users…

Just because you have trillions doesn't mean you're more intelligent than everyone else...

We must not confuse “brilliance/giftedness”, which can be “measured” (though we must take these measurements with a grain of salt), with intelligence, which is too complex to be measured and will remain so. Intelligence being everything that makes an organism a living being. -

rluker5 Since Intel is providing specific details into its testing methods I find them more believable than some unverifiable claims made by AMD.

The differences in claims are huge. It would be like one brand of potato chips saying their new and improved one ounce bag has four times the weight in potato chips as their competitor's one ounce bag and thus is four times the value when in fact they both have one ounce bags. -

rluker5 (replying to TinyFatMan) I have an Intel CPU smartphone. Intel did try that market when they were giving out the 2W Atoms, and they were in all of those cheap tablets. If you look at the jumps Intel is making in efficiency in their mobile line, there might be a return to them. Hopefully running Windows. I have wanted a real Windows phone for so long; Windows Mobile was just a tease. -

Avro Arrow Intel is claiming that Xeon is superior to EPYC?

Yeah, I'll believe that when I see it (and I don't mean seeing it from Intel). -

purposelycryptic (replying to TinyFatMan) AMD and Intel specialize in x86 processors, which don't really lend themselves to phones. This is fine. In a world with finite resources, you have to make choices on what to allocate your resources towards, or risk spreading them too thin. In big business, a jack of all trades, master of none is going to lose to masters in their individual fields.

ARM doesn't make or sell processors, they only design them, as well as other chips, and develop and sell software and programming tools. They aren't really comparable to AMD or Intel: ARM just designs and licenses out IP, which their licensees then use as a base to manufacture processors and SoCs. There is plenty of competition worldwide in the field of ARM processors and SoCs.

ARM (the architecture, not the company) processors compete with x86 processors in the server space, and companies like Qualcomm are currently trying to make inroads in the Windows laptop space, as a result of Apple having moved their Mac OS computers entirely to the ARM architecture.

Microsoft's repeated attempts at the same over the years met with abject failure, so it will be interesting to see the results this time; both Microsoft and Qualcomm have already invested significant amounts of money in this attempt, as have various OEMs and several other ARM processor manufacturers.

Unlike with Apple, the ARM architecture will fracture the Windows software ecosystem if successful, with likely significant negative repercussions to the overall perceived stability and reliability of the Windows platform, as a PC can no longer be relied upon to "just work" with software, due to the split architecture.

Emulation offers a band-aid solution for the time being, but for the average consumer, and especially the large SMB market, who don't fully understand the difference and simply need their software to work, which for many companies can be positively ancient and barely able to run on modern x86 systems, this is likely to result in significant frustration which, if not handled just right, may collapse majority opinion on Windows ARM machines, resulting in a death spiral they cannot recover from.

Which, honestly, is likely to be the best result for both end users and developers - the power efficiency benefits ARM offers can't really outweigh the chaos adding a second system architecture to the Windows ecosystem will result in.

Obviously, Microsoft, Qualcomm, and everybody else who has invested significant money and resources in the idea are likely to disagree. Just one of the reasons I sold my stock in them before the launch.